K8S add-ons: hands-on metrics-server examples

Author: 尹正杰

Copyright notice: this is an original work and may not be reproduced. Violations will be pursued legally.

I. metrics-server overview

1. What is metrics-server

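metrics-server provides the resource-usage data behind the "kubectl top" command in a K8S cluster. The HPA (Horizontal Pod Autoscaler) and VPA (Vertical Pod Autoscaler) depend on it as well. Without it, kubectl top fails: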

[root@master231 ~]# kubectl top pods

error: Metrics API not available

[root@master231 ~]#

[root@master231 ~]# kubectl top nodes

error: Metrics API not available

[root@master231 ~]#

2. Difference between HPA and VPA

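- HPA (horizontal scaling, Horizontal Pod Autoscaler):

When the existing Pods run short of resources, HPA automatically increases the number of Pod replicas to absorb the extra traffic and spread the load.

- VPA (vertical scaling, Vertical Pod Autoscaler):

VPA dynamically adjusts the resources allocated to a container. For example, a Pod might start out with 200Mi of memory. If its usage reaches the defined threshold, VPA can give it more memory, but it does not increase the number of Pod replicas.

The key difference is that VPA has a hard ceiling. The Pod is the smallest scheduling unit in K8S and cannot be split across nodes, so how far a Pod can be scaled up is bounded by the resources of a single node.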

Deployment documentation:

https://github.com/kubernetes-sigs/metrics-server

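3. metrics-server essentially collects its data from the kubelet

Every kubelet serves resource metrics at https://<node-IP>:10250/metrics/resource. The commands below query that endpoint directly from master231 using the apiserver's kubelet client certificate. The -k option skips verification of the kubelet's own serving certificate.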

[root@master231 pki]# pwd

/etc/kubernetes/pki

[root@master231 pki]#

[root@master231 pki]# ll apiserver-kubelet-client.*

-rw-r--r-- 1 root root 1164 Apr 7 11:00 apiserver-kubelet-client.crt

-rw------- 1 root root 1679 Apr 7 11:00 apiserver-kubelet-client.key

[root@master231 pki]#

[root@master231 pki]# curl -s -k --key apiserver-kubelet-client.key --cert apiserver-kubelet-client.crt https://10.0.0.231:10250/metrics/resource | wc -l

102

[root@master231 pki]#

[root@master231 pki]# curl -s -k --key apiserver-kubelet-client.key --cert apiserver-kubelet-client.crt https://10.0.0.232:10250/metrics/resource | wc -l

67

[root@master231 pki]#

[root@master231 pki]# curl -s -k --key apiserver-kubelet-client.key --cert apiserver-kubelet-client.crt https://10.0.0.233:10250/metrics/resource | wc -l

57

[root@master231 pki]#
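The curl commands above only count lines. To see what metrics-server actually reads from that endpoint, here is a minimal Python sketch that parses the Prometheus text format served at /metrics/resource. SAMPLE_TEXT is a hypothetical excerpt with made-up values. The metric names in it should match what recent kubelets expose on this endpoint, but verify them against your own curl output. The parser is deliberately simplified: it skips comment lines and does not unescape label values.

```python
import re
from typing import Dict, List, Optional, Tuple

# One sample line: metric_name{label="value",...} value [timestamp_ms]
SAMPLE_RE = re.compile(
    r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)'
    r'(?:\{(?P<labels>[^}]*)\})?'
    r'\s+(?P<value>\S+)'
    r'(?:\s+(?P<ts>-?\d+))?\s*$'
)
LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')

Sample = Tuple[str, Dict[str, str], float, Optional[int]]


def parse_exposition(text: str) -> List[Sample]:
    """Parse Prometheus text-format samples, skipping # HELP / # TYPE lines."""
    samples: List[Sample] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        m = SAMPLE_RE.match(line)
        if m is None:
            continue  # ignore anything this simplified parser does not understand
        labels = dict(LABEL_RE.findall(m.group("labels") or ""))
        ts = int(m.group("ts")) if m.group("ts") else None
        samples.append((m.group("name"), labels, float(m.group("value")), ts))
    return samples


# Hypothetical excerpt shaped like kubelet's /metrics/resource output.
SAMPLE_TEXT = """\
# HELP node_cpu_usage_seconds_total Cumulative cpu time consumed by the node in core-seconds
# TYPE node_cpu_usage_seconds_total counter
node_cpu_usage_seconds_total 1234.5 1695466551000
# TYPE node_memory_working_set_bytes gauge
node_memory_working_set_bytes 2.578608128e+09 1695466551000
container_cpu_usage_seconds_total{container="metrics-server",namespace="kube-system",pod="metrics-server-7cd44b454f-zbn4g"} 12.25 1695466550000
"""

if __name__ == "__main__":
    for name, labels, value, ts in parse_exposition(SAMPLE_TEXT):
        print(name, labels, value, ts)
```

To try it on a real node, save the curl output to a file and pass its contents to parse_exposition().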

II. Deploying the metrics-server component

1 Download the manifest

[root@master231 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/high-availability-1.21+.yaml

SVIP:

[root@master231 ~]# wget http://192.168.14.253/Resources/Kubernetes/Add-ons/metrics-server/0.6.x/high-availability-1.21%2B.yaml

2 Edit the configuration file

[root@master231 ~]# vim high-availability-1.21+.yaml

...

114 apiVersion: apps/v1

115 kind: Deployment

116 metadata:

...

144 - args:

145 - --kubelet-insecure-tls # Do not verify the CA of the serving certificates presented by the kubelets. Without this flag, scraping fails with an x509 error.

...

... image: registry.aliyuncs.com/google_containers/metrics-server:v0.7.2

3 Deploy the metrics-server component

[root@master231 ~]# kubectl apply -f high-availability-1.21+.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

poddisruptionbudget.policy/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

[root@master231 ~]#

Image download location:

http://192.168.16.253/Resources/Kubernetes/Add-ons/metrics-server/0.7.x/

4 Check whether metrics-server was deployed successfully

[root@master231 metrics-server]# kubectl get pods,svc -n kube-system -l k8s-app=metrics-server -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/metrics-server-57c6f647bb-727dz 1/1 Running 0 3m56s 10.100.203.130 worker232 <none> <none>

pod/metrics-server-57c6f647bb-bm6tb 1/1 Running 0 3m56s 10.100.140.120 worker233 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/metrics-server ClusterIP 10.200.10.142 <none> 443/TCP 3m56s k8s-app=metrics-server

[root@master231 metrics-server]#

[root@master231 metrics-server]# kubectl -n kube-system describe svc metrics-server

Name: metrics-server

Namespace: kube-system

Labels: k8s-app=metrics-server

Annotations: <none>

Selector: k8s-app=metrics-server

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.200.10.142

IPs: 10.200.10.142

Port: https 443/TCP

TargetPort: https/TCP

Endpoints: 10.100.140.120:10250,10.100.203.130:10250

Session Affinity: None

Events: <none>

[root@master231 metrics-server]#

5 Verify that metrics-server is working

[root@master231 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master231 136m 6% 2981Mi 78%

worker232 53m 2% 1707Mi 45%

worker233 45m 2% 1507Mi 39%

[root@master231 ~]#

[root@master231 ~]# kubectl top pods -n kube-system

NAME CPU(cores) MEMORY(bytes)

coredns-6d8c4cb4d-bknzr 1m 11Mi

coredns-6d8c4cb4d-cvp9w 1m 31Mi

etcd-master231 10m 75Mi

kube-apiserver-master231 33m 334Mi

kube-controller-manager-master231 8m 56Mi

kube-proxy-29dbp 4m 19Mi

kube-proxy-hxmzb 7m 18Mi

kube-proxy-k92k2 1m 31Mi

kube-scheduler-master231 2m 17Mi

metrics-server-6b4f784878-gwsf5 2m 17Mi

metrics-server-6b4f784878-qjvwr 2m 17Mi

[root@master231 ~]#

[root@master231 ~]# kubectl top pods -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

calico-apiserver calico-apiserver-64b779ff45-cspxl 4m 28Mi

calico-apiserver calico-apiserver-64b779ff45-fw6pc 3m 29Mi

calico-system calico-kube-controllers-76d5c7cfc-89z7j 3m 16Mi

calico-system calico-node-4cvnj 16m 140Mi

calico-system calico-node-qbxmn 16m 143Mi

calico-system calico-node-scwkd 17m 138Mi

calico-system calico-typha-595f8c6fcb-bhdw6 1m 18Mi

calico-system calico-typha-595f8c6fcb-f2fw6 2m 22Mi

calico-system csi-node-driver-2mzq6 1m 8Mi

calico-system csi-node-driver-7z4hj 1m 8Mi

calico-system csi-node-driver-m66z9 1m 15Mi

default xiuxian-6dffdd86b-m8f2h 1m 33Mi

kube-system coredns-6d8c4cb4d-bknzr 1m 11Mi

kube-system coredns-6d8c4cb4d-cvp9w 1m 31Mi

kube-system etcd-master231 16m 74Mi

kube-system kube-apiserver-master231 35m 334Mi

kube-system kube-controller-manager-master231 9m 57Mi

kube-system kube-proxy-29dbp 4m 19Mi

kube-system kube-proxy-hxmzb 7m 18Mi

kube-system kube-proxy-k92k2 10m 31Mi

kube-system kube-scheduler-master231 2m 17Mi

kube-system metrics-server-6b4f784878-gwsf5 2m 17Mi

kube-system metrics-server-6b4f784878-qjvwr 2m 17Mi

kuboard kuboard-agent-2-6964c46d56-cm589 5m 9Mi

kuboard kuboard-agent-77dd5dcd78-jc4rh 5m 24Mi

kuboard kuboard-etcd-qs5jh 4m 35Mi

kuboard kuboard-v3-685dc9c7b8-2pd2w 36m 353Mi

metallb-system controller-686c7db689-cnj2c 1m 18Mi

metallb-system speaker-srvw8 3m 31Mi

metallb-system speaker-tgwql 3m 17Mi

metallb-system speaker-zpn5c 3m 17Mi

tigera-operator tigera-operator-8d497bb9f-bcj5s 2m 27Mi

[root@master231 ~]#

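6 Testing the metrics-server API

1. Query the metrics API group and check that it serves the NodeMetrics and PodMetrics kinds. (The API tests in this part were run on a second lab cluster, master241, running v1.31.9.)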

[root@master241 ~]# kubectl get --raw /apis/metrics.k8s.io/v1beta1 | python3 -m json.tool

{
    "kind": "APIResourceList",
    "apiVersion": "v1",
    "groupVersion": "metrics.k8s.io/v1beta1",
    "resources": [
        {
            "name": "nodes",
            "singularName": "",
            "namespaced": false,
            "kind": "NodeMetrics",
            "verbs": [
                "get",
                "list"
            ]
        },
        {
            "name": "pods",
            "singularName": "",
            "namespaced": true,
            "kind": "PodMetrics",
            "verbs": [
                "get",
                "list"
            ]
        }
    ]
}

[root@master241 ~]#
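To automate this check, for example in a provisioning script, the Python sketch below inspects the discovery document and confirms that both kinds are present.

- SAMPLE is a trimmed copy of the output above.
- fetch_discovery() simply runs the same kubectl command. It assumes kubectl and a working kubeconfig are available.
- The function names are illustrative.

```python
import json
import subprocess
from typing import Dict

# Trimmed copy of the discovery document shown above.
SAMPLE = """{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "metrics.k8s.io/v1beta1",
  "resources": [
    {"name": "nodes", "namespaced": false, "kind": "NodeMetrics", "verbs": ["get", "list"]},
    {"name": "pods", "namespaced": true, "kind": "PodMetrics", "verbs": ["get", "list"]}
  ]
}"""


def fetch_discovery() -> str:
    """Run the same kubectl command as above (needs kubectl and a kubeconfig)."""
    return subprocess.run(
        ["kubectl", "get", "--raw", "/apis/metrics.k8s.io/v1beta1"],
        capture_output=True, text=True, check=True,
    ).stdout


def metrics_kinds(discovery_json: str) -> Dict[str, str]:
    """Map resource name -> kind from an APIResourceList document."""
    doc = json.loads(discovery_json)
    if doc.get("kind") != "APIResourceList":
        raise ValueError("not an APIResourceList document")
    return {r["name"]: r["kind"] for r in doc.get("resources", [])}


def metrics_api_ready(discovery_json: str) -> bool:
    """True when both NodeMetrics and PodMetrics are served."""
    kinds = metrics_kinds(discovery_json)
    return kinds.get("nodes") == "NodeMetrics" and kinds.get("pods") == "PodMetrics"


if __name__ == "__main__":
    print(metrics_api_ready(SAMPLE))  # on a live cluster: metrics_api_ready(fetch_discovery())
```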

2. Fetch Pod metrics through the metrics API

[root@master241 ~]# kubectl get pods,svc -n kube-system -l k8s-app=metrics-server -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/metrics-server-7cd44b454f-rgb26 1/1 Running 0 16m 10.100.207.18 worker243 <none> <none>

pod/metrics-server-7cd44b454f-zbn4g 1/1 Running 0 16m 10.100.165.155 worker242 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/metrics-server ClusterIP 10.193.112.117 <none> 443/TCP 16m k8s-app=metrics-server

[root@master241 ~]#

[root@master241 ~]# kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/kube-system/pods/metrics-server-7cd44b454f-zbn4g | python3 -m json.tool

{
    "kind": "PodMetrics",
    "apiVersion": "metrics.k8s.io/v1beta1",
    "metadata": {
        "name": "metrics-server-7cd44b454f-zbn4g",
        "namespace": "kube-system",
        "creationTimestamp": "2025-09-23T10:55:51Z",
        "labels": {
            "k8s-app": "metrics-server",
            "pod-template-hash": "7cd44b454f"
        }
    },
    "timestamp": "2025-09-23T10:55:28Z",
    "window": "10.257s",
    "containers": [
        {
            "name": "metrics-server",
            "usage": {
                "cpu": "1688992n",
                "memory": "17Mi"
            }
        }
    ]
}

[root@master241 ~]#
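The usage values use Kubernetes quantity notation. The CPU value "1688992n" is in nanocores (n = 10^-9) and the memory value "17Mi" is in mebibytes (Mi = 2^20 bytes).

Below is a minimal Python sketch for converting such strings into plain numbers. It handles only the plain-suffix forms seen in this output and does not support exponent notation such as "1e3".

```python
from decimal import Decimal

# Multipliers for the quantity suffixes handled by this sketch.
# Binary suffixes are powers of 1024, decimal suffixes powers of 1000.
SUFFIXES = {
    "Ki": Decimal(1024), "Mi": Decimal(1024) ** 2, "Gi": Decimal(1024) ** 3,
    "Ti": Decimal(1024) ** 4, "Pi": Decimal(1024) ** 5, "Ei": Decimal(1024) ** 6,
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "k": Decimal("1e3"), "M": Decimal("1e6"), "G": Decimal("1e9"),
    "T": Decimal("1e12"), "P": Decimal("1e15"), "E": Decimal("1e18"),
}


def parse_quantity(q: str) -> Decimal:
    """Convert '1688992n' to cores or '17Mi' to bytes (no exponent forms)."""
    q = q.strip()
    for suffix, multiplier in SUFFIXES.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * multiplier
    return Decimal(q)  # no suffix: a plain number such as "0.5"


if __name__ == "__main__":
    cpu_cores = parse_quantity("1688992n")
    mem_bytes = parse_quantity("17Mi")
    print(f"cpu: {cpu_cores * 1000} millicores, memory: {mem_bytes} bytes")
```

With these conversions, the Pod's "1688992n" is about 1.69 millicores and "17Mi" is 17825792 bytes.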

3. Fetch Node metrics through the metrics API

[root@master241 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master241 Ready control-plane 130d v1.31.9 10.0.0.241 <none> Ubuntu 22.04.4 LTS 5.15.0-144-generic containerd://1.6.36

worker242 Ready <none> 130d v1.31.9 10.0.0.242 <none> Ubuntu 22.04.4 LTS 5.15.0-144-generic containerd://1.6.36

worker243 Ready <none> 130d v1.31.9 10.0.0.243 <none> Ubuntu 22.04.4 LTS 5.15.0-144-generic containerd://1.6.36

[root@master241 ~]#

[root@master241 ~]# kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes/worker243 | python3 -m json.tool

{
    "kind": "NodeMetrics",
    "apiVersion": "metrics.k8s.io/v1beta1",
    "metadata": {
        "name": "worker243",
        "creationTimestamp": "2025-09-23T10:57:07Z",
        "labels": {
            "beta.kubernetes.io/arch": "amd64",
            "beta.kubernetes.io/os": "linux",
            "kubernetes.io/arch": "amd64",
            "kubernetes.io/hostname": "worker243",
            "kubernetes.io/os": "linux"
        }
    },
    "timestamp": "2025-09-23T10:56:53Z",
    "window": "20.062s",
    "usage": {
        "cpu": "54830026n",
        "memory": "2518172Ki"
    }
}

[root@master241 ~]#
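The kubelet reports CPU as a cumulative counter (core-seconds), so a usage figure such as "54830026n" has to be derived from two samples. The "window" field (20.062s here) corresponds to the time span between the samples that were used. For scale, 54830026n is about 55 millicores.

The Python sketch below illustrates that rate calculation. It assumes two readings of a cumulative counter such as node_cpu_usage_seconds_total; the readings are hypothetical. It shows the idea only and is not metrics-server's actual code.

```python
from dataclasses import dataclass


@dataclass
class CpuSample:
    core_seconds: float  # cumulative CPU time, e.g. node_cpu_usage_seconds_total
    timestamp: float     # time the sample was taken, in seconds


def cpu_rate_nanocores(prev: CpuSample, cur: CpuSample) -> int:
    """Average CPU usage between two cumulative samples, in nanocores."""
    window = cur.timestamp - prev.timestamp
    if window <= 0:
        raise ValueError("samples must be in chronological order")
    used = cur.core_seconds - prev.core_seconds
    if used < 0:
        raise ValueError("counter went backwards (e.g. after a restart)")
    return round(used / window * 1e9)


if __name__ == "__main__":
    # Hypothetical readings 20 s apart: 1.5 core-seconds consumed in between.
    rate = cpu_rate_nanocores(CpuSample(100.0, 0.0), CpuSample(101.5, 20.0))
    print(f"{rate}n = {rate / 1e6:.1f}m")
```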

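III. Horizontal Pod autoscaling: a CPU-based HPA example

1. What is HPA

HPA is a built-in K8S resource whose full name is "HorizontalPodAutoscaler".

It scales Pods horizontally. During business peaks it automatically adds Pod replicas, and during off-peak periods it automatically removes them.

2. CPU-based HPA example

2.1 Create the Deployment and the HPA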

[root@master231 horizontalpodautoscalers]# cat 01-deploy-hpa.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-stress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: stress
  template:
    metadata:
      labels:
        app: stress
    spec:
      containers:
      - image: jasonyin2020/oldboyedu-linux-tools:v0.1
        name: oldboyedu-linux-tools
        args:
        - tail
        - -f
        - /etc/hosts
        resources:
          requests:
            cpu: 0.2
            memory: 300Mi
          limits:
            cpu: 0.5
            memory: 500Mi
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: stress-hpa
spec:
  maxReplicas: 5
  minReplicas: 2
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deploy-stress
  targetCPUUtilizationPercentage: 95

[root@master231 horizontalpodautoscalers]#

[root@master231 horizontalpodautoscalers]# kubectl apply -f 01-deploy-hpa.yaml

deployment.apps/deploy-stress created

horizontalpodautoscaler.autoscaling/stress-hpa created

[root@master231 horizontalpodautoscalers]#
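The HPA measures CPU utilization against the container's CPU request, not its limit.

- With requests.cpu 0.2 (200m) and a 95% target, the HPA aims for roughly 190m of average usage per Pod.
- Because the limit is 0.5 (500m), a fully busy Pod is throttled and can reach at most 500m / 200m = 250%.

Here is a small Python sketch of that arithmetic. The function and variable names are illustrative.

```python
def cpu_utilization_pct(usage_m: float, request_m: float) -> float:
    """HPA-style utilization: usage as a percentage of the CPU request."""
    if request_m <= 0:
        raise ValueError("a CPU utilization target needs a CPU request")
    return usage_m * 100 / request_m


REQUEST_M = 200   # requests.cpu: 0.2 -> 200 millicores
LIMIT_M = 500     # limits.cpu: 0.5 -> 500 millicores
TARGET_PCT = 95   # targetCPUUtilizationPercentage: 95

# Average usage per Pod at which the 95% target is exactly met.
target_usage_m = REQUEST_M * TARGET_PCT / 100
# A CPU-bound container is throttled at its limit, which caps utilization.
max_utilization_pct = cpu_utilization_pct(LIMIT_M, REQUEST_M)

if __name__ == "__main__":
    print(f"target ~{target_usage_m:.0f}m per Pod, ceiling {max_utilization_pct:.0f}%")
```

The 250% ceiling is consistent with the 249%/95% reading that appears later in the stress test.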

2.2 Verify

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide # First check: only 1 Pod replica so far

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 1/1 1 1 11s oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress <unknown>/95% 2 5 0 11s

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-rzgsm 1/1 Running 0 11s 10.100.140.121 worker233 <none> <none>

[root@master231 horizontalpodautoscalers]#

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide # A while later: the HPA has raised the replica count to 2 (minReplicas)

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 2/2 2 2 51s oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress 0%/95% 2 5 2 51s

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-f9rff 1/1 Running 0 36s 10.100.203.150 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-rzgsm 1/1 Running 0 51s 10.100.140.121 worker233 <none> <none>

[root@master231 horizontalpodautoscalers]#

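Bonus: the HPA can also be created imperatively. With -o yaml --dry-run=client, the command only prints the generated manifest instead of creating the object: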

[root@master231 horizontalpodautoscalers]# kubectl autoscale deploy deploy-stress --min=2 --max=5 --cpu-percent=95 -o yaml --dry-run=client

2.3 Stress test

[root@master231 ~]# kubectl exec deploy-stress-5d7c796c97-f9rff -- stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 10m

stress: info: [7] dispatching hogs: 8 cpu, 4 io, 2 vm, 0 hdd

2.4 Check the number of Pod replicas

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 3/3 3 3 4m3s oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress 105%/95% 2 5 2 4m3s

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-f9rff 1/1 Running 0 3m48s 10.100.203.150 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-rzgsm 1/1 Running 0 4m3s 10.100.140.121 worker233 <none> <none>

pod/deploy-stress-5d7c796c97-zxgp6 1/1 Running 0 3s 10.100.140.122 worker233 <none> <none>

[root@master231 horizontalpodautoscalers]#

2.5 Stress the other Pods as well

[root@master231 ~]# kubectl exec deploy-stress-5d7c796c97-rzgsm -- stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 10m

stress: info: [6] dispatching hogs: 8 cpu, 4 io, 2 vm, 0 hdd

[root@master231 ~]# kubectl exec deploy-stress-5d7c796c97-zxgp6 -- stress --cpu 8 --io 4 --vm 2 --vm-bytes 128M --timeout 10m

stress: info: [7] dispatching hogs: 8 cpu, 4 io, 2 vm, 0 hdd

2.6 The Deployment grows to at most 5 Pods (maxReplicas)

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 5/5 5 5 5m50s oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress 249%/95% 2 5 5 5m50s

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-dnlzj 1/1 Running 0 34s 10.100.203.180 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-f9rff 1/1 Running 0 5m35s 10.100.203.150 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-ld8s9 1/1 Running 0 19s 10.100.140.123 worker233 <none> <none>

pod/deploy-stress-5d7c796c97-rzgsm 1/1 Running 0 5m50s 10.100.140.121 worker233 <none> <none>

pod/deploy-stress-5d7c796c97-zxgp6 1/1 Running 0 110s 10.100.140.122 worker233 <none> <none>

[root@master231 horizontalpodautoscalers]#
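The replica counts above follow the scaling rule documented for the Kubernetes HPA. The basic formula is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). Scaling is skipped when the ratio is within a tolerance (0.1 by default), and the result is clamped to minReplicas/maxReplicas.

The Python sketch below applies that rule to the numbers in this transcript. It is a simplification: it ignores Pods that are not ready or have no metrics yet, and it ignores scale-down stabilization.

```python
import math


def desired_replicas(current: int, current_pct: float, target_pct: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale by the metric ratio, then clamp to [min, max]."""
    ratio = current_pct / target_pct
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: keep the replica count
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(2, 105, 95, 2, 5))  # 105%/95% with 2 Pods
    print(desired_replicas(5, 249, 95, 2, 5))  # 249%/95%: capped by maxReplicas
    print(desired_replicas(5, 0, 95, 2, 5))    # idle: floored by minReplicas
```

How the numbers work out:

- In 2.4, 105% against 95% gives a ratio of about 1.105, just outside the tolerance. That yields ceil(2 * 1.105) = 3, which matches the third Pod that appeared.
- In 2.6, 249% would ask for 14 replicas, but maxReplicas caps it at 5.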

2.7 After stopping the stress test

It takes about 10 minutes before the Deployment is automatically scaled back down to 2 Pods.

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 2/2 2 2 20m oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress 0%/95% 2 5 5 20m

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-dnlzj 1/1 Running 0 15m 10.100.203.180 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-f9rff 1/1 Running 0 20m 10.100.203.150 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-ld8s9 1/1 Terminating 0 14m 10.100.140.123 worker233 <none> <none>

pod/deploy-stress-5d7c796c97-rzgsm 1/1 Terminating 0 20m 10.100.140.121 worker233 <none> <none>

pod/deploy-stress-5d7c796c97-zxgp6 1/1 Terminating 0 16m 10.100.140.122 worker233 <none> <none>

[root@master231 horizontalpodautoscalers]#

[root@master231 horizontalpodautoscalers]#

[root@master231 horizontalpodautoscalers]# kubectl get deploy,hpa,po -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-stress 2/2 2 2 21m oldboyedu-linux-tools harbor250.oldboyedu.com/oldboyedu-casedemo/oldboyedu-linux-tools:v0.1 app=stress

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/stress-hpa Deployment/deploy-stress 0%/95% 2 5 2 21m

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-stress-5d7c796c97-dnlzj 1/1 Running 0 16m 10.100.203.180 worker232 <none> <none>

pod/deploy-stress-5d7c796c97-f9rff 1/1 Running 0 21m 10.100.203.150 worker232 <none> <none>

[root@master231 horizontalpodautoscalers]#
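A delay before scaling in is expected. One contributor is the HPA's scale-down stabilization window. By default (kube-controller-manager flag --horizontal-pod-autoscaler-downscale-stabilization, default 5m0s), the controller remembers the recommendations from the last 5 minutes and only scales down to the highest of them. This way, a short dip in load does not remove Pods.

The Python below is a simplified model of that rule. The class name is hypothetical, and the model ignores the other HPA behaviors (tolerance, Pod readiness, scaling policies).

```python
from collections import deque
from typing import Deque, Tuple


class DownscaleStabilizer:
    """Simplified model of HPA scale-down stabilization (hypothetical helper)."""

    def __init__(self, window_seconds: float = 300.0) -> None:
        self.window_seconds = window_seconds  # 300 s mirrors the 5m0s default
        self.history: Deque[Tuple[float, int]] = deque()

    def stabilize(self, now: float, recommendation: int) -> int:
        """Record a recommendation and return the replica count to apply."""
        self.history.append((now, recommendation))
        # Forget recommendations that fell out of the window.
        while self.history[0][0] < now - self.window_seconds:
            self.history.popleft()
        # Taking the maximum delays scale-down but lets a higher recommendation win at once.
        return max(r for _, r in self.history)


if __name__ == "__main__":
    s = DownscaleStabilizer()
    # Load ends at t=0 (5 replicas recommended); afterwards only 2 are needed.
    for t, rec in [(0, 5), (60, 2), (240, 2), (299, 2), (301, 2)]:
        print(t, s.stabilize(t, rec))
```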

四.水平Pod伸缩基于内存的HPA实战案例

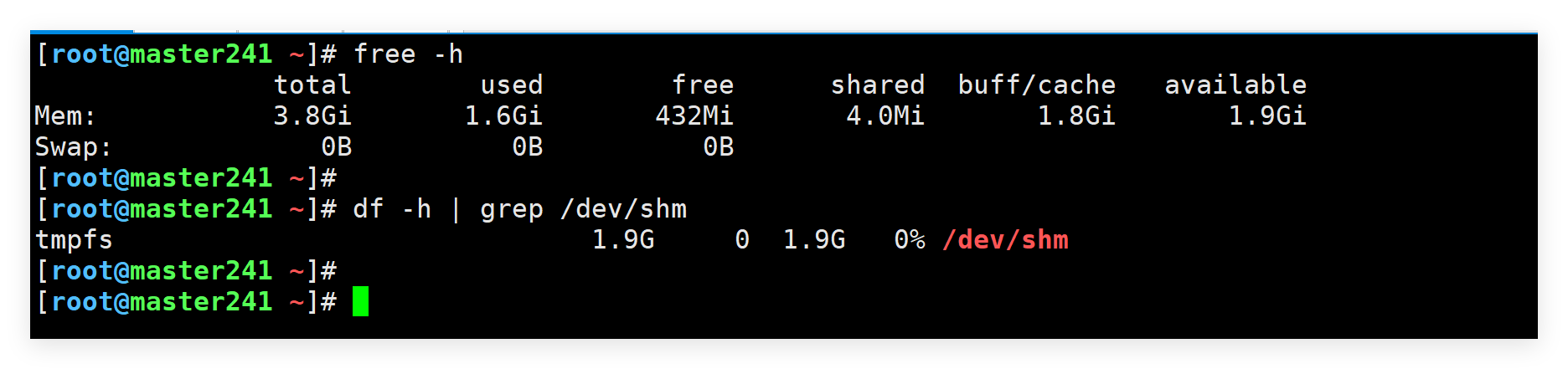

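1 tmpfs overview

As the figure above shows, tmpfs is a temporary file system that resides in memory. The "/dev/shm" directory is therefore not on disk but in RAM, and its contents are lost when the machine loses power.

Because it lives in memory, reads and writes are very fast. On Linux, a tmpfs is limited by default to half of the physical memory.

This property can be used to improve server performance. Data that needs fast reads and writes but can afford to be lost can be kept under /dev/shm for faster access.

Next, we use the dd command to write data into a tmpfs file system:

1. Create a 100M tmpfs file system under /tmp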

[root@master241 ~]# mkdir /tmp/yinzhengjie

[root@master241 ~]# mount -t tmpfs -o size=100M tmpfs /tmp/yinzhengjie/

[root@master241 ~]# df -h | grep yinzhengjie

tmpfs 100M 0 100M 0% /tmp/yinzhengjie

[root@master241 ~]#

2. Writing 200M of data with dd fails: only 100M fits before the tmpfs is full

[root@master241 ~]# dd if=/dev/zero of=/tmp/yinzhengjie/bigfile.log bs=1M count=200

dd: error writing '/tmp/yinzhengjie/bigfile.log': No space left on device

101+0 records in

100+0 records out

104857600 bytes (105 MB, 100 MiB) copied, 0.0775754 s, 1.4 GB/s

[root@master241 ~]#

[root@master241 ~]# ll -h /tmp/yinzhengjie/bigfile.log

-rw-r--r-- 1 root root 100M Sep 24 14:52 /tmp/yinzhengjie/bigfile.log

[root@master241 ~]#

2 Memory-based HPA example

2.1 Prepare the test environment: Deployment, ConfigMap and HPA

[root@master241 ~]# cat 01-deploy-cm-memory-case.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-memory
data:
  start.sh: |
    #!/bin/sh
    mkdir /tmp/yinzhengjie
    mount -t tmpfs -o size=90M tmpfs /tmp/yinzhengjie/
    dd if=/dev/zero of=/tmp/yinzhengjie/bigfile.log
    sleep 60
    rm /tmp/yinzhengjie/bigfile.log
    umount /tmp/yinzhengjie
    rm -rf /tmp/yinzhengjie
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-memory
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: xiuxian
  template:
    metadata:
      labels:
        apps: xiuxian
    spec:
      volumes:
      - name: data
        configMap:
          name: cm-memory
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /data
        resources:
          requests:
            memory: 100Mi
            cpu: 100m
        securityContext:
          privileged: true
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-memory
spec:
  minReplicas: 1
  maxReplicas: 5
  metrics:
  - type: Resource
    resource:
      name: memory
      target:
        type: Utilization
        averageUtilization: 60
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deploy-xiuxian-memory

[root@master241 ~]#

[root@master241 ~]#

[root@master241 ~]# kubectl apply -f 01-deploy-cm-memory-case.yaml

configmap/cm-memory created

deployment.apps/deploy-xiuxian-memory created

horizontalpodautoscaler.autoscaling/hpa-memory created

[root@master241 ~]#
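How the ConfigMap script drives memory usage:

- The script runs dd without bs= or count=, so dd writes 512-byte blocks (its default) until the 90M tmpfs is full.
- tmpfs size suffixes are binary, so 90M means 90 MiB.
- Pages written to a tmpfs inside the container are charged to the container's memory cgroup. That is why the write shows up as Pod memory usage.

Below is a quick arithmetic check in Python. It uses the record count reported by dd in the exec session further down and the 100Mi request from the manifest. The names are illustrative.

```python
MIB = 1024 * 1024


def dd_bytes(records_out: int, block_size: int = 512) -> int:
    """Bytes written by dd: full records times the block size (512 if bs= is omitted)."""
    return records_out * block_size


written = dd_bytes(184320)  # "184320+0 records out" from the exec session
tmpfs_size = 90 * MIB       # mount -t tmpfs -o size=90M
request = 100 * MIB         # resources.requests.memory: 100Mi

if __name__ == "__main__":
    print(f"{written} bytes = {written // MIB} MiB, "
          f"{written * 100 // request}% of the memory request")
```

The file alone therefore accounts for 90% of the request, before the container's own baseline usage is added.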

2.2 Stress test

[root@master241 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-xiuxian-memory-5df7549d99-v2pkh 1/1 Running 0 95s 10.100.207.38 worker243 <none> <none>

[root@master241 ~]#

[root@master241 ~]# kubectl exec -it deploy-xiuxian-memory-5df7549d99-v2pkh -- sh

/ # ls -l /data/

total 0

lrwxrwxrwx 1 root root 15 Sep 24 08:02 start.sh -> ..data/start.sh

/ #

/ # sh /data/start.sh # Takes about 1 minute to finish because the script sleeps for 60 seconds.

dd: error writing '/tmp/yinzhengjie/bigfile.log': No space left on device

184321+0 records in

184320+0 records out

/ #

/ #

[root@master241 ~]#

[root@master241 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-xiuxian-memory-5df7549d99-rjr7f 1/1 Running 0 84s 10.100.165.171 worker242 <none> <none>

deploy-xiuxian-memory-5df7549d99-v2pkh 1/1 Running 0 3m34s 10.100.207.38 worker243 <none> <none>

[root@master241 ~]#

[root@master241 ~]# kubectl get pods -o wide # After roughly 5 minutes the Deployment is scaled back down automatically

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-xiuxian-memory-5df7549d99-v2pkh 1/1 Running 0 8m59s 10.100.207.38 worker243 <none> <none>

[root@master241 ~]#

2.3 Watch the HPA in real time alongside the Pods

[root@master241 ~]# kubectl get deploy,rs,po,hpa -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/deploy-xiuxian-memory 1/1 1 1 80s c1 registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 apps=xiuxian

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/deploy-xiuxian-memory-5df7549d99 1 1 1 80s c1 registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 apps=xiuxian,pod-template-hash=5df7549d99

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/deploy-xiuxian-memory-5df7549d99-v2pkh 1/1 Running 0 80s 10.100.207.38 worker243 <none> <none>

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 1 40s

[root@master241 ~]#

[root@master241 ~]#

[root@master241 ~]# kubectl get hpa -w

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 1 43s

hpa-memory Deployment/deploy-xiuxian-memory memory: 93%/60% 1 5 1 90s

hpa-memory Deployment/deploy-xiuxian-memory memory: 93%/60% 1 5 2 105s

hpa-memory Deployment/deploy-xiuxian-memory memory: 48%/60% 1 5 2 2m15s

hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 2 2m45s

hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 2 3m16s

hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 2 7m31s

hpa-memory Deployment/deploy-xiuxian-memory memory: 2%/60% 1 5 1 7m46s
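The hpa -w output can be checked against the proportional rule documented for the Kubernetes HPA: desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization), clamped to minReplicas/maxReplicas.

In the Python sketch below, the per-Pod percentages are taken from the output above. The ~2% idle baseline for the new Pod is an assumption. Averaging assumes both Pods have the same 100Mi request.

```python
import math

TARGET_PCT = 60  # averageUtilization: 60 in hpa-memory


def average_utilization(per_pod_pct):
    """Mean utilization across Pods; assumes every Pod has the same memory request."""
    return sum(per_pod_pct) / len(per_pod_pct)


# One Pod with its tmpfs full reports ~93% of its 100Mi request: ratio 1.55.
scale_out_to = math.ceil(1 * 93 / TARGET_PCT)
# After scale-out the new Pod idles at ~2% (assumed baseline).
avg_after = average_utilization([93, 2])
# At the reported 48% the rule still asks for 2 replicas.
keep = math.ceil(2 * 48 / TARGET_PCT)
# Script finished and file removed: both Pods back at ~2%.
scale_in_to = math.ceil(2 * 2 / TARGET_PCT)

if __name__ == "__main__":
    print(scale_out_to, avg_after, keep, scale_in_to)
```

Checking the results against the output:

- The average of 47.5% is consistent with the 48% shown once the second Pod was running.
- The final drop to 1 replica comes about 5 minutes after usage fell to 2% (2m45s to 7m46s), which fits the HPA's default 5-minute scale-down stabilization window.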

V. Troubleshooting example

[root@master231 ~]# kubectl get pods -o wide -n kube-system -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

metrics-server-6f5b66d8f9-fvbqm 0/1 Running 0 15m 10.100.203.151 worker232 <none> <none>

metrics-server-6f5b66d8f9-n2zxs 0/1 Running 0 15m 10.100.140.77 worker233 <none> <none>

[root@master231 ~]#

[root@master231 ~]# kubectl -n kube-system logs metrics-server-6f5b66d8f9-fvbqm

...

E0414 09:30:03.341444 1 scraper.go:149] "Failed to scrape node" err="Get \"https://10.0.0.233:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 10.0.0.233 because it doesn't contain any IP SANs" node="worker233"

E0414 09:30:03.352008 1 scraper.go:149] "Failed to scrape node" err="Get \"https://10.0.0.232:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 10.0.0.232 because it doesn't contain any IP SANs" node="worker232"

E0414 09:30:03.354140 1 scraper.go:149] "Failed to scrape node" err="Get \"https://10.0.0.231:10250/metrics/resource\": tls: failed to verify certificate: x509: cannot validate certificate for 10.0.0.231 because it doesn't contain any IP SANs" node="master231"

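Analysis:

Certificate verification fails, so metrics-server cannot scrape data from the kubelets and its Pods stay at 0/1 READY. The log shows the cause: the serving certificate presented by each kubelet contains no IP SANs, while metrics-server connects to the nodes by IP address.

Solution:

Add --kubelet-insecure-tls to the container args so that metrics-server does not verify the CA of the kubelet serving certificates. The flag's own help text describes it as intended for testing purposes only.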

[root@master231 ~]# vim high-availability-1.21+.yaml

...

114 apiVersion: apps/v1

115 kind: Deployment

116 metadata:

...

144 - args:

145 - --kubelet-insecure-tls # Do not verify the CA of the serving certificates presented by the kubelets. Without this flag, scraping fails with an x509 error.

...

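This article is from cnblogs (博客园). Author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/19003670. Personal WeChat: "JasonYin2020" (when adding, please state where you found it and your purpose; paid service).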

浙公网安备 33010602011771号
