Calico Route Reflector Mode and NetworkPolicy

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: original work; reproduction is not permitted, and violations will be pursued legally.

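I. BGP Overview

1. Calico propagates routing information between nodes via BGP

BGP is the standard routing protocol that routers use to exchange routing information. It operates in two modes: iBGP and eBGP.

- iBGP (Internal BGP)

Propagates routes between BGP routers within the same AS; route selection relies on recursive next-hop resolution.

- eBGP (External BGP)

Propagates BGP routes between different ASes; path selection works hop by hop.

Every router has one or more BGP peers.

calico-node can also use a physical router as a BGP peer.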
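The distinction above comes down to one rule. A session is iBGP when both peers share an AS number and eBGP otherwise. The toy Python model below encodes only that rule and is not Calico code. The ToR switch name `tor-switch-1` and its ASN 65001 are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BGPSpeaker:
    """A BGP speaker (a Calico node or a router) and its AS number."""
    name: str
    asn: int


def session_type(a: BGPSpeaker, b: BGPSpeaker) -> str:
    """Return "iBGP" if both speakers are in the same AS, otherwise "eBGP"."""
    if a.name == b.name:
        raise ValueError("a speaker does not peer with itself")
    return "iBGP" if a.asn == b.asn else "eBGP"


if __name__ == "__main__":
    master = BGPSpeaker("master231", 64512)   # Calico's default ASN
    worker = BGPSpeaker("worker232", 64512)
    tor = BGPSpeaker("tor-switch-1", 65001)   # hypothetical ToR switch
    print(session_type(master, worker))  # iBGP: same AS
    print(session_type(master, tor))     # eBGP: different AS
```

Calico nodes share AS 64512 by default, so the sessions among them are iBGP. A ToR design with a switch in its own AS would make each node-to-switch session eBGP.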

2. Common BGP topologies

Full-mesh:

- 1. When BGP is enabled, Calico by default places all nodes in a single AS (ASN 64512) and connects them with a full mesh of iBGP sessions:

[root@master231 ~]# calicoctl get nodes -o wide
NAME        ASN       IPV4            IPV6
master231   (64512)   10.0.0.231/24
worker232   (64512)   10.0.0.232/24
worker233   (64512)   10.0.0.233/24
[root@master231 ~]#

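- 2. This mode suits smaller clusters (roughly up to 100 nodes);

Route Reflectors:

- 1. In large iBGP deployments, BGP route reflectors significantly reduce the number of BGP peers each node has to maintain;

- 2. A few nodes can be chosen as BGP RRs, and the RRs form a full mesh among themselves;

- 3. All other nodes only need to peer with these RRs;

ToR (Top of Rack):

- 1. Nodes peer directly with the L3 switch at the top of their rack, and the default full-mesh mode is disabled;

- 2. Calico also fully supports eBGP, so users can build whatever network topology they need;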

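II. Configuring Route Reflector Mode

1. Route reflector overview

In Calico clusters larger than about 100 nodes, route reflectors should normally be configured to make iBGP more efficient.

To configure route reflectors:

- 1. Disable the default full-mesh topology;

- 2. Add a dedicated label to the nodes chosen as RRs. Per the Calico documentation, a node only acts as a reflector once its Calico Node resource also has spec.bgp.routeReflectorClusterID set (e.g. 244.0.0.1). The manifests in this article only add the label;

- 3. Configure the cluster nodes to establish BGP sessions with the RR nodes;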
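Step 3 is done with a BGPPeer that has a nodeSelector and a peerSelector. The toy model below is our reading of those selector semantics, not Calico's code. Every node matched by nodeSelector opens a session to every *other* node matched by peerSelector, and each session is shared by both ends. The Python functions `select_all` and `label_equals` are hypothetical stand-ins for Calico's `all()` and `key == "value"` selectors. The model covers only which sessions exist, not whether routes are actually reflected, which depends on the RR configuration.

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, List, Set

Labels = Dict[str, str]
Selector = Callable[[Labels], bool]
Session = FrozenSet[str]


def select_all(_labels: Labels) -> bool:
    """Stand-in for the Calico selector all()."""
    return True


def label_equals(key: str, value: str) -> Selector:
    """Stand-in for a Calico selector such as key == "value"."""
    return lambda labels: labels.get(key) == value


def bgp_sessions(nodes: Dict[str, Labels], node_selector: Selector,
                 peer_selector: Selector, full_mesh: bool = False) -> Set[Session]:
    """Undirected BGP sessions produced by one BGPPeer (plus the full mesh, if enabled)."""
    sessions: Set[Session] = set()
    if full_mesh:
        sessions.update(frozenset(pair) for pair in combinations(nodes, 2))
    for name, labels in nodes.items():
        if not node_selector(labels):
            continue
        for peer, peer_labels in nodes.items():
            # A node never peers with itself, even if it matches peerSelector.
            if peer != name and peer_selector(peer_labels):
                sessions.add(frozenset((name, peer)))
    return sessions


def peers_of(node: str, sessions: Set[Session]) -> List[str]:
    """Peers a node would list under this model."""
    return sorted(other for s in sessions if node in s for other in s if other != node)


if __name__ == "__main__":
    rr = label_equals("yinzhengjie-k8s-route-reflector", "true")
    nodes = {
        "master231": {},
        "worker232": {},
        "worker233": {"yinzhengjie-k8s-route-reflector": "true"},
    }
    sessions = bgp_sessions(nodes, select_all, rr)
    for n in nodes:
        print(n, peers_of(n, sessions))
```

With worker233 as the only labelled node, the model yields the same peer lists that the later `calicoctl node status` output shows: master231 and worker232 each peer only with worker233, and worker233 peers with both.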

2. Configuring a single route reflector

2.1 Check the PEER TYPE on each node

1. Check node status (the PEER ADDRESS and PEER TYPE columns are the ones to watch)

1.1 Check master231

[root@master231 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE         | STATE | SINCE    | INFO        |
+--------------+-------------------+-------+----------+-------------+
| 10.0.0.232   | node-to-node mesh | up    | 00:59:12 | Established |
| 10.0.0.233   | node-to-node mesh | up    | 00:59:35 | Established |
+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@master231 ~]#

1.2 Check worker232

[root@worker232 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE         | STATE | SINCE    | INFO        |
+--------------+-------------------+-------+----------+-------------+
| 10.0.0.231   | node-to-node mesh | up    | 01:28:36 | Established |
| 10.0.0.233   | node-to-node mesh | up    | 01:28:35 | Established |
+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker232 ~]#

1.3 Check worker233

[root@worker233 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE         | STATE | SINCE    | INFO        |
+--------------+-------------------+-------+----------+-------------+
| 10.0.0.231   | node-to-node mesh | up    | 01:28:01 | Established |
| 10.0.0.232   | node-to-node mesh | up    | 01:28:35 | Established |
+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker233 ~]#

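2. What the output shows

A PEER TYPE of "node-to-node mesh" means every node peers directly with every other node and learns their routes. Each node therefore maintains N-1 BGP sessions, and the cluster as a whole maintains N*(N-1)/2 (N*(N-1) when both ends of each session are counted). With many nodes, this load becomes heavy.

The remedy is BGP route reflection, with the reflector role made redundant. In a multi-master cluster, the steps are:

- 1. Assign the route reflector role;

- 2. Have the other nodes peer with the reflectors;

- 3. Disable the default full mesh. The author notes this can be skipped on recent Calico versions but must be done manually on older ones; the manifests below set nodeToNodeMeshEnabled: false explicitly;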
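The session arithmetic above can be checked with a few lines of Python. This sketch assumes the RRs are fully meshed with each other and that every client peers with every RR, which is what a BGPPeer with `nodeSelector: all()` produces.

```python
def full_mesh_sessions(n: int) -> int:
    """Full mesh: every node peers with the other n - 1; each session joins two nodes."""
    return n * (n - 1) // 2


def route_reflector_sessions(n: int, r: int) -> int:
    """r route reflectors fully meshed with each other, plus every one of the
    n - r client nodes peering with every reflector."""
    if not 1 <= r <= n:
        raise ValueError("need 1 <= r <= n")
    return r * (r - 1) // 2 + (n - r) * r


if __name__ == "__main__":
    for n, r in [(3, 1), (3, 2), (100, 2), (500, 3)]:
        print(f"n={n:>3} r={r}: full mesh={full_mesh_sessions(n):>6}, "
              f"with RRs={route_reflector_sessions(n, r):>4}")
```

For the 3-node lab the difference is negligible: 3 sessions in full mesh, 2 with one RR, 3 with two RRs. At 100 nodes with 2 RRs, however, the count drops from 4950 to 197, and each client keeps r sessions instead of n-1.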

2.2 BGP route reflector in practice

1. Write the manifest

[root@master231 ~]# cat calico-bgp-reflector-single.yaml
apiVersion: v1
kind: Node
metadata:
  name: worker233
  labels:
    yinzhengjie-k8s-route-reflector: "true"
spec:
  podCIDR: 10.100.2.0/24
  podCIDRs:
  - 10.100.2.0/24
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: yinzhengjie-bgppeer-rr
spec:
  # Node label selector: the nodes this peering configuration applies to
  nodeSelector: all()
  # Selector for the reflector nodes; must match the label set on those nodes
  peerSelector: yinzhengjie-k8s-route-reflector=="true"
---
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  # Use "default": the cluster-wide settings, including the full-mesh switch, live here
  name: default
spec:
  # Log level written to stdout; defaults to "Info" if unset
  logSeverityScreen: Info
  # Whether the node-to-node full mesh is enabled; defaults to true
  nodeToNodeMeshEnabled: false
  # Default AS number for all nodes (64512 if unset); nodes peering via the reflectors share it
  asNumber: 65200
  # Service CIDR that BGP should advertise
  serviceClusterIPs:
  - cidr: 10.200.0.0/16
  # BGP listen port (179 by default)
  listenPort: 179
[root@master231 ~]#

2. Apply the configuration

[root@master231 ~]# kubectl apply -f calico-bgp-reflector-single.yaml

[root@master231 ~]#

[root@master231 ~]# kubectl get nodes -l yinzhengjie-k8s-route-reflector --show-labels

NAME STATUS ROLES AGE VERSION LABELS

worker233 Ready <none> 82d v1.23.17 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker233,kubernetes.io/os=linux,yinzhengjie-k8s-route-reflector=true

[root@master231 ~]#

2.3 Check the PEER TYPE on each node

1. PEER ADDRESS on master231

[root@master231 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.233.port.179 | node specific | up    | 01:08:00 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@master231 ~]#

2. PEER ADDRESS on worker232

[root@worker232 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.233.port.179 | node specific | up    | 01:08:01 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker232 ~]#

3. PEER ADDRESS on worker233

[root@worker233 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.231.port.179 | node specific | up    | 01:08:00 | Established |
| 10.0.0.232.port.179 | node specific | up    | 01:08:00 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker233 ~]#

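3. Configuring redundant route reflectors

3.1 Write the manifest

1. Write the manifest (the only change is an extra Node entry that labels master231 as a second reflector)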

[root@master231 ~]# cat calico-bgp-reflector-multiple.yaml
apiVersion: v1
kind: Node
metadata:
  labels:
    yinzhengjie-k8s-route-reflector: "true"
  name: master231
spec:
  podCIDR: 10.100.0.0/24
  podCIDRs:
  - 10.100.0.0/24
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: v1
kind: Node
metadata:
  name: worker233
  labels:
    yinzhengjie-k8s-route-reflector: "true"
spec:
  podCIDR: 10.100.2.0/24
  podCIDRs:
  - 10.100.2.0/24
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: yinzhengjie-bgppeer-rr
spec:
  nodeSelector: all()
  peerSelector: yinzhengjie-k8s-route-reflector=="true"
---
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: false
  asNumber: 65200
  serviceClusterIPs:
  - cidr: 10.200.0.0/16
  listenPort: 179
[root@master231 ~]#

2. Apply the configuration

[root@master231 ~]# kubectl apply -f calico-bgp-reflector-multiple.yaml

3.2 Verification

1. PEER ADDRESS on master231

[root@master231 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.233.port.179 | node specific | up    | 01:08:01 | Established |
| 10.0.0.232.port.179 | node specific | up    | 01:19:35 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@master231 ~]#

2. PEER ADDRESS on worker232

[root@worker232 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.233.port.179 | node specific | up    | 01:08:01 | Established |
| 10.0.0.231.port.179 | node specific | up    | 01:19:34 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker232 ~]#

3. PEER ADDRESS on worker233

[root@worker233 ~]# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------------+---------------+-------+----------+-------------+
| PEER ADDRESS        | PEER TYPE     | STATE | SINCE    | INFO        |
+---------------------+---------------+-------+----------+-------------+
| 10.0.0.231.port.179 | node specific | up    | 01:08:00 | Established |
| 10.0.0.232.port.179 | node specific | up    | 01:08:00 | Established |
+---------------------+---------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.

[root@worker233 ~]#

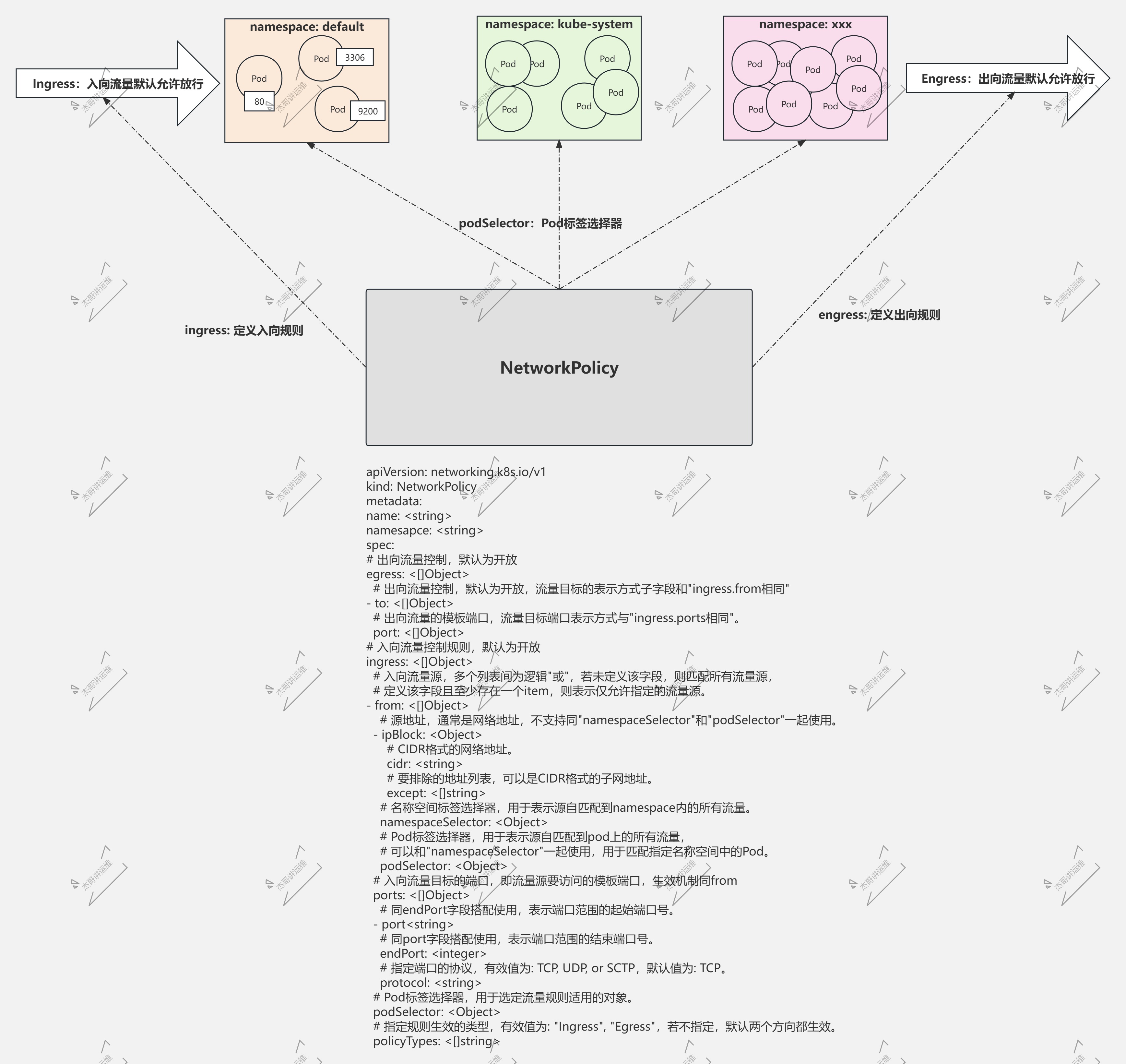
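Why the second reflector matters: the toy reachability check below takes the sessions from the single-RR and two-RR outputs above and asks whether every pair of live nodes can still exchange routes when worker233 goes down. It is a deliberate simplification, not BIRD's behaviour. A route counts as delivered over a direct session, or after one reflection by a live RR that has sessions with both ends. It also assumes the labelled nodes really act as reflectors (routeReflectorClusterID set).

```python
from typing import FrozenSet, Iterable, Set

Session = FrozenSet[str]


def session(a: str, b: str) -> Session:
    return frozenset((a, b))


def route_reaches(src: str, dst: str, sessions: Set[Session],
                  reflectors: Set[str], alive: Set[str]) -> bool:
    """Simplified: direct session, or one reflection by a live RR peered with both."""
    if src not in alive or dst not in alive:
        return False
    if session(src, dst) in sessions:
        return True
    return any(rr in alive and session(src, rr) in sessions and session(rr, dst) in sessions
               for rr in reflectors - {src, dst})


def all_routes_reach(nodes: Iterable[str], sessions: Set[Session],
                     reflectors: Set[str], alive: Set[str]) -> bool:
    live = [n for n in nodes if n in alive]
    return all(route_reaches(a, b, sessions, reflectors, alive)
               for a in live for b in live if a != b)


NODES = ["master231", "worker232", "worker233"]
# Sessions as listed by calicoctl node status in sections 2.3 and 3.2.
SINGLE_RR = {session("master231", "worker233"), session("worker232", "worker233")}
MULTI_RR = SINGLE_RR | {session("master231", "worker232")}

if __name__ == "__main__":
    without_233 = {"master231", "worker232"}
    print("single RR, worker233 down:",
          all_routes_reach(NODES, SINGLE_RR, {"worker233"}, without_233))
    print("two RRs,   worker233 down:",
          all_routes_reach(NODES, MULTI_RR, {"master231", "worker233"}, without_233))
```

With a single reflector, losing worker233 leaves master231 and worker232 with no path for routes. With master231 as a second reflector, they still share a direct session.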

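III. NetworkPolicy

1. NetworkPolicy overview

What a NetworkPolicy is:

- 1. A standard Kubernetes API resource type;

- 2. The network plugin translates it into rules on each node (for example, iptables filter-table rules) that define which Pods may communicate;

- 3. It applies to TCP, UDP and SCTP, controlling traffic at the IP address and port level;

What a NetworkPolicy does:

- 1. For a group of Pods, it defines rules for ingress and/or egress traffic exchanged with peer entities;

- 2. A peer can be described in any of the following ways:

- 2.1 A group of Pods, usually selected with a label selector;

- 2.2 One or more namespaces;

- 2.3 An IP address block (traffic between a Pod and the node it runs on is always allowed);

- 3. Enforcement is up to the network plugin: a NetworkPolicy has no effect unless the plugin implements it;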
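Selector semantics commonly cause confusion here. A namespaceSelector and a podSelector placed in the *same* from/to list item are ANDed, while separate list items are ORed. A podSelector with no namespaceSelector only looks at the policy's own namespace. The sketch below models those rules with hypothetical Python types (`PeerItem`, `SourcePod`); it is not the Kubernetes API. The namespace labels are taken from the demo environment used later in this article.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Labels = Dict[str, str]


def match_labels(selector: Labels, labels: Labels) -> bool:
    """matchLabels semantics: every key/value must be present; {} selects everything."""
    return all(labels.get(k) == v for k, v in selector.items())


@dataclass
class PeerItem:
    """One element of a from/to list (hypothetical model, not the real API type)."""
    pod_selector: Optional[Labels] = None
    namespace_selector: Optional[Labels] = None


@dataclass
class SourcePod:
    namespace: str
    labels: Labels


def item_matches(item: PeerItem, src: SourcePod, policy_ns: str,
                 ns_labels: Dict[str, Labels]) -> bool:
    if item.namespace_selector is None:
        # No namespaceSelector: only Pods in the policy's own namespace qualify.
        ns_ok = src.namespace == policy_ns
    else:
        ns_ok = match_labels(item.namespace_selector, ns_labels.get(src.namespace, {}))
    # No podSelector: every Pod in the qualifying namespaces matches.
    pod_ok = item.pod_selector is None or match_labels(item.pod_selector, src.labels)
    return ns_ok and pod_ok          # selectors inside ONE item are ANDed


def from_matches(items: List[PeerItem], src: SourcePod, policy_ns: str,
                 ns_labels: Dict[str, Labels]) -> bool:
    return any(item_matches(i, src, policy_ns, ns_labels) for i in items)  # items are ORed


if __name__ == "__main__":
    ns_labels = {"oldboyedu": {"class": "linux93"}, "jasonyin": {"school": "oldboyedu"}}
    v2 = SourcePod("jasonyin", {"apps": "v2"})
    one_item = [PeerItem(namespace_selector={"school": "oldboyedu"}, pod_selector={"apps": "v1"})]
    two_items = [PeerItem(namespace_selector={"school": "oldboyedu"}),
                 PeerItem(pod_selector={"apps": "v1"})]
    print(from_matches(one_item, v2, "oldboyedu", ns_labels))   # False: AND fails on apps
    print(from_matches(two_items, v2, "oldboyedu", ns_labels))  # True: first item matches
```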

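How NetworkPolicies take effect:

- 1. By default, all ingress and egress traffic of a Pod is allowed;

- 2. Within one direction, the rules of all policies that apply to the same Pods are additive:

- 2.1 For a group of Pods, the effective ingress rules are the union of all Ingress policies that select them;

- 2.2 Likewise, the effective egress rules are the union of all Egress policies that select them;

- 3. For a connection from a source Pod to a destination Pod to succeed, both the egress policy of the source and the ingress policy of the destination must allow it;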
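The three rules above can be captured in a small model. Each direction is open until some policy of that type selects the Pod. Selected Pods get the union of those policies' rules. A connection needs the source's egress *and* the destination's ingress to agree. This is a hypothetical simplification in which Pods and peers are plain names. It ignores ports, and it ignores that reply traffic of an allowed connection is always permitted.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Policy:
    """Simplified policy: Pods and peers are plain names (hypothetical model).
    ingress_from / egress_to of None means the policy lacks that policyType;
    an empty frozenset means the type is set but nothing is allowed."""
    selects: FrozenSet[str]
    ingress_from: Optional[FrozenSet[str]] = None
    egress_to: Optional[FrozenSet[str]] = None


def ingress_allowed(dst: str, src: str, policies: List[Policy]) -> bool:
    rules = [p.ingress_from for p in policies if dst in p.selects and p.ingress_from is not None]
    if not rules:
        return True                                   # no Ingress policy selects dst
    return any(src in allowed for allowed in rules)   # union of all Ingress policies


def egress_allowed(src: str, dst: str, policies: List[Policy]) -> bool:
    rules = [p.egress_to for p in policies if src in p.selects and p.egress_to is not None]
    if not rules:
        return True                                   # no Egress policy selects src
    return any(dst in allowed for allowed in rules)   # union of all Egress policies


def connection_allowed(src: str, dst: str, policies: List[Policy]) -> bool:
    """src -> dst needs BOTH src's egress and dst's ingress to allow it."""
    return egress_allowed(src, dst, policies) and ingress_allowed(dst, src, policies)


if __name__ == "__main__":
    only_v3 = Policy(frozenset({"v1"}), ingress_from=frozenset({"v3"}))
    only_v2 = Policy(frozenset({"v1"}), ingress_from=frozenset({"v2"}))
    v3_no_egress = Policy(frozenset({"v3"}), egress_to=frozenset())
    print(connection_allowed("v2", "v1", [only_v3]))                # False
    print(connection_allowed("v2", "v1", [only_v3, only_v2]))       # True: union
    print(connection_allowed("v3", "v1", [only_v3, v3_no_egress]))  # False: v3 egress denies
```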

Further reading:

https://kubernetes.io/zh-cn/docs/concepts/services-networking/network-policies/

https://kubernetes.io/zh-cn/docs/reference/kubernetes-api/policy-resources/network-policy-v1/

2. NetworkPolicy resource specification

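apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: <string>
  namespace: <string>
spec:
  # Egress rules; outbound traffic is open unless an Egress policy selects the Pod
  egress: <[]Object>
  # Destinations; same sub-fields as "ingress.from"
  - to: <[]Object>
    # Destination ports; same format as "ingress.ports"
    ports: <[]Object>
  # Ingress rules; inbound traffic is open unless an Ingress policy selects the Pod
  ingress: <[]Object>
  # Traffic sources. List items are ORed. If the field is omitted or empty, all sources match;
  # if it contains at least one item, only the listed sources are allowed.
  - from: <[]Object>
    # Source IP block; cannot be combined with "namespaceSelector" or "podSelector" in the same item
    - ipBlock: <Object>
        # Network address in CIDR notation
        cidr: <string>
        # Addresses to exclude, as CIDR subnets
        except: <[]string>
      # Namespace label selector: matches all traffic from Pods in the selected namespaces
      namespaceSelector: <Object>
      # Pod label selector: matches traffic from the selected Pods. In the same item as
      # "namespaceSelector" it selects those Pods within the matching namespaces;
      # on its own it only selects Pods in the policy's own namespace.
      podSelector: <Object>
    # Destination ports on the selected Pods that sources may connect to; matched like "from"
    ports: <[]Object>
    # Port number or name; combined with endPort, the first port of a range
    - port: <integer|string>
      # Last port of the range (inclusive); requires a numeric "port"
      endPort: <integer>
      # Protocol: TCP, UDP, or SCTP; defaults to TCP
      protocol: <string>
  # Pod label selector choosing the Pods this policy applies to; {} selects all Pods in the namespace
  podSelector: <Object>
  # Directions the policy applies to: "Ingress", "Egress", or both. If omitted, Ingress is
  # always included and Egress is included only when the policy has egress rules.
  policyTypes: <[]string>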
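Two defaults in this spec are easy to get wrong: how policyTypes is filled in when omitted, and how `ports` entries match. The sketch below restates the documented rules as Python functions over plain dicts. It is an illustration, not the API server's code, and named ports are not modelled.

```python
from typing import Any, Dict, List, Optional

Spec = Dict[str, Any]


def effective_policy_types(spec: Spec) -> List[str]:
    """policyTypes defaulting: Ingress always, Egress only if the policy has egress rules."""
    if spec.get("policyTypes"):
        return list(spec["policyTypes"])
    types = ["Ingress"]
    if spec.get("egress"):
        types.append("Egress")
    return types


def port_matches(rule: Dict[str, Any], protocol: str, port: int) -> bool:
    """One ports entry; numeric ports only (named ports are not modelled)."""
    if rule.get("protocol", "TCP") != protocol:
        return False
    start = rule.get("port")
    if start is None:
        return True                     # no port: every port of this protocol
    end = rule.get("endPort", start)    # endPort is inclusive
    return start <= port <= end


def ports_match(ports: Optional[List[Dict[str, Any]]], protocol: str, port: int) -> bool:
    if not ports:
        return True                     # ports omitted or empty: all ports match
    return any(port_matches(r, protocol, port) for r in ports)


if __name__ == "__main__":
    print(effective_policy_types({"ingress": [{"from": []}]}))
    print(effective_policy_types({"egress": [{"to": []}]}))
    print(ports_match([{"protocol": "TCP", "port": 80}], "TCP", 80))
    print(ports_match([{"port": 32000, "endPort": 32768}], "TCP", 32500))
```

One consequence of the defaulting rule: a policy that only has egress rules and no explicit policyTypes still gets Ingress. With no ingress rules, that blocks all inbound traffic to the selected Pods.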

3. NetworkPolicy hands-on examples

3.1 Preparing the environment

1. Environment setup

[root@master231 networkpolciy]# cat deploy-xiuxian.yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    class: linux93
  name: oldboyedu
---
apiVersion: v1
kind: Namespace
metadata:
  labels:
    school: oldboyedu
  name: jasonyin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xiuxian-v1
  namespace: oldboyedu
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: v1
  template:
    metadata:
      labels:
        apps: v1
    spec:
      nodeName: worker232
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        name: c1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xiuxian-v2
  namespace: jasonyin
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: v2
  template:
    metadata:
      labels:
        apps: v2
    spec:
      nodeName: worker233
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
        name: c1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xiuxian-v3
spec:
  replicas: 1
  selector:
    matchLabels:
      apps: v3
  template:
    metadata:
      labels:
        apps: v3
    spec:
      nodeName: master231
      containers:
      - image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v3
        name: c1
[root@master231 networkpolciy]#

2. Create the resources

[root@master231 networkpolciy]# kubectl apply -f deploy-xiuxian.yaml

namespace/oldboyedu created

namespace/jasonyin created

deployment.apps/xiuxian-v1 created

deployment.apps/xiuxian-v2 created

deployment.apps/xiuxian-v3 created

[root@master231 networkpolciy]#

3.2 Connectivity test: by default, all access is allowed

1. Inspect the resources

[root@master231 networkpolciy]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v3-fbbcf9474-bwmq2 1/1 Running 0 4s 10.100.160.133 master231 <none> <none>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -o wide -n oldboyedu

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v1-6545d56f7c-w9c77 1/1 Running 0 7s 10.100.203.135 worker232 <none> <none>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -o wide -n jasonyin

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v2-768f95c4d8-8bpm4 1/1 Running 0 9s 10.100.140.70 worker233 <none> <none>

[root@master231 networkpolciy]#

2. Connectivity test

[root@master231 networkpolciy]# kubectl exec xiuxian-v3-fbbcf9474-bwmq2 -- curl -s 10.100.203.135

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl exec xiuxian-v3-fbbcf9474-bwmq2 -- curl -s 10.100.140.70

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v2</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: red">凡人修仙传 v2 </h1>

<div>

<img src="2.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n oldboyedu exec xiuxian-v1-6545d56f7c-w9c77 -- curl -s 10.100.140.70

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v2</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: red">凡人修仙传 v2 </h1>

<div>

<img src="2.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n oldboyedu exec xiuxian-v1-6545d56f7c-w9c77 -- curl -s 10.100.160.133

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v3</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: pink">凡人修仙传 v3 </h1>

<div>

<img src="3.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n jasonyin exec xiuxian-v2-768f95c4d8-8bpm4 -- curl -s 10.100.203.135

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n jasonyin exec xiuxian-v2-768f95c4d8-8bpm4 -- curl -s 10.100.160.133

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v3</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: pink">凡人修仙传 v3 </h1>

<div>

<img src="3.jpg">

<div>

</body>

</html>

[root@master231 networkpolciy]#

3.3 Access policy based on ipBlock

1. Write the network policy

[root@master231 networkpolciy]# cat networkPolicy-ipBlock.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: oldboyedu
spec:
  # Pods this policy applies to; an empty selector ({}) selects every Pod in the namespace
  podSelector:
    matchLabels:
      apps: v1
  # Whether the policy applies to ingress traffic to the selected Pods, egress traffic from them, or both.
  # If policyTypes is not specified, Ingress is always set by default,
  # and Egress is set if the policy has any egress rules.
  # Here the policy restricts both inbound and outbound traffic.
  policyTypes:
  - Ingress
  - Egress
  # Ingress rules: who may reach me?
  ingress:
  # Allowed sources
  - from:
    # Match by IP address
    - ipBlock:
        # Allowed CIDR
        cidr: 10.100.0.0/16
        # CIDRs excluded from the block above
        except:
        - 10.100.140.0/24
    ports:
    - protocol: TCP
      port: 80
  # Egress rules: whom may I reach?
  egress:
  # Allowed destinations
  - to:
    # Match destinations by IP address
    - ipBlock:
        #cidr: 10.100.160.0/24
        cidr: 10.100.140.0/24
    # Allowed destination ports
    ports:
    - protocol: TCP
      port: 80
[root@master231 networkpolciy]#

2. Apply the policy

[root@master231 networkpolciy]# kubectl apply -f networkPolicy-ipBlock.yaml

networkpolicy.networking.k8s.io/test-network-policy created

[root@master231 networkpolciy]#

3. Verification

[root@master231 networkpolciy]# kubectl exec -it xiuxian-v3-fbbcf9474-bwmq2 -- sh

/ # curl 10.100.203.135

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

/ #

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n jasonyin exec -it xiuxian-v2-768f95c4d8-8bpm4 -- sh

/ # curl 10.100.203.135   # blocked!

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n oldboyedu exec xiuxian-v1-6545d56f7c-w9c77 -it -- sh

/ # curl 10.100.140.70   # allowed

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v2</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: red">凡人修仙传 v2 </h1>

<div>

<img src="2.jpg">

<div>

</body>

</html>

/ #

/ # curl 10.100.160.133   # blocked!
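The four results above follow from plain CIDR containment. The sketch below checks each Pod IP from section 3.2 against the ingress and egress rules of `test-network-policy`, using Python's standard `ipaddress` module. Only v1 is selected by a policy, so its two rules decide every case. All requests go to TCP port 80, which both rules allow.

```python
import ipaddress
from typing import Iterable


def in_ip_block(ip: str, cidr: str, excepts: Iterable[str] = ()) -> bool:
    """True if ip lies in cidr and in none of the except ranges."""
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(cidr):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in excepts)


V1, V2, V3 = "10.100.203.135", "10.100.140.70", "10.100.160.133"  # Pod IPs from 3.2


def v1_accepts_from(src_ip: str) -> bool:       # ingress rule of test-network-policy
    return in_ip_block(src_ip, "10.100.0.0/16", ["10.100.140.0/24"])


def v1_may_connect_to(dst_ip: str) -> bool:     # egress rule of test-network-policy
    return in_ip_block(dst_ip, "10.100.140.0/24")


if __name__ == "__main__":
    print("v3 -> v1:", v1_accepts_from(V3))    # inside 10.100.0.0/16
    print("v2 -> v1:", v1_accepts_from(V2))    # excluded by except
    print("v1 -> v2:", v1_may_connect_to(V2))  # inside 10.100.140.0/24
    print("v1 -> v3:", v1_may_connect_to(V3))  # outside 10.100.140.0/24
```

A practical side effect worth noting: because v1's egress only allows TCP 80 to 10.100.140.0/24, DNS lookups (UDP 53) from v1 are blocked too. That is why the tests use Pod IPs rather than names.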

3.4 Access control based on namespaceSelector

1. Write the network policy

[root@master231 networkpolciy]# cat networkPolicy-namespaceSelector.yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: oldboyedu
spec:
  # Pods this policy applies to; an empty selector ({}) selects every Pod in the namespace
  podSelector:
    matchLabels:
      apps: v1
  # Whether the policy applies to ingress traffic to the selected Pods, egress traffic from them, or both.
  # If policyTypes is not specified, Ingress is always set by default,
  # and Egress is set if the policy has any egress rules.
  policyTypes:
  - Ingress
  - Egress
  # Ingress rules: who may reach me?
  ingress:
  # Allowed sources
  - from:
    # Match Pods by the labels of their namespace
    - namespaceSelector:
        matchLabels:
          school: oldboyedu
    # Destination ports on the selected Pods that the sources may connect to (protocol and port)
    ports:
    - protocol: TCP
      port: 80
  # Egress rules: whom may I reach?
  egress:
  # Allowed destinations
  - to:
    # Match destinations by IP address
    - ipBlock:
        cidr: 10.100.140.0/24
    # Allowed destination ports
    ports:
    - protocol: TCP
      port: 80
      # port: 22
[root@master231 networkpolciy]#

2. Apply the network policy

[root@master231 networkpolciy]# kubectl apply -f networkPolicy-namespaceSelector.yaml

networkpolicy.networking.k8s.io/test-network-policy created

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get ns -l school=oldboyedu --show-labels

NAME STATUS AGE LABELS

jasonyin Active 21m kubernetes.io/metadata.name=jasonyin,school=oldboyedu

[root@master231 networkpolciy]#

3. Verification

[root@master231 networkpolciy]# kubectl -n jasonyin exec xiuxian-v2-768f95c4d8-8bpm4 -it -- sh

/ #

/ # curl 10.100.203.135

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

/ #

[root@master231 networkpolciy]# kubectl exec xiuxian-v3-fbbcf9474-bwmq2 -it -- sh

/ # curl 10.100.203.135   # blocked!
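A namespaceSelector is matched against the labels of the *source Pod's namespace*, not the Pod's own labels. The sketch below takes the namespace labels from deploy-xiuxian.yaml, plus the `kubernetes.io/metadata.name` label that Kubernetes adds automatically (visible in the `kubectl get ns --show-labels` output above). It reproduces the two results. It also predicts something not tested in the article: Pods in oldboyedu itself would be blocked, because that namespace lacks `school=oldboyedu`.

```python
from typing import Dict

Labels = Dict[str, str]

# Namespace labels from deploy-xiuxian.yaml plus the automatic
# kubernetes.io/metadata.name label.
NAMESPACE_LABELS: Dict[str, Labels] = {
    "default": {"kubernetes.io/metadata.name": "default"},
    "oldboyedu": {"kubernetes.io/metadata.name": "oldboyedu", "class": "linux93"},
    "jasonyin": {"kubernetes.io/metadata.name": "jasonyin", "school": "oldboyedu"},
}


def match_labels(selector: Labels, labels: Labels) -> bool:
    return all(labels.get(k) == v for k, v in selector.items())


def source_namespace_allowed(src_ns: str, namespace_selector: Labels) -> bool:
    """A namespaceSelector-only peer admits every Pod whose namespace matches."""
    return match_labels(namespace_selector, NAMESPACE_LABELS.get(src_ns, {}))


if __name__ == "__main__":
    selector = {"school": "oldboyedu"}
    for ns in ("jasonyin", "default", "oldboyedu"):
        print(ns, source_namespace_allowed(ns, selector))
```

To admit exactly one namespace by name, a selector on `kubernetes.io/metadata.name` (for example `{"kubernetes.io/metadata.name": "jasonyin"}`) avoids having to add custom labels.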

3.5 Access control based on Pod labels (podSelector)

1. Apply the policy

[root@master231 networkpolciy]# kubectl apply -f networkPolicy-podSelector.yaml

networkpolicy.networking.k8s.io/test-network-policy created

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v3-fbbcf9474-bwmq2 1/1 Running 0 32m 10.100.160.133 master231 <none> <none>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -o wide -n oldboyedu

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v1-6545d56f7c-w9c77 1/1 Running 0 32m 10.100.203.135 worker232 <none> <none>

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -o wide -n jasonyin

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

xiuxian-v2-768f95c4d8-8bpm4 1/1 Running 0 32m 10.100.140.70 worker233 <none> <none>

[root@master231 networkpolciy]#

2. Verification

[root@master231 networkpolciy]# kubectl -n jasonyin exec xiuxian-v2-768f95c4d8-8bpm4 -it -- sh

/ # curl 10.100.203.135   # blocked

^C

/ #

command terminated with exit code 130

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl exec xiuxian-v3-fbbcf9474-bwmq2 -it -- sh

/ #

/ # curl 10.100.203.135   # blocked

^C

/ #

[root@master231 networkpolciy]# kubectl run test-pods --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 -l apps=xiuxian

pod/test-pods created

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -l apps=xiuxian --show-labels

NAME READY STATUS RESTARTS AGE LABELS

test-pods 1/1 Running 0 2m31s apps=xiuxian

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl exec -it test-pods -- sh

/ #

/ # curl 10.100.203.135   # the label matches, but a Pod in a different namespace is still blocked!

[root@master231 networkpolciy]# kubectl -n oldboyedu run test-pods-2 --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 -l apps=xiuxian

pod/test-pods-2 created

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -l apps=xiuxian --show-labels -n oldboyedu

NAME READY STATUS RESTARTS AGE LABELS

test-pods-2 1/1 Running 0 115s apps=xiuxian

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n oldboyedu exec test-pods-2 -it -- sh

/ #

/ # curl 10.100.203.135   # matching label and same namespace: access works

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

/ #

[root@master231 networkpolciy]# kubectl -n oldboyedu run test-pods-3 --image=registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1 -l apps=haha

pod/test-pods-3 created

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl get pods -l apps --show-labels -n oldboyedu

NAME READY STATUS RESTARTS AGE LABELS

test-pods-2 1/1 Running 0 2m46s apps=xiuxian

test-pods-3 1/1 Running 0 9s apps=haha

xiuxian-v1-6545d56f7c-w9c77 1/1 Running 0 39m apps=v1,pod-template-hash=6545d56f7c

[root@master231 networkpolciy]#

[root@master231 networkpolciy]# kubectl -n oldboyedu exec -it test-pods-3 -- sh

/ #

/ # curl 10.100.203.135   # same namespace, but the label does not match: blocked!
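The contents of networkPolicy-podSelector.yaml are not shown. The model below therefore **assumes** its ingress rule is a single `from` item with `podSelector: matchLabels: apps: xiuxian` and no namespaceSelector, inferred from the test results. Under that assumption, a podSelector on its own only admits Pods in the policy's namespace (oldboyedu) that carry the label. That is exactly one of the five clients tried above.

```python
from dataclasses import dataclass, field
from typing import Dict

Labels = Dict[str, str]

POLICY_NAMESPACE = "oldboyedu"
# ASSUMPTION: inferred from the test results; the real manifest is not shown.
ALLOWED_SOURCE_LABELS: Labels = {"apps": "xiuxian"}


@dataclass
class Client:
    name: str
    namespace: str
    labels: Labels = field(default_factory=dict)


def pod_selector_allows(c: Client) -> bool:
    """podSelector without namespaceSelector: same namespace AND matching labels."""
    return c.namespace == POLICY_NAMESPACE and all(
        c.labels.get(k) == v for k, v in ALLOWED_SOURCE_LABELS.items())


CLIENTS = [
    Client("xiuxian-v2", "jasonyin", {"apps": "v2"}),
    Client("xiuxian-v3", "default", {"apps": "v3"}),
    Client("test-pods", "default", {"apps": "xiuxian"}),
    Client("test-pods-2", "oldboyedu", {"apps": "xiuxian"}),
    Client("test-pods-3", "oldboyedu", {"apps": "haha"}),
]

if __name__ == "__main__":
    for c in CLIENTS:
        print(f"{c.name:<12} ({c.namespace}) -> v1: {pod_selector_allows(c)}")
```

Admitting test-pods from the default namespace as well would require a namespaceSelector in the same `from` item as the podSelector.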

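This article is from cnblogs (博客园), author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/18758843. Personal WeChat: "JasonYin2020" (when adding, please note your source and purpose; paid consultation)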


Zhejiang Public Security Bureau filing No. 33010602011771
