In Depth: Feature Matching

Feature Matching

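Feature matching pairs the features detected in one image with the corresponding features in another image by comparing their descriptors to find similar points. It is widely used in computer vision, including scene understanding, image stitching, object tracking and pattern recognition.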

1. Brute-Force Matching

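Brute-force matching is the most straightforward approach and needs almost no cleverness, but it becomes very slow for large images or large image collections. It compares every descriptor from the first image with every descriptor from the second and keeps the closest ones. The cost therefore grows with the product of the two descriptor counts, much like comparing every pixel of one image with every pixel of the other.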
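The following is a minimal NumPy sketch of exhaustive k-nearest-neighbour search that makes the N1 × N2 cost concrete. It builds the full matrix of Euclidean distances and keeps the k closest train descriptors per query. Conceptually, this is what `cv2.BFMatcher().knnMatch(des1, des2, k=2)` reports through `trainIdx` and `distance`. The function name `brute_force_knn` is hypothetical, and the sketch ignores any optimizations OpenCV applies.

```python
import numpy as np


def brute_force_knn(des1, des2, k=2):
    """Exhaustive k-NN: every row of des1 is compared with every row of des2 (L2 distance)."""
    a = des1.astype(np.float64)
    b = des2.astype(np.float64)
    # Full (N1, N2) matrix of squared distances: ||a||^2 - 2 a.b + ||b||^2
    sq = (a ** 2).sum(axis=1)[:, None] - 2.0 * a @ b.T + (b ** 2).sum(axis=1)[None, :]
    dist = np.sqrt(np.maximum(sq, 0.0))  # clamp tiny negative values caused by rounding
    idx = np.argsort(dist, axis=1)[:, :k]  # the k closest train descriptors, nearest first
    return idx, np.take_along_axis(dist, idx, axis=1)


# Usage with random stand-ins for SIFT descriptors (128 floats each)
rng = np.random.default_rng(0)
des1 = rng.random((20, 128), dtype=np.float32)
des2 = rng.random((30, 128), dtype=np.float32)
idx, dist = brute_force_knn(des1, des2)
print(idx.shape, dist.shape)  # (20, 2) (20, 2)
```

Both time and memory scale with N1 × N2. That is what motivates the approximate FLANN matching in section 2.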

1.1 Brute-Force Matching with SIFT

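SIFT (Scale-Invariant Feature Transform) is a classic feature detection algorithm. It is robust to changes in scale and rotation and partially robust to changes in illumination. Its main stages are: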

- Scale-space extremum detection

- Precise keypoint localization

- Orientation assignment

- Keypoint descriptor generation

For a detailed explanation, see my article on SIFT.

1.1.1 导入必要模块

import cv2

import numpy

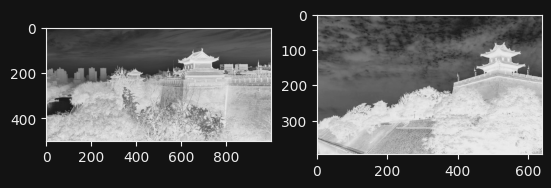

import matplotlib.pyplot as plt1.1.2 读取图片

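```python
# Load both images as single-channel grayscale
img1 = cv2.imread("img.png", cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread("img_1.png", cv2.IMREAD_GRAYSCALE)
# cv2.imread returns None instead of raising an error when a file cannot be read
assert img1 is not None and img2 is not None, "could not read img.png / img_1.png"

# Show the two images side by side
plt.subplot(121)
plt.imshow(img1, cmap='gray')
plt.subplot(122)
plt.imshow(img2, cmap='gray')
plt.show()
```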

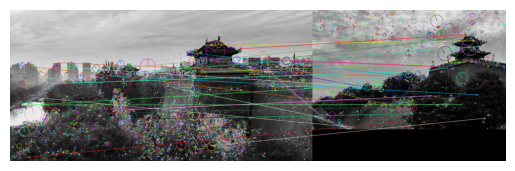

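1.1.3 SIFT matching

```python
# Detect keypoints and compute 128-dimensional SIFT descriptors
sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# Brute-force matcher (L2 norm by default, suitable for SIFT); keep the 2 nearest neighbours per descriptor
bf = cv2.BFMatcher()
matches = bf.knnMatch(des1, des2, k=2)

# Ratio test: keep a match only if it is clearly better than the second-best candidate
good = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])  # drawMatchesKnn expects a list of lists

img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good, None,
                          flags=cv2.DrawMatchesFlags_DRAW_RICH_KEYPOINTS)
plt.imshow(img3, cmap='gray')
plt.axis("off")
plt.show()
```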

1.2 Brute-Force Matching with ORB

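ORB (Oriented FAST and Rotated BRIEF) is an efficient feature detection and description algorithm. It combines the FAST keypoint detector with the BRIEF descriptor, which makes it suitable for real-time applications.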

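Key characteristics:

- Efficiency: fast to compute, suitable for resource-constrained devices

- Binary descriptors: compact to store and fast to match (using Hamming distance)

- Rotation invariance: each keypoint is assigned an orientation, and the descriptor is steered accordingly

- Scale robustness: partial scale invariance through an image pyramid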
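Two of these properties can be shown in a few lines of NumPy. The Hamming distance between binary descriptors is the number of differing bits (XOR, then count the ones), and it should agree with `cv2.norm(d1, d2, cv2.NORM_HAMMING)`. ORB's orientation comes from the intensity centroid: it is the angle of the vector from the patch centre to the centroid defined by the first-order moments m10 and m01. The sketch below uses a square patch for simplicity, whereas ORB uses a circular region. The function names are hypothetical.

```python
import numpy as np


def hamming(d1, d2):
    """Number of differing bits between two binary descriptors stored as uint8 arrays."""
    return int(np.unpackbits(np.bitwise_xor(d1, d2)).sum())


def centroid_angle(patch):
    """Orientation (degrees) of the vector from the patch centre to its intensity centroid."""
    h, w = patch.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    xs -= (w - 1) / 2.0  # coordinates relative to the patch centre
    ys -= (h - 1) / 2.0  # image convention: y grows downwards
    p = patch.astype(np.float64)
    m10 = (xs * p).sum()  # first-order moment in x
    m01 = (ys * p).sum()  # first-order moment in y
    return float(np.degrees(np.arctan2(m01, m10)))


rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=32, dtype=np.uint8)  # 32 bytes = 256 bits, ORB's default descriptor size
b = a.copy()
b[0] ^= 0b00000111  # flip the three lowest bits of the first byte
print(hamming(a, b))  # 3

right = np.zeros((31, 31), np.uint8)
right[:, 16:] = 255  # bright right half
bottom = np.zeros((31, 31), np.uint8)
bottom[16:, :] = 255  # bright bottom half
print(round(centroid_angle(right)), round(centroid_angle(bottom)))  # 0 90
```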

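```python
img1 = cv2.imread("img.png", cv2.IMREAD_GRAYSCALE)
img2 = cv2.imread("img_1.png", cv2.IMREAD_GRAYSCALE)

# Detect ORB keypoints and compute 256-bit binary descriptors (32 bytes each)
orb = cv2.ORB_create()
kp1, des1 = orb.detectAndCompute(img1, None)
kp2, des2 = orb.detectAndCompute(img2, None)

# Binary descriptors must be compared with Hamming distance, not the default L2 norm
bf = cv2.BFMatcher(cv2.NORM_HAMMING)
matches = bf.knnMatch(des1, des2, k=2)

# Ratio test; skip entries that returned fewer than two neighbours
good = []
for pair in matches:
    if len(pair) == 2 and pair[0].distance < 0.75 * pair[1].distance:
        good.append([pair[0]])

img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good, None,
                          flags=cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)
plt.imshow(img3)
plt.axis("off")
plt.show()
```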

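2. Matching with FLANN (Fast Approximate Nearest Neighbor Search)

FLANN (Fast Library for Approximate Nearest Neighbors) uses efficient search structures such as k-d trees to find approximately nearest features. On large descriptor sets this can make matching much faster than brute force.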
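FLANN's KD-tree index (used in 2.1 with `trees=5`) builds several randomized k-d trees, and `checks=50` bounds how much of those trees is traversed per query. That bound is what makes the search approximate. The sketch below shows only the underlying split-and-prune idea with a single, exact k-d tree. It is not FLANN's algorithm, and the names `build_kdtree` and `nearest` are hypothetical. In 128 dimensions (SIFT), exact k-d tree search degrades toward brute force, which is why FLANN trades exactness for speed.

```python
import numpy as np


def build_kdtree(points, idx=None, leaf_size=4):
    """Recursively split the point set at the median of its highest-variance dimension."""
    if idx is None:
        idx = np.arange(len(points))
    if len(idx) <= leaf_size:
        return {"leaf": idx}
    dim = int(np.argmax(points[idx].var(axis=0)))
    order = idx[np.argsort(points[idx, dim])]
    mid = len(order) // 2
    return {
        "dim": dim,
        "split": points[order[mid], dim],
        "left": build_kdtree(points, order[:mid], leaf_size),   # values <= split
        "right": build_kdtree(points, order[mid:], leaf_size),  # values >= split
    }


def nearest(tree, points, q, best=(np.inf, -1)):
    """Exact nearest-neighbour search: descend toward q, backtrack only if the far side could be closer."""
    if "leaf" in tree:
        for i in tree["leaf"]:
            d = float(np.linalg.norm(points[i] - q))
            if d < best[0]:
                best = (d, int(i))
        return best
    diff = q[tree["dim"]] - tree["split"]
    near, far = (tree["left"], tree["right"]) if diff < 0 else (tree["right"], tree["left"])
    best = nearest(near, points, q, best)
    if abs(diff) < best[0]:  # the splitting plane is closer than the best match so far
        best = nearest(far, points, q, best)
    return best


# Usage: 500 random 8-dimensional "descriptors"
rng = np.random.default_rng(0)
train = rng.random((500, 8))
tree = build_kdtree(train)
query = rng.random(8)
dist, index = nearest(tree, train, query)
print(index, dist)
```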

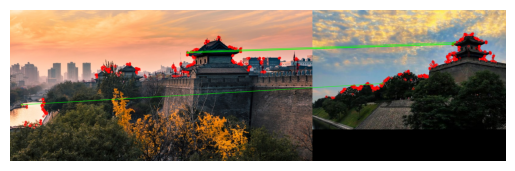

2.1 SIFT + FLANN

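```python
# Read as RGB so matplotlib shows the colours correctly.
# cv2.IMREAD_COLOR_RGB needs a recent OpenCV release; on older versions read with
# cv2.IMREAD_COLOR and convert with cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
img1 = cv2.imread("img.png", cv2.IMREAD_COLOR_RGB)
img2 = cv2.imread("img_1.png", cv2.IMREAD_COLOR_RGB)

sift = cv2.SIFT_create()
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# KD-tree index for float descriptors such as SIFT: 5 randomized trees
FLANN_INDEX_KDTREE = 1
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=50)  # more checks: more accurate but slower
flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# Draw only matches that pass the ratio test ([1, 0] = draw the best neighbour only)
matchesMask = [[0, 0] for _ in range(len(matches))]
for i, (m, n) in enumerate(matches):
    if m.distance < 0.7 * n.distance:
        matchesMask[i] = [1, 0]

draw_params = dict(
    matchColor=(0, 255, 0),
    singlePointColor=(255, 0, 0),
    matchesMask=matchesMask,
    flags=cv2.DrawMatchesFlags_DEFAULT,
)
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)
plt.imshow(img3)
plt.axis("off")
plt.show()
```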

2.2 ORB + FLANN

```python
# Reuses img1 and img2 from section 2.1
orb = cv2.ORB_create()
kp1, des1 = orb.detectAndCompute(img1, None)
kp2, des2 = orb.detectAndCompute(img2, None)

# LSH index for binary descriptors such as ORB (the KD-tree index expects float descriptors)
FLANN_INDEX_LSH = 6
index_params = dict(algorithm=FLANN_INDEX_LSH, table_number=12, key_size=20, multi_probe_level=2)
search_params = dict(checks=50)
flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# With LSH some descriptors get fewer than 2 neighbours, so check the length first
matchesMask = [[0, 0] for _ in range(len(matches))]
for i, match in enumerate(matches):
    if len(match) == 2:
        m, n = match
        if m.distance < 0.7 * n.distance:
            matchesMask[i] = [1, 0]

draw_params = dict(
    matchColor=(0, 255, 0),
    singlePointColor=(255, 0, 0),
    matchesMask=matchesMask,
    flags=cv2.DrawMatchesFlags_DEFAULT,
)
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)
plt.imshow(img3)
plt.axis("off")
plt.show()
```

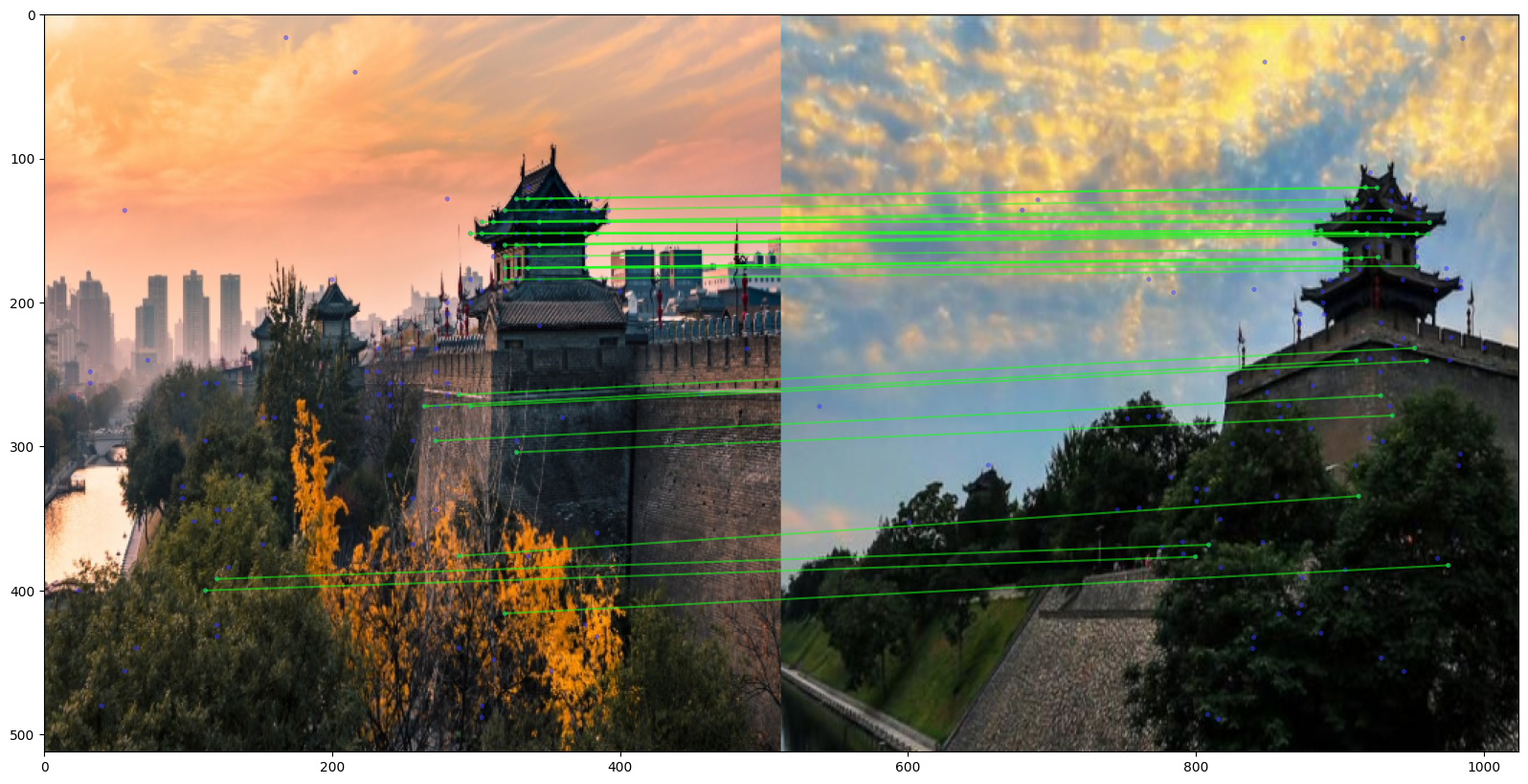
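The LSH index in 2.2 works differently from a k-d tree. Each of `table_number` hash tables keys a binary descriptor by a subset of `key_size` of its bits. Descriptors that differ in only a few bits therefore tend to share a bucket in at least one table, and only those candidates are compared by Hamming distance. The class below is a hypothetical, stripped-down illustration of this bit-sampling idea that reuses the same parameter names. It omits multi-probe search (`multi_probe_level`, which also visits neighbouring buckets) and is not FLANN's implementation.

```python
import numpy as np


class BitSamplingLSH:
    """Hypothetical minimal LSH index for binary descriptors given as uint8 rows."""

    def __init__(self, descriptors, table_number=12, key_size=20, seed=0):
        rng = np.random.default_rng(seed)
        self.bits = np.unpackbits(descriptors, axis=1)  # (N, 256) array of 0/1 for 32-byte descriptors
        n_bits = self.bits.shape[1]
        # Each table hashes a descriptor by key_size randomly chosen bit positions
        self.positions = [rng.choice(n_bits, size=key_size, replace=False) for _ in range(table_number)]
        self.tables = []
        for pos in self.positions:
            table = {}
            for i, row in enumerate(self.bits):
                table.setdefault(row[pos].tobytes(), []).append(i)
            self.tables.append(table)

    def query(self, descriptor):
        """Return (index, Hamming distance) of the best candidate, or None if no bucket matched."""
        q = np.unpackbits(descriptor)
        candidates = set()
        for pos, table in zip(self.positions, self.tables):
            candidates.update(table.get(q[pos].tobytes(), []))
        if not candidates:
            return None  # comparable to FLANN+LSH returning fewer than k neighbours
        cand = np.fromiter(candidates, dtype=int)
        dists = (self.bits[cand] != q).sum(axis=1)  # Hamming distance on unpacked bits
        best = int(np.argmin(dists))
        return int(cand[best]), int(dists[best])


# Usage: 200 random 32-byte descriptors, queried with an exact copy and a 3-bit-flipped copy
rng = np.random.default_rng(1)
db = rng.integers(0, 256, size=(200, 32), dtype=np.uint8)
index = BitSamplingLSH(db)
noisy = db[5].copy()
noisy[3] ^= 0b1010  # flips 2 bits
noisy[20] ^= 0b1    # flips 1 bit
print(index.query(db[5]))  # (5, 0)
print(index.query(noisy))
```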

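3. Local Feature Matching with Transformers (LoFTR)

LoFTR (Local Feature TRansformer), proposed by Sun et al., is a detector-free local feature matching method based on the Transformer. It does not detect keypoints first. Instead, it matches dense features of the two images directly: first as coarse matches on a low-resolution grid, which are then refined. This makes it robust, including in low-texture regions where detector-based methods find few keypoints.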
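At its coarse level, LoFTR turns the Transformer-refined features of both images into matches in three steps. It scores every pair of positions, normalizes the score matrix with a softmax along both dimensions (a "dual softmax"), and keeps the pairs that are mutual nearest neighbours above a confidence threshold. The NumPy sketch below shows only that selection step, applied to plain feature vectors. The Transformer and the fine-level refinement are omitted. The L2 normalization is our own simplification, and the temperature and threshold values are illustrative assumptions.

```python
import numpy as np


def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def coarse_match(feat0, feat1, temperature=0.1, threshold=0.2):
    """Dual-softmax scoring followed by mutual-nearest-neighbour selection."""
    f0 = feat0 / np.linalg.norm(feat0, axis=1, keepdims=True)
    f1 = feat1 / np.linalg.norm(feat1, axis=1, keepdims=True)
    scores = f0 @ f1.T / temperature  # (N0, N1) similarity matrix
    conf = softmax(scores, axis=0) * softmax(scores, axis=1)
    is_row_max = conf == conf.max(axis=1, keepdims=True)
    is_col_max = conf == conf.max(axis=0, keepdims=True)
    i, j = np.nonzero(is_row_max & is_col_max & (conf > threshold))
    return list(zip(i.tolist(), j.tolist())), conf


# Usage: feat1 is a shuffled, slightly noisy copy of feat0, so row perm[j] of feat0 should match row j of feat1
rng = np.random.default_rng(0)
feat0 = rng.normal(size=(8, 32))
perm = rng.permutation(8)
feat1 = feat0[perm] + 0.05 * rng.normal(size=(8, 32))
matches, conf = coarse_match(feat0, feat1)
print(matches)
```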

3.1 Import the required modules

```python
import cv2
import kornia as K
import kornia.feature as KF
import torch
from kornia_moons.viz import draw_LAF_matches
from kornia.feature import LoFTR
```

3.2 LoFTR matching

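```python
# Load both images as float RGB tensors in [0, 1] and add a batch dimension: shape (1, 3, H, W)
img1 = K.io.load_image("img.png", K.io.ImageLoadType.RGB32)[None, ...]
img2 = K.io.load_image("img_1.png", K.io.ImageLoadType.RGB32)[None, ...]
# Resize to a fixed 512x512 (this does not preserve the original aspect ratio)
img1 = K.geometry.resize(img1, (512, 512), antialias=True)
img2 = K.geometry.resize(img2, (512, 512), antialias=True)

# LoFTR with weights pretrained on outdoor scenes; it takes grayscale input
matcher = LoFTR(pretrained="outdoor")
input_dict = {
    "image0": K.color.rgb_to_grayscale(img1),
    "image1": K.color.rgb_to_grayscale(img2),
}
with torch.inference_mode():
    correspondences = matcher(input_dict)

# Matched keypoint coordinates, shape (N, 2)
mkpts0 = correspondences["keypoints0"].cpu().numpy()
mkpts1 = correspondences["keypoints1"].cpu().numpy()

# Estimate the fundamental matrix robustly with MAGSAC++ (0.5 px threshold, 0.999 confidence,
# up to 100000 iterations) and keep only the geometrically consistent matches
Fm, inliers = cv2.findFundamentalMat(mkpts0, mkpts1,
                                     cv2.USAC_MAGSAC, 0.5, 0.999, 100000)
inliers = inliers > 0
```

3.3 Visualization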

```python
# Wrap each matched point in a local affine frame (unit scale, fixed orientation) so it can be drawn
draw_LAF_matches(
    KF.laf_from_center_scale_ori(
        torch.from_numpy(mkpts0).view(1, -1, 2),
        torch.ones(mkpts0.shape[0]).view(1, -1, 1, 1),
        torch.ones(mkpts0.shape[0]).view(1, -1, 1),
    ),
    KF.laf_from_center_scale_ori(
        torch.from_numpy(mkpts1).view(1, -1, 2),
        torch.ones(mkpts1.shape[0]).view(1, -1, 1, 1),
        torch.ones(mkpts1.shape[0]).view(1, -1, 1),
    ),
    # Correspondence i pairs point i in image 0 with point i in image 1
    torch.arange(mkpts0.shape[0]).view(-1, 1).repeat(1, 2),
    K.tensor_to_image(img1),
    K.tensor_to_image(img2),
    inliers,
    draw_dict={
        "inlier_color": (0.1, 1, 0.1, 0.5),  # inliers in green
        "tentative_color": None,             # do not draw non-inlier matches
        "feature_color": (0.2, 0.2, 1, 0.5),
        "vertical": False,
    },
)
```

Zhejiang Public Network Security Registration No. 33010602011771
