Scraping a contact list with Python

from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC
import time
import csv

# 1. Create the browser object
# With newer Chrome versions, disable GPU acceleration; otherwise errors keep being reported
chrome_opt = webdriver.ChromeOptions()
chrome_opt.add_argument('--disable-gpu')
path = r"chromedriver.exe"
# Selenium 4 takes the driver path through a Service object and the options through `options`
driver = webdriver.Chrome(service=Service(path), options=chrome_opt)

# 2. Drive the browser object
driver.get('http://111111111/tx.aspx?fid=0')

# Collect the cell text into a list
def get_content():
    cells = []
    for i in range(2, 30):
        for s in range(1, 10):
            # Build the XPath for row i, column s
            xpath = f'//*[@id="form1"]/table/tbody/tr[{i}]/td[{s}]'
            text = driver.find_element(By.XPATH, xpath).text
            cells.append(text)
    return cells

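# Group the flat cell list into rows of 9 cells (a list of lists) and write them out
def sort_writer(*cells):
    step = 9
    rows = [cells[i:i+step] for i in range(0, len(cells), step)]
    # Append ("a") so each page adds its rows instead of overwriting the previous page
    with open("./zhaopin.csv", "a", newline='') as f:
        writer = csv.writer(f)
        writer.writerows(rows)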

# Loop over the pages
for i in range(1, 400):
    try:
        a = get_content()
        sort_writer(*a)
        # "下一页" is the text of the page's "next page" link
        driver.find_element(By.LINK_TEXT, "下一页").click()
    except Exception as ide:
        print("Something went wrong! Stopping:", ide)
        driver.quit()
        break
    finally:
        time.sleep(1)
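The page loop above ties the control flow directly to the browser. As a minimal sketch (not the original script), the same loop can be written against two callables so that it can be tried without a browser. The names `scrape_pages`, `read_page`, and `go_next` are hypothetical, and the two fake pages are made-up stand-in data.

```python
import csv
import io


def scrape_pages(read_page, go_next, out, max_pages=399, width=9):
    """Write one CSV row per `width` cells for each page; return the pages written.

    read_page() -> flat list of cell texts for the current page (hypothetical hook)
    go_next()   -> moves to the next page, raising when there is none (hypothetical hook)
    """
    writer = csv.writer(out)
    pages = 0
    for _ in range(max_pages):
        cells = read_page()
        writer.writerows(cells[i:i + width] for i in range(0, len(cells), width))
        pages += 1
        try:
            go_next()
        except Exception:
            break  # no "next page" available: stop
    return pages


# Stand-in data instead of a live browser: two pages with one row each
fake_pages = [["a"] * 9, ["b"] * 9]
position = {"index": 0}


def read_page():
    return fake_pages[position["index"]]


def go_next():
    if position["index"] + 1 >= len(fake_pages):
        raise LookupError("no next page")
    position["index"] += 1


buffer = io.StringIO()
page_count = scrape_pages(read_page, go_next, buffer)
print(page_count)
print(buffer.getvalue())
```

With Selenium, `read_page` would presumably be `get_content` and `go_next` would click the next-page link, while `out` would be a file opened once before the loop.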

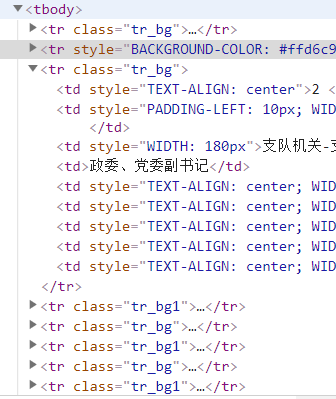

All of the contact list content sits inside tbody > tr > td.
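To illustrate the tbody > tr > td nesting, here is a small sketch that collects cell text row by row using only the standard library's `html.parser` (swapped in here for illustration; it is not the library the post uses). The `TableRows` class and the sample markup are assumptions made up for this example, not the real contact page.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of every <td>, grouped by its enclosing <tr>."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # cells of the <tr> currently being read
        self._cell = None   # text pieces of the <td> currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a <td>
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Made-up sample markup, not the real contact page
sample = """
<table><tbody>
<tr><td>Name</td><td>Phone</td></tr>
<tr><td>Alice</td><td>1001</td></tr>
</tbody></table>
"""
parser = TableRows()
parser.feed(sample)
rows = parser.rows
print(rows)  # [['Name', 'Phone'], ['Alice', '1001']]
```

Grouping by `<tr>` while parsing means the number of columns does not have to be known in advance.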

from pyquery import PyQuery as pq
import requests
import csv

url = "http://localhost:8080/index.htm"
res = requests.get(url).content
opq = pq(res)

# Collect the text that was found into a list
listconters = []
conters = opq("tbody").eq(1).find("tr").children()
for td in conters:
    # td is an lxml element; its .text is None for an empty cell
    w = td.text or ""
    listconters.append(w)

# Regroup the list by count into a list of lists, like [[a], [b], [c], ...]
step = 9
listconter = [listconters[i:i+step] for i in range(0, len(listconters), step)]
print(listconter)

# writerow writes a single row; writerows writes each item of the list as its own row;
# newline="" avoids an extra blank line after each row
with open("./通讯录.csv", "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerows(listconter)
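The difference between `writerow` and `writerows`, together with the regrouping step, can be tried in isolation. The following is a small sketch that writes to an in-memory buffer instead of a file; the `chunk` helper, the header names, and the cell values are all made up for illustration.

```python
import csv
import io


def chunk(items, size):
    """Split a flat list into consecutive rows of `size` items (the last row may be shorter)."""
    return [items[i:i + size] for i in range(0, len(items), size)]


cells = ["a1", "a2", "a3", "b1", "b2", "b3", "c1"]  # made-up cell texts
rows = chunk(cells, 3)

out = io.StringIO()  # in-memory stand-in for a file opened with newline=""
writer = csv.writer(out)
writer.writerow(["col1", "col2", "col3"])  # writerow: exactly one row
writer.writerows(rows)                     # writerows: one row per inner list
csv_text = out.getvalue()
print(rows)
print(csv_text)
```

A short final row, as with `c1` here, is a hint that the number of cells is not a multiple of the step and that the column count may be wrong.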

