Linux Operations and Architecture: Distributed Storage with GlusterFS

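I. Introduction to GlusterFS

GlusterFS is a fully symmetric, open-source distributed file system. "Fully symmetric" means that GlusterFS locates data with an elastic hashing algorithm, so there is no central node and all nodes are equal peers. GlusterFS is easy to configure, stable, and can scale to petabytes of capacity and thousands of nodes. Its main characteristics are PB-scale capacity, high availability, good read/write performance, sharing at the file-system level, a distributed design, and storage without a metadata server.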
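To make the "no central node" idea concrete, here is a toy sketch of hash-based placement: any client can work out which brick holds a file from the file name alone, so no lookup server is needed. This is not GlusterFS's actual elastic hash algorithm; the helper name `pick_brick` and the use of `cksum` as the hash are assumptions made purely for illustration.

```shell
#!/bin/sh
# Toy illustration of hash-based placement (NOT the real GlusterFS algorithm).
# pick_brick is a hypothetical helper: it maps a file name to a brick index
# using only the name and the number of bricks, with no central lookup.
pick_brick() {
    # $1 = file name, $2 = number of bricks
    # cksum prints "<crc> <byte count>"; keep only the CRC.
    hash=$(printf '%s' "$1" | cksum | cut -d' ' -f1)
    echo $(( hash % $2 ))
}

# Every client computes the same answer independently.
for f in copy-test-001 copy-test-002 copy-test-003; do
    echo "$f -> brick $(pick_brick "$f" 2)"
done
```

Because the result depends only on the name, two clients never disagree about where a file lives, which is the property that removes the need for a metadata server.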

II. GlusterFS Features

A fully symmetric architecture; multiple volume types (analogous to RAID 0/1/5/10/01); volume-level compression; and support for FUSE, NFS, SMB, Hadoop, and OpenStack.

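III. GlusterFS Concepts

- brick: the basic storage unit of GlusterFS, exposed as a directory on a server node;

- volume: a logical collection of bricks;

- metadata: data that describes files, directories, and similar objects;

- self-heal: a background process that detects inconsistencies between files and directories in a replicated volume and repairs them;

- FUSE: Filesystem in Userspace, a loadable kernel module that lets unprivileged users create their own file systems without modifying kernel code. The file system code runs in user space, and the FUSE module bridges it to the kernel;

- Gluster Server: a data storage server, that is, a node of the GlusterFS storage cluster;

- Gluster Client: a server that consumes GlusterFS storage, such as KVM, OpenStack, an LB RealServer, or an HA node.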

IV. Deploying GlusterFS

1. Server planning

| OS | IP address | Hostname | CPU | Memory |
| --- | --- | --- | --- | --- |
| CentOS 7.5 | 192.168.56.10 | server1 | 2C | 2G |
| CentOS 7.5 | 192.168.56.11 | server2 | 2C | 2G |

① Name resolution in /etc/hosts

    cat >> /etc/hosts <<EOF
    192.168.56.10 server1
    192.168.56.11 server2
    EOF
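The heredoc above appends unconditionally, so running it a second time duplicates the entries. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper, and the demo writes to a scratch file rather than the real /etc/hosts.

```shell
#!/bin/sh
# add_host_entry is a hypothetical helper: append "IP NAME" to a hosts file
# only if no non-comment line already lists that name.
add_host_entry() {
    # $1 = hosts file, $2 = IP address, $3 = host name
    if ! awk -v n="$3" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$1"; then
        printf '%s %s\n' "$2" "$3" >> "$1"
    fi
}

# Demo against a scratch file instead of the real /etc/hosts.
hosts_file=$(mktemp)
printf '127.0.0.1 localhost\n' > "$hosts_file"
add_host_entry "$hosts_file" 192.168.56.10 server1
add_host_entry "$hosts_file" 192.168.56.11 server2
add_host_entry "$hosts_file" 192.168.56.10 server1   # second call is a no-op
cat "$hosts_file"
```

To use it for real, pass `/etc/hosts` as the first argument and run it as root on every node.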

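② Disable the firewall and SELinux

    setenforce 0
    systemctl stop firewalld
    systemctl disable firewalld
    # Edit the real config file: /etc/sysconfig/selinux is a symlink to it,
    # and sed -i would replace the symlink with a regular file.
    sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config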

2. Installing GlusterFS

    yum -y install epel-release
    yum -y install centos-release-gluster
    yum -y install glusterfs glusterfs-server glusterfs-fuse

① Install the client

    yum -y install glusterfs glusterfs-fuse

② Start the service (on all nodes)

    systemctl enable glusterd.service && systemctl start glusterd.service

③ Establish trust between GlusterFS nodes

Assume there are only two nodes for now.

On server1, run: gluster peer probe server2

On server2, run: gluster peer probe server1
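With more than two nodes, the probes can be scripted. The sketch below probes every listed node except the local one; `run` and `probe_peers` are hypothetical helpers, and `DRY_RUN` defaults to 1 so the commands are only printed unless you explicitly set `DRY_RUN=0`.

```shell
#!/bin/sh
# run is a hypothetical wrapper: print the command in dry-run mode (the
# default), execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# probe_peers is a hypothetical helper.
# $1 = name of the local node, remaining arguments = all nodes in the pool.
probe_peers() {
    self=$1
    shift
    for peer in "$@"; do
        if [ "$peer" != "$self" ]; then
            run gluster peer probe "$peer"
        fi
    done
}

# Dry run from server1 for the two-node pool in this article.
probe_peers server1 server1 server2
```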

Removing a node from the cluster

    # Remove the node from the cluster (it must not hold any brick that a volume is still using)
    gluster peer detach server2

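④ Create and start the storage volume (run the gluster commands on any one node)

    # Prepare the brick directory (this must exist on every node)
    mkdir -p /data/gluster_volumes
    # Create the replicated volume.
    # If the brick directory is on the root partition, gluster refuses
    # the command unless "force" is appended.
    gluster volume create gluster_volumes replica 2 server1:/data/gluster_volumes server2:/data/gluster_volumes
    # Start the volume
    gluster volume start gluster_volumes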

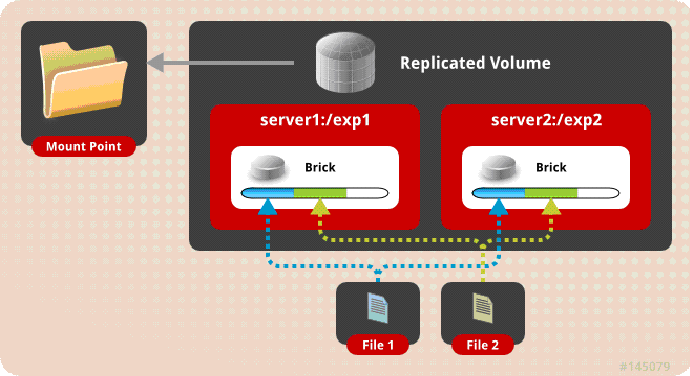

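Note: GlusterFS supports several volume types, and each type stores data differently. Here I use Replicate, which means both nodes hold exactly the same content. The benefit is that if one machine fails, the other node still has a complete copy of the data. It works as follows: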

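Each file is copied to multiple bricks. The number of bricks must equal the number of copies (replica = brick count), and the bricks should be placed on different servers. Use case: scenarios that demand high reliability and high read/write performance.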
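The relationship between replica count and brick count can be checked before the volume is created. The sketch below only builds the `gluster volume create` command line and does not run it; `build_create_cmd` is a hypothetical helper. It accepts any brick count that is a multiple of the replica count, which is the more general form of the rule (the two-brick, replica 2 case in this article is the simplest instance).

```shell
#!/bin/sh
# build_create_cmd is a hypothetical helper: validate the brick count against
# the replica count and print the resulting command without executing it.
build_create_cmd() {
    # $1 = volume name, $2 = replica count, $3 = brick directory,
    # remaining arguments = one server per brick
    volume=$1
    replica=$2
    brick_dir=$3
    shift 3
    if [ "$#" -eq 0 ] || [ $(( $# % replica )) -ne 0 ]; then
        echo "error: $# brick(s) is not a positive multiple of replica $replica" >&2
        return 1
    fi
    bricks=""
    for server in "$@"; do
        bricks="$bricks $server:$brick_dir"
    done
    echo "gluster volume create $volume replica $replica$bricks"
}

# Reproduces the command used in this article.
build_create_cmd gluster_volumes 2 /data/gluster_volumes server1 server2
```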

⑤ View the volume information

    [root@server1 ~]# gluster volume info
    Volume Name: gluster_volumes
    Type: Replicate
    Volume ID: 80c40e90-d912-414a-a510-2f30cb746c03
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 1 x 2 = 2
    Transport-type: tcp
    Bricks:
    Brick1: server1:/data/gluster_volumes
    Brick2: server2:/data/gluster_volumes
    Options Reconfigured:
    transport.address-family: inet
    storage.fips-mode-rchecksum: on
    nfs.disable: on
    performance.client-io-threads: off
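For scripted checks, individual fields can be pulled out of this output. The sketch below is one way to do it, assuming the "Key: value" layout shown in the article; `volume_field` and `sample_volume_info` are hypothetical helpers, and the sample text stands in for a live `gluster volume info` call.

```shell
#!/bin/sh
# volume_field is a hypothetical helper: print the value of one "Key: value"
# line from `gluster volume info` text supplied on stdin.
volume_field() {
    awk -F': ' -v key="$1" '$1 == key { print $2; exit }'
}

# Sample text taken from the article's output; on a real node you would pipe
# `gluster volume info gluster_volumes` into volume_field instead.
sample_volume_info() {
    cat <<'EOF'
Volume Name: gluster_volumes
Type: Replicate
Status: Started
Number of Bricks: 1 x 2 = 2
EOF
}

status=$(sample_volume_info | volume_field Status)
if [ "$status" = "Started" ]; then
    echo "volume is started"
else
    echo "volume is not started (status: $status)" >&2
fi
```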

⑥ View the cluster status

    [root@server1 ~]# gluster peer status
    Number of Peers: 1
    Hostname: server2
    Uuid: 0eda1c59-cad7-4e33-a028-99027c6e99b4
    State: Peer in Cluster (Connected)
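The same output can drive a simple health check that counts connected peers. This is a sketch under the assumption that the state line reads exactly as in the article's output; `connected_peers` and `sample_peer_status` are hypothetical helpers, and the sample text replaces a live `gluster peer status` call.

```shell
#!/bin/sh
# connected_peers is a hypothetical helper: count peers reported as connected
# in `gluster peer status` text supplied on stdin.
connected_peers() {
    # grep -c prints the count; "|| true" keeps a count of zero from
    # surfacing as a failing exit status.
    grep -cF 'State: Peer in Cluster (Connected)' || true
}

# Sample text taken from the article's output.
sample_peer_status() {
    cat <<'EOF'
Number of Peers: 1
Hostname: server2
Uuid: 0eda1c59-cad7-4e33-a028-99027c6e99b4
State: Peer in Cluster (Connected)
EOF
}

expected=1   # a two-node pool has one peer besides the local node
actual=$(sample_peer_status | connected_peers)
if [ "$actual" -eq "$expected" ]; then
    echo "all $expected peer(s) connected"
else
    echo "only $actual of $expected peer(s) connected" >&2
fi
```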

3. Configuring a client to use the volume

① Mount the volume on a local directory

    mkdir /app && mount -t glusterfs server1:/gluster_volumes /app
    [root@server1 ~]# df -h
    Filesystem                Size  Used Avail Use% Mounted on
    /dev/sda3                  17G  2.0G   16G  12% /
    devtmpfs                  981M     0  981M   0% /dev
    tmpfs                     992M     0  992M   0% /dev/shm
    tmpfs                     992M  9.6M  982M   1% /run
    tmpfs                     992M     0  992M   0% /sys/fs/cgroup
    /dev/sda1                1014M  124M  891M  13% /boot
    tmpfs                     199M     0  199M   0% /run/user/0
    server1:/gluster_volumes   17G  2.1G   15G  13% /app

② Mount automatically at boot

Edit /etc/fstab (for example with vim) and add the following line:

    server1:/gluster_volumes /app glusterfs defaults,_netdev 0 0
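In this fstab line, server1 is the host the client contacts at mount time to fetch the volume layout, so the mount fails at boot if server1 happens to be down. The GlusterFS FUSE mount helper accepts a `backup-volfile-servers` option that names fallback servers. The sketch below adds such an entry only once; `add_fstab_entry` is a hypothetical helper, and the demo writes to a scratch file rather than the real /etc/fstab.

```shell
#!/bin/sh
# add_fstab_entry is a hypothetical helper: append an fstab line only if no
# non-comment line already uses the same mount point.
add_fstab_entry() {
    # $1 = fstab file, $2 = complete fstab line
    mount_point=$(printf '%s\n' "$2" | awk '{ print $2 }')
    if ! awk -v mp="$mount_point" '
        !/^[[:space:]]*#/ && $2 == mp { found = 1 }
        END { exit !found }' "$1"; then
        printf '%s\n' "$2" >> "$1"
    fi
}

# Same entry as in the article, plus server2 as a fallback volfile server.
entry='server1:/gluster_volumes /app glusterfs defaults,_netdev,backup-volfile-servers=server2 0 0'

# Demo against a scratch file instead of the real /etc/fstab.
fstab=$(mktemp)
add_fstab_entry "$fstab" "$entry"
add_fstab_entry "$fstab" "$entry"   # second call is a no-op
cat "$fstab"
```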

③ Test by writing files to the mounted volume

    for i in $(seq -w 1 100); do cp -rp /var/log/messages /app/copy-test-$i; done

④ Check the GlusterFS brick directory on each node

    [root@server1 ~]# ls /data/gluster_volumes
    copy-test-001  copy-test-014  copy-test-027  copy-test-040  copy-test-053  copy-test-066  copy-test-079  copy-test-092
    copy-test-002  copy-test-015  copy-test-028  copy-test-041  copy-test-054  copy-test-067  copy-test-080  copy-test-093
    copy-test-003  copy-test-016  copy-test-029  copy-test-042  copy-test-055  copy-test-068  copy-test-081  copy-test-094
    copy-test-004  copy-test-017  copy-test-030  copy-test-043  copy-test-056  copy-test-069  copy-test-082  copy-test-095
    copy-test-005  copy-test-018  copy-test-031  copy-test-044  copy-test-057  copy-test-070  copy-test-083  copy-test-096
    copy-test-006  copy-test-019  copy-test-032  copy-test-045  copy-test-058  copy-test-071  copy-test-084  copy-test-097
    copy-test-007  copy-test-020  copy-test-033  copy-test-046  copy-test-059  copy-test-072  copy-test-085  copy-test-098
    copy-test-008  copy-test-021  copy-test-034  copy-test-047  copy-test-060  copy-test-073  copy-test-086  copy-test-099
    copy-test-009  copy-test-022  copy-test-035  copy-test-048  copy-test-061  copy-test-074  copy-test-087  copy-test-100
    copy-test-010  copy-test-023  copy-test-036  copy-test-049  copy-test-062  copy-test-075  copy-test-088
    copy-test-011  copy-test-024  copy-test-037  copy-test-050  copy-test-063  copy-test-076  copy-test-089
    copy-test-012  copy-test-025  copy-test-038  copy-test-051  copy-test-064  copy-test-077  copy-test-090
    copy-test-013  copy-test-026  copy-test-039  copy-test-052  copy-test-065  copy-test-078  copy-test-091

    [root@server2 ~]# ls /data/gluster_volumes/
    copy-test-001  copy-test-014  copy-test-027  copy-test-040  copy-test-053  copy-test-066  copy-test-079  copy-test-092
    copy-test-002  copy-test-015  copy-test-028  copy-test-041  copy-test-054  copy-test-067  copy-test-080  copy-test-093
    copy-test-003  copy-test-016  copy-test-029  copy-test-042  copy-test-055  copy-test-068  copy-test-081  copy-test-094
    copy-test-004  copy-test-017  copy-test-030  copy-test-043  copy-test-056  copy-test-069  copy-test-082  copy-test-095
    copy-test-005  copy-test-018  copy-test-031  copy-test-044  copy-test-057  copy-test-070  copy-test-083  copy-test-096
    copy-test-006  copy-test-019  copy-test-032  copy-test-045  copy-test-058  copy-test-071  copy-test-084  copy-test-097
    copy-test-007  copy-test-020  copy-test-033  copy-test-046  copy-test-059  copy-test-072  copy-test-085  copy-test-098
    copy-test-008  copy-test-021  copy-test-034  copy-test-047  copy-test-060  copy-test-073  copy-test-086  copy-test-099
    copy-test-009  copy-test-022  copy-test-035  copy-test-048  copy-test-061  copy-test-074  copy-test-087  copy-test-100
    copy-test-010  copy-test-023  copy-test-036  copy-test-049  copy-test-062  copy-test-075  copy-test-088
    copy-test-011  copy-test-024  copy-test-037  copy-test-050  copy-test-063  copy-test-076  copy-test-089
    copy-test-012  copy-test-025  copy-test-038  copy-test-051  copy-test-064  copy-test-077  copy-test-090
    copy-test-013  copy-test-026  copy-test-039  copy-test-052  copy-test-065  copy-test-078  copy-test-091

If both nodes show the same 100 files, the GlusterFS deployment is complete.
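Comparing two long listings by eye is error-prone, and matching names do not prove matching content. The sketch below compares checksums instead. `manifest` and `same_content` are hypothetical helpers, and the demo uses two scratch directories as stand-ins for the bricks; on a real cluster you would run `manifest /data/gluster_volumes` on each server (for example over ssh) and compare the two results.

```shell
#!/bin/sh
# manifest is a hypothetical helper: print "<checksum> <name>" for every
# regular file at the top level of directory $1, sorted by name.
# Only top-level regular files are listed, so a brick's internal
# .glusterfs directory is ignored.
manifest() {
    ( cd "$1" && find . -maxdepth 1 -type f -exec cksum {} + \
        | awk '{ print $1, $3 }' | sort -k2 )
}

# same_content is a hypothetical helper: succeed only if both directories
# hold the same file names with the same checksums.
same_content() {
    [ "$(manifest "$1")" = "$(manifest "$2")" ]
}

# Demo with two scratch directories standing in for the two bricks.
brick_a=$(mktemp -d)
brick_b=$(mktemp -d)
for i in 001 002 003; do
    echo "test data $i" > "$brick_a/copy-test-$i"
    cp -p "$brick_a/copy-test-$i" "$brick_b/copy-test-$i"
done

if same_content "$brick_a" "$brick_b"; then
    echo "bricks match"
else
    echo "bricks differ" >&2
fi
```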

V. Using GlusterFS as persistent storage for k8s

1. Create the Endpoints object (the `addresses` list must contain the IP addresses of your GlusterFS nodes)

    [root@k8s-node1 gluster]# cat glusterfs-ep.yaml
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: glusterfs
      namespace: default
    subsets:
    - addresses:
      - ip: 192.168.56.175
      - ip: 192.168.56.176
      ports:
      - port: 49152
        protocol: TCP

2. Create the Service

    [root@k8s-node1 gluster]# cat glusterfs-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: glusterfs
      namespace: default
    spec:
      ports:
      - port: 49152
        protocol: TCP
        targetPort: 49152
      sessionAffinity: None
      type: ClusterIP

3. Create the PV

    [root@k8s-node1 gluster]# cat glusterfs-pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: gluster
      labels:
        type: glusterfs
    spec:
      capacity:
        storage: 100Gi
      accessModes:
      - ReadWriteMany
      glusterfs:
        endpoints: "glusterfs"
        path: "gluster_volumes"
        readOnly: false

4. Create the PVC

    [root@k8s-node1 gluster]# cat glusterfs-pvc.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: gluster
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 20Gi

5. Create an application that mounts the volume as a test

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-demo
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-volume
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nginx-volume
        persistentVolumeClaim:
          claimName: gluster
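The manifests need to be applied in dependency order: Endpoints and Service first, then the PV and PVC, then the Pod. The sketch below prints that sequence plus two verification commands. `run` and `deploy_gluster_pv` are hypothetical helpers, and `nginx-demo.yaml` is an assumed file name because the article does not name the Pod manifest. `DRY_RUN` defaults to 1, so nothing is executed unless you set `DRY_RUN=0` on a machine with a configured kubectl.

```shell
#!/bin/sh
# run is a hypothetical wrapper: print the command in dry-run mode (the
# default), execute it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# deploy_gluster_pv is a hypothetical helper.
# nginx-demo.yaml is an assumed file name for the Pod manifest.
deploy_gluster_pv() {
    for manifest in glusterfs-ep.yaml glusterfs-svc.yaml \
                    glusterfs-pv.yaml glusterfs-pvc.yaml nginx-demo.yaml; do
        run kubectl apply -f "$manifest"
    done
    # The PVC should report STATUS "Bound" once it is matched to the PV.
    run kubectl get pv,pvc
    # Inside the pod, the mount point should be backed by the GlusterFS volume.
    run kubectl exec nginx-demo -- df -h /usr/share/nginx/html
}

deploy_gluster_pv
```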

