|NO.Z.00021|——————————|^^ 案例 ^^|——|Hadoop&Spark.V09|——|Spark.v09|sparkcore|算子综合案例&wordcount-Java|

一、算子综合案例wordcount-java

### --- WordCount - java

~~~ Spark提供了:Scala、Java、Python、R语言的API;对 Scala 和 Java 语言的支持最好;### --- 源码地址说明

~~~ 地址:https://spark.apache.org/docs/latest/rdd-programming-guide.html

二、编程实现wordcount-java程序

### --- 创建wordcount-java程序

package cn.yanqi.sparkcore;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.JavaPairRDD;

import org.apache.spark.api.java.JavaRDD;

import org.apache.spark.api.java.JavaSparkContext;

import scala.Tuple2;

import java.util.Arrays;

public class JavaWordCount {

public static void main(String[] args) {

// 1 创建 JavaSparkContext

SparkConf conf = new SparkConf().setAppName("JavaWordCount").setMaster("local[*]");

JavaSparkContext jsc = new JavaSparkContext(conf);

jsc.setLogLevel("warn");

// 2 生成RDD

JavaRDD<String> lines = jsc.textFile("file:///E:\\NO.Z.10000——javaproject\\NO.Z.00002.Hadoop\\SparkBigData\\data\\wc.txt");

// 3 RDD转换

JavaRDD<String> words = lines.flatMap(line -> Arrays.stream(line.split("\\s+")).iterator());

JavaPairRDD<String, Integer> wordsMap = words.mapToPair(word -> new Tuple2<>(word, 1));

JavaPairRDD<String, Integer> results = wordsMap.reduceByKey((x, y) -> x + y);

// 4 结果输出

results.foreach(elem -> System.out.println(elem));

// 5 关闭SparkContext

jsc.stop();

}

}### --- 备注:

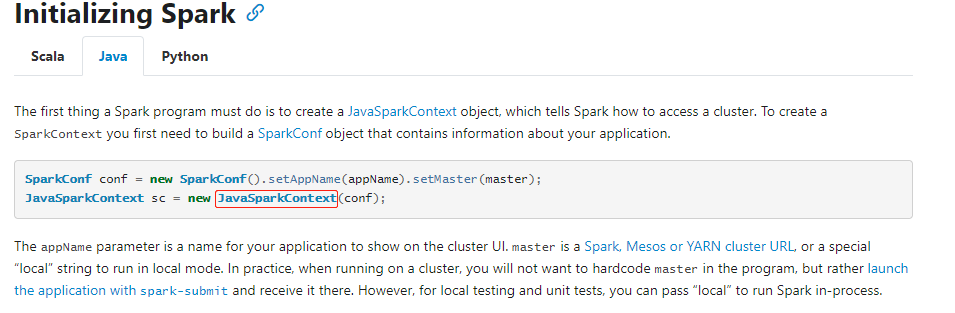

~~~ Spark入口点:JavaSparkContext

~~~ Value-RDD:JavaRDD;key-value RDD:JavaPairRDD

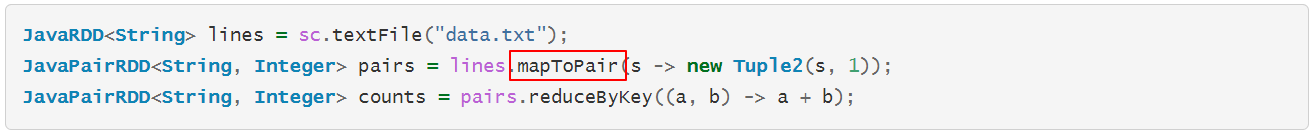

~~~ JavaRDD 和 JavaPairRDD转换

~~~ JavaRDD => JavaPairRDD:通过mapToPair函数

~~~ JavaPairRDD => JavaRDD:通过map函数转换lambda表达式使用 ->### --- 编译打印:

(yanqi,3)

(hadoop,2)

(mapreduce,5)

(hdfs,1)

(yarn,2)Walter Savage Landor:strove with none,for none was worth my strife.Nature I loved and, next to Nature, Art:I warm'd both hands before the fire of life.It sinks, and I am ready to depart

——W.S.Landor

浙公网安备 33010602011771号

浙公网安备 33010602011771号