[ELK] A Complete Guide to Building a Distributed Logging Platform - In Detail

> ELK is an open-source platform made up of Elasticsearch, Logstash, and Kibana for collecting, storing, searching, and visually analyzing logs.


I. Environment Preparation

1.1 Create Directories

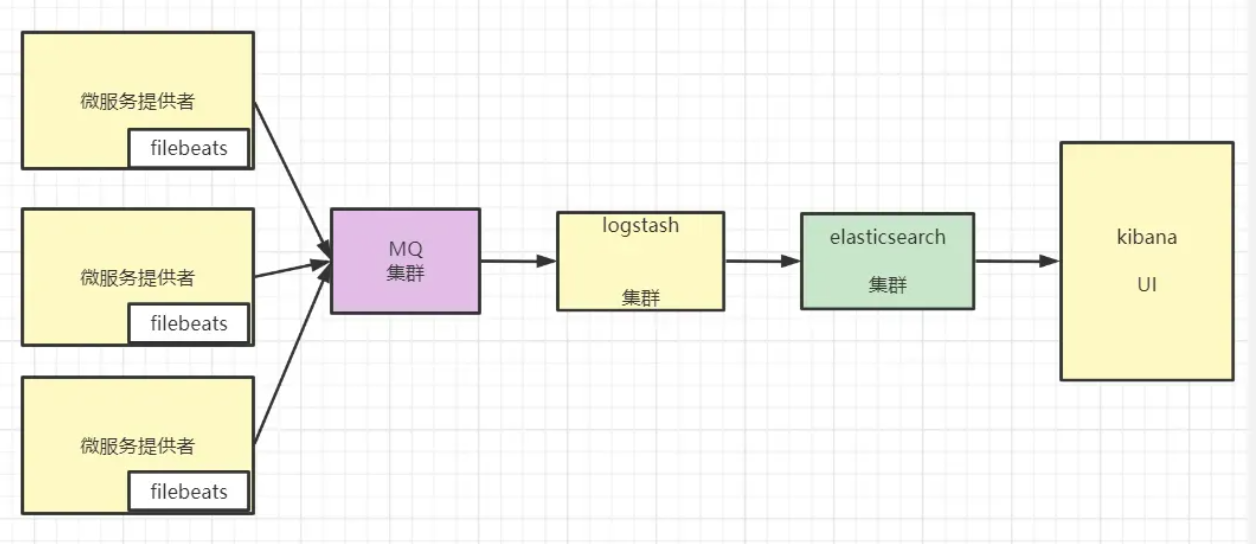

ELK architecture diagram

Create the directory structure:

mkdir -p /opt/elk/{elasticsearch/{data,logs,plugins,config},logstash/{config,pipeline},kibana/config,filebeat/{config,data}}

Set permissions (the paths are relative, so run these from /opt/elk):

cd /opt/elk
chmod -R 777 elasticsearch
chmod -R 777 logstash
chmod -R 777 kibana
chmod -R 777 filebeat
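The brace expansion in the mkdir command is compact but easy to misread. As a rough sketch of what it expands to, the same tree can be described and created in Python. The names `ELK_LAYOUT`, `elk_directories`, and `create_elk_directories` are made up for this illustration and are not part of any ELK tooling.

```python
from pathlib import Path

# Mirrors the brace expansion in the mkdir command: component -> subdirectories.
ELK_LAYOUT = {
    "elasticsearch": ["data", "logs", "plugins", "config"],
    "logstash": ["config", "pipeline"],
    "kibana": ["config"],
    "filebeat": ["config", "data"],
}


def elk_directories(root):
    """Return every leaf directory that the mkdir command creates under root."""
    base = Path(root)
    return [
        base / component / sub
        for component, subs in ELK_LAYOUT.items()
        for sub in subs
    ]


def create_elk_directories(root):
    """Create the tree; like mkdir -p, existing directories are not an error."""
    created = elk_directories(root)
    for path in created:
        path.mkdir(parents=True, exist_ok=True)
    return created
```

Calling `create_elk_directories("/opt/elk")` would produce the same nine leaf directories as the shell command. Permissions are left to the chmod step.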

1.2 Create Configuration Files

Logstash configuration:

vim logstash/config/logstash.yml

http.host: "0.0.0.0"
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.hosts: ["http://elasticsearch:9200"]
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "mH0awV4RrkN2"
# Log level
log.level: info

vim logstash/pipeline/logstash.conf

input {
  beats {
    port => 5045
    ssl => false
  }
  tcp {
    port => 5044
    codec => json
  }
}

filter {
  # RuoYi application logs (highest priority)
  if [app_name] {
    mutate {
      add_field => { "[@metadata][target_index]" => "ruoyi-logs-%{+YYYY.MM.dd}" }
    }
  }
  # System logs
  else if [fields][log_type] == "system" {
    grok {
      match => { "message" => "%{SYSLOGLINE}" }
    }
    date {
      match => [ "timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
      target => "@timestamp"
    }
    mutate {
      add_field => { "[@metadata][target_index]" => "system-log-%{+YYYY.MM.dd}" }
    }
  }
  # Docker container logs
  else if [container] {
    # Try to parse the message as JSON
    if [message] =~ /^\{.*\}$/ {
      json {
        source => "message"
        skip_on_invalid_json => true
      }
    }
    mutate {
      add_field => { "[@metadata][target_index]" => "docker-log-%{+YYYY.MM.dd}" }
    }
  }
  # Any other uncategorized logs
  else {
    mutate {
      add_field => { "[@metadata][target_index]" => "logstash-%{+YYYY.MM.dd}" }
    }
  }

  # Remove fields that are not needed
  mutate {
    remove_field => ["agent", "ecs", "input"]
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
    user => "elastic"
    password => "mH0awV4RrkN2"
    index => "%{[@metadata][target_index]}"
  }

  # Debug output (recommended to keep disabled in production)
  # stdout {
  #   codec => rubydebug
  # }
}

Kibana configuration:

vim kibana/config/kibana.yml

server.name: kibana
server.host: "0.0.0.0"
server.port: 5601
elasticsearch.hosts: ["http://elasticsearch:9200"]
# Chinese UI
i18n.locale: "zh-CN"
# Monitoring
monitoring.ui.container.elasticsearch.enabled: true

Filebeat configuration:

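I have added the system logs and the Docker container logs here as an extension for readers. Adapt them as needed so that ELK receives not only service logs but also, for example, Nginx logs and MySQL slow query logs.

vim filebeat/config/filebeat.yml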

filebeat.inputs:
  # Collect system logs
  - type: log
    enabled: true
    paths:
      - /var/log/messages
      - /var/log/syslog
    tags: ["system"]
    fields:
      log_type: system

  # Collect Docker container logs
  - type: container
    enabled: true
    paths:
      - '/var/lib/docker/containers/*/*.log'
    processors:
      - add_docker_metadata:
          host: "unix:///var/run/docker.sock"

# Output to Logstash
output.logstash:
  hosts: ["logstash:5045"]

# Or output directly to Elasticsearch (choose one of the two)
#output.elasticsearch:
#  hosts: ["elasticsearch:9200"]
#  username: "elastic"
#  password: "mH0awV4RrkN2"
#  index: "filebeat-%{+yyyy.MM.dd}"

# Kibana settings
setup.kibana:
  host: "kibana:5601"
  username: "elastic"
  password: "mH0awV4RrkN2"

# Log level
logging.level: info
logging.to_files: true
logging.files:
  path: /usr/share/filebeat/logs
  name: filebeat
  keepfiles: 7
  permissions: 0644

# Enable monitoring
monitoring.enabled: true
monitoring.elasticsearch:
  hosts: ["elasticsearch:9200"]
  username: "elastic"
  password: "mH0awV4RrkN2"
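To see how events from these Filebeat inputs line up with the Logstash filter shown earlier, the branch order of that filter can be mirrored in a few lines of Python. This is only an approximation for reasoning about routing, not how Logstash evaluates conditionals internally. The function names are invented for the sketch, and it assumes that `%{+YYYY.MM.dd}` formats the event timestamp in UTC.

```python
from datetime import datetime, timezone


def _present(value):
    """Approximate a Logstash field check: missing, null, or false counts as absent."""
    return value is not None and value is not False


def target_index(event, timestamp):
    """Pick the index name using the same branch order as the Logstash filter.

    event is a dict shaped like a Logstash event; timestamp must be a
    timezone-aware datetime standing in for @timestamp.
    """
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    if _present(event.get("app_name")):
        prefix = "ruoyi-logs"      # application logs win over everything else
    elif event.get("fields", {}).get("log_type") == "system":
        prefix = "system-log"      # set by "fields: log_type: system" in filebeat.yml
    elif _present(event.get("container")):
        prefix = "docker-log"      # container metadata added for the container input
    else:
        prefix = "logstash"        # fallback for anything unclassified
    return f"{prefix}-{day}"
```

For example, an event carrying `{"fields": {"log_type": "system"}}` lands in a `system-log-` index, while any event with an `app_name` field goes to `ruoyi-logs-` even if it also carries container metadata.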

Docker Compose configuration:

vim docker-compose.yml

version: '3.8'

services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.12.1
    container_name: elasticsearch
    environment:
      - node.name=es-node-1
      - cluster.name=elk-cluster
      - discovery.type=single-node
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512M -Xmx1g"
      - http.cors.enabled=true
      - http.cors.allow-origin=*
      # === Security and authentication ===
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false # Disable HTTP SSL
      - xpack.security.transport.ssl.enabled=false # Disable SSL for internal transport
      # Initial password for the elastic user (important!)
      - ELASTIC_PASSWORD=mH0awV4RrkN2
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - ./elasticsearch/data:/usr/share/elasticsearch/data
      - ./elasticsearch/logs:/usr/share/elasticsearch/logs
      - ./elasticsearch/plugins:/usr/share/elasticsearch/plugins
    ports:
      - "9200:9200"
      - "9300:9300"
    networks:
      - elk-network
    restart: unless-stopped
    healthcheck:
      # The health check must authenticate
      test: ["CMD-SHELL", "curl -u elastic:mH0awV4RrkN2 -f http://localhost:9200/_cluster/health || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 5

  logstash:
    image: docker.elastic.co/logstash/logstash:7.12.1
    container_name: logstash
    environment:
      - "LS_JAVA_OPTS=-Xms512m -Xmx512m"
      # Uses the elastic superuser (can be changed to logstash_system later)
      - ELASTICSEARCH_USERNAME=elastic
      - ELASTICSEARCH_PASSWORD=mH0awV4RrkN2
    volumes:
      - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash/pipeline:/usr/share/logstash/pipeline
    ports:
      - "5044:5044" # TCP input
      - "5045:5045" # Beats input
      - "9600:9600" # Logstash API
    networks:
      - elk-network
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped

  kibana:
    image: docker.elastic.co/kibana/kibana:7.12.1
    container_name: kibana
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
      - I18N_LOCALE=zh-CN
      # Uses the elastic superuser (can be changed to kibana_system later)
      - ELASTICSEARCH_USERNAME=elastic
      - ELASTICSEARCH_PASSWORD=mH0awV4RrkN2
    volumes:
      - ./kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml
    ports:
      - "5601:5601"
    networks:
      - elk-network
    depends_on:
      elasticsearch:
        condition: service_healthy
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "curl -f http://localhost:5601/api/status || exit 1"]
      interval: 30s
      timeout: 10s
      retries: 5

  filebeat:
    image: docker.elastic.co/beats/filebeat:7.12.1
    container_name: filebeat
    user: root
    volumes:
      - ./filebeat/config/filebeat.yml:/usr/share/filebeat/filebeat.yml:ro
      - ./filebeat/data:/usr/share/filebeat/data
      # Mount the host log directory (adjust to your needs)
      - /var/log:/var/log:ro
      # Needed only when collecting Docker container logs
      - /var/lib/docker/containers:/var/lib/docker/containers:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro
    command: filebeat -e -strict.perms=false
    networks:
      - elk-network
    depends_on:
      - elasticsearch
      - logstash
    restart: unless-stopped

networks:
  elk-network:
    driver: bridge

volumes:
  elasticsearch-data:
    driver: local

Start all services:

docker-compose up -d

Open Kibana in a browser:

http://127.0.0.1:5601
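Before opening Kibana it can help to confirm that Elasticsearch itself is answering. The sketch below performs the same authenticated `_cluster/health` call as the Compose health check, using only the Python standard library. The function names are invented for this example, and it assumes Elasticsearch is reachable on the published port 9200.

```python
import base64
import json
import urllib.request


def build_health_request(base_url, user, password):
    """Build a GET request for _cluster/health with HTTP basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(f"{base_url.rstrip('/')}/_cluster/health")
    request.add_header("Authorization", f"Basic {token}")
    return request


def cluster_status(base_url, user, password, timeout=10):
    """Return the cluster status string reported by Elasticsearch."""
    request = build_health_request(base_url, user, password)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["status"]
```

With the stack running, `cluster_status("http://127.0.0.1:9200", "elastic", "mH0awV4RrkN2")` should return `green` or `yellow`. On a single-node cluster `yellow` is common once indices with replicas exist, because the replicas have no second node to be assigned to.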

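II. System Integration

2.1 Filebeat

The standard flow is for Filebeat to ship logs to Logstash, which then writes them to Elasticsearch. To keep this setup simple, I skip the Filebeat step here.

In a normal deployment, you install Filebeat on every application server, configure the log paths to collect, point it at the central ELK server, and start the Filebeat service.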
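As a sketch of what the per-server step might look like, the helper below renders a minimal filebeat.yml for one application server. It reuses only keys that already appear in the Filebeat configuration from section 1.2. The function name, the example log path, and the host name in the usage note are hypothetical.

```python
def render_filebeat_config(log_paths, logstash_host, log_type):
    """Render a minimal filebeat.yml that ships the given paths to Logstash."""
    lines = [
        "filebeat.inputs:",
        "  - type: log",
        "    enabled: true",
        "    paths:",
    ]
    # Quote each path so glob characters are always read as plain strings.
    lines += [f'      - "{path}"' for path in log_paths]
    lines += [
        "    fields:",
        f"      log_type: {log_type}",
        "",
        "output.logstash:",
        f'  hosts: ["{logstash_host}"]',
    ]
    return "\n".join(lines) + "\n"
```

For instance, `render_filebeat_config(["/home/app/logs/*.log"], "elk.example.internal:5045", "app")` yields a file that points at the Beats input on port 5045 of a central server. Events tagged this way fall through to the `logstash-` index unless the filter gains a matching branch.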

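2.2 Project Integration

Add the dependency:

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>7.2</version>
</dependency>

For the local log file configuration, create logback-elk.xml in the resources directory: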

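The file defines the following outputs and settings, in file order:

Console output
    pattern: ${log.pattern}

INFO log file
    file: ${log.path}/info.log
    rolling file name pattern: ${log.path}/info.%d{yyyy-MM-dd}.log
    max history: 60
    pattern: ${log.pattern}
    level filter: INFO (on match ACCEPT, on mismatch DENY)

ERROR log file
    file: ${log.path}/error.log
    rolling file name pattern: ${log.path}/error.%d{yyyy-MM-dd}.log
    max history: 60
    pattern: ${log.pattern}
    level filter: ERROR (on match ACCEPT, on mismatch DENY)

Logstash output
    destination: ${LOGSTASH_HOST}
    values listed before the custom fields: 5000, 1000, 16384, 5000
    custom fields: {"app_name":"${APP_NAME}"}
    values listed after the custom fields: true, true, false, 5 minutes, 0, 512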
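To make the data flow concrete: the Logstash output configured here ends up delivering JSON events to the `tcp` input of the pipeline (port 5044), and the `app_name` custom field is what the filter uses to route them to the `ruoyi-logs-` index. The Python sketch below imitates that with a hand-built event, which can be handy for testing the pipeline without starting the Java application. The function names and the exact field set are assumptions for illustration; the real encoder emits more fields.

```python
import json
import socket


def encode_event(app_name, level, message):
    """Serialize one log event as a newline-terminated JSON document."""
    event = {
        "app_name": app_name,  # the Logstash filter routes on this field
        "level": level,
        "message": message,
    }
    return (json.dumps(event, ensure_ascii=False) + "\n").encode("utf-8")


def send_event(host, port, payload, timeout=5.0):
    """Open a TCP connection, write one encoded event, and close it."""
    with socket.create_connection((host, port), timeout=timeout) as connection:
        connection.sendall(payload)
```

With the stack running, `send_event("127.0.0.1", 5044, encode_event("ruoyi-system", "INFO", "hello from a test"))` should produce one document in that day's `ruoyi-logs-` index.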

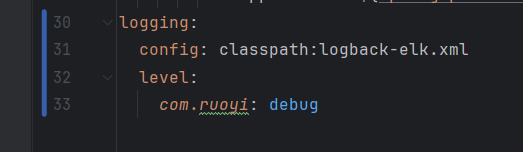

bootstrap.yml configuration

2.3 Viewing Logs

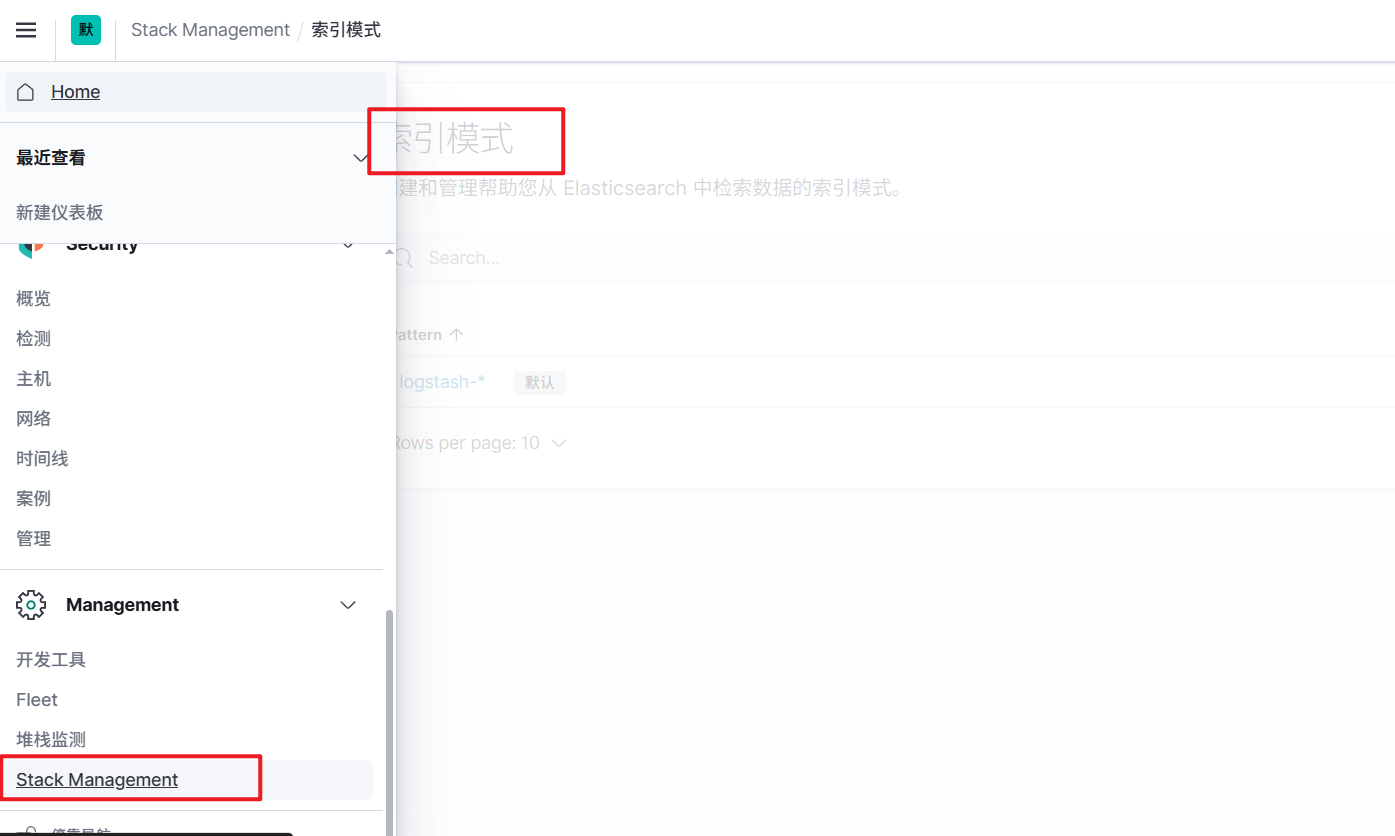

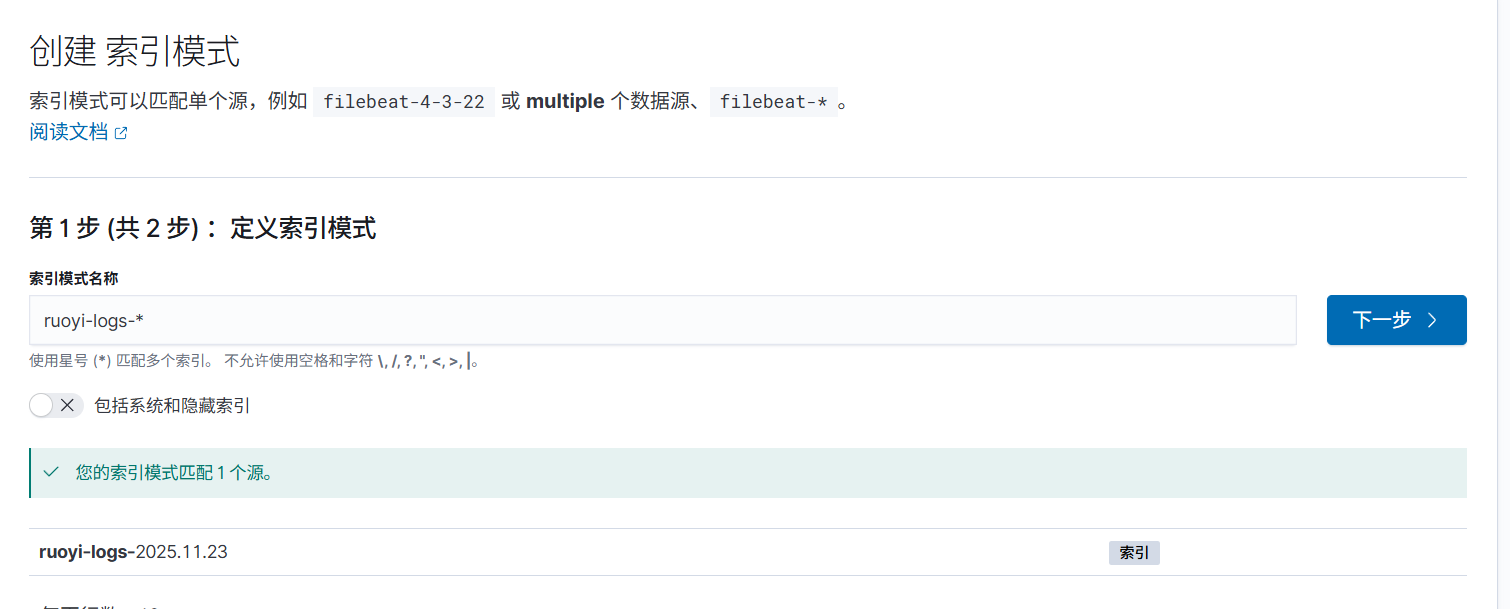

访问kibana地址,输入我们配置的用户名和账号,打开索引模式。

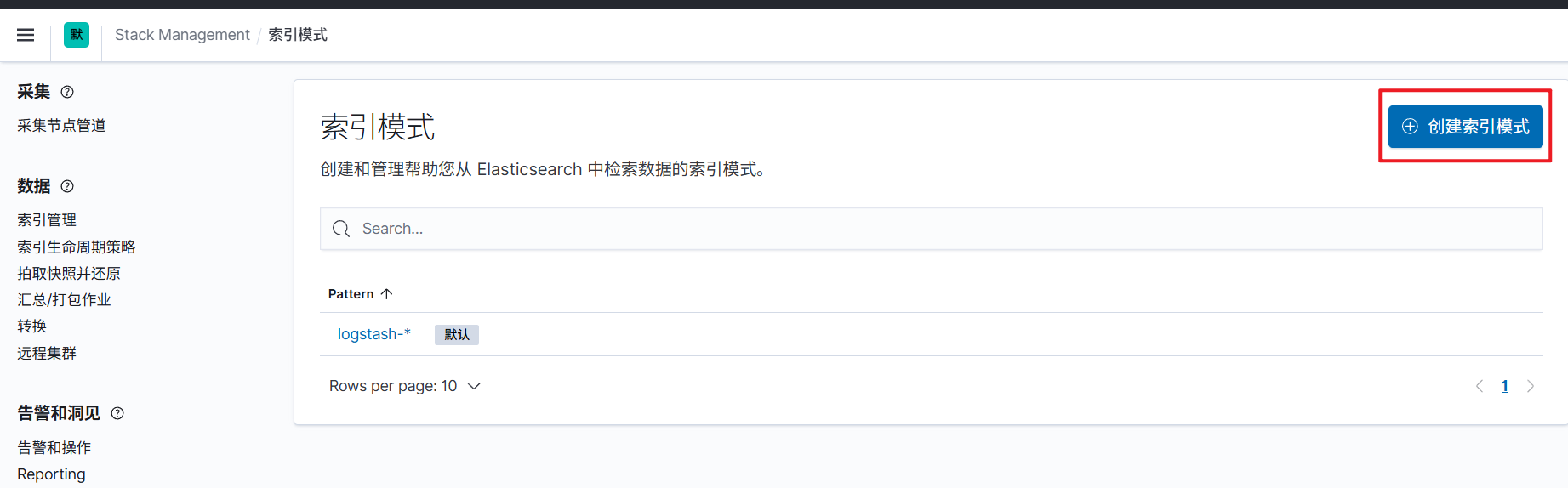

点击创建索引模式

输入:ruoyi-logs-*后点击下一步

时间字段选择如下:

这样我们的索引就创建完成

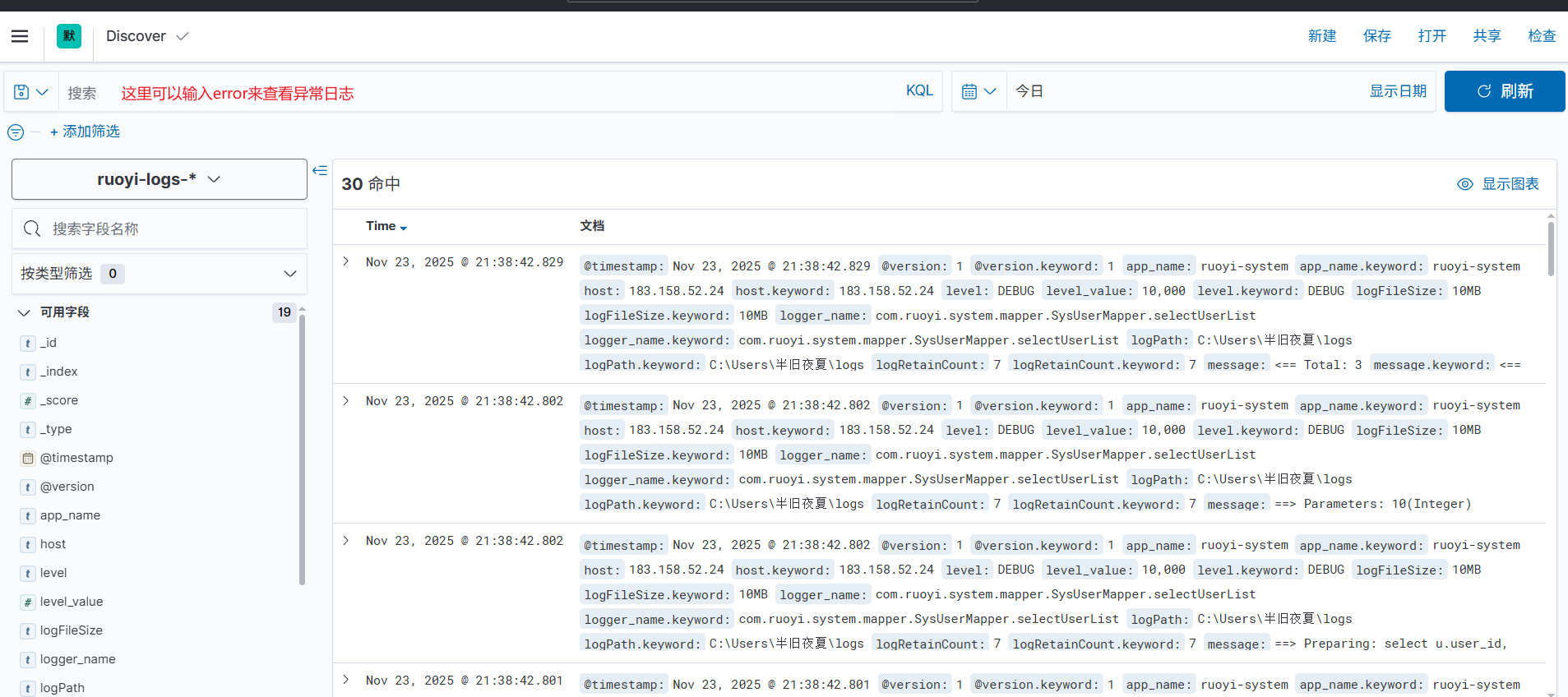

Next, open the Discover page from the left-hand menu.
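The reason a single index pattern is enough is that the pipeline writes one index per day with a shared prefix, and the trailing `*` covers all of them. The sketch below illustrates this with Python's `fnmatch`, which is only an approximation of Kibana's wildcard matching. The helper names are invented for the example.

```python
from datetime import date, timedelta
from fnmatch import fnmatchcase


def daily_indices(prefix, start, days):
    """Return the daily index names written for a run of consecutive days."""
    return [
        f"{prefix}-{(start + timedelta(days=offset)).strftime('%Y.%m.%d')}"
        for offset in range(days)
    ]


def matching_indices(pattern, indices):
    """Return the index names that a wildcard pattern would select."""
    return [name for name in indices if fnmatchcase(name, pattern)]
```

Given `ruoyi-logs-2024.04.30`, `ruoyi-logs-2024.05.01`, and `system-log-2024.05.01`, the pattern `ruoyi-logs-*` selects only the first two, so system and Docker logs need their own index patterns (`system-log-*`, `docker-log-*`).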

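Afterword: this guide does not yet cover integration with a Kafka cluster, because I did not want to take the setup any further. If you know how to integrate MQ or Kafka, please share it in the comments.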

