Kubernetes Basic Concepts

1. Kubernetes basic concepts

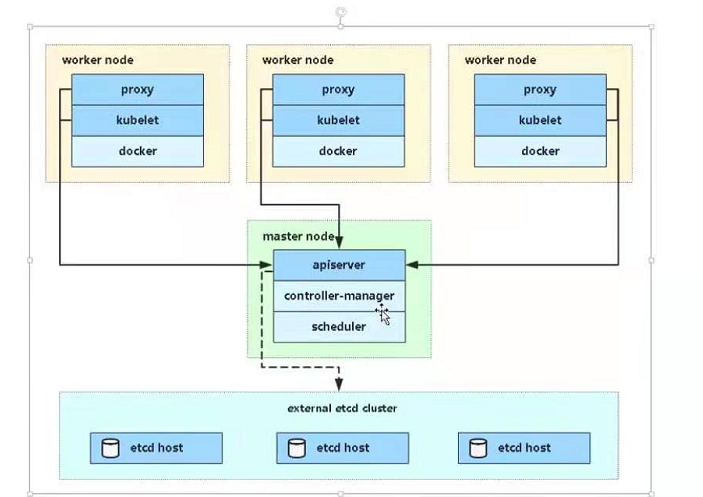

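1. Master components

kube-apiserver

The single entry point to the cluster and the coordinator of all components. It exposes a RESTful API. Every create, read, update, delete and watch operation on object resources goes through the API server, which then persists the result to etcd.

kube-controller-manager

Runs the cluster's routine background tasks. Each resource type has its own controller, and the controller manager is responsible for running and managing these controllers.

kube-scheduler

Selects a node for each newly created Pod according to its scheduling algorithm. Pods can be placed freely: several Pods may land on the same node or be spread across different nodes.

etcd

A distributed key-value store that holds the cluster state, such as Pod and Service objects.

2. Node components

kubelet

The master's agent on each node. It manages the lifecycle of the Pods scheduled to that node.

kube-proxy

Implements Service discovery and reverse proxying in Kubernetes.

It provides network proxying for Pods on each node by creating network rules (for example iptables or IPVS rules) on the node.

docker

The container engine.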

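3. Architecture diagram

1. Pod

1. The smallest deployable unit

2. A group of one or more containers

3. Containers in the same Pod share a network namespace

4. Pods are ephemeral

2. Controllers

1. ReplicaSet: ensures the desired number of Pod replicas is running

2. Deployment: deploys stateless applications

3. DaemonSet: runs one copy of a Pod on every node (or on a selected set of nodes); stateful applications are deployed with a StatefulSet instead

4. Job: one-off tasks

5. CronJob: scheduled tasks

3. Service

1. Keeps Pods reachable even as they are replaced and their IP addresses change

2. Defines an access policy for a group of Pods

4. Label: a key/value tag attached to a resource, used to associate, query and filter objects

5. Namespace: logically isolates objects from each other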

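4. Core add-ons

1. KubeDNS

Runs Pods that provide DNS for the cluster, so that other Pods in the same cluster can resolve names through it. Since Kubernetes 1.11, CoreDNS is the default cluster DNS provider.

2. Kubernetes Dashboard

A web UI for managing the applications in the cluster and even the cluster itself.

3. Heapster

A performance monitoring and analysis system for containers and nodes. It has since been retired: metrics-server now provides resource metrics, and Prometheus is commonly used for monitoring.

4. Ingress Controller

Implements HTTP load balancing at the application layer (layer 7).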

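2. Deploying a Java application on Kubernetes

Build the image → push it to the private registry → create the Pods → expose them with a Service → create storage volumes → logging and monitoring

1. Build the image

Build it from a Dockerfile.

2. Private registry

Use Harbor as the private registry.

3. Create the Pods

kubectl create deployment java-demo --image=lizhenliang/java-demo --dry-run=client -o yaml > deploy.yaml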

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: java-demo
  name: java-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: java-demo
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: java-demo
    spec:
      containers:
      - image: lizhenliang/java-demo
        name: java-demo
        resources: {}
status: {}

kubectl apply -f deploy.yaml

4. Check the Pods

kubectl get pods

kubectl get deployment

5. Create a Service

kubectl expose deployment java-demo --port=80 --target-port=8080 --type=NodePort -o yaml --dry-run=client > service.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: java-demo
  name: java-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 31003
  selector:
    app: java-demo
  type: NodePort
status:
  loadBalancer: {}

kubectl apply -f service.yaml
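The Service above involves three different ports. As a sketch only (the `ServicePort` class and `forwarding_chain` function are hypothetical, not part of any Kubernetes client library), the Python below models the logical path of a request to a NodePort Service and checks that `nodePort` falls in the API server's default NodePort range of 30000-32767 (changeable with the `--service-node-port-range` flag). The IP addresses are made up for illustration.

```python
from dataclasses import dataclass
from typing import List

# Default NodePort range of the API server (30000-32767 inclusive).
NODE_PORT_RANGE = range(30000, 32768)


@dataclass
class ServicePort:
    """Hypothetical model of one entry in a Service's spec.ports list."""
    port: int         # port exposed on the Service's ClusterIP
    target_port: int  # port the container listens on
    node_port: int    # port opened on every node for type: NodePort

    def validate(self) -> None:
        if self.node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {self.node_port} is outside 30000-32767")


def forwarding_chain(sp: ServicePort, node_ip: str, cluster_ip: str, pod_ip: str) -> List[str]:
    """Return the logical hops from an external client to one backing Pod."""
    sp.validate()
    return [
        f"{node_ip}:{sp.node_port}",   # client connects to any node
        f"{cluster_ip}:{sp.port}",     # treated as traffic to the Service
        f"{pod_ip}:{sp.target_port}",  # forwarded to a Pod picked by the selector
    ]


# The java-demo Service: port 80 -> targetPort 8080, exposed on nodePort 31003.
java_demo = ServicePort(port=80, target_port=8080, node_port=31003)
print(forwarding_chain(java_demo, "192.168.31.35", "10.96.100.20", "10.244.1.5"))
```

In practice kube-proxy's rules forward nodePort traffic straight to a Pod; the middle hop is only there to show how `port` relates to the other two fields.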

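3. Understanding the Pod object

1. Pods exist for tightly coupled applications

2. Typical Pod use cases

Two applications exchange files

Two applications need to communicate over 127.0.0.1 or a socket

Two applications call each other frequently

4. How Pods are implemented

1. Shared network

All containers in the same Pod are in the same network namespace.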

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-java
  name: nginx-java
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-java
  strategy: {}
  template:
    metadata:
      labels:
        app: nginx-java
    spec:
      containers:
      - image: lizhenliang/java-demo
        name: java-demo
      - image: nginx
        name: nginx
status: {}

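Exec into each of the two containers in the same Pod and look at their network interfaces:

kubectl exec -it nginx-java-644bc77879-8jdz8 -c java-demo -- /bin/bash

kubectl exec -it nginx-java-644bc77879-8jdz8 -c nginx -- /bin/bash

Both containers in the Pod report the same IP address.

2. Shared storage

Containers share storage through volumes.

3. Shared ports

Because the containers share one network namespace, they also share one port space: two containers in the same Pod cannot listen on the same port.

7. Pod container types and common Pod template fields

1. Container types

Infrastructure container (the "pause" container)

Holds the network namespace for the whole Pod.

InitContainers: initialization containers

Run to completion before the application containers start.

Containers: application containers

2. Common template fields

Environment variables

Image pulling

Resource limits

Health checks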

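8. Deployment controller

1. How Pods relate to controllers

Controllers: objects that manage and run containers on the cluster

They are associated with Pods through label selectors.

Through controllers, Pods get application operations such as scaling and rolling updates.

2. YAML field analysis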

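apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deployment
  name: nginx-deployment   # also used as the prefix of the Pod names
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:latest
        name: nginx

The selector label spec.selector.matchLabels (app: nginx) and the Pod template label spec.template.metadata.labels (app: nginx) must match exactly for the Deployment to select its Pods.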

kubectl apply -f nginx.yaml
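Here is a minimal Python sketch of the `matchLabels` rule described above. The `selector_matches` function is illustrative and does not come from any Kubernetes library. A selector matches a Pod when every key/value pair in the selector appears in the Pod's labels. Extra labels on the Pod do not matter. For an apps/v1 Deployment, the API server also rejects the object outright if `spec.selector` does not match `spec.template.metadata.labels`.

```python
from typing import Dict


def selector_matches(match_labels: Dict[str, str], pod_labels: Dict[str, str]) -> bool:
    """Return True when every key/value pair of match_labels is present in pod_labels."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


selector = {"app": "nginx"}                      # spec.selector.matchLabels
template_labels = {"app": "nginx"}               # spec.template.metadata.labels
deployment_labels = {"app": "nginx-deployment"}  # metadata.labels of the Deployment itself

print(selector_matches(selector, template_labels))    # True: Pods from the template match
print(selector_matches(selector, deployment_labels))  # False: the Deployment's own labels are irrelevant
```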

3. Expose the port

kubectl expose deployment nginx-deployment --port=80 --target-port=80 --type=NodePort -o yaml --dry-run=client > service.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx-deployment
  name: nginx-deployment
spec:
  ports:
  - port: 80            # port on the Service's ClusterIP
    protocol: TCP
    targetPort: 80
    nodePort: 31004
  selector:
    app: nginx          # must match the labels of the target Pods
  type: NodePort
status:
  loadBalancer: {}

4. Upgrade, rollback, and scaling

1. Upgrade

1. Start nginx with the nginx:1.17 image

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: home-deployment
  name: home-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: home-nginx
  template:
    metadata:
      labels:
        app: home-nginx
    spec:
      containers:
      - image: nginx:1.17
        name: home-nginx

2. Upgrade nginx to 1.19 from the command line

kubectl set image deployment home-deployment home-nginx=nginx:1.19

3. Exec into a container and check the nginx version

[root@k8s-master ~]# kubectl exec -it home-deployment-58d757c7b6-bqspw -c home-nginx /bin/bash
root@home-deployment-58d757c7b6-bqspw:/# nginx -v
nginx version: nginx/1.19.1

4. The new image is stored in the Deployment spec, so the change persists: Pods that are recreated later also run the new nginx version.
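`kubectl set image` triggers a rolling update. Its pace is governed by the Deployment's `strategy.rollingUpdate.maxSurge` and `maxUnavailable` fields, which both default to 25%. The sketch below shows how those values translate into Pod counts; the function names are hypothetical. Per the Kubernetes documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down. The guard that forces one unavailable Pod when both resolve to 0 is an assumption modelled on the Deployment controller, so that a rollout can always make progress.

```python
from typing import Dict, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Convert an absolute number or a percentage such as "25%" into a Pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    product = replicas * percent
    # Integer arithmetic avoids floating-point surprises when rounding.
    return -(-product // 100) if round_up else product // 100


def rolling_update_bounds(replicas: int,
                          max_surge: Union[int, str] = "25%",
                          max_unavailable: Union[int, str] = "25%") -> Dict[str, int]:
    surge = resolve(max_surge, replicas, round_up=True)               # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        unavailable = 1  # assumption: avoid a rollout that can never progress
    return {
        "max_total_pods": replicas + surge,            # old + new Pods at any moment
        "min_available_pods": replicas - unavailable,  # Pods that must stay available
    }


# home-deployment has 2 replicas and uses the default strategy.
print(rolling_update_bounds(2))
```

With 2 replicas, the defaults allow one extra Pod during the update and never drop below 2 available Pods.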

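2. Rollback

1. Roll back to the previous revision

kubectl rollout undo deployment home-deployment   # return to the previous revision

2. Roll back to a specific revision

kubectl rollout history deployment/home-deployment   # list the revisions

kubectl rollout undo deployment/home-deployment --to-revision=2   # roll back to revision 2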
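The following toy Python model makes the revision numbering concrete. The `RolloutHistory` class is hypothetical and tracks only an image string per revision. Kubernetes itself tracks the whole Pod template and keeps 10 old ReplicaSets by default through `revisionHistoryLimit`. The model reflects how a rollback works: the target revision is not restored under its old number. It becomes a new, highest revision, and the old number disappears from `kubectl rollout history`.

```python
from typing import Dict, Optional


class RolloutHistory:
    """Toy model of `kubectl rollout history` / `kubectl rollout undo`."""

    def __init__(self) -> None:
        self.revisions: Dict[int, str] = {}  # revision number -> image

    def current(self) -> int:
        return max(self.revisions)

    def rollout(self, image: str) -> int:
        """Record a template change and return the resulting revision number."""
        if self.revisions and self.revisions[self.current()] == image:
            return self.current()  # unchanged template: no new revision
        new_revision = max(self.revisions, default=0) + 1
        # A template that already exists is reused but renumbered.
        for revision, old_image in list(self.revisions.items()):
            if old_image == image:
                del self.revisions[revision]
        self.revisions[new_revision] = image
        return new_revision

    def undo(self, to_revision: Optional[int] = None) -> int:
        """Roll back to to_revision, or to the previous revision when omitted."""
        if to_revision is None:
            if len(self.revisions) < 2:
                raise ValueError("no previous revision to roll back to")
            to_revision = sorted(self.revisions)[-2]
        return self.rollout(self.revisions[to_revision])


history = RolloutHistory()
history.rollout("nginx:1.17")  # revision 1
history.rollout("nginx:1.19")  # revision 2
history.undo()                 # nginx:1.17 becomes revision 3
print(history.revisions)       # {2: 'nginx:1.19', 3: 'nginx:1.17'}
```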

3. Scaling

1. Scale out

kubectl scale --replicas=5 deployment/home-deployment

2. Check the Pods

kubectl get pods -o wide

3. Scale in

kubectl scale --replicas=2 deployment/home-deployment

4. Delete

1. kubectl delete deployment/home-deployment

2. kubectl delete service/nginx

3. kubectl delete pod/home-deployment-58d757c7b6-6dmlg

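9. Service controller

7. Service discovery

1. Using environment variables

1. Limitations

The order of creation matters: the Service must be created before the Pod. Otherwise its environment variables are not injected into the Pod.

A Pod only receives environment variables for Services in its own namespace.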
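For the environment-variable approach, the kubelet derives variable names from the Service name. The name is upper-cased and dashes become underscores, giving `<NAME>_SERVICE_HOST` and `<NAME>_SERVICE_PORT`. Below is a small Python sketch of that naming rule. The `service_env_vars` function and the IP address are illustrative. The kubelet also injects Docker-link-style variables such as `<NAME>_PORT`, which are left out here.

```python
from typing import Dict


def service_env_vars(service_name: str, cluster_ip: str, port: int) -> Dict[str, str]:
    """Return the two main variables injected for a Service into Pods created after it."""
    prefix = service_name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


print(service_env_vars("java-demo", "10.96.100.20", 80))
```

Inside a Pod, `env | grep JAVA_DEMO` would show these variables, but only if the Pod was created after the Service.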

2. Using DNS

The DNS service watches the Kubernetes API and creates a DNS record for every Service, so Pods can look up a Service's address by its DNS name. The CoreDNS manifest below is a template. CLUSTER_DOMAIN, REVERSE_CIDRS, UPSTREAMNAMESERVER, STUBDOMAINS and CLUSTER_DNS_IP are placeholders that must be substituted before the manifest is applied, for example with cluster.local and the cluster DNS Service IP.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes CLUSTER_DOMAIN REVERSE_CIDRS {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . UPSTREAMNAMESERVER {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }STUBDOMAINS
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: CLUSTER_DNS_IP
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

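Once CoreDNS is running, Pods can reach a Service in the same namespace simply by its name (for example java-demo). A Service in another namespace is reachable as <service>.<namespace>.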
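Here is how a short Service name resolves. Each Service gets the name `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is usually `cluster.local`. With the default `ClusterFirst` DNS policy, a Pod's `/etc/resolv.conf` lists the search domains `<pod-namespace>.svc.cluster.local`, `svc.cluster.local` and `cluster.local`, with `ndots:5`. The Python sketch below lists the names a resolver would try, in order. The helper names and the `prod` namespace are hypothetical, and the sketch ignores any extra search domains inherited from the node.

```python
from typing import List


def service_fqdn(service: str, namespace: str = "default", cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def lookup_candidates(name: str, pod_namespace: str,
                      cluster_domain: str = "cluster.local", ndots: int = 5) -> List[str]:
    """Names tried, in order, when a Pod resolves `name` via its resolv.conf search list."""
    if name.endswith("."):
        return [name[:-1]]  # absolute name: the search list is not used
    search = [f"{pod_namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    # Names with at least `ndots` dots are tried as-is first, others last.
    return [name] + expanded if name.count(".") >= ndots else expanded + [name]


print(service_fqdn("kube-dns", "kube-system"))
print(lookup_candidates("java-demo", "default")[0])       # Service in the same namespace
print(lookup_candidates("java-demo.prod", "default")[1])  # Service in namespace "prod"
```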

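10. Using kubectl

2. kubectl in detail

1. Create a Deployment

kubectl create deployment tls-nginx --image=nginx:1.10 --replicas=3 --port=80

(Older kubectl versions used kubectl run tls-nginx --replicas=3 --labels="app=tls-nginx" --image=nginx:1.10 --port=80. Since 1.18, kubectl run only creates a single Pod.)

2. View

kubectl get deployment   # list all Deployments

kubectl get pods   # list all Pods

kubectl get pods --show-labels   # list Pods with their labels

kubectl get pods -o wide   # show which node each Pod runs on

kubectl get pods -l app=nginx   # list Pods with a given label

kubectl get pods -w   # watch Pods for changes in real time

kubectl get pods -n default   # list Pods in the default namespace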

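3. Expose

kubectl expose deployment tls-nginx --port=88 --target-port=80 --name=tls-nginx

4. View resource information

kubectl get service

kubectl get svc

kubectl describe service tls-nginx   # show detailed information about a resource

kubectl describe node node_name   # show a node's resource information

5. Troubleshooting

kubectl describe TYPE NAME

kubectl logs pods_name

kubectl exec -it pods_name -- /bin/bash

6. Update

kubectl set image deployment/nginx nginx=nginx:1.11   # update the image

kubectl set image deployment/nginx nginx=nginx:1.11 --record=true   # update the image and record the command in the rollout history (--record is deprecated in newer kubectl)

kubectl edit deployment/nginx   # edit the live configuration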

7. Rollout management

kubectl rollout status deployment/nginx   # show rollout progress

kubectl rollout history deployment/nginx   # list revisions

kubectl rollout status deployment/nginx --revision=3   # status pinned to revision 3

8. Rollback

kubectl rollout undo deployment/nginx-deployment

kubectl rollout undo deployment/nginx-deployment --to-revision=3

9. Delete

kubectl delete deployment/nginx

kubectl delete svc/nginx-service

10. Scale out / scale in

kubectl scale deployment nginx --replicas=10

11. kubectl command completion

yum install bash-completion -y

bash

source <(kubectl completion bash)

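12. Checking cluster status

1. Check the control-plane components (ComponentStatus is deprecated since v1.19)

kubectl get cs

2. List the resource types the cluster supports

kubectl api-resources

3. Show cluster information

kubectl cluster-info

kubectl cluster-info dump   # much more detailed cluster information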

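5. Viewing logs

1. Logs of Kubernetes system components

1. For components run as systemd services, such as the kubelet:

journalctl -u kubelet

2. Pod logs

kubectl logs tomcat-oms-54ddd5f898-blprn

kubectl logs tomcat-oms-54ddd5f898-blprn -f   # follow the log in real time

kubectl logs tomcat-oms-54ddd5f898-blprn -c <container-name>   # for Pods with multiple containers

kubectl logs kube-proxy-8t9z4 -n kube-system

Pod logs are stored in a directory on the node:

1. Find the container ID

2. Go to the fixed directory on the node: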

/var/lib/docker/containers/decc9e3f7617bd0cd26b61e9ab9b6058bf7dfd05e6ef8c8c335354e295e8e83f/decc9e3f7617bd0cd26b61e9ab9b6058bf7dfd05e6ef8c8c335354e295e8e83f-json.log
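With Docker's default `json-file` log driver, each line of that file is a JSON object with `log`, `stream` and `time` fields. The minimal Python sketch below builds the path from a container ID and decodes a line. The helper names are hypothetical. The sketch assumes Docker's default data root, `/var/lib/docker`. On containerd-based nodes, the logs live under `/var/log/pods` instead.

```python
import json
from pathlib import Path
from typing import Iterator, Tuple

DOCKER_CONTAINERS = Path("/var/lib/docker/containers")  # assumes the default data root


def json_log_path(container_id: str) -> Path:
    """Path of the json-file log for a full 64-character container ID."""
    return DOCKER_CONTAINERS / container_id / f"{container_id}-json.log"


def parse_line(line: str) -> Tuple[str, str, str]:
    """Return (time, stream, message) from one json-file log line."""
    record = json.loads(line)
    return record["time"], record["stream"], record["log"].rstrip("\n")


def read_log(container_id: str) -> Iterator[Tuple[str, str, str]]:
    """Yield parsed entries from a container's log file (needs root on the node)."""
    with json_log_path(container_id).open(encoding="utf-8") as fh:
        for line in fh:
            if line.strip():
                yield parse_line(line)
```

The full container ID appears in the Container ID field of `kubectl describe pod <pod>` (prefixed with `docker://`), or in `docker ps --no-trunc` on the node.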

3. System log

/var/log/messages

2. Logs of applications deployed in the cluster

Standard output

Log files

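11. Managing resources with YAML files

1. Guidelines for configuration files

1. Specify the latest stable API version for each resource (for example v1 for Pod and Service, apps/v1 for Deployment)

2. Store configuration files in version control outside the cluster

3. Write configuration files in YAML

4. Group related objects into a single file, which is usually easier to manage

5. Don't specify default values unnecessarily: simple, minimal configuration reduces errors

6. Add comments to YAML files

2. Practical examples

1. Deployment YAML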

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deployment
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:latest
        name: nginx

2. Service YAML

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx-deployment
  name: nginx-deployment
spec:
  ports:
  - port: 81
    protocol: TCP
    targetPort: 80
    nodePort: 31004
  selector:
    app: nginx
  type: NodePort
status:
  loadBalancer: {}

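3. Ingress YAML (extensions/v1beta1 was removed in Kubernetes 1.22, so the networking.k8s.io/v1 form is used here)

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-test
spec:
  rules:
  - host: test.zhen.net
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-deployment
            port:
              number: 81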

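12. Working with Pods

1. Basic Pod management

1. Create

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx

kubectl create -f pod.yaml

kubectl apply -f pod.yaml

2. Query

kubectl get pods nginx-pod

kubectl describe pod nginx-pod

3. Update

kubectl replace -f pod.yaml

Most Pod spec fields are immutable; the container image is one of the few that can be changed in place. kubectl replace --force -f pod.yaml deletes and recreates the Pod instead.

4. Delete

kubectl delete pod nginx-pod

kubectl delete -f pod.yaml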

2. Pod resource limits

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      requests:          # minimum guaranteed; used by the scheduler
        memory: "64Mi"
        cpu: "250m"      # 0.25 CPU; also acts as a relative weight (CPU shares)
      limits:            # upper bound
        memory: "128Mi"
        cpu: "500m"
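CPU is measured in cores, so `250m` means 250 millicores, a quarter of a core. The memory suffixes `Ki`, `Mi` and `Gi` are powers of 1024, while `k`, `M` and `G` are powers of 1000. The API server rejects a container whose request exceeds its limit. Below is a Python sketch of those unit rules. The function names are hypothetical, and only the common suffixes are handled; the full Kubernetes quantity syntax also allows exponents and more suffixes.

```python
from decimal import Decimal
from typing import Dict

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_millicores(quantity: str) -> int:
    """'250m' -> 250, '0.5' -> 500, '2' -> 2000."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'64Mi' -> 67108864, '128M' -> 128000000, '1024' -> 1024."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    for suffix, factor in DECIMAL_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def check_resources(requests: Dict[str, str], limits: Dict[str, str]) -> None:
    """Raise ValueError if a request is larger than the matching limit."""
    if cpu_millicores(requests["cpu"]) > cpu_millicores(limits["cpu"]):
        raise ValueError("cpu request exceeds cpu limit")
    if memory_bytes(requests["memory"]) > memory_bytes(limits["memory"]):
        raise ValueError("memory request exceeds memory limit")


# Values from the nginx-pod example above.
check_resources({"cpu": "250m", "memory": "64Mi"}, {"cpu": "500m", "memory": "128Mi"})
print(cpu_millicores("250m"), memory_bytes("64Mi"))
```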

3. Pod scheduling constraints

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  # nodeName: node01
  nodeSelector:
    env_role: dev
  containers:
  - name: nginx
    image: nginx
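`nodeSelector` only lets the Pod run on nodes that carry all of the listed labels, so a node first needs the label, for example via `kubectl label nodes node01 env_role=dev`. Setting `nodeName` (commented out above) bypasses the scheduler entirely and binds the Pod to that node. Below is a Python sketch of just this filtering step. The function name and node data are hypothetical, and the real scheduler also checks resources, taints, affinity and more.

```python
from typing import Dict, List

Nodes = Dict[str, Dict[str, str]]  # node name -> node labels


def candidate_nodes(pod_spec: dict, nodes: Nodes) -> List[str]:
    """Return the nodes on which the Pod may be placed, considering only nodeName/nodeSelector."""
    node_name = pod_spec.get("nodeName")
    if node_name:
        # nodeName skips scheduling; if no such node exists the Pod cannot start.
        return [node_name] if node_name in nodes else []
    selector = pod_spec.get("nodeSelector", {})
    return sorted(
        name for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


nodes = {"node01": {"env_role": "dev"}, "node02": {"env_role": "prod"}, "node03": {}}
print(candidate_nodes({"nodeSelector": {"env_role": "dev"}}, nodes))
```

If no node matches, the Pod stays Pending, and `kubectl describe pod` shows a FailedScheduling event.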

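4. Restart policy

There are three policies:

1. Always: restart the container whenever it exits; this is the default

2. OnFailure: restart the container only when it exits with an error (a non-zero exit code)

3. Never: never restart the container after it exits

4. Example

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx
  restartPolicy: OnFailure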
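The decision each policy makes can be written as a small function. This is a sketch: the function names are hypothetical. The back-off figures (10 s, doubling up to a 5-minute cap) come from the Kubernetes documentation on container restarts. Pods managed by a Deployment only accept `restartPolicy: Always`.

```python
from typing import List


def should_restart(policy: str, exit_code: int) -> bool:
    """Whether the kubelet restarts a container that exited with exit_code."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy}")


def backoff_delays(restarts: int, base: int = 10, cap: int = 300) -> List[int]:
    """Delays in seconds before successive restarts: exponential, capped at five minutes."""
    return [min(base * 2**attempt, cap) for attempt in range(restarts)]


print(should_restart("OnFailure", 0), should_restart("OnFailure", 1))  # False True
print(backoff_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```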

14. Adding a worker node to the cluster

1. Complete the node preparation steps

2. Run kubeadm join on the new node. If the token has expired, do the following:

3. Create a new token

[root@k8s-master ~]# kubeadm token create
kk0ee6.nhvz5p85avmzyof3
[root@k8s-master ~]# kubeadm token list
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION                                                EXTRA GROUPS
bgis60.aubeva3pjl9vf2qx   6h    2020-02-04T17:24:00+08:00   authentication,signing   The default bootstrap token generated by 'kubeadm init'.   system:bootstrappers:kubeadm:default-node-token
kk0ee6.nhvz5p85avmzyof3   23h   2020-02-05T11:02:44+08:00   authentication,signing   <none>                                                     system:bootstrappers:kubeadm:default-node-token

4. Compute the CA certificate hash

kubeadm token create --print-join-command   # prints a complete join command, including the token and hash

[root@k8s-master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
9db128fe4c68b0e65c19bb49226fc717e64e790d23816c5615ad6e21fbe92020

5. Join the node to the cluster

[root@k8s-node1 ~]# kubeadm join --token kk0ee6.nhvz5p85avmzyof3 --discovery-token-ca-cert-hash sha256:9db128fe4c68b0e65c19bb49226fc717e64e790d23816c5615ad6e21fbe92020 192.168.31.35:6443
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Activating the kubelet service
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
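The values the join command checks can be reproduced in a few lines of Python. A bootstrap token has the form `<6-character id>.<16-character secret>`, using lowercase letters and digits. `--discovery-token-ca-cert-hash` is the SHA-256 of the CA's public key in DER-encoded SubjectPublicKeyInfo form, which is what the openssl pipeline above computes. The sketch uses hypothetical helper names and only the standard library. It reads the PEM public key, as printed by `openssl x509 -pubkey -noout -in /etc/kubernetes/pki/ca.crt`, and hashes the decoded bytes.

```python
import base64
import hashlib
import re
from typing import Tuple

TOKEN_RE = re.compile(r"^([a-z0-9]{6})\.([a-z0-9]{16})$")


def parse_token(token: str) -> Tuple[str, str]:
    """Split a bootstrap token into (token id, token secret), validating its format."""
    match = TOKEN_RE.match(token)
    if not match:
        raise ValueError(f"not a valid bootstrap token: {token!r}")
    return match.group(1), match.group(2)


def ca_cert_hash(public_key_pem: str) -> str:
    """sha256 of the DER SubjectPublicKeyInfo inside a 'BEGIN PUBLIC KEY' PEM block."""
    lines = [line.strip() for line in public_key_pem.strip().splitlines()]
    if lines[0] != "-----BEGIN PUBLIC KEY-----" or lines[-1] != "-----END PUBLIC KEY-----":
        raise ValueError("expected a PEM 'PUBLIC KEY' block")
    der = base64.b64decode("".join(lines[1:-1]))
    return "sha256:" + hashlib.sha256(der).hexdigest()


print(parse_token("kk0ee6.nhvz5p85avmzyof3"))
```

Passing the openssl output for the cluster's ca.crt to `ca_cert_hash` should give the same digest as the pipeline above, prefixed with `sha256:` as `kubeadm join` expects.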

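15. Building a Kubernetes cluster from binaries

16. Installing and using Helm

1. About Helm

The package manager for Kubernetes.

Core terms:

1. helm: the command-line management tool

2. chart: a package that bundles a set of Kubernetes YAML files

3. release: an instance of a chart deployed to a target cluster

4. Repository: a chart repository, i.e. an HTTP/HTTPS server that serves charts

chart ---> config ---> release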

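2. Problems it solves

1. Manages an application's Kubernetes YAML files in one place

2. Reuses YAML files efficiently

3. Supports application-level versioning

3. Changes in Helm v3

1. Architecture change: Tiller is removed, and helm talks to the API server directly using kubeconfig

2. Release names can be reused in different namespaces

3. Charts can be pushed to container registries such as a Docker registry (OCI support, experimental in early v3 releases)

4. Installation

1. Download the desired Helm release from GitHub: https://github.com/helm/helm/releases

2. Extract it: tar -zxvf helm-v3.0.0-linux-amd64.tar.gz

3. Move the helm binary (linux-amd64/helm in the archive) to /usr/bin

5. Configuration and usage

1. Add a chart repository

helm repo add stable http://mirror.azure.cn/kubernetes/charts

2. List the configured repositories

helm repo list

3. Search the repositories for a chart

helm search repo elk

NAME                   CHART VERSION   APP VERSION   DESCRIPTION
stable/elastic-stack   2.0.3           6             A Helm chart for ELK

4. Install the chart

helm install elk stable/elastic-stack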

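6. Creating your own chart

1. Create the chart

helm create mychart

This creates a mychart directory in the current directory with the following layout:

charts/        charts that this chart depends on

Chart.yaml     metadata such as the chart version and the application version

templates/     template files; put your YAML files here

values.yaml    default values shared by all the templates

2. Put your own YAML files in mychart/templates

service.yaml

deployment.yaml

helm install tomcat-oms mychart

3. Upgrade the release

helm upgrade tomcat-oms mychart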

7. Chart development and templates

1. To deploy different applications from one set of YAML, these fields need to change:

Resource name

Image

Labels

Replica count

Port

2. Edit values.yaml

replicaCount: 1
image:
  repository: harbor.zp.com/dev_zp/tomcat
  tag: v2
name: oms
service:
  targetPort: 8080
ingress:
  enabled: true
  hosts:
    - host: back.dev.zp.com
resources:
  limits:
    cpu: 500m
    memory: 2048Mi
  requests:
    cpu: 100m
    memory: 128Mi

3. Edit the YAML files under templates to reference these values

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: tomcat-{{ .Values.name }}
  name: tomcat-{{ .Values.name }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      app: tomcat-{{ .Values.name }}
  template:
    metadata:
      labels:
        app: tomcat-{{ .Values.name }}
    spec:
      containers:
      - image: {{ .Values.image.repository }}:{{ .Values.image.tag }}
        name: tomcat-{{ .Values.name }}
        volumeMounts:
        - name: tomcat-{{ .Values.name }}-logs
          mountPath: /usr/local/apache-tomcat-8.5.43/logs
        - name: tomcat-{{ .Values.name }}-webapps
          mountPath: /usr/local/apache-tomcat-8.5.43/webapps
        ports:
        - containerPort: {{ .Values.service.targetPort }}
        resources:
          requests:
            memory: "{{ .Values.resources.requests.memory }}"
            cpu: "{{ .Values.resources.requests.cpu }}"
          limits:
            memory: "{{ .Values.resources.limits.memory }}"
            cpu: "{{ .Values.resources.limits.cpu }}"
      imagePullSecrets:
      - name: myregsecret
      volumes:
      - name: tomcat-{{ .Values.name }}-logs
        nfs:
          server: harbor.zp.com
          path: /zp/dev/{{ .Values.name }}/logs
      - name: tomcat-{{ .Values.name }}-webapps
        nfs:
          server: harbor.zp.com
          path: /zp/dev/{{ .Values.name }}/webapps

service.yaml

apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: tomcat-{{ .Values.name }}
  name: tomcat-{{ .Values.name }}
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: {{ .Values.service.targetPort }}
  selector:
    app: tomcat-{{ .Values.name }}
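Helm renders these files with Go's text/template engine, plus the Sprig function library. To illustrate what `{{ .Values.x.y }}` does, here is a toy Python renderer that only handles plain `.Values` lookups. The function names are hypothetical, and this is not how Helm is implemented. Unlike Helm, it raises `KeyError` for a missing value.

```python
import re
from typing import Any, Dict

PLACEHOLDER = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_.]+)\s*\}\}")


def lookup(values: Dict[str, Any], dotted_path: str) -> Any:
    """Follow a path such as 'image.repository' through nested dictionaries."""
    node: Any = values
    for key in dotted_path.split("."):
        node = node[key]
    return node


def render(template: str, values: Dict[str, Any]) -> str:
    """Replace every {{ .Values.<path> }} with the matching value from values.yaml."""
    return PLACEHOLDER.sub(lambda m: str(lookup(values, m.group(1))), template)


values = {  # a subset of the values.yaml above
    "replicaCount": 1,
    "name": "oms",
    "image": {"repository": "harbor.zp.com/dev_zp/tomcat", "tag": "v2"},
}
print(render("name: tomcat-{{ .Values.name }}", values))
print(render("- image: {{ .Values.image.repository }}:{{ .Values.image.tag }}", values))
```

To see Helm's real output without installing anything, `helm template tomcat-oms mychart` prints the rendered manifests.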

4. Upgrading the chart version

5. Update a release on the fly with overridden values

helm upgrade --set replicaCount=3 tomcat-oms tomcat-oms/
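`--set replicaCount=3` overrides one entry of values.yaml for this upgrade only, without editing the file. Dotted keys such as `image.tag=v3` reach into nested maps. Below is a toy Python sketch of that merge. The function is hypothetical. The real `--set` also parses comma-separated pairs, list indices and more value types.

```python
import copy
from typing import Any, Dict


def coerce(raw: str) -> Any:
    """Simplified type detection: integers and booleans, otherwise a string."""
    if raw in ("true", "false"):
        return raw == "true"
    try:
        return int(raw)
    except ValueError:
        return raw


def apply_set(values: Dict[str, Any], assignment: str) -> Dict[str, Any]:
    """Return a copy of values with one 'a.b.c=value' override applied."""
    path, _, raw = assignment.partition("=")
    keys = path.split(".")
    result = copy.deepcopy(values)
    node = result
    for key in keys[:-1]:
        node = node.setdefault(key, {})
    node[keys[-1]] = coerce(raw)
    return result


values = {"replicaCount": 1, "image": {"repository": "harbor.zp.com/dev_zp/tomcat", "tag": "v2"}}
print(apply_set(values, "replicaCount=3")["replicaCount"])
print(apply_set(values, "image.tag=v3")["image"])
```

`helm get values tomcat-oms` shows which user-supplied values a release is currently using.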

6. Rollback

helm history tomcat-oms   # list the release history

helm rollback tomcat-oms 1   # roll back to revision 1

7. Lint the chart

helm lint tomcat-oms

==> Linting tomcat-oms
[INFO] Chart.yaml: icon is recommended

1 chart(s) linted, 0 chart(s) failed

8. Package the chart

helm package tomcat-oms

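17. Deploying an EFK log collection stack

18. Kubernetes network plugins

1. Calico

2. Flannel

19. Application lifecycle management

1. What a Deployment does

1. Manages Pods and ReplicaSets

2. Handles rollouts of new application versions

20. Running kubectl as a regular user

# Create the user
useradd xuel
passwd xuel
# Switch to the regular user
su - xuel
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown xuel:xuel $HOME/.kube/config
# Configure environment variables (admin.conf itself is readable only by root,
# so point KUBECONFIG at the user's own copy)
export KUBECONFIG=$HOME/.kube/config
echo "source <(kubectl completion bash)" >> ~/.bashrc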

21. Using Harbor as the image registry for Kubernetes

1. Log in to Harbor on k8s-master

docker login harbor.xxx.com

2. docker login writes the credentials to config.json

The file is created in the hidden .docker directory under the home directory of the account that logged in, for example /root/.docker/config.json.

3. Create a Secret

kubectl create secret docker-registry myregsecret --namespace=default --docker-server=172.16.1.237 --docker-username=admin --docker-password=aaaa --docker-email=aaaaa@zhen.com

4. View the Secrets

kubectl get secret

5. Delete a Secret

kubectl delete secret myregsecret
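`kubectl create secret docker-registry` stores credentials in the same shape as Docker's config.json: an `auths` map keyed by registry server, where `auth` is the base64 encoding of `username:password`. The whole JSON document is then base64-encoded into the Secret's `.dockerconfigjson` key, with type `kubernetes.io/dockerconfigjson`. The Python sketch below builds the equivalent manifest. The function names are hypothetical, and the credentials are the placeholder ones from the command above.

```python
import base64
import json
from typing import Any, Dict, Optional


def docker_config_json(server: str, username: str, password: str, email: Optional[str] = None) -> str:
    """Build a config.json-style document with one registry entry."""
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    entry = {"username": username, "password": password, "auth": auth}
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


def registry_secret(name: str, namespace: str, config_json: str) -> Dict[str, Any]:
    """Secret manifest as a dict; data values must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": base64.b64encode(config_json.encode()).decode()},
    }


config = docker_config_json("172.16.1.237", "admin", "aaaa", "aaaaa@zhen.com")
print(json.dumps(registry_secret("myregsecret", "default", config), indent=2))
```

Pods use the Secret through `imagePullSecrets`; the tomcat Deployment template in the Helm section references `myregsecret` this way.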

22. Kubernetes errors

1. Running kubectl fails with: Unable to connect to the server: x509: certificate has expired or is not yet valid

浙公网安备 33010602011771号
