Deep neural networks: error when running tensorrt: Unable to determine GPU memory usage: In getGpuMemStatsInBytes at common/extended/resources.cpp:1167

[10/27/2025-20:40:38] [TRT] [W] Unable to determine GPU memory usage: In getGpuMemStatsInBytes at common/extended/resources.cpp:1167

[10/27/2025-20:40:38] [TRT] [W] Unable to determine GPU memory usage: In getGpuMemStatsInBytes at common/extended/resources.cpp:1167

terminate called after throwing an instance of 'nvinfer1::APIUsageError'

what(): CUDA initialization failure with error: 35. Please check your CUDA installation: http://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html In checkCudaInstalledAndPrintMemoryUsage at optimizer/api/builder.cpp:1238

Aborted (core dumped)

Solution:

Reference:

https://forums.developer.nvidia.com/t/deepstream-sample-code-cannot-work/341684/2

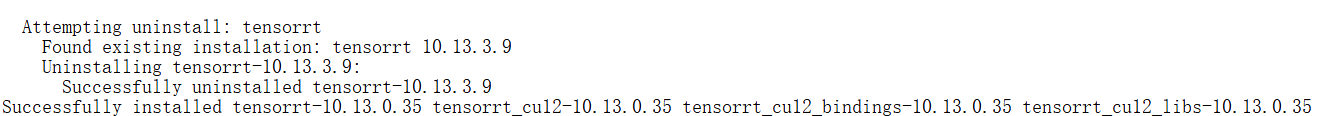

In the end, the problem was solved by installing an older version of tensorrt:

pip install tensorrt==10.13.0.35

In other words, downgrading tensorrt from 10.13.3.9 to 10.13.0.35 is enough.
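To confirm which tensorrt packages pip actually left in the environment after the downgrade, a check along these lines may help. This is only a minimal sketch: `installed_versions` is a hypothetical helper name, and the `tensorrt` prefix is an assumption about how the packages are named on your machine.

```python
from importlib import metadata


def installed_versions(prefixes, dists=None):
    """Return {name: version} for distributions whose name starts with a prefix.

    `dists` is an optional iterable of (name, version) pairs; when omitted,
    the packages installed in the current environment are scanned.
    """
    if dists is None:
        dists = ((d.metadata["Name"], d.version) for d in metadata.distributions())
    # Treat "_" and "-" as the same character so both spellings match.
    wanted = tuple(p.lower().replace("_", "-") for p in prefixes)
    found = {}
    for name, version in dists:
        # Skip entries with missing metadata (name is None).
        if name and name.lower().replace("_", "-").startswith(wanted):
            found[name] = version
    return found


if __name__ == "__main__":
    # Hypothetical usage: print every installed package starting with "tensorrt".
    for name, version in sorted(installed_versions(["tensorrt"]).items()):
        print(f"{name}=={version}")
```

If the output still shows 10.13.3.9 for any of the listed packages, the downgrade did not fully take effect.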

The configuration of this machine is:

NVIDIA-SMI 550.127.08 Driver Version: 550.127.08 CUDA Version: 12.4

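In other words, with CUDA version 12.4, tensorrt 10.13.3.9 fails with the error above, while tensorrt 10.13.0.35 runs successfully. Error 35 in the log is CUDA's cudaErrorInsufficientDriver, which means the installed driver is older than what the CUDA runtime in use requires.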
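The driver and CUDA versions can also be read programmatically from the `nvidia-smi` header instead of by eye. The sketch below assumes the header has the same layout as the line quoted above; `parse_smi_header` is a hypothetical helper name. Note that the "CUDA Version" reported by `nvidia-smi` is the highest CUDA version the driver supports, not necessarily the toolkit that is installed.

```python
import re
import shutil
import subprocess


def parse_smi_header(text):
    """Extract (driver_version, cuda_version) from nvidia-smi header text.

    Returns None when the expected fields are not found.
    """
    m = re.search(r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)", text)
    return (m.group(1), m.group(2)) if m else None


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found on PATH")
    else:
        # Run nvidia-smi with no arguments and parse its banner.
        out = subprocess.run(["nvidia-smi"], capture_output=True, text=True).stdout
        print(parse_smi_header(out))
```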

Another user also shared their own configuration:

I solved the problem myself by verifying that the TensorRT version (10.7.0.23-1+cuda12.6) matches the CUDA version.

In other words, with CUDA version 12.6, tensorrt 10.7.0.23 runs successfully.

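To summarize, the tensorrt version is tied to the CUDA version. If tensorrt fails at runtime, check whether the installed tensorrt version matches the CUDA version on the machine.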
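The matching rule can be sketched as a small check. This assumes a package version string that carries a `+cudaX.Y` suffix, as in the forum quote above; pip versions such as 10.13.0.35 have no such suffix, so the CUDA version must then come from elsewhere. Both function names are hypothetical, and the comparison is deliberately conservative: CUDA's minor-version compatibility may allow some combinations that this check rejects.

```python
import re


def cuda_from_package_version(pkg_version):
    """Return (major, minor) parsed from a '+cudaX.Y' suffix, or None if absent."""
    m = re.search(r"\+cuda(\d+)\.(\d+)", pkg_version)
    return (int(m.group(1)), int(m.group(2))) if m else None


def driver_supports(driver_cuda, build_cuda):
    """Conservative check: the driver's CUDA version must be >= the build's.

    `driver_cuda` is a string such as "12.4" (as shown by nvidia-smi);
    `build_cuda` is a (major, minor) tuple.
    """
    major, minor = (int(x) for x in driver_cuda.split(".")[:2])
    return (major, minor) >= build_cuda


if __name__ == "__main__":
    build = cuda_from_package_version("10.7.0.23-1+cuda12.6")
    print(build, driver_supports("12.6", build))
```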

posted on 2025-10-27 21:07 Angry_Panda
