Data Collection: Comprehensive Practice

Course: 2025 Data Collection and Fusion Technology

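Project overview

Team name and project summary

Team name: 基米大哈气

Project background: Bilibili video comments are numerous and noisy. This project provides intelligent filtering and classification so that users can quickly grasp the prevailing opinion in a video's comment section.

Project objective: Build an integrated system that supports comment crawling, intelligent classification, banned-word management, and visual analysis, so that comments can be filtered precisely and presented along several dimensions.

Technical approach: The front end uses React + React Router for component-based development. The back end uses Flask + MySQL to manage data and APIs. The core algorithm is a locally deployed Qwen2.5 large language model, optimized with LoRA fine-tuning and 4-bit quantization. The system is deployed on Huawei Cloud.

Team member student IDs

102302113 (王光诚), 102302115 (方朴), 102302119 (庄靖轩), 102302120 (刘熠黄), 102302121 (许友钿), 102302122 (许志安), 102302123 (许洋), 102302147 (傅乐宜)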

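Project goals

- Intelligent classification: taking the video type into account (for example gaming or anime), automatically assign each comment to one of five categories: normal, argument, advertisement, @mention, or meaningless.

- Data visualization: provide comment statistics, the category distribution, a high-frequency word cloud, and a comment trend curve to show the characteristics of the data at a glance.

- Banned-word management: support adding, deleting, querying, and updating entries in the banned-word list in real time, so that the filter stays effective.

- Automated crawling: the user only needs to enter a Bilibili link; the system then fetches the comments and processes them automatically. Background music can be played while crawling.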
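The five categories and the category-distribution statistic from the goals above can be sketched as a small data structure. This is only an illustration: the names `CommentCategory` and `category_distribution` are hypothetical and do not come from the project code, and the labels are assumed to be produced elsewhere by the classifier.

```python
from collections import Counter
from enum import Enum


class CommentCategory(Enum):
    """The five categories named in the project goals (identifiers are hypothetical)."""
    NORMAL = "normal"
    ARGUMENT = "argument"
    ADVERTISEMENT = "advertisement"
    MENTION = "mention"  # "@someone" comments
    MEANINGLESS = "meaningless"


def category_distribution(labels):
    """Return {category: share of comments}, always covering all five categories."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        cat: (counts[cat] / total if total else 0.0)
        for cat in CommentCategory
    }


# Hypothetical labels, as if returned by the classifier for four comments
labels = [
    CommentCategory.NORMAL,
    CommentCategory.NORMAL,
    CommentCategory.ADVERTISEMENT,
    CommentCategory.MENTION,
]
dist = category_distribution(labels)
print({cat.value: share for cat, share in dist.items()})
```

Returning an entry for every category, including those with a share of zero, keeps the input to a distribution chart stable from one video to the next.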


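Gitee link (files larger than 1 GB could not be uploaded to Gitee, so all of the source code is hosted on GitHub instead; the team shares a single code base rather than splitting it by member)

Project code (GitHub): https://github.com/liuliuliuliu617-maker/-/tree/master <br> Project demo: http://1.94.247.8/ (available until the 31st; the cloud voucher will probably have expired after that)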

Division of work

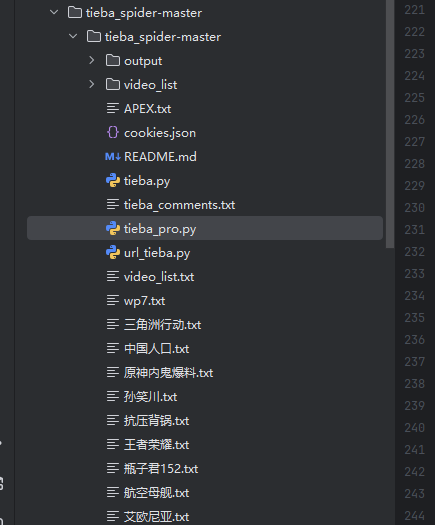

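In this project I was mainly responsible for collecting comments, images, and emotes from Tieba forums in different domains. Bilibili comments alone are too narrow and are not distinctive enough for training, so Tieba comments were added to increase both the size and the quality of the dataset. My core tasks were designing the collection workflow, handling the site's anti-crawling measures, cleaning the data, and standardizing the data structure.

Crawling Tieba requires a simulated login and a list of target thread URLs, so the code is split into two parts:

Obtaining cookies and thread URLs

Log in to Tieba manually in a Selenium-driven browser; the script then saves the cookies automatically, and the crawler reuses them directly to request the thread listing and collect the thread URLs under the target keyword.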

import requests
from lxml import etree
import time
import random
from urllib.parse import quote
import json
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager

# ===================================================
# 1. Log in to Tieba and save the cookies
# ===================================================
def login_and_save_cookie():
    url = "https://tieba.baidu.com/"
    print(">>> Launching Chrome; please log in to Tieba manually ...")

    service = Service(ChromeDriverManager().install())
    options = webdriver.ChromeOptions()
    options.add_experimental_option("detach", True)  # keep the browser open after login
    driver = webdriver.Chrome(service=service, options=options)
    driver.get(url)

    input(">>> After logging in, press Enter to save the cookies ... ")
    cookies = driver.get_cookies()
    driver.quit()

    with open("cookies.json", "w", encoding="utf-8") as f:
        json.dump(cookies, f, ensure_ascii=False, indent=4)
    print(">>> Cookies saved to cookies.json\n")

# ===================================================
# 2. Load the cookies for Requests and build the Cookie header
# ===================================================
def load_cookie_string():
    with open("cookies.json", "r", encoding="utf-8") as f:
        cookies = json.load(f)
    # Selenium stores a list of dicts; join them into one "name=value; ..." header value
    cookie_str = "; ".join(f"{c['name']}={c['value']}" for c in cookies)
    return cookie_str

# ===================================================
# 3. Collect thread URLs
# ===================================================
def fetch_post_urls(keyword, min_reply=40, max_pages=50, max_count=100):
    print(f"\n>>> Collecting threads for keyword [{keyword}] with at least {min_reply} replies")
    print(f">>> Collecting at most {max_count} thread URLs\n")

    encoded_kw = quote(keyword)
    all_urls = []
    cookie_str = load_cookie_string()
    headers = {
        "User-Agent": "Mozilla/5.0",
        "Referer": "https://tieba.baidu.com/",
        "Cookie": cookie_str
    }

    for page in range(max_pages):
        # Stop paging once the limit is reached
        if len(all_urls) >= max_count:
            print("\n>>> Reached the maximum number of URLs; stopping pagination.")
            break

        pn = page * 50  # the listing is paged in steps of 50 threads
        url = f"https://tieba.baidu.com/f?kw={encoded_kw}&ie=utf-8&pn={pn}"
        print(f"\n>>> Requesting: {url}")

        # A timeout keeps one stalled request from hanging the whole run
        html = requests.get(url, headers=headers, timeout=30).text
        tree = etree.HTML(html)
        li_list = tree.xpath('//li[contains(@class,"j_thread_list")]')
        if not li_list:
            print("No threads on this page; reached the end.")
            break

        for li in li_list:
            # Check the limit again inside the page
            if len(all_urls) >= max_count:
                print("\n>>> Reached the maximum number of URLs; stopping on this page.")
                break

            # Reply count
            reply_text = li.xpath('.//span[@class="threadlist_rep_num center_text"]/text()')
            if not reply_text:
                continue
            reply = reply_text[0].strip()
            if not reply.isdigit():
                continue
            reply = int(reply)
            if reply < min_reply:
                continue

            # Thread link
            href = li.xpath('.//a[contains(@class,"j_th_tit")]/@href')
            if not href:
                continue
            post_url = "https://tieba.baidu.com" + href[0]
            print(f"✓ Captured: {post_url} replies: {reply}")
            all_urls.append(post_url)

        # Random pause between listing pages
        time.sleep(random.uniform(1, 2))

    return all_urls

# ===================================================
# 4. Main program
# ===================================================
def main():
    print("Press Enter to skip if the cookies are already saved; otherwise enter 1 to log in to Tieba:")
    choice = input("> ").strip()
    if choice == "1":
        login_and_save_cookie()

    keyword = input("Enter the Tieba keyword: ").strip()
    urls = fetch_post_urls(keyword)

    filename = f"{keyword}.txt"
    with open(filename, "w", encoding="utf-8") as f:
        for u in urls:
            f.write(u + "\n")
    print(f"\n>>> Saved {len(urls)} thread URLs to file: {filename}")

if __name__ == "__main__":
    main()

Crawling comments from the saved URLs

# tieba_requests_crawler.py
import requests
import json
import time
import os
import re
import random
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry
import urllib3
from lxml import etree

urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

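# ================================================
# 0. Text-cleaning module
# ================================================
def clean_comment(text: str) -> str:
    """
    Take one raw `text` field and return only the comment body.

    Removes:
    - the folded-floor notice "该楼层疑似违规已被系统折叠 隐藏此楼查看此楼"
    - "送TA礼物" (the gift widget) and everything after it
    - the trailer "IP属地:xxx 来自客户端 xxx楼 时间 回复"
      (IP location, client, floor number, timestamp, reply link)
    - "收起回复" (collapse replies)
    - the muted-by-thread-starter notice
    """
    if not text:
        return ""

    # Collapse every run of whitespace into a single space
    t = re.sub(r"\s+", " ", text).strip()

    # Remove the folded-floor notice
    t = re.sub(
        r"^该楼层疑似违规已被系统折叠 隐藏此楼查看此楼",
        "",
        t
    ).strip()

    # Remove "送TA礼物" and everything after it
    t = t.split("送TA礼物")[0].strip()

    # Remove the IP-location trailer; accept both the ASCII and the full-width colon
    t = re.sub(r"IP属地[::].*$", "", t).strip()

    # Remove "来自XXXX客户端" (posted from ... client) and everything after it
    t = re.sub(r"来自[^ ]*客户端.*$", "", t).strip()

    # Remove the "N楼" floor number followed by a yyyy-mm-dd date, and everything after it
    t = re.sub(r"\d+楼\d{4}-\d{2}-\d{2}.*$", "", t).strip()

    # Remove the notice "被楼主禁言,将不能再进行回复" (either comma form).
    # This runs before the "回复" rule below, which would otherwise cut the
    # notice in half and leave "被楼主禁言,将不能再进行" behind.
    t = re.sub(r"被楼主禁言[,,]将不能再进行回复.*$", "", t).strip()

    # Remove the "回复" / "收起回复" links and everything after them.
    # Note: this also truncates a comment whose own text contains "回复".
    t = re.sub(r"回复收起回复.*$", "", t).strip()
    t = re.sub(r"回复.*$", "", t).strip()

    return t

def clean_record(record: dict) -> dict:
    """Clean a single JSON record."""
    if "text" in record:
        record["text"] = clean_comment(record["text"])
    return record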

# ================================================
# 1. Session & Retry
# ================================================
def make_session():
    session = requests.Session()
    retries = Retry(
        total=6,
        backoff_factor=1,
        status_forcelist=[500, 502, 503, 504, 429],
        allowed_methods=["GET", "POST", "HEAD"]
    )
    adapter = HTTPAdapter(max_retries=retries, pool_connections=10, pool_maxsize=10)
    session.mount("https://", adapter)
    session.mount("http://", adapter)
    return session

session = make_session()

DOMAIN_SLEEP = 8
REQUEST_TIMEOUT = 30
RETRY_DELAY = 5

# ================================================
# 2. Load the cookies
# ================================================
def load_cookies_for_requests(session):
    cookie_file = "cookies.json"
    if not os.path.exists(cookie_file):
        raise FileNotFoundError("cookies.json not found; log in with Selenium and save cookies.json first")

    with open(cookie_file, "r", encoding="utf-8") as f:
        cookie_list = json.load(f)

    cookie_dict = {}
    cookie_str_parts = []
    for c in cookie_list:
        name = c.get("name")
        value = c.get("value")
        if not name:
            continue
        cookie_dict[name] = value
        cookie_str_parts.append(f"{name}={value}")

    # Register the cookies on the session and also return them as a header string
    session.cookies.update(cookie_dict)
    cookie_str = "; ".join(cookie_str_parts)
    return cookie_str

def make_headers(cookie_str=""):
    return {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
        "Referer": "https://tieba.baidu.com/",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8",
        "Accept-Language": "zh-CN,zh;q=0.9",
        "Cookie": cookie_str
    }

# ================================================
# 3. Utility: extract the post_id
# ================================================
def extract_post_id(url):
    # Thread URLs look like https://tieba.baidu.com/p/<digits>
    m = re.search(r"/p/(\d+)", url)
    return m.group(1) if m else None

# ================================================
# 4. Extract and clean raw comments
# ================================================
def is_meaningless_short(text: str) -> bool:
    """Filter out meaningless replies: bare numbers, very short text, symbols, emoji, etc."""
    if not text:
        return True

    # Strip whitespace and invisible characters
    t = re.sub(r"[\s\u200b\ufeff\u3000]+", "", text)
    # Strip emoji (characters outside the Basic Multilingual Plane)
    t = re.sub(r"[\U00010000-\U0010ffff]", "", t)
    # Strip leftover HTML tags (nested replies, <a> remnants)
    t = re.sub(r"<[^>]+>", "", t)
    # Strip the folded-floor notice ("该楼层...查看此楼")
    t = re.sub(r"该楼层.*?查看此楼", "", t)
    t = t.strip()

    # Purely numeric replies of any length
    if re.fullmatch(r"\d+", t):
        return True
    # Anything of length <= 2 is treated as meaningless
    if len(t) <= 2:
        return True
    # Punctuation only (including Chinese punctuation)
    if re.fullmatch(r"[^\w\u4e00-\u9fff]+", t):
        return True
    # Runs such as "。。。。。" or "???" (ASCII and full-width forms)
    if re.fullmatch(r"[\.。??!!~~·…]+", t):
        return True

    return False

def extract_clean_comment(post_el):
    # ------------------------
    # Case A: the post contains an <a> with class j-no-opener-url
    if post_el.xpath('.//a[contains(@class, "j-no-opener-url")]'):
        return None

    # Case B: a div whose id starts with post_content_ has a direct <a> child
    # (for example //*[@id="post_content_152750964834"]/a)
    if post_el.xpath('.//div[starts-with(@id, "post_content_")]/a'):
        return None

    # ------------------------
    # Skip posts that contain nested replies
    # ------------------------
    if post_el.xpath('.//div[contains(@class,"lzl_cnt")]'):
        return None

    # descendant-or-self also covers the fallback in fetch_comments, where
    # post_el is itself the d_post_content div
    content_el = post_el.xpath('descendant-or-self::div[contains(@class,"d_post_content")]')
    if not content_el:
        return None
    el = content_el[0]

    # Skip comments that consist of a single <a> (usually a redirect or card)
    a_only = el.xpath('./a')
    if a_only and len(el) == 1:
        return None

    # Raw text of the element
    try:
        raw_text = el.xpath('string(.)').strip()
    except Exception:
        raw_text = "".join(el.itertext()).strip()

    # Text cleaning
    text = clean_comment(raw_text)

    # Length check after cleaning (<= 1 character counts as invalid)
    if len(text) <= 1:
        return None

    # Stricter filter for short or meaningless content
    if is_meaningless_short(text):
        return None

    # Images and emotes
    pics = []
    emote_pics = []

    img_srcs = el.xpath('.//img[@class="BDE_Image"]/@src')
    for s in img_srcs:
        if s.startswith("//"):
            s = "https:" + s  # protocol-relative URL
        pics.append(s)

    emo = el.xpath('.//img[contains(@class,"BDE_Smiley") or contains(@class,"BDE_M")]/@src')
    for s in emo:
        if s.startswith("//"):
            s = "https:" + s
        emote_pics.append(s)

    return {
        "text": text,
        "pictures": pics,
        "emote_pictures": emote_pics
    }

# ================================================
# 5. Fetch comments page by page
# ================================================
def fetch_comments(post_id, headers, limit=20):
    collected = []
    page = 1
    max_empty_pages = 3  # pages on which no post blocks could be found
    empty_page_count = 0
    empty_valid_count = 0
    MAX_EMPTY_VALID = 2
    timeout_retries = 0
    MAX_TIMEOUT_RETRIES = 3  # cap, so repeated timeouts cannot loop forever

    while len(collected) < limit:
        url = f"https://tieba.baidu.com/p/{post_id}?pn={page}"
        headers["Referer"] = f"https://tieba.baidu.com/p/{post_id}"
        try:
            resp = session.get(url, headers=headers, timeout=REQUEST_TIMEOUT, verify=False)
            resp.raise_for_status()
            html = resp.text
            tree = etree.HTML(html)
            post_blocks = tree.xpath('//div[contains(@class,"l_post")]')

            # Fallback when the usual page structure is missing
            if not post_blocks:
                post_blocks = tree.xpath(
                    '//div[contains(@class,"d_post_content_wrapper")]//div[contains(@class,"d_post_content")]'
                )
            if not post_blocks:
                empty_page_count += 1
                if empty_page_count >= max_empty_pages:
                    break
                page += 1
                time.sleep(random.uniform(1, 2))
                continue

            # Number of valid comments on this page
            valid_count_this_page = 0
            for pb in post_blocks:
                clean = extract_clean_comment(pb)
                if clean is None:
                    continue
                if not clean["text"] and not clean["pictures"] and not clean["emote_pictures"]:
                    continue
                collected.append(clean)
                valid_count_this_page += 1
                if len(collected) >= limit:
                    break

            # ============================================================
            # Two consecutive pages without valid comments: stop this thread
            # ============================================================
            if valid_count_this_page == 0:
                empty_valid_count += 1
            else:
                empty_valid_count = 0
            if empty_valid_count >= MAX_EMPTY_VALID:
                print(f"⚠ Thread {post_id}: {MAX_EMPTY_VALID} consecutive pages without valid comments, stopping early")
                break

            page += 1
            time.sleep(random.uniform(1, 2))

        except requests.exceptions.RequestException as e:
            print(f"  Failed to fetch page {page} of thread {post_id}: {e}")
            if isinstance(e, requests.exceptions.Timeout) and timeout_retries < MAX_TIMEOUT_RETRIES:
                timeout_retries += 1
                print(f"Timed out; retrying in {RETRY_DELAY} seconds...")
                time.sleep(RETRY_DELAY)
                continue
            break

    return collected[:limit]

# ================================================
# 6. Crawl a single thread (text cleaning included)
# ================================================
def scrape_single_post(post_id, domain_name, headers):
    time.sleep(random.uniform(1, 3))
    print(f"Crawling thread: {post_id}")

    raw_comments = fetch_comments(post_id, headers, limit=20)
    if not raw_comments:
        print(f"  No comments retrieved: {post_id}")
        return False

    # Clean every comment
    cleaned_comments = [clean_record(r) for r in raw_comments]

    save_dir = os.path.join("output", domain_name)
    os.makedirs(save_dir, exist_ok=True)
    out_path = os.path.join(save_dir, f"{post_id}.json")

    try:
        with open(out_path, "w", encoding="utf-8") as f:
            json.dump(cleaned_comments, f, ensure_ascii=False, indent=2)
        print(f"  Saved: {out_path} ({len(cleaned_comments)} comments)")
        return True
    except Exception as e:
        print(f"  Failed to save file: {e}")
        return False

# ================================================

# 7. 主流程(批量爬)

# ================================================

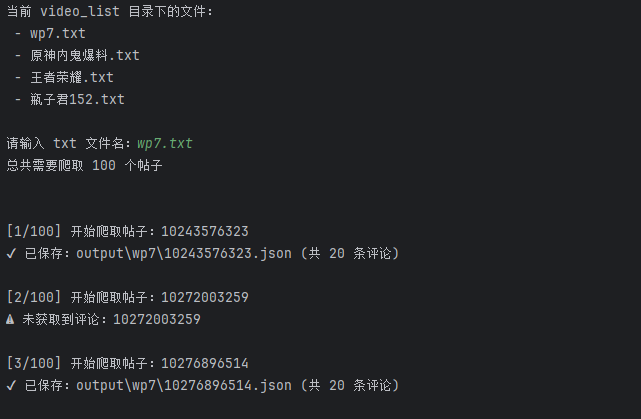

def main():

try:

cookie_str = load_cookies_for_requests(session)

except Exception as e:

print(" 加载 cookies 失败:", e)

return

headers = make_headers(cookie_str)

print("当前 video_list 目录下的文件:")

video_list_dir = "video_list"

if not os.path.exists(video_list_dir):

print(f"{video_list_dir} 不存在")

return

txt_files = [f for f in os.listdir(video_list_dir) if f.endswith(".txt")]

for f in txt_files:

print(" -", f)

if not txt_files:

print(" 无 txt 文件")

return

target_txt = input("\n请输入 txt 文件名:").strip()

if target_txt not in txt_files:

print(" 文件不存在")

return

domain_name = target_txt.replace(".txt", "")

txt_path = os.path.join(video_list_dir, target_txt)

with open(txt_path, "r", encoding="utf-8") as f:

urls = [line.strip() for line in f if line.strip()]

print(f"总共需要爬取 {len(urls)} 个帖子\n")

BATCH_SIZE = 10

BATCH_SLEEP = 300

success = 0

fail = 0

failed_posts = []

for i, url in enumerate(urls, 1):

post_id = extract_post_id(url)

if not post_id:

print(f" 无法解析 post_id:{url}")

fail += 1

continue

print(f"\n[{i}/{len(urls)}] ", end="")

if scrape_single_post(post_id, domain_name, headers):

success += 1

else:

fail += 1

failed_posts.append(post_id)

if i % BATCH_SIZE == 0 and i < len(urls):

print(f"\n 完成 {i} 个帖子,休息 {BATCH_SLEEP} 秒...")

time.sleep(BATCH_SLEEP)

if failed_posts:

failed_file = os.path.join("output", domain_name, "failed_tasks.txt")

with open(failed_file, "w", encoding="utf-8") as f:

for pid in failed_posts:

f.write(pid + "\n")

print(f" 失败任务已保存:{failed_file}")

print(f"\n 完成领域 {domain_name} 的爬取 | 成功 {success} | 失败 {fail}")

if __name__ == "__main__":

main()
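The cleaning rules in `clean_comment` above can be tried on made-up input. The sketch below is a condensed restatement of the main steps, not the crawler's own function: `clean_comment_demo` is a hypothetical name, the sample strings are invented, and the character class `[::]` is an assumption that lets the rule match either colon form.

```python
import re


def clean_comment_demo(text: str) -> str:
    """Condensed restatement of the main cleaning steps, for illustration only."""
    t = re.sub(r"\s+", " ", text).strip()        # collapse whitespace
    t = t.split("送TA礼物")[0].strip()             # drop the gift widget and what follows
    t = re.sub(r"IP属地[::].*$", "", t).strip()   # drop the IP-location trailer
    t = re.sub(r"\d+楼\d{4}-\d{2}-\d{2}.*$", "", t).strip()  # drop "floor + date"
    t = re.sub(r"回复.*$", "", t).strip()         # drop the reply link and what follows
    return t


# Invented sample resembling the flattened text of one floor
raw = "这个视频的剪辑节奏\n很舒服   IP属地:福建 来自Android客户端 12楼2024-03-01 12:30 回复"
print(clean_comment_demo(raw))        # 这个视频的剪辑节奏 很舒服

# Side effect of the last rule: a comment that itself contains "回复" is cut short
print(clean_comment_demo("我来回复一下楼主的问题"))  # 我来
```

The second call shows a trade-off of the broad `回复.*$` rule: it reliably strips the page trailer, but it also shortens genuine comments that mention replying. Anchoring the rule to the end of the string would be one way to narrow it.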

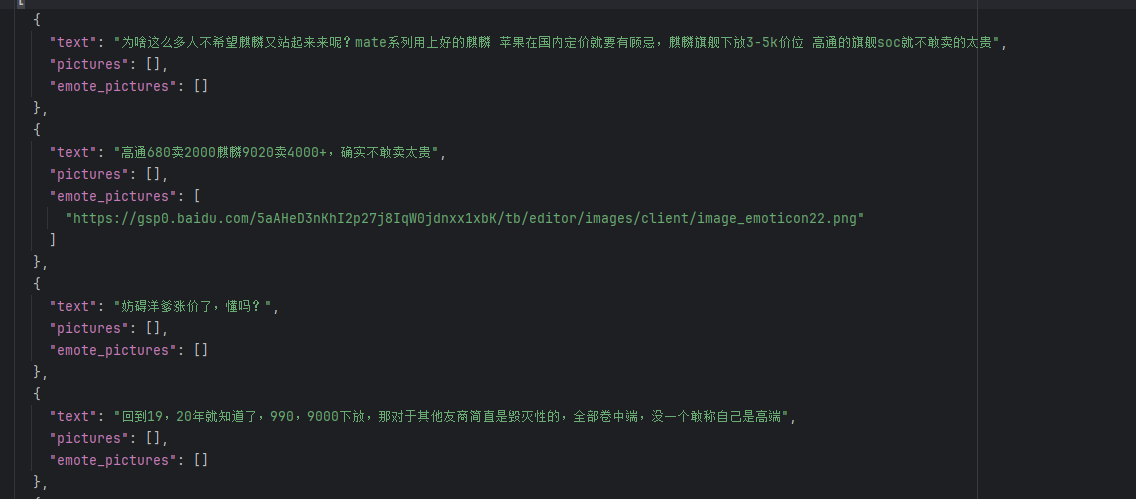

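Compared with data collection on Bilibili, Tieba appears to be only lightly moderated, possibly because the site is older. The data we collected contain many explicit or veiled instances of the banned words and phrases we are targeting, along with a great deal of advertising and other useless content, so there is a lot to clean. Tieba's heavy use of nested replies (楼中楼) and its threads-within-threads browsing model also make it hard to fit into the comment management system we have built so far, so we did not extend the system with a Tieba module. Instead, we consolidated the large volume of data crawled from Tieba into a higher-quality dataset, with the aim of improving the accuracy of our model training.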
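One way the per-thread files could be consolidated into a single dataset is sketched below. It assumes the `output/<domain>/<post_id>.json` layout and the `text` / `pictures` / `emote_pictures` record shape written by the crawler above. The function name `merge_domain_outputs`, the JSONL target format, and the exact-text deduplication rule are assumptions made for illustration, not the team's actual pipeline.

```python
import json
import tempfile
from pathlib import Path


def merge_domain_outputs(output_dir, merged_path):
    """Merge output/<domain>/<post_id>.json files into one JSONL file.

    Records whose text was already seen are skipped. Returns the number of rows written.
    """
    seen = set()
    written = 0
    with open(merged_path, "w", encoding="utf-8") as out:
        for json_file in sorted(Path(output_dir).glob("*/*.json")):
            domain = json_file.parent.name
            for record in json.loads(json_file.read_text(encoding="utf-8")):
                text = record.get("text", "").strip()
                if not text or text in seen:
                    continue
                seen.add(text)
                row = {"domain": domain, **record, "text": text}
                out.write(json.dumps(row, ensure_ascii=False) + "\n")
                written += 1
    return written


# Self-contained demo in a temporary directory with invented records
with tempfile.TemporaryDirectory() as tmp:
    post_dir = Path(tmp) / "output" / "game"
    post_dir.mkdir(parents=True)
    first = [
        {"text": "这个角色太强了", "pictures": [], "emote_pictures": []},
        {"text": "求攻略", "pictures": [], "emote_pictures": []},
    ]
    second = [{"text": "求攻略", "pictures": [], "emote_pictures": []}]
    (post_dir / "1001.json").write_text(json.dumps(first, ensure_ascii=False), encoding="utf-8")
    (post_dir / "1002.json").write_text(json.dumps(second, ensure_ascii=False), encoding="utf-8")

    merged = Path(tmp) / "dataset.jsonl"
    count = merge_domain_outputs(Path(tmp) / "output", merged)
    rows = [json.loads(line) for line in merged.read_text(encoding="utf-8").splitlines()]

print(count, [row["text"] for row in rows])
```

Keeping the domain name on every row preserves the forum a comment came from, which can later serve as context when the comments are labeled for training.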

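Summary

Because the dataset is the starting point of the whole project, I studied some of the data collection material ahead of schedule. Through repeated trial and error and feedback from teammates, I worked out how to stay within the site's anti-crawling limits by tuning the amount of data fetched per run and the request frequency, and I settled on the target data and its structure. The result is a crawling workflow that is reproducible, portable to other sources, and sustainable over long runs. This practice gave me a more concrete understanding of how fundamental data collection is to the entire model training pipeline.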
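The request-frequency tuning mentioned above can be made concrete with a small planning helper. The sketch reuses the values that appear in the crawler (a random 1 to 3 second pause per thread and a 300 second rest after every 10 threads), but the function `build_schedule` and its parameters are hypothetical. It only computes the waiting plan and never sleeps, so it is safe to run.

```python
import random


def build_schedule(n_posts, batch_size=10, batch_sleep=300, jitter=(1.0, 3.0), seed=None):
    """Return a list of (kind, seconds) pauses for crawling n_posts threads."""
    rng = random.Random(seed)
    schedule = []
    for i in range(1, n_posts + 1):
        # Short random pause before each thread
        schedule.append(("jitter", rng.uniform(*jitter)))
        # Long rest after every full batch, except after the last thread
        if i % batch_size == 0 and i < n_posts:
            schedule.append(("batch_rest", float(batch_sleep)))
    return schedule


schedule = build_schedule(25, seed=0)
rests = [seconds for kind, seconds in schedule if kind == "batch_rest"]
total = sum(seconds for _, seconds in schedule)
print(f"{len(rests)} batch rests, about {total / 60:.1f} minutes of waiting in total")
```

Estimating the total waiting time before a run helps when choosing how many threads to request per domain: with these settings the long rests dominate, so the batch size and the rest length have the largest effect on the duration of a run.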

