Chinese Named Entity Recognition

1. Data Loading and Preprocessing

data.py

```python
from os.path import join
from codecs import open


def build_corpus(split, make_vocab=True, data_dir="./ResumeNER"):
    """Read one split: one "char tag" pair per line, sentences separated by blank lines."""
    assert split in ['train', 'dev', 'test']

    word_lists = []
    tag_lists = []
    with open(join(data_dir, split + ".char.bmes"), 'r', encoding='utf-8') as f:
        word_list = []
        tag_list = []
        for line in f:
            # Non-blank line: split it into the character and its tag
            # (strip() also copes with '\r\n' line endings)
            if line.strip():
                word, tag = line.split()
                word_list.append(word)
                tag_list.append(tag)
            # Blank line: the current sentence is complete, store it as one list
            elif word_list:
                word_lists.append(word_list)
                tag_lists.append(tag_list)
                word_list = []
                tag_list = []
        # Keep the last sentence if the file does not end with a blank line
        if word_list:
            word_lists.append(word_list)
            tag_lists.append(tag_list)

    # If make_vocab is True, also return word2id and tag2id
    if make_vocab:
        word2id = build_map(word_lists)
        tag2id = build_map(tag_lists)
        return word_lists, tag_lists, word2id, tag2id
    else:
        return word_lists, tag_lists


def build_map(lists):
    """Map every distinct element to an integer id, in order of first appearance."""
    maps = {}
    for list_ in lists:
        for e in list_:
            if e not in maps:
                maps[e] = len(maps)
    return maps


print("Loading data...")
train_word_lists, train_tag_lists, word2id, tag2id = build_corpus("train")
print(train_word_lists[:10])
print(train_tag_lists[:10])
print(word2id)
print(tag2id)
# dev_word_lists, dev_tag_lists = build_corpus("dev", make_vocab=False)
# test_word_lists, test_tag_lists = build_corpus("test", make_vocab=False)
```

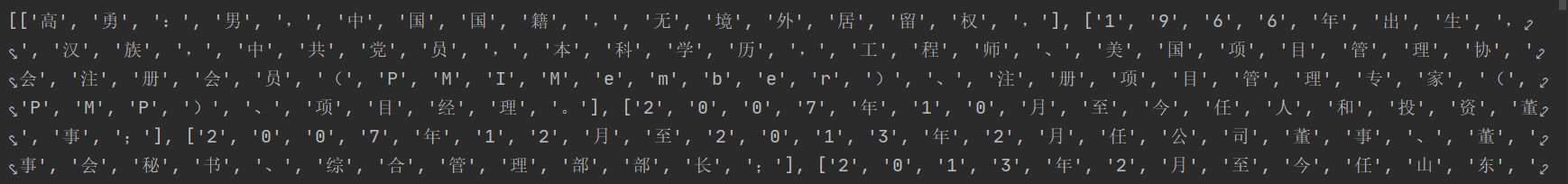

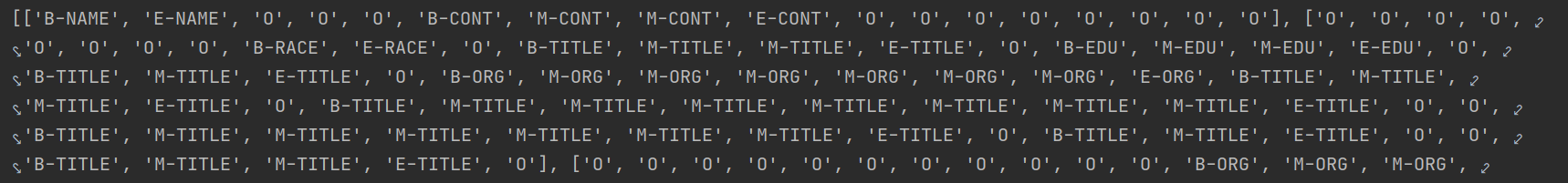

train_word_lists

train_tag_lists

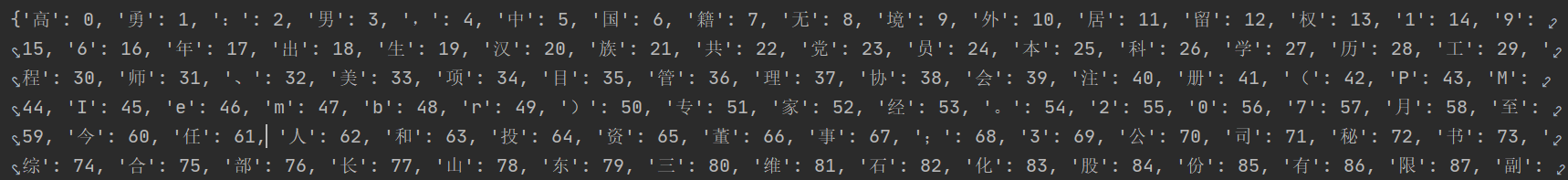

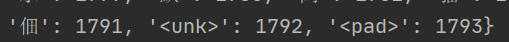

The word2id dictionary

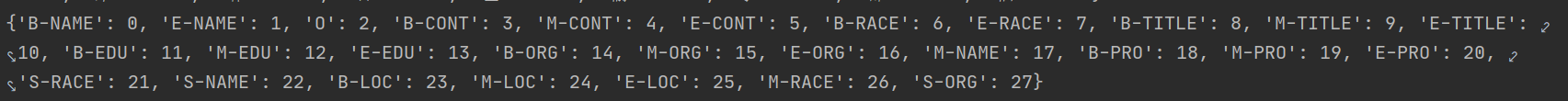

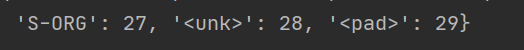

The tag2id dictionary
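To check the parsing rules without the ResumeNER files, here is a minimal sketch that applies the same logic to an in-memory sample. The helper `parse_bmes` and the two-sentence sample (including its tags) are made up for illustration; only the format, one `character tag` pair per line with blank lines between sentences, comes from `build_corpus` above.

```python
import io


def parse_bmes(lines):
    """Split "char tag" lines into per-sentence word and tag lists (hypothetical helper)."""
    word_lists, tag_lists = [], []
    word_list, tag_list = [], []
    for line in lines:
        if line.strip():
            # Non-blank line: one character and its tag
            word, tag = line.split()
            word_list.append(word)
            tag_list.append(tag)
        elif word_list:
            # Blank line: close the current sentence
            word_lists.append(word_list)
            tag_lists.append(tag_list)
            word_list, tag_list = [], []
    if word_list:
        # Last sentence when the input has no trailing blank line
        word_lists.append(word_list)
        tag_lists.append(tag_list)
    return word_lists, tag_lists


def build_map(lists):
    """Assign ids in order of first appearance, as build_corpus does."""
    maps = {}
    for list_ in lists:
        for e in list_:
            if e not in maps:
                maps[e] = len(maps)
    return maps


# Made-up two-sentence sample; note there is no trailing blank line
sample = "高 B-NAME\n勇 E-NAME\n: O\n\n男 O\n"
words, tags = parse_bmes(io.StringIO(sample))
word2id = build_map(words)
tag2id = build_map(tags)
print(words)    # [['高', '勇', ':'], ['男']]
print(tag2id)   # {'B-NAME': 0, 'E-NAME': 1, 'O': 2}
```

Because ids are handed out in first-seen order, the ids in word2id and tag2id depend on the order of the training file, which is why the dev and test splits reuse the training maps (make_vocab=False).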

2. CRF Model

The model is implemented with the CRF class from sklearn_crfsuite.

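First, features are extracted for each character of a sentence:

```python
# Features used:
# previous character, current character, next character,
# previous + current character, current + next character
features = {
    'w': word,
    'w-1': prev_word,
    'w+1': next_word,
    'w-1:w': prev_word + word,
    'w:w+1': word + next_word,
    'bias': 1
}
```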

Example output for the characters 高 and 勇 (`<s>` marks the start of the sentence): {'w': '高', 'w-1': '<s>', 'w+1': '勇', 'w-1:w': '<s>高', 'w:w+1': '高勇', 'bias': 1}, {'w': '勇', 'w-1': '高', 'w+1': ':', 'w-1:w': '高勇', 'w:w+1': '勇:', 'bias': 1}
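The model code below imports `sent2features` from `models.util`, which the post does not show. A minimal sketch consistent with the feature list and the sample output above might look like this; the end-of-sentence marker `</s>` is an assumption, since the sample only shows `<s>` at the start.

```python
def word2features(sent, i):
    """Features for the i-th character of sent (sketch; '</s>' end marker is assumed)."""
    word = sent[i]
    prev_word = "<s>" if i == 0 else sent[i - 1]
    next_word = "</s>" if i == len(sent) - 1 else sent[i + 1]
    return {
        'w': word,
        'w-1': prev_word,
        'w+1': next_word,
        'w-1:w': prev_word + word,
        'w:w+1': word + next_word,
        'bias': 1,
    }


def sent2features(sent):
    """One feature dict per character: the input format sklearn_crfsuite expects per sentence."""
    return [word2features(sent, i) for i in range(len(sent))]


feats = sent2features(list("高勇:"))
print(feats[0])
```

For the input 高勇: the first two dicts match the sample output above; the last character gets `'w+1': '</s>'`.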

These features are then fitted to the CRF model:

```python
from sklearn_crfsuite import CRF
from models.util import sent2features


class CRFModel(object):
    def __init__(self,
                 algorithm='lbfgs',
                 c1=0.1,
                 c2=0.1,
                 max_iterations=100,
                 all_possible_transitions=False
                 ):
        # c1 and c2 are the L1 and L2 regularization coefficients
        self.model = CRF(algorithm=algorithm,
                         c1=c1,
                         c2=c2,
                         max_iterations=max_iterations,
                         all_possible_transitions=all_possible_transitions)

    def train(self, sentences, tag_lists):
        # One list of per-character feature dicts per sentence
        features = [sent2features(s) for s in sentences]
        print(features[:10])
        self.model.fit(features, tag_lists)

    def test(self, sentences):
        features = [sent2features(s) for s in sentences]
        pred_tag_lists = self.model.predict(features)
        return pred_tag_lists
```

3. Training and Evaluating the Model

```python
# save_model and Metrics are helpers from the project that are not shown in this post
def crf_train_eval(train_data, test_data, remove_O=False):
    # Train the CRF model
    train_word_lists, train_tag_lists = train_data
    test_word_lists, test_tag_lists = test_data

    crf_model = CRFModel()
    crf_model.train(train_word_lists, train_tag_lists)
    # Save the model parameters
    save_model(crf_model, "./ckpts/crf.pkl")

    # Predict tags for the test data
    pred_tag_lists = crf_model.test(test_word_lists)

    # Metrics evaluates the model: precision, recall and F1 for each tag
    metrics = Metrics(test_tag_lists, pred_tag_lists, remove_O=remove_O)
    metrics.report_scores()
    metrics.report_confusion_matrix()

    return pred_tag_lists


# Load the test split (reusing the training vocabulary), then call the function after it is defined
test_word_lists, test_tag_lists = build_corpus("test", make_vocab=False)

print("Training and evaluating the CRF model...")
crf_pred = crf_train_eval(
    (train_word_lists, train_tag_lists),
    (test_word_lists, test_tag_lists)
)
```

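The prediction for the first test sentence, pred_tag_lists[0], and its gold labels, test_tag_lists[0], are shown below. They agree on every tag for this sentence, but how accurate is the model across all tags?

pred_tag_lists[0]: ['B-NAME', 'M-NAME', 'E-NAME', 'O', 'O', 'O']

test_tag_lists[0]: ['B-NAME', 'M-NAME', 'E-NAME', 'O', 'O', 'O']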

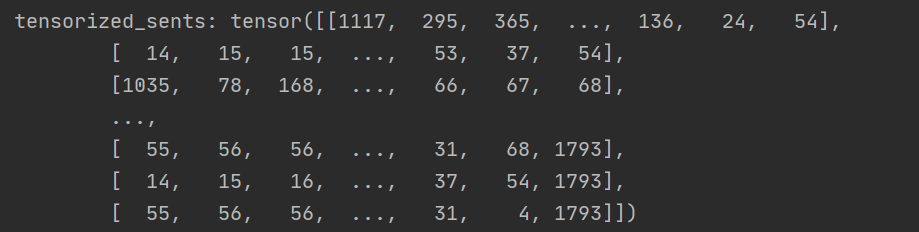
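The Metrics class is not shown in the post. As a rough picture of what a per-tag report computes, here is a minimal token-level sketch. `token_accuracy` and `per_tag_scores` are hypothetical helpers, not the project's Metrics API, and they score every character position independently rather than whole entities.

```python
from collections import Counter


def token_accuracy(gold_lists, pred_lists):
    """Fraction of character positions whose predicted tag equals the gold tag."""
    total = correct = 0
    for gold, pred in zip(gold_lists, pred_lists):
        for g, p in zip(gold, pred):
            total += 1
            correct += (g == p)
    return correct / total if total else 0.0


def per_tag_scores(gold_lists, pred_lists):
    """Token-level (precision, recall, F1) for every tag seen in gold or predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for gold, pred in zip(gold_lists, pred_lists):
        for g, p in zip(gold, pred):
            if g == p:
                tp[g] += 1
            else:
                fp[p] += 1  # tag p was predicted at a wrong position
                fn[g] += 1  # gold tag g was missed
    scores = {}
    for tag in set(tp) | set(fp) | set(fn):
        prec = tp[tag] / (tp[tag] + fp[tag]) if tp[tag] + fp[tag] else 0.0
        rec = tp[tag] / (tp[tag] + fn[tag]) if tp[tag] + fn[tag] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        scores[tag] = (prec, rec, f1)
    return scores


gold = [['B-NAME', 'M-NAME', 'E-NAME', 'O', 'O', 'O']]
pred = [['B-NAME', 'M-NAME', 'E-NAME', 'O', 'O', 'O']]
print(token_accuracy(gold, pred))  # 1.0
```

Because O dominates the tag distribution, a high token accuracy can hide weak entity tags; looking at per-tag F1 (or excluding O, which is presumably what the remove_O flag is for) gives a more honest view.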

When training an LSTM model, PAD and UNK entries need to be added to word2id and tag2id.
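Characters in the dev or test sets that never occur in training have no id in word2id, and sentences in a batch differ in length; UNK and PAD cover those two cases. A minimal sketch follows; the helper names and the `<unk>`/`<pad>` strings are assumptions, not taken from the post.

```python
def add_pad_unk(mapping, unk="<unk>", pad="<pad>"):
    """Return a copy of mapping with UNK and PAD appended at the end (hypothetical helper)."""
    extended = dict(mapping)
    for token in (unk, pad):
        if token not in extended:
            extended[token] = len(extended)
    return extended


def encode(chars, word2id, unk="<unk>"):
    """Map characters to ids, falling back to the UNK id for unseen characters."""
    unk_id = word2id[unk]
    return [word2id.get(ch, unk_id) for ch in chars]


def pad_batch(id_lists, pad_id):
    """Right-pad every sequence with pad_id up to the longest length in the batch."""
    max_len = max(len(ids) for ids in id_lists)
    return [ids + [pad_id] * (max_len - len(ids)) for ids in id_lists]


word2id = add_pad_unk({'高': 0, '勇': 1})
batch = [encode("高王勇", word2id), encode("勇", word2id)]
print(pad_batch(batch, word2id["<pad>"]))  # [[0, 2, 1], [1, 3, 3]]
```

The same add_pad_unk call can be applied to tag2id so that padded positions also have a tag id.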

Be happy and learn something: that is enough.

浙公网安备 33010602011771号
