16-【拓展】Python高阶技巧

Python高阶技巧全面详解

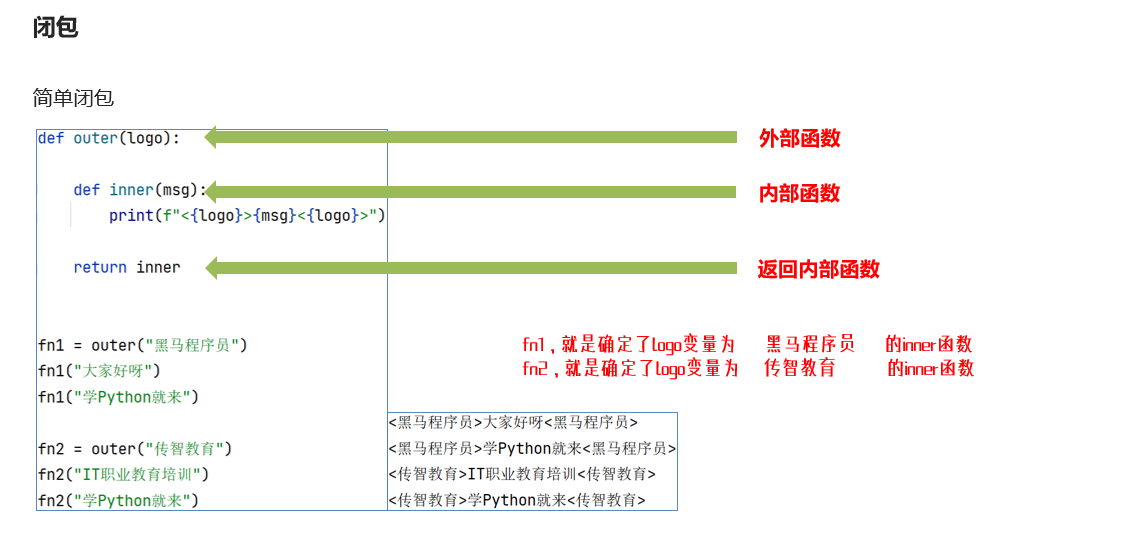

一、闭包(Closure)

1.1 闭包的概念与作用

1.1.1 为什么需要闭包

在编程中,我们经常需要维护某些状态信息。传统做法是使用全局变量,但全局变量存在命名冲突和意外修改的风险。

传统全局变量方式的缺点:

account_amount = 0 # 全局变量记录余额

def atm(num, deposit=True):

global account_amount

if deposit:

account_amount += num

print(f"存款:+{num}, 账户余额:{account_amount}")

else:

account_amount -= num

print(f"取款:-{num}, 账户余额:{account_amount}")

# 使用全局变量的问题:

# 1. 变量在全局命名空间,容易污染全局环境

# 2. 有被意外修改的风险

# 3. 代码组织不够优雅

1.1.2 闭包的解决方案

闭包提供了一种更优雅的方式来维护状态,将状态封装在函数内部。

让 fn1 是调用 outer("黑马程序员") 后返回的那个函数 inner,那么这个函数 inner 里面,它的 logo 其实就固定了。

我们以图中的代码为例:

def outer(logo): # 1. 外部函数

def inner(msg): # 2. 内部函数

print(f"<{logo}>{msg}</{logo}>")

return inner # 3. 返回内部函数(注意:是返回函数本身,不是调用它)

第一步:普通函数调用(无闭包)

如果没有闭包,logo 这样的变量在函数执行完就消失了。但这里的关键是,inner 函数体内使用了 logo 这个变量,而 logo 是 outer 函数的参数,属于 inner 的外部作用域。这就建立了“引用关系”。

第二步:制造闭包

当你执行 fn1 = outer("黑马程序员") 时,发生了以下神奇的事情:

- 调用

outer函数:传入参数logo = "黑马程序员"。 - 定义

inner函数:此时,Python 解释器发现inner函数内部使用了外部变量logo。它不会立刻去找logo的值,而是把logo这个变量和它与当前outer函数局部作用域的“引用关系”打包记录下来。 - 返回

inner函数本身:outer函数执行完毕,按理说它的局部变量(包括logo)应该被销毁。但是,因为返回的inner函数“记住”了(引用了)logo,Python 会为了inner能正常工作,而将logo这个变量(连同它的值"黑马程序员")保留下来,和inner函数绑定在一起。 fn1诞生:fn1现在不再是一个普通的函数,而是一个闭包函数。你可以把它看作一个“定制好的函数模板”:inner(msg)的模板,且其logo已经被固定填充为"黑马程序员"。

同理,fn2 = outer("传智教育") 会创建另一个独立的闭包,其中被固定的 logo 是 "传智教育"。

简单说,fn1 和 fn2 是 inner 函数的两个“变体”或“实例”,它们各自携带了不同的“记忆”(logo 的值)。

一个生活化的比喻

想象一家可以定制餐盒的餐厅:

outer函数 就像餐厅的生产线,它有一个固定餐盒模具(inner函数) 和一个食材填充口(logo变量)。- 你说:

fn1 = outer("黑马程序员"),就相当于你第一次按下生产线按钮,并往食材填充口倒入了“黑马程序员”这种食材。生产线运转一次,产出了一个已经封装好“黑马程序员”食材的定制餐盒,交给你。这个餐盒就是fn1。 - 后来你又用

outer("传智教育")生产了另一个装着不同食材的餐盒fn2。 - 当你需要吃饭(调用函数)时,你只需往

fn1这个餐盒里加入主菜(传入msg参数),比如“大家好呀”,它就会自动把你当初封装的“黑马程序员”和现在加入的“大家好呀”组合成最终菜品输出。

重点:fn1 和 fn2 是两个完全独立的餐盒,它们各自“记忆”了自己最初被生产时装入的特定食材。

总结一下:

闭包就是“带着记忆的函数”。 你图中的 fn1 和 fn2 各自带着 "黑马程序员" 和 "传智教育" 这段记忆,所以每次被调用时,都能用上这份记忆,并且这份记忆是可以用 nonlocal 然后来修改的,修改后的记忆是一直有效的,始终跟对应的 fn 绑定在一起。

搞不清楚时,就回想那个“定制餐盒”的比喻,或者多看看图中 fn1 和 fn2 的输出结果,它们最直观地展示了:同一个 inner 函数模板,因为诞生时“记住”了不同的 logo,从而产生了不同的行为。 这个“诞生时记住”的关系,就是闭包的精髓。

def account_create(initial_amount=0):

"""创建账户闭包"""

def atm(num, deposit=True):

nonlocal initial_amount # 声明使用外部变量

if deposit:

initial_amount += num

print(f"存款:+{num}, 账户余额:{initial_amount}")

else:

if num <= initial_amount:

initial_amount -= num

print(f"取款:-{num}, 账户余额:{initial_amount}")

else:

print("余额不足")

return atm # 返回内部函数

# 使用闭包

my_account = account_create(1000) # 创建初始余额1000的账户

my_account(500) # 存款500

my_account(200, False) # 取款200

1.2 闭包的定义与特性

1.2.1 闭包的三要素

- 函数嵌套:外层函数包含内层函数

- 内部函数使用外部变量:内层函数引用外层函数的变量

- 返回内部函数:外层函数返回内层函数

def outer_function(x):

"""外层函数"""

# 自由变量(在内部函数中使用但不是内部函数定义的变量)

free_variable = x

def inner_function(y):

"""内层函数(闭包)"""

nonlocal free_variable # 使用外部变量

result = free_variable + y

free_variable = result # 修改外部变量

return result

return inner_function # 返回闭包函数

# 创建闭包

closure_func = outer_function(10)

print(closure_func(5)) # 输出:15

print(closure_func(3)) # 输出:18(记住之前的状态)

1.2.2 nonlocal关键字的作用

nonlocal关键字用于声明变量来自外层作用域(非全局作用域),允许内部函数修改外部函数的变量,并且修改其实就是拿到这个餐盒,然后换掉所谓的初始食材,那是一直有效的,对于这个餐盒来说。

def counter():

"""计数器闭包"""

count = 0

def increment():

nonlocal count # 声明count来自外层作用域

count += 1

return count

def decrement():

nonlocal count

count -= 1

return count

def get_count():

return count

return increment, decrement, get_count

# 使用计数器

inc, dec, get = counter()

print(inc()) # 输出:1

print(inc()) # 输出:2

print(dec()) # 输出:1

1.3 闭包的实际应用

1.3.1 状态保持应用

def create_multiplier(factor):

"""创建乘法器闭包"""

def multiplier(x):

return x * factor

return multiplier

# 创建不同的乘法器

double = create_multiplier(2)

triple = create_multiplier(3)

print(double(5)) # 输出:10

print(triple(5)) # 输出:15

1.3.2 配置化函数生成

def create_greeter(greeting):

"""创建问候语生成器"""

def greet(name):

return f"{greeting}, {name}!"

return greet

# 创建不同的问候函数

say_hello = create_greeter("Hello")

say_hi = create_greeter("Hi")

say_hola = create_greeter("Hola")

print(say_hello("Alice")) # 输出:Hello, Alice!

print(say_hi("Bob")) # 输出:Hi, Bob!

print(say_hola("Carlos")) # 输出:Hola, Carlos!

1.4 闭包的优缺点

1.4.1 优点

- 避免全局变量污染:状态封装在闭包内部

- 数据隐藏:外部无法直接访问闭包内部状态

- 代码组织:相关功能组织在一起,提高可读性

1.4.2 缺点

- 内存占用:闭包会持续引用外部变量,导致内存无法释放

- 调试困难:闭包内部状态变化不易追踪

- 过度使用可能导致代码复杂:不恰当的闭包使用会让代码难以理解

1.4.3 内存管理示例

import sys

def memory_demo():

"""闭包内存占用演示"""

large_data = [i for i in range(10000)] # 大量数据

def inner():

return len(large_data) # 闭包引用大量数据

return inner

# 测试内存占用

closure = memory_demo()

print(f"闭包大小: {sys.getsizeof(closure)} 字节")

# 即使删除外部引用,闭包仍然持有数据

del memory_demo

# closure仍然可以正常工作,large_data不会被垃圾回收

二、装饰器(Decorator)

2.1 装饰器基础

2.1.1 装饰器的概念

装饰器是一种特殊类型的闭包,用于在不修改原函数代码的情况下,为函数添加新功能。

相当于 outer 参数为 func 的闭包。

原始函数:

def sleep():

import random

import time

print("睡眠中......")

time.sleep(random.randint(1, 5))

2.1.2 手动实现装饰器(闭包方式)

def add_sleep_log(func):

"""为函数添加睡眠日志的装饰器"""

def wrapper():

print("我要睡觉了") # 添加前置功能

func() # 执行原函数

print("我起床了") # 添加后置功能

return wrapper

# 使用装饰器

decorated_sleep = add_sleep_log(sleep)

decorated_sleep()

2.1.3 语法糖写法

加完 @ 了之后,其实就相当于让 sleep=add_sleep_log(sleep),跟上面那个没区别。

@add_sleep_log

def sleep():

import random

import time

print("睡眠中......")

time.sleep(random.randint(1, 5))

# 直接调用,自动添加装饰功能

sleep()

2.2 装饰器高级用法

2.2.1 带参数的装饰器

def repeat(num_times):

"""重复执行装饰器"""

def decorator(func):

def wrapper(*args, **kwargs):

for i in range(num_times):

print(f"第{i+1}次执行:")

result = func(*args, **kwargs)

return result

return wrapper

return decorator

@repeat(num_times=3)

def greet(name):

print(f"Hello, {name}!")

greet("Alice")

2.2.2 保留函数元信息

import functools

def logger(func):

"""日志装饰器(保留元信息)"""

@functools.wraps(func) # 保留原函数信息

def wrapper(*args, **kwargs):

print(f"调用函数: {func.__name__}")

print(f"参数: {args}, {kwargs}")

result = func(*args, **kwargs)

print(f"结果: {result}")

return result

return wrapper

@logger

def add(a, b):

"""加法函数"""

return a + b

print(add.__name__) # 输出:add(而不是wrapper)

print(add.__doc__) # 输出:加法函数

2.3 类装饰器

2.3.1 类实现装饰器

class Timer:

"""计时装饰器(类实现)"""

def __init__(self, func):

self.func = func

functools.update_wrapper(self, func)

def __call__(self, *args, **kwargs):

import time

start_time = time.time()

result = self.func(*args, **kwargs)

end_time = time.time()

print(f"函数 {self.func.__name__} 执行时间: {end_time - start_time:.4f}秒")

return result

@Timer

def slow_function():

import time

time.sleep(2)

return "完成"

slow_function()

2.3.2 带参数的类装饰器

class Retry:

"""重试装饰器"""

def __init__(self, max_retries=3):

self.max_retries = max_retries

def __call__(self, func):

@functools.wraps(func)

def wrapper(*args, **kwargs):

for attempt in range(self.max_retries):

try:

return func(*args, **kwargs)

except Exception as e:

print(f"尝试 {attempt + 1} 失败: {e}")

if attempt == self.max_retries - 1:

raise

return None

return wrapper

@Retry(max_retries=3)

def risky_operation():

import random

if random.random() < 0.7:

raise ValueError("随机失败")

return "成功"

risky_operation()

三、设计模式(Design Patterns)

3.1 单例模式(Singleton)

3.1.1 单例模式概念

确保一个类只有一个实例,并提供一个全局访问点。

问题场景:

class DatabaseConnection:

pass

# 传统方式创建多个实例

db1 = DatabaseConnection()

db2 = DatabaseConnection()

print(db1 is db2) # False,不是同一个实例

3.1.2 单例模式实现

class Singleton:

"""单例模式基类"""

_instance = None

def __new__(cls, *args, **kwargs):

if not cls._instance:

cls._instance = super().__new__(cls)

return cls._instance

class DatabaseConnection(Singleton):

def __init__(self):

# 防止多次初始化

if not hasattr(self, 'initialized'):

self.connection_string = "localhost:5432"

self.initialized = True

# 测试单例

db1 = DatabaseConnection()

db2 = DatabaseConnection()

print(db1 is db2) # True,是同一个实例

print(db1.connection_string) # localhost:5432

3.1.3 模块级单例

# database.py

class _DatabaseConnection:

def __init__(self):

self.connection_string = "localhost:5432"

def connect(self):

print(f"连接到: {self.connection_string}")

# 模块级别单例

database_instance = _DatabaseConnection()

# main.py

from database import database_instance

# 在整个应用中共享同一个实例

db1 = database_instance

db2 = database_instance

print(db1 is db2) # True

3.2 工厂模式(Factory)

3.2.1 简单工厂模式

from abc import ABC, abstractmethod

class Animal(ABC):

"""动物抽象类"""

@abstractmethod

def speak(self):

pass

class Dog(Animal):

def speak(self):

return "汪汪汪"

class Cat(Animal):

def speak(self):

return "喵喵喵"

class AnimalFactory:

"""动物工厂"""

@staticmethod

def create_animal(animal_type):

if animal_type == "dog":

return Dog()

elif animal_type == "cat":

return Cat()

else:

raise ValueError(f"不支持的动物类型: {animal_type}")

# 使用工厂

dog = AnimalFactory.create_animal("dog")

cat = AnimalFactory.create_animal("cat")

print(dog.speak()) # 汪汪汪

print(cat.speak()) # 喵喵喵

3.2.2 工厂方法模式

from abc import ABC, abstractmethod

class Vehicle(ABC):

"""交通工具抽象类"""

@abstractmethod

def deliver(self):

pass

class Truck(Vehicle):

def deliver(self):

return "卡车陆路运输"

class Ship(Vehicle):

def deliver(self):

return "轮船海运"

class Logistics(ABC):

"""物流抽象类(工厂)"""

@abstractmethod

def create_vehicle(self) -> Vehicle:

pass

def plan_delivery(self):

vehicle = self.create_vehicle()

return f"物流计划: {vehicle.deliver()}"

class RoadLogistics(Logistics):

def create_vehicle(self) -> Vehicle:

return Truck()

class SeaLogistics(Logistics):

def create_vehicle(self) -> Vehicle:

return Ship()

# 使用

road_logistics = RoadLogistics()

sea_logistics = SeaLogistics()

print(road_logistics.plan_delivery()) # 物流计划: 卡车陆路运输

print(sea_logistics.plan_delivery()) # 物流计划: 轮船海运

四、多线程编程

4.1 进程与线程基础

4.1.1 进程与线程概念

进程:程序的一次执行,拥有独立的内存空间

线程:进程内的执行单元,共享进程内存空间

4.1.2 多线程优势

import time

def task(name, duration):

"""模拟耗时任务"""

print(f"{name} 开始执行")

time.sleep(duration)

print(f"{name} 执行完成,耗时{duration}秒")

# 顺序执行(总耗时6秒)

start_time = time.time()

task("任务1", 2)

task("任务2", 3)

task("任务3", 1)

print(f"顺序执行总耗时: {time.time() - start_time:.2f}秒")

4.2 threading模块使用

4.2.1 基础多线程

import threading

import time

def sing():

"""唱歌任务"""

for i in range(3):

print("正在唱歌...")

time.sleep(1)

def dance():

"""跳舞任务"""

for i in range(3):

print("正在跳舞...")

time.sleep(1)

# 创建线程

sing_thread = threading.Thread(target=sing)

dance_thread = threading.Thread(target=dance)

# 启动线程

start_time = time.time()

sing_thread.start()

dance_thread.start()

# 等待线程结束

sing_thread.join()

dance_thread.join()

print(f"多线程执行总耗时: {time.time() - start_time:.2f}秒")

4.2.2 带参数的多线程

import threading

def worker(name, task_count):

"""工作线程函数"""

for i in range(task_count):

print(f"{name} 正在处理任务 {i+1}/{task_count}")

# 模拟工作

threading.Event().wait(0.5)

print(f"{name} 工作完成")

# 创建多个工作线程

threads = []

workers = [("Alice", 3), ("Bob", 2), ("Charlie", 4)]

for name, count in workers:

thread = threading.Thread(target=worker, args=(name, count))

threads.append(thread)

thread.start()

# 等待所有线程完成

for thread in threads:

thread.join()

print("所有工作完成!")

4.3 线程同步与通信

4.3.1 线程锁(Lock)

import threading

class BankAccount:

"""银行账户(线程安全)"""

def __init__(self, balance=0):

self.balance = balance

self.lock = threading.Lock()

def deposit(self, amount):

"""存款"""

with self.lock: # 自动获取和释放锁

old_balance = self.balance

# 模拟一些处理时间

threading.Event().wait(0.001)

self.balance = old_balance + amount

def withdraw(self, amount):

"""取款"""

with self.lock:

if self.balance >= amount:

old_balance = self.balance

threading.Event().wait(0.001)

self.balance = old_balance - amount

return True

return False

# 测试线程安全

account = BankAccount(1000)

def concurrent_operations():

for _ in range(100):

account.deposit(10)

account.withdraw(10)

# 创建多个线程同时操作账户

threads = []

for _ in range(10):

thread = threading.Thread(target=concurrent_operations)

threads.append(thread)

thread.start()

for thread in threads:

thread.join()

print(f"最终余额: {account.balance}") # 应该是1000(线程安全)

五、网络编程

5.1 Socket基础

5.1.1 Socket概念

Socket是进程间通信的端点,支持不同主机间的网络通信。

5.1.2 服务端开发

import socket

import threading

def handle_client(conn, address):

"""处理客户端连接"""

print(f"客户端 {address} 连接成功")

try:

while True:

# 接收数据

data = conn.recv(1024).decode('utf-8')

if not data or data.lower() == 'exit':

break

print(f"收到来自 {address} 的消息: {data}")

# 发送回应

response = f"服务器已收到: {data}"

conn.send(response.encode('utf-8'))

except Exception as e:

print(f"处理客户端 {address} 时出错: {e}")

finally:

conn.close()

print(f"客户端 {address} 断开连接")

def start_server(host='localhost', port=8888):

"""启动Socket服务器"""

# 创建Socket对象

server_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

# 绑定地址和端口

server_socket.bind((host, port))

# 开始监听

server_socket.listen(5)

print(f"服务器启动在 {host}:{port}")

try:

while True:

# 接受客户端连接

conn, address = server_socket.accept()

# 为每个客户端创建新线程

client_thread = threading.Thread(

target=handle_client,

args=(conn, address)

)

client_thread.daemon = True

client_thread.start()

except KeyboardInterrupt:

print("服务器关闭")

finally:

server_socket.close()

# 启动服务器

# start_server()

5.1.3 客户端开发

import socket

def start_client(host='localhost', port=8888):

"""启动Socket客户端"""

# 创建Socket对象

client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

try:

# 连接服务器

client_socket.connect((host, port))

print(f"已连接到服务器 {host}:{port}")

while True:

# 获取用户输入

message = input("请输入消息 (输入exit退出): ")

if message.lower() == 'exit':

break

# 发送消息到服务器

client_socket.send(message.encode('utf-8'))

# 接收服务器响应

response = client_socket.recv(1024).decode('utf-8')

print(f"服务器响应: {response}")

except Exception as e:

print(f"连接错误: {e}")

finally:

client_socket.close()

print("连接已关闭")

# 启动客户端

# start_client()

5.2 多客户端聊天室

5.2.1 简易聊天室服务端

import socket

import threading

class ChatServer:

"""聊天室服务器"""

def __init__(self, host='localhost', port=8888):

self.host = host

self.port = port

self.clients = []

self.lock = threading.Lock()

self.server_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

def broadcast(self, message, sender_conn=None):

"""广播消息给所有客户端(除了发送者)"""

with self.lock:

for client in self.clients:

if client != sender_conn:

try:

client.send(message.encode('utf-8'))

except:

# 发送失败,移除客户端

self.clients.remove(client)

def handle_client(self, conn, address):

"""处理单个客户端连接"""

print(f"新客户端加入: {address}")

with self.lock:

self.clients.append(conn)

try:

# 通知所有客户端有新用户加入

self.broadcast(f"用户 {address} 加入了聊天室", conn)

while True:

message = conn.recv(1024).decode('utf-8')

if not message:

break

print(f"{address}: {message}")

# 广播消息

self.broadcast(f"{address}: {message}", conn)

except Exception as e:

print(f"客户端 {address} 错误: {e}")

finally:

with self.lock:

if conn in self.clients:

self.clients.remove(conn)

conn.close()

self.broadcast(f"用户 {address} 离开了聊天室")

print(f"客户端 {address} 断开连接")

def start(self):

"""启动服务器"""

self.server_socket.bind((self.host, self.port))

self.server_socket.listen(5)

print(f"聊天室服务器启动在 {self.host}:{port}")

try:

while True:

conn, address = self.server_socket.accept()

# 为新客户端创建线程

thread = threading.Thread(

target=self.handle_client,

args=(conn, address)

)

thread.daemon = True

thread.start()

except KeyboardInterrupt:

print("服务器关闭")

finally:

self.server_socket.close()

# 启动聊天室服务器

# chat_server = ChatServer()

# chat_server.start()

六、正则表达式

6.1 正则表达式基础

6.1.1 三个基础方法

import re

# 测试字符串

text = "Python是一门强大的编程语言,Python简单易学"

# 1. match - 从开头匹配

result_match = re.match(r'Python', text)

print(f"match结果: {result_match.group() if result_match else 'None'}")

# 2. search - 搜索第一个匹配

result_search = re.search(r'编程', text)

print(f"search结果: {result_search.group() if result_search else 'None'}")

# 3. findall - 查找所有匹配

result_findall = re.findall(r'Python', text)

print(f"findall结果: {result_findall}")

6.1.2 原始字符串(r前缀)

# 普通字符串 vs 原始字符串

normal_str = "Hello\nWorld" # \n 被解释为换行符

raw_str = r"Hello\nWorld" # \n 被当作普通字符

print("普通字符串:", repr(normal_str))

print("原始字符串:", repr(raw_str))

# 在正则表达式中使用原始字符串很重要

pattern = r'\d+' # 匹配数字,使用原始字符串避免转义问题

6.2 元字符匹配

6.2.1 字符类匹配

import re

text = "itheima1@@python2!!666##itcast3"

# 单字符匹配

print("数字:", re.findall(r'\d', text)) # 匹配数字

print("非数字:", re.findall(r'\D', text)) # 匹配非数字

print("单词字符:", re.findall(r'\w', text)) # 匹配字母、数字、下划线

print("非单词字符:", re.findall(r'\W', text)) # 匹配特殊字符

print("空白字符:", re.findall(r'\s', "a b c")) # 匹配空白

print("任意字符:", re.findall(r'.', "abc")) # 匹配任意字符(除换行)

# 自定义字符集

print("元音字母:", re.findall(r'[aeiou]', text, re.IGNORECASE))

print("数字和字母:", re.findall(r'[a-zA-Z0-9]', text))

6.2.2 数量匹配

text = "a ab abbb abbbb abbbbb"

# 数量限定

print("a后跟0个或多个b:", re.findall(r'ab*', text))

print("a后跟1个或多个b:", re.findall(r'ab+', text))

print("a后跟0个或1个b:", re.findall(r'ab?', text))

print("a后跟2个b:", re.findall(r'ab{2}', text))

print("a后跟2到3个b:", re.findall(r'ab{2,3}', text))

print("a后跟2个以上b:", re.findall(r'ab{2,}', text))

6.3.1 数据验证(续)

import re

def validate_email(email):

"""验证邮箱格式"""

pattern = r'^[\w\.-]+@[\w\.-]+\.\w+$'

return bool(re.match(pattern, email))

def validate_phone(phone):

"""验证手机号格式"""

pattern = r'^1[3-9]\d{9}$'

return bool(re.match(pattern, phone))

def validate_password(password):

"""验证密码强度:8-20位,包含大小写字母和数字"""

pattern = r'^(?=.*[a-z])(?=.*[A-Z])(?=.*\d)[a-zA-Z\d]{8,20}$'

return bool(re.match(pattern, password))

# 测试验证函数

test_emails = ["test@example.com", "invalid.email", "user@domain.co.uk"]

test_phones = ["13812345678", "12345678901", "18887654321"]

test_passwords = ["Abc12345", "weakpass", "ABC123abc"]

print("邮箱验证:")

for email in test_emails:

print(f"{email}: {validate_email(email)}")

print("\n手机号验证:")

for phone in test_phones:

print(f"{phone}: {validate_phone(phone)}")

print("\n密码验证:")

for pwd in test_passwords:

print(f"{pwd}: {validate_password(pwd)}")

6.3.2 数据提取

import re

def extract_emails(text):

"""从文本中提取所有邮箱地址"""

pattern = r'[\w\.-]+@[\w\.-]+\.\w+'

return re.findall(pattern, text)

def extract_phone_numbers(text):

"""从文本中提取手机号码"""

pattern = r'1[3-9]\d{9}'

return re.findall(pattern, text)

def extract_urls(text):

"""从文本中提取URL链接"""

pattern = r'https?://[^\s]+'

return re.findall(pattern, text)

# 测试数据提取

sample_text = """

联系我们:service@company.com 或 sales@example.cn

电话:13812345678,官网:https://www.company.com

备用邮箱:admin@test.org,技术支持:13987654321

访问我们的博客:http://blog.company.com 获取更多信息

"""

print("提取的邮箱:", extract_emails(sample_text))

print("提取的手机号:", extract_phone_numbers(sample_text))

print("提取的URL:", extract_urls(sample_text))

6.3.3 数据清洗和格式化

import re

def clean_whitespace(text):

"""清理多余空白字符"""

# 将多个连续空白替换为单个空格

return re.sub(r'\s+', ' ', text.strip())

def format_phone_number(phone):

"""格式化手机号码为 138-1234-5678 格式"""

pattern = r'(\d{3})(\d{4})(\d{4})'

return re.sub(pattern, r'\1-\2-\3', phone)

def extract_numbers(text):

"""提取文本中的所有数字"""

return re.findall(r'\d+', text)

def remove_special_chars(text):

"""移除非字母数字字符(保留中文)"""

return re.sub(r'[^\w\u4e00-\u9fa5]', '', text)

# 测试数据清洗

dirty_text = " Hello World! 电话:13812345678 "

numbers_text = "价格:299元,数量:5件,总计:1495元"

special_text = "Hello@World!#测试文本$$"

print("清理空白:", clean_whitespace(dirty_text))

print("格式化电话:", format_phone_number("13812345678"))

print("提取数字:", extract_numbers(numbers_text))

print("移除特殊字符:", remove_special_chars(special_text))

6.4 高级正则技巧

6.4.1 分组和回溯引用

import re

def find_repeated_words(text):

"""查找重复的单词"""

pattern = r'\b(\w+)\s+\1\b'

return re.findall(pattern, text, re.IGNORECASE)

def reformat_date(date_str):

"""将日期从 YYYY-MM-DD 格式改为 DD/MM/YYYY"""

pattern = r'(\d{4})-(\d{2})-(\d{2})'

return re.sub(pattern, r'\3/\2/\1', date_str)

def extract_html_tags(html):

"""提取HTML标签和内容"""

pattern = r'<(\w+)>(.*?)</\1>'

return re.findall(pattern, html)

# 测试高级功能

text_with_repeats = "这是一个测试测试文本,包含重复重复的单词"

date_str = "2024-01-15"

html_content = "<title>网页标题</title><p>段落内容</p>"

print("重复单词:", find_repeated_words(text_with_repeats))

print("日期格式化:", reformat_date(date_str))

print("HTML标签:", extract_html_tags(html_content))

6.4.2 零宽断言

import re

def extract_price(text):

"""提取价格(数字前有¥或$符号)"""

# 正向肯定预查

pattern = r'(?<=[¥$])\d+(?:\.\d{2})?'

return re.findall(pattern, text)

def find_words_after_keyword(text, keyword):

"""查找关键词后的单词"""

pattern = rf'(?<={keyword}\s)(\w+)'

return re.findall(pattern, text, re.IGNORECASE)

def validate_complex_password(password):

"""复杂密码验证:必须包含大小写字母、数字、特殊字符"""

pattern = r'^(?=.*[a-z])(?=.*[A-Z])(?=.*\d)(?=.*[@$!%*?&])[A-Za-z\d@$!%*?&]{8,}$'

return bool(re.match(pattern, password))

# 测试零宽断言

price_text = "商品价格:¥299.99,折扣价:$199.50"

keyword_text = "请查看文档 documentation 部分和示例 example 代码"

print("提取价格:", extract_price(price_text))

print("关键词后单词:", find_words_after_keyword(keyword_text, "文档"))

print("复杂密码验证:", validate_complex_password("Abc123!@#"))

七、递归(Recursion)

7.1 递归基础概念

7.1.1 什么是递归

递归是一种通过函数调用自身来解决问题的方法。它将复杂问题分解为更小的同类问题。

递归的三要素:

- 基准情况:递归结束的条件

- 递归调用:函数调用自身

- 逐步推进:每次调用都向基准情况靠近

def factorial(n):

"""阶乘函数的递归实现"""

# 基准情况

if n == 0 or n == 1:

return 1

# 递归调用

return n * factorial(n - 1)

# 测试阶乘

print(f"5! = {factorial(5)}") # 输出:120

print(f"10! = {factorial(10)}") # 输出:3628800

7.1.2 递归 vs 迭代

def factorial_iterative(n):

"""阶乘的迭代实现"""

result = 1

for i in range(1, n + 1):

result *= i

return result

def factorial_recursive(n):

"""阶乘的递归实现"""

if n <= 1:

return 1

return n * factorial_recursive(n - 1)

# 性能比较

import time

n = 100

start = time.time()

result_iter = factorial_iterative(n)

time_iter = time.time() - start

start = time.time()

result_recur = factorial_recursive(n)

time_recur = time.time() - start

print(f"迭代结果: {result_iter}, 时间: {time_iter:.6f}秒")

print(f"递归结果: {result_recur}, 时间: {time_recur:.6f}秒")

print(f"结果相同: {result_iter == result_recur}")

7.2 文件系统遍历(递归经典应用)

7.2.1 递归遍历目录

import os

def find_files(directory, extension=None):

"""

递归查找目录下所有文件

Args:

directory: 要搜索的目录

extension: 文件扩展名过滤(如 '.py')

Returns:

文件路径列表

"""

file_list = []

# 检查目录是否存在

if not os.path.exists(directory):

print(f"目录不存在: {directory}")

return file_list

try:

for item in os.listdir(directory):

full_path = os.path.join(directory, item)

if os.path.isfile(full_path):

# 如果是文件,检查扩展名

if extension is None or item.endswith(extension):

file_list.append(full_path)

elif os.path.isdir(full_path):

# 如果是目录,递归调用

file_list.extend(find_files(full_path, extension))

except PermissionError:

print(f"权限不足,无法访问: {directory}")

return file_list

# 使用示例

python_files = find_files("/path/to/project", ".py")

print(f"找到 {len(python_files)} 个Python文件")

for file in python_files[:5]: # 显示前5个文件

print(f" {file}")

7.2.2 目录树形结构展示

import os

def print_directory_tree(directory, prefix="", is_last=True, level=0, max_level=3):

"""

递归打印目录树形结构

Args:

directory: 目录路径

prefix: 前缀字符串,用于缩进

is_last: 是否是最后一个项目

level: 当前层级

max_level: 最大显示层级

"""

if level > max_level:

return

# 当前项目的连接符

connector = "└── " if is_last else "├── "

print(prefix + connector + os.path.basename(directory))

# 更新前缀用于子项目

new_prefix = prefix + (" " if is_last else "│ ")

try:

items = os.listdir(directory)

# 过滤出目录和文件,并排序

dirs = sorted([d for d in items if os.path.isdir(os.path.join(directory, d))])

files = sorted([f for f in items if os.path.isfile(os.path.join(directory, f))])

all_items = dirs + files

for i, item in enumerate(all_items):

item_path = os.path.join(directory, item)

is_last_item = (i == len(all_items) - 1)

if os.path.isdir(item_path):

# 递归处理子目录

print_directory_tree(item_path, new_prefix, is_last_item, level + 1, max_level)

else:

# 打印文件

file_connector = "└── " if is_last_item else "├── "

print(new_prefix + file_connector + item)

except PermissionError:

print(new_prefix + "└── [权限不足]")

# 使用示例

print_directory_tree("/path/to/your/project", max_level=2)

7.3 递归算法实例

7.3.1 斐波那契数列

def fibonacci(n):

"""递归计算斐波那契数列"""

if n <= 0:

return 0

elif n == 1:

return 1

else:

return fibonacci(n-1) + fibonacci(n-2)

def fibonacci_memo(n, memo={}):

"""使用备忘录优化的斐波那契数列"""

if n in memo:

return memo[n]

if n <= 0:

result = 0

elif n == 1:

result = 1

else:

result = fibonacci_memo(n-1, memo) + fibonacci_memo(n-2, memo)

memo[n] = result

return result

# 测试斐波那契数列

print("斐波那契数列前10项:")

for i in range(10):

print(f"F({i}) = {fibonacci(i)}")

# 性能对比

import time

n = 35

print(f"\n计算 F({n}) 的性能对比:")

start = time.time()

result_naive = fibonacci(n)

time_naive = time.time() - start

start = time.time()

result_memo = fibonacci_memo(n)

time_memo = time.time() - start

print(f"朴素递归: {result_naive}, 时间: {time_naive:.4f}秒")

print(f"备忘录优化: {result_memo}, 时间: {time_memo:.6f}秒")

7.3.2 汉诺塔问题

def hanoi_tower(n, source, target, auxiliary):

"""

解决汉诺塔问题

Args:

n: 盘子数量

source: 源柱子

target: 目标柱子

auxiliary: 辅助柱子

"""

if n == 1:

print(f"将盘子 1 从 {source} 移动到 {target}")

return

# 将 n-1 个盘子从源柱子移动到辅助柱子

hanoi_tower(n-1, source, auxiliary, target)

# 将最大的盘子从源柱子移动到目标柱子

print(f"将盘子 {n} 从 {source} 移动到 {target}")

# 将 n-1 个盘子从辅助柱子移动到目标柱子

hanoi_tower(n-1, auxiliary, target, source)

# 测试汉诺塔

print("3层汉诺塔解决方案:")

hanoi_tower(3, 'A', 'C', 'B')

7.4 递归的注意事项和优化

7.4.1 递归深度限制

import sys

def check_recursion_limit():

"""检查并演示递归深度限制"""

print(f"当前递归深度限制: {sys.getrecursionlimit()}")

def cause_recursion_error(n=0):

"""引发递归深度错误"""

print(f"递归深度: {n}")

cause_recursion_error(n + 1)

try:

cause_recursion_error()

except RecursionError as e:

print(f"递归错误: {e}")

# 修改递归深度限制(谨慎使用)

# sys.setrecursionlimit(5000)

check_recursion_limit()

7.4.2 尾递归优化(模拟)

def factorial_tail_recursive(n, accumulator=1):

"""尾递归形式的阶乘函数"""

if n == 0:

return accumulator

return factorial_tail_recursive(n - 1, n * accumulator)

def factorial_iterative_from_recursive(n):

"""将尾递归转换为迭代"""

accumulator = 1

while n > 0:

accumulator *= n

n -= 1

return accumulator

# 测试

n = 5

print(f"{n}! = {factorial_tail_recursive(n)}")

print(f"{n}! = {factorial_iterative_from_recursive(n)}")

八、综合实战案例

8.1 配置文件解析器

8.1.1 支持多种格式的配置解析

import re

import json

import os

from typing import Dict, Any

class ConfigParser:

"""多功能配置文件解析器"""

def __init__(self):

self.supported_formats = ['.ini', '.json', '.conf']

def parse_ini(self, file_path: str) -> Dict[str, Any]:

"""解析INI格式配置文件"""

config = {}

if not os.path.exists(file_path):

raise FileNotFoundError(f"配置文件不存在: {file_path}")

with open(file_path, 'r', encoding='utf-8') as f:

current_section = 'DEFAULT'

config[current_section] = {}

for line_num, line in enumerate(f, 1):

line = line.strip()

# 跳过空行和注释

if not line or line.startswith(';') or line.startswith('#'):

continue

# 解析节(section)

section_match = re.match(r'^\[(.*)\]$', line)

if section_match:

current_section = section_match.group(1)

config[current_section] = {}

continue

# 解析键值对

key_value_match = re.match(r'^(\w+)\s*=\s*(.*)$', line)

if key_value_match:

key = key_value_match.group(1)

value = key_value_match.group(2).strip()

# 处理值的类型转换

if value.isdigit():

value = int(value)

elif value.replace('.', '').isdigit():

value = float(value)

elif value.lower() in ('true', 'false'):

value = value.lower() == 'true'

config[current_section][key] = value

else:

print(f"警告: 第{line_num}行格式错误: {line}")

return config

def parse_json(self, file_path: str) -> Dict[str, Any]:

"""解析JSON格式配置文件"""

with open(file_path, 'r', encoding='utf-8') as f:

return json.load(f)

def auto_parse(self, file_path: str) -> Dict[str, Any]:

"""自动检测格式并解析配置文件"""

_, ext = os.path.splitext(file_path)

if ext == '.ini':

return self.parse_ini(file_path)

elif ext == '.json':

return self.parse_json(file_path)

else:

raise ValueError(f"不支持的配置文件格式: {ext}")

# 使用示例

parser = ConfigParser()

# 创建示例配置文件

ini_config = """

[database]

host = localhost

port = 3306

username = admin

password = secret

enable_ssl = true

[server]

host = 0.0.0.0

port = 8080

debug_mode = false

"""

with open('config.ini', 'w', encoding='utf-8') as f:

f.write(ini_config)

# 解析配置

config = parser.parse_ini('config.ini')

print("解析的配置:")

for section, settings in config.items():

print(f"[{section}]")

for key, value in settings.items():

print(f" {key} = {value} ({type(value).__name__})")

8.2 网络爬虫数据提取

8.2.1 网页内容解析器

import re

import requests

from typing import List, Dict

from urllib.parse import urljoin

class WebContentExtractor:

"""网页内容提取器"""

def __init__(self):

self.session = requests.Session()

self.session.headers.update({

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'

})

def extract_emails(self, html: str) -> List[str]:

"""从HTML中提取邮箱地址"""

# 更精确的邮箱正则

pattern = r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Z|a-z]{2,}\b'

return re.findall(pattern, html, re.IGNORECASE)

def extract_links(self, html: str, base_url: str) -> List[str]:

"""提取页面中的所有链接"""

pattern = r'href="([^"]*)"'

raw_links = re.findall(pattern, html, re.IGNORECASE)

# 处理相对链接

full_links = []

for link in raw_links:

if link.startswith(('http://', 'https://')):

full_links.append(link)

else:

full_links.append(urljoin(base_url, link))

return full_links

def extract_phone_numbers(self, html: str) -> List[str]:

"""提取电话号码"""

# 匹配多种电话号码格式

patterns = [

r'\b1[3-9]\d{9}\b', # 手机号

r'\b0\d{2,3}-\d{7,8}\b', # 带区号的固定电话

r'\b\d{7,8}\b' # 无区号固定电话

]

phones = []

for pattern in patterns:

phones.extend(re.findall(pattern, html))

return phones

def extract_content(self, url: str) -> Dict[str, List[str]]:

"""从URL提取各种信息"""

try:

response = self.session.get(url, timeout=10)

response.raise_for_status()

html = response.text

return {

'emails': self.extract_emails(html),

'phones': self.extract_phone_numbers(html),

'links': self.extract_links(html, url),

'title': self.extract_title(html)

}

except requests.RequestException as e:

print(f"请求失败: {e}")

return {}

def extract_title(self, html: str) -> List[str]:

"""提取页面标题"""

pattern = r'<title>(.*?)</title>'

titles = re.findall(pattern, html, re.IGNORECASE | re.DOTALL)

return [title.strip() for title in titles]

# 使用示例

extractor = WebContentExtractor()

# 注意:实际使用时请遵守robots.txt和网站使用条款

sample_html = """

<html>

<head><title>联系我们 - Example公司</title></head>

<body>

<p>邮箱:contact@example.com, support@example.cn</p>

<p>电话:13812345678, 010-12345678</p>

<a href="/about">关于我们</a>

<a href="https://external.com">外部链接</a>

</body>

</html>

"""

print("提取的邮箱:", extractor.extract_emails(sample_html))

print("提取的电话:", extractor.extract_phone_numbers(sample_html))

print("提取的链接:", extractor.extract_links(sample_html, "https://example.com"))

print("提取的标题:", extractor.extract_title(sample_html))

九、性能优化与最佳实践

9.1 正则表达式优化

9.1.1 编译正则表达式

import re

import time

class OptimizedRegex:

"""优化正则表达式性能"""

def __init__(self):

# 预编译常用正则表达式

self.email_pattern = re.compile(r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Z|a-z]{2,}\b', re.IGNORECASE)

self.phone_pattern = re.compile(r'\b1[3-9]\d{9}\b')

self.url_pattern = re.compile(r'https?://[^\s]+')

def find_emails_fast(self, text: str) -> List[str]:

"""使用预编译正则快速查找邮箱"""

return self.email_pattern.findall(text)

def find_phones_fast(self, text: str) -> List[str]:

"""使用预编译正则快速查找手机号"""

return self.phone_pattern.findall(text)

# 性能对比

def performance_comparison():

"""性能对比测试"""

text = "联系邮箱: test@example.com, 电话: 13812345678 " * 1000

optimizer = OptimizedRegex()

# 测试预编译正则

start = time.time()

for _ in range(100):

emails = optimizer.find_emails_fast(text)

compiled_time = time.time() - start

# 测试未编译正则

start = time.time()

for _ in range(100):

emails = re.findall(r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Z|a-z]{2,}\b', text, re.IGNORECASE)

uncompiled_time = time.time() - start

print(f"预编译正则时间: {compiled_time:.4f}秒")

print(f"未编译正则时间: {uncompiled_time:.4f}秒")

print(f"性能提升: {uncompiled_time/compiled_time:.2f}倍")

performance_comparison()

9.2 递归优化策略

9.2.1 使用LRU缓存优化递归

import functools

from typing import Dict

def recursive_with_cache():

"""使用缓存优化递归性能"""

@functools.lru_cache(maxsize=None)

def fibonacci_cached(n):

if n <= 1:

return n

return fibonacci_cached(n-1) + fibonacci_cached(n-2)

def fibonacci_naive(n):

if n <= 1:

return n

return fibonacci_naive(n-1) + fibonacci_naive(n-2)

# 测试性能

n = 35

import time

start = time.time()

result_cached = fibonacci_cached(n)

time_cached = time.time() - start

start = time.time()

result_naive = fibonacci_naive(n)

time_naive = time.time() - start

print(f"斐波那契 F({n}):")

print(f"缓存优化: {result_cached}, 时间: {time_cached:.6f}秒")

print(f"朴素递归: {result_naive}, 时间: {time_naive:.4f}秒")

print(f"性能提升: {time_naive/time_cached:.0f}倍")

recursive_with_cache()

10.1 正则表达式调试(续)

import re

def debug_regex(pattern, text, flags=0):

"""调试正则表达式匹配过程"""

print(f"正则模式: {pattern}")

print(f"测试文本: {text}")

print("-" * 50)

try:

# 测试完整匹配

match = re.match(pattern, text, flags)

if match:

print("完整匹配成功!")

print(f"匹配内容: '{match.group()}'")

print(f"匹配位置: {match.span()}")

# 显示分组

if match.groups():

print("分组内容:")

for i, group in enumerate(match.groups(), 1):

print(f" 分组{i}: '{group}'")

else:

print("完整匹配失败")

# 测试搜索匹配

search = re.search(pattern, text, flags)

if search:

print("\n搜索匹配成功!")

print(f"匹配内容: '{search.group()}'")

print(f"匹配位置: {search.span()}")

else:

print("\n搜索匹配失败")

# 测试查找所有匹配

finds = re.findall(pattern, text, flags)

if finds:

print(f"\n找到 {len(finds)} 个匹配:")

for i, found in enumerate(finds, 1):

print(f" 匹配{i}: {found}")

else:

print("\n未找到任何匹配")

except re.error as e:

print(f"正则表达式错误: {e}")

except Exception as e:

print(f"发生错误: {e}")

# 测试正则调试

print("=== 正则表达式调试示例 ===")

debug_regex(r'(\d{3})-(\d{4})', "电话: 123-4567 和 890-1234")

10.2 递归深度监控

import sys

import functools

def monitor_recursion(max_depth=1000):

"""递归深度监控装饰器"""

def decorator(func):

@functools.wraps(func)

def wrapper(*args, **kwargs):

# 获取当前递归深度

depth = len([f for f in sys._current_frames().values()

if func.__name__ in str(f)])

if depth > max_depth:

raise RecursionError(f"递归深度超过限制: {depth} > {max_depth}")

if depth > max_depth * 0.8:

print(f"警告: 递归深度接近限制: {depth}/{max_depth}")

return func(*args, **kwargs)

return wrapper

return decorator

# 使用递归监控

@monitor_recursion(max_depth=100)

def recursive_function(n):

"""受监控的递归函数"""

if n <= 0:

return 0

return n + recursive_function(n - 1)

# 测试

try:

result = recursive_function(50)

print(f"递归结果: {result}")

except RecursionError as e:

print(f"递归错误: {e}")

10.3 装饰器错误处理

import functools

import time

from typing import Any, Callable

def handle_errors(max_retries=3, delay=1):

"""错误处理装饰器"""

def decorator(func: Callable) -> Callable:

@functools.wraps(func)

def wrapper(*args, **kwargs) -> Any:

for attempt in range(max_retries + 1):

try:

return func(*args, **kwargs)

except Exception as e:

if attempt == max_retries:

print(f"函数 {func.__name__} 最终失败: {e}")

raise

else:

print(f"尝试 {attempt + 1} 失败: {e}, {delay}秒后重试...")

time.sleep(delay)

return None

return wrapper

return decorator

def log_execution(func: Callable) -> Callable:

"""执行日志装饰器"""

@functools.wraps(func)

def wrapper(*args, **kwargs) -> Any:

start_time = time.time()

print(f"开始执行: {func.__name__}")

try:

result = func(*args, **kwargs)

end_time = time.time()

print(f"完成执行: {func.__name__}, 耗时: {end_time - start_time:.4f}秒")

return result

except Exception as e:

end_time = time.time()

print(f"执行失败: {func.__name__}, 耗时: {end_time - start_time:.4f}秒, 错误: {e}")

raise

return wrapper

# 组合使用装饰器

@log_execution

@handle_errors(max_retries=2, delay=0.5)

def risky_operation(should_fail=False):

"""有风险的操作"""

if should_fail:

raise ValueError("模拟失败")

return "操作成功"

# 测试错误处理

print("=== 测试成功情况 ===")

result1 = risky_operation(False)

print(f"结果: {result1}")

print("\n=== 测试失败情况 ===")

try:

result2 = risky_operation(True)

except Exception as e:

print(f"捕获到异常: {e}")

10.4 网络编程错误处理

import socket

import threading

from typing import Optional

class RobustSocketServer:

"""健壮的Socket服务器"""

def __init__(self, host='localhost', port=8888):

self.host = host

self.port = port

self.clients = []

self.running = False

self.server_socket: Optional[socket.socket] = None

def handle_client(self, conn: socket.socket, addr: tuple):

"""处理客户端连接(带错误处理)"""

client_info = f"{addr[0]}:{addr[1]}"

print(f"客户端 {client_info} 连接成功")

try:

with conn: # 使用上下文管理器确保连接关闭

while self.running:

try:

# 设置超时以避免阻塞

conn.settimeout(1.0)

data = conn.recv(1024)

if not # 客户端断开连接

break

message = data.decode('utf-8').strip()

print(f"收到来自 {client_info} 的消息: {message}")

# 处理特殊命令

if message.lower() == 'exit':

conn.send("再见!".encode('utf-8'))

break

elif message.lower() == 'shutdown':

self.stop()

break

# 回声响应

response = f"服务器收到: {message}"

conn.send(response.encode('utf-8'))

except socket.timeout:

continue # 超时是正常的,继续循环

except UnicodeDecodeError:

conn.send("错误: 消息编码无效".encode('utf-8'))

except Exception as e:

print(f"处理客户端 {client_info} 数据时错误: {e}")

break

except Exception as e:

print(f"客户端 {client_info} 处理错误: {e}")

finally:

if conn in self.clients:

self.clients.remove(conn)

print(f"客户端 {client_info} 断开连接")

def start(self):

"""启动服务器"""

try:

self.server_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

self.server_socket.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)

self.server_socket.bind((self.host, self.port))

self.server_socket.listen(5)

self.server_socket.settimeout(1.0) # 设置接受超时

self.running = True

print(f"服务器启动在 {self.host}:{self.port}")

while self.running:

try:

conn, addr = self.server_socket.accept()

# 创建新线程处理客户端

client_thread = threading.Thread(

target=self.handle_client,

args=(conn, addr),

daemon=True

)

client_thread.start()

self.clients.append(conn)

except socket.timeout:

continue # 超时是正常的,继续接受新连接

except OSError as e:

if self.running: # 只有在运行时的错误才报告

print(f"接受连接错误: {e}")

break

except Exception as e:

print(f"服务器错误: {e}")

finally:

self.stop()

def stop(self):

"""停止服务器"""

print("正在停止服务器...")

self.running = False

# 关闭所有客户端连接

for conn in self.clients:

try:

conn.close()

except:

pass

self.clients.clear()

# 关闭服务器socket

if self.server_socket:

try:

self.server_socket.close()

except:

pass

self.server_socket = None

print("服务器已停止")

# 测试健壮服务器

def test_robust_server():

"""测试健壮服务器"""

server = RobustSocketServer(port=9999)

# 在后台启动服务器

server_thread = threading.Thread(target=server.start, daemon=True)

server_thread.start()

# 给服务器时间启动

import time

time.sleep(1)

# 测试客户端连接

try:

client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

client_socket.settimeout(5.0)

client_socket.connect(('localhost', 9999))

# 测试正常通信

client_socket.send("Hello Server".encode('utf-8'))

response = client_socket.recv(1024).decode('utf-8')

print(f"服务器响应: {response}")

# 测试退出

client_socket.send("exit".encode('utf-8'))

response = client_socket.recv(1024).decode('utf-8')

print(f"退出响应: {response}")

client_socket.close()

except Exception as e:

print(f"客户端测试错误: {e}")

# 停止服务器

time.sleep(1)

server.stop()

# 运行测试

test_robust_server()

十一、性能优化与监控

11.1 内存使用监控

import tracemalloc

import time

from functools import wraps

def monitor_memory_usage(func):

"""内存使用监控装饰器"""

@wraps(func)

def wrapper(*args, **kwargs):

# 开始内存跟踪

tracemalloc.start()

# 记录开始状态

snapshot1 = tracemalloc.take_snapshot()

# 执行函数

start_time = time.time()

result = func(*args, **kwargs)

end_time = time.time()

# 记录结束状态

snapshot2 = tracemalloc.take_snapshot()

# 分析内存差异

top_stats = snapshot2.compare_to(snapshot1, 'lineno')

print(f"\n函数 {func.__name__} 内存使用分析:")

print(f"执行时间: {end_time - start_time:.4f}秒")

print("内存变化 Top 5:")

for stat in top_stats[:5]:

print(f" {stat}")

# 停止内存跟踪

tracemalloc.stop()

return result

return wrapper

# 测试内存监控

@monitor_memory_usage

def memory_intensive_operation():

"""内存密集型操作"""

# 创建大量数据

big_list = [i for i in range(100000)]

big_dict = {i: str(i) for i in range(100000)}

# 模拟一些处理

result = [x * 2 for x in big_list if x in big_dict]

return len(result)

# 运行测试

memory_intensive_operation()

11.2 多线程性能优化

import threading

import queue

import time

from concurrent.futures import ThreadPoolExecutor

class OptimizedThreadPool:

"""优化的线程池"""

def __init__(self, max_workers=None):

self.max_workers = max_workers or threading.cpu_count()

self.executor = ThreadPoolExecutor(max_workers=self.max_workers)

self.task_queue = queue.Queue()

self.results = []

self.lock = threading.Lock()

def worker(self, task_id, data):

"""工作线程函数"""

print(f"线程 {threading.current_thread().name} 处理任务 {task_id}")

# 模拟工作负载

time.sleep(0.1)

result = f"任务{task_id}结果: {data * 2}"

with self.lock:

self.results.append(result)

return result

def process_batch(self, data_list, chunk_size=10):

"""批量处理数据"""

print(f"开始处理 {len(data_list)} 个任务,使用 {self.max_workers} 个线程")

start_time = time.time()

futures = []

# 分批提交任务

for i, data in enumerate(data_list):

future = self.executor.submit(self.worker, i, data)

futures.append(future)

# 等待所有任务完成

results = []

for future in futures:

try:

result = future.result(timeout=10) # 10秒超时

results.append(result)

except Exception as e:

print(f"任务执行错误: {e}")

end_time = time.time()

print(f"处理完成: {len(results)} 个结果")

print(f"总耗时: {end_time - start_time:.4f}秒")

print(f"平均每个任务: {(end_time - start_time) / len(data_list):.4f}秒")

return results

def shutdown(self):

"""关闭线程池"""

self.executor.shutdown(wait=True)

# 测试优化线程池

def test_optimized_pool():

"""测试优化线程池"""

data_list = [i for i in range(100)] # 100个任务

# 测试不同线程数

for workers in [1, 2, 4, 8]:

print(f"\n=== 使用 {workers} 个线程 ===")

pool = OptimizedThreadPool(max_workers=workers)

results = pool.process_batch(data_list)

pool.shutdown()

# 显示前5个结果

print("前5个结果:", results[:5])

# 运行测试

test_optimized_pool()

11.3 正则表达式性能优化

import re

import time

from functools import lru_cache

class OptimizedRegexPatterns:

"""优化的正则表达式模式"""

def __init__(self):

# 预编译常用模式

self.patterns = {

'email': re.compile(r'^[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}$'),

'phone': re.compile(r'^1[3-9]\d{9}$'),

'url': re.compile(r'^https?://[^\s/$.?#].[^\s]*$'),

'ipv4': re.compile(r'^(\d{1,3}\.){3}\d{1,3}$'),

'date': re.compile(r'^\d{4}-\d{2}-\d{2}$')

}

@lru_cache(maxsize=1000)

def match_pattern(self, pattern_name, text):

"""使用缓存匹配模式"""

if pattern_name not in self.patterns:

raise ValueError(f"未知模式: {pattern_name}")

return bool(self.patterns[pattern_name].match(text))

def validate_data_batch(self, data_list, pattern_name):

"""批量验证数据"""

valid_count = 0

start_time = time.time()

for data in data_list:

if self.match_pattern(pattern_name, data):

valid_count += 1

end_time = time.time()

print(f"模式 '{pattern_name}' 验证结果:")

print(f" 有效: {valid_count}/{len(data_list)}")

print(f" 耗时: {end_time - start_time:.6f}秒")

print(f" 平均: {(end_time - start_time)/len(data_list):.6f}秒/个")

return valid_count

# 测试正则性能优化

def test_regex_performance():

"""测试正则表达式性能"""

# 测试数据

emails = [

"test@example.com", "invalid.email", "user@domain.co.uk",

"another@test.org", "bad-email", "valid@email.com"

] * 1000 # 放大数据量

phones = [

"13812345678", "12345678901", "13987654321",

"invalid", "18800001111", "19912344321"

] * 1000

optimizer = OptimizedRegexPatterns()

print("=== 正则表达式性能测试 ===")

optimizer.validate_data_batch(emails, 'email')

optimizer.validate_data_batch(phones, 'phone')

# 运行测试

test_regex_performance()

Python高阶技巧完整指南

十二、综合实战项目(完整版)

12.1 智能日志分析系统(完整实现)

import re

import os

import threading

import time

from datetime import datetime, timedelta

from collections import defaultdict, Counter

from typing import Dict, List, Tuple, Any

from concurrent.futures import ThreadPoolExecutor

import json

class SmartLogAnalyzer:

"""智能日志分析系统 - 完整版"""

def __init__(self, log_directory="logs"):

self.log_directory = log_directory

self.patterns = {

'error': re.compile(r'ERROR|CRITICAL|FATAL', re.IGNORECASE),

'warning': re.compile(r'WARNING', re.IGNORECASE),

'http_status': re.compile(r'HTTP/\d\.\d"\s+(\d{3})'),

'ip_address': re.compile(r'\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b'),

'timestamp': re.compile(r'\[(.*?)\]'),

'url': re.compile(r'"(GET|POST|PUT|DELETE)\s+([^"]+)\s+HTTP'),

'user_agent': re.compile(r'"([^"]*[Mm]ozilla[^"]*)"'),

}

self.analysis_results = defaultdict(list)

self.lock = threading.Lock()

self.performance_stats = {

'files_processed': 0,

'total_lines': 0,

'start_time': None,

'end_time': None

}

def analyze_log_file(self, file_path: str) -> Dict[str, Any]:

"""分析单个日志文件"""

file_results = {

'errors': [],

'warnings': [],

'status_codes': Counter(),

'ip_addresses': Counter(),

'urls': Counter(),

'user_agents': Counter(),

'file_size': 0,

'line_count': 0,

'file_name': os.path.basename(file_path)

}

try:

file_size = os.path.getsize(file_path)

file_results['file_size'] = file_size

with open(file_path, 'r', encoding='utf-8', errors='ignore') as f:

for line_num, line in enumerate(f, 1):

file_results['line_count'] = line_num

# 分析错误

if self.patterns['error'].search(line):

timestamp_match = self.patterns['timestamp'].search(line)

timestamp = timestamp_match.group(1) if timestamp_match else '未知时间'

file_results['errors'].append({

'line': line_num,

'timestamp': timestamp,

'message': line.strip()[:200],

'file': os.path.basename(file_path)

})

# 分析警告

if self.patterns['warning'].search(line):

file_results['warnings'].append({

'line': line_num,

'message': line.strip()[:200]

})

# 分析HTTP状态码

status_match = self.patterns['http_status'].search(line)

if status_match:

status_code = status_match.group(1)

file_results['status_codes'][status_code] += 1

# 分析IP地址

ip_matches = self.patterns['ip_address'].findall(line)

for ip in ip_matches:

file_results['ip_addresses'][ip] += 1

# 分析URL

url_match = self.patterns['url'].search(line)

if url_match:

method, url = url_match.groups()

file_results['urls'][f"{method} {url}"] += 1

# 分析User-Agent

ua_match = self.patterns['user_agent'].search(line)

if ua_match:

user_agent = ua_match.group(1)

file_results['user_agents'][user_agent] += 1

except Exception as e:

print(f"分析文件 {file_path} 时错误: {e}")

file_results['error'] = str(e)

return file_results

def analyze_directory(self, use_threading=True, max_workers=None) -> Dict[str, Any]:

"""分析整个日志目录"""

if not os.path.exists(self.log_directory):

print(f"日志目录不存在: {self.log_directory}")

return {}

# 查找所有日志文件

log_files = []

for root, dirs, files in os.walk(self.log_directory):

for filename in files:

if any(filename.endswith(ext) for ext in ['.log', '.txt', '.csv']):

file_path = os.path.join(root, filename)

if os.path.isfile(file_path):

log_files.append(file_path)

print(f"找到 {len(log_files)} 个日志文件")

if not log_files:

return {}

self.performance_stats['start_time'] = time.time()

self.performance_stats['files_processed'] = len(log_files)

if use_threading and len(log_files) > 1:

results = self._analyze_with_threads(log_files, max_workers)

else:

results = self._analyze_sequentially(log_files)

self.performance_stats['end_time'] = time.time()

return self._summarize_results(results)

def _analyze_sequentially(self, log_files: List[str]) -> Dict[str, Any]:

"""顺序分析文件"""

overall_results = defaultdict(list)

for file_path in log_files:

print(f"分析文件: {os.path.basename(file_path)}")

file_results = self.analyze_log_file(file_path)

self._merge_results(overall_results, file_results)

return overall_results

def _analyze_with_threads(self, log_files: List[str], max_workers=None) -> Dict[str, Any]:

"""使用多线程分析文件"""

overall_results = defaultdict(list)

if max_workers is None:

max_workers = min(4, len(log_files))

with ThreadPoolExecutor(max_workers=max_workers) as executor:

# 提交所有任务

future_to_file = {

executor.submit(self.analyze_log_file, file_path): file_path

for file_path in log_files

}

# 收集结果

for future in future_to_file:

file_path = future_to_file[future]

try:

file_results = future.result(timeout=30)

print(f"✓ 完成分析: {os.path.basename(file_path)}")

self._merge_results(overall_results, file_results)

except Exception as e:

print(f"✗ 分析文件 {file_path} 时错误: {e}")

return overall_results

def _merge_results(self, overall: Dict, file_results: Dict):

"""合并单个文件结果到总体结果"""

with self.lock:

for key, value in file_results.items():

if key == 'errors' and isinstance(value, list):

overall['errors'].extend(value)

elif key == 'warnings' and isinstance(value, list):

overall['warnings'].extend(value)

elif isinstance(value, Counter):

if key not in overall or not isinstance(overall[key], Counter):

overall[key] = Counter()

overall[key].update(value)

elif key in ['file_size', 'line_count']:

if key not in overall:

overall[key] = 0

overall[key] += value

elif key not in overall:

overall[key] = value

def _summarize_results(self, results: Dict[str, Any]) -> Dict[str, Any]:

"""汇总分析结果"""

summary = {

'summary': {

'total_files': self.performance_stats['files_processed'],

'total_errors': len(results.get('errors', [])),

'total_warnings': len(results.get('warnings', [])),

'total_lines': results.get('line_count', 0),

'total_size_bytes': results.get('file_size', 0),

'analysis_time_seconds': self.performance_stats['end_time'] - self.performance_stats['start_time'],

'analysis_date': datetime.now().isoformat()

},

'errors': {

'by_type': defaultdict(int),

'recent_errors': sorted(results.get('errors', []),

key=lambda x: x.get('timestamp', ''),

reverse=True)[:10],

'total_count': len(results.get('errors', []))

},

'traffic': {

'status_codes': dict(results.get('status_codes', Counter()).most_common()),

'top_ips': dict(results.get('ip_addresses', Counter()).most_common(10)),

'top_urls': dict(results.get('urls', Counter()).most_common(10)),

'top_user_agents': dict(results.get('user_agents', Counter()).most_common(5))

},

'performance': {

'lines_per_second': results.get('line_count', 0) /

(self.performance_stats['end_time'] - self.performance_stats['start_time'] or 1),

'files_per_second': self.performance_stats['files_processed'] /

(self.performance_stats['end_time'] - self.performance_stats['start_time'] or 1)

}

}

# 错误分类

for error in results.get('errors', []):

error_msg = error.get('message', '').lower()

if 'timeout' in error_msg:

summary['errors']['by_type']['timeout'] += 1

elif 'connection' in error_msg:

summary['errors']['by_type']['connection'] += 1

elif 'memory' in error_msg:

summary['errors']['by_type']['memory'] += 1

elif 'database' in error_msg:

summary['errors']['by_type']['database'] += 1

elif 'file' in error_msg:

summary['errors']['by_type']['file'] += 1

else:

summary['errors']['by_type']['other'] += 1

return summary

def generate_report(self, results: Dict[str, Any], output_file=None):

"""生成详细分析报告"""

report = []

# 报告头部

report.append("=" * 70)

report.append(" 智能日志分析报告")

report.append("=" * 70)

# 摘要信息

summary = results['summary']

report.append(f"分析时间: {summary['analysis_date']}")

report.append(f"分析文件数: {summary['total_files']}")

report.append(f"总行数: {summary['total_lines']:,}")

report.append(f"文件总大小: {summary['total_size_bytes'] / (1024*1024):.2f} MB")

report.append(f"分析耗时: {summary['analysis_time_seconds']:.2f}秒")

report.append(f"处理速度: {results['performance']['lines_per_second']:.0f} 行/秒")

# 错误分析

report.append("\n" + "=" * 70)

report.append("错误分析")

report.append("=" * 70)

report.append(f"总错误数: {summary['total_errors']}")

report.append(f"总警告数: {summary['total_warnings']}")

if results['errors']['by_type']:

report.append("\n错误分类:")

for error_type, count in results['errors']['by_type'].items():

report.append(f" {error_type}: {count}")

if results['errors']['recent_errors']:

report.append("\n最近10个错误:")

for error in results['errors']['recent_errors'][:5]:

report.append(f" [{error.get('timestamp', '未知时间')}] {error.get('message', '')}")

# 流量分析

report.append("\n" + "=" * 70)

report.append("流量分析")

report.append("=" * 70)

if results['traffic']['status_codes']:

report.append("HTTP状态码分布:")

for code, count in results['traffic']['status_codes'].items():

report.append(f" {code}: {count}")

if results['traffic']['top_ips']:

report.append("\n访问最频繁的IP地址 (Top 10):")

for ip, count in results['traffic']['top_ips'].items():

report.append(f" {ip}: {count}次")

if results['traffic']['top_urls']:

report.append("\n最常访问的URL (Top 10):")

for url, count in results['traffic']['top_urls'].items():

report.append(f" {url}: {count}次")

if results['traffic']['top_user_agents']:

report.append("\n最常见的User-Agent (Top 5):")

for ua, count in results['traffic']['top_user_agents'].items():

report.append(f" {ua[:50]}: {count}次")

# 输出报告

report_text = "\n".join(report)

print(report_text)

# 保存到文件

if output_file:

with open(output_file, 'w', encoding='utf-8') as f:

f.write(report_text)

print(f"\n报告已保存到: {output_file}")

return report_text

def export_json(self, results: Dict[str, Any], output_file: str):

"""导出结果为JSON格式"""

with open(output_file, 'w', encoding='utf-8') as f:

json.dump(results, f, ensure_ascii=False, indent=2)

print(f"JSON结果已导出到: {output_file}")

# 演示和使用示例

def demo_log_analyzer():

"""演示日志分析器"""

# 创建测试日志目录和文件

os.makedirs("demo_logs", exist_ok=True)

# 创建多个测试日志文件

test_logs = [

"""[2024-01-15 10:30:00] INFO 用户登录成功

[2024-01-15 10:31:00] ERROR 数据库连接超时

[2024-01-15 10:32:00] WARNING 内存使用率过高

[2024-01-15 10:33:00] INFO 192.168.1.1 - - [15/Jan/2024:10:33:00] "GET /api/users HTTP/1.1" 200 1234 "Mozilla/5.0"

[2024-01-15 10:34:00] ERROR 文件不存在

[2024-01-15 10:35:00] INFO 192.168.1.2 - - [15/Jan/2024:10:35:00] "POST /api/login HTTP/1.1" 401 567 "Chrome/91.0"

""",

"""[2024-01-15 11:30:00] INFO 系统启动完成

[2024-01-15 11:31:00] ERROR 数据库连接失败

[2024-01-15 11:32:00] INFO 192.168.1.3 - - [15/Jan/2024:11:32:00] "GET /api/products HTTP/1.1" 200 8901 "Firefox/89.0"

[2024-01-15 11:33:00] WARNING 磁盘空间不足

[2024-01-15 11:34:00] INFO 192.168.1.1 - - [15/Jan/2024:11:34:00] "GET /api/orders HTTP/1.1" 404 234 "Mozilla/5.0"

"""

]

for i, log_content in enumerate(test_logs, 1):

with open(f"demo_logs/app_{i}.log", 'w', encoding='utf-8') as f:

f.write(log_content)

print("创建测试日志文件完成")

# 使用日志分析器

analyzer = SmartLogAnalyzer("demo_logs")

print("开始分析日志...")

results = analyzer.analyze_directory(use_threading=True, max_workers=2)

print("生成分析报告...")

analyzer.generate_report(results, "log_analysis_report.txt")

print("导出JSON结果...")

analyzer.export_json(results, "log_analysis_results.json")

# 清理测试文件

import shutil

shutil.rmtree("demo_logs")

print("清理测试文件完成")

# 运行演示

if __name__ == "__main__":

demo_log_analyzer()

十三、高级设计模式实战

13.1 观察者模式(Observer Pattern)

from abc import ABC, abstractmethod

from typing import List, Dict, Any

import threading

class Event:

"""事件类"""

def __init__(self, event_type: str, data: Any = None):

self.event_type = event_type

self.data = data

self.timestamp = time.time()

class Observer(ABC):

"""观察者接口"""

@abstractmethod

def update(self, event: Event):

"""接收事件更新"""

pass

class Subject:

"""主题(被观察者)"""

def __init__(self):

self._observers: List[Observer] = []

self._lock = threading.Lock()

def attach(self, observer: Observer):

"""添加观察者"""

with self._lock:

if observer not in self._observers:

self._observers.append(observer)

def detach(self, observer: Observer):

"""移除观察者"""

with self._lock:

if observer in self._observers:

self._observers.remove(observer)

def notify(self, event: Event):

"""通知所有观察者"""

with self._lock:

for observer in self._observers:

try:

observer.update(event)

except Exception as e:

print(f"观察者通知失败: {e}")

# 具体实现

class LogMonitor(Subject):

"""日志监控器"""

def __init__(self):

super().__init__()

self._error_count = 0

self._warning_count = 0

def process_log_line(self, line: str):

"""处理日志行并触发事件"""

if 'ERROR' in line:

self._error_count += 1

event = Event('ERROR', {

'message': line.strip(),

'error_count': self._error_count,

'timestamp': time.time()

})

self.notify(event)

elif 'WARNING' in line:

self._warning_count += 1

event = Event('WARNING', {

'message': line.strip(),

'warning_count': self._warning_count

})

self.notify(event)

elif 'CRITICAL' in line:

event = Event('CRITICAL', {'message': line.strip()})

self.notify(event)

class EmailNotifier(Observer):

"""邮件通知器"""

def update(self, event: Event):

if event.event_type == 'CRITICAL':

self._send_critical_alert(event.data)

elif event.event_type == 'ERROR':

if event.data['error_count'] % 10 == 0: # 每10个错误发送一次

self._send_error_summary(event.data)

def _send_critical_alert(self, data: Dict):

print(f"🚨 发送严重警报邮件: {data['message']}")

def _send_error_summary(self, Dict):

print(f"📧 发送错误摘要邮件: 累计{data['error_count']}个错误")

class DatabaseLogger(Observer):

"""数据库记录器"""

def update(self, event: Event):

print(f"💾 记录到数据库: [{event.event_type}] {event.data['message']}")

class DashboardUpdater(Observer):

"""仪表板更新器"""

def __init__(self):

self._stats = {'errors': 0, 'warnings': 0, 'criticals': 0}

def update(self, event: Event):

if event.event_type in self._stats:

self._stats[event.event_type] += 1

print(f"📊 更新仪表板: 错误{self._stats['errors']}, "

f"警告{self._stats['warnings']}, 严重{self._stats['criticals']}")

# 使用观察者模式

def demo_observer_pattern():

"""演示观察者模式"""

# 创建监控器

monitor = LogMonitor()

# 创建观察者

email_notifier = EmailNotifier()

db_logger = DatabaseLogger()

dashboard = DashboardUpdater()

# 注册观察者

monitor.attach(email_notifier)

monitor.attach(db_logger)

monitor.attach(dashboard)

# 模拟日志处理

test_logs = [

"INFO: 系统启动完成",

"ERROR: 数据库连接失败",

"WARNING: 内存使用率80%",

"ERROR: 文件读取失败",

"CRITICAL: 数据库崩溃",

"ERROR: 网络超时",

]

print("开始处理日志...")

for i, log_line in enumerate(test_logs, 1):

print(f"\n处理第{i}条日志: {log_line}")

monitor.process_log_line(log_line)

time.sleep(0.5)

# 移除一个观察者

monitor.detach(db_logger)

print("\n移除数据库记录器后:")

monitor.process_log_line("ERROR: 测试移除后的通知")

# 运行演示

demo_observer_pattern()

十四、异步编程实战

14.1 异步日志处理器

import asyncio

import aiofiles

import re

from datetime import datetime

from typing import List, Dict, Any

class AsyncLogProcessor:

"""异步日志处理器"""

def __init__(self):

self.patterns = {

'error': re.compile(r'ERROR', re.IGNORECASE),

'warning': re.compile(r'WARNING', re.IGNORECASE),

'info': re.compile(r'INFO', re.IGNORECASE),

}

self.stats = {

'processed_files': 0,

'total_lines': 0,

'errors_found': 0,

'warnings_found': 0

}

async def process_file(self, file_path: str) -> Dict[str, Any]:

"""异步处理单个日志文件"""

results = {

'file': file_path,

'errors': [],

'warnings': [],

'line_count': 0

}

try:

async with aiofiles.open(file_path, 'r', encoding='utf-8') as f:

async for line_num, line in enumerate(f, 1):

results['line_count'] = line_num

if self.patterns['error'].search(line):

results['errors'].append({

'line': line_num,

'message': line.strip()[:100],

'timestamp': datetime.now().isoformat()

})

elif self.patterns['warning'].search(line):

results['warnings'].append({

'line': line_num,

'message': line.strip()[:100]

})

# 每1000行稍微休息一下,避免阻塞

if line_num % 1000 == 0:

await asyncio.sleep(0.001)

self.stats['processed_files'] += 1

self.stats['total_lines'] += results['line_count']

self.stats['errors_found'] += len(results['errors'])

self.stats['warnings_found'] += len(results['warnings'])

except Exception as e:

results['error'] = str(e)

return results

async def process_directory(self, directory: str) -> List[Dict[str, Any]]:

"""异步处理整个目录"""

import os

# 查找所有日志文件

log_files = []

for root, dirs, files in os.walk(directory):

for file in files:

if file.endswith(('.log', '.txt')):

log_files.append(os.path.join(root, file))

print(f"找到 {len(log_files)} 个日志文件,开始异步处理...")

# 创建所有任务

tasks = [self.process_file(file_path) for file_path in log_files]

# 并发执行所有任务

results = await asyncio.gather(*tasks, return_exceptions=True)

# 过滤掉异常结果

valid_results = [r for r in results if not isinstance(r, Exception)]

return valid_results

async def real_time_monitor(self, log_file: str, check_interval: float = 1.0):

"""实时监控日志文件"""

print(f"开始实时监控: {log_file}")

# 记录已读取的位置

current_position = 0

while True:

try:

async with aiofiles.open(log_file, 'r', encoding='utf-8') as f:

# 移动到上次读取的位置

await f.seek(current_position)

# 读取新内容

new_lines = await f.readlines()

current_position = await f.tell()

# 处理新行

for line in new_lines:

line = line.strip()

if line:

await self._process_real_time_line(line)

await asyncio.sleep(check_interval)

except FileNotFoundError:

print(f"日志文件不存在: {log_file}")

await asyncio.sleep(5)

except Exception as e:

print(f"监控错误: {e}")

await asyncio.sleep(5)

async def _process_real_time_line(self, line: str):

"""处理实时日志行"""

if self.patterns['error'].search(line):

print(f"🚨 实时错误: {line[:100]}")

# 这里可以添加通知逻辑

await self._send_alert(line)

elif self.patterns['warning'].search(line):

print(f

浙公网安备 33010602011771号

浙公网安备 33010602011771号