Linux Docker Conda

I. Docker

1. Log in to a Docker registry

docker login -u user -p 111111 dockerxx.com

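2. Move images between hosts

1. Save the image runoob/ubuntu:v3 to the archive my_ubuntu_v3.tar

docker save -o my_ubuntu_v3.tar runoob/ubuntu:v3

2. Load the image from the archive into the local Docker

docker load --input my_ubuntu_v3.tar

3. Rename an image (docker tag adds a new name; the old name stays until it is removed with docker rmi)

docker tag runoob/ubuntu:v3 runoob2/ubuntu:v3

4. Copy a file from a container to the local host

docker cp contain:/app/part-00000 ./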

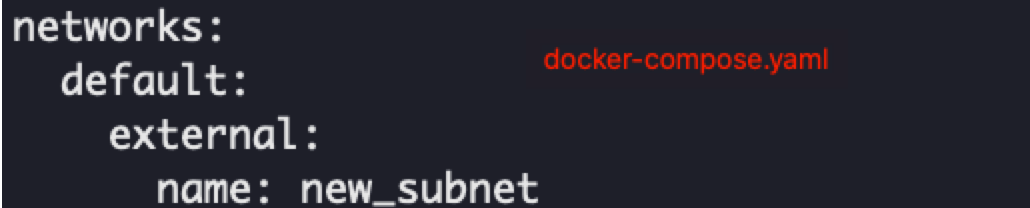
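For the save/load steps above, a small helper can derive the archive name from the image reference so every image gets a predictable file name. This is a minimal sketch: `image_tar_name` and `save_image` are hypothetical helpers, and the naming scheme (`/` and `:` replaced by `_`) is an assumption, not a Docker convention.

```shell
#!/bin/bash
# Derive an archive name from an image reference,
# e.g. runoob/ubuntu:v3 -> runoob_ubuntu_v3.tar (illustrative naming scheme)
image_tar_name() {
  local ref="$1"
  ref="${ref//\//_}"   # replace every "/" with "_"
  ref="${ref//:/_}"    # replace every ":" with "_"
  printf '%s.tar\n' "$ref"
}

# Save an image under its derived archive name and print that name
save_image() {
  local image="$1" out
  out="$(image_tar_name "$image")"
  docker save -o "$out" "$image" && printf '%s\n' "$out"
}
```

On the target host, `docker load --input runoob_ubuntu_v3.tar` restores the image under its original name and tag, because `docker save` records the tag in the archive when the image is saved by name.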

3. Create a Docker network with a custom subnet

The gateway must be an address inside --subnet.

docker network create --driver=bridge --subnet=192.168.2.0/24 --gateway=192.168.2.1 new_subnet
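Docker rejects a network whose gateway lies outside `--subnet`, so it can help to check the pair before calling `docker network create`. A minimal pure-bash sketch, assuming plain dotted IPv4 addresses without leading zeros; `ip_to_int` and `ip_in_subnet` are hypothetical helper names.

```shell
#!/bin/bash
# Convert a dotted IPv4 address (decimal octets, no leading zeros) to a 32-bit integer
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed (exit status 0) if address $2 lies inside CIDR $1, e.g. 192.168.2.0/24
ip_in_subnet() {
  local net="${1%/*}" bits="${1#*/}"
  # Network mask with the top $bits bits set, kept to 32 bits
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$2") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

For example, `ip_in_subnet 192.168.2.0/24 192.168.2.1` succeeds, while `ip_in_subnet 192.168.2.0/24 192.168.0.11` fails.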

II. Conda environments

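1. Managing Conda environments

conda info --envs              # list environments
conda create -n myenv          # create an environment
source activate myenv          # activate myenv (conda >= 4.4 after `conda init`: conda activate myenv)
conda deactivate               # leave the current environment
conda env remove --name myenv  # remove the environment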
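A setup script that runs repeatedly may want to create the environment only when it is missing. A minimal sketch that inspects the output of `conda env list`; `conda_env_exists` is a hypothetical helper, and it assumes the usual listing format (header lines start with `#`, the environment name is the first column).

```shell
#!/bin/bash
# Read `conda env list` output on stdin; succeed if environment $1 is listed.
# Header lines start with "#"; on other lines the first column is the env name.
conda_env_exists() {
  awk -v name="$1" '!/^#/ && $1 == name { found = 1 } END { exit !found }'
}
```

Usage: `conda env list | conda_env_exists myenv || conda create -n myenv -y`.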

III. Linux commands

1. Set the time zone (and Vim file encodings) inside an image

rm -f /etc/localtime &&\
ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\
echo "set fileencodings=utf-8,ucs-bom,gb18030,gbk,gb2312,cp936" >> /etc/vimrc &&\
echo "set termencoding=utf-8" >> /etc/vimrc &&\
echo "set encoding=utf-8" >> /etc/vimrc
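Running the commands above twice (for example when an image is rebuilt on top of an already configured one) appends the Vim settings a second time. A minimal sketch of an idempotent variant; `append_once` and `configure_vim_encoding` are hypothetical helper names, and the default path `/etc/vimrc` matches RHEL/CentOS images (Debian/Ubuntu keep the system file at `/etc/vim/vimrc`).

```shell
#!/bin/bash
# Append a line to a file only if that exact line is not already present
append_once() {
  local line="$1" file="$2"
  # -q quiet, -x match the whole line, -F treat the text literally
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Apply the Vim encoding settings idempotently (path defaults to /etc/vimrc)
configure_vim_encoding() {
  local vimrc="${1:-/etc/vimrc}"
  append_once 'set fileencodings=utf-8,ucs-bom,gb18030,gbk,gb2312,cp936' "$vimrc"
  append_once 'set termencoding=utf-8' "$vimrc"
  append_once 'set encoding=utf-8' "$vimrc"
}
```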

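2. Submit a Spark jar

#!/bin/bash
# Usage: ./run.sh [dt]   (the optional dt argument is passed through to the job)
dt="$1"

# Options kept in an array so each one stays a separate argument.
# Note: spark.driver.cores only takes effect in cluster mode.
SPARK_SUBMIT_ARGS=(
  --class scala.aa.SoDa
  --conf spark.master=yarn
  --conf spark.submit.deployMode=client
  --conf spark.driver.cores=4
  --conf spark.driver.memory=4g
  --conf spark.executor.memory=10g
  --conf spark.executor.cores=20
  --conf spark.executor.instances=10
  --conf spark.driver.maxResultSize=2g
  --conf spark.sql.shuffle.partitions=300
  --conf spark.shuffle.service.enabled=true
  aa-1.0-SNAPSHOT.jar
)

# stderr goes to a.err, stdout to a.log; dt is appended only when given
spark-submit "${SPARK_SUBMIT_ARGS[@]}" ${dt:+"$dt"} 2>a.err 1>a.log
rc=$?

echo "EndTime: $(date '+%Y-%m-%d %H:%M:%S')"
# Propagate spark-submit's exit status so callers can detect failures
exit "$rc"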
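The executor settings in this script reserve a large share of a YARN cluster. A back-of-the-envelope sketch of the total, assuming Spark's default executor memory overhead of max(384 MiB, 10% of `spark.executor.memory`) with no explicit `spark.executor.memoryOverhead`; `executor_footprint` is a hypothetical helper. YARN may round each container up further to its allocation increment, and the driver (which runs on the submitting machine in client mode) and the small ApplicationMaster container are not counted.

```shell
#!/bin/bash
# Estimate total executor vcores and container memory requested from YARN.
# Args: executor instances, cores per executor, executor memory in GiB
executor_footprint() {
  local instances="$1" cores="$2" mem_gib="$3"
  local mem_mib=$(( mem_gib * 1024 ))
  local overhead=$(( mem_mib / 10 ))   # assumed default factor 0.10
  if (( overhead < 384 )); then
    overhead=384                       # assumed default minimum of 384 MiB
  fi
  echo "cores=$(( instances * cores )) memory_mib=$(( instances * (mem_mib + overhead) ))"
}
```

With the values from the script, `executor_footprint 10 20 10` prints `cores=200 memory_mib=112640`, i.e. 200 vcores and 110 GiB of executor container memory.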

3. for loops


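#!/bin/bash
# Directories p_0001 .. p_0009: pad with three zeros
for ((i = 1; i <= 9; i++)); do
  echo "p_000${i}"
  hdfs dfs -ls "hdfs://na/p_000${i}" | tail -2
done

# Directories p_0010 .. p_0099: pad with two zeros
for ((i = 10; i <= 99; i++)); do
  echo "p_00${i}"
  hdfs dfs -ls "hdfs://na/p_00${i}" | tail -2
done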
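The two loops exist only to get the zero padding right. `printf '%04d'` pads the number itself, so a single loop covers p_0001 to p_0099. A minimal sketch; `partition_names` and `list_partitions` are hypothetical helper names, and `hdfs://na` is the path used above.

```shell
#!/bin/bash
# Print partition directory names p_0001 .. p_0099 (number zero-padded to 4 digits)
partition_names() {
  local i
  for ((i = 1; i <= 99; i++)); do
    printf 'p_%04d\n' "$i"
  done
}

# Show the last two entries of each partition directory (needs an HDFS client on PATH)
list_partitions() {
  local p
  for p in $(partition_names); do
    echo "$p"
    hdfs dfs -ls "hdfs://na/$p" | tail -2
  done
}
```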

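4. Check CPU cores and memory

# Total cores = physical CPUs x cores per physical CPU
# Total logical CPUs = physical CPUs x cores per physical CPU x threads per core (hyper-threading)

# Number of physical CPUs (sockets)
cat /proc/cpuinfo | grep "physical id" | sort | uniq | wc -l
# Cores per physical CPU
cat /proc/cpuinfo | grep "cpu cores" | uniq
# Number of logical CPUs
cat /proc/cpuinfo | grep "processor" | wc -l
# Memory
free -h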
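The three counts combine into the formulas in the comments. A minimal sketch that gathers them in one call and derives threads per core as logical / (sockets x cores); `cpu_summary` is a hypothetical helper, and it assumes an x86-style `/proc/cpuinfo` with `physical id` and `cpu cores` lines (some VMs and ARM kernels omit them, which would make the division fail).

```shell
#!/bin/bash
# Summarise a /proc/cpuinfo-style file (path defaults to /proc/cpuinfo)
cpu_summary() {
  local f="${1:-/proc/cpuinfo}"
  local sockets cores logical
  sockets=$(grep "physical id" "$f" | sort -u | wc -l)
  # "cpu cores : N" repeats for every logical CPU; take the first value
  cores=$(grep "cpu cores" "$f" | sort -u | awk -F: '{ gsub(/ /, "", $2); print $2; exit }')
  logical=$(grep -c "^processor" "$f")
  echo "sockets=$sockets cores_per_socket=$cores logical=$logical threads_per_core=$(( logical / (sockets * cores) ))"
}
```

`lscpu` reports the same figures directly as `Socket(s)`, `Core(s) per socket` and `Thread(s) per core`, and `nproc` prints the number of usable logical CPUs.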

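5. MySQL

# 1. Install the MySQL client

sudo yum install mysql -y

# 2. Connect to the Guanxing (观星) MySQL database
#    -p prompts for the password; -A (--no-auto-rehash) skips loading table and column names for tab completion, so the client starts faster

mysql --default-character-set=utf8 -h xx.xx.xx.xx -P 3358 -u uu db -p -A

Password: 123456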
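Repeating host, port and user on every call can be avoided with a client option file. A minimal sketch, assuming the placeholders from the command above; `write_mysql_option_file` is a hypothetical helper and the file path is your choice. The file is created with mode 600 so other users cannot read it.

```shell
#!/bin/bash
# Write a [client] option file readable only by the current user
write_mysql_option_file() {
  local path="$1"
  (
    umask 077   # new file is created with mode 600
    cat > "$path" <<'EOF'
[client]
host=xx.xx.xx.xx
port=3358
user=uu
default-character-set=utf8
EOF
  )
}
```

After `write_mysql_option_file "$HOME/.my.guanxing.cnf"`, running `mysql --defaults-extra-file="$HOME/.my.guanxing.cnf" -p -A db` only asks for the password; `--defaults-extra-file` must be the first option on the command line. A `password=` line can be added under `[client]` at the cost of storing the password on disk.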

6. Create a Hive table

CREATE EXTERNAL TABLE `t1` (
  `f1` string COMMENT 'f1',
  `f2` string COMMENT 'f2'
)
PARTITIONED BY (
  `dt` string,
  `type` string
)
ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.orc.OrcSerde'
STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'
  OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'
LOCATION 'hdfs://xx.xx.xx.xx/'
TBLPROPERTIES (
  'orc.compress'='SNAPPY',
  'rawDataSize'='2',
  'totalSize'='3',
  'transient_lastDdlTime'='11'
);

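Repair partitions:

-- Add one partition; every partition column (dt and type) needs a value
ALTER TABLE db.t1 ADD IF NOT EXISTS PARTITION (dt='<dt>', type='<type>');
-- Or let Hive discover all dt=.../type=... directories under the table location
MSCK REPAIR TABLE db.t1;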
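When partitions are registered from a script instead of by string concatenation in application code, a helper can build the statement with both partition columns filled in. A minimal sketch; `hive_add_partition_sql` is a hypothetical helper, the values are interpolated without escaping (trusted input only), and `hive -e` is one way to run the result (with `beeline`, pass the same text to `-e` along with the `-u` JDBC URL).

```shell
#!/bin/bash
# Print the HiveQL that registers one (dt, type) partition of a table
hive_add_partition_sql() {
  local table="$1" dt="$2" type="$3"
  printf "ALTER TABLE %s ADD IF NOT EXISTS PARTITION (dt='%s', type='%s');\n" "$table" "$dt" "$type"
}
```

Usage: `hive -e "$(hive_add_partition_sql db.t1 2024-01-01 a)"`, where `2024-01-01` and `a` are example values.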

7. Create a MySQL table

CREATE TABLE `t2` (
  `id` varchar(300) NOT NULL DEFAULT '',
  `p_id` int(11) NOT NULL,
  `s1` bigint(20) DEFAULT NULL,
  `news` text,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8;

8. curl request

curl --location --request POST 'http://xx.xx.xx.xx:port/cit?type=1' \
  --header 'Content-Type: application/json' \
  -d '{
    "id": "t1",
    "rr": "ii",
    "feat": {
      "type": "t1",
      "name": "aabb"
    }
  }'

9. The date command

# Get the date of the previous day
date -d "-1 day" +"%Y-%m-%d"
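The same `date -d` arithmetic can step through a range of days, which is handy for backfilling a daily job. A minimal sketch, assuming GNU date (BSD/macOS `date` uses `-v` instead of `-d`); `date_range` is a hypothetical helper.

```shell
#!/bin/bash
# Print every date from $1 to $2 inclusive (YYYY-MM-DD), one per line
date_range() {
  local d="$1" end="$2"
  # Compare as integers with the dashes stripped, e.g. 20240227 <= 20240301
  while [ "${d//-/}" -le "${end//-/}" ]; do
    echo "$d"
    d=$(date -d "$d + 1 day" +%Y-%m-%d)
  done
}
```

For example, `date_range 2024-02-27 2024-03-01` prints 2024-02-27, 2024-02-28, 2024-02-29 and 2024-03-01.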

浙公网安备 33010602011771号
