1. Deploy OpenSearch

```bash
# Add the Helm repository and pull the chart
helm repo add opensearch https://opensearch-project.github.io/helm-charts
helm repo update
helm pull opensearch/opensearch --version 3.3.0 --untar
cd opensearch
```

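Then edit values.yaml. Only the fields that change are shown; `···` marks the parts left as they are.

```yaml
# values.yaml
# ···
extraEnvs:
  # Add this entry
  - name: OPENSEARCH_INITIAL_ADMIN_PASSWORD
    # Your admin password. OpenSearch enforces a strength requirement, so it can't be too simple.
    value: qwerQWER.com
# ···
opensearchJavaOpts: "-Xmx512M -Xms512M"
# ···
# Remember to add limits, to guard against memory leaks. opensearchJavaOpts above sets
# the JVM heap, and the memory given to OpenSearch must exceed it: leave about 512M
# on top of the heap (for a 1G heap, allow 1G + 512M).
resources:
  limits:
    cpu: "1000m"
    memory: "2Gi"
  requests:
    cpu: "500m"
    memory: "800Mi"
```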
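The headroom rule above is easy to get wrong because of units: JVM sizes are binary (`-Xmx512M` is 512 MiB), while in Kubernetes `512M` is decimal (512,000,000 bytes) and only `Mi`/`Gi` are binary. A minimal bash sketch for checking a values file before installing, assuming the article's rule of thumb (limit ≥ heap + 512Mi, not an official figure) and integer quantities only; the function names are made up for illustration:

```bash
#!/usr/bin/env bash
# Hypothetical pre-install check for the "memory limit >= heap + 512Mi" rule of thumb.
# Only integer quantities are handled (no "1.5Gi").

# JVM sizes (-Xmx512M, -Xmx1g) use binary multiples: k = 1024.
jvm_to_bytes() {
  local n=${1%[kKmMgG]}
  case $1 in
    *[kK]) echo $(( n * 1024 )) ;;
    *[mM]) echo $(( n * 1024 ** 2 )) ;;
    *[gG]) echo $(( n * 1024 ** 3 )) ;;
    *)     echo "$n" ;;
  esac
}

# Kubernetes quantities: Ki/Mi/Gi are binary, k/M/G are decimal.
k8s_to_bytes() {
  case $1 in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 ** 2 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 ** 3 )) ;;
    *k)  echo $(( ${1%k} * 1000 )) ;;
    *M)  echo $(( ${1%M} * 1000 ** 2 )) ;;
    *G)  echo $(( ${1%G} * 1000 ** 3 )) ;;
    *)   echo "$1" ;;
  esac
}

# Succeeds if the memory limit covers the -Xmx heap plus 512Mi of headroom.
# $1 = opensearchJavaOpts, $2 = resources.limits.memory
limit_covers_heap() {
  local re='-Xmx([0-9]+[kKmMgG]?)' headroom=$(( 512 * 1024 ** 2 ))
  [[ $1 =~ $re ]] || return 2
  local heap limit
  heap=$(jvm_to_bytes "${BASH_REMATCH[1]}")
  limit=$(k8s_to_bytes "$2")
  (( limit >= heap + headroom ))
}
```

For the values above, `limit_covers_heap "-Xmx512M -Xms512M" 2Gi && echo ok` succeeds, while a limit of `800Mi` would not.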

```yaml
# ···
persistence:
  enabled: true
  enableInitChown: true
  # override image, which is busybox by default
  # image: busybox
  # override image tag, which is latest by default
  # imageTag:
  labels:
    enabled: false
    additionalLabels: {}
  storageClass: "nfs-data"  # use your own StorageClass
  accessModes:
    - ReadWriteOnce
  size: 8Gi  # volume size
```

```bash
# Install
helm install opensearch -n opensearch --create-namespace -f values.yaml .
```

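The OpenSearch image is large, so it's best to pull it once, save it to an archive and import that archive on the other nodes. A mirror reachable from mainland China: `swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/opensearchproject/opensearch:3.3.0`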
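A sketch of that pull, save and import workflow, assuming Docker on the machine that pulls the image, containerd (`ctr`) on the target nodes, and that the chart still expects its default `opensearchproject/opensearch` image name. `upstream_ref` and `preload_image` are hypothetical helpers, and the mirror path layout is taken from the address above:

```bash
#!/usr/bin/env bash
# Hypothetical helpers for preloading the OpenSearch image onto nodes.

MIRROR_IMAGE=swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/opensearchproject/opensearch:3.3.0

# Map a reference on this mirror back to the upstream name the chart expects.
# Assumes the mirror's layout: <registry>/ddn-k8s/docker.io/<repo>:<tag>.
upstream_ref() {
  local ref=${1#*/ddn-k8s/}
  echo "${ref#docker.io/}"
}

# Not run automatically: pull once, retag to the upstream name, save to a tarball.
# $1 = source image (defaults to the mirror), $2 = output tarball
preload_image() {
  local src=${1:-$MIRROR_IMAGE} tar=${2:-opensearch-image.tar} dst
  dst=$(upstream_ref "$src")
  docker pull "$src" &&
    docker tag "$src" "$dst" &&
    docker save -o "$tar" "$dst" &&
    echo "saved $dst to $tar; copy it to each node and run: ctr -n k8s.io images import $tar"
}
```

After importing, `ctr -n k8s.io images ls` shows the name containerd recorded; check it matches the image the pod requests. Alternatively, point the chart's `image.repository` (assumed key name) at the mirror and skip the retag.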

2. Deploy OpenSearch Dashboards

```bash
# Pull the Helm chart
helm pull opensearch/opensearch-dashboards --version 3.3.0 --untar
cd opensearch-dashboards
```

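```yaml
# values.yaml
# ···
# Address of the OpenSearch Service; use https
opensearchHosts: "https://opensearch-cluster-master:9200"
# ···
```

Use the `https` address. OpenSearch ships with a default security certificate, so with an `http` address Dashboards can't connect. Turning off OpenSearch's security authentication doesn't make `http` work either, because the password is part of the same security layer: you end up with a password that is set but can't be used to log in to Dashboards, and you then have to disable OpenSearch's password login as well. That's a lot of hassle, so just use `https`.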

The ingress settings below fit my environment (Traefik, with a certificate resolver named `stepca`); fill them in for yours rather than copying them. You can also set `enabled: false` and expose Dashboards through an Ingress you create yourself, or through a NodePort Service.

```yaml
# ···
ingress:
  enabled: true
  # For Kubernetes >= 1.18 you should specify the ingress-controller via the field ingressClassName
  # See https://kubernetes.io/blog/2020/04/02/improvements-to-the-ingress-api-in-kubernetes-1.18/#specifying-the-class-of-an-ingress
  ingressClassName: traefik
  annotations:
    traefik.ingress.kubernetes.io/router.entrypoints: web,websecure
    traefik.ingress.kubernetes.io/router.tls: "true"
    traefik.ingress.kubernetes.io/router.tls.certresolver: stepca
    traefik.ingress.kubernetes.io/redirect-entry-point: websecure
  labels: {}
  hosts:
    - host: opensearch.xwk.local
      paths:
        - path: /
          backend:
            serviceName: ""
            servicePort: ""
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

resources:
  requests:
    cpu: "100m"
    memory: "250M"
  limits:
    cpu: "200m"
    memory: "512M"
```

```bash
# Install
helm install opensearch-dashboards -n opensearch -f values.yaml .
```

Open Dashboards and log in as `admin` with the password you set for OpenSearch above. If the login succeeds, Dashboards is connected to OpenSearch.

3. Collect kubelet service logs and container logs with Fluent Bit

```bash
# Pull the Helm chart
helm repo add fluent https://fluent.github.io/helm-charts
helm pull fluent/fluent-bit --version 0.48.10 --untar
cd fluent-bit
```

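Main settings in values.yaml. Fluent Bit's classic config format only accepts comments on their own line (a `# ...` after a value becomes part of the value), so the comments sit above each key:

```yaml
config:
  service: |
    [SERVICE]
        Daemon Off
        Flush {{ .Values.flush }}
        Log_Level {{ .Values.logLevel }}
        Parsers_File /fluent-bit/etc/parsers.conf
        Parsers_File /fluent-bit/etc/conf/custom_parsers.conf
        HTTP_Server On
        HTTP_Listen 0.0.0.0
        HTTP_Port {{ .Values.metricsPort }}
        Health_Check On

  ## https://docs.fluentbit.io/manual/pipeline/inputs
  inputs: |
    # Container logs
    [INPUT]
        # Collection method: tail the log files
        Name tail
        # Where the container logs live; check the directory on your nodes before filling this in
        Path /var/log/containers/*.log
        # Files matching Exclude_Path are not collected (a.log is just an example)
        Exclude_Path /var/log/containers/a.log
        # Multiline parsers for the docker and cri log formats
        multiline.parser docker, cri
        # Tag every collected *.log record with kube.*
        Tag kube.*
        # Cap this input's memory buffer to avoid running out of memory
        Mem_Buf_Limit 10M
        # Skip lines too long for the buffer instead of stopping on the file
        Skip_Long_Lines On

    # Service logs (kubelet) from the systemd journal
    [INPUT]
        Name systemd
        # Tag the journal records with host.*
        Tag host.*
        # The service whose logs to collect
        Systemd_Filter _SYSTEMD_UNIT=kubelet.service
        # On: start from the newest entries; Off: read from the beginning
        Read_From_Tail On
```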
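For context on `multiline.parser cri`: a CRI runtime such as containerd writes each log line as `<timestamp> <stream> <P|F> <message>`, splitting very long lines into partial (`P`) chunks that end with a full (`F`) one, and the parser stitches them back together. A rough awk-based sketch of that idea, keeping one buffer per stream; it is not Fluent Bit's implementation, and `cri_join` is a made-up name:

```bash
#!/usr/bin/env bash
# Sketch: rejoin CRI partial log lines. Not Fluent Bit's implementation.
# Input lines: <timestamp> <stream> <P|F> <message>
cri_join() {
  awk '{
    stream = $2; flag = $3
    msg = $0
    # drop the first three fields and the single space after them
    sub(/^[^ ]+ [^ ]+ [^ ]+ ?/, "", msg)
    buf[stream] = buf[stream] msg
    if (flag == "F") {
      print stream ": " buf[stream]
      buf[stream] = ""
    }
  }'
}
```

Piping a container log file through `cri_join` prints one line per complete message, prefixed with its stream.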

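```yaml
config:
  # ···
  ## https://docs.fluentbit.io/manual/pipeline/filters
  filters: |
    [FILTER]
        # Kubernetes metadata filter
        Name kubernetes
        # Only records tagged kube.*
        Match kube.*
        # If the log field holds JSON, parse it and merge its keys into the record
        Merge_Log On
        # Tags look like kube.<container log path>.<log file name>; strip the prefix so
        # the filter can read pod, namespace and container from the file name
        Kube_Tag_Prefix kube.var.log.containers.
        # Whether to keep the original log field after merging (Off drops it)
        Keep_Log Off
        # Let pods choose a parser via the fluentbit.io/parser annotation
        K8S-Logging.Parser On
        # Whether to honor the fluentbit.io/exclude annotation that drops a pod's logs
        K8S-Logging.Exclude Off
```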
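Why `Kube_Tag_Prefix` is needed: with `Tag kube.*`, the tail input replaces `*` with the file's path (slashes turned into dots), so a record from `/var/log/containers/<pod>_<namespace>_<container>-<container id>.log` is tagged `kube.var.log.containers.<pod>_<namespace>_<container>-<container id>.log`. The kubernetes filter strips the prefix and reads pod, namespace and container from what remains. A bash sketch that mimics both steps; the helper names and the simplified regex are illustrative, not Fluent Bit code:

```bash
#!/usr/bin/env bash
# Illustrative only: mimics how tail builds the tag and how the kubernetes
# filter reads it back. Not Fluent Bit code.

# $1 = Tag pattern (e.g. kube.*), $2 = absolute path of the tailed file
tail_tag() {
  local path=${2#/}        # drop the leading slash
  path=${path//\//.}       # turn the remaining slashes into dots
  echo "${1/\*/$path}"     # substitute the path for the *
}

# $1 = tag, $2 = Kube_Tag_Prefix; prints pod, namespace and container
parse_kube_tag() {
  local name=${1#"$2"}
  local re='^([a-z0-9][-a-z0-9.]*)_([^_]+)_(.+)-([a-z0-9]{64})\.log$'
  [[ $name =~ $re ]] || return 1
  echo "pod=${BASH_REMATCH[1]} namespace=${BASH_REMATCH[2]} container=${BASH_REMATCH[3]}"
}
```

With the prefix `kube.var.log.containers.` a file `nginx-7c5b4_default_nginx-<64-char id>.log` yields `pod=nginx-7c5b4 namespace=default container=nginx`; in this sketch, stripping only `kube.` would produce the bogus pod name `var.log.containers.nginx-7c5b4`.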

## https://docs.fluentbit.io/manual/pipeline/outputs

outputs: |

[OUTPUT]

Name es # 解析器的名,都不用改

Match kube.* # 抓取带kube.*标签的日志

Host opensearch-cluster-master

Port 9200

HTTP_User admin # 填写opensearch的用户

HTTP_Passwd qwerQWER.com # 密码

TLS On # 开启tls

TLS.Verify off # 跳过证书

Suppress_Type_Name On # 抑制类型名字段确保兼容性

Logstash_Format On # 启用 Logstash

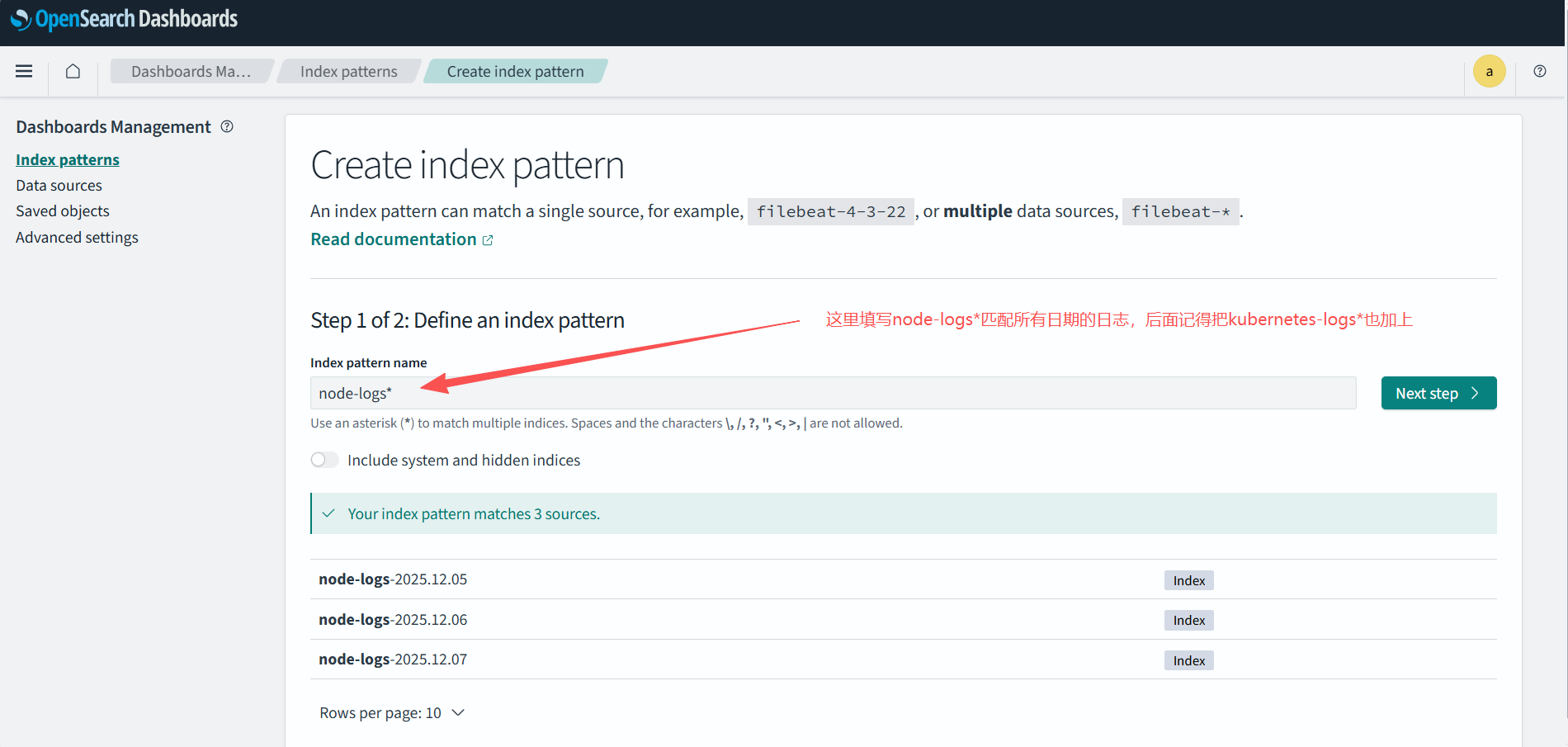

Logstash_Prefix kubernetes-logs # 生成的索引名前缀

Retry_Limit 10 # 收集重试次数

[OUTPUT]

Name es

Match host.*

Host opensearch-cluster-master

Port 9200

HTTP_User admin

HTTP_Passwd qwerQWER.com

TLS On

TLS.Verify off

Suppress_Type_Name On

Logstash_Format On

Logstash_Prefix node-logs

Retry_Limit False
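With `Logstash_Format On`, records don't go into one fixed index: each lands in `<Logstash_Prefix><separator><date>`, and with the defaults `Logstash_Prefix_Separator -` and `Logstash_DateFormat %Y.%m.%d` these two outputs create one `kubernetes-logs-YYYY.MM.DD` and one `node-logs-YYYY.MM.DD` index per day. A trivial bash helper to predict the name, assuming those defaults and taking the record's date (assumed UTC) as input; `logstash_index` is a made-up name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: predict the index a record is written to when
# Logstash_Format is On. Assumes Logstash_DateFormat %Y.%m.%d and, unless
# a third argument is given, Logstash_Prefix_Separator "-".
# $1 = Logstash_Prefix, $2 = record date as YYYY-MM-DD (UTC), $3 = separator
logstash_index() {
  local sep=${3:--}
  echo "${1}${sep}${2//-/.}"
}
```

For example, `logstash_index kubernetes-logs "$(date -u +%F)"` gives today's container-log index; in Dashboards, an index pattern such as `kubernetes-logs-*` covers all of the daily indices.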

```yaml
# ···
# Mount the host directories to collect from: Fluent Bit can only read log files
# that are mounted into its pod
daemonSetVolumes:
  - name: varlog
    hostPath:
      path: /var/log
  - name: etcmachineid
    hostPath:
      path: /etc/machine-id
      type: File
daemonSetVolumeMounts:
  - name: varlog
    mountPath: /var/log
  - name: etcmachineid
    mountPath: /etc/machine-id
    readOnly: true
```
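The `systemd` input reads the node's journal, which journald keeps under `/var/log/journal/<machine-id>/` when storage is persistent and under `/run/log/journal/<machine-id>/` when it is volatile; presumably that is why `/etc/machine-id` is mounted next to `/var/log`. The mounts above don't cover `/run/log/journal`, so on a node with a volatile journal the kubelet logs may never arrive. A hedged bash check to run on a node (`journal_dir` is a made-up name; the optional root argument exists only for testing):

```bash
#!/usr/bin/env bash
# Hypothetical node check: which journal directory holds this machine's logs?
# $1 = optional root directory (defaults to /), handy for testing.
journal_dir() {
  local root=${1:-} id d
  id=$(cat "$root/etc/machine-id") || return 1
  # Persistent storage first, then the volatile location
  for d in "$root/var/log/journal/$id" "$root/run/log/journal/$id"; do
    if [ -d "$d" ]; then
      echo "$d"
      return 0
    fi
  done
  return 1
}
```

If it prints a path under `/run/log/journal`, either set `Storage=persistent` in `/etc/systemd/journald.conf` on that node or add a hostPath mount for `/run/log/journal`.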

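```bash
# Install
helm install fluent-bit -n opensearch -f values.yaml .
# Check whether the Fluent Bit logs show a large amount of output
kubectl logs -n opensearch daemonset/fluent-bit
```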

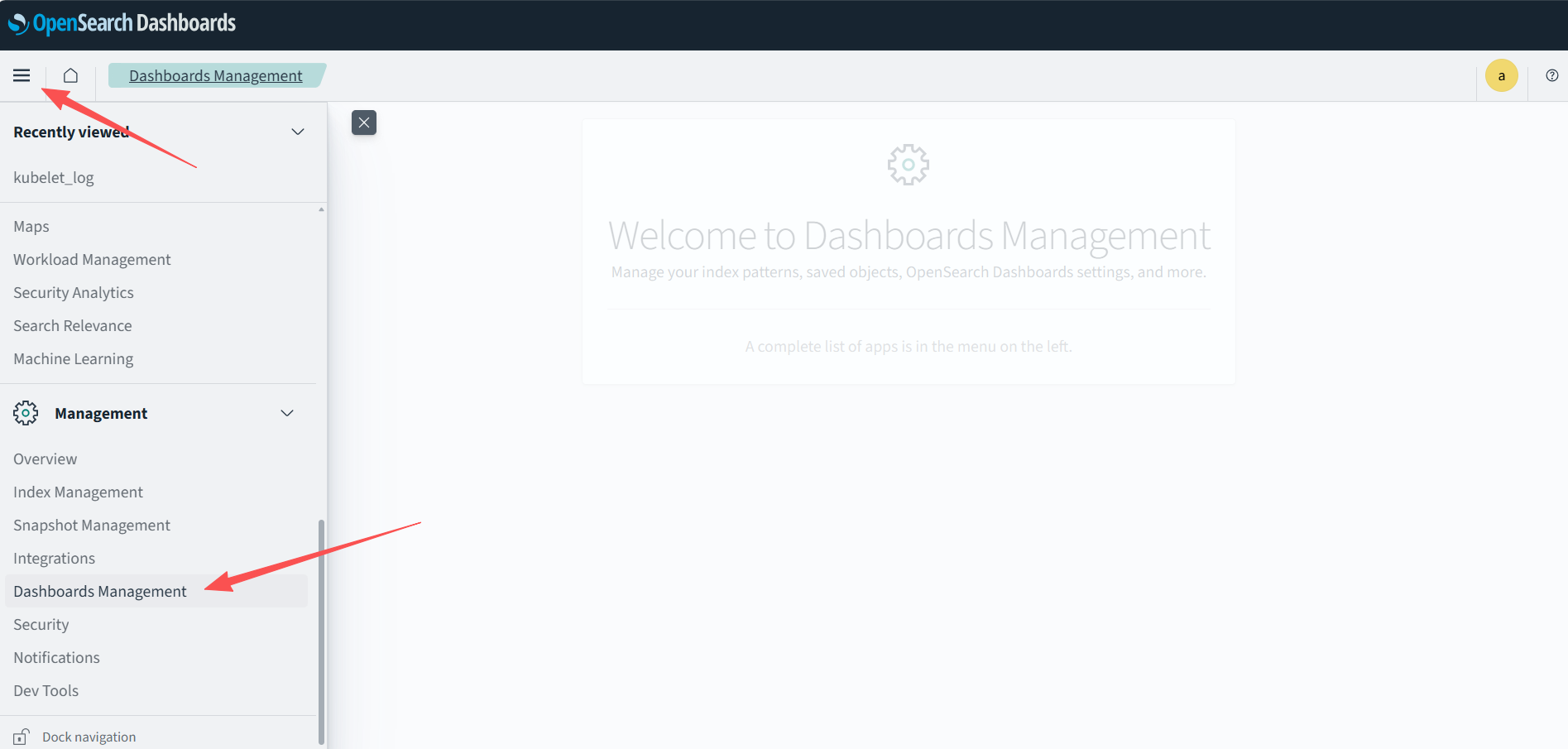

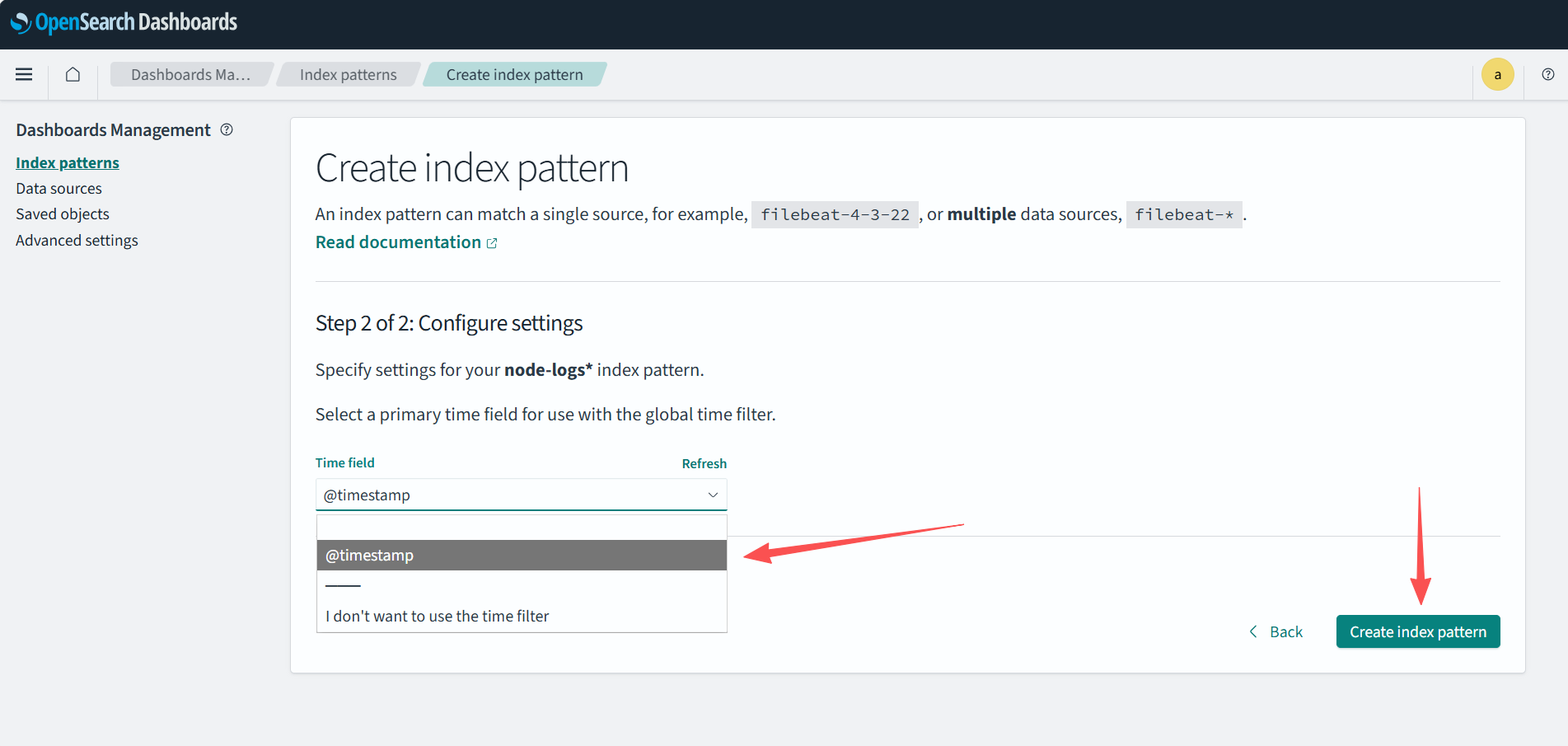

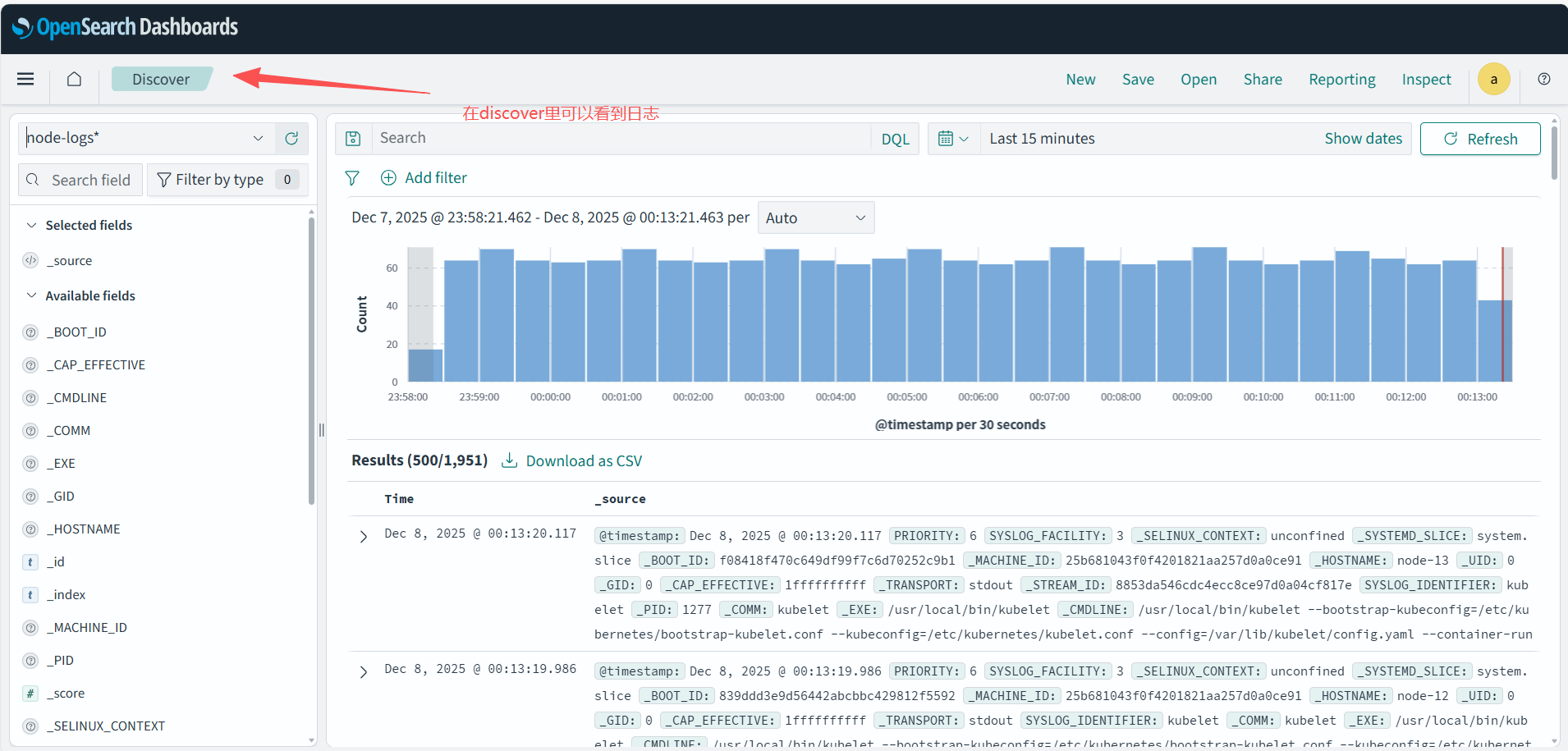

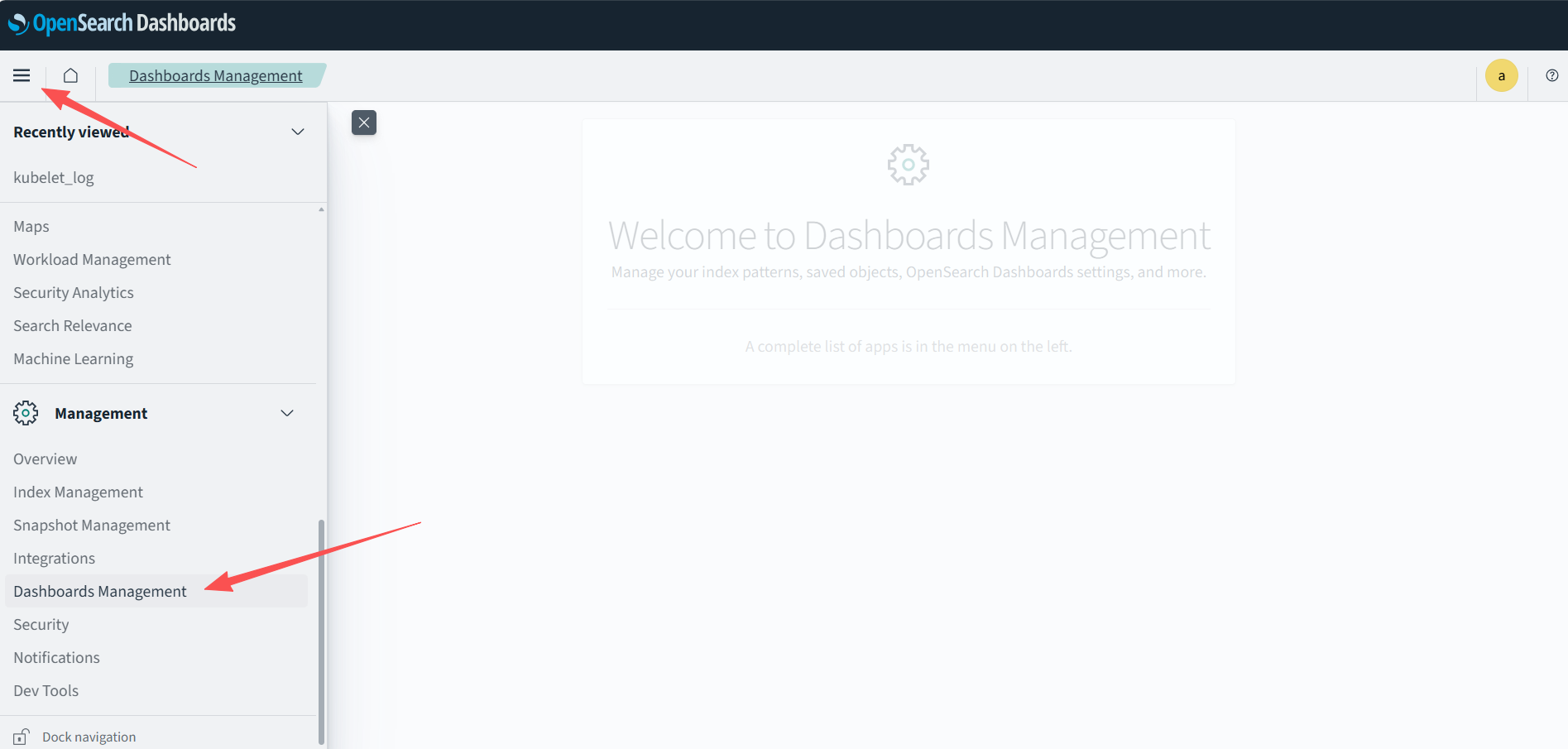

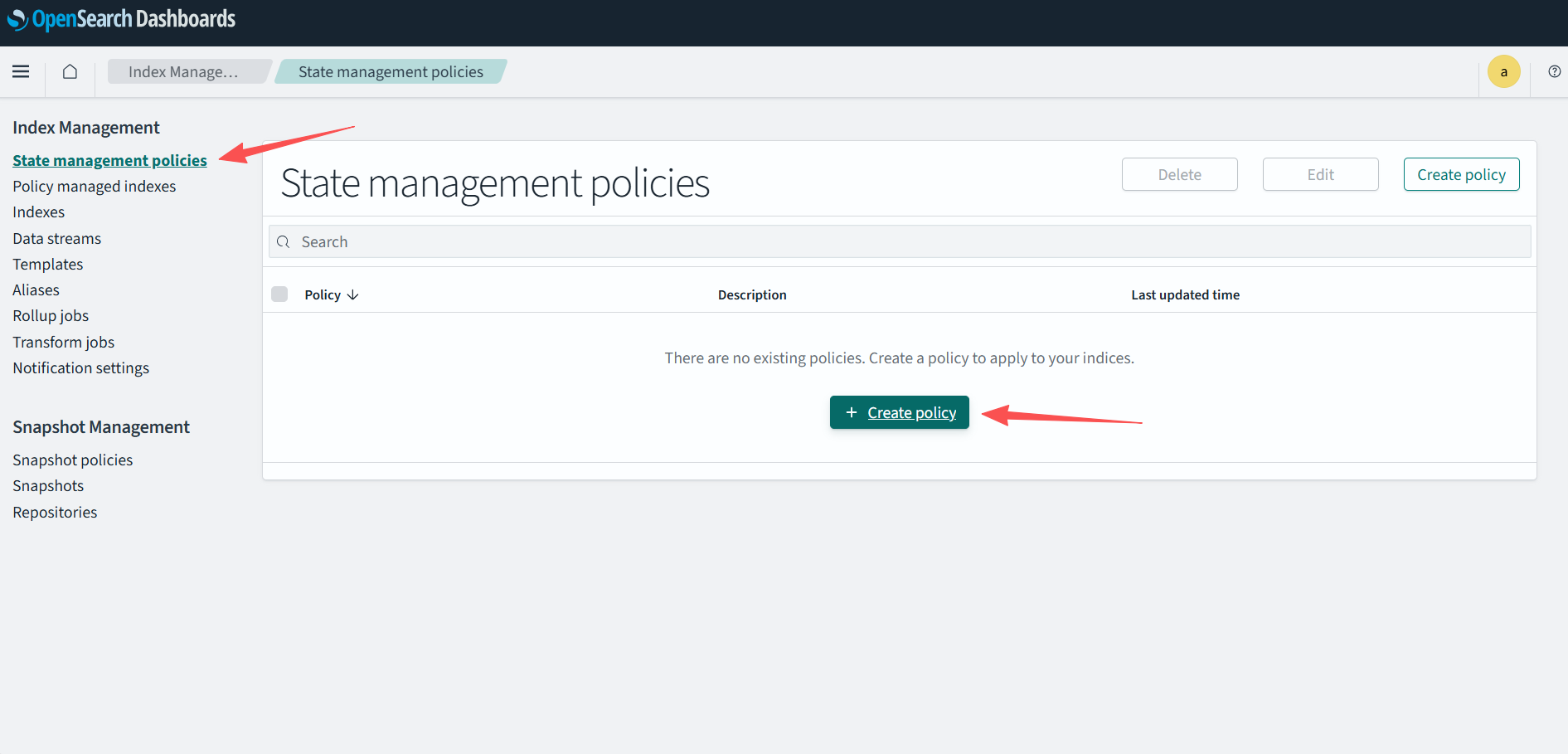

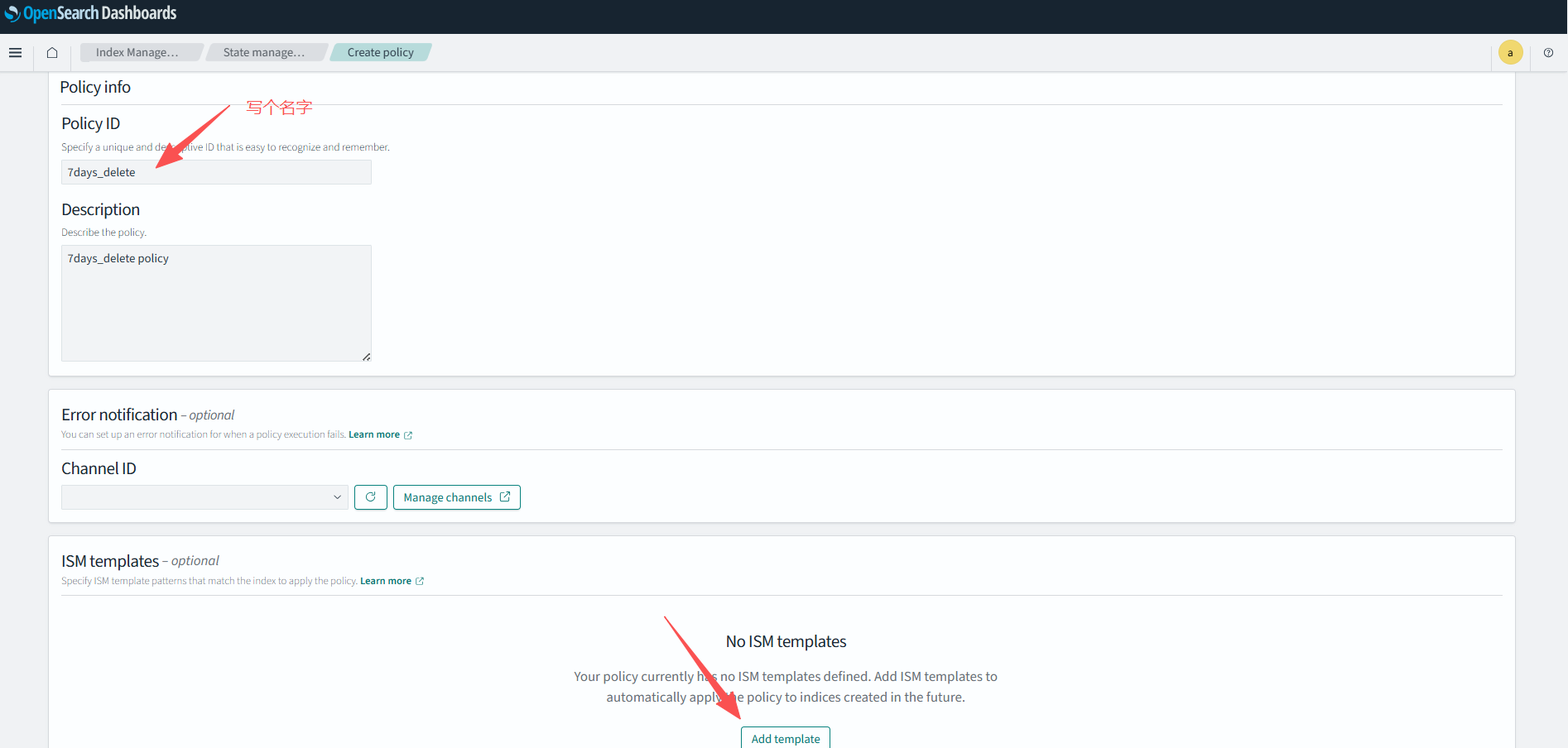

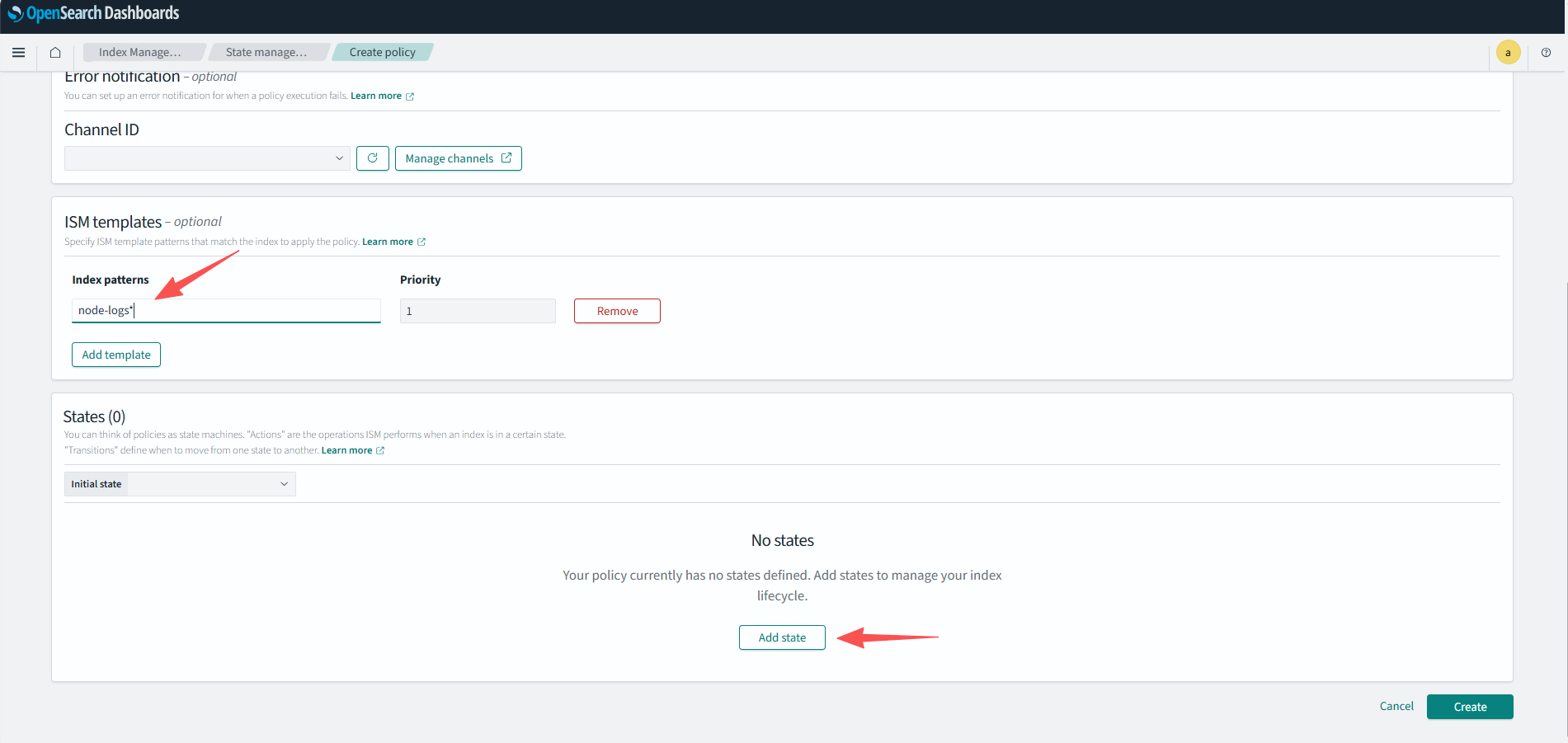

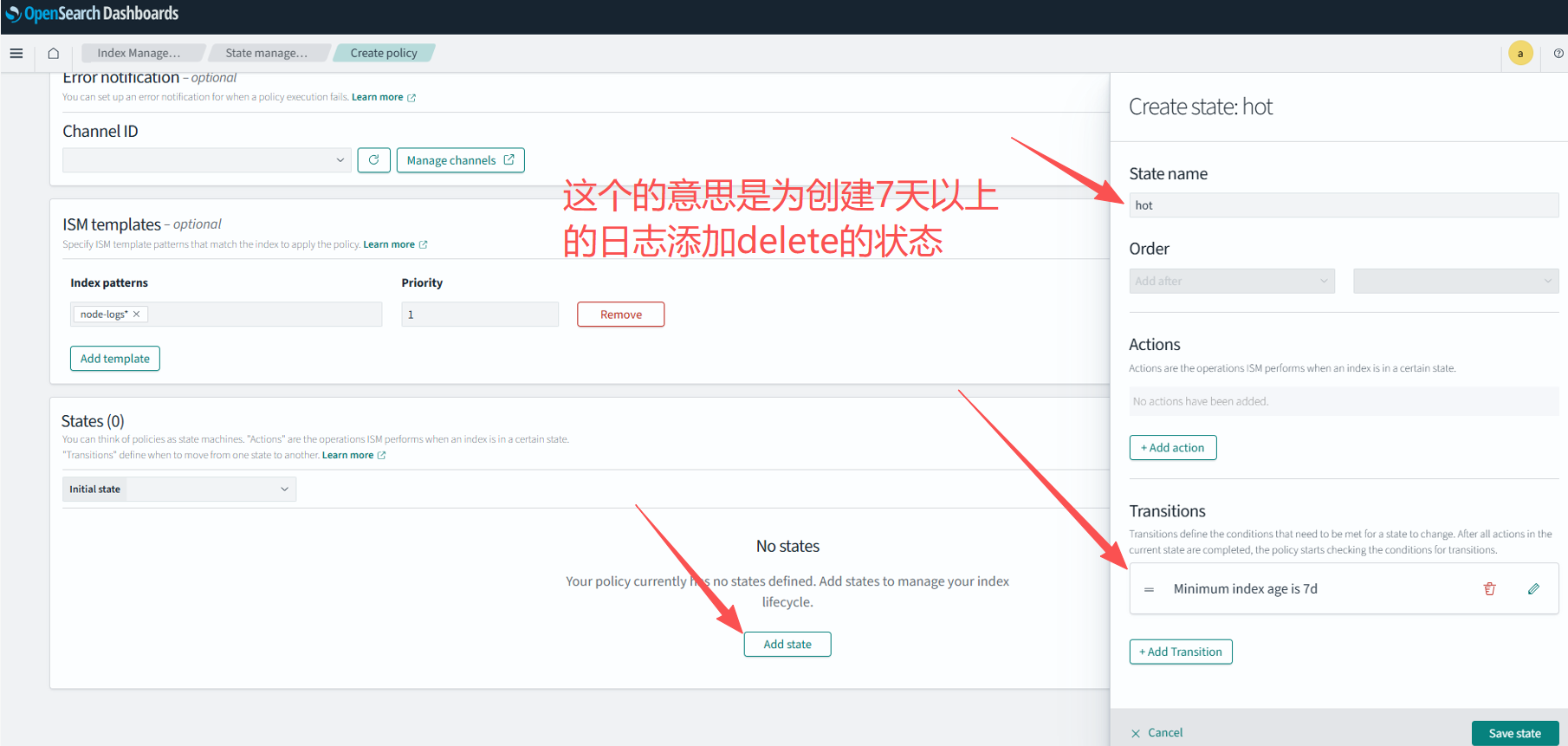

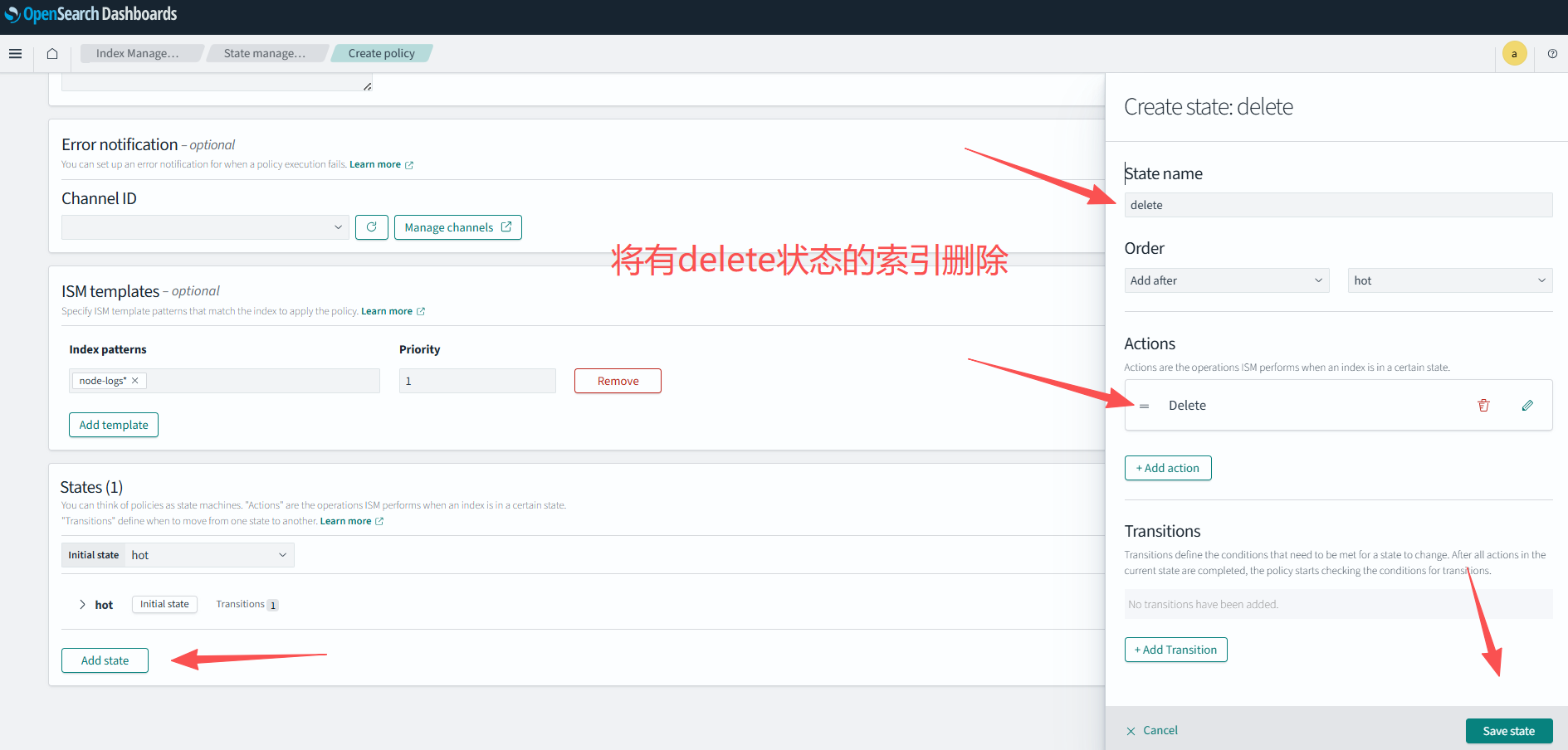

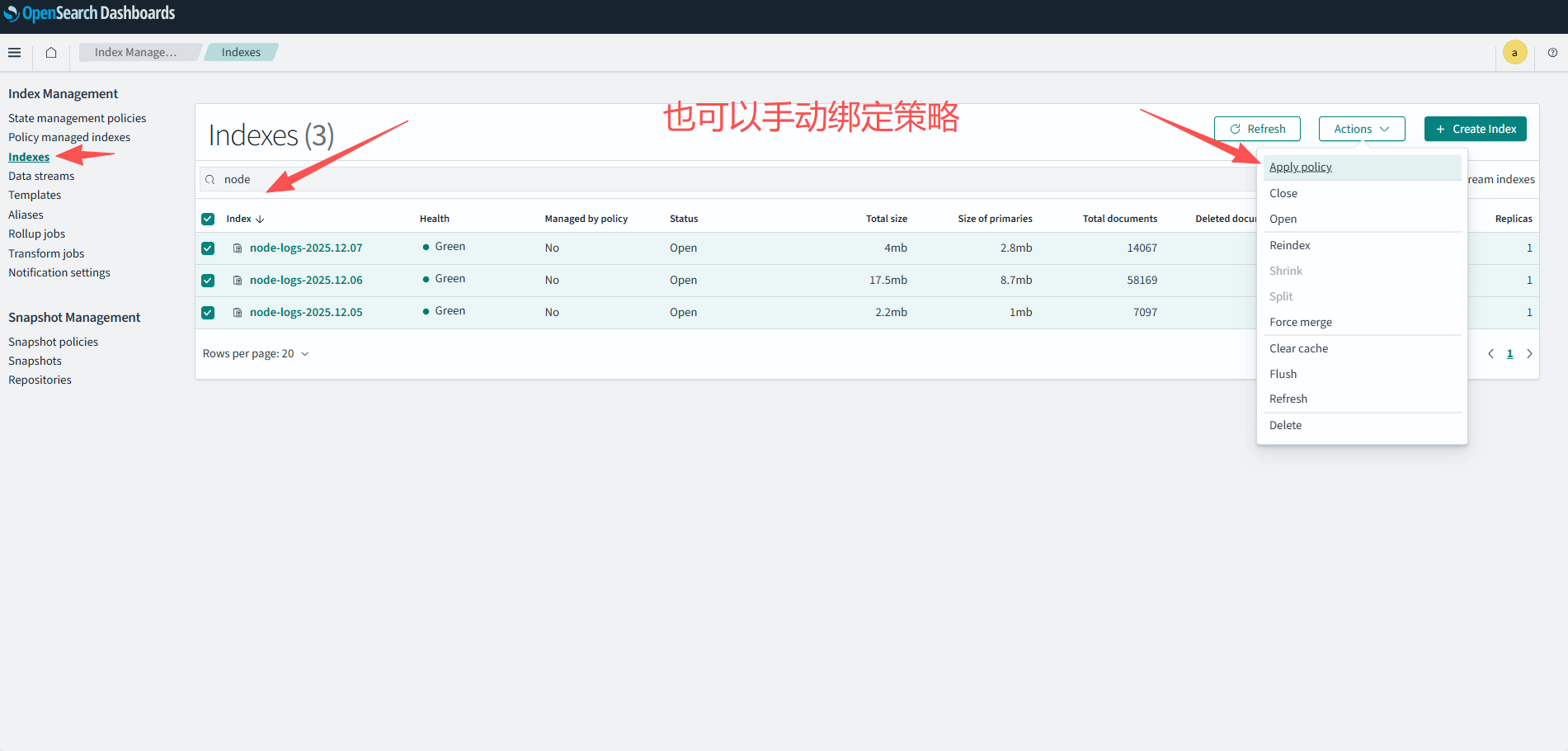

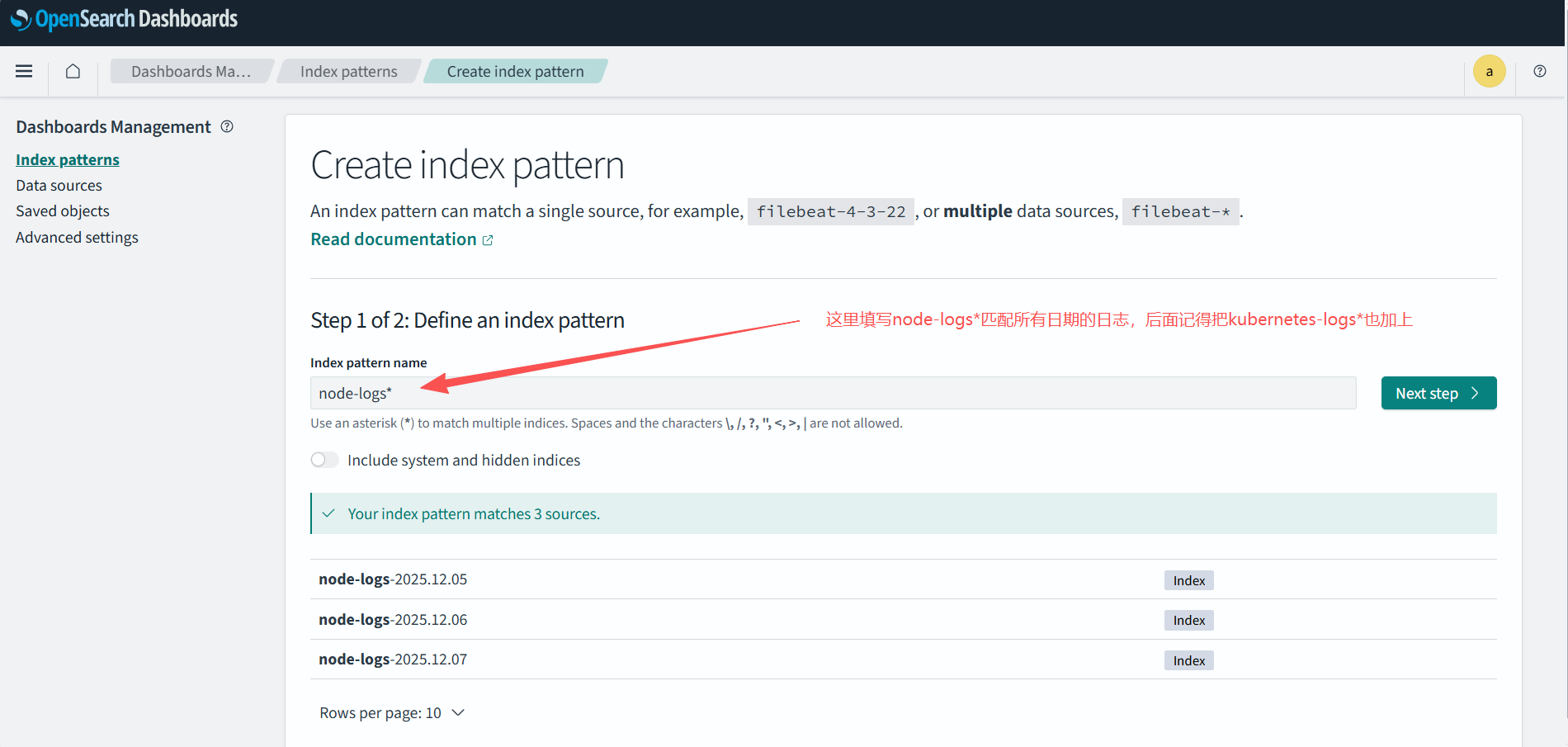

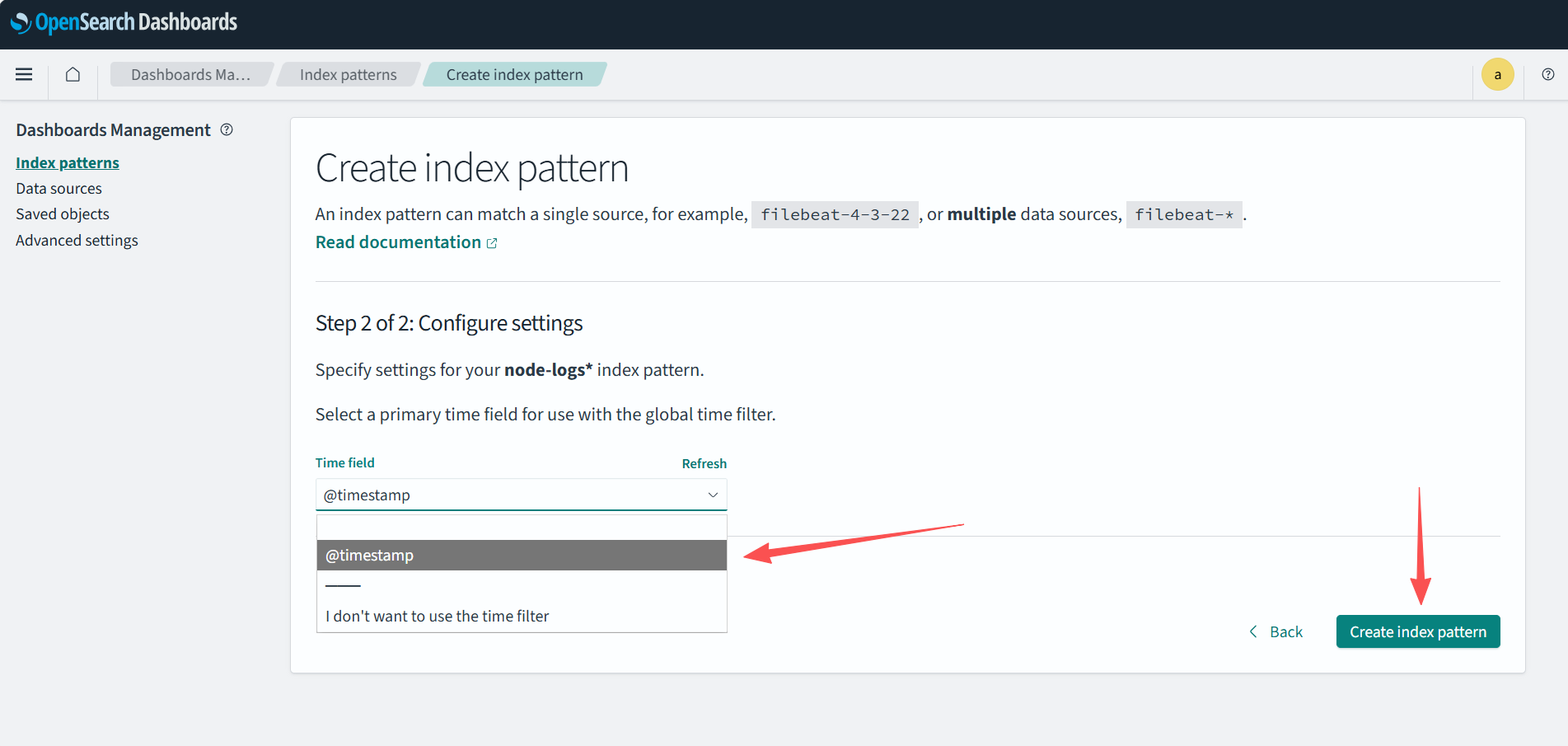

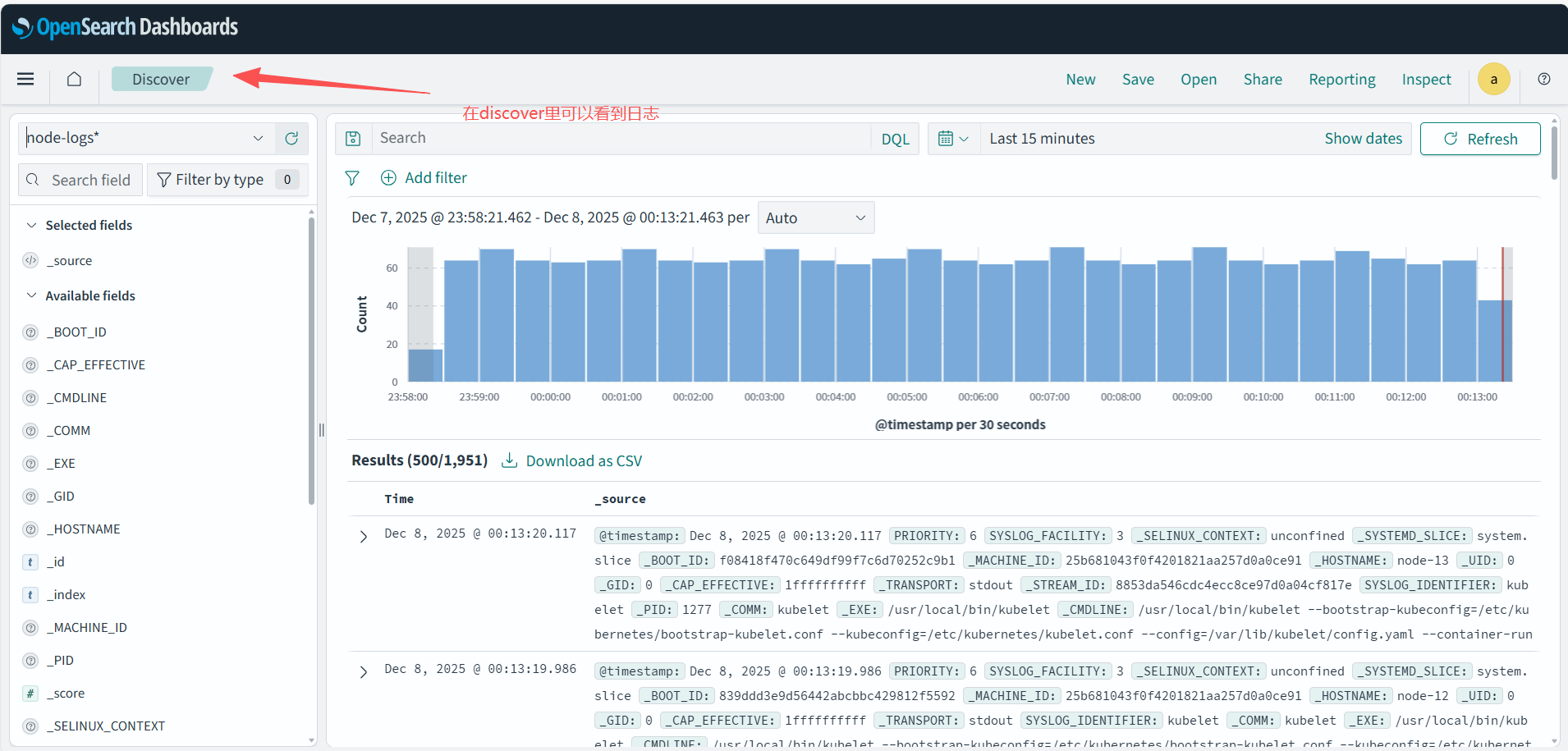

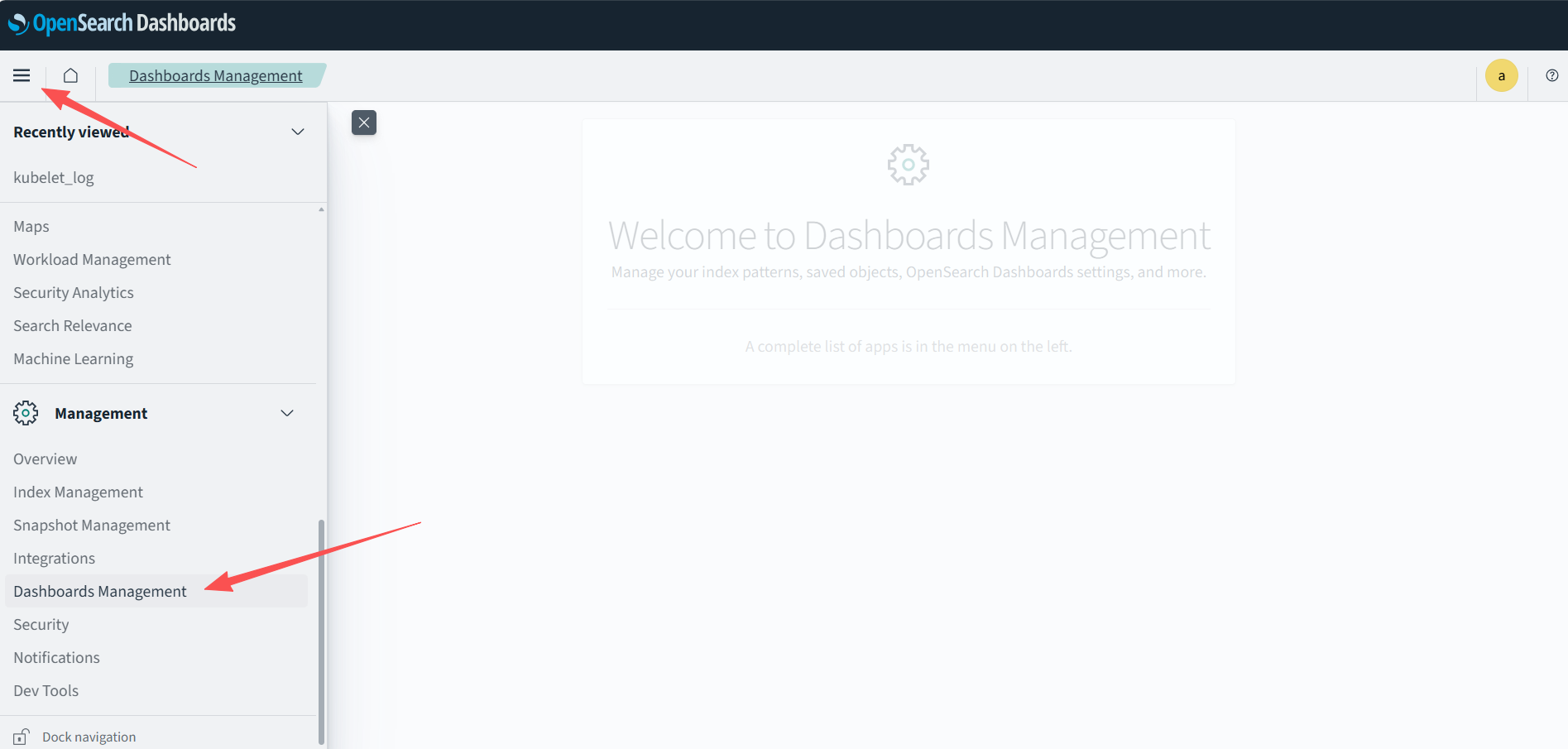

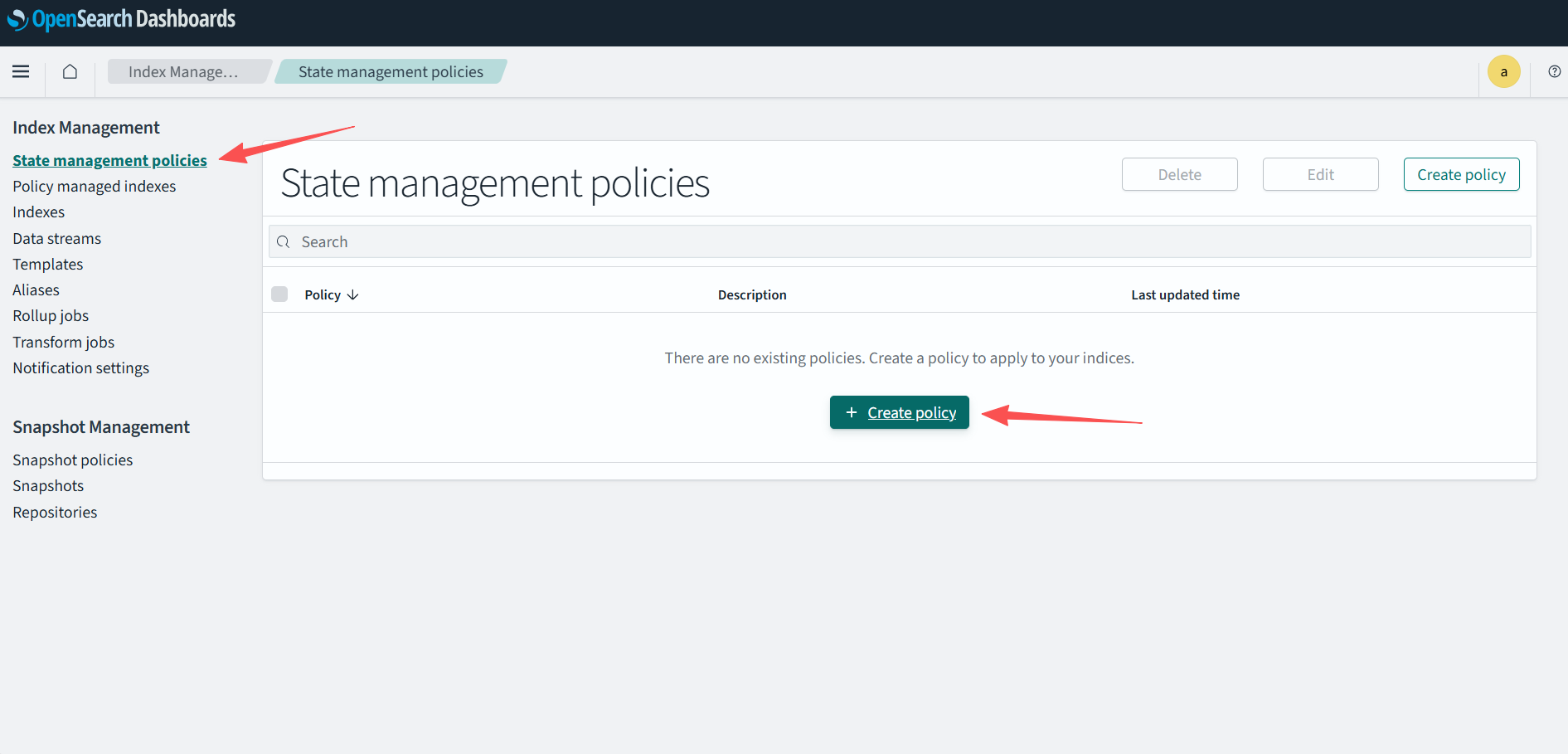

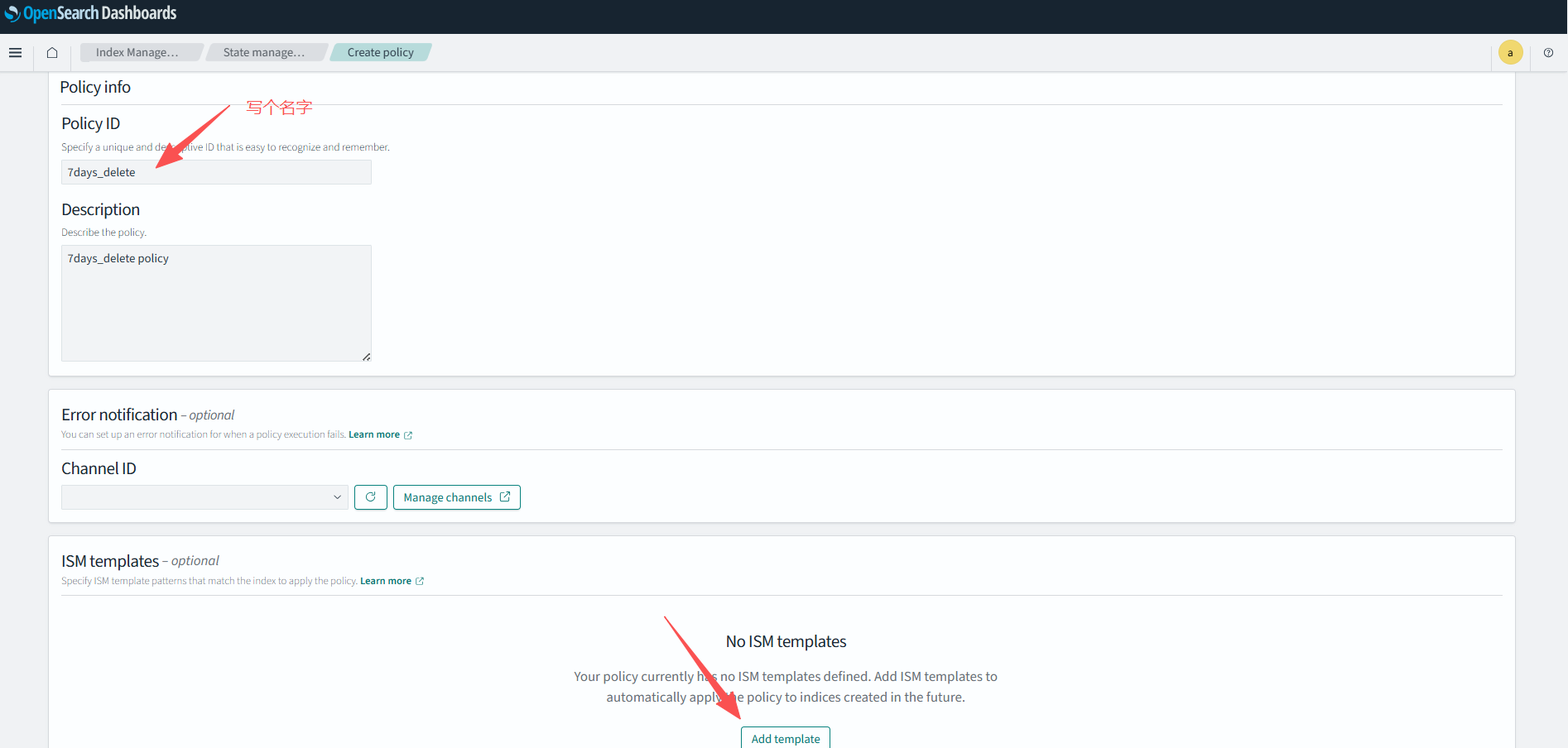

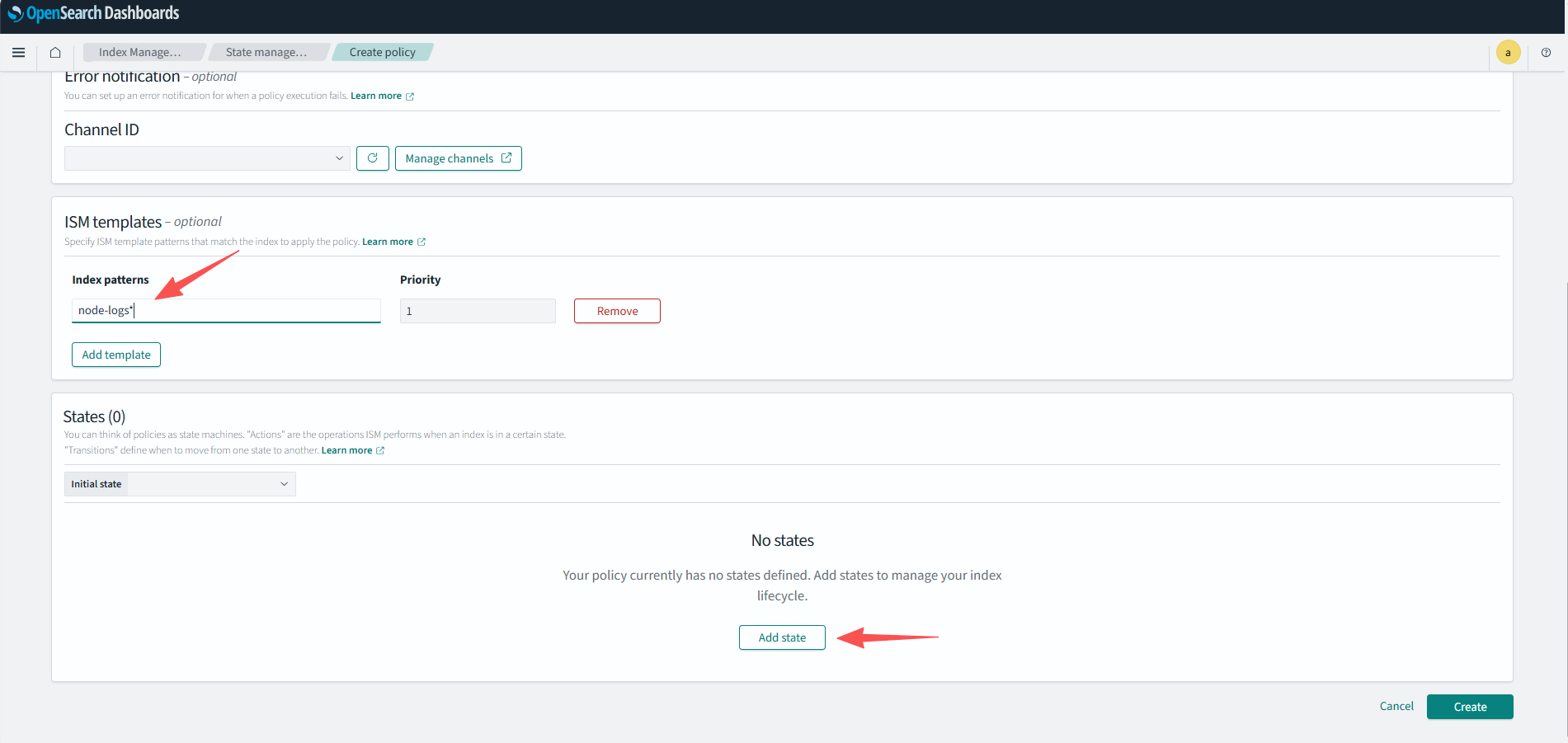

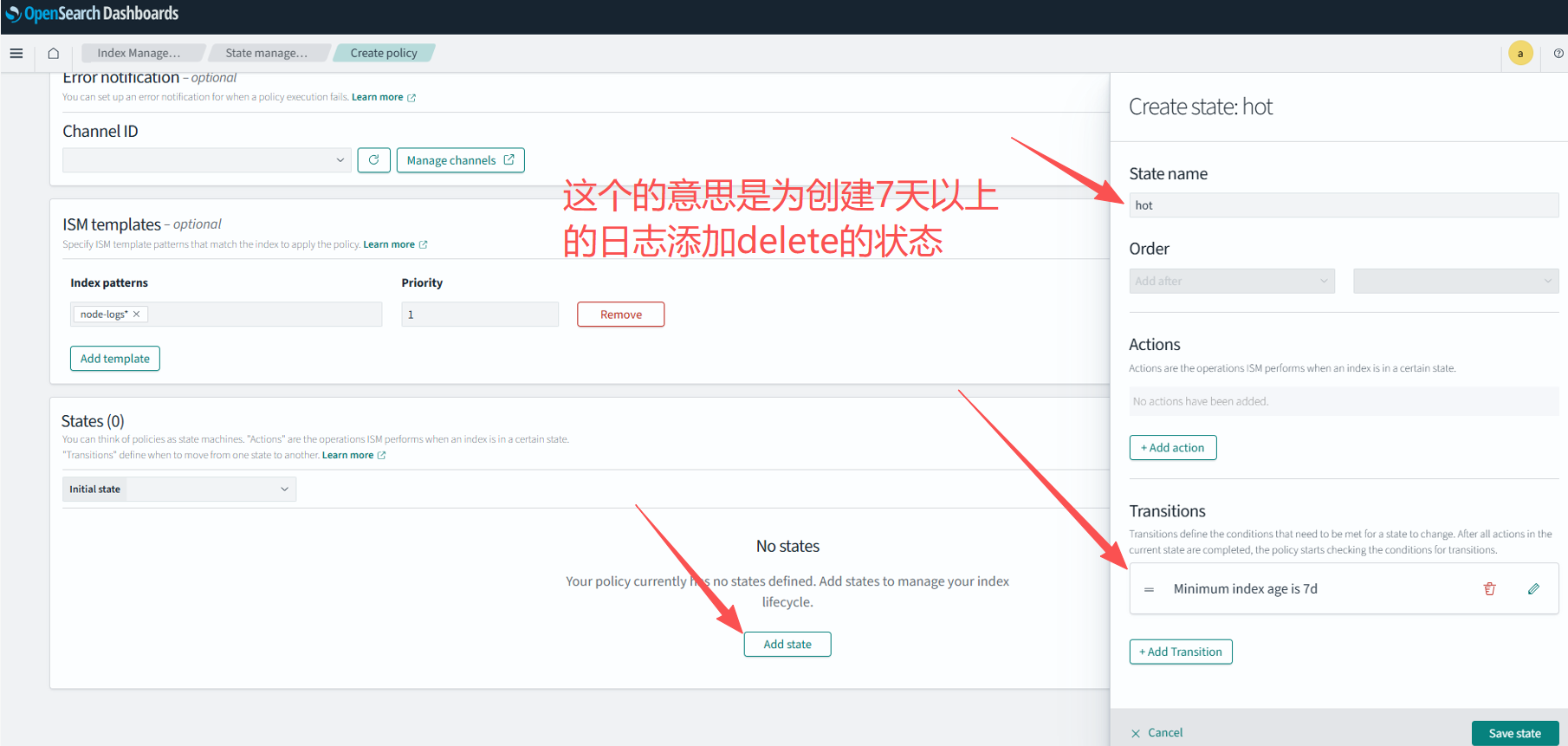

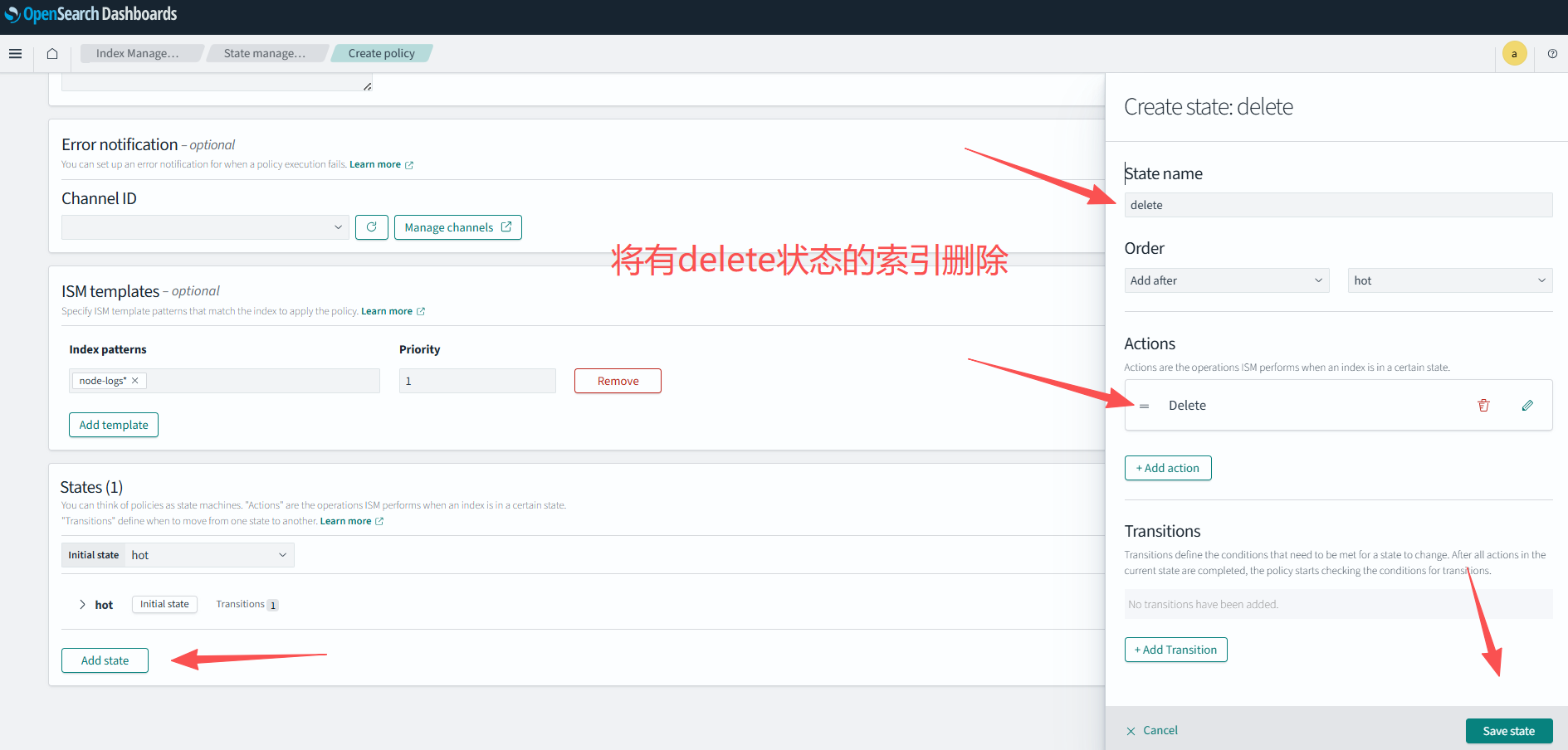

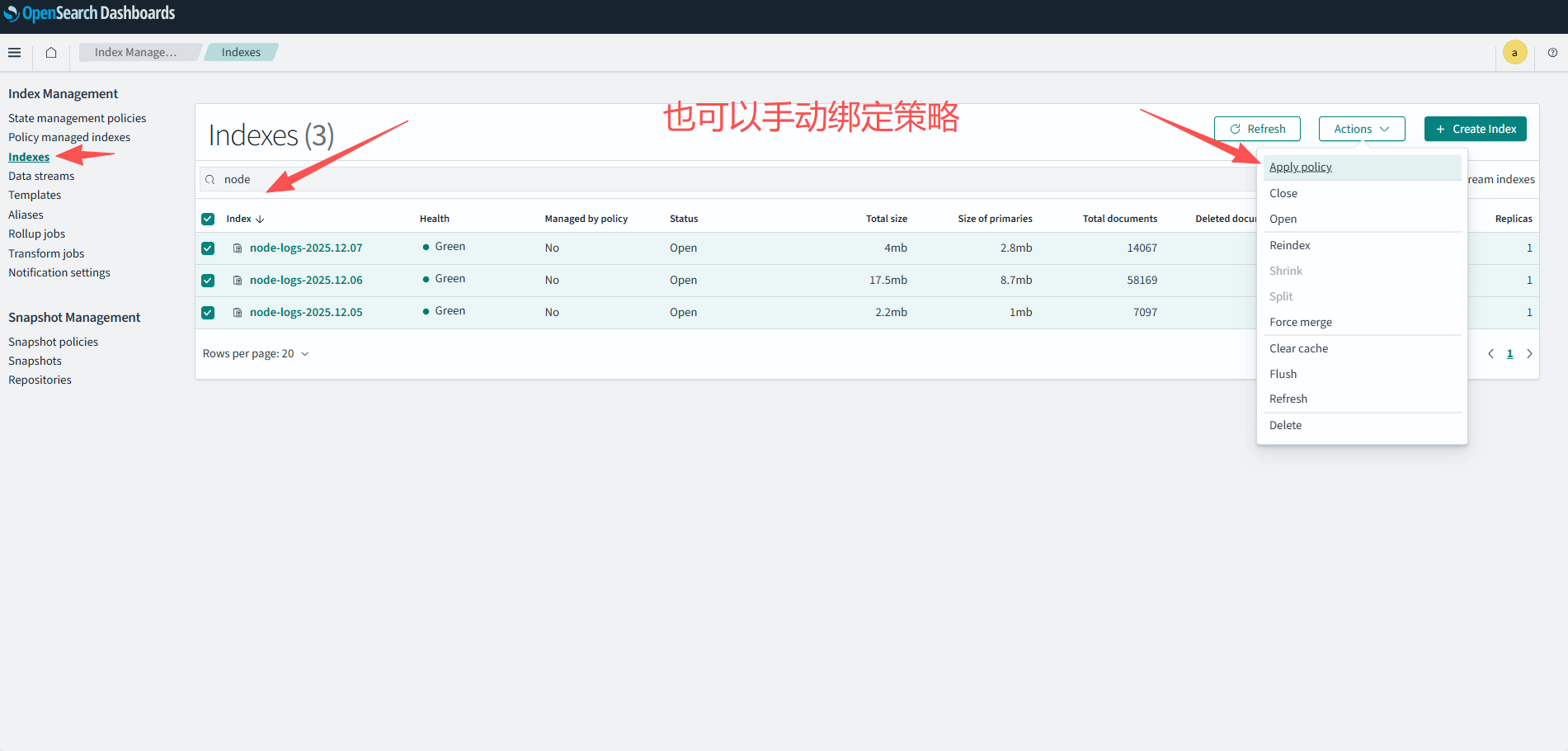

4. Create indices and configure the index lifecycle

浙公网安备 33010602011771号
