I. Importing data from a file into Elasticsearch

1. Data preparation:

(1) Data file: test.json (2) Index name: index2 (3) Document type: type2 (4) Bulk API: _bulk

{"index":{"_index":"index2","_type":"type2","_id":0}}

{"age":10,"name":"jim"}

{"index":{"_index":"index2","_type":"type2","_id":1}}

{"age":16,"name":"tom"}

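2. A JSON file imported through the _bulk API must follow a specific format: each record must be preceded by an action line holding its metadata (here the index, type, and document ID), and every line, including the last one, must end with \n.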

curl -H 'Content-Type: application/x-ndjson' -s -XPOST localhost:9200/_bulk --data-binary @test.json
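The format described above can be produced programmatically. The following is a minimal Python sketch, not the author's method: the helper name `build_bulk_body` and its parameters are hypothetical, and it assumes an Elasticsearch version that still accepts `_type` in the action line, as the examples in this post do. It builds the same action/source pairs as the two records shown above and terminates every line with `\n`.

```python
import json


def build_bulk_body(docs, index, doc_type, start_id=0):
    """Return NDJSON for the _bulk API: an action line, then a source line, per document.

    Hypothetical helper for illustration; IDs are assigned sequentially from start_id.
    """
    lines = []
    for offset, doc in enumerate(docs):
        action = {"index": {"_index": index, "_type": doc_type, "_id": start_id + offset}}
        # Compact separators keep each JSON object on a single line without extra spaces.
        lines.append(json.dumps(action, separators=(",", ":")))
        lines.append(json.dumps(doc, separators=(",", ":")))
    # Every line, including the last one, is terminated by "\n".
    return "".join(line + "\n" for line in lines)


docs = [{"age": 10, "name": "jim"}, {"age": 16, "name": "tom"}]
body = build_bulk_body(docs, "index2", "type2")
print(body, end="")
```

Writing `body` to test.json gives a file that can be sent with the curl command above.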

If test.json does not specify the index name, type, or ID, put the index and type in the request URL instead:

curl -H 'Content-Type: application/x-ndjson' -s -XPOST localhost:9200/index2/type2/_bulk --data-binary @test.json

{ "index" : { } }

{"age":16,"name":"tom"}

In this case the ID is generated automatically.
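As a rough sketch of this variant, the Python below prefixes each plain JSON line with an empty `index` action and prepares the equivalent of the curl request using only the standard library. The function names `to_bulk_lines` and `make_bulk_request` are hypothetical, and the URL simply mirrors the one in the curl command above; the request is only constructed here, not sent.

```python
import json
import urllib.request


def to_bulk_lines(json_lines):
    """Prefix each non-empty JSON line with an empty "index" action line."""
    out = []
    for raw in json_lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank lines so only action/source pairs remain
        json.loads(raw)  # raises ValueError if the line is not valid JSON
        out.append('{"index":{}}')
        out.append(raw)
    return "".join(line + "\n" for line in out)


def make_bulk_request(body, url="http://localhost:9200/index2/type2/_bulk"):
    """Build (but do not send) a POST request carrying the NDJSON body."""
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )


sample = ['{"age":16,"name":"tom"}\n', "\n", '{"age":11,"name":"tsd"}\n']
bulk = to_bulk_lines(sample)
request = make_bulk_request(bulk)
# To actually send it (requires a running Elasticsearch node):
# urllib.request.urlopen(request)
```

Because the action lines are empty, the index and type come from the URL and Elasticsearch assigns the document IDs.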

3. A plain JSON file (one without action lines) can be imported with Logstash:

For the detailed Logstash installation and setup steps, see:

https://www.cnblogs.com/yfb918/p/10763292.html

Prepare the JSON data:

[root@master mnt]# cat data.json

{"age":16,"name":"tom"}

{"age":11,"name":"tsd"}

Create the configuration file:

[root@master bin]# cat json.conf

input{

file{

path=>"/mnt/data.json"

start_position=>"beginning"

sincedb_path=>"/dev/null"

codec=>json{

charset=>"ISO-8859-1"

}

}

}

output{

elasticsearch{

hosts=>"http://192.168.200.100:9200"

index=>"jsontestlogstash"

document_type=>"doc"

}

stdout{}

}

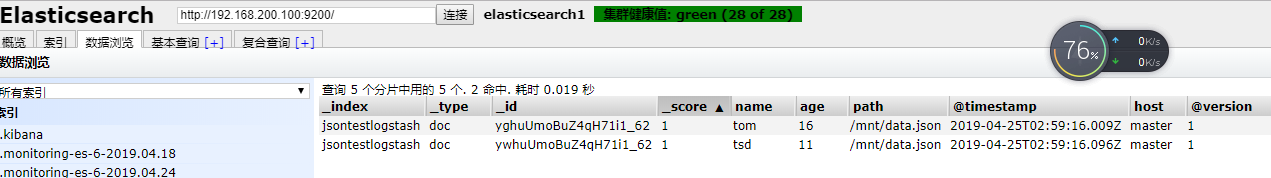

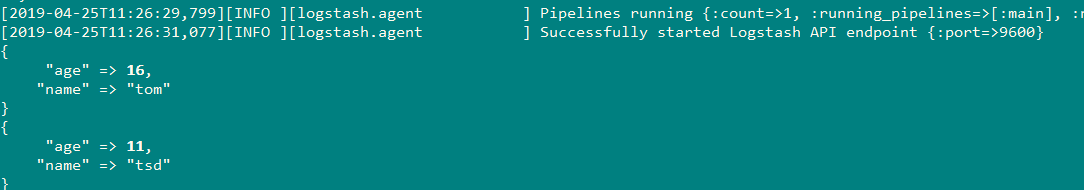

Execution result:

[root@master bin]# ./logstash -f json.conf

[2019-04-25T10:59:14,803][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}

[2019-04-25T10:59:16,084][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}

{

"name" => "tom",

"age" => 16,

"path" => "/mnt/data.json",

"@timestamp" => 2019-04-25T02:59:16.009Z,

"host" => "master",

"@version" => "1"

}

{

"name" => "tsd",

"age" => 11,

"path" => "/mnt/data.json",

"@timestamp" => 2019-04-25T02:59:16.096Z,

"host" => "master",

"@version" => "1"

}

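The output shows that Logstash adds several fields by default (path, @timestamp, host, and @version). If we do not want these generated fields, they can be dropped as follows:

Use a filter in the configuration file to remove them: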

[root@master bin]# cat json.conf

input{

file{

path=>"/mnt/data.json"

start_position=>"beginning"

sincedb_path=>"/dev/null"

codec=>json{

charset=>"ISO-8859-1"

}

}

}

filter{

mutate {

remove_field => ["@timestamp", "@version", "host", "path"]

}

}

output{

elasticsearch{

hosts=>"http://192.168.200.100:9200"

index=>"jsontestlogstash"

document_type=>"doc"

}

stdout{}

}
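To illustrate the intended effect of the filter, here is a small Python simulation. It is not Logstash output: the `event` dictionary is copied from the first event printed by stdout{} earlier, and `remove_fields` is a hypothetical stand-in for what `remove_field` does to an event.

```python
# Event shaped like the first one printed by stdout{} in the run above.
event = {
    "name": "tom",
    "age": 16,
    "path": "/mnt/data.json",
    "@timestamp": "2019-04-25T02:59:16.009Z",
    "host": "master",
    "@version": "1",
}

# The same field names that the mutate filter is configured to remove.
REMOVED = ("@timestamp", "@version", "host", "path")


def remove_fields(evt, names):
    """Return a copy of evt without the named keys; keys that are absent are ignored."""
    return {key: value for key, value in evt.items() if key not in names}


filtered = remove_fields(event, REMOVED)
print(filtered)  # {'name': 'tom', 'age': 16}
```

Only the fields that came from data.json remain, which is what the filtered events are expected to contain.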

Result after filtering:

Reposted from: https://www.cnblogs.com/yfb918/p/10762984.html
