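Drawing on the architecture of Oracle 11g RAC, the CRS-4124 workaround described in a blog post, and the coordination the two nodes require, this guide lays out a reusable, standardized procedure. It covers the complete startup flow from cluster services to database instances, how to verify status, how to troubleshoot, and the core principles.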


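1. Core startup principle (bottom-up, never reversed)

A RAC cluster has strict dependencies between its components. Starting them in the wrong order causes conflicts or startup failures. The core flow is:

server hardware → operating system → OHAS (Oracle High Availability Services) → core CRS daemons (CSSD/GPNPD/GIPCD, etc.) → ASM instance → ASM disk groups → RAC database instances

The two nodes follow a "primary node first (e.g. 239), then secondary node (e.g. 238)" order, to avoid split-brain or resource contention in the cluster.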
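The order above can be written down as a small dry-run script that doubles as a checklist. This sketch rests on assumptions that are not in the original:

- root can ssh to both nodes without a password.
- oracle can ssh to the first node without a password.
- The Grid home is the `/u01/app/11.2.0/grid` path seen in this article's logs.
- `DB_HOME` is a hypothetical database home path.

By default (`DRY_RUN=1`) the script only prints the commands. `crsctl start crs` itself brings up OHAS, the CRS daemons and ASM in dependency order, so the script only has to sequence the two nodes and the database.

```bash
#!/usr/bin/env bash
# Dry-run sketch of the two-node startup order.
# Hypothetical defaults: node names from this article, Grid home from the logs,
# DB_HOME is an assumed path. Set DRY_RUN=0 to actually execute.
GRID_HOME=${GRID_HOME:-/u01/app/11.2.0/grid}
DB_HOME=${DB_HOME:-/u01/app/oracle/product/11.2.0/db_1}   # hypothetical path
NODE1=${NODE1:-wx-ncdb-90-239}   # started first
NODE2=${NODE2:-wx-ncdb-90-238}   # started second
DB_NAME=${DB_NAME:-orcl}
WAIT_SECS=${WAIT_SECS:-180}      # about 3 minutes between nodes
DRY_RUN=${DRY_RUN:-1}

# Print a command; execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

plan_startup() {
  # OHAS -> CRS daemons -> ASM come up together via "crsctl start crs".
  run ssh "root@$NODE1" "$GRID_HOME/bin/crsctl" start crs
  run sleep "$WAIT_SECS"
  run ssh "root@$NODE2" "$GRID_HOME/bin/crsctl" start crs
  run ssh "root@$NODE1" "$GRID_HOME/bin/crsctl" check cluster -all
  # Database last, as the oracle user.
  run ssh "oracle@$NODE1" "$DB_HOME/bin/srvctl" start database -d "$DB_NAME"
}
```

Running `plan_startup` prints the five steps in order. Running `DRY_RUN=0 plan_startup` executes them. In practice, check each step's output before moving on, as section 2 describes.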

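2. Full step-by-step procedure (including bug handling and two-node coordination)

2.1. Phase 1: Preparation (run on both nodes)

- Confirm that the server hardware is healthy (NICs and storage paths reachable) and that the operating system (Linux) is up;

- Verify that /etc/hosts is consistent on both nodes (public, private, VIP, and SCAN IPs all mapped correctly);

- Check that the grid/oracle user environment variables (ORACLE_HOME/GRID_HOME/ORACLE_SID) are set correctly;

- Clean up leftover processes and lock files, so that stale processes do not conflict with the new startup:

```bash
# Run as root. The ps -ef lines must be reduced to the PID column (field 2) before kill.
# The pattern matches every process owned by or referencing grid/oracle, including their login shells.
ps -ef | grep -E 'grid|oracle|ohasd|cssd|crsd' | grep -v grep | awk '{print $2}' | xargs -r kill -9
rm -rf /var/tmp/.oracle/* /tmp/.oracle/* $GRID_HOME/log/$(hostname)/ohasd/*.lock
```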
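`kill -9` is irreversible, so it helps to list the matching PIDs and review them first. The function below is a hypothetical helper, not part of Oracle's tooling. It applies roughly the same selection as the cleanup command above: processes owned by grid/oracle, or whose command looks like ohasd/cssd/crsd. It reads `ps -ef` output from stdin and prints only the PID column. It assumes the standard Linux `ps -ef` layout, where the command starts at column 8.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print PIDs of leftover Grid/Oracle processes.
# Input: `ps -ef` output on stdin (UID PID PPID C STIME TTY TIME CMD...).
stale_pids() {
  awk '
    NR == 1 && $1 == "UID" { next }              # skip the header line
    $1 == "grid" || $1 == "oracle" { print $2; next }
    # $8 is the executable, $9 its first argument (catches "/bin/sh .../init.ohasd run")
    $8 ~ /ohasd|cssd|crsd/ || $9 ~ /ohasd|cssd|crsd/ { print $2 }
  '
}
```

Review the list first with `ps -ef | stale_pids`. Then kill with `ps -ef | stale_pids | xargs -r kill -9`, run as root. `-r` is the GNU xargs option that skips running `kill` when the list is empty.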

2.2. Phase 2: Start the cluster services (CRS), one node at a time

Scenario 1: normal startup (no CRS-4124 error)

- Start CRS on the primary node (e.g. 239):

```bash
# Run as root
crsctl start crs
# Check the core CRS services (all 4 components must be online)
crsctl check crs
```

```
[root@wx-ncdb-90-239 ohasd]# crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
```

- Start CRS on the secondary node (e.g. 238) once the primary node's services are stable (about 3 minutes later):

```bash
crsctl start crs
crsctl check crs
```

```
[root@wx-ncdb-90-238 wx-ncdb-90-238]# crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
```

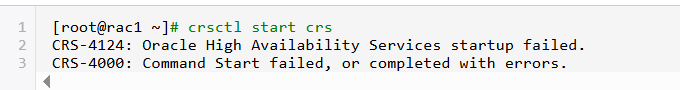
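When this check is scripted, for example as part of a post-boot health check, a small filter over the `crsctl check crs` output is enough. Here is a minimal sketch. It assumes the four message IDs shown above (CRS-4638, CRS-4537, CRS-4529, CRS-4533) and their "is online" wording. `crs_all_online` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if `crsctl check crs` output (on stdin)
# reports all four core services online.
crs_all_online() {
  local input id
  input=$(cat)
  for id in CRS-4638 CRS-4537 CRS-4529 CRS-4533; do
    # Each expected message must be present and end in "is online".
    if ! printf '%s\n' "$input" | grep -q "^${id}: .* is online[[:space:]]*\$"; then
      echo "not online or missing: ${id}" >&2
      return 1
    fi
  done
  return 0
}
```

Usage: `crsctl check crs | crs_all_online && echo "CRS stack is up"`. A message ID that is missing counts as a failure. This catches outputs such as "CRS-4535: Cannot communicate with Cluster Ready Services".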

场景 2:触发 CRS-4124 报错(OHAS 启动失败,博客 BUG 解决方案)

若执行

crsctl start crs报CRS-4124,则需通过init.ohasd脚本强制启动 OHAS:

- 查找并执行

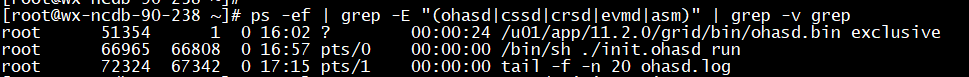

init.ohasd脚本:# root用户执行 find / -name init.ohasd # 定位脚本路径(默认/etc/rc.d/init.d/init.ohasd)[root@wx-ncdb-90-238 ohasd]# find / -name init.ohasd /etc/rc.d/init.d/init.ohasd /u01/app/11.2.0/grid/crs/init/init.ohasd /u01/app/11.2.0/grid/crs/utl/init.ohasd [root@wx-ncdb-90-238 ohasd]#

cd /etc/rc.d/init.d/ nohup ./init.ohasd run & # 后台启动OHAS,输出重定向到nohup.outtail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-238/ohasd/ohasd.log

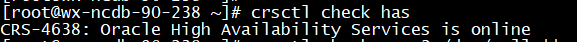

- Check the OHAS status, then start CRS:

```bash
crsctl check has    # expect "CRS-4638: Oracle High Availability Services is online"
crsctl start crs    # the CRS core services should now start; be patient, this takes several minutes
# Follow the logs while the stack starts
tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-238/ohasd/ohasd.log
tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-238/cssd/ocssd.log
tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-238/crsd/crsd.log
```

Sample log output (node 239):

```
[root@wx-ncdb-90-239 ohasd]# tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-239/ohasd/ohasd.log
2026-01-18 10:28:33.916: [UiServer][574560000]{0:0:1969} Done for ctx=0x7fdcd000ab30
2026-01-18 10:29:33.900: [UiServer][572458752] CS(0x7fdcc40008e0)set Properties ( grid,0x7fdcf40a0aa0)
2026-01-18 10:29:33.911: [UiServer][574560000]{0:0:1970} Sending message to PE. ctx= 0x7fdcd00097a0, Client PID: 75616
2026-01-18 10:29:33.911: [ CRSPE][576661248]{0:0:1970} Processing PE command id=2011. Description: [Stat Resource : 0x7fdccc0a33c0]
2026-01-18 10:29:33.914: [UiServer][574560000]{0:0:1970} Done for ctx=0x7fdcd00097a0
^C
[root@wx-ncdb-90-239 ohasd]# tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-239/cssd/ocssd.log
2026-01-18 10:30:39.783: [ CSSD][1793013504]clssnmSendingThread: sent 5 status msgs to all nodes
2026-01-18 10:30:43.785: [ CSSD][1793013504]clssnmSendingThread: sending status msg to all nodes
2026-01-18 10:30:43.785: [ CSSD][1793013504]clssnmSendingThread: sent 4 status msgs to all nodes
2026-01-18 10:30:48.787: [ CSSD][1793013504]clssnmSendingThread: sending status msg to all nodes
2026-01-18 10:30:48.787: [ CSSD][1793013504]clssnmSendingThread: sent 5 status msgs to all nodes
^C
[root@wx-ncdb-90-239 ohasd]# tail -f -n 5 /u01/app/11.2.0/grid/log/wx-ncdb-90-239/crsd/crsd.log
2026-01-18 10:30:43.168: [UiServer][2730448640] CS(0x7f7e34009b10)set Properties ( grid,0x7f7e7815bec0)
2026-01-18 10:30:43.178: [UiServer][2732549888]{2:54573:1507} Sending message to PE. ctx= 0x7f7e40008b00, Client PID: 75616
2026-01-18 10:30:43.179: [ CRSPE][2734651136]{2:54573:1507} Processing PE command id=1558. Description: [Stat Resource : 0x7f7e3c0075f0]
2026-01-18 10:30:43.179: [ CRSPE][2734651136]{2:54573:1507} Expression Filter : ((NAME == ora.scan1.vip) AND (LAST_SERVER == wx-ncdb-90-239))
2026-01-18 10:30:43.182: [UiServer][2732549888]{2:54573:1507} Done for ctx=0x7f7e40008b00
```
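A bounded polling loop can wait for OHAS to come up, instead of re-running `crsctl check has` by hand. This is a sketch. The helper name `wait_for_output` and the timeout and interval values in the usage line are illustrative choices, not Oracle defaults.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a command until its output matches a grep pattern.
# Usage: wait_for_output <timeout_secs> <interval_secs> <grep_pattern> <cmd> [args...]
# Returns 0 on match, 1 on timeout, 2 if the interval is not a positive number.
wait_for_output() {
  local timeout=$1 interval=$2 pattern=$3 waited=0
  shift 3
  [ "$interval" -gt 0 ] || return 2
  while [ "$waited" -le "$timeout" ]; do
    # Ignore stderr so error chatter does not clutter the loop.
    if "$@" 2>/dev/null | grep -q -- "$pattern"; then
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 1
}
```

Usage: `wait_for_output 600 10 'CRS-4638: .* is online' "$GRID_HOME/bin/crsctl" check has && "$GRID_HOME/bin/crsctl" start crs`. This waits up to about 10 minutes, checking every 10 seconds, and starts CRS only once OHAS reports online.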

- Cluster service layer: check all nodes at once:

```
[root@wx-ncdb-90-239 ~]# crsctl check cluster -all
**************************************************************
wx-ncdb-90-238:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
wx-ncdb-90-239:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
```
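Each node contributes several lines, so it is easy to miss a single service that is not online. The awk sketch below reads `crsctl check cluster -all` output. It prints each node that has any CRS-xxxx line not ending in "is online". It assumes the usual layout of that command: a `<node>:` line followed by that node's CRS messages, with rows of asterisks between nodes. `cluster_nodes_not_ready` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl check cluster -all` on stdin and print
# every node with a CRS-xxxx line that does not end in "is online".
# Exit status: 0 if all nodes are fine, 1 otherwise.
cluster_nodes_not_ready() {
  awk '
    BEGIN { n = 0 }
    # A node header looks like "wx-ncdb-90-238:" (not a CRS- line, not the *** rule).
    /^[^* \t].*:[ \t]*$/ && !/^CRS-/ { node = $0; sub(/:[ \t]*$/, "", node); next }
    # Any CRS- message that is not "... is online" marks the current node as bad.
    /^CRS-/ && !/is online[ \t]*$/ { if (!(node in bad)) { bad[node] = 1; n++; print node } }
    END { exit (n > 0) }
  '
}
```

Usage: `crsctl check cluster -all | cluster_nodes_not_ready || echo "some node is not ready"`.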

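- Configure startup at boot, so that the error does not recur after a reboot:

```bash
vi /etc/rc.d/rc.local
# add the line: nohup /etc/rc.d/init.d/init.ohasd run &
chmod 744 /etc/rc.d/rc.local    # make rc.local executable
```

Refinement: rather than writing directly into rc.local, use the clusterware tool:

```bash
crsctl enable crs    # enable automatic startup of the cluster stack
```

`crsctl enable crs` only sets the autostart flag that Oracle's init scripts read at boot. It does not make the OS launch `init.ohasd run`, which is exactly what the manual workaround above supplies. Both pieces are therefore needed.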
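On systemd-based distributions (for example RHEL/OL 7), `/etc/inittab` no longer launches `init.ohasd run`. This is a commonly reported cause of CRS-4124 with 11.2.0.4. One widely used alternative to rc.local is a small systemd unit. The sketch below is an assumption, not the author's configuration. The unit name `ohas.service`, its options, and the `UNIT_DIR` override are all illustrative; the override exists so the function can be tried safely in a scratch directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal systemd unit that keeps init.ohasd running.
# UNIT_DIR defaults to /etc/systemd/system; override it to test in a scratch dir.
write_ohas_unit() {
  local unit_dir=${UNIT_DIR:-/etc/systemd/system}
  cat > "$unit_dir/ohas.service" <<'EOF'
[Unit]
Description=Oracle High Availability Services
After=syslog.target network.target

[Service]
Type=simple
ExecStart=/etc/rc.d/init.d/init.ohasd run
Restart=always

[Install]
WantedBy=multi-user.target
EOF
}
```

To use it, run as root: `write_ohas_unit && systemctl daemon-reload && systemctl enable ohas.service && systemctl start ohas.service`. If you adopt this approach, remove the rc.local line so that `init.ohasd` is not started twice.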

2.3. Phase 3: Verify the core cluster services (on both nodes)

Core commands (covering all CRS components):

```bash
# Init-level resources (key point: everything except ora.diskmon should be ONLINE)
crsctl stat res -t -init
```

In the sample below, captured on node 238, the STATE column still reads OFFLINE for every resource and no server is listed. The stack had not come up yet at that moment. On a healthy node the STATE column reads ONLINE.

```
[root@wx-ncdb-90-238 ~]# crsctl stat res -t -init
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.asm
      1        ONLINE  OFFLINE
ora.cluster_interconnect.haip
      1        ONLINE  OFFLINE
ora.crf
      1        ONLINE  OFFLINE
ora.crsd
      1        ONLINE  OFFLINE
ora.cssd
      1        ONLINE  OFFLINE
ora.cssdmonitor
      1        ONLINE  OFFLINE
ora.ctssd
      1        ONLINE  OFFLINE
ora.diskmon
      1        OFFLINE OFFLINE
ora.evmd
      1        ONLINE  OFFLINE
ora.gipcd
      1        ONLINE  OFFLINE
ora.gpnpd
      1        ONLINE  OFFLINE
ora.mdnsd
      1        ONLINE  OFFLINE
```

```bash
# Full cluster resource status (including VIPs, listeners, etc.)
crsctl stat res -t
```

```
[root@wx-ncdb-90-238 wx-ncdb-90-238]# crsctl stat res -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.ARCH.dg
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.DATA.dg
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.LISTENER.lsnr
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.OCRVOTE.dg
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.SYSTEM.dg
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.asm
               ONLINE  ONLINE       wx-ncdb-90-238           Started
               ONLINE  ONLINE       wx-ncdb-90-239           Started
ora.gsd
               OFFLINE OFFLINE      wx-ncdb-90-238
               OFFLINE OFFLINE      wx-ncdb-90-239
ora.net1.network
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
ora.ons
               ONLINE  ONLINE       wx-ncdb-90-238
               ONLINE  ONLINE       wx-ncdb-90-239
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       wx-ncdb-90-239
ora.cvu
      1        ONLINE  ONLINE       wx-ncdb-90-239
ora.oc4j
      1        ONLINE  ONLINE       wx-ncdb-90-239
ora.orcl.db
      1        ONLINE  ONLINE       wx-ncdb-90-238           Open
      2        ONLINE  ONLINE       wx-ncdb-90-239           Open
ora.scan1.vip
      1        ONLINE  ONLINE       wx-ncdb-90-239
ora.wx-ncdb-90-238.vip
      1        ONLINE  ONLINE       wx-ncdb-90-238
ora.wx-ncdb-90-239.vip
      1        ONLINE  ONLINE       wx-ncdb-90-239
```
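On two nodes you can scan `crsctl stat res -t` by eye, but the check "TARGET is ONLINE yet STATE is not" is easy to automate. This sketch assumes the 11.2 tabular layout:

- Each resource has a name line starting with `ora.`.
- Indented lines follow the name line. For cluster resources they begin with the instance number; for local resources they begin with TARGET.

Resources whose TARGET is OFFLINE, such as ora.gsd and ora.diskmon, are skipped by design. The same function works on `-init` output. `resources_not_online` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl stat res -t` (or `-t -init`) output on stdin
# and print "<resource> <server>" for each entry with TARGET=ONLINE but STATE
# not ONLINE ("-" when no server is listed). Returns 1 if anything was printed.
resources_not_online() {
  awk '
    BEGIN { bad = 0 }
    /^ora\./   { name = $1; next }      # resource name line
    /^[^ \t]/  { name = ""; next }      # headers, dashed rules, section titles
    name != "" && NF >= 2 {
      if ($1 ~ /^[0-9]+$/) { target = $2; state = $3; where = $4 }   # cluster resource
      else                 { target = $1; state = $2; where = $3 }   # local resource
      if (where == "") where = "-"
      if (target == "ONLINE" && state != "ONLINE") { print name, where; bad++ }
    }
    END { exit (bad > 0) }
  '
}
```

Usage: `crsctl stat res -t | resources_not_online`. On the healthy output above it prints nothing and returns 0. On the `-init` sample captured earlier it would list every resource except ora.diskmon.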

```bash
# Verify OCR (Oracle Cluster Registry) integrity (no corruption reported)
ocrcheck
```

```
[root@wx-ncdb-90-238 wx-ncdb-90-238]# ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       2988
         Available space (kbytes) :     259132
         ID                       : 1283645573
         Device/File Name         :   +OCRVOTE
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check succeeded
```
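The same idea applies to `ocrcheck`. One detail is worth encoding. When `ocrcheck` runs as a non-root user, the logical corruption check is reported as bypassed rather than performed. The sketch below therefore requires "Logical corruption check succeeded" only when that check actually ran. `ocr_healthy` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if `ocrcheck` output (on stdin) shows a healthy OCR.
ocr_healthy() {
  local input
  input=$(cat)
  # The overall registry integrity check must have passed.
  printf '%s\n' "$input" | grep -q 'Cluster registry integrity check succeeded' || return 1
  # The logical corruption check runs only as root; a "bypassed" line is not a failure.
  if printf '%s\n' "$input" | grep 'Logical corruption check' | grep -qv 'bypassed'; then
    printf '%s\n' "$input" | grep -q 'Logical corruption check succeeded' || return 1
  fi
  return 0
}
```

Usage: `ocrcheck | ocr_healthy && echo "OCR OK"`.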

```bash
# Verify inter-node cluster membership (both nodes Active)
su - grid
olsnodes -s -n
```

```
[grid@wx-ncdb-90-238 ~]$ olsnodes -s -n
wx-ncdb-90-238  1       Active
wx-ncdb-90-239  2       Active
```
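The columns of `olsnodes -s -n` output are node name, node number and status. A check therefore only needs the third column plus a node count. The sketch below takes an expected node count argument that defaults to 2; that default is an assumption for this two-node cluster. Feed it the raw command output, without a pasted shell prompt line. `all_nodes_active` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `olsnodes -s -n` output (name, number, status) on stdin.
# Fails if any node is not Active or if fewer than the expected nodes are listed.
all_nodes_active() {
  local expected=${1:-2}
  awk -v expected="$expected" '
    BEGIN { total = 0; bad = 0 }
    NF >= 3 {
      total++
      if ($3 != "Active") { print "not active: " $1; bad++ }
    }
    END {
      if (total < expected + 0) print "only " total " node(s) listed"
      exit (bad > 0 || total < expected + 0)
    }
  '
}
```

Usage: `olsnodes -s -n | all_nodes_active 2`.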

Healthy state:

ora.cssd, ora.crsd, ora.gpnpd, ora.gipcd and ora.evmd are all ONLINE, and olsnodes reports both nodes as Active.

2.4. Phase 4: Start and verify ASM (as the grid user, on both nodes)

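ASM is the storage core of RAC and is managed by the grid user. Make sure the instance is started and the disk groups are mounted. In 11gR2 the clusterware stack normally starts ASM by itself (ora.asm is one of the init resources above). The manual steps below are needed only when ASM or a disk group is still down.

- Start the ASM instance and mount the disk groups:

```
su - grid
sqlplus / as sysasm
SQL> startup;
SQL> alter diskgroup all mount;
SQL> select name, state from v$asm_diskgroup;
SQL> exit;
```

`startup` starts the ASM instance. `alter diskgroup all mount` mounts all ASM disk groups, including the OCR/voting and data groups. The query should show STATE=MOUNTED for every disk group. The cluster-aware equivalent for starting ASM is `srvctl start asm -n <node_name>`.

- Verify the ASM resource at the CRS level:

```bash
srvctl status asm -n <node_name>    # e.g. srvctl status asm -n wx-ncdb-90-239
# expected output: "ASM is running on wx-ncdb-90-239"
```

- Verify with asmcmd:

```
[grid@wx-ncdb-90-239 ~]$ asmcmd lsdg
State    Type    Rebal  Sector  Block       AU  Total_MB  Free_MB  Req_mir_free_MB  Usable_file_MB  Offline_disks  Voting_files  Name
MOUNTED  EXTERN  N         512   4096  1048576    204800   182511                0          182511              0             N  ARCH/
MOUNTED  EXTERN  N         512   4096  1048576   1638400  1133900                0         1133900              0             N  DATA/
MOUNTED  NORMAL  N         512   4096  1048576     61440    60514            20480           20017              0             Y  OCRVOTE/
MOUNTED  EXTERN  N         512   4096  1048576    204800      720                0             720              0             N  SYSTEM/
```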
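`asmcmd lsdg` also reports capacity, so it is worth checking free space at the same time. The sketch below prints any disk group that is not MOUNTED or whose Free_MB/Total_MB falls below a threshold. `MIN_FREE_PCT` (default 10) is a hypothetical threshold. The column positions assume the 11.2 layout shown above, where Name is column 13. A dismounted disk group may simply be absent from plain `lsdg` output, so this does not replace checking the list of expected names. `check_diskgroups` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `asmcmd lsdg` output on stdin and flag disk groups
# that are not MOUNTED or have less than MIN_FREE_PCT percent free.
check_diskgroups() {
  awk -v min="${MIN_FREE_PCT:-10}" '
    BEGIN { bad = 0 }
    NR == 1 && $1 == "State" { next }          # header line
    NF >= 13 {
      name = $13; sub(/\/$/, "", name)         # "DATA/" -> "DATA"
      if ($1 != "MOUNTED") {
        print name ": state " $1; bad++
      } else if ($7 > 0 && $8 * 100 / $7 < min + 0) {
        printf "%s: %.1f%% free\n", name, $8 * 100 / $7; bad++
      }
    }
    END { exit (bad > 0) }
  '
}
```

Given the output above, it prints `SYSTEM: 0.4% free`. The SYSTEM disk group has 720 MB free out of 204800 MB.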

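2.5. Phase 5: Start and verify the RAC database instances (as the oracle user)

Starting the database with the srvctl tool is recommended, so that the instances on both nodes stay in step with the cluster:

- Start the database (instances on both nodes together):

```bash
su - oracle
srvctl config database                  # list configured databases to get the name, e.g. orcl
srvctl start database -d <db_name>      # start the whole RAC database
```

- Verify the database status:

```
# Cluster level: the instances on both nodes must be running
srvctl status database -d <db_name>
# Instance level
sqlplus / as sysdba
SQL> select instance_name, status from v$instance;
SQL> select inst_id, instance_name, status from gv$instance;
```

v$instance should show STATUS=OPEN for the local instance. gv$instance should show both instances OPEN.

- (Optional) Start one node's instance on its own:

```bash
srvctl start instance -d <db_name> -i <instance_name>    # e.g. srvctl start instance -d orcl -i orcl1
```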
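`srvctl status database` prints one line per instance, for example `Instance orcl1 is running on node wx-ncdb-90-238`. Its result can be checked the same way as the earlier commands. The sketch below uses the hypothetical name `db_instances_running`. It also fails if no instance lines are found, which catches a mistyped database name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `srvctl status database -d <db>` output on stdin.
# Fails if any instance "is not running" or if no instance lines are present.
db_instances_running() {
  awk '
    BEGIN { up = 0; bad = 0 }
    /^Instance .* is not running/ { print "not running: " $2; bad++; next }
    /^Instance .* is running/     { up++ }
    END { exit (bad > 0 || up == 0) }
  '
}
```

Usage: `srvctl status database -d orcl | db_instances_running && echo "all instances up"`.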

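2.6. Phase 6: Final layer-by-layer check

| Layer | Command and key point | Healthy state |
|---|---|---|
| Application | `sqlplus / as sysdba` → `select * from gv$instance;` | Both instances OPEN |
| Cluster resources | `crsctl stat res -t` | No key resource (e.g. .db, .vip, .lsnr) is OFFLINE or UNKNOWN |
| Cluster services | `crsctl check cluster -all` | Every node reports CRS-4537/CRS-4529/CRS-4533 "is online" |
| Network (node membership) | `olsnodes -s -n` | All nodes Active, with the expected node numbers (1, 2) |
| Storage | `asmcmd lsdg` | All disk groups State=MOUNTED |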

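3. Summary of key status checks (by component)

| Component | Command | Healthy state |
|---|---|---|
| CRS core services | `crsctl check crs` | All 4 components online |
| Cluster resources | `crsctl stat res -t` / `crsctl stat res -t -init` | No core resource OFFLINE/INTERMEDIATE (ora.gsd and ora.diskmon are normally OFFLINE, as in the output above) |
| OCR integrity | `ocrcheck` | No "corrupt" or "unavailable" messages |
| Node communication | `olsnodes -s -n` | Both nodes Active |
| ASM | `sqlplus / as sysasm` → `select name, state from v$asm_diskgroup;` | Disk groups STATE=MOUNTED |
| Database instances | `srvctl status database -d <db_name>` / `gv$instance` | Both instances running / OPEN |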

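4. Common problems and fixes (from the blog plus hands-on experience)

| Symptom | Root cause | Fix |
|---|---|---|
| CRS-4124: OHAS fails to start | OHAS process hung / misconfigured | Run `nohup /etc/rc.d/init.d/init.ohasd run &` to start OHAS manually, and configure it to start at boot |
| ASM startup fails with ORA-29701 | CSSD is not running | Start CSSD first with `crsctl start crs`, then start ASM |
| ASM disk group cannot be mounted (STATE=DISMOUNTED) | Storage path failure / wrong disk permissions | Check ownership of `/dev/asm*` (grid:oinstall in this setup; many installs use grid:asmadmin) and verify connectivity to the storage array |
| Database instance fails to start | ASM disk group not mounted / OCR corrupted | Remount the ASM disk group; check the OCR with `ocrcheck` and, if it is corrupt, restore it from a backup (`ocrconfig -showbackup`, then `ocrconfig -restore`) |
| Inter-node communication fails (abnormal olsnodes status) | Private network misconfigured / blocked by firewall | Verify the private interconnect with `oifcfg getif`; disable the firewall or open the cluster ports (e.g. 2484/42424) |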

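5. Core principles and pitfalls

- Never change the startup order: strictly follow "OHAS → CRS → ASM → database", start the primary node before the secondary node, and never go in reverse;

- Verify status at every stage: check the status after each stage starts, so that an unnoticed problem does not make later components fail;

- Prefer the cluster tools: use `crsctl` for CRS and `srvctl` for ASM and the database. Starting them manually in sqlplus can leave the clusterware's view of the resources out of sync;

- Prevent the bug up front: on 11g RAC, configure `init.ohasd` to start at boot so that CRS-4124 does not return after a reboot;

- Start from the logs: when a startup fails, read the log of the failing component first. The OHAS log is `$GRID_HOME/log/<node_name>/ohasd/ohasd.log` and the clusterware alert log is `$GRID_HOME/log/<node_name>/alert<node_name>.log`. In 11g the ASM alert log lives under the ADR, typically `$ORACLE_BASE/diag/asm/+asm/<ASM_SID>/trace/alert_<ASM_SID>.log` for the grid user.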

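6. Summary

Starting a two-node Oracle 11g RAC comes down to three things: follow the dependency order, coordinate the two nodes, and close the loop with status checks. When a typical bug such as CRS-4124 appears, OHAS can be started manually through the `init.ohasd` script. Throughout, the CRS core services, ASM disk groups and database instances must all be ONLINE. The final confirmation of overall cluster health comes from commands such as `crsctl stat res -t` and `gv$instance`. Only then can RAC deliver its high-availability benefits.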

posted on


Zhejiang public security filing No. 33010602011771
