Install a k8s cluster with one pure-shell script (three masters that also serve as nodes)

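Notes

If it can be automated, don't do it by hand.

If everything goes well, the k8s cluster is up in about 10 minutes. Read through the script first: several packages are expected to be downloaded in advance, so copy them onto the machine before running it. Recommended OS: CentOS Linux release 7.9.2009 (Core); recommended kernel: 4.4.245-1.el7.elrepo.x86_64 or later. Scripts for worker nodes and for initializing the system environment will follow.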
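Because the script assumes the packages are already on the machine, it can help to check for them before starting. The following is only a minimal sketch: the function name `check_prereq_files` is hypothetical, and the example path is the one named in the script's own comments.

```shell
# Hypothetical helper: report which of the given files are missing or not executable.
# Prints one line per problem and returns non-zero if any file fails the check.
check_prereq_files() {
    local dir=$1; shift
    local missing=0 f
    for f in "$@"; do
        if [ ! -x "$dir/$f" ]; then
            echo "missing or not executable: $dir/$f"
            missing=1
        fi
    done
    return "$missing"
}

# Example call (directory and file names taken from the script's comments):
# check_prereq_files /opt/data/kubernetes/server/bin \
#     kube-apiserver kube-controller-manager kube-proxy kubectl kubelet kube-scheduler
```

A non-zero return value means at least one file still has to be uploaded or given execute permission.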

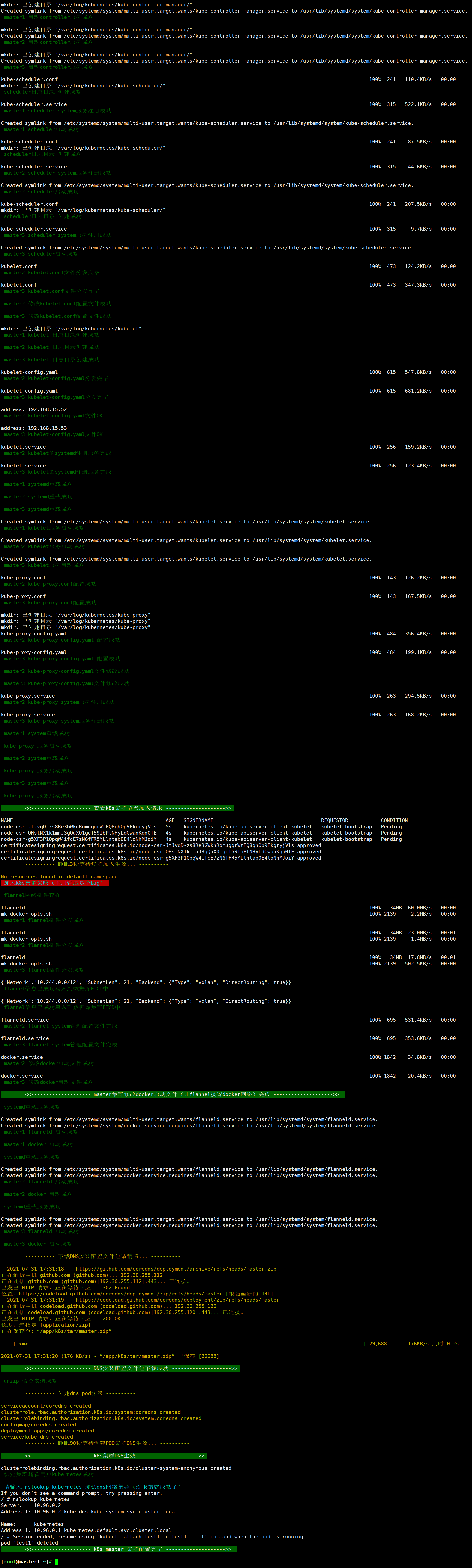

Screenshot of a successful run

Shell script: one-step k8s cluster install (three masters that also serve as nodes)

#!/usr/bin/env bash

# Author:xiaolang

# Blog:https://www.cnblogs.com/xiaolang666

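# Enable kubectl bash completion with: source <(kubectl completion bash)

# Set up passwordless SSH between the master nodes by hand (each master must also be able to SSH to itself); Docker must likewise be installed and running beforehand

# Required environment variables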

m1=192.168.15.201

m2=192.168.15.202

m3=192.168.15.203

kube_vip=192.168.15.200

# Internal network interface (used by keepalived)

keeaplived_interface=ens33

# More master nodes can be listed here

master_array=(

192.168.15.201

192.168.15.202

192.168.15.203

)

# Make sure kube-apiserver, kube-controller-manager, kube-proxy, kubectl, kubelet and kube-scheduler are in /opt/data/kubernetes/server/bin/ and are executable (chmod +x ...)

# Upload the network plugin flannel-v0.11.0-linux-amd64.tar.gz to /opt/data

# Remember to add the host mappings to /etc/hosts

# Downloading the certificate tools by hand is recommended, since the download sometimes fails

# mkdir -p /app/k8s/{tar,bin}/

#wget -P /app/k8s/bin/ https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

#wget -P /app/k8s/bin/ https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# Helper: print the message and abort the run if the step failed

function judge() {

if [ "$2" -eq 0 ];then

echo -e "\033[32m $1 \033[0m \n"

else

echo -e "\033[41;36m $1 \033[0m \n"

exit 1

fi

}
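
# Optional sketch, not part of the original script: since downloads sometimes fail,
# a small retry wrapper can re-run a command a few times before giving up.
# The name "retry" and its argument order are illustrative assumptions.
# Usage: retry <attempts> <delay_seconds> <command> [args...]
function retry() {
    local attempts=$1 delay=$2 n=1
    shift 2
    # Re-run the command until it succeeds or the attempt budget is used up
    until "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
    return 0
}
# Example: retry 3 5 wget -P /app/k8s/tar/ https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz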

# 1. Install the certificate tools (cfssl)

function install_certificate(){

# Create the directories for k8s packages and binaries (mkdir -p is a no-op if they already exist)

mkdir -p /app/k8s/{tar,bin}/

# Download the certificate tools

#wget -P /app/k8s/bin/ https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

#res=$?

#judge 'Downloaded the cfssl package' $res

#wget -P /app/k8s/bin/ https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

#res=$?

#judge 'Downloaded the cfssljson package' $res

# Make the binaries executable

chmod +x /app/k8s/bin/*

# Rename the binaries

mv /app/k8s/bin/cfssljson* /app/k8s/bin/cfssljson && mv /app/k8s/bin/cfssl_* /app/k8s/bin/cfssl

# Move them into the local command directory

mv /app/k8s/bin/* /usr/local/sbin/

# Verify

. /etc/profile

cfssl version &> /dev/null

res=$?

judge 'cfssl command is available' $res

}

# 2. Create the etcd cluster root certificate

function create_cluster_base_cert(){

# Create the CA directory

mkdir -p /opt/cert/ca/

# Write the JSON signing config for the etcd cluster root certificate

cat > /opt/cert/ca/ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

# Write the certificate signing request (CSR) for the etcd root CA

cat > /opt/cert/ca/ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}]

}

EOF

# Generate the root certificate

cd /opt/cert/ca/ && cfssl gencert -initca ca-csr.json | cfssljson -bare ca - && judge 'etcd root certificate generated' $? || judge 'etcd root certificate generation failed' $?

}

# Deploy the etcd cluster (on the three master nodes)

function etcd_deploy(){

# Download or upload the package into /app/k8s/tar

cd /app/k8s/tar

# Download the etcd package

echo -e "\033[33m \t---------- Downloading the etcd package, please wait.... ----------\t \033[0m\n" &&

wget -P /app/k8s/tar/ https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz

judge 'etcd package downloaded' $?

# Unpack, then distribute to the master nodes

tar xf etcd-v3.3.24-linux-amd64.tar.gz -C /app/k8s/bin/

# Distribute the etcd binaries to every node

echo -e "\033[33m \t---------- Deploying the etcd server and client to all cluster nodes.... ----------\t \033[0m\n"

for i in ${master_array[*]}

do

scp /app/k8s/bin/etcd-v3.3.24-linux-amd64/etcd* root@$i:/usr/local/sbin/

done

# Check that etcd was installed

. /etc/profile

etcd --version && judge 'etcd installed' $? || judge 'etcd installation failed' $?

}

# Create the etcd certificate

function create_etcd_cert(){

# Create the etcd certificate directory (-p so that a re-run does not fail)

mkdir -p /opt/cert/etcd

cat > /opt/cert/etcd/etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"$m1",

"$m2",

"$m3",

"$kube_vip"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}

]

}

EOF

# Generate the etcd certificate

cd /opt/cert/etcd/ && cfssl gencert -ca=../ca/ca.pem -ca-key=../ca/ca-key.pem -config=../ca/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

judge 'etcd certificate generated' $?

# Distribute the certificates

for ip in ${master_array[*]}

do

ssh root@${ip} "mkdir -p /etc/etcd/ssl"

scp -q /opt/cert/ca/ca*.pem root@${ip}:/etc/etcd/ssl

ca_cert=$?

scp -q /opt/cert/etcd/etcd*.pem root@${ip}:/etc/etcd/ssl

etcd_cert=$?

if [ $ca_cert -eq 0 ] && [ $etcd_cert -eq 0 ];then

echo -e "\033[32m $ip CA and etcd certificates distributed \033[0m \n"

else

echo -e "\033[41;36m $ip failed to distribute the CA and etcd certificates \033[0m \n"

exit 1

fi

done

for ip in ${master_array[*]}

do

# Capture the output of the remote command

cert_num=$(ssh root@$ip "ls /etc/etcd/ssl/ | wc -l")

if [ "$cert_num" -eq 4 ];then

echo -e "\033[32m $ip certificate distribution verified \033[0m \n"

else

echo -e "\033[41;36m $ip certificate distribution check failed \033[0m \n"

exit 1

fi

done

}

# Generate the script that registers the etcd service

function etcd_script(){

cat > /app/k8s/bin/etcd_servie.sh << ENDOF

# Create the etcd config directory

mkdir -p /etc/kubernetes/conf/etcd

INITIAL_CLUSTER=node-1=https://${m1}:2380,node-2=https://${m2}:2380,node-3=https://${m3}:2380

# Register etcd as a systemd service

cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/sbin/etcd \\

--name \$1 \\

--cert-file=/etc/etcd/ssl/etcd.pem \\

--key-file=/etc/etcd/ssl/etcd-key.pem \\

--peer-cert-file=/etc/etcd/ssl/etcd.pem \\

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \\

--trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://\${2}:2380 \\

--listen-peer-urls https://\${2}:2380 \\

--listen-client-urls https://\${2}:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://\${2}:2379 \\

--initial-cluster-token etcd-cluster \\

--initial-cluster \${INITIAL_CLUSTER} \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

ENDOF

# Make the generated script executable

chmod +x /app/k8s/bin/etcd_servie.sh

}
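
# Optional sketch, not part of the original script: the INITIAL_CLUSTER value in
# etcd_script is hard-coded for three masters. Assuming members are named node-1,
# node-2, ... in list order (as the script does), the string can be derived from any
# list of master IPs. The function name build_initial_cluster is hypothetical.
function build_initial_cluster() {
    local idx=1 result="" ip
    for ip in "$@"; do
        # Prefix a comma only when result already holds an earlier member
        result+="${result:+,}node-${idx}=https://${ip}:2380"
        idx=$((idx + 1))
    done
    echo "$result"
}
# Example: INITIAL_CLUSTER=$(build_initial_cluster "${master_array[@]}")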

# Run the etcd script on every master

function batch_execute_etcd_script(){

# Register the etcd service on each master

for ip in ${master_array[*]}

do

if [ $ip == $m1 ];then

scp /app/k8s/bin/etcd_servie.sh root@$ip:/root/

ssh root@$ip "bash /root/etcd_servie.sh node-1 $m1"

judge 'node-1 etcd unit file written' $?

elif [ $ip == $m2 ];then

scp /app/k8s/bin/etcd_servie.sh root@$ip:/root/

ssh root@$ip "bash /root/etcd_servie.sh node-2 $m2"

judge 'node-2 etcd unit file written' $?

elif [ $ip == $m3 ];then

scp /app/k8s/bin/etcd_servie.sh root@$ip:/root/

ssh root@$ip "bash /root/etcd_servie.sh node-3 $m3"

judge 'node-3 etcd unit file written' $?

fi

done

# Start etcd

for ip in ${master_array[*]}

do

ssh root@$ip "systemctl daemon-reload && systemctl enable etcd && systemctl start etcd"

judge "$ip etcd service start requested" $?

done

# Check the service status

echo -e "\033[42;37m \t<<-------------------- Checking whether etcd started successfully -------------------->> \033[0m \n"

sleep 2

for ip in ${master_array[*]}

do

ssh root@$ip 'systemctl status etcd &> /dev/null'

etcd_status_code=$?

if [ "$etcd_status_code" -eq 0 ];then

echo -e "\033[32m $ip etcd started \033[0m \n"

else

echo -e "\033[41;36m $ip etcd failed to start; try removing the cached files under /var/lib/etcd/ or check that the registered etcd unit file is correct \033[0m \n"

exit 1

fi

done

}

# 2021/07/20: everything above works

# Test the etcd cluster

function test_etcd(){

ETCDCTL_API=3 etcdctl \

--cacert=/etc/etcd/ssl/ca.pem \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

--endpoints="https://${m1}:2379,https://${m2}:2379,https://${m3}:2379" \

endpoint status --write-out='table'

judge 'etcd cluster members can communicate' $?

}

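# 2021/7/21: everything above has been tested

# Create the CA certificate for the master nodes

function create_master_ca_cert(){

# Create the directory for the k8s cluster root certificate

mkdir /opt/cert/k8s/ -p

cd /opt/cert/k8s/

# Write the signing config for the k8s cluster root CA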

cat > /opt/cert/k8s/ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

# Write the certificate signing request (CSR) for the k8s cluster root CA

cat > /opt/cert/k8s/ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

# Generate the k8s root certificate and private key

cd /opt/cert/k8s/ &&

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

judge 'k8s root certificate and key generated' $?

}

# Create the kube-apiserver certificate

function create_kube_apiserver_cert(){

# Write the apiserver CSR JSON

cd /opt/cert/k8s/

cat > /opt/cert/k8s/server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"$m1",

"$m2",

"$m3",

"$kube_vip",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

# Generate the apiserver certificate and private key

cd /opt/cert/k8s/ && cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

judge 'apiserver certificate and key generated' $?

}

# Create the kube-controller-manager certificate

function create_kube_controller_manager_cert(){

cd /opt/cert/k8s/ &&

# Write the controller-manager CSR JSON

cat > /opt/cert/k8s/kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"$m1",

"$m2",

"$m3",

"$kube_vip"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

# Generate the controller-manager certificate and private key

cd /opt/cert/k8s/ &&

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

judge 'controller-manager certificate and key generated' $?

}

# Create the kube-scheduler certificate

function create_kube_scheduler_cert(){

cd /opt/cert/k8s/

cat > /opt/cert/k8s/kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"$m1",

"$m2",

"$m3",

"$kube_vip"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

# Generate the kube-scheduler certificate and private key

cd /opt/cert/k8s/

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

judge 'kube-scheduler certificate and key generated' $?

}

# Create the kube-proxy certificate

function create_kube_proxy_cert(){

cd /opt/cert/k8s/

cat > /opt/cert/k8s/kube-proxy-csr.json << EOF

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:kube-proxy",

"OU":"System"

}

]

}

EOF

# Generate the kube-proxy certificate and private key

cd /opt/cert/k8s/ &&

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

judge 'kube-proxy certificate and key generated' $?

}

# Create the cluster administrator (kubectl) certificate

function create_cluster_kubectl_cert(){

cd /opt/cert/k8s/ &&

cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

# Generate the admin (kubectl) certificate and private key

cd /opt/cert/k8s/ &&

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

judge 'admin (kubectl) certificate and key generated' $?

}

# Distribute the k8s cluster certificates

function issue_k8s_cert(){

mkdir -pv /etc/kubernetes/ssl

cp -p /opt/cert/k8s/{ca*pem,server*pem,kube-controller-manager*pem,kube-scheduler*.pem,kube-proxy*pem,admin*.pem} /etc/kubernetes/ssl/

for i in ${master_array[*]}

do

if [ "$i" != "$m1" ];then

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

echo -e "\033[33m \t---------- $i copying the k8s cluster certificates... ----------\t \033[0m\n"

scp -q /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl/

judge "$i k8s cluster certificates copied" $?

fi

done

}

# Distribute the k8s server binaries to every master

function issue_k8s_server(){

mkdir -p /opt/data/kubernetes/server/bin/

cd /opt/data/kubernetes/server/bin/ &&

# Copy the k8s master components

for i in ${master_array[*]}

do

scp kube-apiserver kube-controller-manager kube-proxy kubectl kubelet kube-scheduler root@$i:/usr/local/sbin/

judge "$i k8s components distributed" $?

done

}

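# Create the kube-controller-manager.kubeconfig file for the cluster

function create_controller_manage_conf(){

# Create kube-controller-manager.kubeconfig

# Address of the API server (the VIP, through haproxy on port 8443)

export KUBE_APISERVER="https://$kube_vip:8443"

# Create the directory for the k8s cluster config files

mkdir -pv /etc/kubernetes/cfg/

cd /etc/kubernetes/cfg/ &&

# Set the cluster parameters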

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

judge "controller-manager cluster parameters set" $?

# Set the client credentials

kubectl config set-credentials "kube-controller-manager" \

--client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \

--client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

judge "controller-manager client credentials set" $?

# Set the context (the context ties the cluster entry to the user entry)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-controller-manager" \

--kubeconfig=kube-controller-manager.kubeconfig

judge "controller-manager context set" $?

# Select the default context

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

judge "controller-manager default context selected" $?

echo -e "\033[42;37m \t<<-------------------- kube-controller-manager.kubeconfig for the k8s cluster is complete -------------------->> \033[0m \n"

}

# Create the kube-scheduler.kubeconfig file

function create_scheduler_config(){

# Address of the API server

export KUBE_APISERVER="https://$kube_vip:8443"

cd /etc/kubernetes/cfg/ &&

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

judge "scheduler cluster parameters set" $?

# Set the client credentials

kubectl config set-credentials "kube-scheduler" \

--client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \

--client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

judge "scheduler client credentials set" $?

# Set the context (the context ties the cluster entry to the user entry)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-scheduler" \

--kubeconfig=kube-scheduler.kubeconfig

judge "scheduler context set" $?

# Select the default context

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

judge "scheduler default context selected" $?

}

# Create the kube-proxy.kubeconfig file

function create_proxy_conf(){

export KUBE_APISERVER="https://$kube_vip:8443"

cd /etc/kubernetes/cfg/ &&

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

judge "kube-proxy cluster parameters set" $?

# Set the client credentials

kubectl config set-credentials "kube-proxy" \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

judge "kube-proxy client credentials set" $?

# Set the context (the context ties the cluster entry to the user entry)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-proxy" \

--kubeconfig=kube-proxy.kubeconfig

judge "kube-proxy context set" $?

# Select the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

judge "kube-proxy default context selected" $?

}

# Create the kubeconfig for the cluster administrator (kubectl)

function create_kubectl_conf(){

export KUBE_APISERVER="https://$kube_vip:8443"

cd /etc/kubernetes/cfg/ &&

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=admin.kubeconfig

judge "kubectl cluster parameters set" $?

# Set the client credentials

kubectl config set-credentials "admin" \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--client-key=/etc/kubernetes/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

judge "kubectl client credentials set" $?

# Set the context (the context ties the cluster entry to the user entry)

kubectl config set-context default \

--cluster=kubernetes \

--user="admin" \

--kubeconfig=admin.kubeconfig

judge "kubectl context set" $?

# Select the default context

kubectl config use-context default --kubeconfig=admin.kubeconfig

judge "kubectl default context selected" $?

}

# Distribute the cluster kubeconfig files

function issue_k8s_cluster_conf_file(){

for i in ${master_array[*]}

do

if [ "$i" != "$m1" ];then

ssh root@$i "mkdir -pv /etc/kubernetes/cfg"

scp /etc/kubernetes/cfg/*.kubeconfig root@$i:/etc/kubernetes/cfg/

judge "controller, scheduler, proxy and kubectl kubeconfig files distributed to $i" $?

fi

done

}

# Create the cluster bootstrap token

function create_cluster_token(){

# This only needs to be done once

# The token must be one generated on your own machine

TLS_BOOTSTRAPPING_TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`

cd /opt/cert/k8s &&

cat > /opt/cert/k8s/token.csv << EOF

${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

# Distribute the cluster token, which is used for TLS bootstrapping

for i in ${master_array[*]}

do

scp /opt/cert/k8s/token.csv root@$i:/etc/kubernetes/cfg/

judge "$i cluster token distributed" $?

done

}
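
# Optional sketch, not part of the original script: a sanity check for the line
# written to token.csv. It assumes the layout produced by create_cluster_token, i.e.
# a 32-character lowercase hex token followed by the fixed user, UID and group fields.
# The function name check_token_line is hypothetical.
function check_token_line() {
    local line=$1
    # Regex kept in a variable so the embedded double quotes are matched literally
    local re='^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$'
    [[ $line =~ $re ]]
}
# Example: check_token_line "$(cat /opt/cert/k8s/token.csv)" || echo "unexpected token.csv format"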

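# Deploy the components (install each one so that it runs correctly)

# Install kube-apiserver

function create_apiserver_conf(){

# Create the kube-apiserver config file

# Every master node needs it (it is written here for m1, then copied to the others)

# Get each host's IP address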

# KUBE_APISERVER_IP=`hostname -i`

cat > /etc/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/kube-apiserver/ \\

--advertise-address=${m1} \\

--default-not-ready-toleration-seconds=360 \\

--default-unreachable-toleration-seconds=360 \\

--max-mutating-requests-inflight=2000 \\

--max-requests-inflight=4000 \\

--default-watch-cache-size=200 \\

--delete-collection-workers=2 \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.96.0.0/16 \\

--service-node-port-range=30000-52767 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/etc/kubernetes/cfg/token.csv \\

--kubelet-client-certificate=/etc/kubernetes/ssl/server.pem \\

--kubelet-client-key=/etc/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/etc/kubernetes/ssl/server.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \\

--client-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/kubernetes/k8s-audit.log \\

--etcd-servers=https://${m1}:2379,https://${m2}:2379,https://${m3}:2379 \\

--etcd-cafile=/etc/etcd/ssl/ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"

EOF

}

# Distribute the apiserver config file to m2 and m3

function excute_apiserver_conf(){

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kube-apiserver.conf root@$i:/etc/kubernetes/cfg/

judge "$i apiserver config file copied" $?

# Point the copied apiserver config at this master's own address

ssh $i "sed -i 's#--advertise-address=${m1}#--advertise-address=${i}#g' /etc/kubernetes/cfg/kube-apiserver.conf"

done

}

# Register the kube-apiserver service on every master

function register_apiserver(){

# Every master node needs this unit file

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-apiserver.conf

ExecStart=/usr/local/sbin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=10

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Reload systemd

systemctl daemon-reload

judge "node-1 apiserver service registered" $?

# Distribute the apiserver unit file

for i in $m2 $m3

do

scp /usr/lib/systemd/system/kube-apiserver.service root@$i:/usr/lib/systemd/system/

ssh root@$i "systemctl daemon-reload"

judge "$i systemd reloaded for apiserver" $?

done

# Create the log directory

for i in ${master_array[*]}

do

ssh $i "mkdir /var/log/kubernetes/kube-apiserver/ -pv"

judge "$i apiserver log directory /var/log/kubernetes/kube-apiserver/ created" $?

done

# Start the apiserver service

for i in ${master_array[*]}

do

ssh $i "systemctl enable --now kube-apiserver"

judge "$i apiserver started" $?

done

}

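# Make kube-apiserver highly available

function kube_apiserver_keepalived(){

# Install the high-availability software

# All three master nodes need it

# keepalived + haproxy

for i in ${master_array[*]}

do

# Print locally: inside the single-quoted remote command, $i would expand to nothing

echo -e "\033[33m ---------- $i installing keepalived and haproxy, please wait... ---------- \033[0m"

ssh $i 'yum install -y keepalived haproxy &> /dev/null'

judge "$i keepalived and haproxy installed" $?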

done

}

# Write the keepalived config file

function modifi_keep(){

# The config differs from node to node

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak &&

cd /etc/keepalived/ &&

#KUBE_APISERVER_IP=`hostname -i`

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_kubernetes {

script "/etc/keepalived/check_kubernetes.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface $keeaplived_interface

mcast_src_ip ${m1}

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

$kube_vip

}

}

EOF

for i in $m2 $m3

do

scp /etc/keepalived/keepalived.conf root@$i:/etc/keepalived/

ssh $i "sed -i 's#mcast_src_ip ${m1}#mcast_src_ip ${i}#g' /etc/keepalived/keepalived.conf"

if [ $i == $m2 ];then

ssh root@$i "sed -i 's#priority 100#priority 90#g' /etc/keepalived/keepalived.conf"

judge "$i keepalived priority updated" $?

else

ssh root@$i "sed -i 's#priority 100#priority 80#g' /etc/keepalived/keepalived.conf"

judge "$i keepalived priority updated" $?

fi

done

# Write the health-check script

cat > /etc/keepalived/check_kubernetes.sh <<EOF

#!/bin/bash

function check_kubernetes() {

for ((i=0;i<5;i++));do

apiserver_pid_id=\$(pgrep kube-apiserver)

# Return as soon as a kube-apiserver PID is found

if [[ ! -z \$apiserver_pid_id ]];then

return

else

sleep 2

fi

# Set to 0 so the value stays 0 if all 5 attempts find no process

apiserver_pid_id=0

done

}

# 1:running 0:stopped

check_kubernetes

if [[ \$apiserver_pid_id -eq 0 ]];then

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

# Make the script executable

chmod +x /etc/keepalived/check_kubernetes.sh

# Distribute the health-check script

for i in $m2 $m3

do

scp /etc/keepalived/check_kubernetes.sh root@$i:/etc/keepalived/

judge "$i health-check script distributed" $?

done

# Start keepalived

for i in ${master_array[*]}

do

ssh root@$i "systemctl enable --now keepalived"

judge "$i keepalived started" $?

done

}

# Write the haproxy config to spread the load across the apiservers

function modifi_haproxy(){

cat > /etc/haproxy/haproxy.cfg <<EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server kubernetes-master-01 ${m1}:6443 check inter 2000 fall 2 rise 2 weight 100

server kubernetes-master-02 ${m2}:6443 check inter 2000 fall 2 rise 2 weight 100

server kubernetes-master-03 ${m3}:6443 check inter 2000 fall 2 rise 2 weight 100

EOF

# Distribute the haproxy config and start haproxy

for i in ${master_array[*]}

do

scp /etc/haproxy/haproxy.cfg root@$i:/etc/haproxy/

ssh $i "systemctl enable --now haproxy.service"

judge "$i haproxy started" $?

done

}
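
# Optional sketch, not part of the original script: the three "server" lines of the
# haproxy backend are written out by hand in modifi_haproxy. Assuming the same naming
# (kubernetes-master-01, -02, ...) and the same check options, they could be generated
# from any list of master IPs. The function name haproxy_backend_servers is hypothetical.
function haproxy_backend_servers() {
    local idx=1 ip
    for ip in "$@"; do
        # %02d zero-pads the index so the names match kubernetes-master-01 and so on
        printf 'server kubernetes-master-%02d %s:6443 check inter 2000 fall 2 rise 2 weight 100\n' "$idx" "$ip"
        idx=$((idx + 1))
    done
}
# Example: haproxy_backend_servers "${master_array[@]}" >> /etc/haproxy/haproxy.cfg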

# Set up TLS bootstrapping (the apiserver signs and issues node certificates dynamically, which automates certificate signing)

function deploy_tls(){

export KUBE_APISERVER="https://${kube_vip}:8443"

token=`awk -F"," '{print $1}' /etc/kubernetes/cfg/token.csv`

cd /etc/kubernetes/cfg &&

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig

# Set the client credentials; the token must be the one from the token.csv generated above

kubectl config set-credentials "kubelet-bootstrap" \

--token=$token \

--kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig

# Set the context (the context ties the cluster entry to the user entry)

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig

# Select the default context

kubectl config use-context default --kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig

# Distribute the bootstrap kubeconfig

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg/

judge "$i bootstrap kubeconfig distributed" $?

done

# Create the low-privilege user for TLS bootstrapping

kubectl create clusterrolebinding kubelet-bootstrap \

--clusterrole=system:node-bootstrapper \

--user=kubelet-bootstrap

judge "low-privilege user kubelet-bootstrap created" $?

}

# Deploy kube-controller-manager

function deploy_contorller_manager(){

# Needed on all three master nodes

cat > /etc/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/kube-controller-manager/ \\

--leader-elect=true \\

--cluster-name=kubernetes \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/12 \\

--service-cluster-ip-range=10.96.0.0/16 \\

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--kubeconfig=/etc/kubernetes/cfg/kube-controller-manager.kubeconfig \\

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=10s \\

--horizontal-pod-autoscaler-use-rest-clients=true"

EOF

# Register the service

# Needed on all three master nodes

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/usr/local/sbin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kube-controller-manager.conf $i:/etc/kubernetes/cfg/

judge "$i controller-manager config file copied" $?

scp /usr/lib/systemd/system/kube-controller-manager.service $i:/usr/lib/systemd/system/

judge "$i controller-manager systemd unit copied" $?

done

for i in ${master_array[*]}

do

ssh $i "mkdir -pv /var/log/kubernetes/kube-controller-manager/"

ssh $i "systemctl daemon-reload"

ssh $i "systemctl enable --now kube-controller-manager.service"

judge "$i controller-manager started" $?

done

}

# Deploy kube-scheduler

function deploy_kube_scheduler(){

# Needed on all three machines

cat > /etc/kubernetes/cfg/kube-scheduler.conf << EOF

KUBE_SCHEDULER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/kube-scheduler \\

--kubeconfig=/etc/kubernetes/cfg/kube-scheduler.kubeconfig \\

--leader-elect=true \\

--master=http://127.0.0.1:8080 \\

--bind-address=127.0.0.1 "

EOF

# Needed on all three nodes

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-scheduler.conf

ExecStart=/usr/local/sbin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

for i in ${master_array[*]}

do

scp /etc/kubernetes/cfg/kube-scheduler.conf $i:/etc/kubernetes/cfg/

ssh $i "mkdir -pv /var/log/kubernetes/kube-scheduler/"

judge "$i scheduler log directory created" $?

scp /usr/lib/systemd/system/kube-scheduler.service $i:/usr/lib/systemd/system/

judge "$i scheduler systemd unit copied" $?

ssh $i "systemctl daemon-reload"

ssh $i "systemctl enable --now kube-scheduler"

judge "$i scheduler started" $?

done

}

# Deploy the kubelet service

function deploy_kubelet(){

# Create the kubelet.conf service config file

KUBE_HOSTNAME=`hostname`

cat > /etc/kubernetes/cfg/kubelet.conf << EOF

KUBELET_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/kubelet \\

--hostname-override=${KUBE_HOSTNAME} \\

--container-runtime=docker \\

--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig \\

--config=/etc/kubernetes/cfg/kubelet-config.yaml \\

--cert-dir=/etc/kubernetes/ssl \\

--image-pull-progress-deadline=15m \\

--pod-infra-container-image=registry.cn-shanghai.aliyuncs.com/mpd_k8s/pause:3.2"

EOF

# Distribute kubelet.conf

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kubelet.conf root@$i:/etc/kubernetes/cfg/

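judge "$i kubelet.conf distributed" $?

done

# Adjust the config file on each node

for i in $m2 $m3

do

# Replace this host's name (expanded locally) with the remote host's name (expanded remotely)

ssh $i "sed -i \"s#$(hostname)#\$(hostname)#g\" /etc/kubernetes/cfg/kubelet.conf"

judge "$i kubelet.conf updated" $?

done

# Create the kubelet log directory on every master

for i in ${master_array[*]}

do

ssh $i "mkdir -pv /var/log/kubernetes/kubelet"

judge "$i kubelet log directory created" $?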

done

# Create kubelet-config.yaml

#KUBE_HOSTNAME=`hostname -i`

cat > /etc/kubernetes/cfg/kubelet-config.yaml << EOF

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: $m1

port: 10250

readOnlyPort: 10255

cgroupDriver: systemd

clusterDNS:

- 10.96.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/ssl/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110

EOF

# Distribute kubelet-config.yaml

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kubelet-config.yaml root@$i:/etc/kubernetes/cfg/

judge "$i kubelet-config.yaml distributed" $?

done

# Adjust kubelet-config.yaml on each node

for i in $m2 $m3

do

ssh $i "sed -i 's#${m1}#${i}#g' /etc/kubernetes/cfg/kubelet-config.yaml"

done

# Verify kubelet-config.yaml

for i in $m2 $m3

do

ssh $i "grep $i /etc/kubernetes/cfg/kubelet-config.yaml"

judge "$i kubelet-config.yaml is OK" $?

done

# Register the kubelet service

# Needed on all three master nodes

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kubelet.conf

ExecStart=/usr/local/sbin/kubelet \$KUBELET_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Distribute the kubelet systemd unit

for i in $m2 $m3

do

scp /usr/lib/systemd/system/kubelet.service root@$i:/usr/lib/systemd/system/

judge "$i kubelet systemd unit copied" $?

done

# Reload systemd for kubelet

for i in ${master_array[*]}

do

ssh $i "systemctl daemon-reload" &> /dev/null

judge "$i systemd reloaded" $?

done

# Start the kubelet service

for i in ${master_array[*]}

do

ssh $i "systemctl enable --now kubelet.service"

judge "$i kubelet started" $?

done

}

# Deploy kube-proxy

function deploy_kube_proxy(){

# Create the config file

# Needed on all three master nodes

cat > /etc/kubernetes/cfg/kube-proxy.conf << EOF

KUBE_PROXY_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes/kube-proxy \\

--config=/etc/kubernetes/cfg/kube-proxy-config.yaml"

EOF

# Distribute kube-proxy.conf

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kube-proxy.conf root@$i:/etc/kubernetes/cfg/

judge "$i kube-proxy.conf copied" $?

done

# Create the log directory

for i in ${master_array[*]}

do

ssh $i "mkdir -pv /var/log/kubernetes/kube-proxy"

done

# Create kube-proxy-config.yaml

# Needed on all three master nodes

#KUBE_HOSTNAME=`hostname -i`

HOSTNAME=`hostname`

cat > /etc/kubernetes/cfg/kube-proxy-config.yaml << EOF

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: ${m1}

healthzBindAddress: ${m1}:10256

metricsBindAddress: ${m1}:10249

clientConnection:

burst: 200

kubeconfig: /etc/kubernetes/cfg/kube-proxy.kubeconfig

qps: 100

hostnameOverride: ${HOSTNAME}

clusterCIDR: 10.96.0.0/16

enableProfiling: true

mode: "ipvs"

kubeProxyIPTablesConfiguration:

masqueradeAll: false

kubeProxyIPVSConfiguration:

scheduler: rr

excludeCIDRs: []

EOF

# Distribute kube-proxy-config.yaml to the other master nodes

for i in $m2 $m3

do

scp /etc/kubernetes/cfg/kube-proxy-config.yaml root@$i:/etc/kubernetes/cfg/

judge "$i kube-proxy-config.yaml distributed" $?

done

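# Patch kube-proxy-config.yaml on each remote master

for i in $m2 $m3

do

# Replace the m1 IP with the remote node's own IP

ssh $i "sed -i 's#${m1}#${i}#g' /etc/kubernetes/cfg/kube-proxy-config.yaml"

# Replace the local hostname with the remote one; \$(hostname) is escaped so that it runs on the remote node

ssh $i "sed -i \"s#$(hostname)#\$(hostname)#g\" /etc/kubernetes/cfg/kube-proxy-config.yaml"

judge "$i kube-proxy-config.yaml updated" $?

done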

# Register the kube-proxy systemd service

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-proxy.conf

ExecStart=/usr/local/sbin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Distribute the kube-proxy systemd unit file

for i in $m2 $m3

do

scp /usr/lib/systemd/system/kube-proxy.service root@$i:/usr/lib/systemd/system/

judge "$i kube-proxy systemd unit file distributed" $?

done

# Reload systemd and start kube-proxy on every master

for i in ${master_array[*]}

do

ssh $i "systemctl daemon-reload"

judge "$i systemd reloaded" $?

ssh $i "systemctl enable --now kube-proxy"

judge "$i kube-proxy service started" $?

done

}

# Review and approve the nodes' requests to join the cluster

function add_k8s_cluster(){

echo -e "\033[42;37m \t<<-------------------- Requests to join the k8s cluster -------------------->> \033[0m \n"

kubectl get csr

# Approve the pending requests; xargs -r skips the call when nothing is pending

kubectl get csr | grep "Pending" | awk '{print $1}' | xargs -r kubectl certificate approve

# Verify

# Sleep 3 seconds so the approved nodes can register

echo -e "\033[33m \t---------- Sleeping 3 seconds while the nodes join the cluster... ----------\t \033[0m\n"

sleep 3

num=0

for i in `kubectl get nodes | awk '{print $2}' | tail -3`

do

if [ "$i" == 'Ready' ];then

let num+=1

fi

done

if [ $num -eq 3 ];then

echo -e "\033[32m The master nodes have joined the cluster \033[0m \n"

else

echo -e "\033[41;36m Failed to join the k8s cluster \033[0m \n"

fi

}
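
# A sketch of a stricter way to read the tables that add_k8s_cluster parses above.
# count_ready_nodes and pending_csr_names are hypothetical helpers, not part of the
# original script. Both read kubectl's table output from stdin, so they can be tried
# without a cluster; the sample node names and versions below are made up.

```shell
#!/usr/bin/env bash

# Count rows whose STATUS column (2nd field) is exactly "Ready"; the header row is skipped
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Print the NAME of every CSR whose last column (CONDITION) is exactly "Pending"
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Sample text in the shape of `kubectl get nodes` output (illustrative values only)
sample_nodes='NAME   STATUS     ROLES    AGE   VERSION
m1     Ready      <none>   1m    v1.18.8
m2     NotReady   <none>   1m    v1.18.8
m3     Ready      <none>   1m    v1.18.8'

printf '%s\n' "$sample_nodes" | count_ready_nodes
# prints: 2
```

# Matching the whole field means "NotReady" is never counted as "Ready". In the script this
# could be used as: kubectl get nodes | count_ready_nodes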

# Install the flannel network plugin

function deploy_flannel(){

# ls handles the glob safely even when more than one file matches

if ls /opt/data/flannel-v* &> /dev/null;then

judge "flannel package found" 0

else

judge "flannel package not found; upload it to /opt/data" 1

fi

tar -xf /opt/data/flannel-v0.11.0-linux-amd64.tar.gz -C /opt/data/

# Distribute the flannel binaries to every master node

for i in ${master_array[*]}

do

scp /opt/data/{flanneld,mk-docker-opts.sh} root@$i:/usr/local/sbin/

judge "$i flannel binaries distributed" $?

done

# Write the flannel network configuration into etcd

etcdctl \

--ca-file=/etc/etcd/ssl/ca.pem \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

--endpoints="https://${m1}:2379,https://${m2}:2379,https://${m3}:2379" \

mk /coreos.com/network/config '{"Network":"10.244.0.0/12", "SubnetLen": 21, "Backend": {"Type": "vxlan", "DirectRouting": true}}'

judge "flannel configuration written to etcd" $?

# Verify

etcdctl \

--ca-file=/etc/etcd/ssl/ca.pem \

--cert-file=/etc/etcd/ssl/etcd.pem \

--key-file=/etc/etcd/ssl/etcd-key.pem \

--endpoints="https://${m1}:2379,https://${m2}:2379,https://${m3}:2379" \

get /coreos.com/network/config

judge "flannel configuration read back from the etcd cluster" $?

# Register the flanneld systemd service

cat > /usr/lib/systemd/system/flanneld.service << EOF

[Unit]

Description=Flanneld address

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/usr/local/sbin/flanneld \\

-etcd-cafile=/etc/etcd/ssl/ca.pem \\

-etcd-certfile=/etc/etcd/ssl/etcd.pem \\

-etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\

-etcd-endpoints=https://${m1}:2379,https://${m2}:2379,https://${m3}:2379 \\

-etcd-prefix=/coreos.com/network \\

-ip-masq

ExecStartPost=/usr/local/sbin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF

# Distribute the flanneld systemd unit file

for i in $m2 $m3

do

scp /usr/lib/systemd/system/flanneld.service root@$i:/usr/lib/systemd/system/

judge "$i flanneld systemd unit file distributed" $?

done

# Modify the docker unit file

# so that flannel manages the docker network

sed -i '/ExecStart/s/\(.*\)/#\1/' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a EnvironmentFile=-/run/flannel/subnet.env' /usr/lib/systemd/system/docker.service

for i in $m2 $m3

do

scp /usr/lib/systemd/system/docker.service root@$i:/usr/lib/systemd/system/

judge "$i docker unit file updated" $?

done

echo -e "\033[42;37m \t<<-------------------- docker unit file updated on all masters (flannel now manages the docker network) -------------------->> \033[0m \n"

# Reload systemd, start flanneld and restart docker on every master

for i in ${master_array[*]}

do

ssh root@$i "systemctl daemon-reload"

judge "$i systemd reloaded" $?

ssh root@$i "systemctl enable --now flanneld"

judge "$i flanneld started" $?

ssh root@$i "systemctl restart docker"

judge "$i docker restarted" $?

done

# Verify cluster network connectivity (not implemented)

}
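
# A sketch for checking the Network value that deploy_flannel writes to etcd above.
# cidr_network is a hypothetical helper, not part of the original script. It prints the
# network that an IPv4 CIDR actually falls in, using only bash arithmetic. For 10.244.0.0/12
# the result is 10.240.0.0/12, so the value is not on a /12 boundary; how flannel treats
# such a value is not checked here.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print the aligned network for an IPv4 CIDR such as 10.244.0.0/12
cidr_network() {
    local ip=${1%/*} prefix=${1#*/}
    local a b c d
    IFS=. read -r a b c d <<< "$ip"
    # Pack the four octets into one 32-bit integer
    local addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    # Build the netmask; a /0 prefix keeps the all-zero mask
    local mask=0
    if [ "$prefix" -gt 0 ]; then
        mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    fi
    local net=$(( addr & mask ))
    printf '%d.%d.%d.%d/%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 )) $(( net & 255 )) "$prefix"
}

cidr_network 10.244.0.0/12   # prints 10.240.0.0/12
cidr_network 10.244.0.0/16   # prints 10.244.0.0/16
```

# Comparing the input with the printed value is one way to catch a CIDR whose address part
# does not match its prefix length before it is stored.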

# Install the cluster DNS

function deploy_ClusterDns(){

# This only needs to run on one node

# Download the DNS deployment package

echo -e "\033[33m \t---------- Downloading the DNS deployment package, please wait... ----------\t \033[0m\n"

wget https://github.com/coredns/deployment/archive/refs/heads/master.zip -P /app/k8s/tar/

if [ $? -eq 0 ];then

echo -e "\033[42;37m \t<<-------------------- DNS deployment package downloaded -------------------->> \033[0m \n"

else

echo -e "\033[33m \t---------- DNS deployment package download failed (trying a second time)... ----------\t \033[0m\n"

echo 'nameserver 8.8.8.8' >> /etc/resolv.conf

wget https://github.com/coredns/deployment/archive/refs/heads/master.zip -P /app/k8s/tar/

if [ $? -eq 0 ];then

echo -e "\033[42;37m \t<<-------------------- DNS deployment package downloaded -------------------->> \033[0m \n"

else

echo -e "\033[41;36m DNS deployment package download failed; please check the network \033[0m \n"

# Exit with a non-zero status so callers can see the failure

exit 1

fi

fi

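# Install unzip

yum install -y unzip &> /dev/null

judge "unzip installed" $?

# Unpack the DNS deployment package (-o overwrites files left by an earlier run)

cd /app/k8s/tar && unzip -o master.zip &> /dev/null

# Stop if the directory is missing, so deploy.sh never runs from the wrong place

cd /app/k8s/tar/deployment-master/kubernetes

judge "cd into the DNS deployment directory" $?

echo -e "\033[33m \t---------- Creating the DNS pod ----------\t \033[0m\n"

./deploy.sh -i 10.96.0.2 -s | kubectl apply -f -

# Verify the cluster DNS

# Sleep 90 seconds so the DNS pod can become ready

echo -e "\033[33m \t---------- Sleeping 90 seconds while the cluster DNS pod starts... ----------\t \033[0m\n"

sleep 90

kube_dns_pod_ready=`kubectl get pods -n kube-system | tail -1 | awk '{print $2}'`

if [[ $kube_dns_pod_ready = '1/1' ]];then

echo -e "\033[42;37m \t<<-------------------- k8s cluster DNS is ready -------------------->> \033[0m \n"

else

echo -e "\033[33m \t---------- k8s cluster DNS is not ready; please investigate ----------\t \033[0m\n"

# Exit with a non-zero status so callers can see the failure

exit 1

fi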

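# Test pod communication through the cluster DNS

# Bind the cluster-admin role to the user kubernetes

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubernetes

judge "cluster-admin bound to user kubernetes" $?

# Verify the cluster DNS and the cluster network

echo -e "\033[36m Type 'nslookup kubernetes' to test the cluster DNS and network (no error means success) \033[0m"

kubectl run test -it --rm --image=busybox:1.28.3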

}
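
# A sketch of a generic retry loop that could stand in for the two hand-written wget
# attempts and the fixed 90-second sleep in deploy_ClusterDns above. retry and flaky are
# hypothetical names, not part of the original script; flaky only simulates a command
# that fails twice before succeeding.

```shell
#!/usr/bin/env bash

# Hypothetical helper: run a command up to $1 times, waiting $2 seconds between attempts.
# Returns 0 as soon as the command succeeds, 1 once the attempts are used up.
retry() {
    local attempts=$1 delay=$2
    shift 2
    local n=1
    until "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$(( n + 1 ))
        sleep "$delay"
    done
    return 0
}

# Demo command standing in for wget or a readiness check: fails twice, then succeeds
demo_calls=0
flaky() {
    demo_calls=$(( demo_calls + 1 ))
    [ "$demo_calls" -ge 3 ]
}

retry 5 0 flaky && echo "succeeded after $demo_calls attempts"
# prints: succeeded after 3 attempts
```

# In the script this might look like: retry 3 5 wget <url> -P /app/k8s/tar/
# The attempt count and delay are illustrative values, not taken from the original.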

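# Program entry point

function main(){

# Tip: ssh to master1, master2 and master3 by hand once first, so the script does not hang while it runs

# Install the certificate tools (the download site is overseas and unreliable, so installing by hand is recommended; master1 is enough)

# Upload the packages named in the commented-out download lines by hand, then run the script

echo -e "\033[33m \t---------- One-shot shell deployment of binary k8s ----------\t

\t1. Red text during deployment means a step failed

\t2. Green text during deployment means a step succeeded \033[0m\n"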

install_certificate

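# Create the cluster root certificate

create_cluster_base_cert

# Deploy the etcd cluster (on the three master nodes)

etcd_deploy

# Create the etcd certificates

create_etcd_cert

# Generate the etcd service registration script

etcd_script

# Run the etcd script on all nodes

batch_execute_etcd_script

# 2021/07/20: the functions above are confirmed working

# Test the etcd cluster

test_etcd

echo -e "\033[42;37m \t<<-------------------- etcd cluster deployed -------------------->> \033[0m \n"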

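# Create the CA certificate for the master nodes

create_master_ca_cert

# Create the kube-apiserver certificate

create_kube_apiserver_cert

# Create the kube-controller-manager certificate

create_kube_controller_manager_cert

# Create the kube-scheduler certificate

create_kube_scheduler_cert

# Create the kube-proxy certificate

create_kube_proxy_cert

# Create the cluster administrator (kubectl) certificate

create_cluster_kubectl_cert

# Distribute the k8s cluster certificates

issue_k8s_cert

# All master cluster certificates are now in place

echo -e "\033[42;37m \t<<-------------------- All master cluster certificates configured -------------------->> \033[0m \n"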

# Distribute the k8s server binaries to every master

issue_k8s_server

# Create kube-controller-manager.kubeconfig

create_controller_manage_conf

# Create kube-scheduler.kubeconfig

create_scheduler_config

# Create kube-proxy.kubeconfig

create_proxy_conf

# Create the kubeconfig for the cluster administrator (kubectl)

create_kubectl_conf

# Distribute the cluster kubeconfig files

issue_k8s_cluster_conf_file

# Create the cluster token

create_cluster_token

# Write the kube-apiserver configuration file

create_apiserver_conf

# Distribute the apiserver configuration file to m2 and m3

excute_apiserver_conf

# Register the kube-apiserver service on all masters

register_apiserver

# Make kube-apiserver highly available

kube_apiserver_keepalived

# Modify the keepalived configuration file

modifi_keep

# Configure haproxy

modifi_haproxy

# Deploy TLS bootstrapping (the apiserver signs and issues node certificates dynamically, which automates certificate signing)

deploy_tls

# Deploy kube-controller-manager

deploy_contorller_manager

# Deploy kube-scheduler

deploy_kube_scheduler

# Deploy kubelet

deploy_kubelet

# Deploy kube-proxy

deploy_kube_proxy

# Review the nodes' requests to join the cluster

add_k8s_cluster

# Install the flannel network plugin

deploy_flannel

# Install the cluster DNS

deploy_ClusterDns

echo -e "\033[42;37m \t<<-------------------- k8s master cluster configured -------------------->> \033[0m \n"

}

# Run the main function

main
