1031-诗人信息重新收集及诗人关联人物

诗人信息重新收集

收集所有诗人

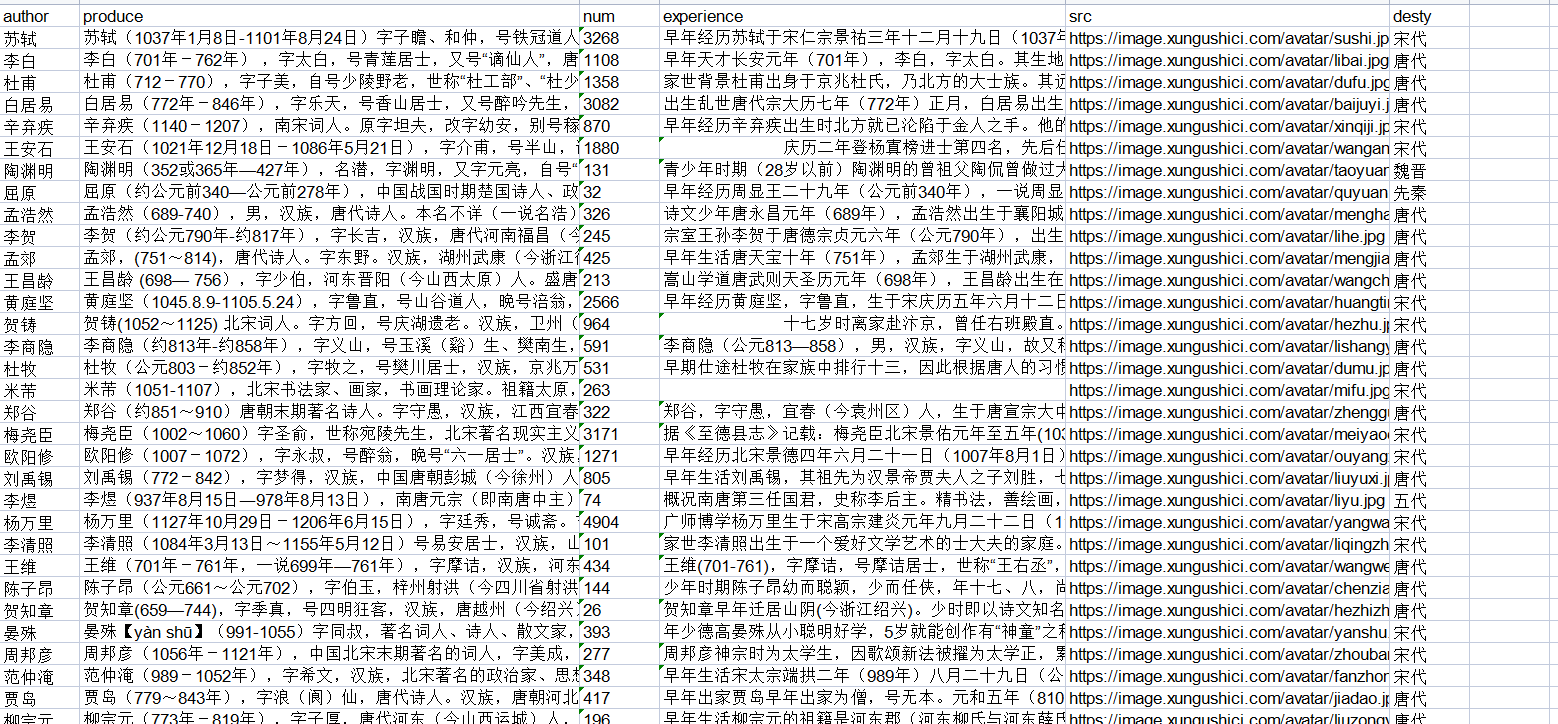

诗人图片与朝代,之前收集的诗人只有2000多个,是因为之前收集的诗人有生平,而其他诗人没有,故没有收集到

这次收集所有诗人,都有个人介绍。从个人介绍中也可提取出有用的信息。

经过此次收集,共收集到20088个诗人

import requests

from bs4 import BeautifulSoup

from lxml import etree

import re

headers = {'user-agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'}#创建头部信息

pom_list=[]

k=1

for i in range(1,2010):

url='https://www.xungushici.com/authors/p-'+str(i)

r=requests.get(url,headers=headers)

content=r.content.decode('utf-8')

soup = BeautifulSoup(content, 'html.parser')

hed=soup.find('div',class_='col col-sm-12 col-lg-9')

list=hed.find_all('div',class_="card mt-3")

origin_url='https://www.xungushici.com'

for it in list:

content = {}

# 1.1获取单页所有诗集

title = it.find('h4', class_='card-title')

poemauthor=title.find_all('a')[1].text

desty=title.find_all('a')[0].text

#print(poemauthor+"+"+desty)

#获取诗人图像

if it.find('a',class_='ml-2 d-none d-md-block')!=None:

src=it.find('a',class_='ml-2 d-none d-md-block').img['src']

else:

src="http://www.huihua8.com/uploads/allimg/20190802kkk01/1531722472-EPucovIBNQ.jpg"

content['src']=src

href=title.find_all('a')[1]['href']

#对应的诗人个人详情页面

real_href = origin_url + href

#诗人简介及诗集个数

text = it.find('p', class_='card-text').text

#诗人的诗集个数

numtext = it.find('p', class_='card-text').a.text

pattern = re.compile(r'\d+')

num=re.findall(pattern, numtext)[0]

#进入诗人详情页面

r2=requests.get(real_href,headers=headers)

content2=r2.content.decode('utf-8')

soup2 = BeautifulSoup(content2, 'html.parser')

ul=soup2.find('ul',class_='nav nav-tabs bg-primary')

if ul!=None:

list_li=ul.find_all('li',class_='nav-item')

exp = ""

for it in list_li:

if it.a.text=="人物生平" or it.a.text=="人物" or it.a.text=="生平":

urlsp=origin_url+it.a['href']

r3 = requests.get(urlsp, headers=headers)

content3 = r3.content.decode('utf-8')

soup3 = BeautifulSoup(content3, 'html.parser')

list_p=soup3.select('body > div.container > div > div.col.col-sm-12.col-lg-9 > div:nth-child(3) > div.card > div')

for it in list_p:

exp=it.get_text().replace('\n','').replace('\t','').replace('\r','')

content['author']=poemauthor

content['produce']=text

content['num']=num

content['desty'] = desty

content['experience'] = exp

pom_list.append(content)

else:

content['author'] = poemauthor

content['produce'] = text

content['num'] = num

content['desty'] = desty

content['experience'] = "无"

pom_list.append(content)

print("第"+str(k)+"个")

k=k+1

import xlwt

xl = xlwt.Workbook()

# 调用对象的add_sheet方法

sheet1 = xl.add_sheet('sheet1', cell_overwrite_ok=True)

sheet1.write(0,0,"author")

sheet1.write(0,1,'produce')

sheet1.write(0,2,'num')

sheet1.write(0,3,'experience')

sheet1.write(0,4,'src')

sheet1.write(0,5,'desty')

for i in range(0,len(pom_list)):

sheet1.write(i+1,0,pom_list[i]['author'])

sheet1.write(i+1, 1, pom_list[i]['produce'])

sheet1.write(i+1, 2, pom_list[i]['num'])

sheet1.write(i+1, 3, pom_list[i]['experience'])

sheet1.write(i + 1, 4, pom_list[i]['src'])

sheet1.write(i + 1, 5, pom_list[i]['desty'])

xl.save("author3.xlsx")

存储效果

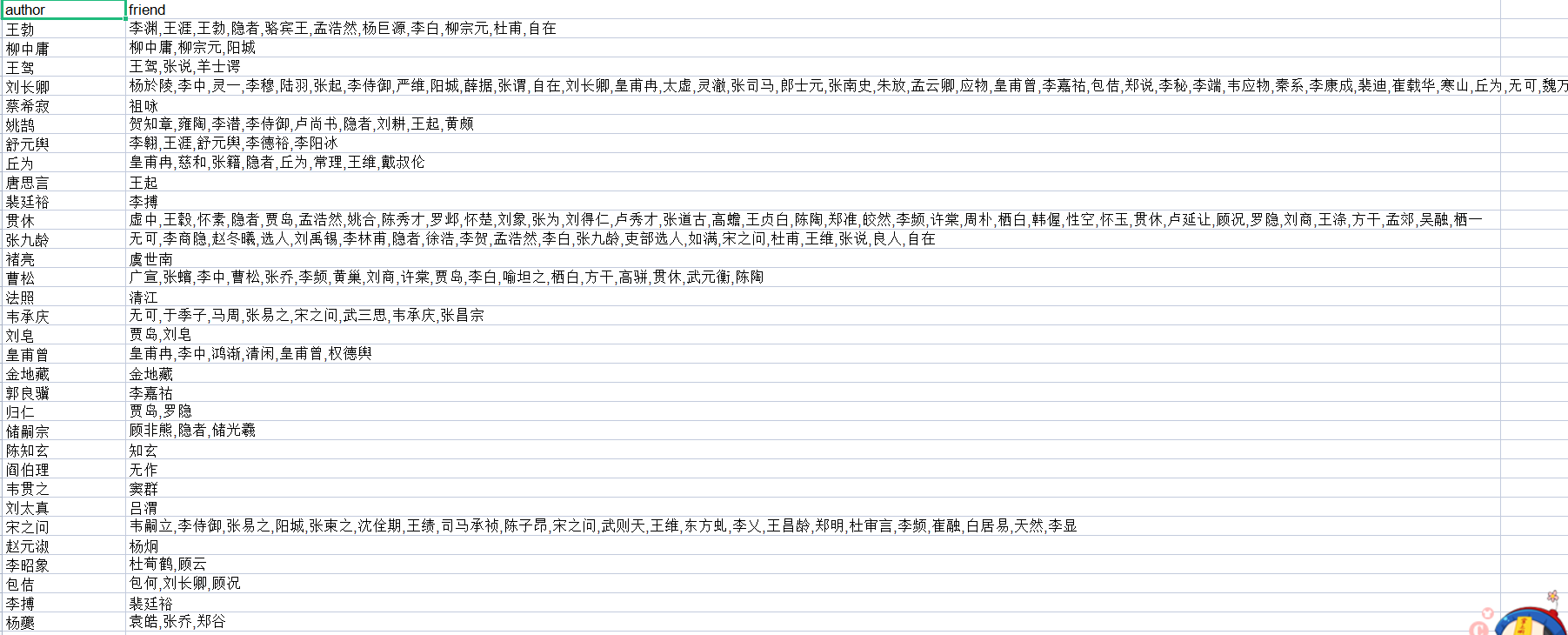

诗人关联人物

通过诗集来找到关联人物

先找唐代相互关联的诗人,步骤如下:

通过对诗名,诗文赏析,写作背景,来对作者(也就是诗人)进行分析,找出其中包含的其他诗人的名字。存储到一个列表

因为会涉及到多首诗,都是同一个作者,因此,涉及到字典,列表合并,去重

列表合并:list+=list2

列表去重:a=list(set(a))

在考虑是否是相关的作者时,有以下考察点:

其一:通过内容去检索所有诗人,找到诗人库中包含的关联诗人

其二:找到关联诗人后,判断是否和作者同一个朝代

其三:还可判断是否在作者的出生年月内(未实现,部分诗人出生年月不详)

import numpy

import xlwt

import pandas as pd

ans=[]

def read_author():

file="author3.xlsx"

data=pd.read_excel(file).fillna("无")

author=list(data.author)

all_desty=list(data.desty)

experience=list(data.experience)

dict_exp={}

dict_des={}

for i in range(len(author)):

dict_exp[author[i]]=experience[i]

dict_des[author[i]]=all_desty[i]

#去重

author=list(set(author))

file2="tang.xlsx"

tang=pd.read_excel(file2).fillna("无")

author_name=list(tang.author)

t_desty=list(tang.desty)

new_author=list(set(author_name))

dict={}

for it in new_author:

dict[it]=[]

title=list(tang.title)

appear=list(tang.appear)

back=list(tang.background)

for i in range(len(author_name)):

name = author_name[i]

if name=='佚名':

continue

content=title[i]+appear[i]+back[i]

friend=[]

for j in range(len(author)):

if content.find(author[j])!=-1 and dict_des[author[j]]=="唐代":

friend.append(author[j])

#print(name+": "+str(friend))

dict[name]+=friend

dict[name]=list(set(dict[name]))

dict[name]=dict[name]

print("--------------------ans--------------------------")

ans_author=[]

ans_friend=[]

for it in dict:

if dict[it]!=[]:

ans_author.append(it)

ans_friend.append(','.join(dict[it]))

print(it+":"+str(dict[it]))

xl = xlwt.Workbook()

# 调用对象的add_sheet方法

sheet1 = xl.add_sheet('sheet1', cell_overwrite_ok=True)

sheet1.write(0, 0, "author")

sheet1.write(0, 1, 'friend')

for i in range(0, len(ans_author)):

sheet1.write(i + 1, 0, ans_author[i])

sheet1.write(i + 1, 1, ans_friend[i])

xl.save("friend.xlsx")

if __name__ == '__main__':

read_author()

效果

里面friend有的包括原作者,之后在对其清洗

浙公网安备 33010602011771号

浙公网安备 33010602011771号