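10 Operating System Concepts Software Developers Need to Know

Translator's note: I came across this article online a while ago and liked it so much that I translated it. Many passages are translated poorly, so please bear with me. Fair warning: the article is rather long.

Original article link: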

Do you speak binary? Can you comprehend machine code? If I gave you a sheet full of 1s and 0s, could you tell me what it means or does? If you went to a country you have never visited, whose language you have never heard (or have heard of but do not actually speak), what would you need to help you communicate with the locals?

You would need a translator. Your operating system functions as that translator in your PC. It converts those 1s and 0s, yes/no, on/off values into a readable language that you can understand. It does all of this in a streamlined graphical user interface (GUI) that you navigate with a mouse: you click things, move them, and see the results happen before your eyes.

Knowing how operating systems work is fundamental and critical for any serious software developer. There should be no attempt to get around it, and anyone telling you it is not necessary should be ignored. While the extent and depth of the knowledge required can be debated, knowing more than the fundamentals can be critical to how well your program runs, and even to its structure and flow.

Why? When you write a program and it runs too slowly, but you see nothing wrong with your code, where else will you look for a solution? How will you debug the problem if you do not know how the operating system works? Are you accessing too many files? Are you running out of memory, with swap in heavy use? But you do not even know what swap is! Or is the program blocking on I/O?

Suppose you want to communicate with another machine. How do you do that locally or over the internet, and what is the difference? Why do some programmers prefer one OS over another?

In an attempt to be a serious developer, I recently took Georgia Tech's course "Introduction to Operating Systems" (https://cn.udacity.com/course/introduction-to-operating-systems--ud923). It teaches the basic OS abstractions, mechanisms, and their implementations. The core of the course covers concurrent programming (threads and synchronization), inter-process communication, and an introduction to distributed operating systems. I want to use this post to share my takeaways from the course: the 10 critical operating system concepts that you need to learn if you want to get good at developing software.

But first, let's define what an operating system is. An operating system (OS) is a collection of software that manages computer hardware and provides services for programs. Specifically, it hides hardware complexity, manages computational resources, and provides isolation and protection. Most importantly, it has privileged, direct access to the underlying hardware. The major components of an OS are the file system, the scheduler, and device drivers. You have probably used both desktop (Windows, Mac, Linux) and embedded (Android, iOS) operating systems before.

There are 3 key elements of an operating system:

1) Abstractions (process, thread, file, socket, memory)

2) Mechanisms (create, schedule, open, write, allocate)

3) Policies (LRU, EDF)

(Translator's note: LRU stands for "least recently used" and EDF for "earliest deadline first".)

There are 2 operating system design principles:

1) Separation of mechanism and policy: implement flexible mechanisms that can support many policies.

2) Optimize for the common case: Where will the OS be used? What will the user want to execute on that machine? What are the workload requirements?
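The first principle can be illustrated with a small sketch. The `Cache` class below is a hypothetical mechanism (it stores entries and tracks access order), while the eviction decision is delegated to a pluggable policy function. All names here are assumptions made for illustration; this is not how any particular kernel implements it.

```python
from collections import OrderedDict
from typing import Callable, Hashable

# A policy inspects the entries (ordered from least to most recently used)
# and returns the key that should be evicted.
Policy = Callable[[OrderedDict], Hashable]


def lru_policy(entries: OrderedDict) -> Hashable:
    """Evict the least recently used key (first in access order)."""
    return next(iter(entries))


def mru_policy(entries: OrderedDict) -> Hashable:
    """Evict the most recently used key (last in access order)."""
    return next(reversed(entries))


class Cache:
    """Mechanism only: storage plus access tracking. The policy picks victims."""

    def __init__(self, capacity: int, policy: Policy) -> None:
        self.capacity = capacity
        self.policy = policy
        self.entries: OrderedDict = OrderedDict()

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value) -> None:
        if key in self.entries:
            self.entries.move_to_end(key)
        elif len(self.entries) >= self.capacity:
            victim = self.policy(self.entries)  # the policy decides, the mechanism acts
            del self.entries[victim]
        self.entries[key] = value
```

Swapping `lru_policy` for `mru_policy` changes the behavior without touching the `Cache` code, which is the point of keeping the two apart.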

The 3 types of operating systems commonly used nowadays are:

1) Monolithic OS: the entire OS works in kernel space and runs alone in supervisor mode.

2) Modular OS: some parts of the system core are located in independent files called modules, which can be added to the system at run time.

3) Microkernel OS: the kernel is broken down into separate processes, known as servers. Some of the servers run in kernel space and some run in user space.

(Translator's note: "monolithic" describes the kernel architecture and has nothing to do with single-chip microcontrollers. Linux, for example, is a monolithic kernel that also supports loadable modules.)

1 — Processes and Process Management

A process is basically a program in execution. The execution of a process must progress in a sequential fashion. To put it in simple terms, we write our computer programs in a text file, and when we execute a program it becomes a process that performs all the tasks described in the program.

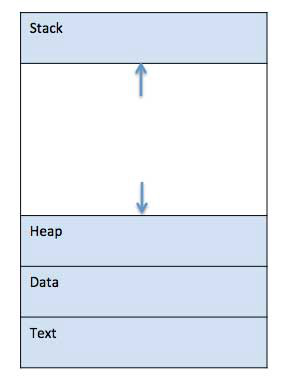

When a program is loaded into memory and becomes a process, it can be divided into four sections: stack, heap, text, and data. The following image shows a simplified layout of a process inside main memory.

Stack: The process stack contains temporary data such as method/function parameters, return addresses, and local variables.

Heap: This is memory dynamically allocated to the process during its run time.

Text: This section holds the compiled program code. The current activity of the process is represented separately, by the value of the program counter and the contents of the processor's registers.

Data: This section contains the global and static variables.

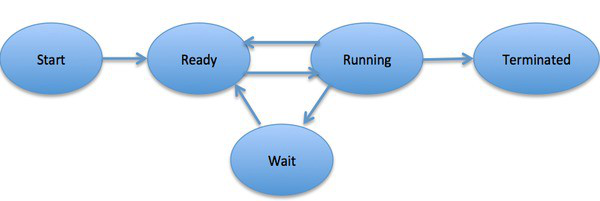

When a process executes, it passes through different states. These states may differ between operating systems, and their names are not standardized. In general, a process is in one of the following five states at any time:

- Start: The initial state when a process is first started/created.

- Ready: The process is waiting to be assigned to a processor. Ready processes are waiting for the operating system to allocate the processor to them so that they can run. A process may enter this state after the Start state, or while running, when the scheduler interrupts it to assign the CPU to some other process.

- Running: Once the OS scheduler has assigned the process to a processor, the process state is set to running and the processor executes its instructions.

- Waiting: The process moves into the waiting state if it needs to wait for a resource, such as user input or a file becoming available.

- Terminated or Exit: Once the process finishes its execution, or is terminated by the operating system, it moves to the terminated state, where it waits to be removed from main memory.
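The five states and the moves between them can be written down as a small state machine. This is a minimal sketch, assuming the transition set implied by the descriptions above; the names `State`, `TRANSITIONS`, and `move` are made up for illustration, and real operating systems use more states and different names.

```python
from enum import Enum


class State(Enum):
    START = "start"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Transitions assumed from the five-state description.
TRANSITIONS = {
    State.START: {State.READY},
    State.READY: {State.RUNNING},                       # picked by the scheduler
    State.RUNNING: {State.READY,                        # preempted by the scheduler
                    State.WAITING,                      # needs a resource
                    State.TERMINATED},                  # finished or killed
    State.WAITING: {State.READY},                       # resource became available
    State.TERMINATED: set(),
}


def move(current: State, target: State) -> State:
    """Return the new state, or raise if the model does not allow the move."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {target.name}")
    return target
```

Note that a waiting process cannot go straight back to running in this model; it has to pass through the ready state and be chosen by the scheduler again.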

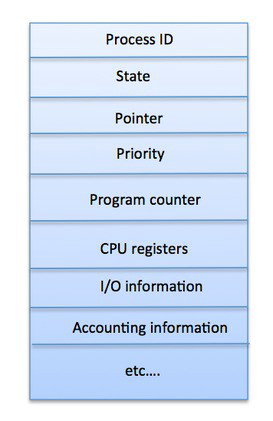

A Process Control Block (PCB) is a data structure maintained by the operating system for every process. The PCB is identified by an integer process ID (PID). A PCB keeps all the information needed to keep track of a process, as listed below:

Process State: The current state of the process, i.e. whether it is ready, running, waiting, and so on.

Process Privileges: These are required to allow or disallow access to system resources.

Process ID: A unique identifier for each process in the operating system.

Pointer: A pointer to the parent process.

Program Counter: A pointer to the address of the next instruction to be executed for this process.

CPU Registers: The contents of the various CPU registers, which must be saved so that the process can resume in the running state.

CPU Scheduling Information: The process priority and other information required to schedule the process.

Memory Management Information: Page table, memory limits, and segment table information, depending on the memory scheme used by the operating system.

Accounting Information: The amount of CPU time used for process execution, time limits, execution ID, and so on.

I/O Status Information: A list of the I/O devices allocated to the process.
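As a rough sketch, the fields listed above map naturally onto a record type. The field names and defaults below are assumptions chosen for readability; an actual kernel structure is far larger and laid out differently.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class PCB:
    """Hypothetical process control block mirroring the fields listed above."""
    pid: int                                              # process ID
    parent_pid: Optional[int] = None                      # "pointer" to the parent
    state: str = "start"                                  # process state
    privileges: List[str] = field(default_factory=list)   # process privileges
    program_counter: int = 0                              # next instruction address
    registers: Dict[str, int] = field(default_factory=dict)  # saved CPU registers
    priority: int = 0                                     # scheduling information
    memory_limits: Tuple[int, int] = (0, 0)               # (base, limit)
    cpu_time_used: float = 0.0                            # accounting information
    io_devices: List[str] = field(default_factory=list)   # I/O status information


# The OS would keep one PCB per process, here indexed by PID.
process_table: Dict[int, PCB] = {}


def register(pcb: PCB) -> None:
    process_table[pcb.pid] = pcb
```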

2 — Threads and Concurrency

A thread is a flow of execution through the process code, with its own program counter that keeps track of which instruction to execute next, system registers that hold its current working variables, and a stack that contains the execution history.

A thread shares some information with its peer threads, such as the code segment, the data segment, and open files. When one thread alters an item in shared memory, all the other threads see the change.
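Shared data is easy to see in practice. In this sketch, several threads update one module-level variable, and a lock keeps the updates from interleaving. The names `counter`, `worker`, and `run` are illustrative only.

```python
import threading

counter = 0               # module-level variable: visible to every thread in the process
lock = threading.Lock()   # protects the shared counter


def worker(iterations: int) -> None:
    global counter
    for _ in range(iterations):
        with lock:        # without the lock, concurrent updates could be lost
            counter += 1


def run(num_threads: int = 4, iterations: int = 10_000) -> int:
    """Start the threads, wait for them, and return the shared total."""
    global counter
    counter = 0
    threads = [threading.Thread(target=worker, args=(iterations,))
               for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

Each thread has its own stack and program counter, but all of them read and write the same `counter`.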

A thread is also called a lightweight process. Threads provide a way to improve application performance through parallelism. They represent a software approach to improving operating system performance by reducing overhead; in other respects a thread is equivalent to a classical process.

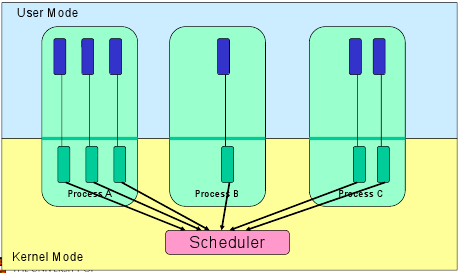

Each thread belongs to exactly one process, and no thread can exist outside a process. Each thread represents a separate flow of control. Threads have been used successfully in implementing network servers and web servers. They also provide a suitable foundation for parallel execution of applications on shared-memory multiprocessors.

Advantages of threads:

- Threads minimize context switching time.

- Use of threads provides concurrency within a process.

- Threads of the same process communicate efficiently.

- It is more economical to create and context switch threads than processes.

- Threads allow multiprocessor architectures to be used at greater scale and efficiency.

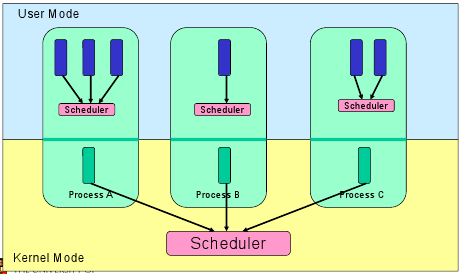

Threads are implemented in the following 2 ways:

- User Level Threads: threads managed by the user (the application).

- Kernel Level Threads: threads managed by the operating system, acting on the kernel, the operating system core.

User Level Threads

In this case, the kernel is not aware of the existence of threads, and thread management is done by a thread library. The thread library contains code for creating and destroying threads, for passing messages and data between threads, for scheduling thread execution, and for saving and restoring thread contexts. The application starts with a single thread.

(Translator's supplement: user-level threads are implemented in the user program without kernel support. They do not depend on the operating system core; the application process manages them through a thread library.)

Advantages:

- Thread switching does not require kernel mode privileges.

- User-level threads can run on any operating system.

- Scheduling can be application-specific.

- User-level threads are fast to create and manage.

Disadvantages:

- In a typical operating system, most system calls are blocking, so one blocking call can stall every thread in the process.

- A multithreaded application cannot take advantage of multiprocessing.

Kernel Level Threads

In this case, thread management is done by the kernel. There is no thread management code in the application area. Kernel threads are supported directly by the operating system. Any application can be programmed to be multithreaded. All of the threads within an application are supported within a single process.

The kernel maintains context information for the process as a whole and for the individual threads within the process. Scheduling by the kernel is done on a thread basis. The kernel performs thread creation, scheduling, and management in kernel space. Kernel threads are generally slower to create and manage than user threads.

Advantages:

- The kernel can simultaneously schedule multiple threads from the same process on multiple processors.

- If one thread in a process is blocked, the kernel can schedule another thread of the same process.

- Kernel routines themselves can be multithreaded.

Disadvantages:

- Kernel threads are generally slower to create and manage than user threads.

- Transferring control from one thread to another within the same process requires a mode switch to the kernel.

3 — Scheduling

Process scheduling is the activity of the process manager that handles the removal of the running process from the CPU and the selection of another process on the basis of a particular strategy.

Process scheduling is an essential part of multiprogramming operating systems. Such operating systems allow more than one process to be loaded into executable memory at a time, and the loaded processes share the CPU using time multiplexing.

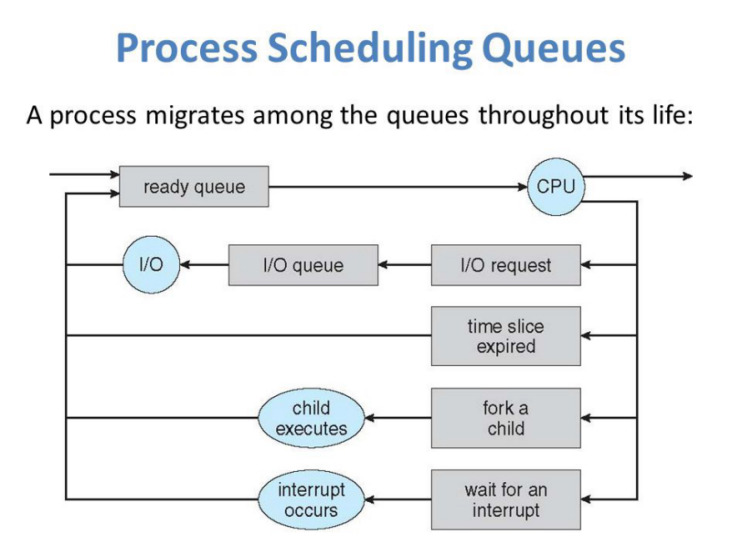

The OS maintains all Process Control Blocks (PCBs) in process scheduling queues. It keeps a separate queue for each process state, and the PCBs of all processes in the same execution state are placed in the same queue. When the state of a process changes, its PCB is unlinked from its current queue and moved to the queue for its new state.

The operating system maintains the following important process scheduling queues:

- Job queue: This queue keeps all the processes in the system.

- Ready queue: This queue keeps the set of all processes residing in main memory, ready and waiting to execute. A new process is always put in this queue.

- Device queues: The processes that are blocked because an I/O device is unavailable make up these queues.

The OS can use different policies to manage each queue (FIFO, round robin, priority, etc.). The OS scheduler determines how to move processes between the ready queue and the run queue, which can hold only one entry per processor core on the system; in the above diagram, the run queue has been merged with the CPU.
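To make one of these policies concrete, here is a minimal round-robin sketch for a single CPU. It assumes every process is ready at time zero and ignores I/O and context switch costs; the function name and the burst-time input are illustrative assumptions.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(bursts: Dict[str, int], quantum: int) -> List[Tuple[str, int]]:
    """Return the execution trace as (process name, time units run) slices.

    `bursts` maps each process name to the CPU time it still needs.
    `quantum` is the time slice and must be positive.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(bursts.items())          # the ready queue, in arrival order
    trace: List[Tuple[str, int]] = []
    while ready:
        name, remaining = ready.popleft()  # dispatch the process at the head
        ran = min(quantum, remaining)
        trace.append((name, ran))
        if remaining > ran:                # not finished: back of the ready queue
            ready.append((name, remaining - ran))
    return trace
```

For example, `round_robin({"A": 5, "B": 2, "C": 3}, 2)` runs A, B, and C for two units each, then lets A and C finish in turn.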

The two-state process model refers to running and not-running states:

- Running: When a new process is created, it enters the system in the running state.

- Not Running: Processes that are not running are kept in a queue, waiting for their turn to execute. Each entry in the queue is a pointer to a particular process, and the queue is implemented as a linked list. The dispatcher works as follows: when a process is interrupted, it is transferred to the waiting queue; if the process has completed or aborted, it is discarded. In either case, the dispatcher then selects a process from the queue to execute.

A context switch is the mechanism for storing and restoring the state, or context, of a CPU in a Process Control Block, so that a process's execution can be resumed from the same point at a later time. Using this technique, a context switcher enables multiple processes to share a single CPU. Context switching is an essential feature of a multitasking operating system.

When the scheduler switches the CPU from executing one process to executing another, the state of the currently running process is stored in its process control block. After this, the state of the process to run next is loaded from its own PCB and used to set the PC, registers, and so on. At that point, the second process can start executing.

Context switches are computationally intensive, since register and memory state must be saved and restored. To reduce context switching time, some hardware systems employ two or more sets of processor registers. When a process is switched out, the following information is stored for later use: program counter, scheduling information, base and limit register values, currently used registers, changed state, I/O state information, and accounting information.
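The save-and-restore step can be sketched in a few lines. The `CPU` and `PCB` classes here are toy stand-ins that hold only a program counter and a register dictionary; everything else a real switch has to handle (memory mappings, scheduling data, and so on) is left out.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PCB:
    """Toy process control block: just the state a switch must preserve."""
    pid: int
    program_counter: int = 0
    registers: Dict[str, int] = field(default_factory=dict)


@dataclass
class CPU:
    """Toy CPU holding the state of whichever process is running."""
    program_counter: int = 0
    registers: Dict[str, int] = field(default_factory=dict)


def context_switch(cpu: CPU, current: PCB, nxt: PCB) -> None:
    # 1. Save the running process's state into its own PCB.
    current.program_counter = cpu.program_counter
    current.registers = dict(cpu.registers)
    # 2. Load the next process's saved state onto the CPU.
    cpu.program_counter = nxt.program_counter
    cpu.registers = dict(nxt.registers)
```

Because the state is copied into the PCB, switching back later resumes the first process at exactly the instruction where it stopped.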

4 — Memory Management

Memory management is the functionality of an operating system that manages primary memory and moves processes back and forth between main memory and disk during execution. It keeps track of each and every memory location, regardless of whether it is allocated to some process or free. It checks how much memory is to be allocated to processes and decides which process gets memory at what time. It also tracks when memory is freed or unallocated and updates the status accordingly.

The process address space is the set of logical addresses that a process references in its code. For example, when 32-bit addressing is in use, addresses can range from 0 to 0x7fffffff; that is, 2³¹ possible numbers, for a total theoretical size of 2 gigabytes.

(Translator's note: these are logical addresses, not real physical addresses, and the 2-gigabyte range presumably refers to user space.)

The operating system takes care of mapping the logical addresses to physical addresses at the time memory is allocated to the program. There are three types of addresses used in a program before and after memory is allocated:

- Symbolic addresses: The addresses used in source code. Variable names, constants, and instruction labels are the basic elements of the symbolic address space.

- Relative addresses: At compile time, a compiler converts symbolic addresses into relative addresses.

- Physical addresses: The loader generates these addresses when a program is loaded into main memory.

(Translator's notes: instruction labels are presumably names such as function names or jump targets. The description of physical addresses does not seem quite reliable to me; a physical address is the address sent over the CPU's address bus and handled by the hardware circuitry.)

Virtual and physical addresses are the same in compile-time and load-time address-binding schemes. They differ in the execution-time address-binding scheme.

The set of all logical addresses generated by a program is referred to as its logical address space. The set of all physical addresses corresponding to these logical addresses is referred to as its physical address space.
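One simple way to picture execution-time binding is a base register plus a limit register: every logical address is checked against the limit and then offset by the base. This is only a sketch of that one scheme (paging and segmentation work differently), and the function name and example values are assumptions.

```python
def translate(logical: int, base: int, limit: int) -> int:
    """Map a logical address to a physical one using base and limit registers.

    `base` is where the process's memory starts in physical memory and
    `limit` is the size of its logical address space.
    """
    if not 0 <= logical < limit:
        raise ValueError(f"address {logical:#x} is outside the limit {limit:#x}")
    return base + logical


# The 32-bit example from the text: addresses 0..0x7fffffff are 2**31 values,
# which is 2 GiB when each address names one byte.
ADDRESS_COUNT = 0x7FFFFFFF + 1
```

With a base of `0x4000` and a limit of `0x1000`, logical address `0x10` maps to physical address `0x4010`, and anything at or beyond `0x1000` is rejected.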

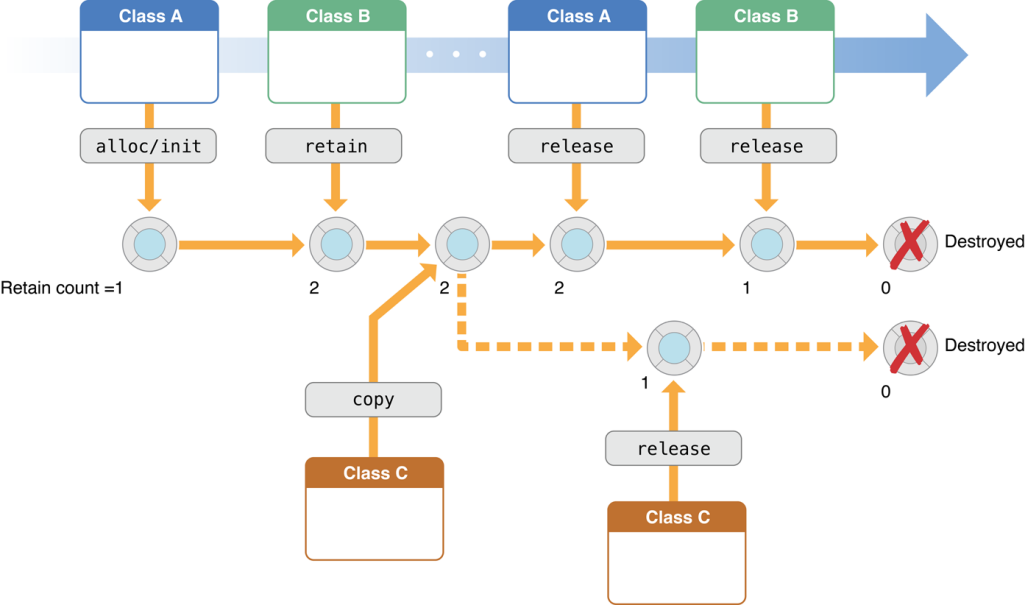

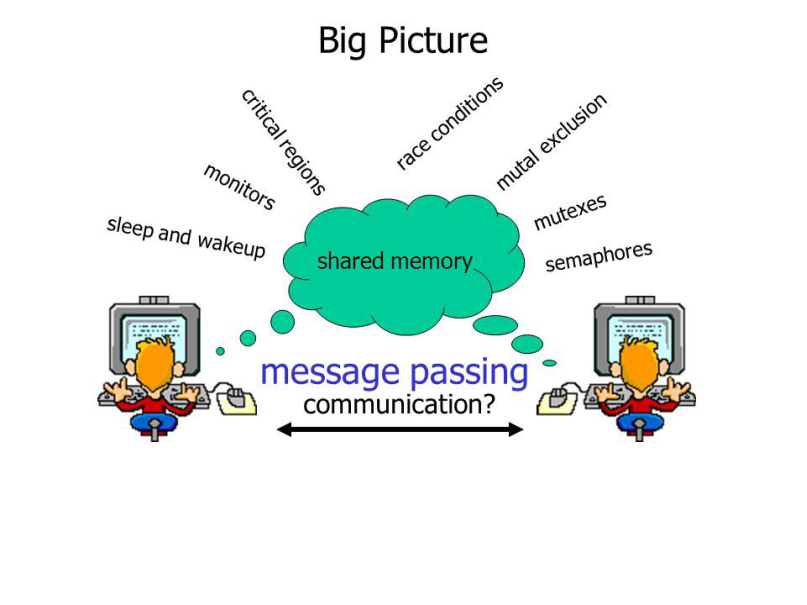

5 — Inter-Process Communication

A process can be of 2 types: independent or co-operating. An independent process is not affected by the execution of other processes, while a co-operating process can be. One might think that processes running independently will execute very efficiently, but in practice there are many situations where co-operation can be used to increase computational speed, convenience, and modularity.

Inter-process communication (IPC) is a mechanism that allows processes to communicate with each other and synchronize their actions. The communication between these processes can be seen as a method of co-operation between them. Processes can communicate with each other in two ways: shared memory and message passing.

(Translator's note: I think message queues, sockets, and the like all fall under message passing here.)

Shared Memory Method

There are two processes: Producer and Consumer. The Producer produces items and the Consumer consumes them. The two processes share a common space or memory location, known as a buffer, where the Producer stores the items it produces and from which the Consumer takes items when needed. There are two versions of this problem. The first is the unbounded buffer problem, in which the Producer can keep producing items and there is no limit on the size of the buffer. The second is the bounded buffer problem, in which the Producer can produce up to a certain number of items and then has to wait for the Consumer to consume them.

In the bounded buffer problem, the Producer and the Consumer first share some common memory, and then the Producer starts producing items. If the number of items produced equals the size of the buffer, the Producer waits for the Consumer to consume some. Similarly, the Consumer first checks whether an item is available; if none is, it waits for the Producer to produce one. If items are available, the Consumer consumes them.

(Translator's note: I think what is described here is the producer-consumer model, which is not quite what we usually mean by shared memory, namely shmget on Linux or CreateFileMapping on Windows.)
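The bounded buffer behavior can be sketched with a condition variable. This example uses two threads inside one process as stand-ins for the two processes, which is an assumption made to keep it short and runnable; true cross-process shared memory needs OS facilities such as the ones the translator mentions. The class and function names are illustrative.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Fixed-capacity buffer: producers wait when full, consumers wait when empty."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: deque = deque()
        self.cond = threading.Condition()

    def put(self, item) -> None:
        with self.cond:
            while len(self.items) >= self.capacity:  # buffer full: producer waits
                self.cond.wait()
            self.items.append(item)
            self.cond.notify_all()                   # wake a waiting consumer

    def get(self):
        with self.cond:
            while not self.items:                    # buffer empty: consumer waits
                self.cond.wait()
            item = self.items.popleft()
            self.cond.notify_all()                   # wake a waiting producer
            return item


def demo(n: int = 20, capacity: int = 3) -> list:
    """Run one producer and one consumer; return the items in consumption order."""
    buf = BoundedBuffer(capacity)
    consumed: list = []

    def produce() -> None:
        for i in range(n):
            buf.put(i)

    def consume() -> None:
        for _ in range(n):
            consumed.append(buf.get())

    producer = threading.Thread(target=produce)
    consumer = threading.Thread(target=consume)
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return consumed
```

The `while` loops around `wait()` re-check the condition after every wake-up, so a thread never proceeds on a stale assumption about the buffer.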

Message Passing Method

In this method, processes communicate with each other without using any kind of shared memory. If two processes p1 and p2 want to communicate with each other, they proceed as follows:

- Establish a communication link (if a link already exists, there is no need to establish it again).

- Start exchanging messages using basic primitives. At least two primitives are needed: send(message, destination) or send(message), and receive(message, host) or receive(message).

(Translator's supplement: a primitive is a basic operation that cannot be interrupted while it executes. You can think of it as a piece of code that must run to completion without interruption.)

Messages can be of fixed or variable size. A fixed size is easy for the OS designer but complicated for the programmer; a variable size is easy for the programmer but complicated for the OS designer. A standard message has two parts: a header and a body.

The header stores the message type, destination ID, source ID, message length, and control information. The control information covers things like what to do if buffer space runs out, the sequence number, and the priority. Generally, messages are sent in FIFO order.

(Translator's note: I feel this really describes socket communication.)
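A header-plus-body message can be sketched with Python's `struct` module. The header layout below (type, destination ID, source ID, sequence number, body length) is a hypothetical format invented for this example; the field sizes are assumptions, not part of any standard.

```python
import struct
from typing import Tuple

# Hypothetical fixed-size header, network byte order, no padding:
# B = message type (1 byte), H = destination id, H = source id,
# I = sequence number (4 bytes), H = body length (2 bytes).
HEADER = struct.Struct("!BHHIH")


def pack_message(msg_type: int, dest: int, src: int, seq: int, body: bytes) -> bytes:
    """Prefix the variable-size body with the fixed-size header."""
    return HEADER.pack(msg_type, dest, src, seq, len(body)) + body


def unpack_message(data: bytes) -> Tuple[int, int, int, int, bytes]:
    """Read the header, then use its length field to slice out the body."""
    msg_type, dest, src, seq, length = HEADER.unpack_from(data)
    body = data[HEADER.size:HEADER.size + length]
    return msg_type, dest, src, seq, body
```

The fixed-size header is what lets the receiver handle a variable-size body: it reads the header first and learns from the length field how many more bytes belong to the message.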

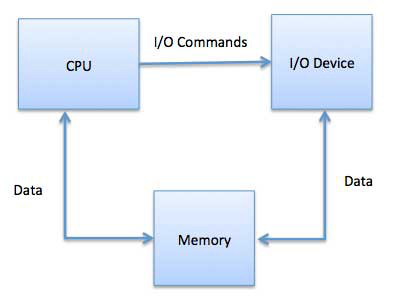

6 — I/O Management

One of the important jobs of an operating system is to manage various I/O devices, including mice, keyboards, touch pads, disk drives, display adapters, USB devices, bit-mapped screens, LEDs, analog-to-digital converters, on/off switches, network connections, audio I/O, printers, and so on.

An I/O system is required to take an application's I/O request and send it to the physical device, then take whatever response comes back from the device and send it to the application. I/O devices can be divided into two categories:

- Block devices: A block device is one with which the driver communicates by sending entire blocks of data. Examples include hard disks, USB cameras, and Disk-On-Key.

- Character devices: A character device is one with which the driver communicates by sending and receiving single characters (bytes, octets). Examples include serial ports, parallel ports, and sound cards.

The CPU must have a way to pass information to and from an I/O device. There are three approaches to communication between the CPU and a device.

1> Special Instruction I/O

This approach uses CPU instructions that are made specifically for controlling I/O devices. These instructions typically allow data to be sent to an I/O device or read from one.

2> Memory-mapped I/O

When using memory-mapped I/O, memory and I/O devices share the same address space. The device is connected directly to certain main memory locations, so that the I/O device can transfer blocks of data to and from memory without going through the CPU.

With memory-mapped I/O, the OS allocates a buffer in memory and tells the I/O device to use that buffer to send data to the CPU. The I/O device operates asynchronously with the CPU and interrupts the CPU when it has finished.

The advantage of this method is that every instruction that can access memory can also be used to manipulate an I/O device. Memory-mapped I/O is used for most high-speed I/O devices, such as disks and communication interfaces.

3> Direct memory access (DMA)

Slow devices like keyboards generate an interrupt to the main CPU after each byte is transferred. If a fast device such as a disk generated an interrupt for each byte, the operating system would spend most of its time handling these interrupts. So a typical computer uses direct memory access (DMA) hardware to reduce this overhead.

Direct memory access (DMA) means that the CPU grants an I/O module the authority to read from or write to memory without CPU involvement. The DMA module itself controls the exchange of data between main memory and the I/O device. The CPU is involved only at the beginning and end of the transfer, and is interrupted only after the entire block has been transferred.

Direct memory access needs special hardware called a DMA controller (DMAC), which manages the data transfers and arbitrates access to the system bus. The controllers are programmed with source and destination pointers (where to read and write the data), counters to track the number of transferred bytes, and settings, which include I/O and memory types, interrupts, and states for the CPU cycles.
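The saving in interrupts can be shown with a toy model. The `DMAController` class below is a software stand-in invented for illustration; real DMA is done by hardware, and the only thing this sketch tries to capture is the destination pointer, the byte counter, and the single interrupt at the end of a block.

```python
class DMAController:
    """Toy DMA controller: copies a whole block, then raises one interrupt."""

    def __init__(self, memory: bytearray) -> None:
        self.memory = memory
        self.interrupts = 0                 # interrupts delivered to the CPU

    def transfer(self, device_data: bytes, dest: int) -> int:
        count = 0                           # counter of transferred bytes
        for byte in device_data:
            self.memory[dest + count] = byte  # written without CPU involvement
            count += 1
        self.interrupts += 1                # one interrupt for the entire block
        return count


def interrupt_per_byte(device_data: bytes, memory: bytearray, dest: int) -> int:
    """Slow-device style: the CPU is interrupted once for every byte."""
    interrupts = 0
    for offset, byte in enumerate(device_data):
        memory[dest + offset] = byte
        interrupts += 1
    return interrupts
```

Copying a 512-byte block costs 512 interrupts in the per-byte model and a single interrupt with the toy controller.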

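Translator's supplement:

(1) Character devices provide a continuous stream of data that applications read sequentially; they usually do not support random access. Instead, such devices read and write data byte by byte (character by character). A modem is a typical character device.

(2) Block devices let applications access device data randomly, with the program choosing where to read. A hard disk is a typical block device: an application can address any position on the disk and read data from there. Data can only be read or written in multiples of a block (usually 512 bytes). Unlike character devices, block devices do not support character-based addressing.

Differences:

1. A character device is accessed with the byte as its smallest unit, while a block device is accessed in units of blocks, for example 512 or 1024 bytes.

2. Block devices support random access; character devices do not.

3. Neither kind limits the total amount of data accessed, and a block device can also be accessed byte by byte.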
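The contrast between the two device classes can be sketched with two small in-memory stand-ins. Both classes are hypothetical models written for this example, with a 512-byte block size assumed; they are not driver interfaces from any real operating system.

```python
import io

BLOCK_SIZE = 512  # assumed block size in bytes


class BlockDevice:
    """Random access, but only in whole blocks."""

    def __init__(self, data: bytes) -> None:
        # Pad the contents up to a whole number of blocks.
        padded_len = -(-len(data) // BLOCK_SIZE) * BLOCK_SIZE
        self._data = data.ljust(padded_len, b"\0")

    def read_block(self, index: int) -> bytes:
        start = index * BLOCK_SIZE
        if index < 0 or start >= len(self._data):
            raise IndexError(f"no block {index}")
        return self._data[start:start + BLOCK_SIZE]


class CharDevice:
    """Sequential stream, one byte at a time, with no seeking."""

    def __init__(self, data: bytes) -> None:
        self._stream = io.BytesIO(data)

    def read_char(self) -> bytes:
        return self._stream.read(1)  # returns b"" once the stream is exhausted
```

A caller can jump straight to `read_block(1)` on the block device, whereas the character device only ever hands out the next byte in the stream.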

7 — Virtualization

Virtualization is technology that allows you to create multiple simulated environments or dedicated resources from a single physical hardware system. Software called a hypervisor connects directly to that hardware and allows you to split one system into separate, distinct, and secure environments known as virtual machines (VMs). These VMs rely on the hypervisor's ability to separate the machine's resources from the hardware and distribute them appropriately.

The original physical machine equipped with the hypervisor is called the host, while the many VMs that use its resources are called guests. These guests treat computing resources (such as CPU, memory, and storage) as a pool of resources that can easily be relocated. Operators can control virtual instances of CPU, memory, storage, and other resources, so guests receive the resources they need when they need them.

Ideally, all related VMs are managed through a single web-based virtualization management console, which speeds things up. Virtualization lets you dictate how much processing power, storage, and memory to give each VM, and environments are better protected because VMs are separated from their supporting hardware and from each other. Simply put, virtualization creates the environments and resources you need from underused hardware.

Types of Virtualization:

- Data Virtualization: Data that is spread all over can be consolidated into a single source. Data virtualization allows companies to treat data as a dynamic supply, providing processing capabilities that can bring together data from multiple sources, easily accommodate new data sources, and transform data according to user needs. Data virtualization tools sit in front of multiple data sources and allow them to be treated as a single source, delivering the needed data, in the required form, at the right time to any application or user.

- Desktop Virtualization: This is easily confused with operating system virtualization, which allows you to deploy multiple operating systems on a single machine. Desktop virtualization allows a central administrator (or an automated administration tool) to deploy simulated desktop environments to hundreds of physical machines at once. Unlike traditional desktop environments, which are physically installed, configured, and updated on each machine, desktop virtualization allows admins to perform mass configurations, updates, and security checks on all virtual desktops.

- Server Virtualization: Servers are computers designed to process a high volume of specific tasks really well so other computers, like laptops and desktops, can do a variety of other tasks. Virtualizing a server lets it do more of those specific functions and involves partitioning it so that the components can be used to serve multiple functions.

服务器虚拟化:服务器是设计用来很好地处理大量特定任务的计算机,所以像笔记本电脑和台式机这样的其他计算机可以完成各种其他任务。虚拟化服务器使它可以执行更多这些特定的功能,并涉及对其进行分区,以便可以使用组件来服务多个功能。

- Operating System Virtualization: Operating system virtualization happens at the kernel, the central task manager of an operating system. It’s a useful way to run Linux and Windows environments side-by-side. Enterprises can also push virtual operating systems to computers, which: (1) reduces bulk hardware costs, since the computers don’t require such high out-of-the-box capabilities, (2) increases security, since all virtual instances can be monitored and isolated, and (3) limits time spent on IT services like software updates.

操作系统虚拟化:操作系统的虚拟化发生在内核层(操作系统的核心管理任务),这是并行运行Linux系统或Windows系统的一种有效的方式。企业可以将虚拟计算系统推送到计算机上,他有下面几个特点:1)降低硬件资源,因为计算机不需要这么高的开箱能力(这个开箱能力不大合适)。2)提高安全性,所有的虚拟机都可以被监视和隔离。3)限制了在IT服务上花费的时间,比如软件更新。

- Network Functions Virtualization: Network functions virtualization (NFV) separates a network’s key functions (like directory services, file sharing, and IP configuration) so they can be distributed among environments. Once software functions are independent of the physical machines they once lived on, specific functions can be packaged together into a new network and assigned to an environment. Virtualizing networks reduces the number of physical components — like switches, routers, servers, cables, and hubs — that are needed to create multiple, independent networks, and it’s particularly popular in the telecommunications industry.

网络功能虚拟化:将网络的关键功能(比如目录服务、文件共享、IP配置等)分离出来,以便于在多个环境中部署。一旦网络功能独立于物理机器,特定的功能就可以打包到一起,在新的网络环境中使用了。虚拟化网络技术减少了创建多个独立网络所需的硬件组件,比如交换机、路由器、服务器、电缆和集线器,在电信行业中非常受欢迎。
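Of the types above, data virtualization is perhaps the easiest to picture in code. The sketch below is only an illustration under assumed names (`VirtualView`, `register`, `query`, and the sample rows are all hypothetical): a thin layer sits in front of several sources, pulls rows on demand, and reshapes them for the caller, so the caller sees one source.

```python
class VirtualView:
    """Presents several independent data sources as one queryable source."""

    def __init__(self):
        self.sources = {}  # source name -> callable returning a list of dict rows

    def register(self, name, fetch):
        """Add a data source; existing callers need no change."""
        self.sources[name] = fetch

    def query(self, predicate=lambda row: True, transform=lambda row: row):
        """Pull rows from every source on demand, filter them, and reshape them."""
        for name, fetch in self.sources.items():
            for row in fetch():
                if predicate(row):
                    yield transform({**row, "source": name})


# Two made-up sources standing in for separate systems.
crm_rows = [{"customer": "Ada", "total": 120}, {"customer": "Lin", "total": 40}]
billing_rows = [{"customer": "Ada", "total": 75}]

view = VirtualView()
view.register("crm", lambda: crm_rows)
view.register("billing", lambda: billing_rows)

big_orders = list(view.query(predicate=lambda r: r["total"] > 50,
                             transform=lambda r: (r["source"], r["customer"])))
print(big_orders)
```

Nothing is copied into a central store here; each query reads the underlying sources at call time, which is the point of treating data as a dynamic supply.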

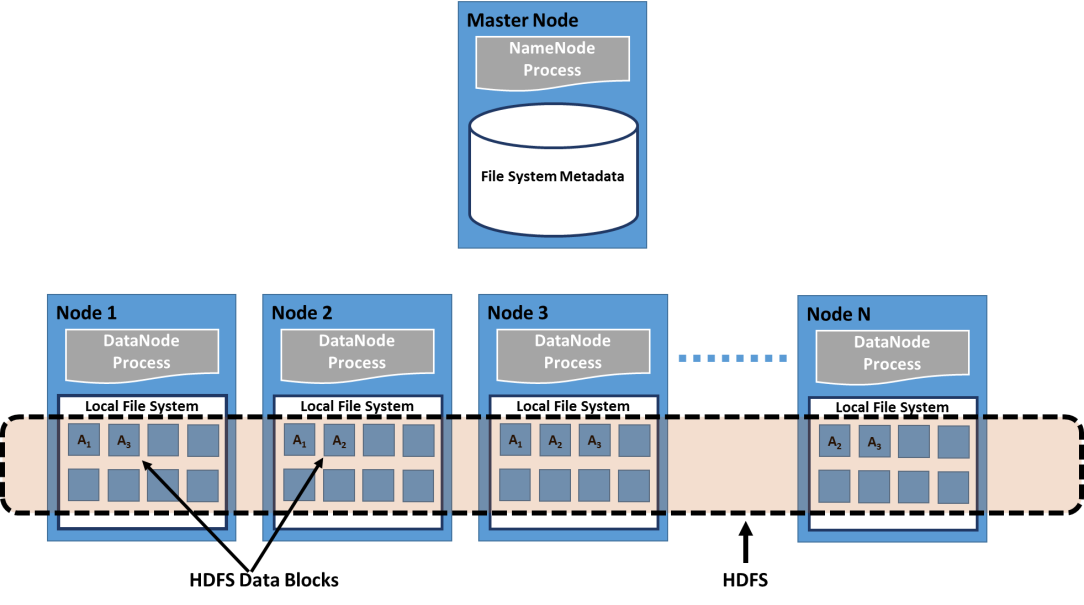

8 — Distributed File Systems

A distributed file system is a client/server-based application that allows clients to access and process data stored on the server as if it were on their own computer. When a user accesses a file on the server, the server sends the user a copy of the file, which is cached on the user’s computer while the data is being processed and is then returned to the server.

分布式文件系统是一个基于客户机/服务器的应用程序,它允许客户端访问和处理存储在服务器上的数据,就像在自己的计算机上一样。当用户访问服务器上的文件时,服务器向用户发送文件副本,该文件在处理数据时缓存在用户的计算机上,然后返回给服务器。

Ideally, a distributed file system organizes file and directory services of individual servers into a global directory in such a way that remote data access is not location-specific but is identical from any client. All files are accessible to all users of the global file system and organization is hierarchical and directory-based.

理想情况下,分布式文件系统将单个服务器的文件和目录服务组织到一个全局目录中,以便远程数据访问不是特定目录的,而是与任何客户机相同。所有用户都可以访问全局文件系统的文件,组织是分层的和基于目录的。

Since more than one client may access the same data simultaneously, the server must have a mechanism in place (such as maintaining information about the times of access) to organize updates so that the client always receives the most current version of data and that data conflicts do not arise. Distributed file systems typically use file or database replication (distributing copies of data on multiple servers) to protect against data access failures.

Sun Microsystems’ Network File System (NFS), Novell NetWare, Microsoft’s Distributed File System, and IBM’s DFS are some examples of distributed file systems.

由于很多客户端可以同时访问数据,所以服务器必须有一种机制来组织管理,让客户端总是接收到当前最新的版本并且不会冲突。分布式文件系统必须使用文件或数据库复制(在多个服务器上分发文件副本)来保护访问数据不会失败。

下面是一些分布式文件系统的例子:太阳公司的网络文件系统(NFS)、Novell NetWare、微软的分布式文件系统、IBM的DFS。
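The copy-then-return flow and the need to organize concurrent updates can be sketched as follows. This is a toy model, not how NFS or any product listed above works: the `FileServer` and `Client` classes are hypothetical, and a simple version counter stands in for the access-time bookkeeping the text mentions.

```python
class FileServer:
    """Holds the authoritative copy of each file plus a version counter."""

    def __init__(self):
        self.files = {}  # path -> (version, data)

    def read(self, path):
        """Return (version, data); a missing file reads as version 0 and empty data."""
        return self.files.get(path, (0, ""))

    def write(self, path, base_version, data):
        """Accept the write only if the client edited the latest version."""
        current_version, _ = self.read(path)
        if base_version != current_version:
            return False  # someone else updated the file first
        self.files[path] = (current_version + 1, data)
        return True


class Client:
    """Works on a locally cached copy and sends it back when done."""

    def __init__(self, server):
        self.server = server
        self.cache = {}  # path -> (version, data)

    def open(self, path):
        """Fetch a copy from the server and cache it locally."""
        self.cache[path] = self.server.read(path)
        return self.cache[path][1]

    def save(self, path, data):
        """Return the edited copy to the server; fails if the cached copy is stale."""
        version, _ = self.cache[path]
        ok = self.server.write(path, version, data)
        if ok:
            self.cache[path] = (version + 1, data)
        return ok


server = FileServer()
alice, bob = Client(server), Client(server)
alice.open("/notes.txt")
bob.open("/notes.txt")
print(alice.save("/notes.txt", "from alice"))  # first writer wins
print(bob.save("/notes.txt", "from bob"))      # stale copy is rejected
```

A rejected client has to open the file again to get the current version before retrying, which is one simple way to keep conflicting updates from silently overwriting each other.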

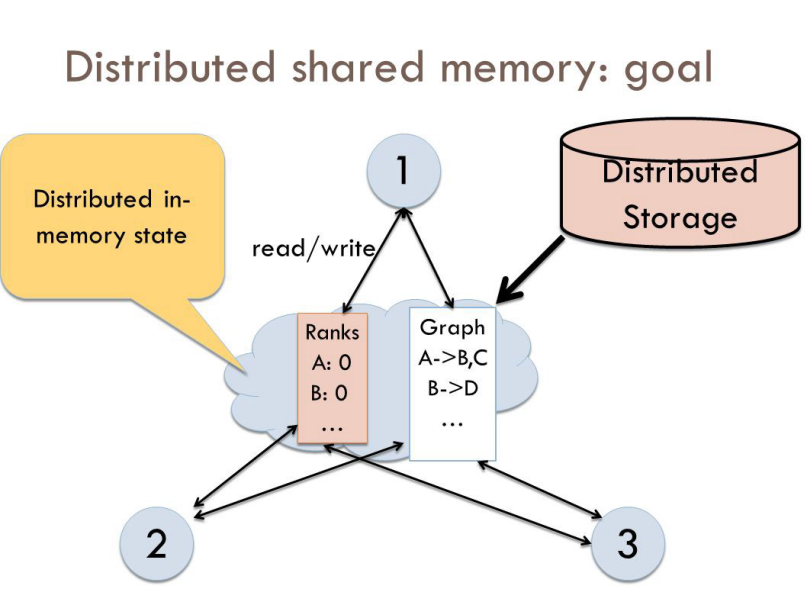

9 — Distributed Shared Memory

Distributed Shared Memory (DSM) is a resource management component of a distributed operating system that implements the shared memory model in distributed systems, which have no physically shared memory. The shared memory provides a virtual address space that is shared among all computers in a distributed system.

分布式共享内存(DSM):

DSM是分布式操作系统的一个资源管理组件,它实现了分布式系统中的共享内存模型,它是没有物理共享内存的。共享内存在分布式系统中为所有系统提供了虚拟地址空间。

In DSM, data is accessed from a shared space similar to the way that virtual memory is accessed. Data moves between secondary and main memory, as well as between the distributed main memories of different nodes. Ownership of pages in memory starts out in some pre-defined state but changes during the course of normal operation. Ownership changes take place when data moves from one node to another due to an access by a particular process.

在DSM中,数据从共享空间访问,就像访问虚拟内存。数据在次要内存和主内存之间移动,以及在不同节点的分布式主内存之间移动。内存中页面的所有权在某些预定义状态下开始,但在正常操作过程中发生变化。当数据由于特定进程的访问而从一个节点移动到另一个节点时,所有权发生更改。
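A minimal sketch of page ownership migrating on access is shown below. It assumes a deliberately simplified single-owner model in which any access, read or write, moves the whole page to the accessing node; the `Dsm` class and the tiny `PAGE_SIZE` are hypothetical and chosen only for illustration.

```python
PAGE_SIZE = 4  # words per page; tiny on purpose


class Dsm:
    """Tracks which node owns each page of one shared address space."""

    def __init__(self, num_pages, first_owner):
        self.owner = {p: first_owner for p in range(num_pages)}  # pre-defined state
        self.pages = {p: [0] * PAGE_SIZE for p in range(num_pages)}
        self.transfers = 0  # how many times a page crossed the network

    def _fetch(self, node, address):
        """Move the whole page to the accessing node if it is not already there."""
        page = address // PAGE_SIZE
        if self.owner[page] != node:
            self.owner[page] = node
            self.transfers += 1
        return self.pages[page], address % PAGE_SIZE

    def read(self, node, address):
        page, offset = self._fetch(node, address)
        return page[offset]

    def write(self, node, address, value):
        page, offset = self._fetch(node, address)
        page[offset] = value


dsm = Dsm(num_pages=2, first_owner="A")
for address in range(PAGE_SIZE):   # node B touches four neighbouring words
    dsm.write("B", address, address * 10)
print(dsm.owner, dsm.transfers)
```

Because the whole page moves on the first access, the remaining accesses to neighbouring addresses are local, so four writes cost a single transfer.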

Advantages of Distributed Shared Memory:

- Hides data movement and provides a simpler abstraction for sharing data. Programmers don’t need to worry about memory transfers between machines, as they do when using the message passing model.

- Allows the passing of complex structures by reference, simplifying algorithm development for distributed applications.

- Takes advantage of “locality of reference” by moving the entire page containing the data referenced rather than just the piece of data.

- Cheaper to build than multiprocessor systems. Ideas can be implemented using normal hardware and do not require anything complex to connect the shared memory to the processors.

- Larger memory sizes are available to programs, by combining all physical memory of all nodes. This large memory will not incur disk latency due to swapping like in traditional distributed systems.

- An unlimited number of nodes can be used, unlike multiprocessor systems, where main memory is accessed via a common bus that limits the size of the system.

- Programs written for shared memory multiprocessors can be run on DSM systems.

分布式共享内存的优点:

隐藏数据移动并为共享数据提供更简单的抽象。程序员不需要像使用消息传递模型那样担心机器之间的内存传输。

允许通过引用传递复杂结构,简化了分布式应用程序的算法开发。

利用“引用的局部性”,移动包含引用数据的整个页面,而不仅仅是数据片段。

构建起来比多处理器系统成本低。用普通的硬件就能实现,不需要任何复杂的东西将共享内存连接到处理器。

通过将所有节点的物理内存相结合,可以为程序提供更大的内存大小。这种大内存不会像传统的分布式系统那样由于交换而导致磁盘延迟。

可以使用无限数量的节点。不同于通过公共总线访问主内存的多处理器系统,因此限制了多处理器系统的大小。

为共享内存多处理器编写的程序可以在DSM系统上运行。

There are two different ways that nodes can be informed of who owns what page: invalidation and broadcast. Invalidation is a method that invalidates a page when some process asks for write access to that page and becomes its new owner. This way the next time some other process tries to read or write to a copy of the page it thought it had, the page will not be available and the process will have to re-request access to that page. Broadcasting will automatically update all copies of a memory page when a process writes to it. This is also called write-update. This method is a lot less efficient and more difficult to implement because a new value has to be sent instead of an invalidation message.

节点有两种方式可以知道页面的所有权:失效和广播。

失效:失效是当某个进程请求对该页进行写访问并成为该页的新所有者时,使该页失效的方法。这样,下次当其他进程试图读或写页面的副本时,页面将不可用,进程将不得不重新请求对该页面的访问。

广播:当进程写入内存页时,广播将自动更新内存页的所有副本。这也称为写更新。这个方法的效率要低得多,实现起来也更困难,因为必须发送一个新的值而不是一个无效消息。
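The difference between the two approaches can be sketched by counting messages. The `Page` class below is a hypothetical toy model, not a real DSM protocol: it keeps one cached copy per node and counts one message per invalidation, per pushed update, and per re-request.

```python
class Page:
    """One shared page with per-node copies and a message counter."""

    def __init__(self, nodes, value=0):
        self.copies = {n: value for n in nodes}  # node -> cached value (None = invalid)
        self.owner_value = value                 # authoritative value
        self.messages = 0                        # network messages sent so far

    def read(self, node):
        if self.copies[node] is None:            # invalid copy: re-request the page
            self.copies[node] = self.owner_value
            self.messages += 1
        return self.copies[node]

    def write_invalidate(self, node, value):
        """Writer becomes owner; every other valid copy is invalidated."""
        for other in self.copies:
            if other != node and self.copies[other] is not None:
                self.copies[other] = None
                self.messages += 1               # small invalidation message
        self.copies[node] = self.owner_value = value

    def write_update(self, node, value):
        """Writer pushes the new value to every other copy."""
        for other in self.copies:
            if other != node:
                self.copies[other] = value
                self.messages += 1               # message carrying the new value
        self.copies[node] = self.owner_value = value


inv, upd = Page("ABC"), Page("ABC")
for v in range(1, 6):            # node A writes five times in a row
    inv.write_invalidate("A", v)
    upd.write_update("A", v)
print(inv.messages, upd.messages, inv.read("B"), upd.read("B"))
```

With repeated writes from one node, invalidation pays once to invalidate the other copies and once more when a reader re-requests the page, while write-update sends the new value to every copy on every write.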

10 — Cloud Computing

More and more, we are seeing technology moving to the cloud. It’s not just a fad — the shift from traditional software models to the Internet has steadily gained momentum over the last 10 years. Looking ahead, the next decade of cloud computing promises new ways to collaborate everywhere, through mobile devices.

云计算

现在我们看见越来越多的技术迁移到了云上——这并不是一时的热情。过去十年,传统软件模式慢慢的向互联网靠近。展望未来,云计算将在任何地方任何移动设备上被广泛应用。

So what is cloud computing? Essentially, cloud computing is a kind of outsourcing of computer programs. Using cloud computing, users are able to access software and applications from wherever they need, while it is being hosted by an outside party in “the cloud.” This means that they do not have to worry about things such as storage and power; they can simply enjoy the end result.

那么,什么是云计算呢?从本质上讲,云计算是一种计算机程序的外包。使用云计算,用户可以从任何需要的地方访问软件和应用程序,而它是由云中的外部方托管的。这意味着他们不必担心存储和能力等问题,他们只需要享受最终的结果。

Traditional business applications have always been very complicated and expensive. The amount and variety of hardware and software required to run them are daunting. You need a whole team of experts to install, configure, test, run, secure, and update them. When you multiply this effort across dozens or hundreds of apps, it’s easy to see why the biggest companies with the best IT departments aren’t getting the apps they need. Small and mid-sized businesses don’t stand a chance.

传统商业软件总是非常繁琐和昂贵的。运行它们需要的大量的软件和硬件支持,这是非常吓人的。你需要完整的团队专业的人才来使用它们(安装、配置、测试、运行和更新)。一旦你需要运行几十个甚至几百个软件时,你就不难理解为啥那种很屌的公司都没有得到他们需要的应用程序。中小型的公司更加没有机会。(这一段要怎么才合理点)

With cloud computing, you eliminate those headaches that come with storing your own data, because you’re not managing hardware and software. That becomes the responsibility of an experienced vendor like Salesforce or AWS. The shared infrastructure means it works like a utility: you only pay for what you need, upgrades are automatic, and scaling up or down is easy.

使用云计算,你可以省却自己存储数据的麻烦,因为你不用管理软件和硬件——这是云计算供应商的责任,比如Salesforce 和AWS。共享基础架构意味着它就像一个实用程序:你只需支付所需的费用,升级是自动的,并且可以轻松扩展或缩小。

Cloud-based apps can be up and running in days or weeks, and they cost less. With a cloud app, you just open a browser, log in, customize the app, and start using it. Businesses are running all kinds of apps in the cloud, like customer relationship management (CRM), HR, accounting, and much more.

基于云计算的软件可以以低成本状态运行几天或几周。使用云应用程序时,你只需要打开浏览器,登录账号,自定义应用程序之后就可以开始使用了。很多企业都在云中运行各种程序,比如用户管理系统,人事管理软件等其他的。

As cloud computing grows in popularity, thousands of companies are simply rebranding their non-cloud products and services as “cloud computing.” Always dig deeper when evaluating cloud offerings and keep in mind that if you have to buy and manage hardware and software, what you’re looking at isn’t really cloud computing but a false cloud.

随着云计算越来越受欢迎,成千上万的公司只是将其非云产品和服务重新命名为“云计算”。在评估云产品时要牢记如果你必须购买和管理硬件和软件,那么你所看到的并不是真正的云计算,而是虚假的云。

Last Takeaway

As a software engineer, you will be part of a larger body of computer science, which encompasses hardware, operating systems, networking, data management and mining, and many other disciplines. The more engineers in each of these disciplines understand about the other disciplines, the better they will be able to interact with those other disciplines efficiently.

As the operating system is the “brain” that manages input, processing, and output, all other disciplines interact with the operating system. An understanding of how the operating system works will provide valuable insight into how the other disciplines work, as your interaction with those disciplines is managed by the operating system.

写在最后:

作为一个软件工程师,你将学习计算机科学中的一部分,比如硬件、操作系统、网络、数据管理和挖掘等很多其他学科。每个学科的工程师如果对其他学科的知识了解的越多,那么你也就能更加高效的与其他工程师合作完成工作。

操作系统是管理输入、处理和输出的大脑,其他所有的学科都和操作系统有交互。了解操作系统是如何工作的能让我们解决一些其他学科上面的问题,因为与其他学科都与操作系统有交互。

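---------------Divider-----------------

Translator's summary:

I came across this article online a while ago and thought it was very good. It gave me a deeper understanding of several operating system concepts. For a software engineer, I think operating system knowledge is like the foundation of a building. Whatever language you work in, you have to understand how the foundation works before you can build a stable, reliable skyscraper. Without these fundamentals, you will probably only manage simple bungalows or thatched huts.

I read the first part slowly, limited by my English and looking words up as I went. It was hard going, and I kept forgetting vocabulary shortly after translating it, so I decided to translate the whole article and write it down. That forced me to understand the concepts in it, and it also improved my English.

It took about two weeks in total, translating whenever I had spare time after work. The early sections went fine, but I grew more and more impatient toward the end, and some paragraphs were run straight through Google Translate and then smoothed out. At one point I wanted to give up, but having started, I decided to finish. Completing something imperfectly is still better than abandoning it halfway.

I know the translation is poor in many places, so I strongly recommend reading the English to understand it. Where I was unsure, I added a note in parentheses. If you have a better translation, I would be glad to hear it and will revise the text so that readers get a better article.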

浙公网安备 33010602011771号
