准备将oracle数据抽取到hive中作为ods层的数据

方案:使用sqoop建立job增量抽取到HDFS,使用hive建立外部表指定location到HDFS下的数据位置,使用Azkaban定时调用

1.建立sqoop job

sqoop job --create TMGPFRETURNDETAIL_RPT -- import \

--connect jdbc:oracle:thin:@192.168.0.18:1521:xxxx \

--username xxxx\

--password-file /sqoop/pwd/sqoopPWD_mgrpt.pwd \

--target-dir /DATA/ODS/TMGPFRETURNDETAIL_RPT \

--fields-terminated-by '^A' \

--table TMGPFRETURNDETAIL_RPT \

--incremental append \

--check-column DEALTIME \

--last-value '2016-01-01 00:00:00' \

--m 6

append 表示数据已追加的方式抽取

last-value 建立job后下次运行时sqoop会根据已有值自行判断替换这个值

password-file 指定数据库的密码文件

target-dir 抽取的数据存放在hdfs的位置

fields-terminated-by 字段之间的分隔符

check-column 增量抽取时的增量字段

查看sqoop下的job

[hadoop@FineReportAppServer pwd]$ echo `date` "jobs start"

2021年 04月 06日 星期二 15:50:04 CST jobs start

[hadoop@FineReportAppServer pwd]$ sqoop job -list

...

...

Warning: /home/hadoop/sqoop-1.4.6-cdh5.7.0/bin/../../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

21/04/06 16:04:40 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

Available jobs:

TMGPFRETURNDETAIL_RPT

TMGPFRETURNFORM_RPT

TMGPFSALEDETAIL_RPT

TMGPFSALEFORM_RPT

2.建立hive表

CREATE EXTERNAL TABLE `TMGPFRETURNDETAIL_RPT`(

`branchno` string,

`pfreturnno` string,

`serialno` double,

`plucode` string,

`jprice` double,

`pfprice` double,

`price` double,

`counts` double,

`pftotal` double,

`yttotal` double,

`zpcount` double,

`sharetotal` double,

`zrtotal` double,

`rtnrate` double,

`rtntotal` double,

`cost` double,

`comflag` string,

`guidflag` string,

`dbname` string,

`dealguid` string,

`dealtime` string)

COMMENT 'Imported by sqoop on 2021/04/02 10:48:31'

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '^A'

LINES TERMINATED BY '\n'

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://ns/DATA/ODS/TMGPFRETURNDETAIL_RPT'

TBLPROPERTIES (

'COLUMN_STATS_ACCURATE'='false',

'last_modified_by'='hadoop',

'last_modified_time'='1617349860',

'numFiles'='0',

'numRows'='-1',

'rawDataSize'='-1',

'totalSize'='0',

'transient_lastDdlTime'='1617349860')

建表的oracle

select

'CREATE EXTERNAL TABLE '||table_name||' ( '||

wm_concat(column_name||' '|| decode(data_type,'NUMBER', 'double','string')) ||')'

||

'COMMENT ''Imported by sqoop on '||to_char(sysdate,'yyyy/mm/dd HH:mm:ss')||' '' '

||'ROW FORMAT DELIMITED '

||' FIELDS TERMINATED BY ''^A'' '

|| 'LINES TERMINATED BY ''\n'' '

||'STORED AS INPUTFORMAT '

||'''org.apache.hadoop.mapred.TextInputFormat'' '

||'OUTPUTFORMAT '

|| '''org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'' '

||'LOCATION '

|| '''hdfs://ns/DATA/ODS/'||table_name||''' '

||'TBLPROPERTIES ( '

||'''COLUMN_STATS_ACCURATE''=''false'', '

||'''last_modified_by''=''hadoop'' )'

from dba_tab_columns where owner ='HSRPT' and table_name ='TMGPFSALEDETAIL_RPT'

group by table_name

查看hive表

hive (ods)> show tables;

OK

tab_name

tmgpfreturndetail_rpt

tmgpfreturnform_rpt

tmgpfsaledetail_rpt

tmgpfsaleform_rpt

Time taken: 0.043 seconds, Fetched: 4 row(s)

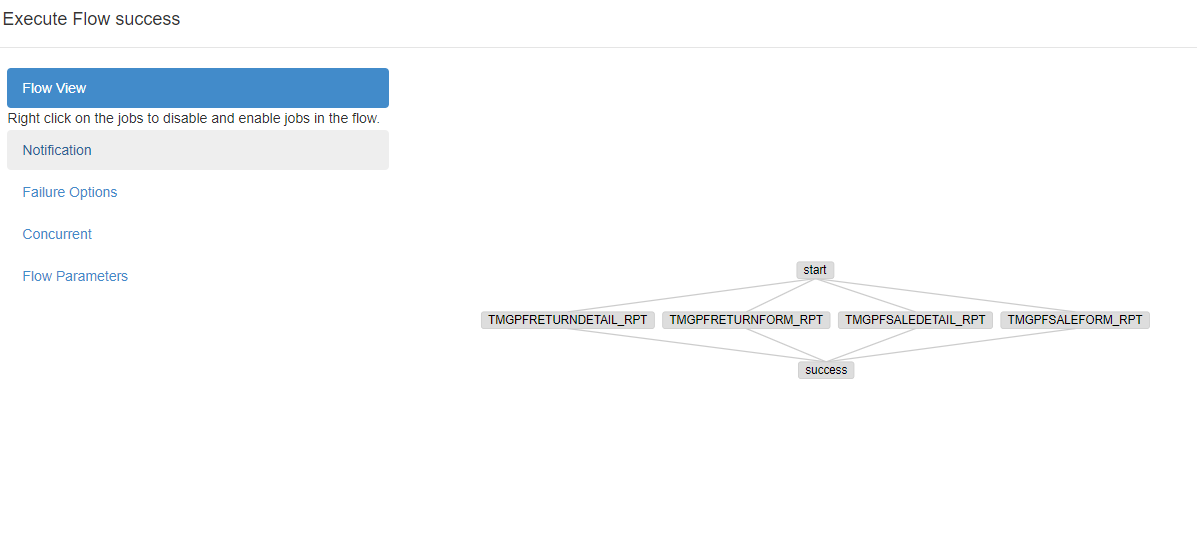

3.建立Azkaban job

START:

type=command

command=echo `date` "jobs start"

TMGPFSALEDETAIL_RPT:

type=command

command=sqoop job -exec TMGPFSALEDETAIL_RPT

dependencies=start

TMGPFRETURNDETAIL_RPT:

type=command

command=sqoop job -exec TMGPFRETURNDETAIL_RPT

dependencies=start

TMGPFSALEFORM_RPT:

type=command

command=sqoop job -exec TMGPFSALEFORM_RPT

dependencies=start

TMGPFRETURNFORM_RPT:

type=command

command=sqoop job -exec TMGPFRETURNFORM_RPT

dependencies=start

SUCCESS:

type=command

command=echo `date` "complete...TMGPFRETURNFORM_RPT...TMGPFSALEFORM_RPT...TMGPFSALEDETAIL_RPT...TMGPFRETURNDETAIL_RPT...syc"

dependencies=TMGPFRETURNFORM_RPT,TMGPFSALEFORM_RPT,TMGPFSALEDETAIL_RPT,TMGPFRETURNDETAIL_RPT

打包到zip文件下上传到Azkaban 的project下

4.执行

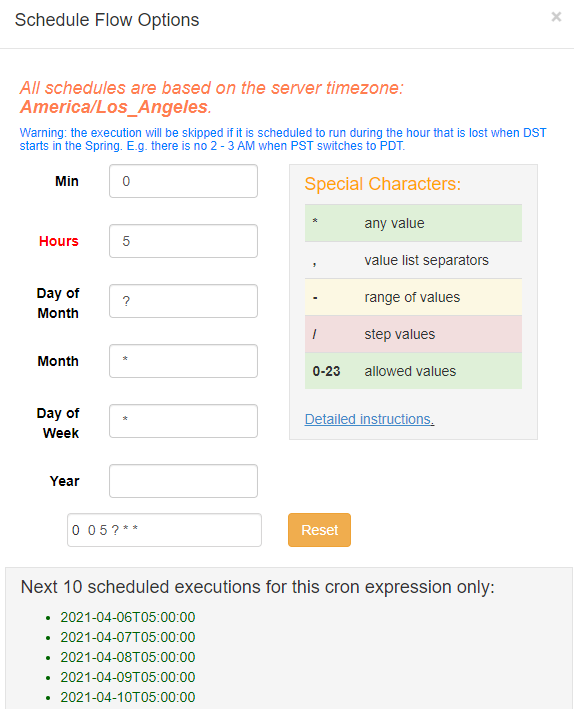

定时:点击schedule

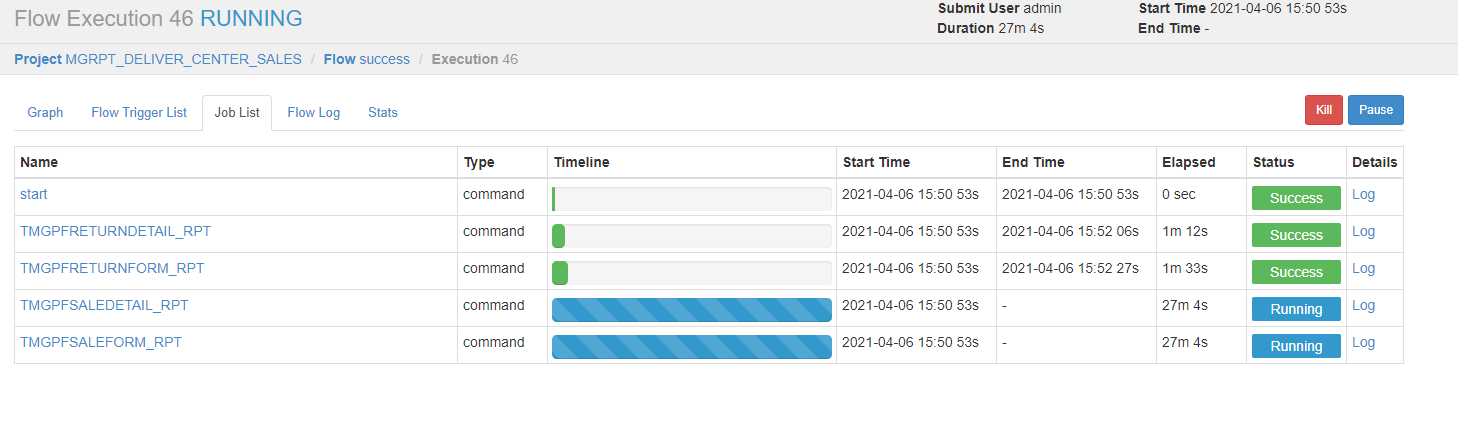

查看执行:

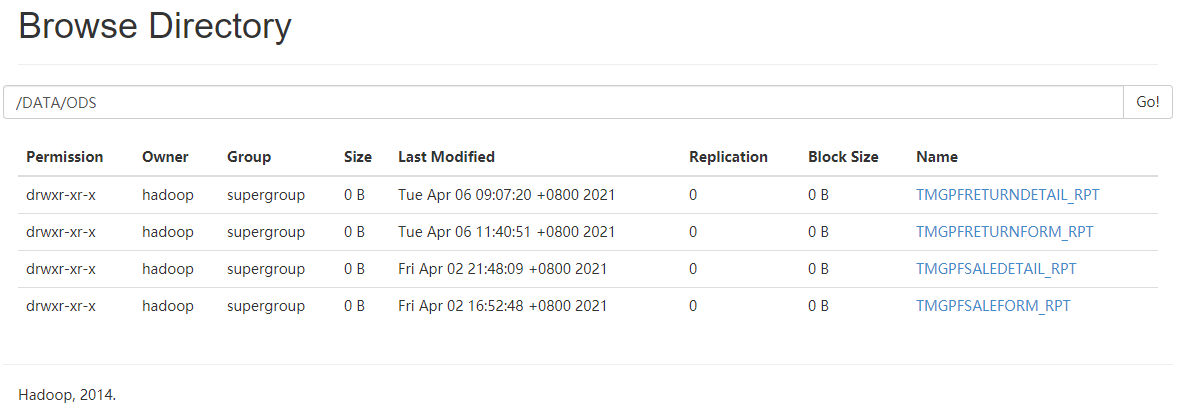

5.查看数据

进到hdfs查看:

[hadoop@oracletest01 ~]$ hdfs dfs -du -h /DATA/ODS/

21/04/06 16:30:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

3.6 G 7.1 G /DATA/ODS/TMGPFRETURNDETAIL_RPT

936.1 M 1.8 G /DATA/ODS/TMGPFRETURNFORM_RPT

73.2 G 146.4 G /DATA/ODS/TMGPFSALEDETAIL_RPT

15.0 G 30.1 G /DATA/ODS/TMGPFSALEFORM_RPT

进到hive查看数据

hive (ods)> select count(1) from TMGPFSALEFORM_RPT;

Query ID = hadoop_20210406150303_0b672820-3192-41a4-a5d1-f5abf46fdd31

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2021-04-06 15:06:35,356 Stage-1 map = 0%, reduce = 0%

2021-04-06 15:06:38,385 Stage-1 map = 100%, reduce = 0%

2021-04-06 15:07:38,635 Stage-1 map = 100%, reduce = 0%

2021-04-06 15:08:39,443 Stage-1 map = 100%, reduce = 0%

2021-04-06 15:09:21,386 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local130132522_0002

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 584384636688 HDFS Write: 0 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

_c0

56550606

Time taken: 167.557 seconds, Fetched: 1 row(s)

hive (ods)> show tables;

OK

tab_name

tmgpfreturndetail_rpt

tmgpfreturnform_rpt

tmgpfsaledetail_rpt

tmgpfsaleform_rpt

Time taken: 0.043 seconds, Fetched: 4 row(s)

浙公网安备 33010602011771号

浙公网安备 33010602011771号