RNN里的embeddings层 - 实践

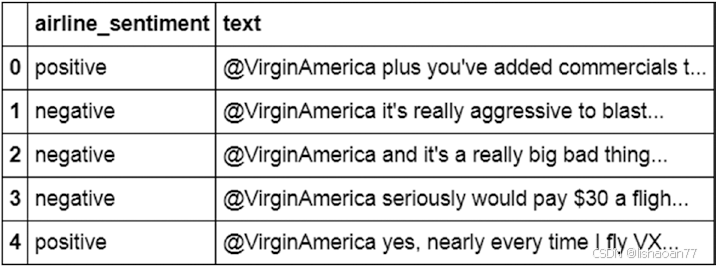

要看一下embedding如何工作,我们看一下预测航空公司客户sentiment的数据库,基于客户tweets:

先导入相关的包:就是1. 总

#import relevant packages

from keras.layers import Dense, Activation

from keras.layers.recurrent import SimpleRNN

from keras.models import Sequential

from keras.utils import to_categorical

from keras.layers.embeddings import Embedding

from sklearn.cross_validation import train_test_split

import numpy as np

import nltk

from nltk.corpus import stopwords

import re

import pandas as pd

#Let us go ahead and read the dataset:

t=pd.read_csv('/home/akishore/airline_sentiment.csv')

t.head()

import numpy as np

t['sentiment']=np.where(t['airline_sentiment']=="positive",1,0)

2. 因为文本有噪音,我们要预处理它,去除逗号并转换所有的词到小字字母:

def preprocess(text):

text=text.lower()

text=re.sub('[^0-9a-zA-Z]+',' ',text)

words = text.split()

#words2=[w for w in words if (w not in stop)]

#words3=[ps.stem(w) for w in words]

words4=' '.join(words)

return(words4)

t['text'] = t['text'].apply(preprocess)

3. 与前一节相似,大家转换词到索引值:

from collections import Counter

counts = Counter()

for i,review in enumerate(t['text']):

counts.update(review.split())

words = sorted(counts, key=counts.get, reverse=True)

words[:10]

![]()

chars = words

nb_chars = len(words)

word_to_int = {word: i for i, word in enumerate(words, 1)}

int_to_word = {i: word for i, word in enumerate(words, 1)}

word_to_int['the']

#3

int_to_word[3]

#the

4. 映射词到相应的索引:

mapped_reviews = []

for review in t['text']:

mapped_reviews.append([word_to_int[word] for word in review.

split()])

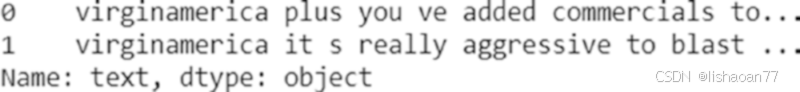

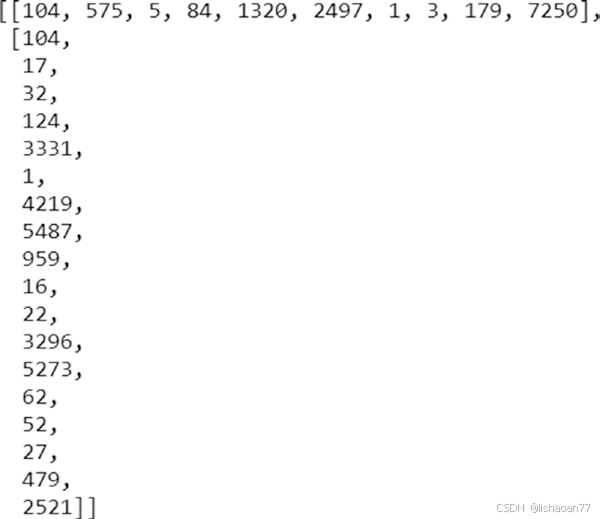

t.loc[0:1]['text']

mapped_reviews[0:2]

注意, virginamerica的索引在两个reviews里一样 (104)。

5. 初始化长度为200的零序列。注意我们选择200作为句子的长度,因为没有长度超过200个词的 reviews。而且,下面代码的第二部分保证所有的reviews长度小于200个词。所有的开始索引加零,最后的索引与词在 review里的呈现相对应:

sequence_length = 200

sequences = np.zeros((len(mapped_reviews), sequence_

length),dtype=int)

for i, row in enumerate(mapped_reviews):

review_arr = np.array(row)

sequences[i, -len(row):] = review_arr[-sequence_length:]

6. 我们分割数据集:

y=t['sentiment'].values

X_train, X_test, y_train, y_test = train_test_split(sequences, y,

test_size=0.30,random_state=10)

y_train2 = to_categorical(y_train)

y_test2 = to_categorical(y_test)

7. 有了数据集,我们创建模型。注意, embedding作为函数取唯一词的总数、降低的维表达的词、输入的词数作为输入:

top_words=12679

embedding_vecor_length=32

max_review_length=200

model = Sequential()

model.add(Embedding(top_words, embedding_vecor_length,

input_length=max_review_length))

model.add(SimpleRNN(1, return_sequences=False,unroll=True))

model.add(Dense(2, activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam',

metrics=['accuracy'])

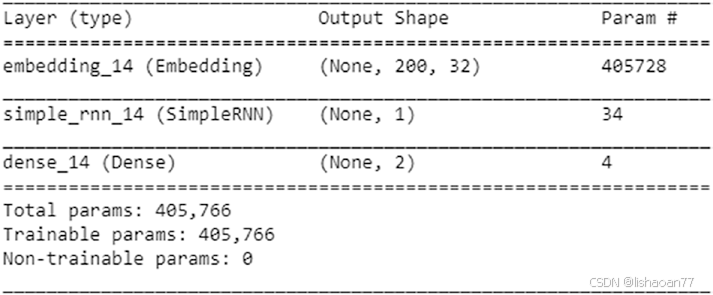

print(model.summary())

model.fit(X_train, y_train2, validation_data=(X_test, y_test2),

epochs=50, batch_size=1024)

我们看一下模型的汇总。数据集里共有12,679个唯一的词。embedding层保证我们用32-维空间来呈现词,因此embedding层有405,728个参数。

现在我们有32维embedded输入,每个输入与一个隐藏层单元连接—因此有 32个权重. 跟着32个权重有1个偏置。与这一层相应的最后权重是当前隐藏单元与上一隐藏单元相连的权重。因此有34个权重。注意,假如有一个输出来自 embedding 层,我们不需要在SimpleRNN层指明输入形状。一旦运行模型,输出分类准确率为 87%。

浙公网安备 33010602011771号

浙公网安备 33010602011771号