语音录入和DeepSeek大模型的调研笔记

整个的流程

- 使用麦克风录制音频文件

- 把录制生成的音频文件上传给SenseVoice,实现音频转文本的功能

- 把SenseVoice返回的结果文本作为输入给DeepSeek

- 输出DeepSeek的结果

DeepSeek的调用

DeepSeek如果调用官方在线的API,其实比较简单,用官方的示例即可完成最基本的交互,把代码中的api_key换成自己的key就可以了。

aiClient = OpenAI(api_key="这里换成自己的key", base_url="https://api.deepseek.com")

response = aiClient.chat.completions.create(

model="deepseek-chat",

messages=[

{"role": "system", "content": "你是一个精通百科知识的知识官。"},

{"role": "user", "content": inputText},

],

stream=False

)

print(response.choices[0].message.content)

SenseVoice的部署

SenseVoice的部署我是参照下面这篇文章的:

https://blog.csdn.net/weixin_49735366/article/details/145430989

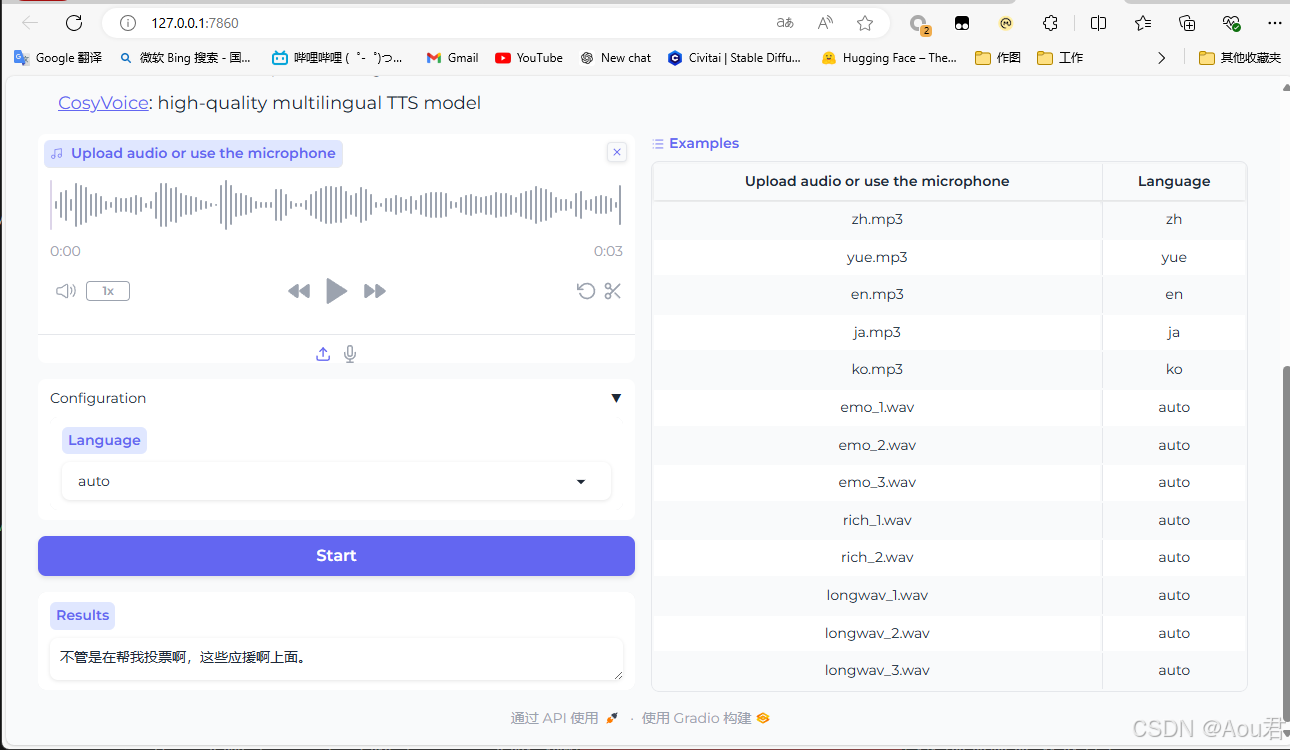

部署完成后可以通过http://127.0.0.1:7860/ 去访问,在网页上直接能完成音频文件和麦克风音频的转换。

页面上有对这个模型的介绍,这属于SenseVoice-Small模型,推荐30秒内的音频数据。

API调用

网页的底部,有个【通过API使用】的链接,点了以后提供了简单的API调用的示例:

from gradio_client import Client, handle_file

client = Client("http://127.0.0.1:7860/")

result = client.predict(

input_wav=handle_file('https://github.com/gradio-app/gradio/raw/main/test/test_files/audio_sample.wav'),

language="auto",

api_name="/model_inference"

)

print(result)

麦克风录制

上面的API调用的示例,也是文件上传的方式。所以流程串起来,需要使用麦克风录制音频,然后把音频文件上传。

下面是完整的客户端示例代码:

import io

import time

import wave

import requests

import speech_recognition as sr

from tqdm import tqdm

import re

from gradio_client import Client, handle_file

from openai import OpenAI

class AudioRecorder:

def __init__(self, rate=16000):

"""初始化录音器,设置采样率"""

self.rate = rate

self.recognizer = sr.Recognizer()

def record(self):

"""录制音频并返回音频数据"""

with sr.Microphone(sample_rate=self.rate) as source:

print("请在倒计时结束前说话", flush=True)

time.sleep(0.1) # 确保print输出

start_time = time.time()

audio = None

for _ in tqdm(range(20), desc="倒计时", unit="s"):

try:

# 录音,设置超时1秒以便更新进度条

audio = self.recognizer.listen(

source, timeout=1, phrase_time_limit=15

)

break # 录音成功,跳出循环

except sr.WaitTimeoutError:

# 超时未检测到语音

if time.time() - start_time > 20:

print("未检测到语音输入")

break

if audio is None:

print("未检测到语音输入")

return None

# 返回音频数据

audio_data = audio.get_wav_data()

return io.BytesIO(audio_data)

def save_wav(self, audio_data, filename="temp_output.wav"):

"""将音频数据保存为WAV文件"""

audio_data.seek(0)

with wave.open(filename, "wb") as wav_file:

nchannels = 1

sampwidth = 2 # 16-bit audio

framerate = self.rate # 采样率

comptype = "NONE"

compname = "not compressed"

audio_frames = audio_data.read()

wav_file.setnchannels(nchannels)

wav_file.setsampwidth(sampwidth)

wav_file.setframerate(framerate)

wav_file.setcomptype(comptype, compname)

wav_file.writeframes(audio_frames)

audio_data.seek(0)

def run(self):

"""运行录音功能并保存音频文件"""

audio_data = self.record()

filename = "temp_output.wav"

if audio_data:

self.save_wav(audio_data, filename)

return filename

class SenseVoice:

def __init__(self, api_url, emo=False):

"""初始化语音识别接口,设置API URL和情感识别开关"""

self.api_url = api_url

self.emo = emo

def _extract_second_bracket_content(self, raw_text):

"""提取文本中第二对尖括号内的内容"""

match = re.search(r"<[^<>]*><([^<>]*)>", raw_text)

if match:

return match.group(1)

return None

def _get_speech_text(self, audio_data):

"""将音频数据发送到API并获取识别结果"""

print("正在进行语音识别")

files = [("files", audio_data)]

data = {"keys": "audio1", "lang": "auto"}

response = requests.post(self.api_url, files=files, data=data)

if response.status_code == 200:

result_json = response.json()

if "result" in result_json and len(result_json["result"]) > 0:

if self.emo:

result = (

self._extract_second_bracket_content(

result_json["result"][0]["raw_text"]

)

+ "\n"

+ result_json["result"][0]["text"]

)

return result

else:

return result_json["result"][0]["text"]

else:

return "未识别到有效的文本"

else:

return f"请求失败,状态码: {response.status_code}"

def speech_to_text(self, audio_data):

"""调用API进行语音识别并返回结果"""

return self._get_speech_text(audio_data)

# 使用示例

if __name__ == "__main__":

recorder = AudioRecorder()

audio_data = recorder.run()

if audio_data:

client = Client("http://127.0.0.1:7860/")

inputText = client.predict(

input_wav=handle_file(audio_data),

language="zh",

api_name="/model_inference",

)

print(inputText)

aiClient = OpenAI(

api_key="这里换成你自己的key", base_url="https://api.deepseek.com"

)

response = aiClient.chat.completions.create(

model="deepseek-chat",

messages=[

{"role": "system", "content": "你是一个精通百科知识的知识官。"},

{"role": "user", "content": inputText},

],

stream=False,

)

print(response.choices[0].message.content)

上面的代码,依赖一些库文件,安装对应的库文件即可。

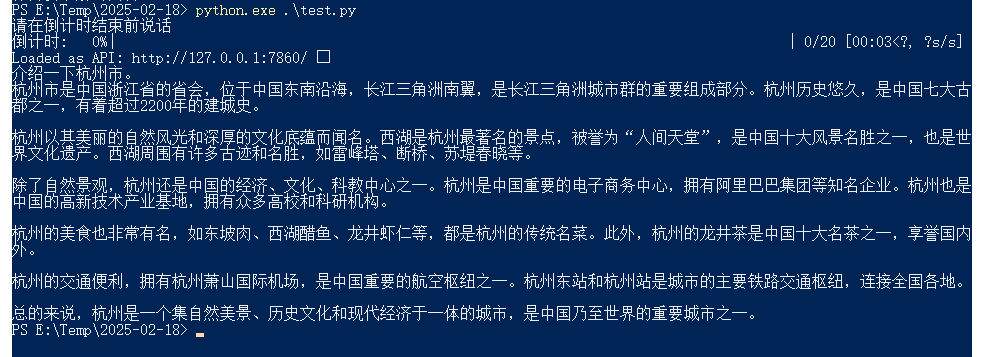

运行效果:

浙公网安备 33010602011771号

浙公网安备 33010602011771号