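Assignment 5

Task ①:

Requirements:

Become proficient with Selenium: locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements. Use the Selenium framework to scrape product information and images for one category of goods on JD.com (京东商城).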

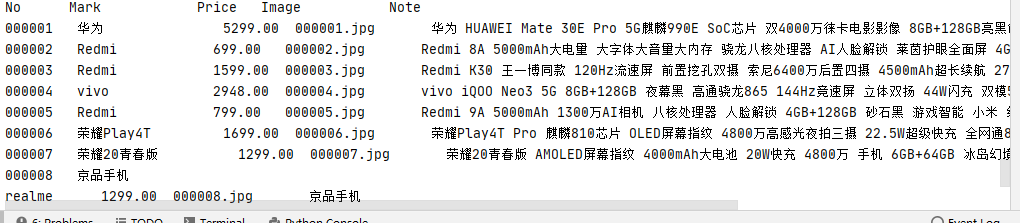

    import datetime
    import os
    import sqlite3
    import threading
    import time
    import urllib.parse
    import urllib.request

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys


    class MySpider:
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36"
        }
        # Folder where downloaded images are saved
        imagePath = "download"

        def startUp(self, url, key):
            # Initializing Chrome browser
            chrome_options = Options()
            chrome_options.add_argument('--headless')
            chrome_options.add_argument('--disable-gpu')
            self.driver = webdriver.Chrome(options=chrome_options)
            # Initializing variables
            self.threads = []
            self.No = 0
            self.imgNo = 0
            # Initializing database
            try:
                self.con = sqlite3.connect("phones.db")
                self.cursor = self.con.cursor()
                try:
                    # Drop the table if it already exists
                    self.cursor.execute("drop table phones")
                except Exception:
                    pass
                try:
                    # Create a fresh table
                    sql = ("create table phones (mNo varchar(32) primary key, mMark varchar(255), "
                           "mPrice varchar(32), mNote varchar(255), mFile varchar(255))")
                    self.cursor.execute(sql)
                except Exception:
                    pass
            except Exception as err:
                print(err)
            # Initializing images folder (create it, then empty it)
            try:
                if not os.path.exists(MySpider.imagePath):
                    os.mkdir(MySpider.imagePath)
                images = os.listdir(MySpider.imagePath)
                for img in images:
                    s = os.path.join(MySpider.imagePath, img)
                    os.remove(s)
            except Exception as err:
                print(err)
            # Open the site and submit the search keyword
            self.driver.get(url)
            keyInput = self.driver.find_element(By.ID, "key")
            keyInput.send_keys(key)
            keyInput.send_keys(Keys.ENTER)

        def closeUp(self):
            try:
                self.con.commit()
                self.con.close()
                self.driver.close()
            except Exception as err:
                print(err)

        # Insert one record
        def insertDB(self, mNo, mMark, mPrice, mNote, mFile):
            try:
                sql = "insert into phones (mNo,mMark,mPrice,mNote,mFile) values (?,?,?,?,?)"
                self.cursor.execute(sql, (mNo, mMark, mPrice, mNote, mFile))
            except Exception as err:
                print(err)

        def showDB(self):
            try:
                con = sqlite3.connect("phones.db")
                cursor = con.cursor()
                print("%-8s %-16s %-8s %-16s %s" % ("No", "Mark", "Price", "Image", "Note"))
                cursor.execute("select mNo,mMark,mPrice,mFile,mNote from phones order by mNo")
                rows = cursor.fetchall()
                for row in rows:
                    print("%-8s %-16s %-8s %-16s %s" % (row[0], row[1], row[2], row[3], row[4]))
                con.close()
            except Exception as err:
                print(err)

        def download(self, src1, src2, mFile):
            # Try src1 first and fall back to src2 if it fails
            data = None
            if src1:
                try:
                    req = urllib.request.Request(src1, headers=MySpider.headers)
                    resp = urllib.request.urlopen(req, timeout=10)
                    data = resp.read()
                except Exception:
                    pass
            if not data and src2:
                try:
                    req = urllib.request.Request(src2, headers=MySpider.headers)
                    resp = urllib.request.urlopen(req, timeout=10)
                    data = resp.read()
                except Exception:
                    pass
            if data:
                print("download begin", mFile)
                # os.path.join keeps the path portable across operating systems
                with open(os.path.join(MySpider.imagePath, mFile), "wb") as fobj:
                    fobj.write(data)
                print("download finish", mFile)

        def processSpider(self):
            try:
                time.sleep(1)
                print(self.driver.current_url)
                lis = self.driver.find_elements(By.XPATH, "//div[@id='J_goodsList']//li[@class='gl-item']")
                for li in lis:
                    # The image URL is either in the src or in the data-lazy-img attribute
                    try:
                        src1 = li.find_element(By.XPATH, ".//div[@class='p-img']//a//img").get_attribute("src")
                    except Exception:
                        src1 = ""
                    try:
                        src2 = li.find_element(By.XPATH, ".//div[@class='p-img']//a//img").get_attribute("data-lazy-img")
                    except Exception:
                        src2 = ""
                    try:
                        price = li.find_element(By.XPATH, ".//div[@class='p-price']//i").text
                    except Exception:
                        price = "0"
                    try:
                        note = li.find_element(By.XPATH, ".//div[@class='p-name p-name-type-2']//a//em").text
                        mark = note.split(" ")[0]
                        mark = mark.replace("爱心东东\n", "")
                        mark = mark.replace(",", "")
                        note = note.replace("爱心东东\n", "")
                        note = note.replace(",", "")
                    except Exception:
                        note = ""
                        mark = ""
                    self.No = self.No + 1
                    # Zero-pad the sequence number to six digits
                    no = str(self.No).zfill(6)
                    print(no, mark, price)
                    if src1:
                        src1 = urllib.parse.urljoin(self.driver.current_url, src1)
                        p = src1.rfind(".")
                        mFile = no + src1[p:]
                    elif src2:
                        src2 = urllib.parse.urljoin(self.driver.current_url, src2)
                        p = src2.rfind(".")
                        mFile = no + src2[p:]
                    if src1 or src2:
                        T = threading.Thread(target=self.download, args=(src1, src2, mFile))
                        T.daemon = False
                        T.start()
                        self.threads.append(T)
                    else:
                        mFile = ""
                    self.insertDB(no, mark, price, note, mFile)
                # Stop when the "next page" link is disabled; otherwise click it and continue
                try:
                    self.driver.find_element(By.XPATH, "//span[@class='p-num']//a[@class='pn-next disabled']")
                except Exception:
                    nextPage = self.driver.find_element(By.XPATH, "//span[@class='p-num']//a[@class='pn-next']")
                    time.sleep(10)
                    nextPage.click()
                    self.processSpider()
            except Exception as err:
                print(err)

        def executeSpider(self, url, key):
            starttime = datetime.datetime.now()
            print("Spider starting......")
            self.startUp(url, key)
            print("Spider processing......")
            self.processSpider()
            print("Spider closing......")
            self.closeUp()
            # Wait for all image downloads to finish
            for t in self.threads:
                t.join()
            print("Spider completed......")
            endtime = datetime.datetime.now()
            elapsed = (endtime - starttime).seconds
            print("Total ", elapsed, " seconds elapsed")


    url = "https://www.jd.com"
    spider = MySpider()
    spider.executeSpider(url, "手机")
    spider.showDB()
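The image handling in `processSpider` comes down to two decisions: which attribute to trust for the image URL, and how to name the saved file. A minimal standalone sketch of that logic, using only the standard library, might look like the following. The helper names `pick_image_url` and `image_file_name` are hypothetical and not part of the script, and the example URLs are made up.

```python
import os
from urllib.parse import urljoin, urlparse


def pick_image_url(page_url, src, lazy_src):
    """Return an absolute image URL, preferring src over data-lazy-img."""
    candidate = src or lazy_src
    if not candidate:
        return ""
    # urljoin resolves relative and protocol-relative URLs against the page URL
    return urljoin(page_url, candidate)


def image_file_name(seq, image_url):
    """Build a name such as 000001.jpg from a sequence number and an image URL."""
    # Taking the extension from the URL path ignores any query string
    ext = os.path.splitext(urlparse(image_url).path)[1]
    return str(seq).zfill(6) + ext


# Made-up example: an empty src and a protocol-relative lazy-load URL
url = pick_image_url("https://search.jd.com/Search", "", "//img.example.com/a/b.jpg")
print(url, image_file_name(1, url))
```

Unlike the `rfind(".")` slice used in the script, this variant parses the URL first, so a query string containing a dot would not end up in the file name.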

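Task ②:

Requirements:

Become proficient with Selenium: locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

Use Selenium together with MySQL database storage to scrape stock data for three boards: "沪深A股" (Shanghai and Shenzhen A-shares), "上证A股" (Shanghai A-shares), and "深证A股" (Shenzhen A-shares).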
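The three boards appear to be served from the same page and differ only in the URL fragment, so one way to cover all of them is to loop over a small mapping. The sketch below is only an assumption about how that could be organised: `hs_a_board` comes from the URL used later in this post, while `sh_a_board` and `sz_a_board` are assumed fragment names that should be checked against the live site. `BASE_URL`, `BOARDS`, and `board_urls` are hypothetical names.

```python
BASE_URL = "http://quote.eastmoney.com/center/gridlist.html"

# Board label -> URL fragment. Only hs_a_board appears in this post;
# the other two fragments are assumptions to verify against the site.
BOARDS = {
    "沪深A股": "hs_a_board",
    "上证A股": "sh_a_board",
    "深证A股": "sz_a_board",
}


def board_urls(base_url=BASE_URL, boards=BOARDS):
    """Return a list of (label, url) pairs, one per board."""
    return [(label, f"{base_url}#{fragment}") for label, fragment in boards.items()]


for label, url in board_urls():
    print(label, url)
```

Each URL could then be passed to the spider in turn, with the board label stored as an extra column so that rows from different boards can be told apart.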

    import sqlite3
    import time

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By


    class stocks:
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36"
        }

        def startUp(self, url):
            # Initializing Chrome browser
            chrome_options = Options()
            chrome_options.add_argument('--headless')
            chrome_options.add_argument('--disable-gpu')
            self.driver = webdriver.Chrome(options=chrome_options)
            # Stays False if the database cannot be opened
            self.opened = False
            try:
                # This script stores the rows in a local SQLite file
                self.con = sqlite3.connect("stocks.db")
                self.cursor = self.con.cursor()
                self.opened = True
                try:
                    # Drop the table if it already exists
                    self.cursor.execute("drop table stocks")
                except Exception:
                    pass
                try:
                    sql = ("create table stocks (mid varchar(32), mcode varchar(32) primary key, "
                           "mname varchar(32), mzxj varchar(32), mzdf varchar(32), mzde varchar(32), "
                           "mcjl varchar(32), mcje varchar(32), mzf varchar(32), mzg varchar(32), "
                           "mzd varchar(32), mjk varchar(32), mzs varchar(32))")
                    self.cursor.execute(sql)
                except Exception:
                    pass
            except Exception as err:
                print(err)
            # Open the target URL
            self.driver.get(url)

        def closeUp(self):
            try:
                self.con.commit()
                self.con.close()
                self.driver.close()
            except Exception as err:
                print(err)

        def processSpider(self):
            # Give the Ajax-loaded table a moment to render
            time.sleep(1)
            try:
                trs = self.driver.find_elements(
                    By.XPATH, "//div[@class='listview full']/table[@id='table_wrapper-table']/tbody/tr")
                for tr in trs:
                    idx = tr.find_element(By.XPATH, ".//td[position()=1]").text  # sequence number
                    code = tr.find_element(By.XPATH, ".//td[position()=2]/a").text  # stock code
                    name = tr.find_element(By.XPATH, ".//td[position()=3]/a").text  # stock name
                    zxj = tr.find_element(By.XPATH, ".//td[position()=5]/span").text  # latest price
                    zdf = tr.find_element(By.XPATH, ".//td[position()=6]/span").text  # change (%)
                    zde = tr.find_element(By.XPATH, ".//td[position()=7]/span").text  # change (amount)
                    cjl = tr.find_element(By.XPATH, ".//td[position()=8]").text  # volume
                    cje = tr.find_element(By.XPATH, ".//td[position()=9]").text  # turnover
                    zf = tr.find_element(By.XPATH, ".//td[position()=10]").text  # amplitude
                    zg = tr.find_element(By.XPATH, ".//td[position()=11]/span").text  # high
                    zd = tr.find_element(By.XPATH, ".//td[position()=12]/span").text  # low
                    jk = tr.find_element(By.XPATH, ".//td[position()=13]/span").text  # open
                    zs = tr.find_element(By.XPATH, ".//td[position()=14]").text  # previous close
                    # Print the scraped row
                    print(idx, code, name, zxj, zdf, zde, cjl, cje, zf, zg, zd, jk, zs)
                    # Store the row in the database
                    if self.opened:
                        self.cursor.execute(
                            'insert into stocks(mid, mcode, mname, mzxj, mzdf, mzde, mcjl, mcje, mzf, mzg, mzd, mjk, mzs) '
                            'values (?,?,?,?,?,?,?,?,?,?,?,?,?)',
                            (str(idx), str(code), str(name), str(zxj), str(zdf), str(zde), str(cjl),
                             str(cje), str(zf), str(zg), str(zd), str(jk), str(zs)))
            except Exception as err:
                print(err)

        def executeSpider(self, url):
            print("Spider starting......")
            self.startUp(url)
            print("Spider processing......")
            self.processSpider()
            print("Spider closing......")
            self.closeUp()


    url = "http://quote.eastmoney.com/center/gridlist.html#hs_a_board"
    spider = stocks()
    spider.executeSpider(url)
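Fixed `time.sleep` pauses either waste time or turn out too short when an Ajax table loads slowly. Selenium provides `WebDriverWait` for explicit waits; the idea behind it is simple polling, which the following standard-library sketch illustrates without needing a browser. `wait_until` is a hypothetical helper written for this illustration, not a Selenium API, and `rows_ready` is a stand-in for a real page check.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


# Stand-in for "the table rows have appeared": ready on the third poll
calls = {"n": 0}


def rows_ready():
    calls["n"] += 1
    return ["row"] if calls["n"] >= 3 else []


print(wait_until(rows_ready, timeout=2.0, interval=0.01))
```

With a real driver, the condition would be a function that looks up the table rows and returns the resulting list, so the loop ends as soon as at least one row exists.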

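Task ③:

Requirements:

Become proficient with Selenium: locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.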

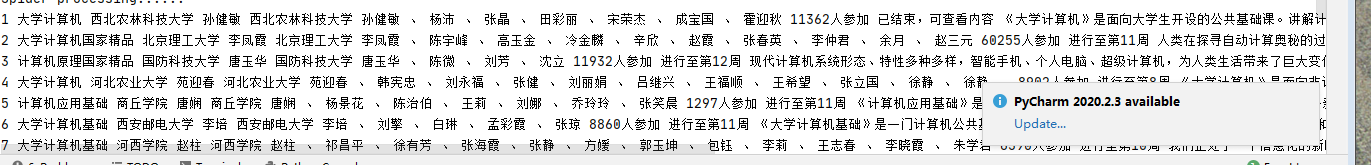

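Use Selenium together with MySQL to scrape course information from the China University MOOC site (中国大学MOOC): course ID, course name, school name, lead instructor, team members, number of participants, course progress, and course description.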

    import sqlite3
    import time

    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By


    class mooc:
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 "
                          "(KHTML, like Gecko) Chrome/85.0.4183.102 Safari/537.36"
        }

        def startUp(self, url):
            # Initializing Chrome browser
            chrome_options = Options()
            chrome_options.add_argument('--headless')
            chrome_options.add_argument('--disable-gpu')
            self.driver = webdriver.Chrome(options=chrome_options)
            # Stays False if the database cannot be opened
            self.opened = False
            try:
                # This script stores the rows in a local SQLite file
                self.con = sqlite3.connect("mooc.db")
                self.cursor = self.con.cursor()
                self.opened = True
                try:
                    # Drop the table if it already exists
                    self.cursor.execute("drop table mooc")
                except Exception:
                    pass
                try:
                    # cId is the primary key because it is the only column guaranteed to be
                    # unique; participant counts (cCount) can repeat across courses
                    sql = ("create table mooc (cId varchar(32) primary key, cCourse varchar(32), "
                           "cCollege varchar(32), cTeacher varchar(32), cTeam varchar(32), "
                           "cCount varchar(32), cProcess varchar(32), cBrief varchar(256))")
                    self.cursor.execute(sql)
                except Exception:
                    pass
            except Exception as err:
                print(err)
            # Open the target URL
            self.driver.get(url)
            # Running course counter
            self.count = 1

        def closeUp(self):
            if self.opened:
                self.con.commit()
                self.con.close()
                self.opened = False
            self.driver.close()
            print("closed")

        def processSpider(self):
            # Give the Ajax-loaded course list a moment to render
            time.sleep(1)
            try:
                lis = self.driver.find_elements(By.XPATH, "//div[@class='m-course-list']/div/div[@class]")
                for li in lis:
                    course = li.find_element(By.XPATH, ".//div[@class='t1 f-f0 f-cb first-row']").text
                    college = li.find_element(By.XPATH, ".//a[@class='t21 f-fc9']").text
                    teacher = li.find_element(By.XPATH, ".//div[@class='t2 f-fc3 f-nowrp f-f0']/a[position()=2]").text
                    team = li.find_element(By.XPATH, ".//div[@class='t2 f-fc3 f-nowrp f-f0']").text
                    count = li.find_element(
                        By.XPATH, ".//div[@class='t2 f-fc3 f-nowrp f-f0 margin-top0']/span[@class='hot']").text
                    process = li.find_element(By.XPATH, ".//span[@class='txt']").text
                    brief = li.find_element(By.XPATH, ".//span[@class='p5 brief f-ib f-f0 f-cb']").text
                    # Print the scraped row
                    print(self.count, course, college, teacher, team, count, process, brief)
                    # Store the row in the database
                    if self.opened:
                        self.cursor.execute(
                            "insert into mooc(cId, cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief) "
                            "values(?, ?, ?, ?, ?, ?, ?, ?)",
                            (str(self.count), course, college, teacher, team, str(count), process, brief))
                    self.count += 1
            except Exception as err:
                print(err)

        def executeSpider(self, url):
            print("Spider starting......")
            self.startUp(url)
            print("Spider processing......")
            self.processSpider()
            print("Spider closing......")
            self.closeUp()


    url = 'https://www.icourse163.org/search.htm?search=%E8%AE%A1%E7%AE%97%E6%9C%BA#/'
    spider = mooc()
    spider.executeSpider(url)
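When picking the primary key for the `mooc` table, it helps to remember that participant counts are not unique: two courses can show the same number, and SQLite will then reject the second insert. The sketch below demonstrates this with an in-memory database and made-up rows. The trimmed-down three-column table and the `insert_all` helper are illustrative only.

```python
import sqlite3


def insert_all(key_column, rows):
    """Create a small table keyed on key_column and return how many rows were stored."""
    con = sqlite3.connect(":memory:")
    columns = {"cId": "varchar(32)", "cCourse": "varchar(32)", "cCount": "varchar(32)"}
    defs = ", ".join(
        f"{name} {ctype}" + (" primary key" if name == key_column else "")
        for name, ctype in columns.items()
    )
    con.execute(f"create table mooc ({defs})")
    stored = 0
    for row in rows:
        try:
            con.execute("insert into mooc (cId, cCourse, cCount) values (?, ?, ?)", row)
            stored += 1
        except sqlite3.IntegrityError as err:
            # A duplicate primary-key value violates the uniqueness constraint
            print("rejected", row, "-", err)
    con.close()
    return stored


# Made-up sample rows: two courses with the same participant count
rows = [("1", "Course A", "1000"), ("2", "Course B", "1000")]
print(insert_all("cCount", rows))  # keyed on the count: the second row is rejected
print(insert_all("cId", rows))     # keyed on the running ID: both rows are stored
```

In the spider itself the insert sits inside a `try` block that wraps the whole loop, so a single rejected row would end the crawl of that page rather than just skipping the row.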

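Reflections: This assignment gave me a deeper understanding of the Selenium framework. Because the instructor provided a detailed walkthrough of the scraping in Task ①, the approach to Tasks ② and ③ became very clear.

Even so, I ran into quite a few problems in Task ③. On its first run the program failed with: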

Message: unknown error: net::ERR_CONNECTION_REFUSED

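When opening the MOOC page, the official site simply would not load, even though the network connection was working.

Windows network diagnostics suggested restarting the broadband connection, but that did not help. The problem was resolved after I disabled the virtual machine's network adapter.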
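For an error like `net::ERR_CONNECTION_REFUSED`, a quick check outside the browser can help tell whether the problem lies with Selenium or with the machine's network path. The sketch below tries a plain TCP connection using the standard library. `can_connect` is a hypothetical helper, and the host and port in the usage comment are assumptions based on the course URL in this post.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures
        return False


# Example call (assumed target: the HTTPS port of the site scraped in Task 3):
#     can_connect("www.icourse163.org", 443)
```

If this returns `False` while other sites are reachable, the cause is more likely a routing or adapter issue on the machine than anything in the scraping code.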

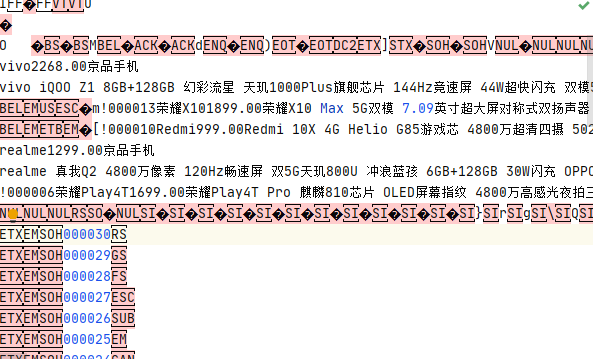

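While inspecting the database, I accidentally opened it as an SQL file, so all of the database file contents showed up as garbled text.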
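A `.db` file written by `sqlite3` is a binary file, so a text or SQL editor will typically display it as unreadable characters. One way to look inside is to query it through `sqlite3` itself. The sketch below lists every table together with its rows; `dump_database` is a hypothetical helper, and the demo builds a throwaway database with one made-up row rather than touching the files from this assignment.

```python
import os
import sqlite3
import tempfile


def dump_database(path):
    """Return {table_name: [rows]} for every table in the SQLite file at path."""
    con = sqlite3.connect(path)
    try:
        names = [r[0] for r in con.execute(
            "select name from sqlite_master where type='table' order by name")]
        # Table names come from sqlite_master, so quoting them is enough here
        return {n: con.execute(f'select * from "{n}"').fetchall() for n in names}
    finally:
        con.close()


# Demo on a throwaway database with one made-up row
demo_path = os.path.join(tempfile.mkdtemp(), "demo.db")
con = sqlite3.connect(demo_path)
con.execute("create table mooc (cId varchar(32) primary key, cCourse varchar(32))")
con.execute("insert into mooc values (?, ?)", ("1", "Sample course"))
con.commit()
con.close()
print(dump_database(demo_path))
```

Pointing `dump_database` at `mooc.db`, `stocks.db`, or `phones.db` would show the scraped rows as Python tuples, provided the file was not re-saved from the editor.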

