Fine-tuning Llama 3 8B with unsloth on Alibaba Cloud PAI

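I had previously run this on Colab by following someone else's example, but Colab rate-limited me and the limit still had not been lifted after two days. So I tried again on Alibaba Cloud PAI. PAI does not seem to be able to reach Hugging Face, so I changed the model- and dataset-loading parts of the original example to use files and datasets downloaded in advance.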
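A minimal sketch of preparing for offline use, assuming the model files have already been copied into a local "models" folder. It sets the Hugging Face offline environment variables (HF_HUB_OFFLINE, HF_DATASETS_OFFLINE, TRANSFORMERS_OFFLINE) before unsloth/transformers are imported, so the libraries should skip network lookups instead of waiting on connection timeouts. It also checks that the folder looks complete. The list of expected files (config.json, tokenizer_config.json, *.safetensors or *.bin weights) is an assumption based on the usual Hugging Face layout; adjust it to what your download actually contains.

```python
import os
from pathlib import Path

# Ask huggingface_hub / datasets / transformers not to make network calls.
# These must be set before those libraries are imported.
for var in ("HF_HUB_OFFLINE", "HF_DATASETS_OFFLINE", "TRANSFORMERS_OFFLINE"):
    os.environ[var] = "1"

# Files a Hugging Face-format model folder usually contains (assumption).
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def missing_model_files(model_dir: str) -> list[str]:
    """Return the expected files that are absent from model_dir."""
    path = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (path / name).is_file()]
    # Weights may be a single file or several shards, so look for any of them.
    has_weights = any(path.glob("*.safetensors")) or any(path.glob("*.bin"))
    if not has_weights:
        missing.append("*.safetensors or *.bin weights")
    return missing


print(missing_model_files("models"))  # an empty list means the folder looks complete
```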

First, install unsloth and its dependencies.

%%capture
import torch
major_version, minor_version = torch.cuda.get_device_capability()
# Install unsloth separately: Colab ships torch 2.2.1, which otherwise breaks the packages
!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"
if major_version >= 8:
    # Newer GPUs such as Ampere and Hopper (RTX 30xx, RTX 40xx, A100, H100, L40)
    !pip install --no-deps packaging ninja einops flash-attn xformers trl peft accelerate bitsandbytes
else:
    # Older GPUs (V100, Tesla T4, RTX 20xx)
    !pip install --no-deps trl peft accelerate bitsandbytes
    !pip install xformers==0.0.25  # the latest 0.0.26 is incompatible

Load the model. "models" is a folder in the same directory as this notebook that holds the pre-downloaded model files.

from unsloth import FastLanguageModel
import torch

max_seq_length = 2048  # Choose any! We auto support RoPE Scaling internally!
dtype = None  # None for auto detection. Float16 for Tesla T4, V100, Bfloat16 for Ampere+
load_in_4bit = True  # Use 4bit quantization to reduce memory usage. Can be False.

# 4bit pre quantized models we support for 4x faster downloading + no OOMs.
fourbit_models = [
    "unsloth/mistral-7b-bnb-4bit",
    "unsloth/mistral-7b-instruct-v0.2-bnb-4bit",
    "unsloth/llama-2-7b-bnb-4bit",
    "unsloth/gemma-7b-bnb-4bit",
    "unsloth/gemma-7b-it-bnb-4bit",  # Instruct version of Gemma 7b
    "unsloth/gemma-2b-bnb-4bit",
    "unsloth/gemma-2b-it-bnb-4bit",  # Instruct version of Gemma 2b
    "unsloth/llama-3-8b-bnb-4bit",  # [NEW] 15 Trillion token Llama-3
]  # More models at https://huggingface.co/unsloth

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "models",  # local folder instead of a Hugging Face model ID
    max_seq_length = max_seq_length,
    dtype = dtype,
    load_in_4bit = load_in_4bit,
    # token = "hf_...", # use one if using gated models like meta-llama/Llama-2-7b-hf
)

==((====))== Unsloth: Fast Llama patching release 2024.4

\\ /| GPU: NVIDIA A10. Max memory: 22.199 GB. Platform = Linux.

O^O/ \_/ \ Pytorch: 2.1.2+cu121. CUDA = 8.6. CUDA Toolkit = 12.1.

\ / Bfloat16 = TRUE. Xformers = 0.0.23.post1. FA = True.

"-____-" Free Apache license: http://github.com/unslothai/unsloth

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Test before fine-tuning

# 3. Test before fine-tuning
alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

FastLanguageModel.for_inference(model)  # switch the model to unsloth's inference mode
inputs = tokenizer(
    [
        alpaca_prompt.format(
            "请用中文回答",  # instruction ("Please answer in Chinese")
            "太阳还有五十亿年就没了,那到时候向日葵看哪呢?",  # input ("The sun will be gone in five billion years, so where will sunflowers face then?")
            "",  # output, left blank for the model to generate
        )
    ], return_tensors = "pt").to("cuda")

from transformers import TextStreamer
text_streamer = TextStreamer(tokenizer)
_ = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 128)

Setting `pad_token_id` to `eos_token_id`:128001 for open-end generation.

<|begin_of_text|>Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

请用中文回答

### Input:

太阳还有五十亿年就没了,那到时候向日葵看哪呢?

### Response:

向日葵还在朝向太阳的方向,太阳照亮了向日葵,向日葵照亮了我们。

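<|end_of_text|>

(In English, the question is: "The sun will be gone in five billion years, so where will sunflowers face then?" The model answers: "Sunflowers still face the direction of the sun; the sun lights up the sunflowers, and the sunflowers light up us.")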

Load the dataset. "traning_datasets" is a folder that is also in the same directory as this notebook.

# 4. Prepare the fine-tuning dataset
EOS_TOKEN = tokenizer.eos_token  # EOS_TOKEN must be appended

def formatting_prompts_func(examples):
    instructions = examples["instruction"]
    inputs = examples["input"]
    outputs = examples["output"]
    texts = []
    for instruction, input, output in zip(instructions, inputs, outputs):
        # EOS_TOKEN must be appended, otherwise generation never stops
        text = alpaca_prompt.format(instruction, input, output) + EOS_TOKEN
        texts.append(text)
    return { "text" : texts, }

from datasets import load_dataset
# Load from the local folder instead of the Hugging Face Hub
dataset = load_dataset("traning_datasets", split = "train")
dataset = dataset.map(formatting_prompts_func, batched = True,)
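To see what formatting_prompts_func produces, here is a standalone sketch that applies the same Alpaca template to a small batch in the column-oriented form that dataset.map(..., batched=True) passes in: each field is a list with one value per record. The two records are made up for illustration, and it assumes the files in "traning_datasets" use the instruction/input/output fields the function reads. "<|end_of_text|>" stands in for tokenizer.eos_token because that is the end token the Llama 3 tokenizer emitted in the output above.

```python
alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS_TOKEN = "<|end_of_text|>"  # stand-in for tokenizer.eos_token


def formatting_prompts_func(examples):
    # With batched=True every field is a list, one entry per record
    texts = []
    for instruction, inp, output in zip(
        examples["instruction"], examples["input"], examples["output"]
    ):
        # Appending EOS teaches the model where an answer ends
        texts.append(alpaca_prompt.format(instruction, inp, output) + EOS_TOKEN)
    return {"text": texts}


# Hypothetical two-record batch in the Alpaca layout
batch = {
    "instruction": ["Answer the question briefly.", "Translate the sentence into English."],
    "input": ["What is 2 + 3?", "你好"],
    "output": ["5", "Hello"],
}
result = formatting_prompts_func(batch)
print(result["text"][0])
```

Each training text is therefore the full prompt with the reference answer filled into the Response slot, followed by the end token.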

Set the training parameters

# 5. Set the training parameters
from trl import SFTTrainer
from transformers import TrainingArguments

model = FastLanguageModel.get_peft_model(
    model,
    r = 16,  # suggested values: 8, 16, 32, 64, 128
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",
                      "gate_proj", "up_proj", "down_proj",],
    lora_alpha = 16,
    lora_dropout = 0,
    bias = "none",
    use_gradient_checkpointing = "unsloth",  # gradient checkpointing; "unsloth" helps with long contexts
    random_state = 3407,
    use_rslora = False,
    loftq_config = None,
)

trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = dataset,
    dataset_text_field = "text",
    max_seq_length = max_seq_length,
    dataset_num_proc = 2,
    packing = False,  # setting this to True can make training up to 5x faster for short sequences
    args = TrainingArguments(
        per_device_train_batch_size = 2,
        gradient_accumulation_steps = 4,
        warmup_steps = 5,
        max_steps = 60,  # number of fine-tuning steps
        learning_rate = 2e-4,  # learning rate
        fp16 = not torch.cuda.is_bf16_supported(),
        bf16 = torch.cuda.is_bf16_supported(),
        logging_steps = 1,
        optim = "adamw_8bit",
        weight_decay = 0.01,
        lr_scheduler_type = "linear",
        seed = 3407,
        output_dir = "outputs",
    ),
)

Detected kernel version 4.19.24, which is below the recommended minimum of 5.5.0; this can cause the process to hang. It is recommended to upgrade the kernel to the minimum version or higher.
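A few quantities implied by the settings above can be checked by hand. This is a minimal sketch that assumes the Llama 3 8B dimensions reported in the GGUF metadata later in this notebook (32 layers, hidden size 4096, K/V projection width 1024, MLP width 14336). A LoRA adapter on a weight with in_features inputs and out_features outputs adds r * (in_features + out_features) trainable parameters. With per_device_train_batch_size = 2 and gradient_accumulation_steps = 4, each optimizer step sees 8 examples. The learning-rate function is written from the description of a linear schedule with warmup (ramp up over warmup_steps, then decay to zero at max_steps); it is an illustration, not code taken from transformers.

```python
# Llama 3 8B shapes (from the GGUF metadata in this notebook's log)
N_LAYERS = 32
HIDDEN = 4096
KV_DIM = 1024  # 8 KV heads x 128 head dim
FFN = 14336
R = 16  # LoRA rank passed to get_peft_model

# (in_features, out_features) of each target module in one decoder layer
TARGET_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, FFN),
    "up_proj": (HIDDEN, FFN),
    "down_proj": (FFN, HIDDEN),
}


def lora_trainable_params(r: int) -> int:
    # LoRA adds A (r x in) and B (out x r) for every adapted weight
    per_layer = sum(r * (fan_in + fan_out) for fan_in, fan_out in TARGET_SHAPES.values())
    return per_layer * N_LAYERS


def linear_lr(step: int, base_lr: float = 2e-4, warmup: int = 5, total: int = 60) -> float:
    # Linear warmup from 0 to base_lr, then linear decay to 0 at `total`
    if step < warmup:
        return base_lr * step / max(1, warmup)
    return base_lr * max(0.0, (total - step) / max(1, total - warmup))


effective_batch = 2 * 4  # per_device_train_batch_size * gradient_accumulation_steps
print(lora_trainable_params(R))  # 41943040
print(effective_batch * 60)  # examples processed over max_steps = 60
print([round(linear_lr(s), 6) for s in (0, 5, 30, 60)])
```

So roughly 42M parameters are trained, about half a percent of the 8B base model, and 60 steps cover 480 examples, which may be less than one pass over a larger dataset (consistent with "Epoch 0/1" in the progress output).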

Start training

# 6. Start training
trainer_stats = trainer.train()

Training progress: [60/60 02:09, Epoch 0/1]

<table border="1" class="dataframe">

Test the fine-tuned model

# 7. Test the fine-tuned model
FastLanguageModel.for_inference(model)  # switch back to inference mode after training
inputs = tokenizer(
    [
        alpaca_prompt.format(
            "只用中文回答问题",  # instruction ("Answer the question in Chinese only")
            "太阳还有五十亿年就没了,那到时候向日葵看哪呢?",  # input (same question as before)
            "",  # output, left blank for the model to generate
        )
    ], return_tensors = "pt").to("cuda")

from transformers import TextStreamer
text_streamer = TextStreamer(tokenizer)
_ = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 128)

Setting `pad_token_id` to `eos_token_id`:128001 for open-end generation.

<|begin_of_text|>Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

只用中文回答问题

### Input:

太阳还有五十亿年就没了,那到时候向日葵看哪呢?

### Response:

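向日葵是根据太阳的光线来生长和成长的,太阳没有了,向日葵也就没有了。<|end_of_text|>

(In English, the answer reads: "Sunflowers grow by following the sun's light; once the sun is gone, the sunflowers will be gone too.")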
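TextStreamer prints the whole sequence, prompt included. When only the model's answer is wanted (for example to compare outputs before and after fine-tuning), a small helper can keep just the text after the "### Response:" marker and drop the end-of-text token. This is a sketch that assumes the Alpaca template used here and the "<|end_of_text|>" token seen in the output above. In the notebook, the decoded string would come from calling tokenizer.batch_decode on the tensor returned by model.generate (run without a streamer).

```python
RESPONSE_MARKER = "### Response:"
EOS = "<|end_of_text|>"


def extract_response(decoded: str) -> str:
    """Return only the text generated after the Response marker."""
    # rpartition uses the last marker; if none is found, the whole string is kept
    _, _, answer = decoded.rpartition(RESPONSE_MARKER)
    # Cut at the first end-of-text token, if the model emitted one
    answer = answer.split(EOS, 1)[0]
    return answer.strip()


decoded = (
    "<|begin_of_text|>Below is an instruction ...\n\n"
    "### Instruction:\n只用中文回答问题\n\n"
    "### Input:\n太阳还有五十亿年就没了,那到时候向日葵看哪呢?\n\n"
    "### Response:\n向日葵是根据太阳的光线来生长和成长的,太阳没有了,向日葵也就没有了。<|end_of_text|>"
)
print(extract_response(decoded))
```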

Save the model

model.save_pretrained("lora_model") # Local saving

/opt/conda/lib/python3.10/site-packages/peft/utils/other.py:581: UserWarning: Unable to fetch remote file due to the following error (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /models/resolve/main/config.json (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x7f61a4747100>: Failed to establish a new connection: [Errno 101] Network is unreachable'))"), '(Request ID: 6310928e-26ed-47e2-b0eb-1794bfb94f9b)') - silently ignoring the lookup for the file config.json in models.

warnings.warn(

/opt/conda/lib/python3.10/site-packages/peft/utils/save_and_load.py:154: UserWarning: Could not find a config file in models - will assume that the vocabulary was not modified.

warnings.warn(
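Calling save_pretrained on the LoRA-wrapped model writes the adapter (an adapter_config.json plus the adapter weights), not a full copy of the model. The warnings above come from PEFT trying to look up the base model "models" on huggingface.co. A minimal sketch for checking what was saved, assuming the standard PEFT file name adapter_config.json and its r, lora_alpha, target_modules, and base_model_name_or_path fields. The last field records which base model the adapter expects, presumably the local "models" folder here, so that folder would need to be available again when the adapter is reloaded.

```python
import json
from pathlib import Path


def summarize_adapter(adapter_dir: str) -> dict:
    """Read the LoRA settings PEFT stored next to the adapter weights."""
    config_path = Path(adapter_dir) / "adapter_config.json"
    config = json.loads(config_path.read_text(encoding="utf-8"))
    return {
        "base_model": config.get("base_model_name_or_path"),
        "r": config.get("r"),
        "lora_alpha": config.get("lora_alpha"),
        "target_modules": sorted(config.get("target_modules") or []),
    }


if Path("lora_model/adapter_config.json").is_file():
    print(summarize_adapter("lora_model"))
```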

Merge the model and save it as a 4-bit quantized GGUF file

# 9. Merge the model and save it as a 4-bit (q4_k_m) GGUF file
model.save_pretrained_gguf("model", tokenizer, quantization_method = "q4_k_m")
# model.save_pretrained_merged("outputs", tokenizer, save_method = "merged_16bit",)  # merge and save as a 16-bit Hugging Face model
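The quantization log below lists each tensor's f16 size and its size after conversion. Most tensors become q4_K, while some ffn_down and attn_v tensors become q6_K, since Q4_K_M mixes the two types. The sizes follow from llama.cpp's k-quant block layouts: a q4_K block stores 256 weights in 144 bytes (4.5 bits per weight), and a q6_K block stores 256 weights in 210 bytes (6.5625 bits per weight). This short sketch reproduces the log's figures from the tensor shapes; f16 is treated as 512 bytes per 256 weights for uniformity.

```python
MIB = 1024 * 1024
BLOCK_SIZE = 256  # weights per quantization block

# Bytes needed to store one block of 256 weights in each format
BLOCK_BYTES = {"f16": 512, "q4_K": 144, "q6_K": 210}


def tensor_mib(n_rows: int, n_cols: int, qtype: str) -> float:
    """Size in MiB of an n_rows x n_cols tensor stored as qtype."""
    n_weights = n_rows * n_cols
    assert n_weights % BLOCK_SIZE == 0, "k-quants need whole 256-weight blocks"
    return n_weights // BLOCK_SIZE * BLOCK_BYTES[qtype] / MIB


# (name, rows, cols, target type) taken from lines of the log below
for name, rows, cols, qtype in [
    ("token_embd", 4096, 128256, "q4_K"),
    ("ffn_down", 14336, 4096, "q6_K"),
    ("attn_k", 4096, 1024, "q4_K"),
]:
    print(f"{name}: {tensor_mib(rows, cols, 'f16'):.2f} MiB -> {tensor_mib(rows, cols, qtype):.2f} MiB")
```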

make: Entering directory '/mnt/workspace/llama3/llama.cpp'

I ccache not found. Consider installing it for faster compilation.

I llama.cpp build info:

I UNAME_S: Linux

I UNAME_P: x86_64

I UNAME_M: x86_64

I CFLAGS: -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -DGGML_USE_LLAMAFILE -std=c11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wshadow -Wstrict-prototypes -Wpointer-arith -Wmissing-prototypes -Werror=implicit-int -Werror=implicit-function-declaration -pthread -march=native -mtune=native -Wdouble-promotion

I CXXFLAGS: -std=c++11 -fPIC -O3 -Wall -Wextra -Wpedantic -Wcast-qual -Wno-unused-function -Wmissing-declarations -Wmissing-noreturn -pthread -march=native -mtune=native -Wno-array-bounds -Wno-format-truncation -Wextra-semi -I. -Icommon -D_XOPEN_SOURCE=600 -D_GNU_SOURCE -DNDEBUG -DGGML_USE_LLAMAFILE

I NVCCFLAGS: -std=c++11 -O3

I LDFLAGS:

I CC: cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0

I CXX: c++ (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0

rm -vrf *.o tests/*.o *.so *.a *.dll benchmark-matmult lookup-create lookup-merge lookup-stats common/build-info.cpp *.dot *.gcno tests/*.gcno *.gcda tests/*.gcda *.gcov tests/*.gcov lcov-report gcovr-report main quantize quantize-stats perplexity imatrix embedding vdot q8dot train-text-from-scratch convert-llama2c-to-ggml simple batched batched-bench save-load-state server gguf gguf-split eval-callback llama-bench libllava.a llava-cli baby-llama beam-search retrieval speculative infill tokenize benchmark-matmult parallel finetune export-lora lookahead lookup passkey gritlm tests/test-c.o tests/test-autorelease tests/test-backend-ops tests/test-double-float tests/test-grad0 tests/test-grammar-integration tests/test-grammar-parser tests/test-json-schema-to-grammar tests/test-llama-grammar tests/test-model-load-cancel tests/test-opt tests/test-quantize-fns tests/test-quantize-perf tests/test-rope tests/test-sampling tests/test-tokenizer-0 tests/test-tokenizer-1-bpe tests/test-tokenizer-1-spm

rm -vrf ggml-cuda/*.o

find examples pocs -type f -name "*.o" -delete

make: Leaving directory '/mnt/workspace/llama3/llama.cpp'

Unsloth: Merging 4bit and LoRA weights to 16bit...

Unsloth: Will use up to 17.05 out of 29.44 RAM for saving.

91%|█████████ | 29/32 [00:01<00:00, 25.41it/s]We will save to Disk and not RAM now.

100%|██████████| 32/32 [00:01<00:00, 20.31it/s]

Unsloth: Saving tokenizer... Done.

Unsloth: Saving model... This might take 5 minutes for Llama-7b...

Done.

NOTICE: llama.cpp GGUF conversion is currently unstable, since llama.cpp is

undergoing some major bug fixes as at 5th of May 2024. This is not an Unsloth issue.

Please be patient - GGUF saving should still work, but might not work as well.

Unsloth: Converting llama model. Can use fast conversion = False.

Unsloth: We must use f16 for non Llama and Mistral models.

==((====))== Unsloth: Conversion from QLoRA to GGUF information

\\ /| [0] Installing llama.cpp will take 3 minutes.

O^O/ \_/ \ [1] Converting HF to GUUF 16bits will take 3 minutes.

\ / [2] Converting GGUF 16bits to q4_k_m will take 20 minutes.

"-____-" In total, you will have to wait around 26 minutes.

Unsloth: [0] Installing llama.cpp. This will take 3 minutes...

Unsloth: [1] Converting model at model into f16 GGUF format.

The output location will be ./model-unsloth.F16.gguf

This will take 3 minutes...

Unsloth: Conversion completed! Output location: ./model-unsloth.F16.gguf

Unsloth: [2] Converting GGUF 16bit into q4_k_m. This will take 20 minutes...

main: build = 2812 (1fd9c174)

main: built with cc (Ubuntu 11.4.0-1ubuntu1~22.04) 11.4.0 for x86_64-linux-gnu

main: quantizing './model-unsloth.F16.gguf' to './model-unsloth.Q4_K_M.gguf' as Q4_K_M using 16 threads

llama_model_loader: loaded meta data with 21 key-value pairs and 291 tensors from ./model-unsloth.F16.gguf (version GGUF V3 (latest))

llama_model_loader: Dumping metadata keys/values. Note: KV overrides do not apply in this output.

llama_model_loader: - kv 0: general.architecture str = llama

llama_model_loader: - kv 1: general.name str = model

llama_model_loader: - kv 2: llama.block_count u32 = 32

llama_model_loader: - kv 3: llama.context_length u32 = 8192

llama_model_loader: - kv 4: llama.embedding_length u32 = 4096

llama_model_loader: - kv 5: llama.feed_forward_length u32 = 14336

llama_model_loader: - kv 6: llama.attention.head_count u32 = 32

llama_model_loader: - kv 7: llama.attention.head_count_kv u32 = 8

llama_model_loader: - kv 8: llama.rope.freq_base f32 = 500000.000000

llama_model_loader: - kv 9: llama.attention.layer_norm_rms_epsilon f32 = 0.000010

llama_model_loader: - kv 10: general.file_type u32 = 1

llama_model_loader: - kv 11: llama.vocab_size u32 = 128256

llama_model_loader: - kv 12: llama.rope.dimension_count u32 = 128

llama_model_loader: - kv 13: tokenizer.ggml.model str = gpt2

llama_model_loader: - kv 14: tokenizer.ggml.pre str = llama-bpe

llama_model_loader: - kv 15: tokenizer.ggml.tokens arr[str,128256] = ["!", "\"", "#", "$", "%", "&", "'", ...

llama_model_loader: - kv 16: tokenizer.ggml.token_type arr[i32,128256] = [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, ...

llama_model_loader: - kv 17: tokenizer.ggml.merges arr[str,280147] = ["Ġ Ġ", "Ġ ĠĠĠ", "ĠĠ ĠĠ", "...

llama_model_loader: - kv 18: tokenizer.ggml.bos_token_id u32 = 128000

llama_model_loader: - kv 19: tokenizer.ggml.eos_token_id u32 = 128001

llama_model_loader: - kv 20: tokenizer.ggml.padding_token_id u32 = 128255

llama_model_loader: - type f32: 65 tensors

llama_model_loader: - type f16: 226 tensors

[ 1/ 291] token_embd.weight - [ 4096, 128256, 1, 1], type = f16, converting to q4_K .. size = 1002.00 MiB -> 281.81 MiB

[ 2/ 291] blk.0.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 3/ 291] blk.0.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 4/ 291] blk.0.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 5/ 291] blk.0.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 6/ 291] blk.0.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 7/ 291] blk.0.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 8/ 291] blk.0.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 9/ 291] blk.0.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 10/ 291] blk.0.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 11/ 291] blk.1.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 12/ 291] blk.1.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 13/ 291] blk.1.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 14/ 291] blk.1.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 15/ 291] blk.1.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 16/ 291] blk.1.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 17/ 291] blk.1.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 18/ 291] blk.1.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 19/ 291] blk.1.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 20/ 291] blk.2.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 21/ 291] blk.2.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 22/ 291] blk.2.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 23/ 291] blk.2.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 24/ 291] blk.2.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 25/ 291] blk.2.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 26/ 291] blk.2.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 27/ 291] blk.2.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 28/ 291] blk.2.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 29/ 291] blk.3.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 30/ 291] blk.3.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 31/ 291] blk.3.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 32/ 291] blk.3.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 33/ 291] blk.3.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 34/ 291] blk.3.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 35/ 291] blk.3.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 36/ 291] blk.3.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 37/ 291] blk.3.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 38/ 291] blk.4.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 39/ 291] blk.4.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 40/ 291] blk.4.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 41/ 291] blk.4.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 42/ 291] blk.4.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 43/ 291] blk.4.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 44/ 291] blk.4.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 45/ 291] blk.4.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 46/ 291] blk.4.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 47/ 291] blk.5.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 48/ 291] blk.5.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 49/ 291] blk.5.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 50/ 291] blk.5.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 51/ 291] blk.5.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 52/ 291] blk.5.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 53/ 291] blk.5.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 54/ 291] blk.5.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 55/ 291] blk.5.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 56/ 291] blk.6.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 57/ 291] blk.6.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 58/ 291] blk.6.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 59/ 291] blk.6.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 60/ 291] blk.6.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 61/ 291] blk.6.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 62/ 291] blk.6.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 63/ 291] blk.6.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 64/ 291] blk.6.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 65/ 291] blk.7.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 66/ 291] blk.7.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 67/ 291] blk.7.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 68/ 291] blk.7.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 69/ 291] blk.7.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 70/ 291] blk.7.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 71/ 291] blk.7.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 72/ 291] blk.7.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 73/ 291] blk.7.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 74/ 291] blk.8.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 75/ 291] blk.8.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 76/ 291] blk.8.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 77/ 291] blk.8.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 78/ 291] blk.8.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 79/ 291] blk.8.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 80/ 291] blk.8.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 81/ 291] blk.8.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 82/ 291] blk.8.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 83/ 291] blk.10.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 84/ 291] blk.10.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 85/ 291] blk.10.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 86/ 291] blk.10.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 87/ 291] blk.10.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 88/ 291] blk.10.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 89/ 291] blk.10.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 90/ 291] blk.10.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 91/ 291] blk.10.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 92/ 291] blk.11.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 93/ 291] blk.11.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 94/ 291] blk.11.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 95/ 291] blk.11.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 96/ 291] blk.11.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 97/ 291] blk.11.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 98/ 291] blk.11.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 99/ 291] blk.11.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 100/ 291] blk.11.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 101/ 291] blk.12.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 102/ 291] blk.12.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 103/ 291] blk.12.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 104/ 291] blk.12.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 105/ 291] blk.12.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 106/ 291] blk.12.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 107/ 291] blk.12.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 108/ 291] blk.12.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 109/ 291] blk.12.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 110/ 291] blk.13.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 111/ 291] blk.13.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 112/ 291] blk.13.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 113/ 291] blk.13.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 114/ 291] blk.13.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 115/ 291] blk.13.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 116/ 291] blk.13.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 117/ 291] blk.13.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 118/ 291] blk.13.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 119/ 291] blk.14.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 120/ 291] blk.14.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 121/ 291] blk.14.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 122/ 291] blk.14.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 123/ 291] blk.14.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 124/ 291] blk.14.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 125/ 291] blk.14.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 126/ 291] blk.14.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 127/ 291] blk.14.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 128/ 291] blk.15.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 129/ 291] blk.15.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 130/ 291] blk.15.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 131/ 291] blk.15.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 132/ 291] blk.15.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 133/ 291] blk.15.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 134/ 291] blk.15.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 135/ 291] blk.15.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 136/ 291] blk.15.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 137/ 291] blk.16.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 138/ 291] blk.16.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 139/ 291] blk.16.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 140/ 291] blk.16.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 141/ 291] blk.16.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 142/ 291] blk.16.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 143/ 291] blk.16.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 144/ 291] blk.16.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 145/ 291] blk.16.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 146/ 291] blk.17.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 147/ 291] blk.17.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 148/ 291] blk.17.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 149/ 291] blk.17.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 150/ 291] blk.17.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 151/ 291] blk.17.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 152/ 291] blk.17.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 153/ 291] blk.17.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 154/ 291] blk.17.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 155/ 291] blk.18.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 156/ 291] blk.18.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 157/ 291] blk.18.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 158/ 291] blk.18.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 159/ 291] blk.18.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 160/ 291] blk.18.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 161/ 291] blk.18.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 162/ 291] blk.18.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 163/ 291] blk.18.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 164/ 291] blk.19.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 165/ 291] blk.19.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 166/ 291] blk.19.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 167/ 291] blk.19.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 168/ 291] blk.19.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 169/ 291] blk.19.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 170/ 291] blk.19.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 171/ 291] blk.19.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 172/ 291] blk.19.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 173/ 291] blk.20.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 174/ 291] blk.20.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 175/ 291] blk.20.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 176/ 291] blk.20.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 177/ 291] blk.20.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 178/ 291] blk.9.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 179/ 291] blk.9.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 180/ 291] blk.9.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 181/ 291] blk.9.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 182/ 291] blk.9.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 183/ 291] blk.9.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 184/ 291] blk.9.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 185/ 291] blk.9.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 186/ 291] blk.9.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 187/ 291] blk.20.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 188/ 291] blk.20.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 189/ 291] blk.20.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 190/ 291] blk.20.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 191/ 291] blk.21.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 192/ 291] blk.21.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 193/ 291] blk.21.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 194/ 291] blk.21.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 195/ 291] blk.21.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 196/ 291] blk.21.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 197/ 291] blk.21.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 198/ 291] blk.21.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 199/ 291] blk.21.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 200/ 291] blk.22.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 201/ 291] blk.22.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 202/ 291] blk.22.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 203/ 291] blk.22.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 204/ 291] blk.22.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 205/ 291] blk.22.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 206/ 291] blk.22.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 207/ 291] blk.22.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 208/ 291] blk.22.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 209/ 291] blk.23.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 210/ 291] blk.23.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 211/ 291] blk.23.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 212/ 291] blk.23.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 213/ 291] blk.23.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 214/ 291] blk.23.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 215/ 291] blk.23.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 216/ 291] blk.23.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 217/ 291] blk.23.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 218/ 291] blk.24.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 219/ 291] blk.24.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 220/ 291] blk.24.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 221/ 291] blk.24.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 222/ 291] blk.24.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 223/ 291] blk.24.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 224/ 291] blk.24.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 225/ 291] blk.24.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 226/ 291] blk.24.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 227/ 291] blk.25.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 228/ 291] blk.25.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 229/ 291] blk.25.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 230/ 291] blk.25.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 231/ 291] blk.25.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 232/ 291] blk.25.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 233/ 291] blk.25.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 234/ 291] blk.25.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 235/ 291] blk.25.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 236/ 291] blk.26.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 237/ 291] blk.26.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 238/ 291] blk.26.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 239/ 291] blk.26.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 240/ 291] blk.26.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 241/ 291] blk.26.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 242/ 291] blk.26.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 243/ 291] blk.26.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 244/ 291] blk.26.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 245/ 291] blk.27.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 246/ 291] blk.27.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 247/ 291] blk.27.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 248/ 291] blk.27.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 249/ 291] blk.27.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 250/ 291] blk.27.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 251/ 291] blk.27.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 252/ 291] blk.27.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 253/ 291] blk.27.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 254/ 291] blk.28.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 255/ 291] blk.28.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 256/ 291] blk.28.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 257/ 291] blk.28.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 258/ 291] blk.28.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 259/ 291] blk.28.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 260/ 291] blk.28.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 261/ 291] blk.28.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 262/ 291] blk.28.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 263/ 291] blk.29.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 264/ 291] blk.29.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 265/ 291] blk.29.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 266/ 291] blk.29.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 267/ 291] blk.29.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 268/ 291] blk.29.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 269/ 291] blk.29.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 270/ 291] blk.29.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 271/ 291] blk.29.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 272/ 291] blk.30.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 273/ 291] blk.30.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 274/ 291] blk.30.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 275/ 291] blk.30.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 276/ 291] blk.30.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 277/ 291] blk.30.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 278/ 291] blk.30.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 279/ 291] blk.30.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 280/ 291] blk.30.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 281/ 291] blk.31.ffn_gate.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 282/ 291] blk.31.ffn_up.weight - [ 4096, 14336, 1, 1], type = f16, converting to q4_K .. size = 112.00 MiB -> 31.50 MiB

[ 283/ 291] blk.31.attn_k.weight - [ 4096, 1024, 1, 1], type = f16, converting to q4_K .. size = 8.00 MiB -> 2.25 MiB

[ 284/ 291] blk.31.attn_output.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 285/ 291] blk.31.attn_q.weight - [ 4096, 4096, 1, 1], type = f16, converting to q4_K .. size = 32.00 MiB -> 9.00 MiB

[ 286/ 291] blk.31.attn_v.weight - [ 4096, 1024, 1, 1], type = f16, converting to q6_K .. size = 8.00 MiB -> 3.28 MiB

[ 287/ 291] output.weight - [ 4096, 128256, 1, 1], type = f16, converting to q6_K .. size = 1002.00 MiB -> 410.98 MiB

[ 288/ 291] blk.31.attn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 289/ 291] blk.31.ffn_down.weight - [14336, 4096, 1, 1], type = f16, converting to q6_K .. size = 112.00 MiB -> 45.94 MiB

[ 290/ 291] blk.31.ffn_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

[ 291/ 291] output_norm.weight - [ 4096, 1, 1, 1], type = f32, size = 0.016 MB

llama_model_quantize_internal: model size = 15317.02 MB

llama_model_quantize_internal: quant size = 4685.30 MB

main: quantize time = 159911.51 ms

main: total time = 159911.51 ms

Unsloth: Conversion completed! Output location: ./model-unsloth.Q4_K_M.gguf
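The sizes in the quantize log follow from the tensor shapes and the k-quant block layouts. The sketch below reproduces the per-tensor figures and the overall compression ratio. It assumes llama.cpp's super-block sizes:

- q4_K: 144 bytes per 256 weights, which is 4.5 bits per weight.
- q6_K: 210 bytes per 256 weights, which is 6.5625 bits per weight.

The helper `tensor_mib` and the `SHAPES` table are hypothetical and are not part of Unsloth or llama.cpp. The log also shows that Q4_K_M does not use one type throughout. Most tensors go to q4_K, but `output.weight` and some `attn_v` and `ffn_down` tensors are promoted to q6_K. That promotion is why the average lands above 4.5 bits.

```python
# Sketch: reproduce the tensor sizes printed by llama.cpp's quantizer.
# Assumption: k-quant super-blocks hold 256 weights.
#   q4_K: 2 + 2 bytes (fp16 d, dmin) + 12 bytes packed sub-scales + 128 bytes 4-bit quants = 144
#   q6_K: 128 + 64 bytes (low/high bits of 6-bit quants) + 16 int8 scales + 2 bytes fp16 d = 210
QK_K = 256
BITS_PER_WEIGHT = {
    "f32": 32.0,
    "f16": 16.0,
    "q4_K": 144 * 8 / QK_K,  # 4.5
    "q6_K": 210 * 8 / QK_K,  # 6.5625
}
MIB = 1024 * 1024


def tensor_mib(shape, qtype):
    """Size in MiB of a tensor with the given shape stored as qtype (hypothetical helper)."""
    n_weights = 1
    for dim in shape:
        n_weights *= dim
    return n_weights * BITS_PER_WEIGHT[qtype] / 8 / MIB


# Shapes copied from the log above (Llama 3 8B: hidden 4096, FFN 14336, 8 KV heads x 128 = 1024).
SHAPES = {
    "ffn_down / ffn_gate / ffn_up": (4096, 14336),
    "attn_q / attn_output": (4096, 4096),
    "attn_k / attn_v": (4096, 1024),
    "output": (4096, 128256),
}

for name, shape in SHAPES.items():
    sizes = ", ".join(f"{t}={tensor_mib(shape, t):.2f}" for t in ("f16", "q4_K", "q6_K"))
    print(f"{name}: {sizes} MiB")

# Whole-model totals taken from the log ("model size" / "quant size").
model_size, quant_size = 15317.02, 4685.30
ratio = model_size / quant_size          # roughly 3.27x smaller
avg_bits = 16 * quant_size / model_size  # roughly 4.89 bits/weight vs. the f16 source (f32 norms ignored)
print(f"compression {ratio:.2f}x, about {avg_bits:.2f} bits per weight")
```

For example, an `ffn_up` tensor has 4096 × 14336 weights. At 4.5 bits per weight that comes to 31.50 MiB, which matches the log line for that tensor.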

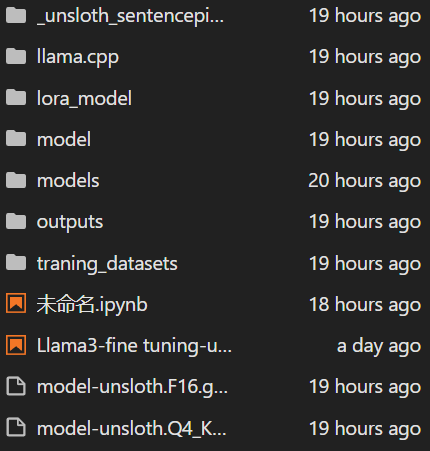
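Before loading the exported file elsewhere, you can check that it is well-formed by reading its fixed-size header. This is a minimal sketch. It assumes the little-endian GGUF v2/v3 header layout:

- a 4-byte magic `GGUF`
- a uint32 version
- a uint64 tensor count
- a uint64 metadata key/value count

The function name `read_gguf_header` is hypothetical. For this export, the tensor count would be expected to match the 291 tensors listed in the quantize log.

```python
import os
import struct


def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count) from a GGUF file header.

    Assumes the little-endian GGUF v2/v3 layout: magic b"GGUF", uint32 version,
    then a uint64 tensor count and a uint64 metadata key/value count.
    """
    with open(path, "rb") as f:
        header = f.read(24)  # 4 + 4 + 8 + 8 bytes
    if len(header) < 24 or header[:4] != b"GGUF":
        raise ValueError(f"{path} does not start with a GGUF header")
    _magic, version, tensor_count, kv_count = struct.unpack("<4sIQQ", header)
    if version < 2:
        # GGUF v1 stored these counts as uint32, so this parser does not handle it.
        raise ValueError(f"GGUF version {version} is not handled by this sketch")
    return version, tensor_count, kv_count


if __name__ == "__main__":
    path = "./model-unsloth.Q4_K_M.gguf"  # output location reported by Unsloth above
    if os.path.exists(path):
        version, n_tensors, n_kv = read_gguf_header(path)
        print(f"GGUF v{version}: {n_tensors} tensors, {n_kv} metadata entries")
        # The quantize log listed 291 tensors, so n_tensors is expected to be 291.
```

This reads only 24 bytes, so it returns immediately even for a multi-gigabyte model file.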

File directory:

浙公网安备 33010602011771号
