Scala spark RDD 转 DataFrame 转 libsvm 稀疏矩阵 KMeans 聚类算法

package main.scala.Alg

import main.scala.core.config.{sc, spark_session}

import org.apache.spark.ml.linalg.Vectors

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.DataFrame

import main.scala.core.config.spark_session.implicits._

import org.apache.spark.ml.clustering.{KMeans, KMeansModel}

import org.apache.spark.ml.evaluation.ClusteringEvaluator

import org.apache.spark.ml.linalg

object RddToDataframeToLibsvm {

def main(args: Array[String]): Unit = {

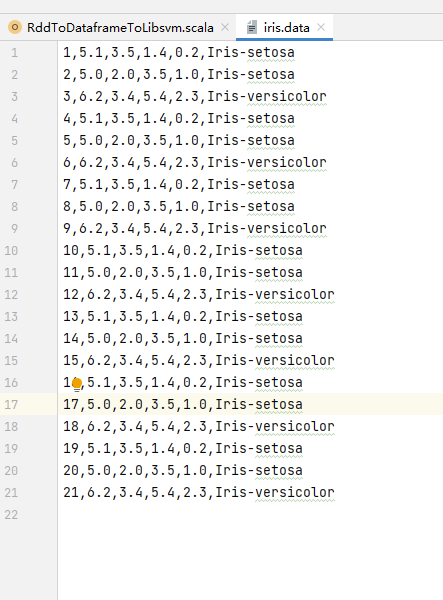

val path: String = "data/iris.data"

val rdd1:RDD[String] = sc.textFile(path)

val rdd2: RDD[Array[String]] = rdd1.map(line => {

line.split(",")

})

//val rlt: Seq[Any] = Seq(0.0, Vectors.sparse(3, Array(0, 2), Array(1.0, 3.0)))

//Seq(0.0, Vectors.sparse(3, Array(0, 2), Array(1.0, 3.0)))

val rdd3: RDD[(Int, linalg.Vector)] = rdd2.map(line=>{

//Seq(0.0, Vectors.sparse(line.length, Range(0,line.length-1).toArray,line.slice(0,4)))

(line(0).toInt, Vectors.sparse(line.length-2, Range(0,line.length-2).toArray,line.slice(1,5).map(_.toDouble)

))

})

// 假设已有DataFrame,其中包含特征列"features"和标签列"label"

// val df: DataFrame = spark_session.createDataFrame(Seq(

// (1.0, Vectors.dense(1.0, 2.0, 3.0)),

// (0.0, Vectors.sparse(3, Array(0, 2), Array(1.0, 3.0)))

// )).toDF("label", "features")

// rdd3.foreach(println)

val data:DataFrame = rdd3.toDF("label", "features")

data.show()

// Trains a k-means model.

val kmeans: KMeans = new KMeans().setK(2).setSeed(1L)

val model: KMeansModel = kmeans.fit(data)

// Make predictions

val predictions: DataFrame = model.transform(data)

println("分类数据点与类别")

predictions.foreach(Row => println(Row))

// Evaluate clustering by computing Silhouette score

val evaluator: ClusteringEvaluator = new ClusteringEvaluator()

val silhouette = evaluator.evaluate(predictions)

println(s"欧式距离 = $silhouette")

// Shows the result.

println("集群中心")

model.clusterCenters.foreach(println)

// $example off$

spark_session.stop()

}

}

数据情况

自动化学习。

浙公网安备 33010602011771号

浙公网安备 33010602011771号