Developing Spark Programs in IDEA (Scala), Part 3: Creating a New Project

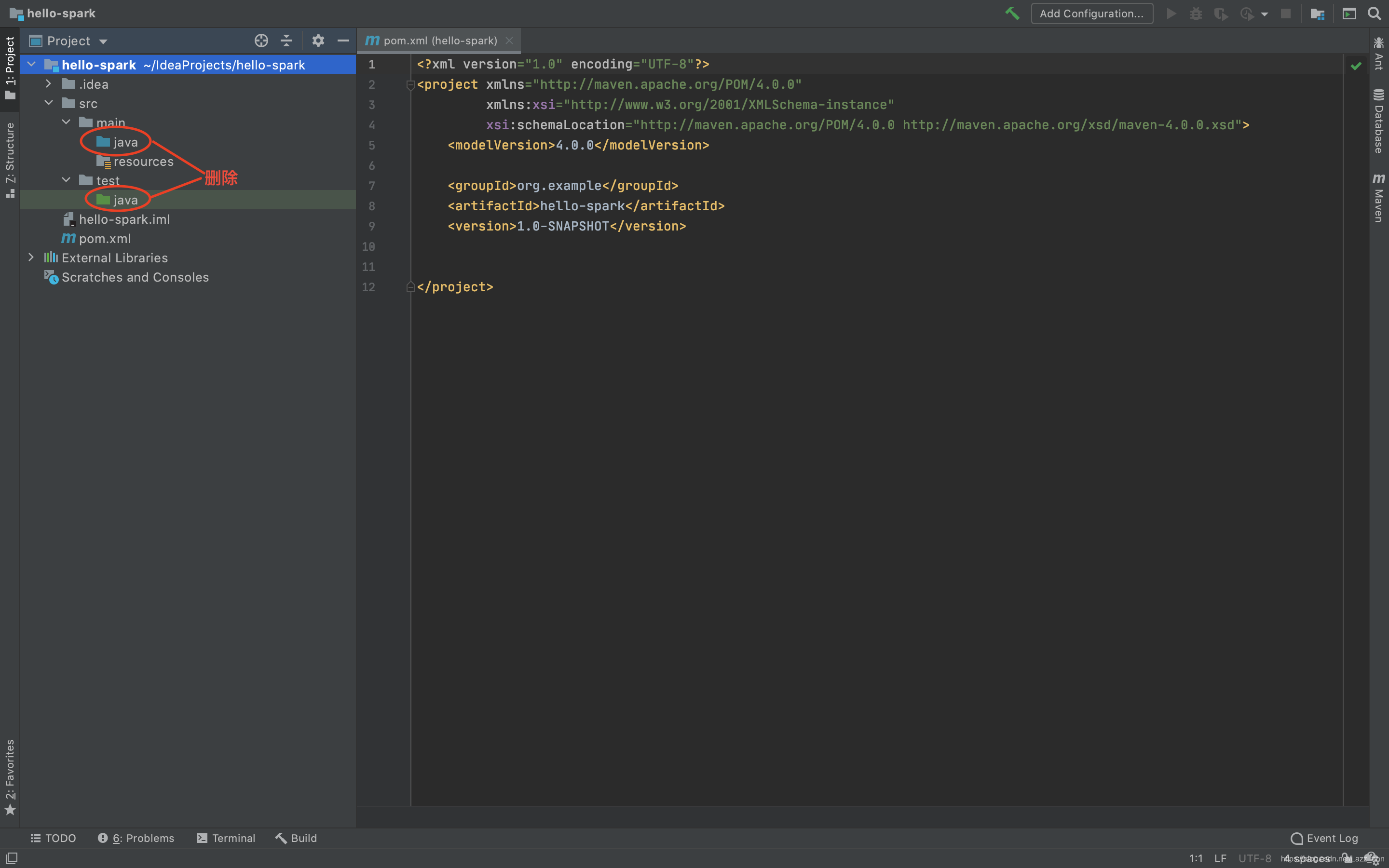

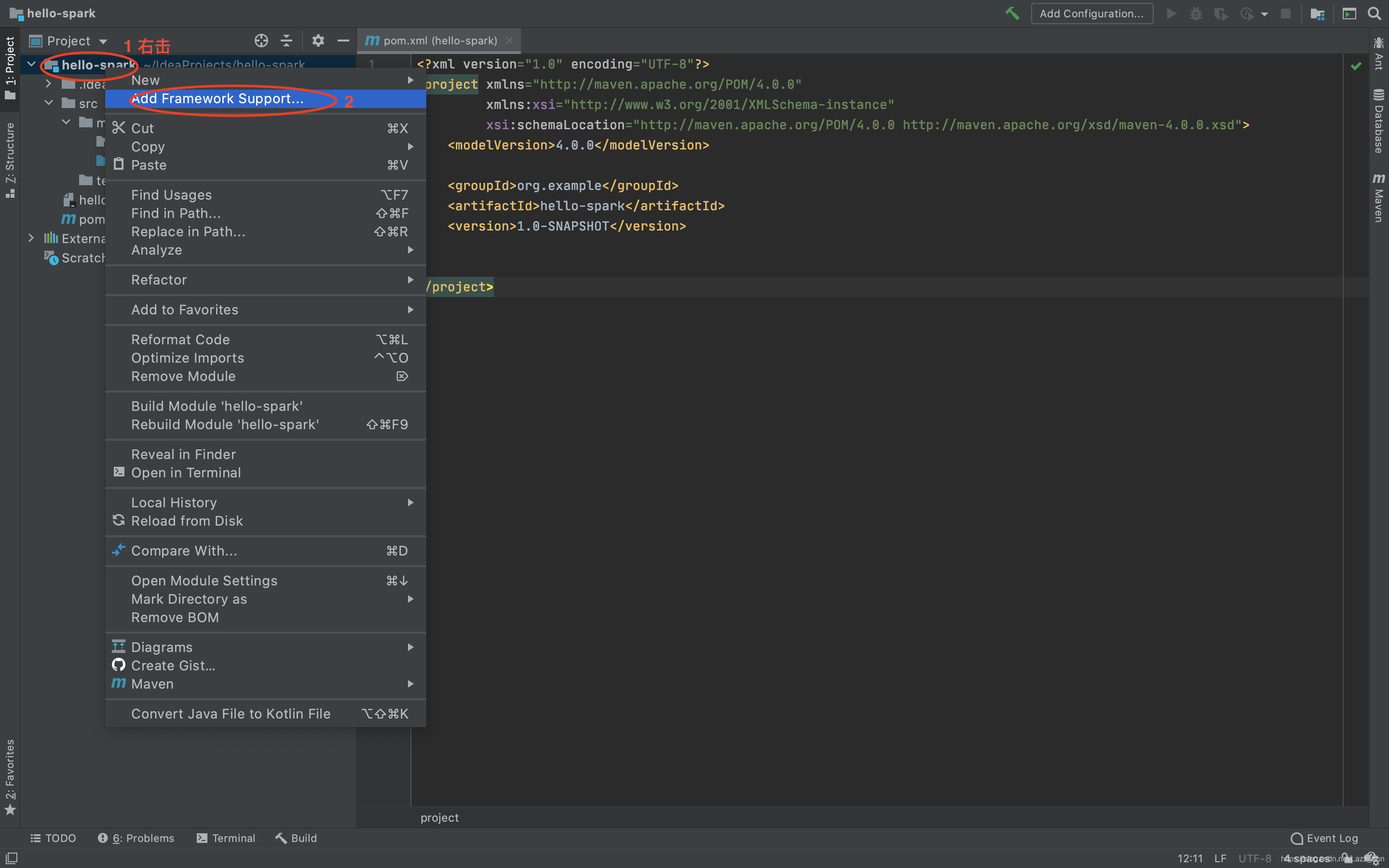

Create a new project

Add dependencies

Edit hello-spark/pom.xml and add:

<properties>
  <spark.version>2.3.4</spark.version>
  <!-- Scala binary version, used as the suffix of the Spark artifact IDs -->
  <scala.version>2.11</scala.version>
</properties>

<dependencies>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_${scala.version}</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_${scala.version}</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <!-- Not needed for now
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-hive_${scala.version}</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-streaming_${scala.version}</artifactId>
    <version>${spark.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-mllib_${scala.version}</artifactId>
    <version>${spark.version}</version>
  </dependency>
  -->
</dependencies>

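You can search the Maven repository to check which versions of each dependency are available.

Click the floating button, or the refresh button in the Maven tool window, to download the dependencies.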

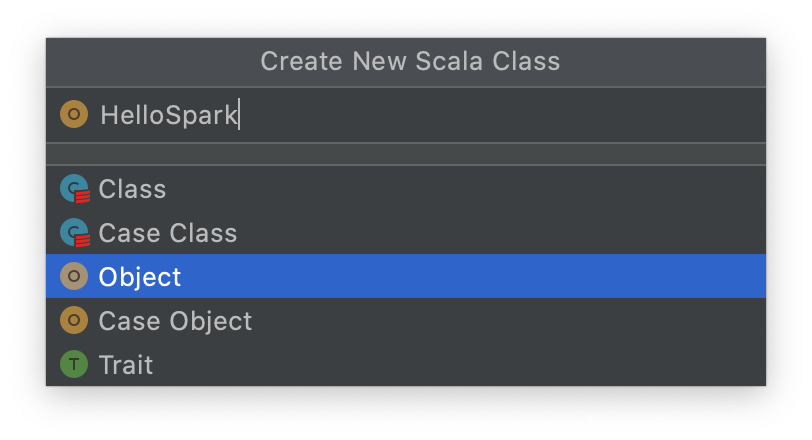
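Once the download finishes, a quick sanity check can confirm that the versions on the classpath match the ones declared in pom.xml. The following is a minimal sketch, not part of the original project: the object name `VersionCheck` and the helper `binaryVersion` are hypothetical, while `org.apache.spark.SPARK_VERSION` and `scala.util.Properties.versionNumberString` come from Spark and the Scala standard library.

```scala
import org.apache.spark.SPARK_VERSION

object VersionCheck {
  // Hypothetical helper: reduce a full Scala version such as "2.11.12"
  // to its binary version "2.11", the suffix used in the Spark artifact IDs.
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    // Spark version actually resolved by Maven; should equal spark.version in pom.xml
    println(s"Spark version: $SPARK_VERSION")
    // Scala library version on the classpath; its binary version should equal scala.version in pom.xml
    val scalaFull = scala.util.Properties.versionNumberString
    println(s"Scala version: $scalaFull (binary ${binaryVersion(scalaFull)})")
  }
}
```

If the printed Scala binary version differs from the `_2.11` suffix of the Spark artifacts, the project is likely to fail at runtime with binary incompatibility errors.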

Write the code

Edit hello-spark/src/main/scala/HelloSpark.scala:

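import org.apache.spark.sql.SparkSession

object HelloSpark {
  def main(args: Array[String]): Unit = {
    // Start a local Spark session (single worker thread)
    val spark = SparkSession.builder().master("local").getOrCreate()
    // Build ten (x, 2x) pairs and turn them into a two-column DataFrame
    val tup = (1 to 10).map(x => (x, x * 2))
    val df = spark.createDataFrame(tup)
    // Print the rows to the console
    df.show()
    // Release the session's resources
    spark.stop()
  }
}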

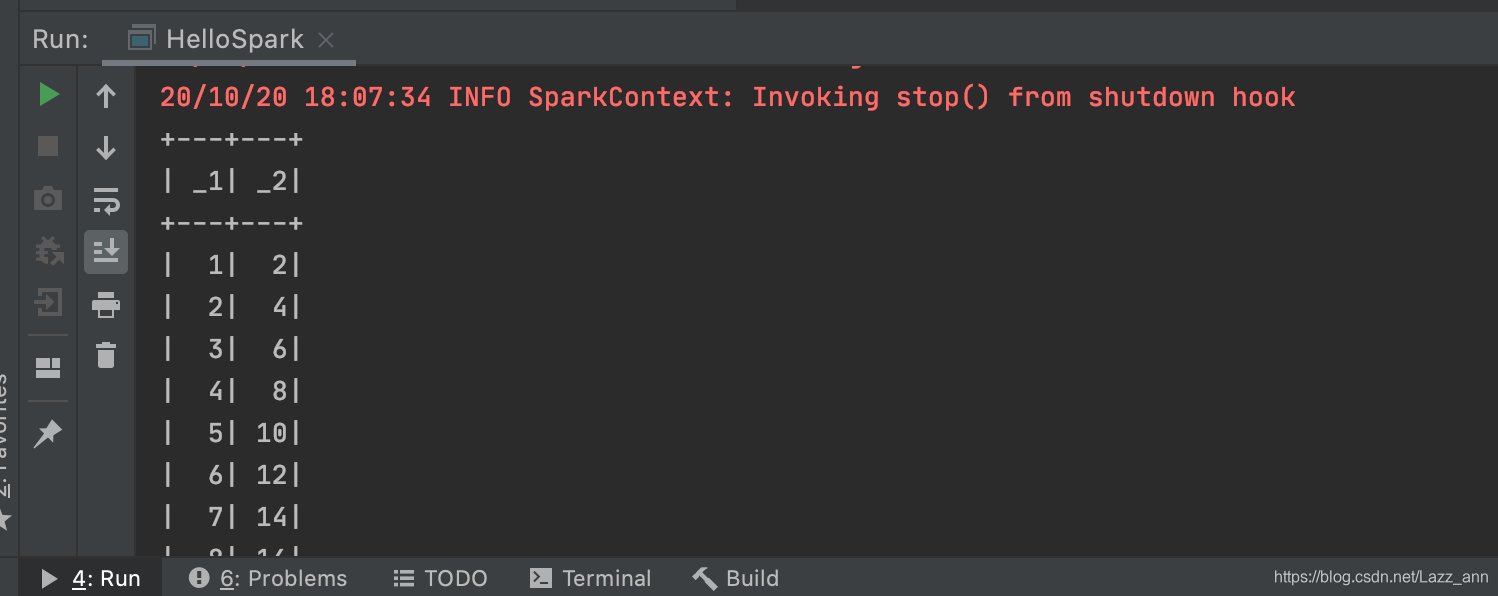

Run the program

Right-click the file and choose Run 'HelloSpark'. The output:

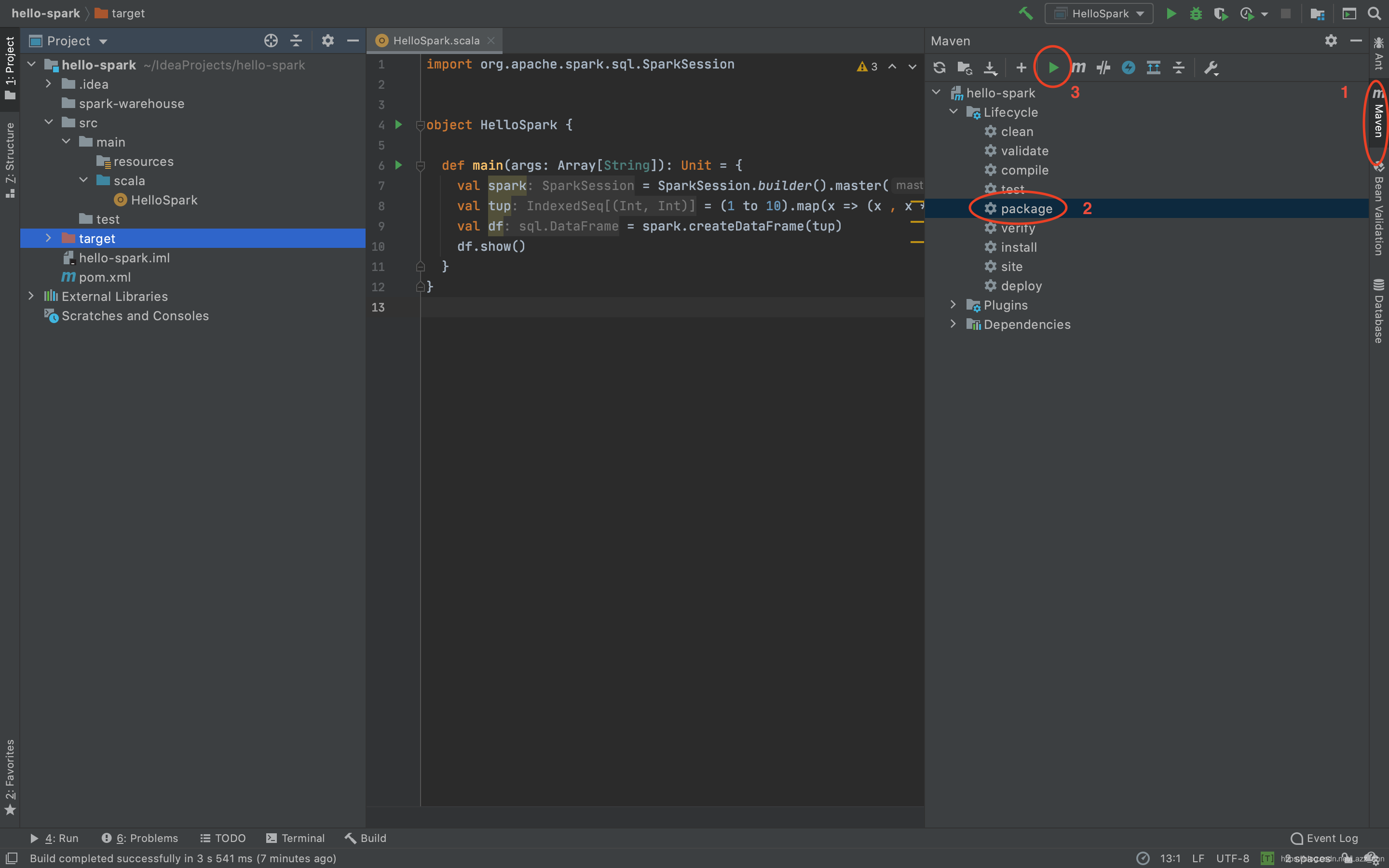
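Because the DataFrame is built from plain tuples, Spark names its columns `_1` and `_2` after the tuple fields. As a small variation, the sketch below assigns readable names with `toDF` and prints the inferred schema. The object name `HelloSparkNamed`, the helper `pairs`, and the column names `x` and `doubled` are illustrative choices, not part of the original project.

```scala
import org.apache.spark.sql.SparkSession

object HelloSparkNamed {
  // Hypothetical helper: the same (x, 2x) pairs as in HelloSpark, for 1..n
  def pairs(n: Int): Seq[(Int, Int)] = (1 to n).map(x => (x, x * 2))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local").getOrCreate()
    // toDF replaces the default tuple column names _1 and _2
    val df = spark.createDataFrame(pairs(10)).toDF("x", "doubled")
    // Show the column names and the types Spark inferred from the tuples
    df.printSchema()
    df.show()
    spark.stop()
  }
}
```

Named columns make later operations such as `select` or `filter` easier to read than references to `_1` and `_2`.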

Package

After packaging, the JAR is written to the hello-spark/target directory.

Test it:

spark-submit --class HelloSpark hello-spark-1.0-SNAPSHOT.jar
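One thing to be aware of when submitting: a master set in code through `master("local")` takes precedence over the `--master` flag of spark-submit, so the job above always runs locally. If the same JAR should also run on a cluster, one possible approach is to fall back to local mode only when no master was supplied from outside. This is a sketch under that assumption; the object name `HelloSparkSubmit`, the helper `withDefaultMaster`, and the application name are hypothetical.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

object HelloSparkSubmit {
  // Hypothetical helper: keep a master that was configured externally,
  // otherwise fall back to local mode using all available cores.
  def withDefaultMaster(conf: SparkConf, fallback: String = "local[*]"): SparkConf =
    if (conf.contains("spark.master")) conf else conf.setMaster(fallback)

  def main(args: Array[String]): Unit = {
    // new SparkConf() loads spark.* Java system properties,
    // which is where spark-submit places the value of --master
    val conf = withDefaultMaster(new SparkConf().setAppName("hello-spark"))
    val spark = SparkSession.builder().config(conf).getOrCreate()
    val df = spark.createDataFrame((1 to 10).map(x => (x, x * 2)))
    df.show()
    spark.stop()
  }
}
```

With this variant, running from IDEA still uses local mode, while a `--master` value passed to spark-submit is respected.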

