[Web Scraping Series] Scraping the Text of Romance of the Three Kingdoms with bs4 + requests

    import requests
    from bs4 import BeautifulSoup

    # Goal: scrape every chapter title and chapter body of the novel
    # "Romance of the Three Kingdoms" from
    # http://www.shicimingju.com/book/sanguoyanyi.html

    if __name__ == '__main__':
        # Fetch the index (table of contents) page.
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/94.0.4606.71 Safari/537.36'
        }
        url = 'http://www.shicimingju.com/book/sanguoyanyi.html'
        response = requests.get(url=url, headers=headers)
        # Use the encoding detected from the page body (not necessarily utf-8).
        response.encoding = response.apparent_encoding
        print(response.encoding)
        # Manual alternative: page_text.encode('iso-8859-1').decode('gbk')

        # Parse the chapter titles and detail-page URLs out of the index page.
        # 1. Instantiate a BeautifulSoup object and load the page source into it.
        soup = BeautifulSoup(response.text, 'lxml')
        li_list = soup.select('.book-mulu > ul > li')
        # The with-block closes the file even if a request fails midway.
        with open('./sanguo.txt', 'w', encoding='utf-8') as fp:
            for li in li_list:
                title = li.a.string
                detail_url = 'http://www.shicimingju.com' + li.a['href']
                # Request the detail page and parse out the chapter body.
                detail_page_res = requests.get(url=detail_url, headers=headers)
                detail_page_res.encoding = detail_page_res.apparent_encoding  # avoid garbled text
                detail_page_text = detail_page_res.text
                detail_soup = BeautifulSoup(detail_page_text, 'lxml')
                div_tg = detail_soup.find('div', class_='chapter_content')
                content = div_tg.text  # the chapter body
                fp.write(title + ':' + content + '\n\n')
                print(title, '爬取成功!!!')  # "scraped successfully"

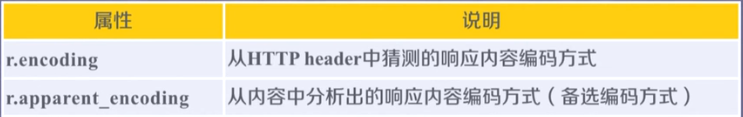
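The script builds each detail-page URL by concatenating the site root with the `href` of the chapter link, which only works while every `href` is a root-relative path. A minimal sketch of a more tolerant approach, using the standard library's `urljoin`, might look like the following. The helper name `build_detail_url` and the example `href` values are hypothetical; the actual links on the site may differ.

```python
from urllib.parse import urljoin

# The index page from the script above; links are resolved against it.
INDEX_URL = "http://www.shicimingju.com/book/sanguoyanyi.html"


def build_detail_url(href: str) -> str:
    """Hypothetical helper: resolve a chapter link against the index page URL."""
    return urljoin(INDEX_URL, href)


# The href values below are made up for illustration.
root_relative = build_detail_url("/book/sanguoyanyi/1.html")
page_relative = build_detail_url("sanguoyanyi/1.html")
absolute = build_detail_url("https://example.com/chapter/1.html")

print(root_relative)
print(page_relative)
print(absolute)
```

Root-relative and page-relative links resolve to the same chapter URL here, and an absolute link passes through unchanged, so the loop would not need to know which form the site uses.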

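`encoding` is taken from the `charset` field of the HTTP response header. If the header has no `charset` field, requests falls back to ISO-8859-1 for text content. ISO-8859-1 cannot represent Chinese characters, which is why the text comes out garbled.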
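The garbling can be reproduced without any network access. The sketch below uses only the standard library: it encodes a short Chinese string, decodes the bytes as ISO-8859-1 the way a wrong fallback would, and then reverses the mistake. The function names `decode_as_latin1` and `repair` are illustrative, not part of requests.

```python
title = "三国演义"


def decode_as_latin1(raw: bytes) -> str:
    """Decode bytes the way a wrong ISO-8859-1 fallback would (never raises)."""
    return raw.decode("iso-8859-1")


def repair(garbled: str, real_encoding: str) -> str:
    """Undo the wrong decode: recover the raw bytes, then decode them correctly."""
    return garbled.encode("iso-8859-1").decode(real_encoding)


raw_utf8 = title.encode("utf-8")
garbled = decode_as_latin1(raw_utf8)   # mojibake: one character per byte
repaired = repair(garbled, "utf-8")    # the original string again

print(repr(garbled))
print(repaired)
```

The same round trip works for a GBK page by passing `"gbk"` as `real_encoding`, which is what the commented-out `encode('iso-8859-1').decode('gbk')` line in the script does by hand.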

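`apparent_encoding` infers the encoding by analyzing the page content itself, so it is usually more accurate than `encoding` when the header does not declare a charset. When a page comes out garbled, assign the value of `apparent_encoding` to `encoding`.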
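One possible refinement is to override `encoding` only when the header gave no usable charset, and to trust the server otherwise. The sketch below is an assumption about how that rule could be written, not something the script above does. `use_detected_encoding` is a hypothetical helper, and the `SimpleNamespace` objects are stand-ins that mimic only the two attributes of a requests response that matter here.

```python
from types import SimpleNamespace


def use_detected_encoding(response):
    """Hypothetical helper: fall back to apparent_encoding only when the
    declared encoding is missing or is the ISO-8859-1 default."""
    declared = response.encoding
    if declared is None or declared.lower() == "iso-8859-1":
        response.encoding = response.apparent_encoding
    return response.encoding


# Stand-ins for response objects; a real one would come from requests.get(...).
no_charset = SimpleNamespace(encoding="ISO-8859-1", apparent_encoding="utf-8")
with_charset = SimpleNamespace(encoding="gbk", apparent_encoding="GB2312")

print(use_detected_encoding(no_charset))    # switches to the detected encoding
print(use_detected_encoding(with_charset))  # keeps the declared encoding
```

A limitation of this rule is that a server which genuinely declares ISO-8859-1 is treated the same as one that declares nothing, so the detected encoding wins in both cases.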

