Collecting logs with Flume and forwarding them to Kafka

Contents of netcat-to-kafka.conf:

    # Name
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Source
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = localhost
    a1.sources.r1.port = 44444

    # Channel
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Sink
    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.topic = niuma
    a1.sinks.k1.brokerList = hadoop111:9092,hadoop112:9092,hadoop113:9092
    a1.sinks.k1.kafka.flumeBatchSize = 20
    a1.sinks.k1.kafka.producer.acks = 1
    a1.sinks.k1.kafka.producer.linger.ms = 1

    # Bind
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1

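Change the topic (a1.sinks.k1.topic) and the broker list (a1.sinks.k1.brokerList) to match your own cluster; everything else can stay as it is.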
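If you would rather not edit the file by hand, the two values can be filled in from shell variables. The following is only a sketch: the variable names TOPIC, BROKERS and CONF_FILE are made up for illustration, and the output path defaults to the current directory, so adjust it to wherever your Flume conf directory lives.

```shell
#!/usr/bin/env bash
# Sketch: generate netcat-to-kafka.conf from two variables.
# TOPIC, BROKERS and CONF_FILE are illustrative names, not Flume settings.
TOPIC="niuma"
BROKERS="hadoop111:9092,hadoop112:9092,hadoop113:9092"
CONF_FILE="${CONF_FILE:-./netcat-to-kafka.conf}"

# Unquoted heredoc delimiter so that ${TOPIC} and ${BROKERS} are expanded.
cat > "$CONF_FILE" <<EOF
# Name
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Channel
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Sink
a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
a1.sinks.k1.topic = ${TOPIC}
a1.sinks.k1.brokerList = ${BROKERS}
a1.sinks.k1.kafka.flumeBatchSize = 20
a1.sinks.k1.kafka.producer.acks = 1
a1.sinks.k1.kafka.producer.linger.ms = 1

# Bind
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
EOF

echo "wrote $CONF_FILE"
```

Set TOPIC and BROKERS to your own values before running it, then point flume-ng at the generated file.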

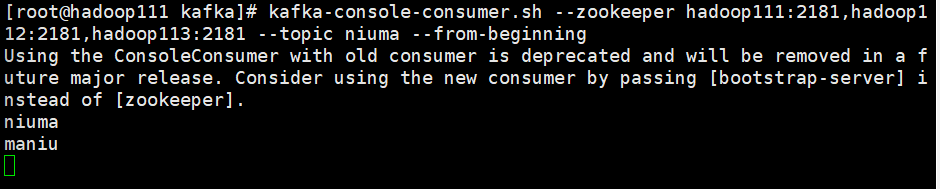

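Start a console consumer to pull data from the topic. The --zookeeper option shown here only works on older Kafka releases; on Kafka 2.0 and later it has been removed, so use --bootstrap-server hadoop111:9092 instead.

    kafka-console-consumer.sh --zookeeper hadoop111:2181,hadoop112:2181,hadoop113:2181 --topic niuma --from-beginning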

Start Flume, pointing -f at the configuration file created above and -n at the agent name used in it (a1):

    flume-ng agent -c conf -f /opt/bigdata/flume/conf/netcat-to-kafka.conf -n a1

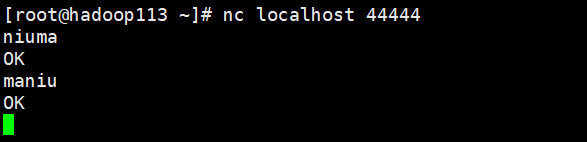

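Test

Send a few lines of text to the netcat source on localhost:44444 (for example with nc localhost 44444). The messages should then show up in the Kafka console consumer.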
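For a repeatable test, a few lines can be pushed to the netcat source from a small script. This is a rough sketch under some assumptions: the helper name gen_lines and the message text are invented for illustration, nc is assumed to be installed, and the -z and -w options are assumed to behave as in the common OpenBSD and traditional netcat variants.

```shell
#!/usr/bin/env bash
# Sketch: send three test lines to the Flume netcat source.
# HOST and PORT match a1.sources.r1.bind and a1.sources.r1.port in the config.
HOST=localhost
PORT=44444

# gen_lines is a made-up helper that prints three numbered test messages.
gen_lines() {
  for i in 1 2 3; do
    printf 'test message %d\n' "$i"
  done
}

# Only send if something is listening; otherwise explain what is missing.
if command -v nc >/dev/null 2>&1 && nc -z "$HOST" "$PORT" 2>/dev/null; then
  # -w 2 gives up after 2 idle seconds so the script does not hang.
  gen_lines | nc -w 2 "$HOST" "$PORT"
else
  echo "nothing is listening on $HOST:$PORT; start the Flume agent first" >&2
fi
```

Each line that reaches the source becomes one Flume event, so with the agent running the three messages should appear one by one in the console consumer.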
