A Simple Novel Crawler

A simple Python crawler

Preparation

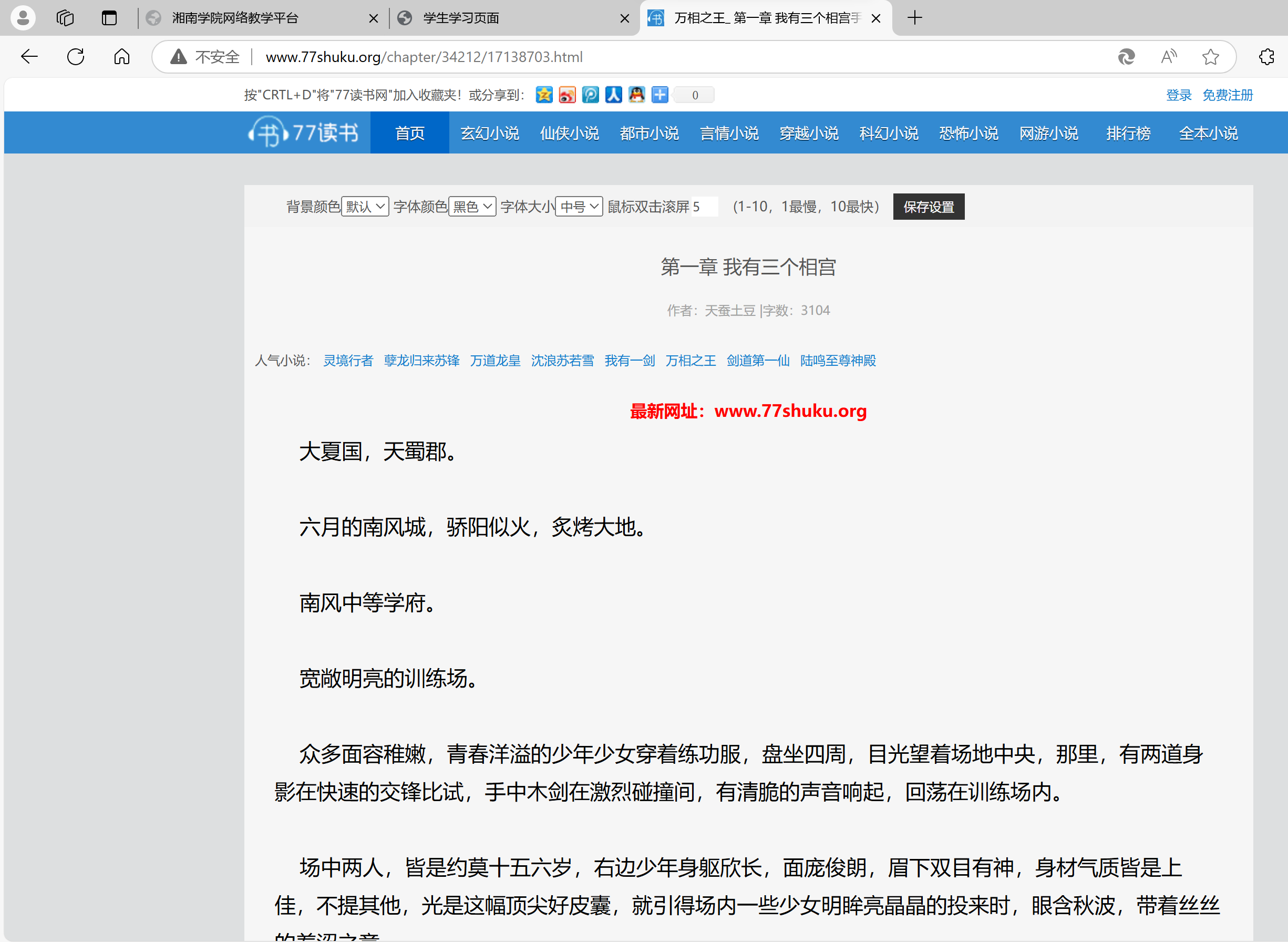

Target site: 77读书

First, pick a book: 《万相之王》

Copy the link to the chapter you want to start from: http://www.77shuku.org/chapter/34212/17138703.html
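Before sending any request, it can be worth checking that the copied link really looks like a chapter page. The sketch below assumes that chapter links on this site all follow the shape of the one example above (/chapter/, two numeric parts, then .html on www.77shuku.org); that pattern is inferred from a single link, and the helper name parse_chapter_url is made up for illustration.

```python
import re
from urllib.parse import urlparse

# Assumed pattern, inferred only from the single example link in this post
CHAPTER_PATH = re.compile(r"^/chapter/(\d+)/(\d+)\.html$")


def parse_chapter_url(url):
    """Return the two numeric path parts if url looks like a chapter page, else None."""
    parts = urlparse(url)
    if parts.netloc != "www.77shuku.org":
        return None
    match = CHAPTER_PATH.match(parts.path)
    if match is None:
        return None
    return match.group(1), match.group(2)


print(parse_chapter_url("http://www.77shuku.org/chapter/34212/17138703.html"))
# ('34212', '17138703')
```

A check like this could run right after the link is typed in, so a mistyped link is caught before any page is downloaded.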

Hands-on code

import requests
from lxml import etree
import re

cookies = {
    'clickbids': '96780',
    'Hm_lvt_a5ca352c842077802ed8d4e53d0a525b': '1734608332',
    'HMACCOUNT': '652E632A38AD9859',
    'Hm_lpvt_a5ca352c842077802ed8d4e53d0a525b': '1734608337',
}

headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7',
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Cache-Control': 'max-age=0',
    'Connection': 'keep-alive',
    # 'Cookie': 'clickbids=96780; Hm_lvt_a5ca352c842077802ed8d4e53d0a525b=1734608332; HMACCOUNT=652E632A38AD9859; Hm_lpvt_a5ca352c842077802ed8d4e53d0a525b=1734608337',
    'Referer': 'http://www.77shuku.org/novel/96780/',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36',
}

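# Ask for the link of the first chapter to crawl
Url = input('Enter the 77读书 chapter URL to crawl: ')

# Fetch the page and let requests guess the encoding from the content
response = requests.get(Url, cookies=cookies, headers=headers, verify=False)
response.encoding = response.apparent_encoding
et = etree.HTML(response.text)

# Link to the chapter fetched next, and the text nodes of the chapter body
url = et.xpath("//div[@class='control']/span/a[@class=' pre_z pmulu']/@href")
ret = et.xpath("//div[@class='page-body']/div[@class='page-content']/text()")

# Join the text nodes, then drop non-breaking spaces and line breaks
novel = ''.join(ret)
novel_clean = re.sub(r'[\xa0\r\n]+', '', novel)

# Strip a trailing single quote from each extracted link
url_clean = [re.sub(r"'$", '', p) for p in url]
zurl = url_clean[0]

# Save the first chapter
with open(f'new{0}.tex', 'w', encoding='utf-8') as file:
    file.write(novel_clean)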

# Number of chapters to crawl: i = 1, 2 here, so 3 chapters in total with the first one
for i in range(1, 3):
    response = requests.get(zurl, cookies=cookies, headers=headers, verify=False)
    response.encoding = response.apparent_encoding
    et = etree.HTML(response.text)

    url = et.xpath("//div[@class='control']/span/a[@class=' pre_z pmulu']/@href")
    ret = et.xpath("//div[@class='page-body']/div[@class='page-content']/text()")

    novel = ''.join(ret)
    novel_clean = re.sub(r'[\xa0\r\n]+', '', novel)

    # Strip a trailing single quote, then follow the first link on the next pass
    url_clean = [re.sub(r"'$", '', p) for p in url]
    zurl = url_clean[0]

    with open(f'new{i}.tex', 'w', encoding='utf-8') as file:
        file.write(novel_clean)

print("Success")
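The first request and the loop body repeat the same steps, so the script could be folded into a single loop. The sketch below only covers that control flow. fetch_chapter is a hypothetical callable standing in for the requests and lxml steps above, assumed to return the chapter text plus the link to follow next. crawl, pause and out_dir are likewise illustrative names, not part of the script.

```python
import os
import time


def crawl(start_url, fetch_chapter, chapters=3, pause=1.0, out_dir="."):
    """Save up to `chapters` pages, following the link each page hands back.

    fetch_chapter(url) is a hypothetical helper that returns (text, next_url);
    next_url is None when there is nothing further to follow.
    """
    saved = []
    url = start_url
    for i in range(chapters):
        text, next_url = fetch_chapter(url)
        path = os.path.join(out_dir, f"new{i}.tex")  # same file names as the script
        with open(path, "w", encoding="utf-8") as file:
            file.write(text)
        saved.append(path)
        if next_url is None:
            break  # no link found, stop instead of failing on an empty list
        url = next_url
        if i < chapters - 1:
            time.sleep(pause)  # short pause between requests
    return saved
```

Passing the fetch step in as a function keeps the loop testable without network access, and the None check avoids the IndexError that indexing an empty XPath result would raise.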

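Summary

The re calls in this script came from an AI assistant; I still need to study that library properly.

This crawler is very basic, and there is still a lot to learn.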
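As a starting point for studying the two re.sub calls used in the script, here is a small offline example that runs them on made-up input. The sample text nodes are invented, the sample link is the chapter link from this post with a quote appended, and the second pattern is read here as "a single quote at the end of the string".

```python
import re

# Made-up text nodes, padded with non-breaking spaces (\xa0) and line breaks
sample_nodes = [
    "\xa0\xa0\xa0\xa0First paragraph.\r\n",
    "\xa0\xa0\xa0\xa0Second paragraph.\n",
]
novel = "".join(sample_nodes)

# [\xa0\r\n]+ matches any run of non-breaking spaces, carriage returns and newlines;
# ordinary spaces are not in the character class, so they are kept
novel_clean = re.sub(r"[\xa0\r\n]+", "", novel)
print(novel_clean)  # First paragraph.Second paragraph.

# '$ matches a single quote at the end of the string; quotes elsewhere are untouched
sample_link = "http://www.77shuku.org/chapter/34212/17138703.html'"
print(re.sub(r"'$", "", sample_link))
```

Note that the first pattern also removes the breaks between paragraphs, so the saved text becomes one long line. Replacing the match with "\n" instead of an empty string would be one way to keep paragraphs apart.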

