Deploying a k8s Cluster with containerd

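According to the official announcements, Kubernetes deprecated dockershim in 1.20 and removed it in 1.24 (released in 2022). From 1.24 on, Docker Engine can no longer serve as the container runtime without an external adapter. For a new k8s deployment it therefore makes sense to switch the container runtime now and drop Docker.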

This article walks through deploying a production k8s cluster on bare metal with Ubuntu 20.04 and containerd.

The primary source on GitHub, the 1.20 changelog (Dockershim deprecation), says:

Docker as an underlying runtime is being deprecated. Docker-produced images will continue to work in your cluster with all runtimes, as they always have. The Kubernetes community has written a blog post about this in detail with a dedicated FAQ page for it.

What is dockershim, and why is k8s deprecating it?

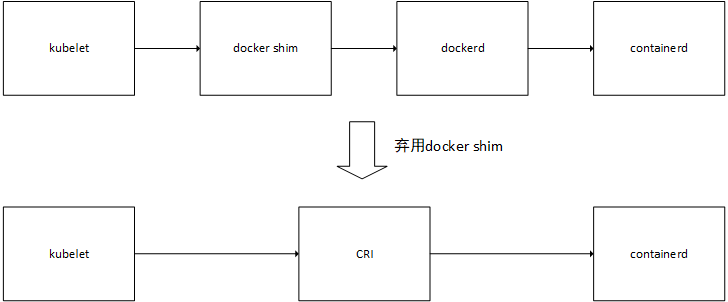

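dockershim is an adapter built into the kubelet that translates the kubelet's CRI calls into calls the Docker Engine understands. Without dockershim, k8s no longer has to deal with bugs in that translation layer or with Docker compatibility issues.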

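Using containerd directly as the runtime shortens the call chain from k8s to the containers, which should also make the setup more stable. The call chain is illustrated in the figure.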

For more background on how this came about, see this post: https://blog.csdn.net/u011563903/article/details/90743853

Deploying k8s

- kubeadm: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/
- cgroup driver: https://kubernetes.io/docs/tasks/administer-cluster/kubeadm/configure-cgroup-driver/
- runtime: https://kubernetes.io/docs/setup/production-environment/container-runtimes/#containerd
- swapoff, SELinux
- kubeadm deploy cluster: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/
- kubelet config: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/kubelet-integration/
- https://www.cnblogs.com/codenoob/p/14098539.html
- https://www.cnblogs.com/coolops/p/14576478.html

Preparation

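```bash
# Disable swap for the running system
sudo swapoff -a

# Comment out the swap entry in /etc/fstab so swap stays off after a reboot
sudo nano /etc/fstab
```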
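Editing `/etc/fstab` by hand means commenting out the swap line. The same edit can be scripted; below is a minimal sketch, assuming the usual fstab layout where the third field is the filesystem type (`swap`). The helpers `comment_swap_entries` and `swap_is_off` are hypothetical names, not part of any tool:

```bash
# Comment out every active swap entry in an fstab-format file (default /etc/fstab).
# A backup is written next to it as <file>.bak. Needs root for /etc/fstab.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "$fstab.bak" || return 1
  # Field 3 is the filesystem type; lines that are already comments are skipped.
  awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab.bak" > "$fstab"
}

# Succeed if no swap area is active: /proc/swaps holds a header line
# plus one line per active swap area.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

In a root shell (for example after `sudo -i`), run `comment_swap_entries` and then `swap_is_off && echo swap is off`; the original file is kept as `/etc/fstab.bak`.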

```
# Set up hosts: add this line to /etc/hosts on every node
192.168.197.13 cluster-endpoint
```
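Because the hosts entry is needed on every node, appending it idempotently avoids duplicate lines when the preparation is rerun. A sketch; `ensure_hosts_entry` is a hypothetical helper, and writing `/etc/hosts` requires root:

```bash
# Append "IP NAME" to a hosts-format file (default /etc/hosts) unless a line
# already maps IP to NAME, so rerunning the preparation adds no duplicates.
ensure_hosts_entry() {
  local ip="$1" name="$2" hosts="${3:-/etc/hosts}"
  if awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$hosts"; then
    return 0  # entry already present
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts"
}
```

From a root shell: `ensure_hosts_entry 192.168.197.13 cluster-endpoint`.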

Installing containerd

```bash
# Add Docker's apt repository (Aliyun mirror), which provides the containerd.io package
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

echo \
  "deb [arch=amd64 signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://mirrors.aliyun.com/docker-ce/linux/ubuntu/ \
  $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt update && sudo apt install containerd.io

# Load the kernel modules containerd needs, now and at boot
cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

# Set up the required sysctl params; these persist across reboots.
cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system
```
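After `sysctl --system`, it is worth confirming that the three settings are really active; the `net/bridge/*` keys only appear once `br_netfilter` is loaded. A minimal sketch that reads `/proc/sys` directly; `check_k8s_sysctls` is a hypothetical helper, and its optional root-prefix argument exists only so it can be tried against a fake directory tree:

```bash
# Check that the kernel settings required for containerd and kubeadm are 1.
# ROOT (first argument, default empty = the real /proc) is a path prefix
# that lets the check run against a copy of the /proc/sys tree.
check_k8s_sysctls() {
  local root="${1:-}" key file rc=0
  for key in net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables \
             net/ipv4/ip_forward; do
    file="$root/proc/sys/$key"
    if [ ! -r "$file" ]; then
      # The net/bridge keys only exist after br_netfilter is loaded.
      echo "missing: $key" >&2
      rc=1
    elif [ "$(cat "$file")" != "1" ]; then
      echo "not 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Run `check_k8s_sysctls && echo sysctl ok`; a `missing:` message usually means `modprobe br_netfilter` has not been run.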

```bash
# Generate the default containerd configuration
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml
```

Then edit `/etc/containerd/config.toml`. The excerpt below lists only the settings to change; the rest of the generated file stays as-is:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  # Use the systemd cgroup driver, matching the kubelet's cgroupDriver
  SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri"]
  # Pause image from the Aliyun mirror; ideally the tag matches the pause
  # image listed by "kubeadm config images list"
  sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2"
  #sandbox_image = "k8s.gcr.io/pause:3.2"

# Registry mirrors
[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://docker.mirrors.ustc.edu.cn"]
      #endpoint = ["https://registry-1.docker.io"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."*"]
      endpoint = ["https://docker.mirrors.ustc.edu.cn"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."192.168.196.110:5000"]
      endpoint = ["http://192.168.196.110:5000"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."192.168.196.110:5000".tls]
      insecure_skip_verify = true
```

Restart containerd to apply the changes:

```bash
sudo systemctl restart containerd
```
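Instead of editing `config.toml` by hand, the two most important changes can be applied with `sed` to the file generated by `containerd config default`. A sketch under assumptions: the generated file already contains a `SystemdCgroup = false` line (recent containerd releases emit one under the runc options; older ones may not, in which case add it by hand) and a `sandbox_image` line. `set_containerd_cri_options` is a hypothetical helper name:

```bash
# Apply two CRI settings to a config.toml produced by "containerd config default":
# systemd cgroups for runc, and a mirrored sandbox (pause) image.
# A backup of the original file is kept as <file>.bak.
set_containerd_cri_options() {
  local image="$1" cfg="${2:-/etc/containerd/config.toml}"
  grep -q 'SystemdCgroup' "$cfg" ||
    echo "warning: no SystemdCgroup line in $cfg, add it by hand" >&2
  sed -i.bak \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e "s#sandbox_image = \"[^\"]*\"#sandbox_image = \"$image\"#" \
    "$cfg"
}
```

Since `sudo` does not see shell functions, pass the definition along: `sudo bash -c "$(declare -f set_containerd_cri_options); set_containerd_cri_options registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2"`, then restart containerd. The registry mirror tables still need to be added by hand.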

Installing kubeadm

```bash
# Kernel module and sysctl settings required by kubeadm
# (the containerd step above already covers these; repeating them is harmless)
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sudo sysctl --system

# Add the Kubernetes apt repository (Aliyun mirror)
sudo apt-get update
sudo apt-get install -y apt-transport-https ca-certificates curl
sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg
echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install kubelet, kubeadm and kubectl, and hold them at the installed version
sudo apt-get update
sudo apt-get install -y kubelet kubeadm kubectl
sudo apt-mark hold kubelet kubeadm kubectl
```

Image mirrors

- https://www.cnblogs.com/ryanyangcs/p/14168400.html
- https://blog.csdn.net/xjjj064/article/details/107684300

Mirror mappings used in this article:

- k8s.gcr.io => registry.cn-hangzhou.aliyuncs.com/google_containers
- https://packages.cloud.google.com => https://mirrors.aliyun.com/kubernetes/
- https://apt.kubernetes.io/ => https://mirrors.aliyun.com/kubernetes/apt/
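The first mapping can be expressed as a small rewrite function, handy when scripting pulls of images that kubeadm or a manifest refers to by their `k8s.gcr.io` names. A sketch; `to_aliyun_image` is a hypothetical helper:

```bash
# Rewrite an image reference from k8s.gcr.io to the Aliyun google_containers
# mirror (k8s.gcr.io => registry.cn-hangzhou.aliyuncs.com/google_containers).
# References from any other registry are printed unchanged.
to_aliyun_image() {
  local mirror="registry.cn-hangzhou.aliyuncs.com/google_containers"
  case "$1" in
    k8s.gcr.io/*) printf '%s/%s\n' "$mirror" "${1#k8s.gcr.io/}" ;;
    *)            printf '%s\n' "$1" ;;
  esac
}
```

For example, `to_aliyun_image k8s.gcr.io/coredns/coredns:v1.8.0` yields `registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0`, a nested path the Aliyun mirror may not provide; that is why coredns is pulled from another repository and retagged in the next section.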

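Deploying the master with kubeadm

Most images can be pulled from Aliyun's container image mirror. For any that are missing, you can mirror them yourself with the free Aliyun Container Registry service together with an overseas server or a GitHub pipeline.

Aliyun Container Registry: https://cr.console.aliyun.com/

Pulling container images via GitHub: https://blog.csdn.net/u010953609/article/details/122042364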

```bash
# Pull images missing from Aliyun's google_containers (here coredns)
# Open a root shell; the following commands run as root
sudo -i

ctr ns ls
ctr -n=k8s.io i ls
# Pull the image from another repository into the k8s.io namespace...
ctr -n=k8s.io i pull registry.cn-hangzhou.aliyuncs.com/wswind/mirror-k8s:coredns-v1.8.0
# ...and tag it with the name kubeadm expects
ctr -n=k8s.io i tag registry.cn-hangzhou.aliyuncs.com/wswind/mirror-k8s:coredns-v1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
ctr -n=k8s.io i ls

# List and pull the images from Aliyun's google_containers
kubeadm config images list --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers
kubeadm config images pull --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers
```

```
# Set up hosts in /etc/hosts
192.168.197.13 cluster-endpoint
```

```bash
# https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#initializing-your-control-plane-node
sudo kubeadm init \
  --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \
  --control-plane-endpoint=cluster-endpoint \
  --pod-network-cidr=10.20.0.0/16 \
  --cri-socket=/run/containerd/containerd.sock \
  --apiserver-advertise-address=192.168.197.13 \
  -v=5
```
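The pod network passed to `--pod-network-cidr` must not overlap the hosts' own network; for example, 10.20.0.0/16 must not contain 192.168.197.13. A quick pure-bash check, as a sketch; `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only:

```bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if an IPv4 address falls inside an IPv4 CIDR block (e.g. 10.20.0.0/16).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  # Network mask with the top $bits bits set (kept within 32 bits).
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}
```

Before `kubeadm init`: `ip_in_cidr 192.168.197.13 10.20.0.0/16 && echo "overlap: choose another pod CIDR"`.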

Output on success:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities
and service account keys on each node and then running the following as root:

  kubeadm join cluster-endpoint:6443 --token oigoz1.cvzmeucy16vb84tm \
    --discovery-token-ca-cert-hash sha256:0dc77edd1e170bc67497b2d9062625aacbe3748d12dca6c7c15e9e7dc70901ae \
    --control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token oigoz1.cvzmeucy16vb84tm \
    --discovery-token-ca-cert-hash sha256:0dc77edd1e170bc67497b2d9062625aacbe3748d12dca6c7c15e9e7dc70901ae
```

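Joining worker nodes

Worker nodes are installed and configured the same way as the master; only the last step changes from `kubeadm init` to `kubeadm join`. Take the exact command from the success output above.

```bash
sudo kubeadm join <ip>:6443 --token 8bqn45.36ce7z9way24ef8g --discovery-token-unsafe-skip-ca-verification
```

`--discovery-token-unsafe-skip-ca-verification` skips verifying the control plane's CA, so a worker could join an impostor API server; prefer `--discovery-token-ca-cert-hash` as printed in the success output.

If the success output was not saved, create a new token and print the full join command on the master:

```bash
sudo kubeadm token create --print-join-command
```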
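When only the token is at hand, the value for `--discovery-token-ca-cert-hash` can be recomputed on the master from the cluster CA certificate, using the openssl pipeline given in the kubeadm join documentation. Wrapped here in a hypothetical helper `ca_cert_hash`, assuming the default RSA CA key:

```bash
# Print the SHA-256 hash of a CA certificate's public key (DER-encoded
# SubjectPublicKeyInfo), the value kubeadm join expects after "sha256:".
# Assumes an RSA key, which is what kubeadm generates by default.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

Run `ca_cert_hash` as root on the master, then on the worker: `sudo kubeadm join cluster-endpoint:6443 --token <token> --discovery-token-ca-cert-hash sha256:<hash>`.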

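Network plugin

Pod-to-pod communication in a k8s cluster depends on a network plugin; I use flannel. Review its manifest before applying it. Note that the `--pod-network-cidr` passed when initializing the master must match the `Network` field of `net-conf.json` in kube-flannel.yml.

```bash
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
vim kube-flannel.yml   # set net-conf.json: Network to the pod network CIDR
kubectl apply -f kube-flannel.yml
```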
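The CIDR consistency can be checked before `kubectl apply`. A sketch that reads the `Network` value out of `net-conf.json` with `sed`, assuming the manifest keeps the `"Network": "..."` formatting of flannel's stock file; `flannel_network` and `check_flannel_cidr` are hypothetical helpers:

```bash
# Print the "Network" value from the net-conf.json section of kube-flannel.yml.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "${1:-kube-flannel.yml}" | head -n 1
}

# Fail unless flannel's Network equals the pod CIDR given to kubeadm.
check_flannel_cidr() {
  local pod_cidr="$1" file="${2:-kube-flannel.yml}" net
  net=$(flannel_network "$file")
  if [ "$net" != "$pod_cidr" ]; then
    echo "kube-flannel.yml Network is '$net', kubeadm pod CIDR is '$pod_cidr'" >&2
    return 1
  fi
}
```

With this article's CIDR: `check_flannel_cidr 10.20.0.0/16 kube-flannel.yml || sed -i 's#"Network": "10.244.0.0/16"#"Network": "10.20.0.0/16"#' kube-flannel.yml`, assuming the file still carries flannel's default 10.244.0.0/16.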

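Troubleshooting

```bash
# Browse kubelet logs with explanatory messages (-x) for the kubelet unit (-u)
journalctl -xu kubelet | less
# Follow kubelet logs as they are written (-f)
journalctl -fu kubelet
```

Error `failed to pull and unpack image "k8s.gcr.io/pause:3.2"`: containerd is still using the default sandbox image, which is not reachable from this network. Pull the image from the mirror and point `sandbox_image` at it:

```bash
sudo ctr -n=k8s.io i pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
# In the editor, find sandbox_image and change
#   k8s.gcr.io/pause:3.2 => registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
sudo vi /etc/containerd/config.toml
```

If the setting does not take effect, reinstall containerd.io, then restart containerd:

```bash
sudo systemctl restart containerd
```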
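To confirm which pause image containerd is configured with, read the value back out of the config file. A sketch; `sandbox_image_of` is a hypothetical helper that ignores commented-out lines:

```bash
# Print the sandbox_image value set in a containerd config.toml
# (default /etc/containerd/config.toml). Lines starting with # are ignored.
sandbox_image_of() {
  sed -n 's/^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' \
    "${1:-/etc/containerd/config.toml}"
}
```

Compare its output with the pause image listed by `kubeadm config images list --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers`.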

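Setting the kubelet cgroup driver to systemd

- https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/#config-file
- https://pkg.go.dev/k8s.io/kubernetes@v1.21.0/cmd/kubeadm/app/apis/kubeadm/v1beta2

Check the current setting on an initialized node:

```bash
cat /var/lib/kubelet/config.yaml   # look for cgroupDriver
```

From kubeadm 1.22 on, `cgroupDriver` defaults to `systemd` when it is not set; with older kubeadm versions, set it explicitly through a config file.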
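The kubelet and containerd must use the same cgroup driver. A sketch that compares the two files on a node; `check_cgroup_drivers` is a hypothetical helper and assumes the flat `key: value` and `key = value` layouts these files normally have:

```bash
# Succeed when kubelet's config.yaml has "cgroupDriver: systemd" and
# containerd's config.toml has "SystemdCgroup = true"; print both values.
check_cgroup_drivers() {
  local kubelet_cfg="${1:-/var/lib/kubelet/config.yaml}"
  local containerd_cfg="${2:-/etc/containerd/config.toml}"
  local k c
  k=$(sed -n 's/^cgroupDriver:[[:space:]]*//p' "$kubelet_cfg")
  c=$(sed -n 's/^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*//p' "$containerd_cfg")
  echo "kubelet cgroupDriver=${k:-<unset>} containerd SystemdCgroup=${c:-<unset>}"
  [ "$k" = "systemd" ] && [ "$c" = "true" ]
}
```

`sudo bash -c "$(declare -f check_cgroup_drivers); check_cgroup_drivers"` prints both settings and exits non-zero on a mismatch.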

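Config-file mode:

```bash
# Show the defaults, including the kubelet component config
kubeadm config print init-defaults --component-configs KubeletConfiguration
# Save the defaults to a file for editing
kubeadm config print init-defaults > kubeadm.yaml
```

Change these fields in kubeadm.yaml:

```yaml
# InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.197.13   # must be this node's IP address, not cluster-endpoint
nodeRegistration:
  criSocket: /run/containerd/containerd.sock
# ClusterConfiguration
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.20.0.0/16   # equivalent of --pod-network-cidr; must match flannel's Network
```

Append at the end of the file:

```yaml
controlPlaneEndpoint: "cluster-endpoint:6443"   # belongs to ClusterConfiguration
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd
```

Then initialize from the file:

```bash
sudo kubeadm init --config=kubeadm.yaml -v=5
```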

Web UI

https://kubernetes.io/docs/tasks/access-application-cluster/web-ui-dashboard/

```bash
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml
# Start the API proxy in the background (listens on localhost:8001)
kubectl proxy &

# Reach the web UI through an SSH tunnel from your workstation
ssh -L 8001:localhost:8001 user@<server>
```

Then open:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

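Logging in with a token (https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md):

```bash
# ServiceAccount used to log in to the dashboard
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF

# Bind it to the cluster-admin ClusterRole
cat <<EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

# Print the login token stored in the ServiceAccount's token Secret
kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
```

On Kubernetes 1.24 and later, token Secrets are no longer created automatically for ServiceAccounts, so the last command finds nothing; use `kubectl -n kubernetes-dashboard create token admin-user` there instead.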
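When the cluster version is not known in advance, a helper can try `kubectl create token` (Kubernetes 1.24+) first and fall back to reading the ServiceAccount's token Secret. A sketch; `dashboard_token` is a hypothetical name, and the namespace and ServiceAccount default to `kubernetes-dashboard` and `admin-user`:

```bash
# Print a dashboard login token for a ServiceAccount.
# First asks the API server for a token ("kubectl create token", 1.24+);
# if that fails, reads the token Secret that older clusters create automatically.
dashboard_token() {
  local ns="${1:-kubernetes-dashboard}" sa="${2:-admin-user}" secret
  if kubectl -n "$ns" create token "$sa" 2>/dev/null; then
    return 0
  fi
  secret=$(kubectl -n "$ns" get "sa/$sa" -o jsonpath='{.secrets[0].name}') || return 1
  [ -n "$secret" ] || { echo "no token Secret for $ns/$sa" >&2; return 1; }
  kubectl -n "$ns" get secret "$secret" -o go-template='{{.data.token | base64decode}}'
}
```

`dashboard_token` prints a token to paste into the dashboard login page.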

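Using ctr and crictl

- k8s: crictl, the CRI command-line client
- containerd: ctr, containerd's own client; images used by k8s live in its `k8s.io` namespace

```bash
# List the plugins containerd has loaded
sudo ctr plugin ls
# Pull and remove an image in the k8s.io namespace
sudo ctr -n=k8s.io i pull docker.io/library/nginx:alpine
sudo ctr -n=k8s.io i rm docker.io/library/nginx:alpine
# Pull from a plain-HTTP private registry (into the default namespace)
sudo ctr i pull --plain-http 192.168.196.110:5000/tempest/basedata:latest
# Run a throwaway container: ctr run takes the image, a container ID, then the command
# (busybox ships sh, not bash)
sudo ctr i pull docker.io/library/busybox:latest
sudo ctr run -t --rm docker.io/library/busybox:latest busybox-test sh
```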

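Exporting and importing images

- https://github.com/containerd/cri/blob/master/docs/crictl.md#directly-load-a-container-image
- https://blog.scottlowe.org/2020/01/25/manually-loading-container-images-with-containerd/

```bash
# Export from the k8s.io namespace, where the coredns image was tagged earlier
ctr -n=k8s.io i export coredns.tar registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
# Import into the k8s.io namespace (for example on another node)
ctr -n=k8s.io i import coredns.tar
```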
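An image pulled without `-n` lands in ctr's `default` namespace, which the kubelet does not see. A sketch that moves it into `k8s.io` through a temporary archive, using the same `export`/`import` subcommands; `copy_image_to_k8s_ns` is a hypothetical helper. For multi-platform images, `export` may need platform options depending on the containerd version (see `ctr i export --help`):

```bash
# Copy an image from ctr's "default" namespace into "k8s.io", the namespace
# used by containerd's CRI plugin, through a temporary tar archive.
copy_image_to_k8s_ns() {
  local image="$1" archive rc
  archive=$(mktemp) || return 1
  ctr -n default i export "$archive" "$image" &&
    ctr -n k8s.io i import "$archive"
  rc=$?
  rm -f "$archive"   # clean up whether or not the copy succeeded
  return "$rc"
}
```

For example: `sudo bash -c "$(declare -f copy_image_to_k8s_ns); copy_image_to_k8s_ns 192.168.196.110:5000/tempest/basedata:latest"`.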

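Point crictl at the containerd socket:

```bash
# Either per command...
sudo crictl -r unix:///run/containerd/containerd.sock images
# ...or once, saved to /etc/crictl.yaml
sudo crictl config runtime-endpoint unix:///run/containerd/containerd.sock
sudo crictl images
```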

This article is licensed under the Creative Commons Attribution 4.0 International License.

浙公网安备 33010602011771号
