Python Web Scraper Practice: Scraping Wallpapers

main.py

    import os
    import time

    import requests
    from bs4 import BeautifulSoup

    import lib.tools as t

    BASE_URL = "https://desk.zol.com.cn"
    SAVE_DIR = "bz_save"


    def main():
        # Create the output folder if it is missing (root_flag=True reuses an existing one)
        t.mkdir(SAVE_DIR, root_flag=True)
        # Walk listing pages 1 to 10
        for i in range(1, 11):
            bz_url = f"{BASE_URL}/pc/{i}.html"
            for page_url in get_bz_list(bz_url):
                get_bz_src(page_url)
                time.sleep(1)  # pause between requests


    def get_bz_list(bz_url):
        """Return the album page URLs found on one listing page."""
        r = requests.get(bz_url)
        r.encoding = "gb2312"
        soup = BeautifulSoup(r.text, "lxml")
        a_list = soup.select(".pic-list2")[0].select(".pic")
        page_urls = []
        for a in a_list:
            href = a.attrs["href"]
            # Keep only links that point to .html album pages
            if os.path.splitext(href)[-1] == ".html":
                page_urls.append(BASE_URL + href)
        return page_urls


    def get_bz_src(page_url):
        """Download the image shown on one album page."""
        r = requests.get(page_url)
        r.encoding = "gb2312"
        html = r.text
        soup = BeautifulSoup(html, "lxml")
        pic_div = soup.select("div[class='wrapper photo-tit clearfix']")
        title_name = pic_div[0].select("a[id='titleName']")[0].get_text()
        # Number of images in the album; not used yet, only the first image is saved
        max_num = t.getmidstring(html, '<span>(<span class="current-num">1</span>/', ")</span>")
        src = soup.select("img[id='bigImg']")[0].attrs["src"]
        pic_name = title_name + os.path.splitext(src)[-1]
        t.down_pic(src, f"{SAVE_DIR}/{pic_name}")
        print(f"{pic_name} ------ download complete")


    if __name__ == "__main__":
        main()
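Since `pic_name` is built directly from the page title, a title containing characters such as `/` or `?` could make the save path invalid. Below is a minimal sketch of one way to clean the name first. `safe_filename` and its `fallback` parameter are hypothetical helpers, not part of the original script, and the character set is an assumption covering the characters commonly rejected on Windows and Unix.

```python
import re


def safe_filename(title, ext, fallback="untitled"):
    """Hypothetical helper: turn a page title into a usable file name."""
    # Collapse runs of characters that file systems commonly reject into "_"
    cleaned = re.sub(r'[\\/:*?"<>|\r\n\t]+', "_", title)
    # Drop leading/trailing spaces, dots and underscores
    cleaned = cleaned.strip(" ._")
    # Fall back to a fixed name if nothing usable is left
    return (cleaned or fallback) + ext


print(safe_filename("a/b:c?", ".jpg"))
```

In `get_bz_src`, this could replace the plain concatenation, for example `pic_name = safe_filename(title_name, os.path.splitext(src)[-1])`.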

tools.py

    import glob
    import os

    import requests


    # Return the text found between start_str and end, or None if either is missing
    def getmidstring(html, start_str, end):
        start = html.find(start_str)
        if start >= 0:
            start += len(start_str)
            end = html.find(end, start)
            if end >= 0:
                return html[start:end].strip()
        return None


    # Download an image and write it to path
    def down_pic(img_url, path):
        response = requests.get(img_url)
        with open(path, 'wb') as f:
            f.write(response.content)


    # Create a folder.
    # If the name is already taken, create name_1 (then _2, _3, ...) instead.
    # Returns the path of the folder to use.
    def mkdir(path, root_flag=False):
        folder_path = path
        if not os.path.exists(path):
            os.makedirs(path)
        elif not root_flag:
            num_p = 1
            sub_path = glob.glob(path + '*')
            if sub_path:
                # Last matching directory (glob does not guarantee creation order)
                last_path = sub_path[-1]
                folder_path = last_path + '_{}'.format(num_p)
                if last_path.find('_') > 0:
                    num_str = last_path.split('_')
                    if num_str[-1].isdigit():
                        # Bump the existing numeric suffix
                        num_p = int(num_str[-1]) + 1
                        folder_path = last_path[0:last_path.rfind('_')] + '_{}'.format(num_p)
                os.makedirs(folder_path)
        return folder_path
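To make the role of `getmidstring` concrete, here is a small offline sketch of how the image count is pulled out of the page. The `sample` HTML is made up for illustration and only mimics the `(1/N)` counter that `main.py` searches for; the real page markup may differ. The function is repeated here (with the third parameter renamed to `end_str`) so the snippet runs on its own.

```python
def getmidstring(html, start_str, end_str):
    # Same logic as in tools.py: text between the two markers, or None
    start = html.find(start_str)
    if start < 0:
        return None
    start += len(start_str)
    end = html.find(end_str, start)
    if end < 0:
        return None
    return html[start:end].strip()


# Made-up snippet imitating the "(1/N)" counter on an album page
sample = '<h3><a id="titleName">demo</a><span>(<span class="current-num">1</span>/12)</span></h3>'

max_num = getmidstring(sample, '<span>(<span class="current-num">1</span>/', ")</span>")
# The helper returns a string (or None), so convert defensively
total = int(max_num) if max_num and max_num.isdigit() else 1
print(total)
```

Because the result is a string or `None`, converting it with a default as shown avoids a crash if the counter is absent from a page.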

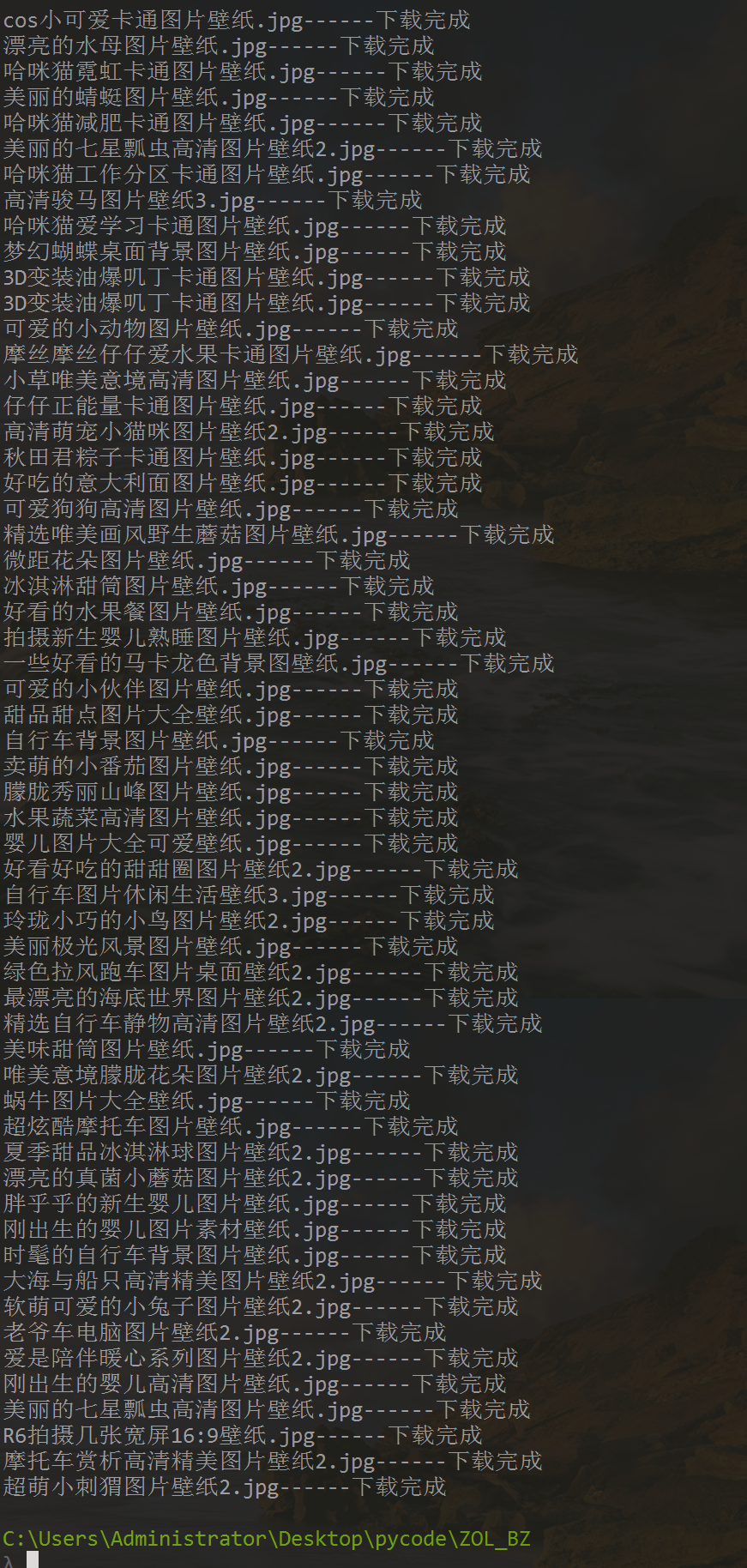

结果:
