PyTorch nn.Conv2d: verifying the convolution process (single-channel and multi-channel)

Source: https://zhuanlan.zhihu.com/p/32190799

While reading the documentation today, I realized I did not really understand PyTorch's conv operation, so I wrote down the following notes.

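First, two questions:

1. How do the filters operate when the input image has a single channel? That is, how does convolution with single-channel kernels work?

2. How do the filters operate when the input image has multiple channels? That is, how does convolution with multiple multi-channel kernels work?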

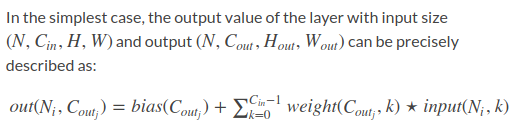

First, the official documentation:

class torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1, bias=True)

Parameters:

- in_channels (int) – Number of channels in the input image

- out_channels (int) – Number of channels produced by the convolution

- kernel_size (int or tuple) – Size of the convolving kernel

- stride (int or tuple, optional) – Stride of the convolution. Default: 1

- padding (int or tuple, optional) – Zero-padding added to both sides of the input. Default: 0

- dilation (int or tuple, optional) – Spacing between kernel elements. Default: 1

- groups (int, optional) – Number of blocked connections from input channels to output channels. Default: 1

- bias (bool, optional) – If True, adds a learnable bias to the output. Default: True
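The `kernel_size`, `stride`, `padding` and `dilation` parameters above also fix the spatial size of the output. A minimal sketch of the output-size formula from the Conv2d documentation, applied to one spatial dimension (H or W); `conv2d_out_size` is a hypothetical helper name, not a PyTorch function:

```python
def conv2d_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension (H or W) of Conv2d.

    Mirrors the documented formula
    floor((size + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1).
    """
    # Extra span covered by a (possibly dilated) kernel beyond its first element
    span = dilation * (kernel_size - 1)
    # Integer floor division implements the floor(... / stride) part
    return (size + 2 * padding - span - 1) // stride + 1


# A 5x5 input with kernel_size=3 and default settings gives a 3x3 output
print(conv2d_out_size(5, 3))             # 3
print(conv2d_out_size(5, 3, padding=1))  # 5
print(conv2d_out_size(5, 3, stride=2))   # 2
```

This is why the 5×5 all-ones inputs used in this post produce 3×3 feature maps.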

I could not make much sense of the formula given in this documentation.
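For reference, the formula in the Conv2d documentation (with `groups=1`, for batch index N_i and output channel C_out_j) is reproduced below; ⋆ denotes 2D cross-correlation, so the kernel is not flipped. The second equation writes the cross-correlation out element by element, assuming stride 1, no padding and dilation 1, with K_H × K_W the kernel size:

```latex
\text{out}(N_i, C_{\text{out}_j}) = \text{bias}(C_{\text{out}_j})
  + \sum_{k=0}^{C_{\text{in}}-1} \text{weight}(C_{\text{out}_j}, k) \star \text{input}(N_i, k)

\text{out}(N_i, C_{\text{out}_j})[h, w] = \text{bias}(C_{\text{out}_j})
  + \sum_{k=0}^{C_{\text{in}}-1} \sum_{m=0}^{K_H-1} \sum_{n=0}^{K_W-1}
    \text{weight}(C_{\text{out}_j}, k)[m, n] \cdot \text{input}(N_i, k)[h+m,\, w+n]
```

When every input value is 1, the triple sum collapses to the sum of all weights of filter j, which is the property the checks in this post rely on.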

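Single-channel convolution:

For example, 32 kernels can learn 32 different features. With multiple kernels, as shown in the figure, the output consists of 32 feature maps.

That is, for conv2d(in_channels = 1, out_channels = N):

N filters are applied to the input, producing N results (feature maps), one per filter.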
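A minimal pure-Python sketch of this single-input-channel case, assuming stride 1, no padding and `groups=1`, written without PyTorch so each step is visible. The function names `corr2d` and `conv2d_one_channel` and the kernel values are hypothetical:

```python
def corr2d(image, kernel):
    """Valid 2D cross-correlation (what Conv2d computes; no kernel flip)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[m][n] * image[i + m][j + n] for m in range(kh) for n in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def conv2d_one_channel(image, kernels, biases):
    """in_channels=1, out_channels=len(kernels): one feature map per filter."""
    return [
        [[v + b for v in row] for row in corr2d(image, k)]
        for k, b in zip(kernels, biases)
    ]


# 5x5 all-ones input and N=3 hypothetical 3x3 kernels
image = [[1.0] * 5 for _ in range(5)]
kernels = [
    [[1.0] * 3 for _ in range(3)],  # weights sum to 9
    [[0.0, 1.0, 0.0]] * 3,          # weights sum to 3
    [[-1.0, 0.0, 1.0]] * 3,         # weights sum to 0
]
biases = [0.5, 0.0, -1.0]

maps = conv2d_one_channel(image, kernels, biases)
print(len(maps), len(maps[0]), len(maps[0][0]))  # 3 3 3
print([m[0][0] for m in maps])                    # [9.5, 3.0, -1.0]
```

Three filters give three 3×3 feature maps, and with an all-ones input each map is constant: kernel sum plus bias.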

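```python
import torch

# All-ones tensor (not an identity matrix) to simulate the input: batch=1, channels=1, 5x5.
# The printouts below come from PyTorch < 0.4, where inputs were wrapped in
# torch.autograd.Variable; current tensors need no wrapper.
input = torch.ones(1, 1, 5, 5)

x = torch.nn.Conv2d(in_channels=1, out_channels=3, kernel_size=3, groups=1)
out = x(input)

print(out)
print(list(x.parameters()))
```

The output `out` and the parameters of the conv2d layer are shown below. The layer has 3 filters (a weight of shape 3×1×3×3) plus a bias with one value per filter.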

```text
# out
Variable containing:
(0 ,0 ,.,.) =
-0.3065 -0.3065 -0.3065
-0.3065 -0.3065 -0.3065
-0.3065 -0.3065 -0.3065

(0 ,1 ,.,.) =
-0.3046 -0.3046 -0.3046
-0.3046 -0.3046 -0.3046
-0.3046 -0.3046 -0.3046

(0 ,2 ,.,.) =
0.0710 0.0710 0.0710
0.0710 0.0710 0.0710
0.0710 0.0710 0.0710
[torch.FloatTensor of size 1x3x3x3]

# conv2d parameters
[Parameter containing:
(0 ,0 ,.,.) =
-0.0789 -0.1932 -0.0990
0.1571 -0.1784 -0.2334
0.0311 -0.2595 0.2222

(1 ,0 ,.,.) =
-0.0703 -0.3159 -0.3295
0.0723 0.3019 0.2649
-0.2217 0.0680 -0.0699

(2 ,0 ,.,.) =
-0.0736 -0.1608 0.1905
0.2738 0.2758 -0.2776
-0.0246 -0.1781 -0.0279
[torch.FloatTensor of size 3x1x3x3]
, Parameter containing:
0.3255
-0.0044
0.0733
[torch.FloatTensor of size 3]
]
```

To verify this: the input is all ones, so the convolution at every position reduces to the sum of the kernel weights plus the bias, and calling sum() on the parameters is enough to reproduce it:

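```python
# Weight tensor of shape (3, 1, 3, 3) as a NumPy array
f_p = list(x.parameters())[0]
f_p = f_p.data.numpy()
# Bias vector of shape (3,); its first entry was 0.3255 in the run shown above
bias = list(x.parameters())[1].data.numpy()

# All-ones input: output = sum of the first filter's weights + its bias
print("the result of first channel in image:", f_p[0].sum() + bias[0])
```

```text
the result of first channel in image: -0.306573044777
```

The result matches `(0 ,0 ,.,.) = -0.3065 ...` above, which confirms that each output value is a convolution: the kernel is multiplied element-wise with the input window, the products are summed, and the bias is added.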
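The same check can be run for all three filters at once. This sketch copies the weights and biases from the parameter listing above (rounded to four decimals, so agreement is only expected to about 1e-3) and adds each kernel's sum to its bias. With an all-ones input every window sees the full kernel, which is also why all nine values of each feature map are identical:

```python
# Weights (3x1x3x3, channel dim dropped) and bias (3) copied from the printed parameters
weights = [
    [[-0.0789, -0.1932, -0.0990], [0.1571, -0.1784, -0.2334], [0.0311, -0.2595, 0.2222]],
    [[-0.0703, -0.3159, -0.3295], [0.0723, 0.3019, 0.2649], [-0.2217, 0.0680, -0.0699]],
    [[-0.0736, -0.1608, 0.1905], [0.2738, 0.2758, -0.2776], [-0.0246, -0.1781, -0.0279]],
]
bias = [0.3255, -0.0044, 0.0733]
printed_out = [-0.3065, -0.3046, 0.0710]  # one value per output channel

for j, (kernel, b) in enumerate(zip(weights, bias)):
    # all-ones input: sum of (weight * 1) over the 3x3 window, plus the bias
    predicted = sum(sum(row) for row in kernel) + b
    print(f"channel {j}: predicted {predicted:.4f}, printed {printed_out[j]:.4f}")
```

The first two channels match to four decimals; the third gives 0.0708 against the printed 0.0710, a gap explained by the rounding of the printed weights.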

Multi-channel convolution:

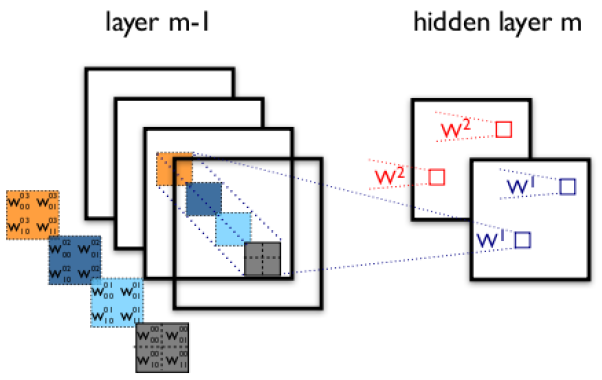

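The figure above shows a convolution over four input channels with two kernels, producing two output channels. Note that each of the four input channels has its own kernel slice. Ignoring w2 and looking only at w1, the value at position (i, j) of w1's output is obtained by adding up the convolution results at (i, j) from all four channels. The result is two feature maps: the number of kernels in the layer equals the number of output feature maps.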
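A minimal pure-Python sketch of this rule, assuming stride 1, no padding and `groups=1`: for each output channel j, cross-correlate every input channel k with its own kernel slice `weight[j][k]`, add the results position by position, then add `bias[j]`. The helper names and all input/weight values are hypothetical:

```python
def corr2d(image, kernel):
    """Valid 2D cross-correlation of one channel with one kernel slice."""
    kh, kw = len(kernel), len(kernel[0])
    return [
        [
            sum(kernel[m][n] * image[i + m][j + n] for m in range(kh) for n in range(kw))
            for j in range(len(image[0]) - kw + 1)
        ]
        for i in range(len(image) - kh + 1)
    ]


def conv2d_multi_channel(channels, weight, bias):
    """channels: X input maps; weight: N filters, each a list of X kernel slices."""
    outputs = []
    for filt, b in zip(weight, bias):
        # one cross-correlation per input channel, each with its own slice
        partial = [corr2d(ch, k) for ch, k in zip(channels, filt)]
        h, w = len(partial[0]), len(partial[0][0])
        # element-wise sum over the X channels, then add this filter's bias
        outputs.append(
            [[sum(p[i][j] for p in partial) + b for j in range(w)] for i in range(h)]
        )
    return outputs


# X=2 input channels of size 4x4, N=1 filter made of two 3x3 slices
channels = [
    [[float(4 * i + j) for j in range(4)] for i in range(4)],  # 0..15
    [[10.0] * 4 for _ in range(4)],
]
weight = [[
    [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],  # picks top-left of channel 0
    [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]],  # picks centre of channel 1
]]
bias = [0.25]
print(conv2d_multi_channel(channels, weight, bias))  # [[[10.25, 11.25], [14.25, 15.25]]]
```

Each output value combines one contribution per input channel (here channel 0's top-left value plus 10 from channel 1) and a single bias per filter.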

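In PyTorch this corresponds to

conv2d(in_channels = X (X > 1), out_channels = N)

There are N×X filters (N groups of X filters each) applied to the input. Within each group, the X filters convolve the X input channels one-to-one and the X results are summed (plus that group's bias) into one output; the N groups give N outputs, i.e. N feature maps.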
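The filter bookkeeping above can be expressed as tensor shapes: with `groups=1`, Conv2d stores its N×X filters in a single weight of shape (N, X, kH, kW) plus a bias of length N; in general the second dimension is X / groups. A small sketch that derives these shapes and the parameter count for a square kernel; `conv2d_param_shapes` and `num_params` are hypothetical helpers:

```python
def conv2d_param_shapes(in_channels, out_channels, kernel_size, groups=1, bias=True):
    """Shapes of Conv2d's weight and bias for a square kernel."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("in_channels and out_channels must be divisible by groups")
    weight = (out_channels, in_channels // groups, kernel_size, kernel_size)
    bias_shape = (out_channels,) if bias else None
    return weight, bias_shape


def num_params(weight, bias_shape):
    """Total number of learnable values: product of weight dims + bias length."""
    count = 1
    for d in weight:
        count *= d
    return count + (bias_shape[0] if bias_shape else 0)


for cin, cout in [(1, 3), (3, 4)]:
    w, b = conv2d_param_shapes(cin, cout, 3)
    print(cin, cout, w, b, num_params(w, b))
# 1 3 (3, 1, 3, 3) (3,) 30
# 3 4 (4, 3, 3, 3) (4,) 112
```

The first row matches the 3x1x3x3 weight and size-3 bias printed for the single-channel example; the second gives the 4×3 = 12 kernel slices of the multi-channel example.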

Verification:

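```python
import torch

# All-ones tensor (not an identity matrix) to simulate the input: batch=1, channels=3, 5x5
input = torch.ones(1, 3, 5, 5)

x = torch.nn.Conv2d(in_channels=3, out_channels=4, kernel_size=3, groups=1)
out = x(input)

print(out)
print(list(x.parameters()))
```

As you can see, there are 4×3 = 12 filters in total (a weight of shape 4×3×3×3) and a bias of length 4 acting on this (3, 5, 5) all-ones input.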
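With a 3-channel all-ones input, each value of output channel j is the sum of all 3×3×3 weights of filter j plus its bias, i.e. the per-channel kernel sums added together. The sketch below applies this to the three kernel slices of filter 0, copied from the parameter listing that follows (four decimals). It computes only the weight part and then the bias value that would reproduce the printed `(0 ,0 ,.,.)` value of -0.6390; that derived number should be compared against the bias entry in the parameter listing rather than taken as given:

```python
# Kernel slices weight[0][k] for input channels k = 0, 1, 2 (copied from the listing)
filter0 = [
    [[-0.0803, 0.1473, -0.0762], [0.0284, -0.0050, -0.0246], [0.1438, 0.0955, -0.0500]],
    [[0.0716, 0.0062, -0.1472], [0.1793, 0.0543, -0.1764], [-0.1548, 0.1379, 0.1143]],
    [[-0.1741, -0.1790, -0.0053], [-0.0612, -0.1856, -0.0858], [-0.0553, 0.1621, -0.1822]],
]

# With an all-ones input, each input channel contributes the sum of its slice ...
per_channel = [sum(sum(row) for row in k) for k in filter0]
# ... and the channel contributions are added together (bias not included yet)
weight_part = sum(per_channel)
print([round(s, 4) for s in per_channel], round(weight_part, 4))

# Bias that would make weight_part + bias equal the printed out(0, 0) = -0.6390
implied_bias = -0.6390 - weight_part
print(round(implied_bias, 4))
```

The per-channel sums come to about 0.1789, 0.0852 and -0.7664, for a weight part of about -0.5023, so the printed -0.6390 requires bias[0] of roughly -0.1367.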

```text
# out
Variable containing:
(0 ,0 ,.,.) =
-0.6390 -0.6390 -0.6390
-0.6390 -0.6390 -0.6390
-0.6390 -0.6390 -0.6390

(0 ,1 ,.,.) =
-0.1467 -0.1467 -0.1467
-0.1467 -0.1467 -0.1467
-0.1467 -0.1467 -0.1467

(0 ,2 ,.,.) =
0.4138 0.4138 0.4138
0.4138 0.4138 0.4138
0.4138 0.4138 0.4138

(0 ,3 ,.,.) =
-0.3981 -0.3981 -0.3981
-0.3981 -0.3981 -0.3981
-0.3981 -0.3981 -0.3981
[torch.FloatTensor of size 1x4x3x3]
```

## Parameters of x

[Parameter containing:

(0 ,0 ,.,.) =

-0.0803 0.1473 -0.0762

0.0284 -0.0050 -0.0246

0.1438 0.0955 -0.0500

(0 ,1 ,.,.) =

0.0716 0.0062 -0.1472

0.1793 0.0543 -0.1764

-0.1548 0.1379 0.1143

(0 ,2 ,.,.) =

-0.1741 -0.1790 -0.0053

-0.0612 -0.1856 -0.0858

-0.0553 0.1621 -0.1822

(1 ,0 ,.,.) =

-0.0773 -0.1385 0.1356

0.1794 -0.0534 -0.1110

-0.0137 -0.1744 -0.0188

(1 ,1 ,.,.) =

-0.0396 0.0149 0.1537

0.0846 -0.1123 -0.0556

-0.1047 -