GhostNet: More Features from Cheap Operations

GhostNet: More Features from Cheap Operations

一. 论文简介

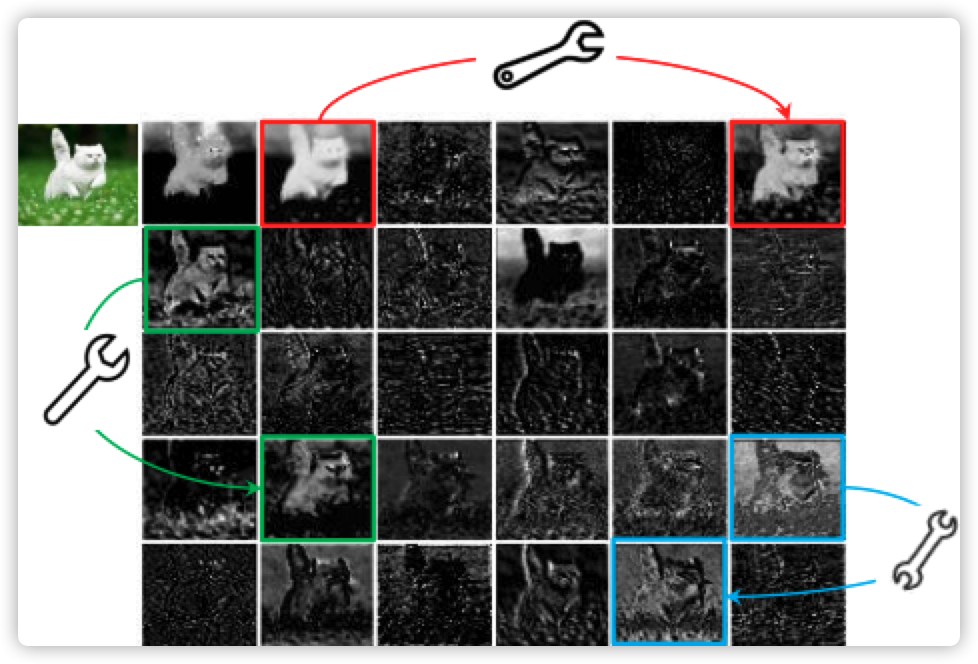

论文可视化特征图如下所示,目的是找到更多的特征对(Ghost)

如何找到?

论文意思是保留内在特征(instrinsic feature),可以获得更多的特征对

如何判定?

未解释

二. 模块详解

2.1 整体结构介绍

- Ghost module

先进行正常卷积(代码用的

1*1降低计算量),然后分组卷积,最后将输入特征和输出特征concat即可,关于卷积的一些操作如下链接所示:Point-wise / Depth-wise / group-convolution / Global Depthwise Convolution / Global Average Pooling

class GhostModule(nn.Module):

def __init__(self, inp, oup, kernel_size=1, ratio=2, dw_size=3, stride=1, relu=True):

super(GhostModule, self).__init__()

self.oup = oup

init_channels = math.ceil(oup / ratio)

new_channels = init_channels*(ratio-1)

# Custom Convolution

self.primary_conv = nn.Sequential(

nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size//2, bias=False),

nn.BatchNorm2d(init_channels),

nn.ReLU(inplace=True) if relu else nn.Sequential(),

)

# Depth-wise Convolution

self.cheap_operation = nn.Sequential(

nn.Conv2d(init_channels, new_channels, dw_size, 1, dw_size//2, groups=init_channels, bias=False),

nn.BatchNorm2d(new_channels),

nn.ReLU(inplace=True) if relu else nn.Sequential(),

)

def forward(self, x):

x1 = self.primary_conv(x)

x2 = self.cheap_operation(x1)

out = torch.cat([x1,x2], dim=1)

return out[:,:self.oup,:,:]

- GhostBottleneck

就是将Ghost module加入到resnet之内即可

class GhostBottleneck(nn.Module):

""" Ghost bottleneck w/ optional SE"""

def __init__(self, in_chs, mid_chs, out_chs, dw_kernel_size=3,

stride=1, act_layer=nn.ReLU, se_ratio=0.):

super(GhostBottleneck, self).__init__()

has_se = se_ratio is not None and se_ratio > 0.

self.stride = stride

# Point-wise expansion

self.ghost1 = GhostModule(in_chs, mid_chs, relu=True)

# Depth-wise convolution

if self.stride > 1:

self.conv_dw = nn.Conv2d(mid_chs, mid_chs, dw_kernel_size, stride=stride,

padding=(dw_kernel_size-1)//2,

groups=mid_chs, bias=False)

self.bn_dw = nn.BatchNorm2d(mid_chs)

# Squeeze-and-excitation

if has_se:

self.se = SqueezeExcite(mid_chs, se_ratio=se_ratio)

else:

self.se = None

# Point-wise linear projection

self.ghost2 = GhostModule(mid_chs, out_chs, relu=False)

# shortcut

if (in_chs == out_chs and self.stride == 1):

self.shortcut = nn.Sequential()

else:

self.shortcut = nn.Sequential(

nn.Conv2d(in_chs, in_chs, dw_kernel_size, stride=stride,

padding=(dw_kernel_size-1)//2, groups=in_chs, bias=False),

nn.BatchNorm2d(in_chs),

nn.Conv2d(in_chs, out_chs, 1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(out_chs),

)

def forward(self, x):

residual = x

# 1st ghost bottleneck

x = self.ghost1(x)

# Depth-wise convolution

if self.stride > 1:

x = self.conv_dw(x)

x = self.bn_dw(x)

# Squeeze-and-excitation

if self.se is not None:

x = self.se(x)

# 2nd ghost bottleneck

x = self.ghost2(x)

x += self.shortcut(residual)

return x

- SE模块

Attention mode,通道使用不同权重

https://arxiv.org/abs/1709.01507

# SE module that attetion mode

class SqueezeExcite(nn.Module):

def __init__(self, in_chs, se_ratio=0.25, reduced_base_chs=None,

act_layer=nn.ReLU, gate_fn=hard_sigmoid, divisor=4, **_):

super(SqueezeExcite, self).__init__()

self.gate_fn = gate_fn

reduced_chs = _make_divisible((reduced_base_chs or in_chs) * se_ratio, divisor)

self.avg_pool = nn.AdaptiveAvgPool2d(1)

self.conv_reduce = nn.Conv2d(in_chs, reduced_chs, 1, bias=True)

self.act1 = act_layer(inplace=True)

self.conv_expand = nn.Conv2d(reduced_chs, in_chs, 1, bias=True)

def forward(self, x):

x_se = self.avg_pool(x)

x_se = self.conv_reduce(x_se)

x_se = self.act1(x_se)

x_se = self.conv_expand(x_se)

x = x * self.gate_fn(x_se)

return x

作者:影醉阏轩窗

-------------------------------------------

个性签名:衣带渐宽终不悔,为伊消得人憔悴!

如果觉得这篇文章对你有小小的帮助的话,记得关注再下的公众号,同时在右下角点个“推荐”哦,博主在此感谢!

浙公网安备 33010602011771号

浙公网安备 33010602011771号