RocketMQ-4.NameServer

NameServer

RouteInfoManager: the maintainer of all cluster state

Basic structure of RouteInfoManager:

public class RouteInfoManager {
    private static final InternalLogger log = InternalLoggerFactory.getLogger(LoggerName.NAMESRV_LOGGER_NAME);
    private final static long BROKER_CHANNEL_EXPIRED_TIME = 1000 * 60 * 2; // 2 minutes
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private final HashMap<String/* topic */, List<QueueData>> topicQueueTable; // topic -> list of its queues
    private final HashMap<String/* brokerName */, BrokerData> brokerAddrTable; // brokerName -> broker information
    private final HashMap<String/* clusterName */, Set<String/* brokerName */>> clusterAddrTable; // cluster name -> broker names
    private final HashMap<String/* brokerAddr */, BrokerLiveInfo> brokerLiveTable; // broker address -> broker liveness info
    private final HashMap<String/* brokerAddr */, List<String>/* Filter Server */> filterServerTable; // broker address -> filter servers

    public RouteInfoManager() {
        this.topicQueueTable = new HashMap<String, List<QueueData>>(1024);
        this.brokerAddrTable = new HashMap<String, BrokerData>(128);
        this.clusterAddrTable = new HashMap<String, Set<String>>(32);
        this.brokerLiveTable = new HashMap<String, BrokerLiveInfo>(256);
        this.filterServerTable = new HashMap<String, List<String>>(256);
    }
}
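All of these tables are plain HashMaps guarded by the single ReadWriteLock. The following is a minimal sketch of that pattern, not RocketMQ's code: the hypothetical MiniRouteTable keeps two simplified maps (cluster name to broker names, and topic to broker names instead of the real topic to QueueData list), mutates them under the write lock and answers lookups under the read lock. The class, method names and value types are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical, simplified stand-in for RouteInfoManager: HashMaps guarded by one ReadWriteLock.
class MiniRouteTable {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    // clusterName -> brokerNames (mirrors clusterAddrTable)
    private final Map<String, Set<String>> clusterAddrTable = new HashMap<>();
    // topic -> brokerNames hosting it (a simplification of topicQueueTable)
    private final Map<String, Set<String>> topicBrokerTable = new HashMap<>();

    // Registration touches several maps at once, so it takes the exclusive write lock.
    void registerBroker(String clusterName, String brokerName, List<String> topics) {
        lock.writeLock().lock();
        try {
            clusterAddrTable.computeIfAbsent(clusterName, k -> new HashSet<>()).add(brokerName);
            for (String topic : topics) {
                topicBrokerTable.computeIfAbsent(topic, k -> new HashSet<>()).add(brokerName);
            }
        } finally {
            lock.writeLock().unlock();
        }
    }

    // Route lookups only read, so many of them can proceed concurrently under the read lock.
    List<String> brokersForTopic(String topic) {
        lock.readLock().lock();
        try {
            Set<String> names = topicBrokerTable.get(topic);
            // Return a copy so callers never observe the map changing after the lock is released.
            return names == null ? new ArrayList<>() : new ArrayList<>(names);
        } finally {
            lock.readLock().unlock();
        }
    }

    Set<String> brokersInCluster(String clusterName) {
        lock.readLock().lock();
        try {
            return new HashSet<>(clusterAddrTable.getOrDefault(clusterName, new HashSet<>()));
        } finally {
            lock.readLock().unlock();
        }
    }
}
```

A read-write lock suits this workload because route queries from clients vastly outnumber broker registrations, and reads do not block each other.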

State maintenance logic

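Other roles proactively push state updates to the NameServer. The main handling logic is in the DefaultRequestProcessor class, which dispatches on the request code and handles each code accordingly: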

@Override
public RemotingCommand processRequest(ChannelHandlerContext ctx,
    RemotingCommand request) throws RemotingCommandException {
    if (ctx != null) {
        log.debug("receive request, {} {} {}",
            request.getCode(),
            RemotingHelper.parseChannelRemoteAddr(ctx.channel()),
            request);
    }

    switch (request.getCode()) {
        case RequestCode.PUT_KV_CONFIG:
            return this.putKVConfig(ctx, request);
        case RequestCode.GET_KV_CONFIG:
            return this.getKVConfig(ctx, request);
        case RequestCode.DELETE_KV_CONFIG:
            return this.deleteKVConfig(ctx, request);
        case RequestCode.QUERY_DATA_VERSION:
            return queryBrokerTopicConfig(ctx, request);
        case RequestCode.REGISTER_BROKER:
            Version brokerVersion = MQVersion.value2Version(request.getVersion());
            if (brokerVersion.ordinal() >= MQVersion.Version.V3_0_11.ordinal()) {
                return this.registerBrokerWithFilterServer(ctx, request);
            } else {
                return this.registerBroker(ctx, request);
            }
        case RequestCode.UNREGISTER_BROKER:
            return this.unregisterBroker(ctx, request);
        case RequestCode.GET_ROUTEINFO_BY_TOPIC:
            return this.getRouteInfoByTopic(ctx, request);
        case RequestCode.GET_BROKER_CLUSTER_INFO:
            return this.getBrokerClusterInfo(ctx, request);
        case RequestCode.WIPE_WRITE_PERM_OF_BROKER:
            return this.wipeWritePermOfBroker(ctx, request);
        case RequestCode.GET_ALL_TOPIC_LIST_FROM_NAMESERVER:
            return getAllTopicListFromNameserver(ctx, request);
        case RequestCode.DELETE_TOPIC_IN_NAMESRV:
            return deleteTopicInNamesrv(ctx, request);
        case RequestCode.GET_KVLIST_BY_NAMESPACE:
            return this.getKVListByNamespace(ctx, request);
        case RequestCode.GET_TOPICS_BY_CLUSTER:
            return this.getTopicsByCluster(ctx, request);
        case RequestCode.GET_SYSTEM_TOPIC_LIST_FROM_NS:
            return this.getSystemTopicListFromNs(ctx, request);
        case RequestCode.GET_UNIT_TOPIC_LIST:
            return this.getUnitTopicList(ctx, request);
        case RequestCode.GET_HAS_UNIT_SUB_TOPIC_LIST:
            return this.getHasUnitSubTopicList(ctx, request);
        case RequestCode.GET_HAS_UNIT_SUB_UNUNIT_TOPIC_LIST:
            return this.getHasUnitSubUnUnitTopicList(ctx, request);
        case RequestCode.UPDATE_NAMESRV_CONFIG:
            return this.updateConfig(ctx, request);
        case RequestCode.GET_NAMESRV_CONFIG:
            return this.getConfig(ctx, request);
        default:
            break;
    }
    return null;
}

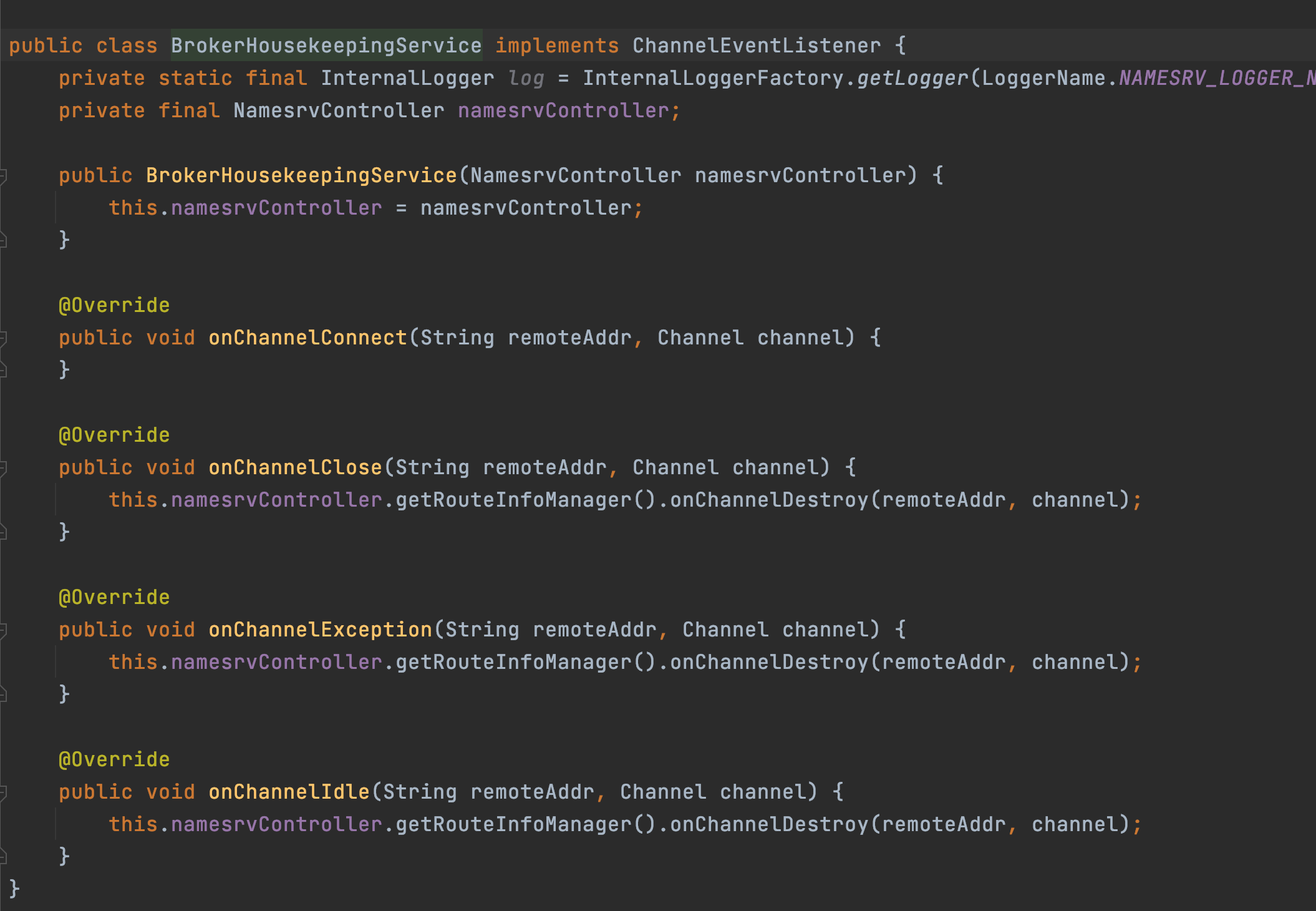

In addition, channel disconnect events also trigger state updates; that logic is in the BrokerHousekeepingService class.

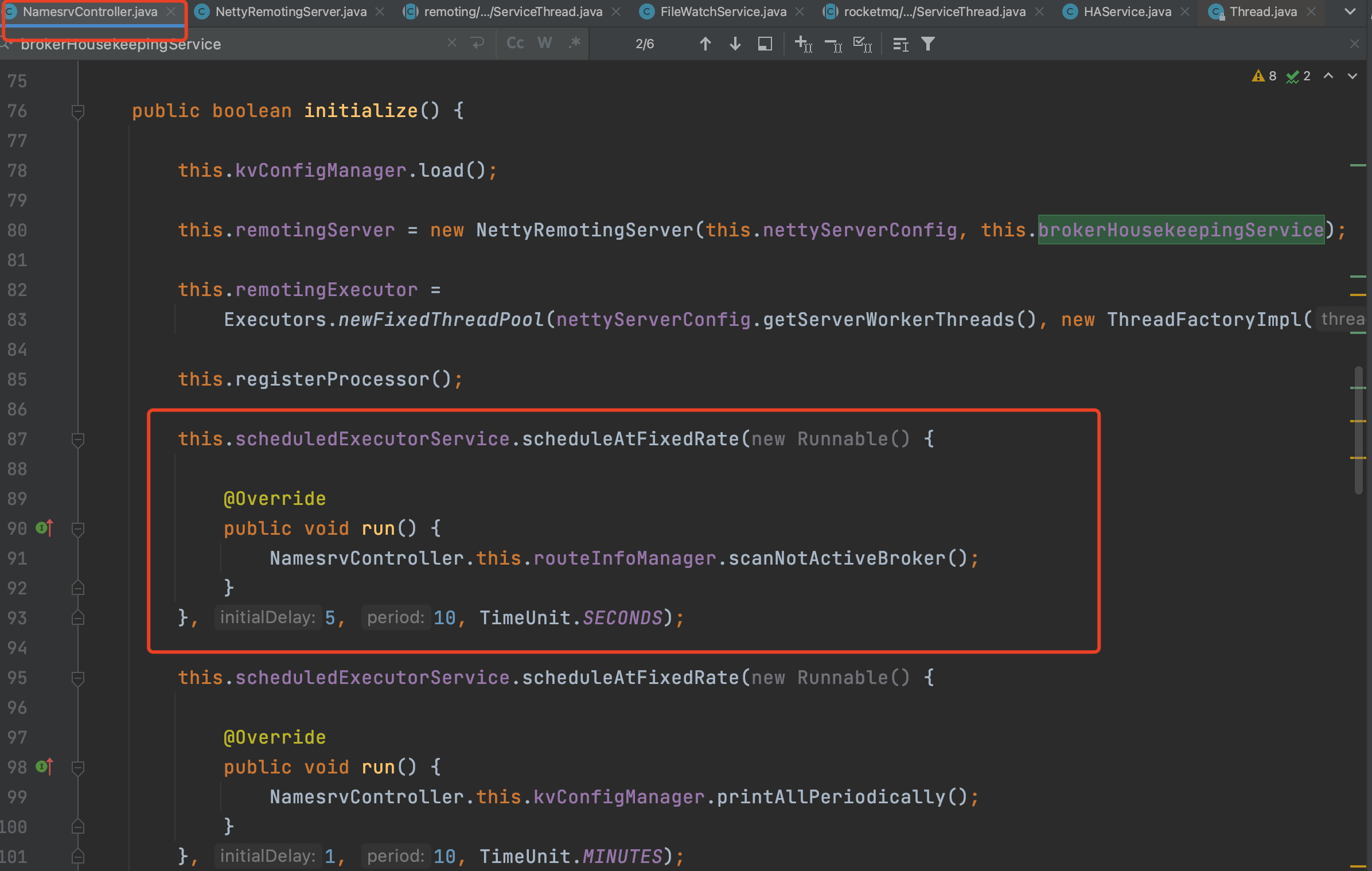
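BrokerHousekeepingService is passed to the NettyRemotingServer as its channel event listener (it is the second constructor argument in NamesrvController.initialize()), and on close, exception or idle events it asks RouteInfoManager to clean up through onChannelDestroy. The sketch below shows that event-driven cleanup with hypothetical names: ChannelListener stands in for RocketMQ's ChannelEventListener, LiveBrokerTable for the broker liveness table, and a plain Object serves as the channel handle so no Netty dependency is needed.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

// Hypothetical callback interface standing in for RocketMQ's ChannelEventListener.
interface ChannelListener {
    void onChannelClose(String remoteAddr, Object channel);
    void onChannelException(String remoteAddr, Object channel);
    void onChannelIdle(String remoteAddr, Object channel);
}

// Hypothetical liveness table: broker address -> the channel the broker registered on.
class LiveBrokerTable {
    private final Map<String, Object> brokerChannels = new HashMap<>();

    synchronized void register(String brokerAddr, Object channel) {
        brokerChannels.put(brokerAddr, channel);
    }

    // Remove every broker entry whose registered channel is the one that died.
    synchronized void onChannelDestroy(String remoteAddr, Object channel) {
        Iterator<Map.Entry<String, Object>> it = brokerChannels.entrySet().iterator();
        while (it.hasNext()) {
            if (it.next().getValue() == channel) {
                it.remove();
            }
        }
    }

    synchronized boolean isLive(String brokerAddr) {
        return brokerChannels.containsKey(brokerAddr);
    }
}

// All three "the channel is gone" events lead to the same cleanup.
class HousekeepingListener implements ChannelListener {
    private final LiveBrokerTable table;

    HousekeepingListener(LiveBrokerTable table) {
        this.table = table;
    }

    @Override
    public void onChannelClose(String remoteAddr, Object channel) {
        table.onChannelDestroy(remoteAddr, channel);
    }

    @Override
    public void onChannelException(String remoteAddr, Object channel) {
        table.onChannelDestroy(remoteAddr, channel);
    }

    @Override
    public void onChannelIdle(String remoteAddr, Object channel) {
        table.onChannelDestroy(remoteAddr, channel);
    }
}
```

Matching on channel identity rather than on the remote address string avoids removing a broker that has already reconnected on a new channel from the same address.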

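The NameServer also checks timestamps periodically. Each heartbeat a Broker sends to the NameServer updates that Broker's timestamp; when the NameServer finds a timestamp that has not been updated for too long, it triggers the cleanup logic below: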

public void scanNotActiveBroker() {
    Iterator<Entry<String, BrokerLiveInfo>> it = this.brokerLiveTable.entrySet().iterator();
    while (it.hasNext()) {
        Entry<String, BrokerLiveInfo> next = it.next();
        long last = next.getValue().getLastUpdateTimestamp();
        if ((last + BROKER_CHANNEL_EXPIRED_TIME) < System.currentTimeMillis()) {
            RemotingUtil.closeChannel(next.getValue().getChannel());
            it.remove();
            log.warn("The broker channel expired, {} {}ms", next.getKey(), BROKER_CHANNEL_EXPIRED_TIME);
            this.onChannelDestroy(next.getKey(), next.getValue().getChannel());
        }
    }
}

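The scan runs every 10 seconds; a Broker whose timestamp has not been updated for more than 2 minutes is removed from the tables maintained by RouteInfoManager.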
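The expiry check is a plain timestamp comparison. This sketch reproduces it with hypothetical names (HeartbeatTable, heartbeat, scanNotActive) and takes the current time as a parameter instead of calling System.currentTimeMillis(), so the behavior is deterministic; the 2-minute threshold is the same value as BROKER_CHANNEL_EXPIRED_TIME. Running it every 10 seconds would be done with a ScheduledExecutorService, as NamesrvController.initialize() does.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of timestamp-based broker expiry (not RocketMQ's class).
class HeartbeatTable {
    static final long EXPIRED_MILLIS = 1000L * 60 * 2; // 2 minutes, same as BROKER_CHANNEL_EXPIRED_TIME

    // broker address -> time of the last heartbeat, in milliseconds
    private final Map<String, Long> lastUpdate = new HashMap<>();

    // Every heartbeat (re-registration) from a broker refreshes its timestamp.
    synchronized void heartbeat(String brokerAddr, long now) {
        lastUpdate.put(brokerAddr, now);
    }

    // Remove and return every broker whose last heartbeat is older than the threshold.
    synchronized List<String> scanNotActive(long now) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastUpdate.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> e = it.next();
            if (e.getValue() + EXPIRED_MILLIS < now) {
                String addr = e.getKey();
                it.remove(); // the real code also closes the channel and calls onChannelDestroy
                expired.add(addr);
            }
        }
        return expired;
    }

    synchronized boolean contains(String brokerAddr) {
        return lastUpdate.containsKey(brokerAddr);
    }
}
```

Because the scan itself only runs every 10 seconds, a broker that dies silently can stay in the tables for up to roughly 2 minutes plus one scan interval (about 130 seconds) before it is removed.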

Interaction between roles

Creating a Topic

Topic creation serves as the single example here:

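The admin client is started through MQAdminStartup. A command such as updateTopic -b xxx -t xxx is mapped to the corresponding SubCommand; the one that creates topics is UpdateTopicSubCommand, which implements SubCommand and has two main methods, buildCommandlineOptions and execute.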

First, the buildCommandlineOptions method:

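RocketMQ parses the command line with org.apache.commons.cli. The options declared here are used to parse the arguments into a CommandLine; execute() then reads that CommandLine and the result is finally turned into a RemotingCommand that is sent to the Broker for execution.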

@Override
public Options buildCommandlineOptions(Options options) {
    OptionGroup optionGroup = new OptionGroup(); // mutually exclusive group: exactly one of -b / -c is required
    Option opt = new Option("b", "brokerAddr", true, "create topic to which broker");
    optionGroup.addOption(opt);

    opt = new Option("c", "clusterName", true, "create topic to which cluster");
    optionGroup.addOption(opt);

    optionGroup.setRequired(true);
    options.addOptionGroup(optionGroup);

    opt = new Option("t", "topic", true, "topic name");
    opt.setRequired(true);
    options.addOption(opt);

    opt = new Option("r", "readQueueNums", true, "set read queue nums");
    opt.setRequired(false);
    options.addOption(opt);

    opt = new Option("w", "writeQueueNums", true, "set write queue nums");
    opt.setRequired(false);
    options.addOption(opt);

    opt = new Option("p", "perm", true, "set topic's permission(2|4|6), intro[2:W 4:R; 6:RW]");
    opt.setRequired(false);
    options.addOption(opt);

    opt = new Option("o", "order", true, "set topic's order(true|false)");
    opt.setRequired(false);
    options.addOption(opt);

    opt = new Option("u", "unit", true, "is unit topic (true|false)");
    opt.setRequired(false);
    options.addOption(opt);

    opt = new Option("s", "hasUnitSub", true, "has unit sub (true|false)");
    opt.setRequired(false);
    options.addOption(opt);

    return options;
}
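The -p value is a bitmask, as the option's help text says (2 = write, 4 = read, 6 = read + write). The sketch below decodes it; the bit values mirror RocketMQ's PermName constants (PERM_WRITE = 1 << 1, PERM_READ = 1 << 2), but the PermBits class and its describe method are hypothetical.

```java
// Hypothetical helper mirroring the permission bits accepted by the -p option.
final class PermBits {
    static final int PERM_WRITE = 1 << 1; // 2
    static final int PERM_READ = 1 << 2;  // 4

    private PermBits() {}

    static boolean isReadable(int perm) {
        return (perm & PERM_READ) == PERM_READ;
    }

    static boolean isWritable(int perm) {
        return (perm & PERM_WRITE) == PERM_WRITE;
    }

    // Human-readable form matching the help text: 2 -> "W", 4 -> "R", 6 -> "RW".
    static String describe(int perm) {
        StringBuilder sb = new StringBuilder();
        if (isReadable(perm)) {
            sb.append('R');
        }
        if (isWritable(perm)) {
            sb.append('W');
        }
        return sb.toString();
    }
}
```

So -p 6 is simply 4 | 2, and -p 4 yields a topic that is readable but not writable.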

UpdateTopicSubCommand.execute()
  --> DefaultMQAdminExt.createAndUpdateTopicConfig()
  --> DefaultMQAdminExtImpl.createAndUpdateTopicConfig()
  --> MQClientInstance.getMQClientAPIImpl().createTopic()
  --> this.remotingClient.invokeSync(CreateTopicCommand)

The topic creation logic lives in the execute method, and the request is finally built and sent in MQClientAPIImpl.

The relevant source:

@Override
public void execute(final CommandLine commandLine, final Options options,
    RPCHook rpcHook) throws SubCommandException {
    DefaultMQAdminExt defaultMQAdminExt = new DefaultMQAdminExt(rpcHook);
    defaultMQAdminExt.setInstanceName(Long.toString(System.currentTimeMillis()));

    try {
        TopicConfig topicConfig = new TopicConfig();
        topicConfig.setReadQueueNums(8);
        topicConfig.setWriteQueueNums(8);
        topicConfig.setTopicName(commandLine.getOptionValue('t').trim());

        // readQueueNums
        if (commandLine.hasOption('r')) {
            topicConfig.setReadQueueNums(Integer.parseInt(commandLine.getOptionValue('r').trim()));
        }

        // writeQueueNums
        if (commandLine.hasOption('w')) {
            topicConfig.setWriteQueueNums(Integer.parseInt(commandLine.getOptionValue('w').trim()));
        }

        // perm
        if (commandLine.hasOption('p')) {
            topicConfig.setPerm(Integer.parseInt(commandLine.getOptionValue('p').trim()));
        }

        boolean isUnit = false;
        if (commandLine.hasOption('u')) {
            isUnit = Boolean.parseBoolean(commandLine.getOptionValue('u').trim());
        }

        boolean isCenterSync = false;
        if (commandLine.hasOption('s')) {
            isCenterSync = Boolean.parseBoolean(commandLine.getOptionValue('s').trim());
        }

        int topicCenterSync = TopicSysFlag.buildSysFlag(isUnit, isCenterSync);
        topicConfig.setTopicSysFlag(topicCenterSync);

        boolean isOrder = false;
        if (commandLine.hasOption('o')) {
            isOrder = Boolean.parseBoolean(commandLine.getOptionValue('o').trim());
        }
        topicConfig.setOrder(isOrder);

        if (commandLine.hasOption('b')) {
            String addr = commandLine.getOptionValue('b').trim();

            defaultMQAdminExt.start();
            defaultMQAdminExt.createAndUpdateTopicConfig(addr, topicConfig); // sends the command to the Broker

            if (isOrder) {
                String brokerName = CommandUtil.fetchBrokerNameByAddr(defaultMQAdminExt, addr);
                String orderConf = brokerName + ":" + topicConfig.getWriteQueueNums();
                defaultMQAdminExt.createOrUpdateOrderConf(topicConfig.getTopicName(), orderConf, false);
                System.out.printf("%s", String.format("set broker orderConf. isOrder=%s, orderConf=[%s]",
                    isOrder, orderConf.toString()));
            }
            System.out.printf("create topic to %s success.%n", addr);
            System.out.printf("%s", topicConfig);
            return;

        } else if (commandLine.hasOption('c')) {
            String clusterName = commandLine.getOptionValue('c').trim();

            defaultMQAdminExt.start();

            Set<String> masterSet =
                CommandUtil.fetchMasterAddrByClusterName(defaultMQAdminExt, clusterName);
            for (String addr : masterSet) { // create the topic on every master Broker in the cluster
                defaultMQAdminExt.createAndUpdateTopicConfig(addr, topicConfig);
                System.out.printf("create topic to %s success.%n", addr);
            }

            if (isOrder) {
                Set<String> brokerNameSet =
                    CommandUtil.fetchBrokerNameByClusterName(defaultMQAdminExt, clusterName);
                StringBuilder orderConf = new StringBuilder();
                String splitor = "";
                for (String s : brokerNameSet) {
                    orderConf.append(splitor).append(s).append(":")
                        .append(topicConfig.getWriteQueueNums());
                    splitor = ";";
                }
                defaultMQAdminExt.createOrUpdateOrderConf(topicConfig.getTopicName(),
                    orderConf.toString(), true);
                System.out.printf("set cluster orderConf. isOrder=%s, orderConf=[%s]", isOrder, orderConf);
            }

            System.out.printf("%s", topicConfig);
            return;
        }

        ServerUtil.printCommandLineHelp("mqadmin " + this.commandName(), options);
    } catch (Exception e) {
        throw new SubCommandException(this.getClass().getSimpleName() + " command failed", e);
    } finally {
        defaultMQAdminExt.shutdown();
    }
}
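For ordered topics, execute() stores an order configuration string of the form brokerName:writeQueueNums, with one entry per broker joined by ";" in the cluster case. The hypothetical OrderConf helper below isolates just that formatting step so the resulting string is easy to see; it is an illustration, not a RocketMQ API.

```java
import java.util.Collection;

// Hypothetical helper reproducing the orderConf format built in execute().
final class OrderConf {
    private OrderConf() {}

    // e.g. ["broker-a", "broker-b"], 8 -> "broker-a:8;broker-b:8"
    static String build(Collection<String> brokerNames, int writeQueueNums) {
        StringBuilder orderConf = new StringBuilder();
        String splitor = ""; // no separator before the first entry
        for (String name : brokerNames) {
            orderConf.append(splitor).append(name).append(':').append(writeQueueNums);
            splitor = ";";
        }
        return orderConf.toString();
    }
}
```

With -b only a single brokerName:N entry is written (the last argument to createOrUpdateOrderConf is false); with -c every broker name in the cluster contributes one entry and the flag is true.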

The client sends the command

The final execution logic, MQClientAPIImpl.createTopic:

public void createTopic(final String addr, final String defaultTopic, final TopicConfig topicConfig,
    final long timeoutMillis)
    throws RemotingException, MQBrokerException, InterruptedException, MQClientException {
    CreateTopicRequestHeader requestHeader = new CreateTopicRequestHeader();
    requestHeader.setTopic(topicConfig.getTopicName());
    requestHeader.setDefaultTopic(defaultTopic);
    requestHeader.setReadQueueNums(topicConfig.getReadQueueNums());
    requestHeader.setWriteQueueNums(topicConfig.getWriteQueueNums());
    requestHeader.setPerm(topicConfig.getPerm());
    requestHeader.setTopicFilterType(topicConfig.getTopicFilterType().name());
    requestHeader.setTopicSysFlag(topicConfig.getTopicSysFlag());
    requestHeader.setOrder(topicConfig.isOrder());

    // build the topic-creation command and send it synchronously
    RemotingCommand request = RemotingCommand.createRequestCommand(RequestCode.UPDATE_AND_CREATE_TOPIC, requestHeader);

    RemotingCommand response = this.remotingClient.invokeSync(MixAll.brokerVIPChannel(this.clientConfig.isVipChannelEnabled(), addr),
        request, timeoutMillis);
    assert response != null;
    switch (response.getCode()) {
        case ResponseCode.SUCCESS: {
            return;
        }
        default:
            break;
    }

    throw new MQClientException(response.getCode(), response.getRemark());
}
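invokeSync blocks until the matching response arrives. In RocketMQ's NettyRemotingAbstract this is done by tagging each request with an opaque id, parking a ResponseFuture in a responseTable keyed by that id, and completing it when a response carrying the same id is read. The sketch below shows only that correlation idea with CompletableFuture; SyncInvoker, onResponse and the string payloads are hypothetical, and the network is simulated by a callback.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BiConsumer;

// Hypothetical sketch of request/response correlation by an "opaque" id.
class SyncInvoker {
    private final AtomicInteger nextOpaque = new AtomicInteger();
    // opaque id -> future waiting for that response (plays the role of responseTable)
    private final Map<Integer, CompletableFuture<String>> responseTable = new ConcurrentHashMap<>();
    // Simulated transport: receives (opaque, request) and is expected to call onResponse later.
    private final BiConsumer<Integer, String> transport;

    SyncInvoker(BiConsumer<Integer, String> transport) {
        this.transport = transport;
    }

    // Send a request and block until its response arrives or the timeout expires.
    String invokeSync(String request, long timeoutMillis) {
        int opaque = nextOpaque.incrementAndGet();
        CompletableFuture<String> future = new CompletableFuture<>();
        responseTable.put(opaque, future);
        try {
            transport.accept(opaque, request);
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            throw new IllegalStateException("no response for opaque " + opaque + " within " + timeoutMillis + " ms", e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for opaque " + opaque, e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            responseTable.remove(opaque); // always clean up, including after a timeout
        }
    }

    // Called by the I/O side when a response with the given opaque id is read.
    void onResponse(int opaque, String response) {
        CompletableFuture<String> future = responseTable.get(opaque);
        if (future != null) {
            future.complete(response);
        }
    }
}
```

Removing the entry in finally matters: a late response for a request that already timed out finds nothing in the table and is simply dropped.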

Why not ZooKeeper

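ZooKeeper is a well-known distributed coordination service and a powerful one, supporting clustering, master election and more. RocketMQ's coordination center, however, needs no master election: NameServer nodes have no master/slave roles, and all RocketMQ needs is a lightweight metadata store.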

The NameServer also has strict stability requirements, and a purpose-built component is easier to maintain.

Underlying communication mechanism

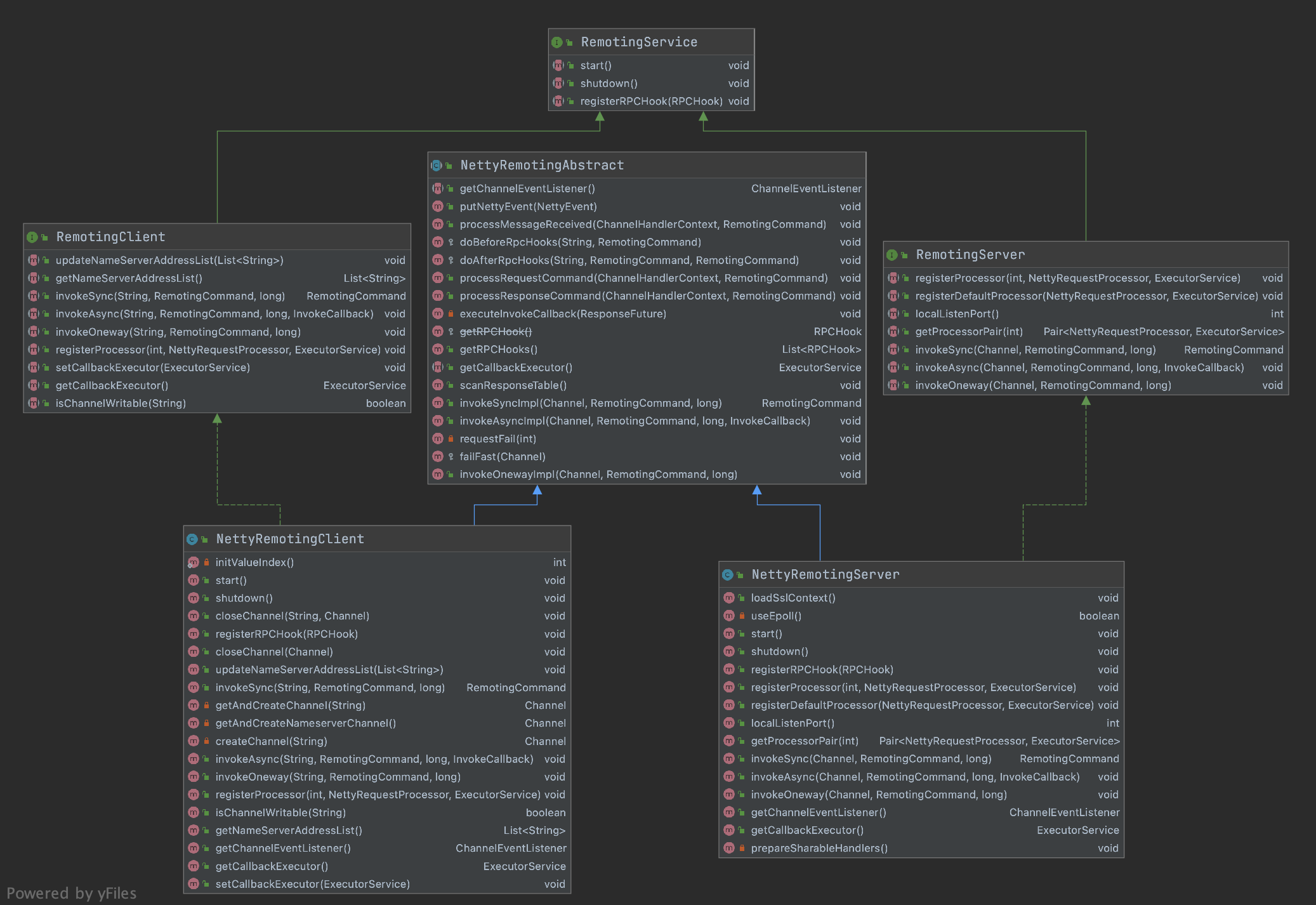

Remoting module

All communication code is in the Remoting module; the main class structure is shown in the figure above:

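RemotingService is the top-level interface; the server side and the client side each implement it and add their own specific methods. The two sides talk to each other with RemotingCommand objects, and Netty handles the underlying communication.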

Communication steps in the NameServer module

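Take the NameServer module as an example. At startup it creates a NamesrvController, which holds a NettyRemotingServer (the remotingServer field). The server keeps the processors that handle the different request codes; NamesrvController normally registers DefaultRequestProcessor, where the handling logic for every command can be found.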
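The processor registration described above can be pictured as a table from request code to a (processor, executor) pair, plus a default processor for codes without a specific entry. RocketMQ's NettyRemotingAbstract keeps a processorTable and a defaultRequestProcessor along these lines; the sketch below is a simplified, hypothetical version (Processor, ProcessorRegistry, string payloads instead of RemotingCommand, and java.util.concurrent.Executor instead of ExecutorService).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;

// Hypothetical processor interface; RocketMQ's NettyRequestProcessor takes (ctx, RemotingCommand).
interface Processor {
    String processRequest(int code, String body);
}

// Hypothetical registry: request code -> (processor, executor), with a default fallback.
class ProcessorRegistry {
    private static final class Binding {
        final Processor processor;
        final Executor executor;

        Binding(Processor processor, Executor executor) {
            this.processor = processor;
            this.executor = executor;
        }
    }

    private final Map<Integer, Binding> processorTable = new HashMap<>();
    private Binding defaultProcessor;

    void registerProcessor(int code, Processor p, Executor executor) {
        processorTable.put(code, new Binding(p, executor));
    }

    // A NameServer-style setup registers one default processor whose switch handles every code.
    void registerDefaultProcessor(Processor p, Executor executor) {
        defaultProcessor = new Binding(p, executor);
    }

    // Look up by code, fall back to the default, and run the processor on its own executor.
    CompletableFuture<String> dispatch(int code, String body) {
        Binding b = processorTable.getOrDefault(code, defaultProcessor);
        if (b == null) {
            CompletableFuture<String> failed = new CompletableFuture<>();
            failed.completeExceptionally(new IllegalArgumentException("no processor for code " + code));
            return failed;
        }
        return CompletableFuture.supplyAsync(() -> b.processor.processRequest(code, body), b.executor);
    }
}
```

Running each processor on its own executor keeps slow handlers off the Netty I/O threads, which is why initialize() creates the remotingExecutor thread pool.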

Key steps in NameServer startup:

org.apache.rocketmq.namesrv.NamesrvStartup#main0 // creates the NamesrvController
  --> org.apache.rocketmq.namesrv.NamesrvController#initialize // initializes the controller
  --> org.apache.rocketmq.namesrv.NamesrvController#registerProcessor // registers the processors

The logic of NamesrvController.initialize():

public boolean initialize() {
    this.kvConfigManager.load();

    // create the network server; brokerHousekeepingService receives its channel events
    this.remotingServer = new NettyRemotingServer(this.nettyServerConfig, this.brokerHousekeepingService);

    this.remotingExecutor =
        Executors.newFixedThreadPool(nettyServerConfig.getServerWorkerThreads(), new ThreadFactoryImpl("RemotingExecutorThread_"));

    this.registerProcessor(); // register the server's processors

    // every 10 s, after a 5 s initial delay: remove brokers whose heartbeat has expired
    this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
        @Override
        public void run() {
            NamesrvController.this.routeInfoManager.scanNotActiveBroker();
        }
    }, 5, 10, TimeUnit.SECONDS);

    // every 10 min, after a 1 min initial delay: print all KV configuration
    this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {
        @Override
        public void run() {
            NamesrvController.this.kvConfigManager.printAllPeriodically();
        }
    }, 1, 10, TimeUnit.MINUTES);

    //...

    return true;
}

DefaultRequestProcessor.processRequest is the same method listed in the state-maintenance section above: its parameters are Netty's ChannelHandlerContext and RocketMQ's RemotingCommand abstraction, and it dispatches on request.getCode() to the matching handler method.

The remaining logic is simply operations on the cluster information held locally in memory, carried out by RouteInfoManager.

Communication steps in the Consumer module

The Consumer's communication is ultimately handled by NettyRemotingClient.

Processors are registered on the NettyRemotingClient; in most cases the processor is ClientRemotingProcessor, whose handling logic is shown in the code below.

MQClientManager manages the MQClientInstance objects in a map: private ConcurrentMap<String/* clientId */, MQClientInstance> factoryTable.

MQClientInstance#mQClientAPIImpl holds the MQClientAPIImpl.

MQClientAPIImpl#remotingClient is the NettyRemotingClient instance.

Initialization happens mainly in the Consumer's start method.
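Because of factoryTable, producers and consumers in one process that share a clientId also share one MQClientInstance, and therefore one NettyRemotingClient. The get-or-create step can be sketched with ConcurrentMap.putIfAbsent so that two threads racing on the same clientId still end up with a single instance; ClientRegistry and ClientInstance are hypothetical stand-ins, not RocketMQ classes.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for MQClientInstance.
class ClientInstance {
    final String clientId;

    ClientInstance(String clientId) {
        this.clientId = clientId;
    }
}

// Hypothetical stand-in for MQClientManager's get-or-create logic.
class ClientRegistry {
    private final ConcurrentMap<String, ClientInstance> factoryTable = new ConcurrentHashMap<>();
    final AtomicInteger created = new AtomicInteger(); // counts constructed instances, for illustration

    ClientInstance getOrCreate(String clientId) {
        ClientInstance instance = factoryTable.get(clientId);
        if (instance == null) {
            instance = new ClientInstance(clientId);
            created.incrementAndGet();
            // If another thread registered an instance first, use that one and discard ours.
            ClientInstance prev = factoryTable.putIfAbsent(clientId, instance);
            if (prev != null) {
                instance = prev;
            }
        }
        return instance;
    }
}
```

The get-then-putIfAbsent shape follows MQClientManager.getOrCreateMQClientInstance in RocketMQ 4.x; ConcurrentMap.computeIfAbsent would be an equally valid way to express it.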

The processing logic of ClientRemotingProcessor:

@Override
public RemotingCommand processRequest(ChannelHandlerContext ctx,
    RemotingCommand request) throws RemotingCommandException {
    switch (request.getCode()) {
        case RequestCode.CHECK_TRANSACTION_STATE:
            return this.checkTransactionState(ctx, request);
        case RequestCode.NOTIFY_CONSUMER_IDS_CHANGED:
            return this.notifyConsumerIdsChanged(ctx, request);
        case RequestCode.RESET_CONSUMER_CLIENT_OFFSET:
            return this.resetOffset(ctx, request);
        case RequestCode.GET_CONSUMER_STATUS_FROM_CLIENT:
            return this.getConsumeStatus(ctx, request);
        case RequestCode.GET_CONSUMER_RUNNING_INFO:
            return this.getConsumerRunningInfo(ctx, request);
        case RequestCode.CONSUME_MESSAGE_DIRECTLY:
            return this.consumeMessageDirectly(ctx, request);
        case RequestCode.PUSH_REPLY_MESSAGE_TO_CLIENT:
            return this.receiveReplyMessage(ctx, request);
        default:
            break;
    }
    return null;
}

Protocol design and encoding/decoding

RocketMQ has its own communication protocol, which defines how a ByteBuffer and a RemotingCommand are converted into each other. The conversion methods are RemotingCommand.decode and RemotingCommand.encode.

The protocol is defined as follows:

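| Field | length | header length | header data | body data |
|---|---|---|---|---|
| Size | 4 bytes | 4 bytes | header length bytes | rest of the frame |

The length field counts everything after itself (the 4-byte header-length field, the header data and the body), which is why encode() allocates 4 + length bytes. Within the 4-byte header-length field, the highest byte holds the serialization type and the lower 3 bytes hold the header-data length (see markProtocolType in encode(), and getProtocolType / getHeaderLength in decode()).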

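The decode logic is below. decode receives a frame whose leading 4-byte length field has already been stripped by the Netty frame decoder, so byteBuffer.limit() is the value of that length field, and the body length is it minus the 4-byte header-length field and the header data: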

public static RemotingCommand decode(final ByteBuffer byteBuffer) {
    int length = byteBuffer.limit(); // java.nio ByteBuffer holding one frame without its length field
    int oriHeaderLen = byteBuffer.getInt();
    int headerLength = getHeaderLength(oriHeaderLen);

    byte[] headerData = new byte[headerLength];
    byteBuffer.get(headerData);

    RemotingCommand cmd = headerDecode(headerData, getProtocolType(oriHeaderLen));

    int bodyLength = length - 4 - headerLength;
    byte[] bodyData = null;
    if (bodyLength > 0) {
        bodyData = new byte[bodyLength];
        byteBuffer.get(bodyData);
    }
    cmd.body = bodyData;

    return cmd;
}

The encode logic:

public ByteBuffer encode() {
    // 1> header length size
    int length = 4;

    // 2> header data length
    byte[] headerData = this.headerEncode();
    length += headerData.length;

    // 3> body data length
    if (this.body != null) {
        length += body.length;
    }

    ByteBuffer result = ByteBuffer.allocate(4 + length);

    // length
    result.putInt(length);

    // header length
    result.put(markProtocolType(headerData.length, serializeTypeCurrentRPC));

    // header data
    result.put(headerData);

    // body data;
    if (this.body != null) {
        result.put(this.body);
    }

    result.flip();

    return result;
}
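To see the frame layout end to end, the sketch below encodes a header and a body the way encode() does and decodes them again. It is a simplified, hypothetical version: the header is passed in as raw bytes instead of being serialized from a RemotingCommand, FrameCodec and its methods are made up, and decode() reads and skips the 4-byte length field itself, which in RocketMQ the Netty frame decoder strips before RemotingCommand.decode runs. The serialization type goes into the top byte of the header-length field, as markProtocolType does.

```java
import java.nio.ByteBuffer;

// Hypothetical codec for the layout: | length | serializeType + headerLength | header | body |
final class FrameCodec {
    private FrameCodec() {}

    static final class Decoded {
        final byte serializeType;
        final byte[] header;
        final byte[] body;

        Decoded(byte serializeType, byte[] header, byte[] body) {
            this.serializeType = serializeType;
            this.header = header;
            this.body = body;
        }
    }

    // High byte: serialization type; low 3 bytes: header length.
    static int markProtocolType(int headerLength, byte serializeType) {
        return ((serializeType & 0xFF) << 24) | (headerLength & 0xFFFFFF);
    }

    static ByteBuffer encode(byte[] header, byte[] body, byte serializeType) {
        int bodyLength = body == null ? 0 : body.length;
        int length = 4 + header.length + bodyLength; // everything after the length field itself
        ByteBuffer buf = ByteBuffer.allocate(4 + length);
        buf.putInt(length);
        buf.putInt(markProtocolType(header.length, serializeType)); // big-endian, so the type byte comes first
        buf.put(header);
        if (body != null) {
            buf.put(body);
        }
        buf.flip(); // limit = bytes written, position = 0: ready to be read or written to a channel
        return buf;
    }

    static Decoded decode(ByteBuffer frame) {
        int length = frame.getInt(); // in RocketMQ this field is stripped before decode() is called
        int oriHeaderLen = frame.getInt();
        int headerLength = oriHeaderLen & 0xFFFFFF;
        byte serializeType = (byte) ((oriHeaderLen >>> 24) & 0xFF);
        byte[] header = new byte[headerLength];
        frame.get(header);
        byte[] body = new byte[length - 4 - headerLength];
        frame.get(body);
        return new Decoded(serializeType, header, body);
    }
}
```

Packing the type into the length field keeps the frame header at a fixed 8 bytes while still letting the receiver choose the right header deserializer.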

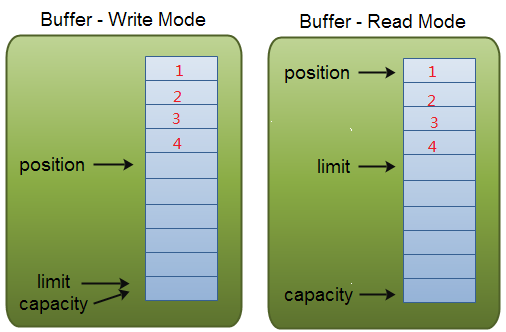

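encode() ends with a call to flip(). Read together with the ByteBuffer structure diagram above and the flip() source below, flip switches the buffer from write mode to read mode.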

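/**
 * Flips this buffer. The limit is set to the current position and then
 * the position is set to zero. If the mark is defined then it is discarded.
 *
 * After a sequence of channel-read or put operations, invoke this method to
 * prepare for a sequence of channel-write or relative get operations. For example:
 *
 *     buf.put(magic);    // Prepend header
 *     in.read(buf);      // Read data into rest of buffer
 *     buf.flip();        // Flip buffer
 *     out.write(buf);    // Write header + data to channel
 *
 * This method is often used in conjunction with the compact method when
 * transferring data from one place to another.
 *
 * @return This buffer
 */
public final Buffer flip() {
    limit = position;
    position = 0;
    mark = -1;
    return this;
}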
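A small runnable illustration of the position and limit changes described in the Javadoc: write three bytes into an 8-byte buffer, flip it, and read them back. FlipDemo is only an illustrative name.

```java
import java.nio.ByteBuffer;

final class FlipDemo {
    private FlipDemo() {}

    // Returns {position, limit} before the flip followed by {position, limit} after it.
    static int[] positionsAroundFlip() {
        ByteBuffer buf = ByteBuffer.allocate(8);
        buf.put((byte) 1).put((byte) 2).put((byte) 3); // write mode: position = 3, limit = capacity = 8
        int posBefore = buf.position();
        int limitBefore = buf.limit();
        buf.flip(); // read mode: limit = 3, position = 0
        return new int[] {posBefore, limitBefore, buf.position(), buf.limit()};
    }

    // After the flip, exactly the bytes that were written are readable.
    static int sumAfterFlip() {
        ByteBuffer buf = ByteBuffer.allocate(8);
        buf.put((byte) 1).put((byte) 2).put((byte) 3);
        buf.flip();
        int sum = 0;
        while (buf.hasRemaining()) {
            sum += buf.get();
        }
        return sum;
    }
}
```

Without the flip, a reader would start at position 3 and see the five unwritten zero bytes instead of the data, which is why encode() flips the buffer before returning it.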

Summary

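- The NameServer acts as the coordination center; its RouteInfoManager holds all cluster information, entirely in memory. Brokers report their state to it over long-lived connections and keep it fresh with periodic registrations, while producers and consumers fetch routing information from it. Because the NameServer is so central, it is deliberately designed to be lightweight;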

- Underneath, RocketMQ uses Netty for communication, abstracted behind the RemotingService interface, and all final handling happens in NettyRequestProcessor implementations. For example, most of the handling for MQClientInstance (the client) is in ClientRemotingProcessor: org.apache.rocketmq.remoting.netty.NettyRemotingClient#start sets up the channel pipeline and finally adds a handler, org.apache.rocketmq.remoting.netty.NettyRemotingClient.NettyClientHandler, which calls the processor's method RemotingCommand processRequest(ChannelHandlerContext ctx, RemotingCommand request) throws Exception;

浙公网安备 33010602011771号
