Hadoop Deployment (4)

3. Hadoop installation and configuration

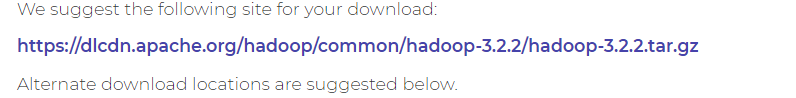

* Download Hadoop

Click the link to download.

* Installation

1. Extract the archive

tar -zxvf hadoop-3.2.2.tar.gz

2. Move the directory

mv hadoop-3.2.2 /usr/local/hadoop
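One pitfall with the move step: if /usr/local/hadoop already exists, mv places hadoop-3.2.2 inside it instead of renaming it. The sketch below guards against that. The function name install_hadoop is hypothetical (it is not part of Hadoop), and it assumes the archive contains a single top-level directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of Hadoop: extract a release tarball and
# move its top-level directory to <dest>, refusing to touch an existing <dest>.
install_hadoop() {
  local tarball="$1" dest="$2" workdir top
  if [ -e "$dest" ]; then
    echo "refusing to overwrite existing $dest" >&2
    return 1
  fi
  workdir="$(mktemp -d)" || return 1
  # Extract into a scratch directory; clean it up if extraction fails.
  tar -zxf "$tarball" -C "$workdir" || { rm -rf "$workdir"; return 1; }
  # Assumption: the archive holds exactly one top-level directory.
  top="$(ls "$workdir" | head -n 1)"
  mv "$workdir/$top" "$dest" && rmdir "$workdir"
}
```

It could be called as `install_hadoop hadoop-3.2.2.tar.gz /usr/local/hadoop`, run as a user with write permission to /usr/local.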

3. Configure Hadoop

Add the JDK path:

cd /usr/local/hadoop

vim etc/hadoop/hadoop-env.sh

vim etc/hadoop/yarn-env.sh

Add the JAVA_HOME environment variable to both hadoop-env.sh and yarn-env.sh:

export JAVA_HOME=/usr/java/jdk1.8.0_151
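Editing two files by hand makes it easy for them to drift apart. As a rough sketch, the same change can be scripted. The function name set_java_home is hypothetical, and it assumes the env file already exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make <env-file> contain exactly one uncommented
# JAVA_HOME line, pointing at <jdk-dir>.
set_java_home() {
  local file="$1" jdk="$2" tmp
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  tmp="$(mktemp)" || return 1
  # Drop any existing uncommented JAVA_HOME assignment (with or without export).
  # grep exits non-zero when nothing is left to print, which is not an error here.
  grep -v -E '^(export[[:space:]]+)?JAVA_HOME=' "$file" > "$tmp" || true
  printf 'export JAVA_HOME=%s\n' "$jdk" >> "$tmp"
  # Write back through cat so the original file keeps its permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

From /usr/local/hadoop this could be run once per file, for example `set_java_home etc/hadoop/hadoop-env.sh "$JDK_DIR"` and again for etc/hadoop/yarn-env.sh, where JDK_DIR is a placeholder for the JDK directory on your machine.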

Check the version:

bin/hadoop version

* Configure Hadoop

core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/hadoop/tmp</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/hadoop/dfs/data</value>

</property>

</configuration>
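The local paths used in core-site.xml and hdfs-site.xml above (/opt/hadoop/tmp, /opt/hadoop/dfs/name, /opt/hadoop/dfs/data) must be writable by the user that runs Hadoop. Creating them ahead of time is one way to surface permission problems before the first start. A minimal sketch, with a hypothetical function name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the tmp, NameNode and DataNode directories
# under <base> and fail if the current user cannot write to any of them.
make_hadoop_dirs() {
  local base="$1" dir
  for dir in tmp dfs/name dfs/data; do
    mkdir -p "$base/$dir" || return 1
    [ -w "$base/$dir" ] || { echo "not writable: $base/$dir" >&2; return 1; }
  done
}
```

With the values above this would be `make_hadoop_dirs /opt/hadoop`, run as the Hadoop user on each node.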

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>
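A missing closing tag in any of the four site files is a common cause of startup failures. The sketch below is a crude sanity check, not an XML parser: it only compares how often each tag is opened and closed, and it would be fooled by tags inside comments. The function name check_tag_balance is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report tags in a Hadoop site file whose opening and
# closing counts differ. Returns non-zero if any tag is unbalanced.
check_tag_balance() {
  local file="$1" tag opens closes status=0
  for tag in configuration property name value; do
    # grep -o prints one match per line, so wc -l yields the match count.
    opens="$(grep -o "<${tag}>" "$file" | wc -l)"
    closes="$(grep -o "</${tag}>" "$file" | wc -l)"
    if [ "$opens" -ne "$closes" ]; then
      echo "$file: <$tag> opened $opens times, closed $closes times" >&2
      status=1
    fi
  done
  return "$status"
}
```

From the Hadoop directory it could be run over each file, for example `for f in etc/hadoop/*-site.xml; do check_tag_balance "$f"; done`.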

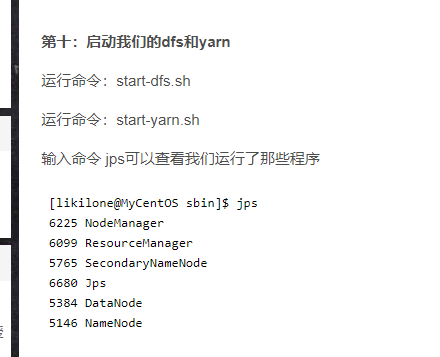

* Start Hadoop

Start Hadoop from the master server; the worker nodes are started automatically. Change into the Hadoop installation directory, /usr/local/hadoop.

(1) Initialize HDFS by running bin/hdfs namenode -format

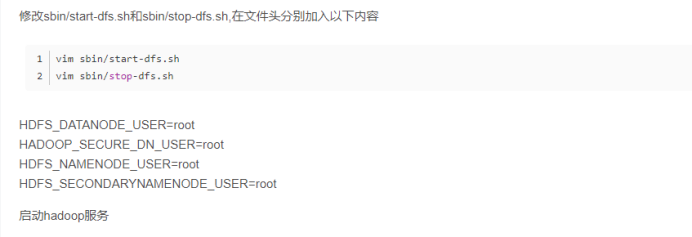

(2) Start everything with sbin/start-all.sh, or start the parts separately with sbin/start-dfs.sh and sbin/start-yarn.sh

(3) Stop the services: sbin/stop-all.sh

(4) Run jps to list the running Hadoop processes
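Reading jps output by eye gets tedious across several nodes. As a sketch, the check can be scripted. The function name missing_daemons is hypothetical. It reads jps output on standard input and prints every expected daemon that is not listed. Which daemons to expect on which node is an assumption about this cluster's layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each name from the space-separated list
# <expected> that does not appear in the jps output read from stdin.
missing_daemons() {
  local expected="$1" jps_output name
  jps_output="$(cat)"
  for name in $expected; do
    # jps prints "<pid> <ClassName>"; compare the whole second field so that
    # NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$jps_output" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "$name"
    fi
  done
}
```

On the master this might be `jps | missing_daemons "NameNode SecondaryNameNode ResourceManager"`, and on a worker `jps | missing_daemons "DataNode NodeManager"`. Empty output means nothing is missing.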

If this fails, you can try

* Access the web UIs from a browser on the local machine

(1) Stop the firewall: systemctl stop firewalld.service

(2) Open http://192.168.198.137:8088/ in the browser

(3) Open http://192.168.198.137:9870/ in the browser (the NameNode web UI; Hadoop 3.x uses port 9870 where 2.x used 50070)
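The same reachability check can be done from a terminal, which helps separate firewall problems from browser problems. A minimal sketch, assuming curl is installed; the function name http_ok is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <url> answers with a 2xx or 3xx status
# within five seconds, fail otherwise (including connection errors).
http_ok() {
  local url="$1" code
  code="$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url")" || return 1
  [ "$code" -ge 200 ] && [ "$code" -lt 400 ]
}
```

For example, `http_ok http://192.168.198.137:8088/ && echo "YARN UI reachable"`; the NameNode address can be checked the same way.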
