k8s-2 Ingress-nginx Installation and Usage Explained

I. Introduction to Ingress

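In Kubernetes, the IP addresses of Services and Pods can only be used inside the cluster network; applications outside the cluster cannot see them. To let external applications reach services inside the cluster, Kubernetes currently offers the following options: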

NodePort

LoadBalancer

Ingress

1. Ingress components

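Ingress controller

Translates newly added Ingress objects into Nginx configuration and makes that configuration take effect.

Ingress resource

Abstracts the Nginx configuration into an Ingress object. To add a new service, you only need to write a new Ingress YAML file.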
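Below is a minimal sketch of such an Ingress object, written against the networking.k8s.io/v1 API (Kubernetes 1.19+). The Ingress name, the host `demo.example.com`, the Service `demo-svc`, and the IngressClass `nginx` are all hypothetical:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress              # hypothetical name
spec:
  ingressClassName: nginx         # assumes an IngressClass named "nginx" exists
  rules:
    - host: demo.example.com      # hypothetical domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: demo-svc    # hypothetical Service in the same namespace
                port:
                  number: 80
```

The rule is only a declaration of "this host goes to this Service"; the controller turns it into an Nginx server block.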

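2. How Ingress works

1. The ingress controller talks to the Kubernetes API to dynamically detect changes to the Ingress rules in the cluster.

2. It then reads those rules, which state which domain maps to which Service, and generates a piece of Nginx configuration from them.

3. It writes that configuration into the nginx-ingress-controller Pod. This Pod runs an Nginx server, and the controller writes the generated configuration into /etc/nginx/nginx.conf.

4. Finally, it reloads Nginx so the configuration takes effect. This is how per-domain routing and dynamic updates are achieved.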

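3. What problems Ingress solves

1. Dynamic service configuration

With the traditional approach, every time a new service is added you may need to add a reverse-proxy entry at the traffic entry point that points to the new Kubernetes service. With Ingress, you only define an Ingress rule for the service. The controller picks it up automatically, so the entry point needs no extra manual changes.

2. Fewer exposed ports

Anyone who has set up Kubernetes knows that the first step is usually to disable the firewall. The main reason is that many Kubernetes services are exposed via NodePort, which effectively punches many holes into the host; this is neither secure nor tidy. Ingress avoids the problem: apart from the Ingress controller itself, which may need to be exposed, no other service needs to use NodePort.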

II. Deploying and configuring ingress-nginx

1. Download the configuration file (the all-in-one manifest)

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.29.0/deploy/static/mandatory.yaml

vim mandatory.yaml

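# Make a few simple edits to the downloaded YAML file:

spec:    # go to line 212 of the file, i.e. this line
  hostNetwork: true    # add this line to use the host network
  # wait up to five minutes for the drain of connections
  terminationGracePeriodSeconds: 300
  serviceAccountName: nginx-ingress-serviceaccount
  nodeSelector:
    Ingress: nginx    ## node label selector: decides which node the controller runs on
  containers:
    - name: nginx-ingress-controller
      image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.29.0
      # The image used by this resource. It is slow to download; a pre-downloaded copy can be imported instead (see the docker load step below).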

kubectl label nodes node01 Ingress=nginx

# Label node01 so that ingress-nginx is scheduled onto node01

kubectl get nodes node01 --show-labels

# Check that the label is present on node01

docker load < nginx-ingress.tar

# Import the image on node01 (or pull it yourself)

kubectl apply -f mandatory.yaml

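2. About the files

The manifest can be split into five separate files:

1. namespace.yaml

Creates a dedicated namespace, ingress-nginx.

2. configmap.yaml

A ConfigMap stores general configuration variables, much like a configuration file. It lets you manage the environment variables used by different modules of a distributed system in one object. Unlike a configuration file, it lives in the cluster's "environment" and supports all the standard Kubernetes operations.

From a data perspective, a ConfigMap is just a set of key-value pairs that holds information accessed by Pods or other resource objects (such as ReplicationControllers). This resembles the design of a Secret; the main difference is that a ConfigMap is normally not used for sensitive data, only for plain text.

A ConfigMap can hold individual environment-variable properties as well as whole configuration files.

When a Pod is created, it can be bound to a ConfigMap, and the application inside the Pod can use the ConfigMap's settings directly. In effect, the ConfigMap packages the configuration for the application and its runtime environment.

Pods typically use a ConfigMap to set environment variable values, set command-line arguments, or create configuration files.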
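The three usage patterns above can be sketched as follows. The ConfigMap `app-config`, the Pod `configmap-demo`, and their keys are hypothetical and are not part of the ingress-nginx manifest:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config                 # hypothetical name
data:
  LOG_LEVEL: "info"                # consumed as an environment variable
  app.properties: |                # consumed as a file
    greeting=hello
---
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo             # hypothetical name
spec:
  containers:
    - name: demo
      image: busybox
      # Kubernetes expands $(LOG_LEVEL) from the env var below (command-line argument use)
      command: ["sh", "-c", "echo level=$(LOG_LEVEL); cat /etc/app/app.properties; sleep 3600"]
      env:
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: LOG_LEVEL
      volumeMounts:
        - name: config
          mountPath: /etc/app      # each key of the ConfigMap becomes a file here
  volumes:
    - name: config
      configMap:
        name: app-config
```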

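3. default-backend.yaml

If the requested domain does not match any rule, the request is forwarded to the default-http-backend Service, which simply returns 404.

4. rbac.yaml

Handles RBAC authorization for Ingress. It creates the ServiceAccount, ClusterRole, Role, RoleBinding, and ClusterRoleBinding that Ingress uses.

5. with-rbac.yaml

The core of Ingress: it creates the ingress-controller. As mentioned earlier, the ingress-controller translates newly added Ingress objects into Nginx configuration.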

3. Choose the nodes to deploy on

# Label master02 and master03

kubectl label nodes huoban-k8s-master02 kubernetes.io=nginx-ingress

kubectl label nodes huoban-k8s-master03 kubernetes.io=nginx-ingress

4. Modify the configuration file

# vim mandatory.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
data:
  proxy-body-size: "200m"
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 works on 1.8 and later
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      - "networking.k8s.io"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      nodeSelector:
        kubernetes.io: nginx-ingress
      tolerations:
        - effect: NoSchedule
          operator: Exists
      hostNetwork: true
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.25.1
          imagePullPolicy: IfNotPresent
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          volumeMounts:
            - name: ssl
              mountPath: /etc/ingress-controller/ssl
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
      volumes:
        - name: ssl
          nfs:
            path: /conf/global_sign_ssl
            server: 0a52248244-vcq8.cn-hangzhou.nas.aliyuncs.com
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

5. Deploy

# kubectl apply -f mandatory.yaml

namespace/ingress-nginx created

configmap/nginx-configuration created

configmap/tcp-services created

configmap/udp-services created

serviceaccount/nginx-ingress-serviceaccount created

clusterrole.rbac.authorization.k8s.io/nginx-ingress-clusterrole created

role.rbac.authorization.k8s.io/nginx-ingress-role created

rolebinding.rbac.authorization.k8s.io/nginx-ingress-role-nisa-binding created

clusterrolebinding.rbac.authorization.k8s.io/nginx-ingress-clusterrole-nisa-binding created

deployment.apps/nginx-ingress-controller created

service/ingress-nginx created

6. Access test

# kubectl get pods -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ingress-controller-b44c4d4d7-9rprz 1/1 Running 0 63s 172.16.17.192 huoban-k8s-master03 <none> <none>

nginx-ingress-controller-b44c4d4d7-zfj5n 1/1 Running 0 63s 172.16.17.193 huoban-k8s-master02 <none> <none>

[root@HUOBAN-K8S-MASTER01 mq1]# curl 172.16.17.192

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>openresty/1.15.8.1</center>

</body>

</html>

[root@HUOBAN-K8S-MASTER01 mq1]# curl 172.16.17.193

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>openresty/1.15.8.1</center>

</body>

</html>

# kubectl get svc -n ingress-nginx -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

ingress-nginx ClusterIP 10.100.243.171 <none> 80/TCP,443/TCP 112s app.kubernetes.io/name=ingress-nginx,app.kubernetes.io/part-of=ingress-nginx

# curl http://10.100.243.171

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>openresty/1.15.8.1</center>

</body>

</html>

7. Deploy an application for testing

1. Create an nginx application

# vim app-nginx.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: app-nginx
  labels:
    app: app-nginx
spec:
  ports:
    - port: 80
  selector:
    app: app-nginx
    tier: production
---
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: app-nginx
spec:
  maxReplicas: 3
  minReplicas: 1
  scaleTargetRef:
    # The Deployment below is apps/v1; extensions/v1beta1 Deployments were removed in Kubernetes 1.16
    apiVersion: apps/v1
    kind: Deployment
    name: app-nginx
  targetCPUUtilizationPercentage: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-nginx
  labels:
    app: app-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app-nginx
      tier: production
  template:
    metadata:
      labels:
        app: app-nginx
        tier: production
    spec:
      containers:
        - name: app-nginx
          image: harbor.huoban.com/open/huoban-nginx:v1.1
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              memory: "50Mi"
              cpu: "25m"
          ports:
            - containerPort: 80
              name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
            - name: conf
              mountPath: /etc/nginx/conf.d
      volumes:
        - name: html
          nfs:
            path: /open/web/app
            server: 192.168.101.11
        - name: conf
          nfs:
            path: /open/conf/app/nginx
            server: 192.168.101.11
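The HPA above uses autoscaling/v1. On clusters where autoscaling/v2 is available (GA since Kubernetes 1.23), the same policy could be written as in the sketch below. It assumes metrics-server or another resource-metrics provider is installed. CPU utilization is measured against the container's CPU request, which is 25m in the Deployment above:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: app-nginx
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-nginx
  minReplicas: 1
  maxReplicas: 3
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 80   # same 80% target as targetCPUUtilizationPercentage
```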

2. Create the TLS certificate secret

kubectl create secret tls bjwf-ingress-secret --cert=server.crt --key=server.key --dry-run=client -o yaml > bjwf-ingress-secret.yaml

kubectl apply -f bjwf-ingress-secret.yaml

3. Create the application's Ingress

# vim app-nginx-ingress.yaml

---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: app-ingress
  namespace: default
spec:
  tls:
    - hosts:
        - www.bjwf125.com
      secretName: bjwf-ingress-secret
  rules:
    - host: www.bjwf125.com
      http:
        paths:
          - path: /
            backend:
              serviceName: app-nginx
              servicePort: 80
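The extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. On newer clusters, the same Ingress could be written against networking.k8s.io/v1, as sketched below. This assumes a controller release that supports that API (for example the ingress-nginx 1.x line, not the 0.2x images used above) and an IngressClass named `nginx`:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress
  namespace: default
spec:
  ingressClassName: nginx            # assumption: IngressClass "nginx" exists
  tls:
    - hosts:
        - www.bjwf125.com
      secretName: bjwf-ingress-secret
  rules:
    - host: www.bjwf125.com
      http:
        paths:
          - path: /
            pathType: Prefix         # pathType is required in v1
            backend:
              service:               # replaces serviceName/servicePort
                name: app-nginx
                port:
                  number: 80
```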

8. Access the service (screenshots omitted; requests are already correctly redirected to port 443).

--------------------------------------------------------2022 summary-------------------------------------------------------------------------------

References:

https://kubernetes.github.io/ingress-nginx/examples/

https://github.com/kubernetes/ingress-nginx/tree/main/docs/examples

https://cloud.tencent.com/developer/article/1761376

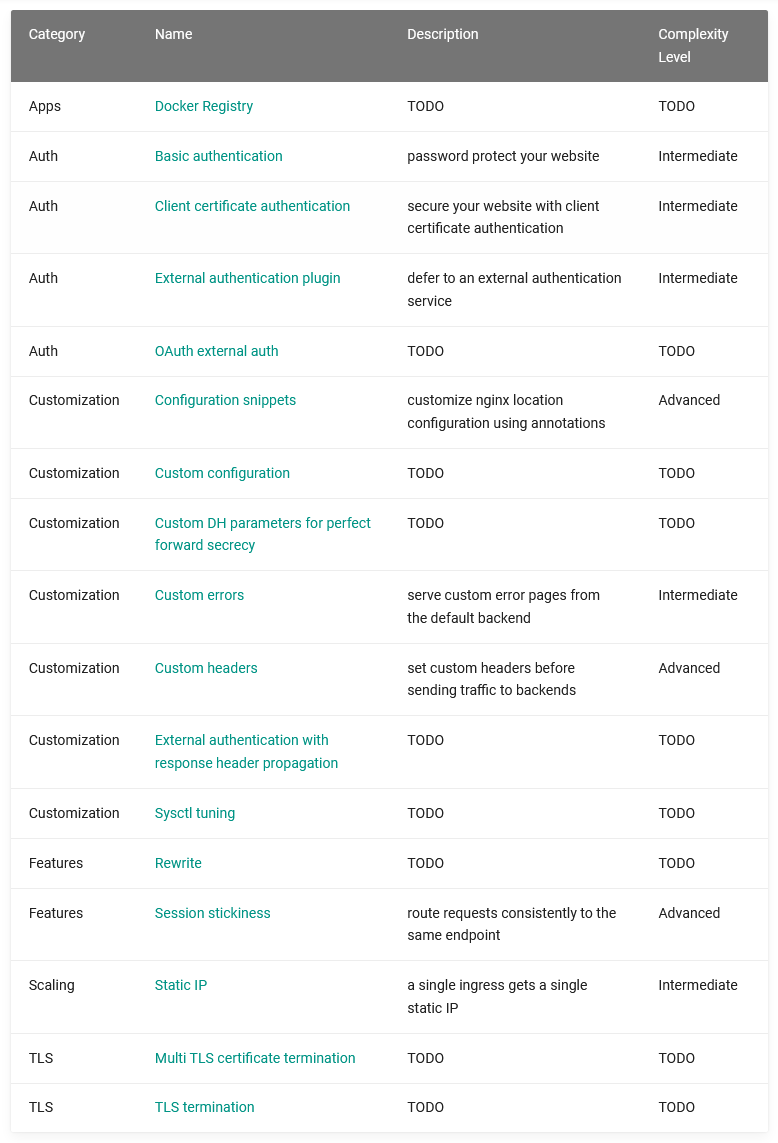

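Ingress examples

Prerequisites

TLS certificates

Unless otherwise noted, the TLS secret used in the examples is a 2048-bit RSA key/certificate pair with an arbitrarily chosen hostname, created as follows: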

$ openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"

Generating a 2048 bit RSA private key

................+++

................+++

writing new private key to 'tls.key'

-----

$ kubectl create secret tls tls-secret --key tls.key --cert tls.crt

secret "tls-secret" created

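Note: if you use CA authentication, described below, you need to sign the server certificate with the CA.

Client certificate authentication

CA authentication, also known as mutual authentication, allows the server and the client to verify each other's identity through a common CA.

We have a CA certificate, usually obtained from a certificate authority, and use it to sign both our server certificate and our client certificate. From then on, every request to our backend must present the client certificate.

Generate the CA key and certificate: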

openssl req -x509 -sha256 -newkey rsa:4096 -keyout ca.key -out ca.crt -days 356 -nodes -subj '/CN=My Cert Authority'

Generate the server key and certificate, and sign it with the CA certificate:

openssl req -new -newkey rsa:4096 -keyout server.key -out server.csr -nodes -subj '/CN=mydomain.com'

openssl x509 -req -sha256 -days 365 -in server.csr -CA ca.crt -CAkey ca.key -set_serial 01 -out server.crt

Generate the client key and certificate, and sign it with the CA certificate:

openssl req -new -newkey rsa:4096 -keyout client.key -out client.csr -nodes -subj '/CN=My Client'

openssl x509 -req -sha256 -days 365 -in client.csr -CA ca.crt -CAkey ca.key -set_serial 02 -out client.crt

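Once this is done, you can continue with the instructions here (https://kubernetes.github.io/ingress-nginx/examples/auth/client-certs/#creating-certificate-secrets).

Testing the HTTP service

All examples that need an HTTP service to test against use the standard http-svc Pod, which you can deploy as follows: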

$ kubectl create -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/docs/examples/http-svc.yaml

service "http-svc" created

replicationcontroller "http-svc" created

$ kubectl get po

NAME READY STATUS RESTARTS AGE

http-svc-p1t3t 1/1 Running 0 1d

$ kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

http-svc 10.0.122.116 <pending> 80:30301/TCP 1d

You can check that the HTTP service works by exposing it temporarily:

$ kubectl patch svc http-svc -p '{"spec":{"type": "LoadBalancer"}}'

"http-svc" patched

$ kubectl get svc http-svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

http-svc 10.0.122.116 <pending> 80:30301/TCP 1d

$ kubectl describe svc http-svc

Name: http-svc

Namespace: default

Labels: app=http-svc

Selector: app=http-svc

Type: LoadBalancer

IP: 10.0.122.116

LoadBalancer Ingress: 108.59.87.136

Port: http 80/TCP

NodePort: http 30301/TCP

Endpoints: 10.180.1.6:8080

Session Affinity: None

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {service-controller } Normal Type ClusterIP -> LoadBalancer

1m 1m 1 {service-controller } Normal CreatingLoadBalancer Creating load balancer

16s 16s 1 {service-controller } Normal CreatedLoadBalancer Created load balancer

$ curl 108.59.87.136

CLIENT VALUES:

client_address=10.240.0.3

command=GET

real path=/

query=nil

request_version=1.1

request_uri=http://108.59.87.136:8080/

SERVER VALUES:

server_version=nginx: 1.9.11 - lua: 10001

HEADERS RECEIVED:

accept=*/*

host=108.59.87.136

user-agent=curl/7.46.0

BODY:

-no body in request-

$ kubectl patch svc http-svc -p '{"spec":{"type": "NodePort"}}'

"http-svc" patched

Sticky sessions

Example:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx-test
  annotations:
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "INGRESSCOOKIE"
    nginx.ingress.kubernetes.io/session-cookie-expires: "172800"
    nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
spec:
  rules:
    - host: stickyingress.example.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /

Verification:

$ kubectl describe ing nginx-test

Name: nginx-test

Namespace: default

Address:

Default backend: default-http-backend:80 (10.180.0.4:8080,10.240.0.2:8080)

Rules:

Host Path Backends

---- ---- --------

stickyingress.example.com

/ nginx-service:80 (<none>)

Annotations:

affinity: cookie

session-cookie-name: INGRESSCOOKIE

session-cookie-expires: 172800

session-cookie-max-age: 172800

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

7s 7s 1 {nginx-ingress-controller } Normal CREATE default/nginx-test

$ curl -I http://stickyingress.example.com

HTTP/1.1 200 OK

Server: nginx/1.11.9

Date: Fri, 10 Feb 2017 14:11:12 GMT

Content-Type: text/html

Content-Length: 612

Connection: keep-alive

Set-Cookie: INGRESSCOOKIE=a9907b79b248140b56bb13723f72b67697baac3d; Expires=Sun, 12-Feb-17 14:11:12 GMT; Max-Age=172800; Path=/; HttpOnly

Last-Modified: Tue, 24 Jan 2017 14:02:19 GMT

ETag: "58875e6b-264"

Accept-Ranges: bytes

Basic authentication

$ htpasswd -c auth foo

New password: <bar>

New password:

Re-type new password:

Adding password for user foo

$ kubectl create secret generic basic-auth --from-file=auth

secret "basic-auth" created

$ kubectl get secret basic-auth -o yaml

apiVersion: v1
data:
  auth: Zm9vOiRhcHIxJE9GRzNYeWJwJGNrTDBGSERBa29YWUlsSDkuY3lzVDAK
kind: Secret
metadata:
  name: basic-auth
  namespace: default
type: Opaque

echo "
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-with-auth
  annotations:
    # type of authentication
    nginx.ingress.kubernetes.io/auth-type: basic
    # name of the secret that contains the user/password definitions
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # message to display with an appropriate context why the authentication is required
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - foo'
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            backend:
              serviceName: http-svc
              servicePort: 80
" | kubectl create -f -

$ curl -v http://10.2.29.4/ -H 'Host: foo.bar.com'

* Trying 10.2.29.4...

* Connected to 10.2.29.4 (10.2.29.4) port 80 (#0)

> GET / HTTP/1.1

> Host: foo.bar.com

> User-Agent: curl/7.43.0

> Accept: */*

>

< HTTP/1.1 401 Unauthorized

< Server: nginx/1.10.0

< Date: Wed, 11 May 2016 05:27:23 GMT

< Content-Type: text/html

< Content-Length: 195

< Connection: keep-alive

< WWW-Authenticate: Basic realm="Authentication Required - foo"

<

<html>

<head><title>401 Authorization Required</title></head>

<body bgcolor="white">

<center><h1>401 Authorization Required</h1></center>

<hr><center>nginx/1.10.0</center>

</body>

</html>

* Connection #0 to host 10.2.29.4 left intact

$ curl -v http://10.2.29.4/ -H 'Host: foo.bar.com' -u 'foo:bar'

* Trying 10.2.29.4...

* Connected to 10.2.29.4 (10.2.29.4) port 80 (#0)

* Server auth using Basic with user 'foo'

> GET / HTTP/1.1

> Host: foo.bar.com

> Authorization: Basic Zm9vOmJhcg==

> User-Agent: curl/7.43.0

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.10.0

< Date: Wed, 11 May 2016 06:05:26 GMT

< Content-Type: text/plain

< Transfer-Encoding: chunked

< Connection: keep-alive

< Vary: Accept-Encoding

<

CLIENT VALUES:

client_address=10.2.29.4

command=GET

real path=/

query=nil

request_version=1.1

request_uri=http://foo.bar.com:8080/

SERVER VALUES:

server_version=nginx: 1.9.11 - lua: 10001

HEADERS RECEIVED:

accept=*/*

connection=close

host=foo.bar.com

user-agent=curl/7.43.0

x-request-id=e426c7829ef9f3b18d40730857c3eddb

x-forwarded-for=10.2.29.1

x-forwarded-host=foo.bar.com

x-forwarded-port=80

x-forwarded-proto=http

x-real-ip=10.2.29.1

x-scheme=http

BODY:

* Connection #0 to host 10.2.29.4 left intact

-no body in request-

Client certificate authentication

kubectl create secret generic ca-secret --from-file=ca.crt=ca.crt

kubectl create secret generic tls-secret --from-file=tls.crt=server.crt --from-file=tls.key=server.key

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    # Enable client certificate authentication
    nginx.ingress.kubernetes.io/auth-tls-verify-client: "on"
    # Secret (namespace/name) containing the trusted CA certificates
    nginx.ingress.kubernetes.io/auth-tls-secret: "default/ca-secret"
    # Specify the verification depth in the client certificates chain
    nginx.ingress.kubernetes.io/auth-tls-verify-depth: "1"
    # Specify an error page to be redirected to verification errors
    nginx.ingress.kubernetes.io/auth-tls-error-page: "http://www.mysite.com/error-cert.html"
    # Specify if certificates are passed to upstream server
    nginx.ingress.kubernetes.io/auth-tls-pass-certificate-to-upstream: "true"
  name: nginx-test
  namespace: default
spec:
  rules:
    - host: mydomain.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /
  tls:
    - hosts:
        - mydomain.com
      secretName: tls-secret

External basic authentication

$ kubectl create -f ingress.yaml

ingress "external-auth" created

$ kubectl get ing external-auth

NAME HOSTS ADDRESS PORTS AGE

external-auth external-auth-01.sample.com 172.17.4.99 80 13s

$ kubectl get ing external-auth -o yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-url: https://httpbin.org/basic-auth/user/passwd
  creationTimestamp: 2016-10-03T13:50:35Z
  generation: 1
  name: external-auth
  namespace: default
  resourceVersion: "2068378"
  selfLink: /apis/networking/v1beta1/namespaces/default/ingresses/external-auth
  uid: 5c388f1d-8970-11e6-9004-080027d2dc94
spec:
  rules:
    - host: external-auth-01.sample.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /
status:
  loadBalancer:
    ingress:
      - ip: 172.17.4.99

$

External OAuth authentication

...
metadata:
  name: application
  annotations:
    nginx.ingress.kubernetes.io/auth-url: "https://$host/oauth2/auth"
    nginx.ingress.kubernetes.io/auth-signin: "https://$host/oauth2/start?rd=$escaped_request_uri"
...

Custom headers (configuration-snippet)

For a specific Ingress (more_set_headers adds a header to the response):

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx-configuration-snippet
  annotations:
    nginx.ingress.kubernetes.io/configuration-snippet: |
      more_set_headers "Request-Id: $req_id";
spec:
  rules:
    - host: custom.configuration.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /

For all Ingresses (proxy-set-headers adds these headers to the requests sent to the backends):

apiVersion: v1
data:
  X-Different-Name: "true"
  X-Request-Start: t=${msec}
  X-Using-Nginx-Controller: "true"
kind: ConfigMap
metadata:
  name: custom-headers
  namespace: ingress-nginx
---
apiVersion: v1
data:
  proxy-set-headers: "ingress-nginx/custom-headers"
kind: ConfigMap
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

Custom configuration

The NGINX configuration can be customized with a ConfigMap.

For example, to change the timeouts, we create a ConfigMap:

$ cat configmap.yaml
apiVersion: v1
data:
  proxy-connect-timeout: "10"
  proxy-read-timeout: "120"
  proxy-send-timeout: "120"
kind: ConfigMap
metadata:
  name: ingress-nginx-controller

Whenever the ConfigMap is updated, NGINX is reloaded with the new configuration.
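The ConfigMap's name and namespace have to match the controller's `--configmap` flag. The Deployment in part two passes `--configmap=$(POD_NAMESPACE)/nginx-configuration`, so with that manifest the same timeouts would go into the existing nginx-configuration ConfigMap instead. Here is a sketch that keeps the proxy-body-size value set there:

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration      # must match --configmap=$(POD_NAMESPACE)/nginx-configuration
  namespace: ingress-nginx       # the controller's POD_NAMESPACE
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
data:
  proxy-body-size: "200m"        # value already set in part two
  proxy-connect-timeout: "10"
  proxy-read-timeout: "120"
  proxy-send-timeout: "120"
```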

Sysctl tuning

Tuning parameters:

net.core.somaxconn = 32768

net.ipv4.ip_local_port_range = 1024 65000

This example demonstrates using an Init Container to adjust the sysctl defaults with kubectl patch:

kubectl patch deployment -n ingress-nginx nginx-ingress-controller \
  --patch="$(curl https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/docs/examples/customization/sysctl/patch.json)"

Changes:

- Backlog queue: net.core.somaxconn goes from 128 to 32768

- Ephemeral ports: net.ipv4.ip_local_port_range goes from 32768 60999 to 1024 65000

These changes are explained in an NGINX blog post (https://www.nginx.com/blog/tuning-nginx/).
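The contents of the upstream patch.json are not reproduced here. The sketch below shows the same idea as a YAML strategic-merge patch: a privileged init container sets both parameters before the controller starts. The container name `sysctl` and the `busybox` image are assumptions. Because the Deployment in part two uses `hostNetwork: true`, these values would apply to the node's network namespace:

```yaml
# Hypothetical patch file, e.g. sysctl-patch.yaml, for the controller Deployment
spec:
  template:
    spec:
      initContainers:
        - name: sysctl                  # hypothetical container name
          image: busybox                # assumption: any image that ships a sysctl binary
          securityContext:
            privileged: true            # writing kernel parameters requires privileges
          command:
            - sh
            - -c
            - sysctl -w net.core.somaxconn=32768 && sysctl -w net.ipv4.ip_local_port_range="1024 65000"
```

Since kubectl patch accepts YAML as well as JSON, it could be applied with `kubectl patch deployment -n ingress-nginx nginx-ingress-controller --patch "$(cat sysctl-patch.yaml)"`.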

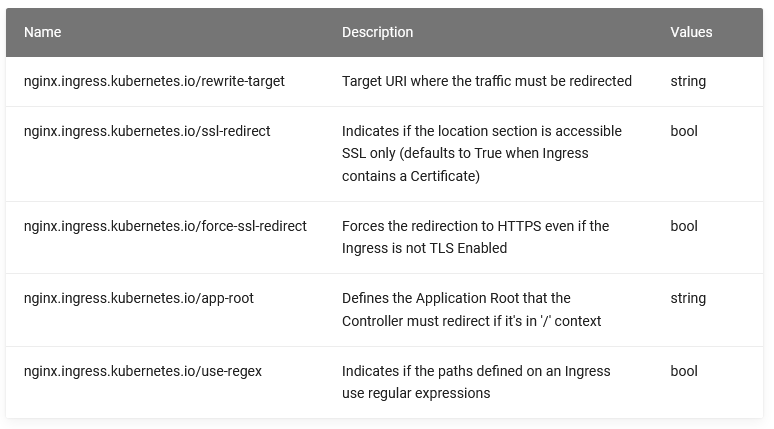

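Rewrite

Deployment

Rewriting can be controlled with the following annotations:

Examples

Rewrite target

Starting with version 0.22.0, Ingress definitions that use the annotation nginx.ingress.kubernetes.io/rewrite-target are not backwards compatible with earlier versions. In version 0.22.0 and later, any substring of the request URI that needs to be passed to the rewritten path must be explicitly defined in a capture group.

Captured groups are saved, in order, in numbered placeholders of the form $1, $2 ... $n. These placeholders can be used as parameters in the rewrite-target annotation.

Create an Ingress rule with a rewrite annotation:

$ echo '
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: rewrite
  namespace: default
spec:
  rules:
    - host: rewrite.bar.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /something(/|$)(.*)
' | kubectl create -f -

In this Ingress definition, any characters captured by (.*) are assigned to the placeholder $2, which is then used as the parameter of the rewrite-target annotation.

For example, the Ingress definition above results in the following rewrites:

- rewrite.bar.com/something is rewritten to rewrite.bar.com/

- rewrite.bar.com/something/ is rewritten to rewrite.bar.com/

- rewrite.bar.com/something/new is rewritten to rewrite.bar.com/new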
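On clusters that serve networking.k8s.io/v1, the same rewrite rule might look like the sketch below. The regex path is declared with pathType ImplementationSpecific, following the upstream v1 examples. The IngressClass name `nginx` is an assumption:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rewrite
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2   # $2 = text captured by (.*)
spec:
  ingressClassName: nginx                             # assumption: IngressClass "nginx"
  rules:
    - host: rewrite.bar.com
      http:
        paths:
          - path: /something(/|$)(.*)
            pathType: ImplementationSpecific          # used for regex paths in upstream v1 examples
            backend:
              service:
                name: http-svc
                port:
                  number: 80
```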

Application root

Create an Ingress rule with the app-root annotation:

$ echo "
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/app-root: /app1
  name: approot
  namespace: default
spec:
  rules:
    - host: approot.bar.com
      http:
        paths:
          - backend:
              serviceName: http-svc
              servicePort: 80
            path: /
" | kubectl create -f -

Check that the redirect works:

$ curl -I -k http://approot.bar.com/

HTTP/1.1 302 Moved Temporarily

Server: nginx/1.11.10

Date: Mon, 13 Mar 2017 14:57:15 GMT

Content-Type: text/html

Content-Length: 162

Location: http://approot.bar.com/app1

Connection: keep-alive

A complete example

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: todo
  namespace: default
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/app-root: /app/
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/configuration-snippet: |  ## use ingress-nginx's configuration-snippet to redirect static asset requests
      rewrite ^(/app)$ $1/ redirect;  ## append a trailing slash to /app
      rewrite ^/stylesheets/(.*)$ /app/stylesheets/$1 redirect;
      rewrite ^/images/(.*)$ /app/images/$1 redirect;
spec:
  rules:
    - host: todo.example.com
      http:
        paths:
          - backend:
              serviceName: todo
              servicePort: 3000
            path: /app(/|$)(.*)

TLS termination

Deployment

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx-test
spec:
  tls:
    - hosts:
        - foo.bar.com
      # This assumes tls-secret exists and the SSL
      # certificate contains a CN for foo.bar.com
      secretName: tls-secret
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            backend:
              # This assumes http-svc exists and routes to healthy endpoints
              serviceName: http-svc
              servicePort: 80

Verification

$ kubectl describe ing nginx-test

Name: nginx-test

Namespace: default

Address: 104.198.183.6

Default backend: default-http-backend:80 (10.180.0.4:8080,10.240.0.2:8080)

TLS:

tls-secret terminates

Rules:

Host Path Backends

---- ---- --------

*

http-svc:80 (<none>)

Annotations:

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

7s 7s 1 {nginx-ingress-controller } Normal CREATE default/nginx-test

7s 7s 1 {nginx-ingress-controller } Normal UPDATE default/nginx-test

7s 7s 1 {nginx-ingress-controller } Normal CREATE ip: 104.198.183.6

7s 7s 1 {nginx-ingress-controller } Warning MAPPING Ingress rule 'default/nginx-test' contains no path definition. Assuming /

$ curl 104.198.183.6 -L

curl: (60) SSL certificate problem: self signed certificate

More details here: http://curl.haxx.se/docs/sslcerts.html

$ curl 104.198.183.6 -Lk

CLIENT VALUES:

client_address=10.240.0.4

command=GET

real path=/

query=nil

request_version=1.1

request_uri=http://35.186.221.137:8080/

SERVER VALUES:

server_version=nginx: 1.9.11 - lua: 10001

HEADERS RECEIVED:

accept=*/*

connection=Keep-Alive

host=35.186.221.137

user-agent=curl/7.46.0

via=1.1 google

x-cloud-trace-context=f708ea7e369d4514fc90d51d7e27e91d/13322322294276298106

x-forwarded-for=104.132.0.80, 35.186.221.137

x-forwarded-proto=https

BODY:

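Pod Security Policy (PSP)

In most clusters today, every resource that creates Pods (for example Deployments and ReplicaSets) is allowed to do so by default. However, Kubernetes provides a more fine-grained authorization policy called Pod Security Policy (PSP).

PSP lets the cluster owner define the permissions of each object, such as creating Pods. If PSP is enabled on your cluster and you deploy ingress-nginx, you need to grant the Deployment permission to create Pods.

Before applying any other objects, first apply the PSP permissions by running:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/docs/examples/psp/psp.yaml

Note: the PSP permissions must be granted before the Deployment and ReplicaSet are created. PodSecurityPolicy was removed in Kubernetes 1.25, so this step only applies to older clusters that still have PSP enabled.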

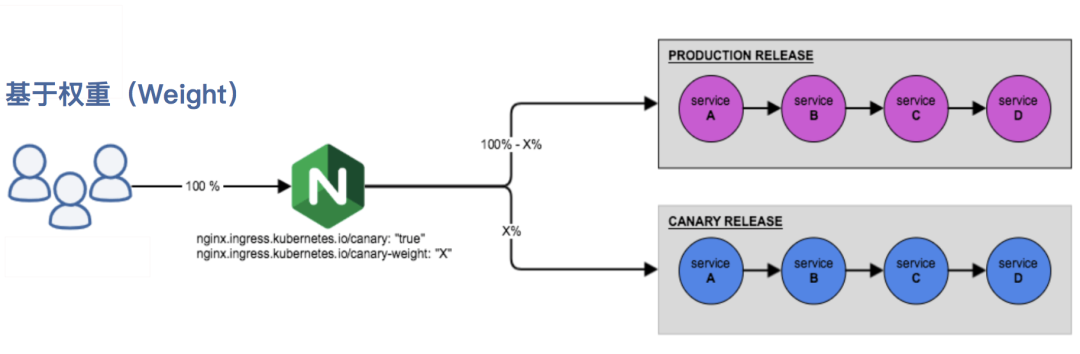

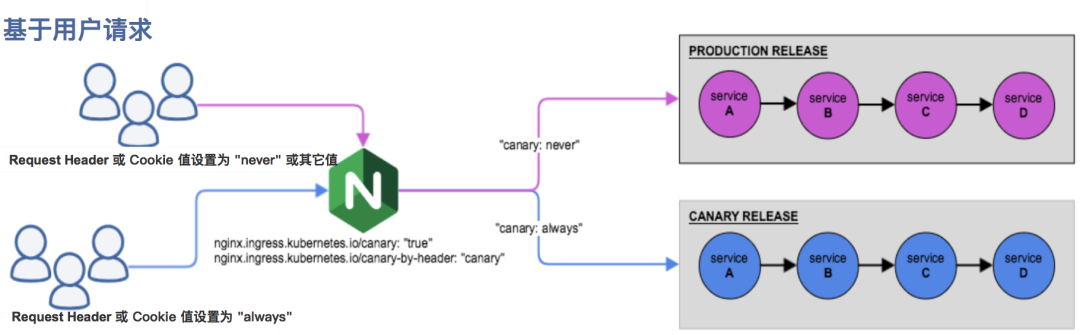

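Canary releases

In day-to-day work we regularly upgrade services to new versions, so we often use release strategies such as rolling updates, blue-green deployments, and canary (gray) releases. ingress-nginx supports canary releases and testing for different scenarios through Annotations, covering canary releases, blue-green deployments, and A/B testing. ingress-nginx Annotations support the following 4 Canary rules:

- nginx.ingress.kubernetes.io/canary-by-header: traffic splitting based on a request header, suited to canary releases and A/B testing. When the request header is set to always, the request is always sent to the Canary version. When it is set to never, the request is never sent to the Canary Ingress. For any other header value, the header is ignored and the request is evaluated against the other canary rules in order of precedence.

- nginx.ingress.kubernetes.io/canary-by-header-value: the request header value to match, telling the Ingress to route the request to the service specified in the Canary Ingress. When the request header is set to this value, the request is routed to the Canary Ingress. This rule lets users choose a custom header value and must be used together with the previous annotation (canary-by-header).

- nginx.ingress.kubernetes.io/canary-weight: traffic splitting by service weight, suited to blue-green deployments. The weight ranges from 0 to 100 and routes that percentage of requests to the service specified in the Canary Ingress. A weight of 0 means this canary rule sends no requests to the Canary Ingress's service. A weight of 100 means all requests are sent to the Canary Ingress.

- nginx.ingress.kubernetes.io/canary-by-cookie: traffic splitting based on a cookie, suited to canary releases and A/B testing. It names the cookie that tells the Ingress to route the request to the service specified in the Canary Ingress. When the cookie value is always, the request is routed to the Canary Ingress. When it is never, the request is not sent to the Canary Ingress. For any other value, the cookie is ignored and the request is evaluated against the other canary rules in order of precedence.

Note that the canary rules are evaluated in this order of precedence: canary-by-header -> canary-by-cookie -> canary-weight

Overall, the four annotations above fall into two categories:

- Weight-based Canary rules

- User-request-based Canary rules

Example

Create an Ingress object for accessing the production environment: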

# production-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: production
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
    - host: echo.example.com
      http:
        paths:
          - backend:
              serviceName: production
              servicePort: 80

Access the application from the command line:

curl echo.example.com

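1. Weight-based: the typical use case for weight-based traffic splitting is a blue-green deployment, which can be implemented by setting the weight to 0 or 100. For example, the Green version can be the primary release while the Blue version's Ingress is configured as the Canary. Initially the weight is set to 0, so no traffic is proxied to the Blue version. Once the new version has been tested and verified, set the Blue version's weight to 100, and all traffic moves from Green to Blue.

Create a weight-based Canary Ingress object for the application.

# canary-ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: canary
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/canary: "true"  # Canary must be enabled first to turn on canary releases
    nginx.ingress.kubernetes.io/canary-weight: "30"  # send 30% of traffic to this Canary version
spec:
  rules:
    - host: echo.example.com
      http:
        paths:
          - backend:
              serviceName: canary
              servicePort: 80

Access the application from the command line: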

curl -s echo.example.com
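With networking.k8s.io/v1, the weight-based canary above might be written as in the sketch below. It assumes an IngressClass named `nginx`. The production Ingress would need to be converted as well, keeping the same host and path so that the canary attaches to it:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "30"   # ~30% of requests go to the canary Service
spec:
  ingressClassName: nginx          # replaces the kubernetes.io/ingress.class annotation
  rules:
    - host: echo.example.com       # same host as the "production" Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: canary
                port:
                  number: 80
```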

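2. Based on a request header: header-based traffic splitting is typically used for canary releases or A/B testing.

Add the annotation nginx.ingress.kubernetes.io/canary-by-header: canary to the Canary Ingress object above (the header name can be anything) so that this Ingress splits traffic based on the request header. Because canary-by-header takes precedence over canary-weight, the existing canary-weight rule only applies to requests whose header value is neither always nor never.

annotations:
  kubernetes.io/ingress.class: nginx
  nginx.ingress.kubernetes.io/canary: "true"  # Canary must be enabled first to turn on canary releases
  nginx.ingress.kubernetes.io/canary-by-header: canary  # split traffic based on a header
  nginx.ingress.kubernetes.io/canary-weight: "30"  # only used when the canary header is neither always nor never

Note: when the request header is set to never or always, the request is never or always sent to the Canary version, respectively. For any other header value, the header is ignored and the request is evaluated against the other Canary rules in order of precedence.

Access the application from the command line: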

curl -s -H "canary: never" echo.example.com

curl -s -H "canary: always" echo.example.com

curl -s -H "canary: other-value" echo.example.com

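When the request sets the header to canary: other-value, ingress-nginx evaluates the request against the other Canary rules in order of precedence, so here it falls through to the canary-weight: "30" rule.

On top of the previous annotation (canary-by-header), we can now add a rule such as nginx.ingress.kubernetes.io/canary-by-header-value: user-value to route requests carrying that header value to the service specified in the Canary Ingress.

annotations:
  kubernetes.io/ingress.class: nginx
  nginx.ingress.kubernetes.io/canary: "true"  # Canary must be enabled first to turn on canary releases
  nginx.ingress.kubernetes.io/canary-by-header-value: user-value
  nginx.ingress.kubernetes.io/canary-by-header: canary  # split traffic based on a header
  nginx.ingress.kubernetes.io/canary-weight: "30"  # 30% of requests that do not match the header go to this Canary version

After updating the Ingress object in the same way, access the application again. When the request header matches canary: user-value, all requests are routed to the Canary version.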

curl -s -H "canary: user-value" echo.example.com

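3. Based on a cookie: the usage is similar to the request-header annotation. For example, in an A/B test you may want users located in Beijing to access the Canary version. Set the cookie annotation to nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing". The backend can then check logged-in users' requests and, if a user comes from Beijing, set the cookie users_from_Beijing to always. This ensures that users in Beijing access only the Canary version.

Likewise, update the Canary Ingress object to split traffic based on a cookie:

annotations:
  kubernetes.io/ingress.class: nginx
  nginx.ingress.kubernetes.io/canary: "true"  # Canary must be enabled first to turn on canary releases
  nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing"  # split traffic based on a cookie
  nginx.ingress.kubernetes.io/canary-weight: "30"  # only used when the cookie is neither always nor never

After updating the Ingress object above, set the cookie users_from_Beijing=always in the request and access the application's domain again: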

curl -s -b "users_from_Beijing=always" echo.example.com

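You can see that the requests are all routed to the Canary version. If the cookie is set to never, they are not routed to the Canary application.

Although the Pods and Services deployed in a Kubernetes cluster have their own IPs, those IPs cannot be reached from outside the cluster. Previously we could expose services by listening on a NodePort, but that approach is inflexible and not recommended in production.

Ingress is an API resource object in a Kubernetes cluster that acts as a cluster gateway: we can define custom routing rules to forward, manage, and expose services (groups of Pods). It is flexible and is the recommended approach for production.

The Ingress API is part of Kubernetes, but no Ingress controller is installed with it: after installing Kubernetes, you still have to install a controller separately. There are several kinds, such as Google Cloud Load Balancer, Nginx, Contour, and Istio. Here we use Ingress Nginx, which is maintained by the Kubernetes project.

Steps for using Ingress Nginx:

1. Deploy Ingress Nginx.

2. Configure Ingress Nginx rules.

Exposing a containerized application (Nginx) with Ingress

1. Deploy a containerized application (Pod), such as Nginx or a Spring Boot program:

kubectl create deployment nginx --image=nginx

2. Expose the service:

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

3. Deploy Ingress Nginx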

https://github.com/kubernetes/ingress-nginx

ingress-nginx is an Ingress controller for Kubernetes that uses NGINX as a reverse proxy and load balancer.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.41.2/deploy/static/provider/baremetal/deploy.yaml

Change the image on line 332 of deploy.yaml to the Aliyun mirror:

Aliyun container registry home page: http://dev.aliyun.com/

Change the image address to:

registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:0.33.0

Add a configuration item:

Apply:

kubectl apply -f deploy.yaml

4. Check the status of Ingress Nginx

kubectl get service -n ingress-nginx

kubectl get deploy -n ingress-nginx

kubectl get pods -n ingress-nginx

5. Create the Ingress rule

kubectl apply -f ingress-nginx-rule.yaml

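This fails with the following error:

Fix: kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission (this removes the controller's admission webhook, so Ingress objects are no longer validated before they are accepted; treat it as a workaround)

Then run it again: kubectl apply -f ingress-nginx-rule.yaml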
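The source does not show the contents of ingress-nginx-rule.yaml. Below is a minimal hypothetical version that routes a placeholder host to the `nginx` Service created in step 2. The Ingress name, the host `nginx.example.com`, and the IngressClass `nginx` are assumptions; older controllers may need the `kubernetes.io/ingress.class` annotation instead of `ingressClassName`:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress-rule         # hypothetical name
spec:
  ingressClassName: nginx          # assumption: IngressClass "nginx" exists
  rules:
    - host: nginx.example.com      # hypothetical host; point DNS or /etc/hosts at the controller node
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx        # Service created by "kubectl expose deployment nginx"
                port:
                  number: 80
```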
