(一)构建自己的图像分类数据集

一、图像采集

1.1、导包

import os import time import requests import urllib3 urllib3.disable_warnings() from tqdm import tqdm

1.2、参数请求

cookies = { 'BDqhfp': '%E7%8B%97%E7%8B%97%26%26NaN-1undefined%26%2618880%26%2621', 'BIDUPSID': '06338E0BE23C6ADB52165ACEB972355B', 'PSTM': '1646905430', 'BAIDUID': '104BD58A7C408DABABCAC9E0A1B184B4:FG=1', 'BDORZ': 'B490B5EBF6F3CD402E515D22BCDA1598', 'H_PS_PSSID': '35836_35105_31254_36024_36005_34584_36142_36120_36032_35993_35984_35319_26350_35723_22160_36061', 'BDSFRCVID': '8--OJexroG0xMovDbuOS5T78igKKHJQTDYLtOwXPsp3LGJLVgaSTEG0PtjcEHMA-2ZlgogKK02OTH6KF_2uxOjjg8UtVJeC6EG0Ptf8g0M5', 'H_BDCLCKID_SF': 'tJPqoKtbtDI3fP36qR3KhPt8Kpby2D62aKDs2nopBhcqEIL4QTQM5p5yQ2c7LUvtynT2KJnz3Po8MUbSj4QoDjFjXJ7RJRJbK6vwKJ5s5h5nhMJSb67JDMP0-4F8exry523ioIovQpn0MhQ3DRoWXPIqbN7P-p5Z5mAqKl0MLPbtbb0xXj_0D6bBjHujtT_s2TTKLPK8fCnBDP59MDTjhPrMypomWMT-0bFH_-5L-l5js56SbU5hW5LSQxQ3QhLDQNn7_JjOX-0bVIj6Wl_-etP3yarQhxQxtNRdXInjtpvhHR38MpbobUPUDa59LUvEJgcdot5yBbc8eIna5hjkbfJBQttjQn3hfIkj0DKLtD8bMC-RDjt35n-Wqxobbtof-KOhLTrJaDkWsx7Oy4oTj6DD5lrG0P6RHmb8ht59JROPSU7mhqb_3MvB-fnEbf7r-2TP_R6GBPQtqMbIQft20-DIeMtjBMJaJRCqWR7jWhk2hl72ybCMQlRX5q79atTMfNTJ-qcH0KQpsIJM5-DWbT8EjHCet5DJJn4j_Dv5b-0aKRcY-tT5M-Lf5eT22-usy6Qd2hcH0KLKDh6gb4PhQKuZ5qutLTb4QTbqWKJcKfb1MRjvMPnF-tKZDb-JXtr92nuDal5TtUthSDnTDMRhXfIL04nyKMnitnr9-pnLJpQrh459XP68bTkA5bjZKxtq3mkjbPbDfn02eCKuj6tWj6j0DNRabK6aKC5bL6rJabC3b5CzXU6q2bDeQN3OW4Rq3Irt2M8aQI0WjJ3oyU7k0q0vWtvJWbbvLT7johRTWqR4enjb3MonDh83Mxb4BUrCHRrzWn3O5hvvhKoO3MA-yUKmDloOW-TB5bbPLUQF5l8-sq0x0bOte-bQXH_E5bj2qRCqVIKa3f', 'BDSFRCVID_BFESS': '8--OJexroG0xMovDbuOS5T78igKKHJQTDYLtOwXPsp3LGJLVgaSTEG0PtjcEHMA-2ZlgogKK02OTH6KF_2uxOjjg8UtVJeC6EG0Ptf8g0M5', 'H_BDCLCKID_SF_BFESS': 'tJPqoKtbtDI3fP36qR3KhPt8Kpby2D62aKDs2nopBhcqEIL4QTQM5p5yQ2c7LUvtynT2KJnz3Po8MUbSj4QoDjFjXJ7RJRJbK6vwKJ5s5h5nhMJSb67JDMP0-4F8exry523ioIovQpn0MhQ3DRoWXPIqbN7P-p5Z5mAqKl0MLPbtbb0xXj_0D6bBjHujtT_s2TTKLPK8fCnBDP59MDTjhPrMypomWMT-0bFH_-5L-l5js56SbU5hW5LSQxQ3QhLDQNn7_JjOX-0bVIj6Wl_-etP3yarQhxQxtNRdXInjtpvhHR38MpbobUPUDa59LUvEJgcdot5yBbc8eIna5hjkbfJBQttjQn3hfIkj0DKLtD8bMC-RDjt35n-Wqxobbtof-KOhLTrJaDkWsx7Oy4oTj6DD5lrG0P6RHmb8ht59JROPSU7mhqb_3MvB-fnEbf7r-2TP_R6GBPQtqMbIQft20-DIeMtjBMJaJRCqWR7jWhk2hl72ybCMQlRX5q79atTMfNTJ-qcH0KQpsIJM5-DWbT8EjHCet5DJJn4j_Dv5b-0aKRcY-tT5M-Lf5eT22-usy6Qd2hcH0KLKDh6gb4PhQKuZ5qutLTb4QTbqWKJcKfb1MRjvMPnF-tKZDb-JXtr92nuDal5TtUthSDnTDMRhXfIL04nyKMnitnr9-pnLJpQrh459XP68bTkA5bjZKxtq3mkjbPbDfn02eCKuj6tWj6j0DNRabK6aKC5bL6rJabC3b5CzXU6q2bDeQN3OW4Rq3Irt2M8aQI0WjJ3oyU7k0q0vWtvJWbbvLT7johRTWqR4enjb3MonDh83Mxb4BUrCHRrzWn3O5hvvhKoO3MA-yUKmDloOW-TB5bbPLUQF5l8-sq0x0bOte-bQXH_E5bj2qRCqVIKa3f', 'indexPageSugList': '%5B%22%E7%8B%97%E7%8B%97%22%5D', 'cleanHistoryStatus': '0', 'BAIDUID_BFESS': '104BD58A7C408DABABCAC9E0A1B184B4:FG=1', 'BDRCVFR[dG2JNJb_ajR]': 'mk3SLVN4HKm', 'BDRCVFR[-pGxjrCMryR]': 'mk3SLVN4HKm', 'ab_sr': '1.0.1_Y2YxZDkwMWZkMmY2MzA4MGU0OTNhMzVlNTcwMmM2MWE4YWU4OTc1ZjZmZDM2N2RjYmVkMzFiY2NjNWM4Nzk4NzBlZTliYWU0ZTAyODkzNDA3YzNiMTVjMTllMzQ0MGJlZjAwYzk5MDdjNWM0MzJmMDdhOWNhYTZhMjIwODc5MDMxN2QyMmE1YTFmN2QyY2M1M2VmZDkzMjMyOThiYmNhZA==', 'delPer': '0', 'PSINO': '2', 'BA_HECTOR': '8h24a024042g05alup1h3g0aq0q', } headers = { 'Connection': 'keep-alive', 'sec-ch-ua': '" Not;A Brand";v="99", "Google Chrome";v="97", "Chromium";v="97"', 'Accept': 'text/plain, */*; q=0.01', 'X-Requested-With': 'XMLHttpRequest', 'sec-ch-ua-mobile': '?0', 'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/97.0.4692.99 Safari/537.36', 'sec-ch-ua-platform': '"macOS"', 'Sec-Fetch-Site': 'same-origin', 'Sec-Fetch-Mode': 'cors', 'Sec-Fetch-Dest': 'empty', 'Referer': 'https://image.baidu.com/search/index?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fm=result&fr=&sf=1&fmq=1647837998851_R&pv=&ic=&nc=1&z=&hd=&latest=©right=&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&dyTabStr=MCwzLDIsNiwxLDUsNCw4LDcsOQ%3D%3D&ie=utf-8&sid=&word=%E7%8B%97%E7%8B%97', 'Accept-Language': 'zh-CN,zh;q=0.9', }

1.3、爬取函数封装

def craw_single_class(keyword, DOWNLOAD_NUM=200): if os.path.exists('dataset/' + keyword): print('文件夹 dataset/{} 已存在,之后直接将爬取到的图片保存至该文件夹中'.format(keyword)) else: os.makedirs('dataset/{}'.format(keyword)) print('新建文件夹:dataset/{}'.format(keyword)) count = 1 with tqdm(total=DOWNLOAD_NUM, position=0, leave=True) as pbar: # 爬取第几张 num = 0 # 是否继续爬取 FLAG = True while FLAG: page = 30 * count params = ( ('tn', 'resultjson_com'), ('logid', '12508239107856075440'), ('ipn', 'rj'), ('ct', '201326592'), ('is', ''), ('fp', 'result'), ('fr', ''), ('word', f'{keyword}'), ('queryWord', f'{keyword}'), ('cl', '2'), ('lm', '-1'), ('ie', 'utf-8'), ('oe', 'utf-8'), ('adpicid', ''), ('st', '-1'), ('z', ''), ('ic', ''), ('hd', ''), ('latest', ''), ('copyright', ''), ('s', ''), ('se', ''), ('tab', ''), ('width', ''), ('height', ''), ('face', '0'), ('istype', '2'), ('qc', ''), ('nc', '1'), ('expermode', ''), ('nojc', ''), ('isAsync', ''), ('pn', f'{page}'), ('rn', '30'), ('gsm', '1e'), ('1647838001666', ''), ) response = requests.get('https://image.baidu.com/search/acjson', headers=headers, params=params, cookies=cookies) if response.status_code == 200: try: json_data = response.json().get("data") if json_data: for x in json_data: type = x.get("type") if type not in ["gif"]: img = x.get("thumbURL") fromPageTitleEnc = x.get("fromPageTitleEnc") try: resp = requests.get(url=img, verify=False) time.sleep(1) # print(f"链接 {img}") # 保存文件名 # file_save_path = f'dataset/{keyword}/{num}-{fromPageTitleEnc}.{type}' file_save_path = f'dataset/{keyword}/{num}.{type}' with open(file_save_path, 'wb') as f: f.write(resp.content) f.flush() # print('第 {} 张图像 {} 爬取完成'.format(num, fromPageTitleEnc)) num += 1 pbar.update(1) # 进度条更新 # 爬取数量达到要求 if num > DOWNLOAD_NUM: FLAG = False print('{} 张图像爬取完毕'.format(num)) break except Exception: pass except: pass else: break count += 1

1.4、调用爬取函数

# 爬取单类 craw_single_class('柚子', DOWNLOAD_NUM = 200) # 爬取多类 class_list = ['黄瓜','南瓜','冬瓜','木瓜','苦瓜','丝瓜','窝瓜','甜瓜','香瓜','白兰瓜','黄金瓜','西葫芦','人参果','羊角蜜','佛手瓜','伊丽莎白瓜'] for each in class_list: craw_single_class(each, DOWNLOAD_NUM = 200)

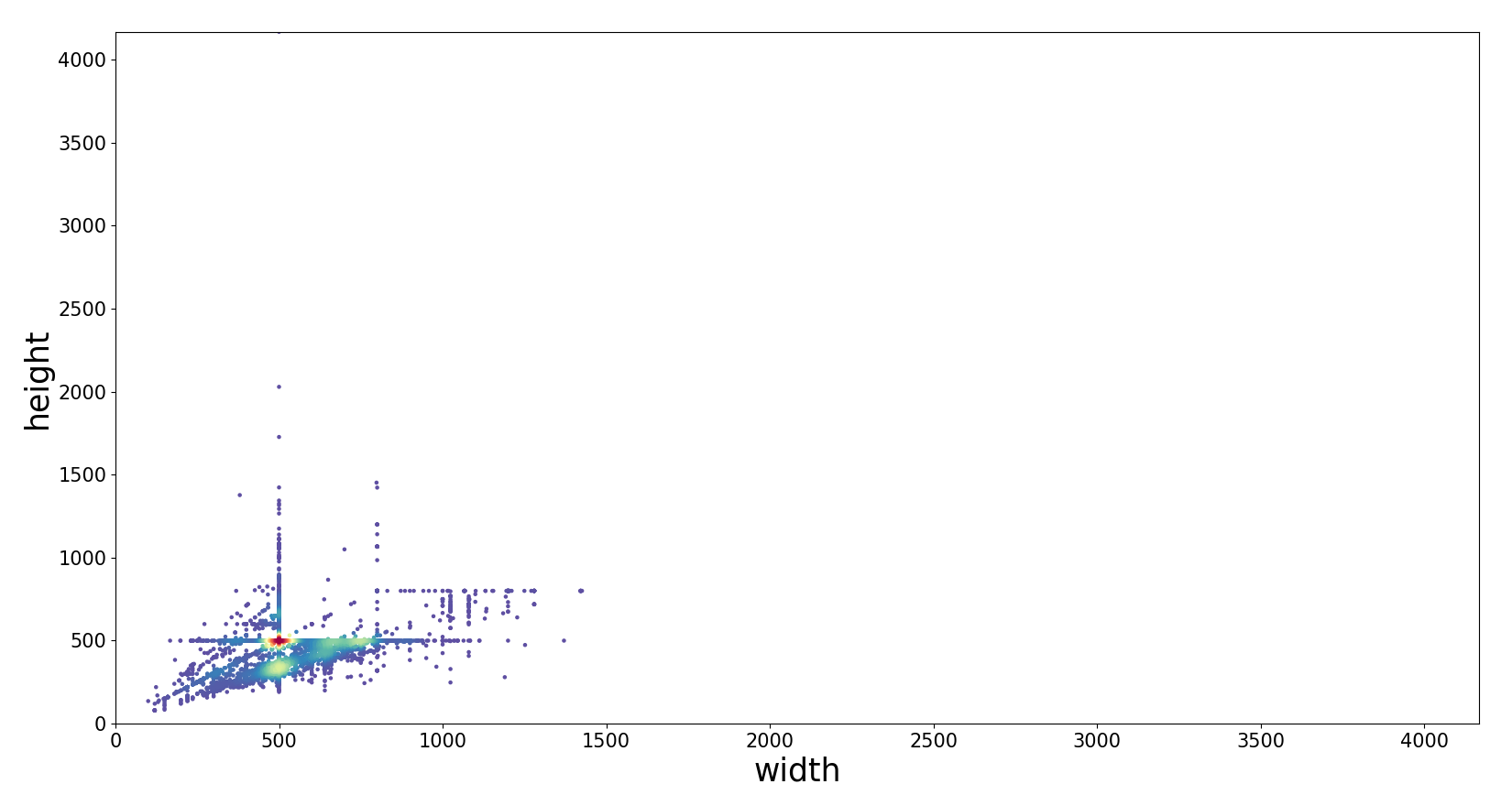

二、统计图像尺寸,比例分布

2.1、导包

import os import numpy as np import pandas as pd import cv2 from tqdm import tqdm from scipy.stats import gaussian_kde import matplotlib.pyplot as plt

2.2、指定根目录路径,读取路径下的所有文件至DataFrame中

dataset_path = 'fruit81_full' os.chdir(dataset_path) df = pd.DataFrame() for fruit in tqdm(os.listdir()): # 遍历每个类别 os.chdir(fruit) for file in os.listdir(): # 遍历每张图像 try: img = cv2.imread(file) df = df.append({'类别':fruit, '文件名':file, '图像宽':img.shape[1], '图像高':img.shape[0]}, ignore_index=True) except: print(os.path.join(fruit, file), '读取错误') os.chdir('../') os.chdir('../') print(df) df.to_csv('image.csv')

os.chdir(path) 表示切换到指定的path路径下dataset_path = 'fruit81_full'os.chdir(dataset_path) # 切换到当前path的路径下

2.3、可视化图像尺寸分布

x = df['图像宽'] # x:(0, 500.0) (1, 500.0) (2, 749.0) (3, 500.0) ... y = df['图像高'] # y:(0, 500.0) (1, 329.0) (2, 500.0) (3, 500.0) ... xy = np.vstack([x,y]) # xy: ndarray: (2, 14433), [[500. 500. 749. ... 500. 667. 600. ], [500. 329. 500. ... 482. 500. 476.]] z = gaussian_kde(xy)(xy) # z: ndarray: (14433,) [5.67285883e-05 2.10230972e-05 1.86175456e-05 ... ] # Sort the points by density, so that the densest points are plotted last idx = z.argsort() # idx: ndarray: (14433,) (5167, 5617, 6406, 2279, 10228...) x, y, z = x[idx], y[idx], z[idx] # x:Series, (14433,); y:Series, (14433,); z:ndarray, (14433,) plt.figure(figsize=(10,10)) plt.scatter(x, y, c=z, s=5, cmap='Spectral_r') plt.tick_params(labelsize=15) xy_max = max(max(df['图像宽']), max(df['图像高'])) plt.xlim(xmin=0, xmax=xy_max) plt.ylim(ymin=0, ymax=xy_max) plt.ylabel('height', fontsize=25) plt.xlabel('width', fontsize=25) plt.savefig('图像尺寸分布.pdf', dpi=120, bbox_inches='tight') plt.show()

三、划分训练集、测试集

3.1、导包:

import os import shutil import random import pandas as pd

3.2、获取所有类别名称:

# 指定数据集路径 dataset_path = 'fruit81_full' dataset_name = dataset_path.split('_')[0] print('数据集', dataset_name) classes = os.listdir(dataset_path) print(len(classes)) print(classes)

3.3、创建训练集文件夹和测试集文件夹

# 创建 train 文件夹 os.mkdir(os.path.join(dataset_path, 'train')) # 创建 test 文件夹 os.mkdir(os.path.join(dataset_path, 'val')) # 在 train 和 test 文件夹中创建各类别子文件夹 for fruit in classes: os.mkdir(os.path.join(dataset_path, 'train', fruit)) os.mkdir(os.path.join(dataset_path, 'val', fruit))

3.4、划分训练集、测试集,移动文件

# 划分训练集、测试集,移动文件 test_frac = 0.2 # 测试集比例 random.seed(123) # 随机数种子,便于复现 df = pd.DataFrame() print('{:^18} {:^18} {:^18}'.format('类别', '训练集数据个数', '测试集数据个数')) for fruit in classes: # 遍历每个类别 # 读取该类别的所有图像文件名 old_dir = os.path.join(dataset_path, fruit) images_filename = os.listdir(old_dir) random.shuffle(images_filename) # 随机打乱 # 划分训练集和测试集 testset_numer = int(len(images_filename) * test_frac) # 测试集图像个数 testset_images = images_filename[:testset_numer] # 获取拟移动至 test 目录的测试集图像文件名 trainset_images = images_filename[testset_numer:] # 获取拟移动至 train 目录的训练集图像文件名 # 移动图像至 test 目录 for image in testset_images: old_img_path = os.path.join(dataset_path, fruit, image) # 获取原始文件路径 new_test_path = os.path.join(dataset_path, 'val', fruit, image) # 获取 test 目录的新文件路径 shutil.move(old_img_path, new_test_path) # 移动文件 # 移动图像至 train 目录 for image in trainset_images: old_img_path = os.path.join(dataset_path, fruit, image) # 获取原始文件路径 new_train_path = os.path.join(dataset_path, 'train', fruit, image) # 获取 train 目录的新文件路径 shutil.move(old_img_path, new_train_path) # 移动文件 # 删除旧文件夹 assert len(os.listdir(old_dir)) == 0 # 确保旧文件夹中的所有图像都被移动走 shutil.rmtree(old_dir) # 删除文件夹 # 工整地输出每一类别的数据个数 print('{:^18} {:^18} {:^18}'.format(fruit, len(trainset_images), len(testset_images))) # 保存到表格中 df = df.append({'class': fruit, 'trainset': len(trainset_images), 'testset': len(testset_images)}, ignore_index=True) # 重命名数据集文件夹 shutil.move(dataset_path, dataset_name + '_split') # 数据集各类别数量统计表格,导出为 csv 文件 df['total'] = df['trainset'] + df['testset'] df.to_csv('数据量统计.csv', index=False)

# 随机数的使用random.seed(123) # 随机数种子,便于复现random.shuffle(images_filename) # 随机打乱

random.seed(int): 设置随机数种子,int值固定,每次执行的时候,结果是一样的,保证相同结果可以重现

import shutilshutil.move(old_img_path, new_test_path)shutil.rmtree(old_dir) # 删除文件夹shutil.move(dataset_path, dataset_name + '_split')

shutil.move(old_path, new_path) : 路径移动

shutil.tmtree(path) :删除文件夹

shutil.move(old_name, new_name) : 重命名

# 断言的使用,如果为False,则程序不继续执行assert len(os.listdir(old_dir)) == 0 # 确保旧文件夹中的所有图像都被移动走

assert的使用,可以保证判别式成立,程序才继续执行。

四、可视化文件夹中的图像

4.1、可视化文件夹的图像

4.1.1、导包:

import matplotlib.pyplot as plt from mpl_toolkits.axes_grid1 import ImageGrid import numpy as np import math import os import cv2

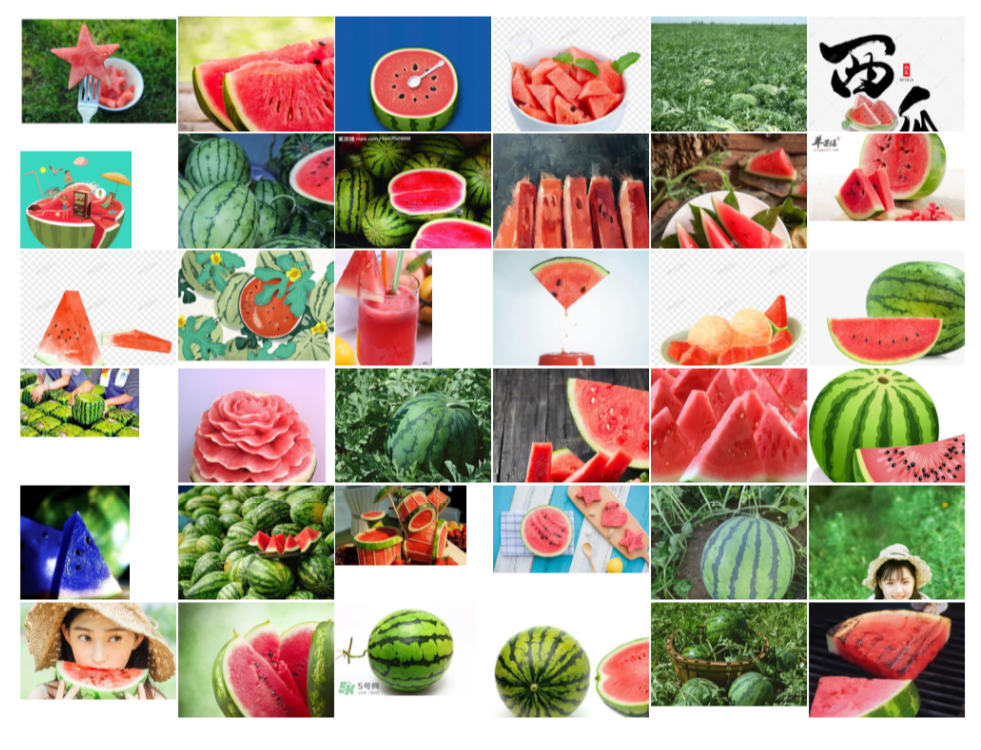

4.1.2、读取文件夹中的所有图像:

folder_path = 'fruit81_split/train/西瓜' # 可视化图像的个数 N = 36 # n 行 n 列 n = math.floor(np.sqrt(N)) print(n) images = [] for each_img in os.listdir(folder_path)[:N]: img_path = os.path.join(folder_path, each_img) img_bgr = cv2.imdecode(np.fromfile(img_path, dtype=np.uint8), -1) # 解决路径中带中文的问题 # img_bgr = cv2.imread(img_path) img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB) images.append(img_rgb) fig = plt.figure(figsize=(50, 50)) grid = ImageGrid(fig, 111, # 类似绘制子图 subplot(111) nrows_ncols=(n, n), # 创建 n 行 m 列的 axes 网格 axes_pad=0.02, # 网格间距 share_all=True )

ImageGrid画了一个空的画布

# 遍历每张图像 for ax, im in zip(grid, images): ax.imshow(im) ax.axis('off') plt.tight_layout() plt.show()

ax.imshow(im) 往每个网格中填充图片

ax.axis('off') 关闭网格线,最终得到图片如下:

images = []for each_img in os.listdir(folder_path)[:N]:img_path = os.path.join(folder_path, each_img)img_bgr = cv2.imdecode(np.fromfile(img_path, dtype=np.uint8), -1) # 解决路径中带中文的问题# img_bgr = cv2.imread(img_path)img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)images.append(img_rgb)

cv2.imdecode: 将图片转化成rgb的格式:

[[[251 255 252], [251 255 252], [250 255 252], ..., [251 255 255], [251 255 255], [251 255 255]],, [[253 255 254], [253 255 254], [251 255 254], ..., [251 255 255], [251 255 255], [251 255 255]],, [[255 254 255], [255 254 255], [255 254 255], ..., [251 255 255], [251 255 255], [251 255 255]],, ...,, [[255 252 255], [250 250 255], [242 254 254], ..., [234 255 240], [242 255 246], [250 255 252]],, [[255 253 255], [251 252 255], [241 254 252], ..., [236 255 241], [242 255 246], [250 255 252]],, [[255 253 255], [251 252 255], [244 255 253], ..., [236 255 241], [242 255 246], [250 255 252]]]

shape[0]: 表示图像高

shape[1]: 表示图像宽

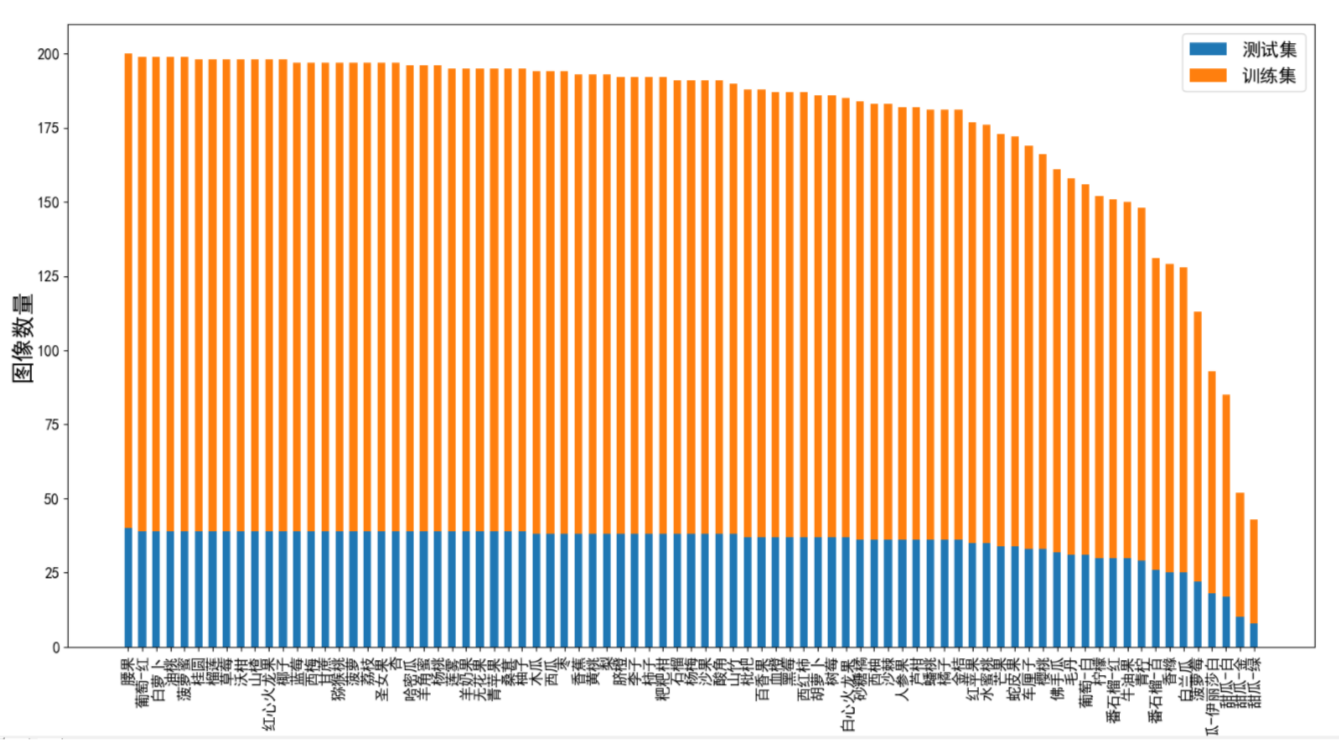

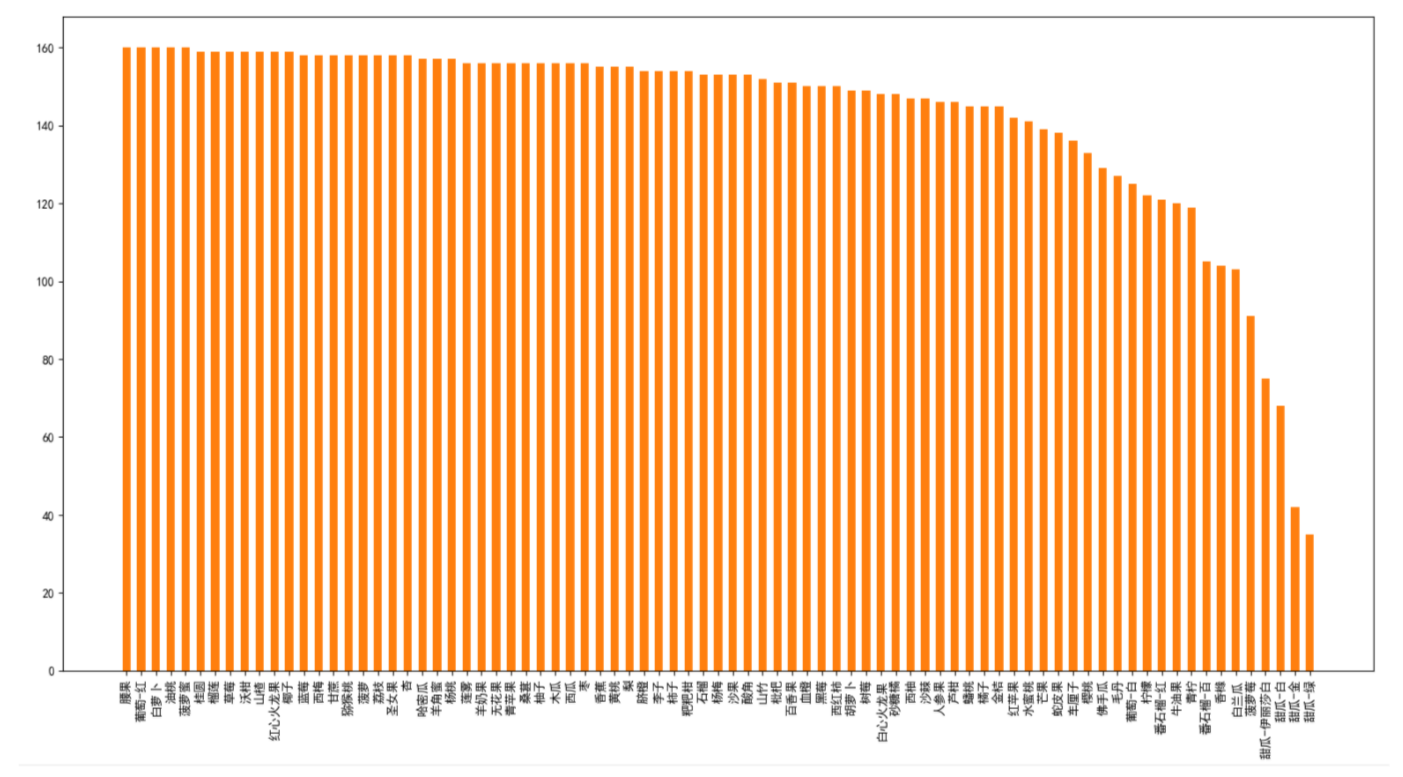

4.2、统计各类别图像数量

4.2.1、导包:

import pandas as pd import matplotlib.pyplot as plt

4.2.2、设置中文字体

# windows plt.rcParams['font.sans-serif']=['SimHei'] # 用来正常显示中文标签 plt.rcParams['axes.unicode_minus']=False # 用来正常显示负号 # linux import matplotlib matplotlib.rc("font",family='SimHei') # 中文字体 # plt.rcParams['font.sans-serif']=['SimHei'] # 用来正常显示中文标签 plt.rcParams['axes.unicode_minus']=False # 用来正常显示负号

4.2.3、图像数量柱状图可视化

df = pd.read_csv('数据量统计.csv') print(df.shape) print(df.head()) # 指定可视化的特征 feature = 'total' df = df.sort_values(by=feature, ascending=False) print(df.head()) plt.figure(figsize=(22, 7)) x = df['class'] y = df[feature] plt.bar(x, y, facecolor='#1f77b4', edgecolor='k') plt.xticks(rotation=90) plt.tick_params(labelsize=15) plt.xlabel('类别', fontsize=20) plt.ylabel('图像数量', fontsize=20) # plt.savefig('各类别图片数量.pdf', dpi=120, bbox_inches='tight') plt.show() plt.figure(figsize=(22, 7)) x = df['class'] y1 = df['testset'] y2 = df['trainset'] width = 0.55 # 柱状图宽度 plt.xticks(rotation=90) # 横轴文字旋转 plt.bar(x, y1, width, label='测试集') plt.bar(x, y2, width, label='训练集', bottom=y1) plt.xlabel('类别', fontsize=20) plt.ylabel('图像数量', fontsize=20) plt.tick_params(labelsize=13) # 设置坐标文字大小 plt.legend(fontsize=16) # 图例 # 保存为高清的 pdf 文件 plt.savefig('各类别图像数量.pdf', dpi=120, bbox_inches='tight') plt.show()

plt.bar(x, y1, width, label='测试集')

plt.bar(x, y2, width, label='训练集', bottom=y1)

bar中bottom的使用: 默认为0,表示距离y轴的距离为0,

bottom=y1表示距离y轴的距离为y1,此时可以画出两个bar柱状图出来

如果不设置 button,则第二个bar会把第一个bar覆盖,变成:

浙公网安备 33010602011771号

浙公网安备 33010602011771号