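Chapter 15: Advanced Kubernetes - Containerized Middleware and Helm

- 1. Containerized middleware overview
- 2. General steps for deploying an application to Kubernetes
- 3. Deploying single-instance middleware to Kubernetes
- 4. Access modes for middleware on Kubernetes
- 5. Managing middleware: Kubernetes versus traditional architecture
- 6. Should middleware be deployed to a Kubernetes cluster at all?
- 7. Deploying a Redis cluster with an Operator
- 8. Using and scaling out the Redis cluster
- 9. Uninstalling the Redis cluster
- 10. Creating Kafka and ZooKeeper clusters with Helm
- 11. Installing Kafka to the Kubernetes cluster
- 12. Testing and verifying the Kafka cluster
- 13. Scaling out and deleting the Kafka cluster
- 14. Basic Helm commands
- 15. Helm v3 chart directory layout
- 16. Using Helm built-in variables
- 17. Using common Helm functions
- 18. Helm flow control
- 19. Warm-up: installing a RabbitMQ cluster with a StatefulSet
- 20. Writing a chart for one-command installation of the RabbitMQ cluster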

1. Containerized middleware overview

Everything can run as a container.

# Common middleware

rabbitmq

redis

mysql

kafka

MongoDB

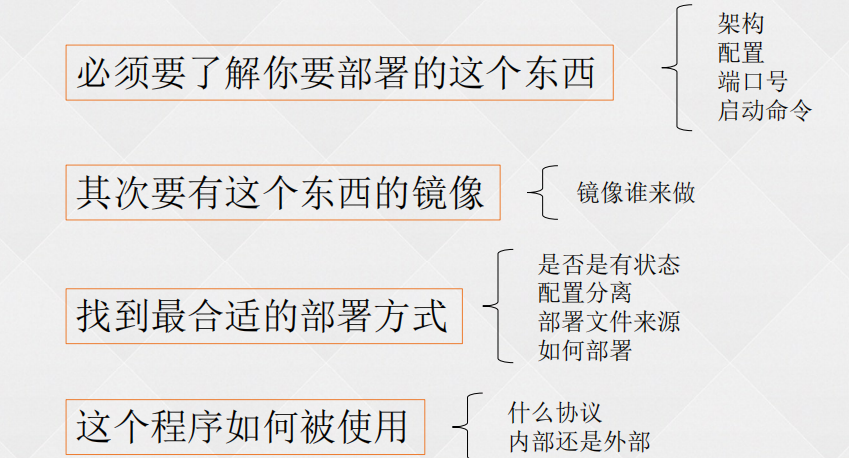

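2. General steps for deploying an application to Kubernetes

Deploying a single middleware instance:

1. Find the official image: https://hub.docker.com/

2. Determine the required configuration: environment variables or configuration files

3. Choose the workload type: Deployment or another controller

4. Configure access: TCP or HTTP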

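3. Deploying single-instance middleware to Kubernetes

Deploying a single-instance RabbitMQ

# Create a namespace
[root@k8s-master01 rabbitmq]# kubectl create ns public-service

# Deployment for RabbitMQ; a Deployment is used because this is a single instance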

[root@k8s-master01 rabbitmq]# cat rabbitmq-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: rabbitmq
  name: rabbitmq
  namespace: public-service
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: rabbitmq
    spec:
      affinity: {}
      containers:
      - env:
        - name: TZ
          value: Asia/Shanghai
        - name: LANG
          value: C.UTF-8
        - name: RABBITMQ_DEFAULT_USER   # RabbitMQ user name
          value: admin
        - name: RABBITMQ_DEFAULT_PASS   # RabbitMQ password
          value: admin
        image: registry.cn-beijing.aliyuncs.com/k8s-study-test/rabbitmq:3.8.17-management
        imagePullPolicy: Always
        lifecycle: {}
        livenessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          tcpSocket:
            port: 5672
          timeoutSeconds: 2
        name: rabbitmq
        ports:
        - containerPort: 5672
          name: web
          protocol: TCP
        readinessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 10
          successThreshold: 1
          tcpSocket:
            port: 5672
          timeoutSeconds: 2
        resources:
          limits:
            cpu: 935m
            memory: 1Gi
          requests:
            cpu: 97m
            memory: 512Mi
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext: {}
status: {}

# Service for RabbitMQ
[root@k8s-master01 rabbitmq]# cat rabbitmq-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq
  name: rabbitmq
  namespace: public-service
spec:
  ports:
  - name: web
    port: 5672
    protocol: TCP
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq
  sessionAffinity: None
  type: NodePort

# Check the result

[root@k8s-master01 rabbitmq]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

rabbitmq-d89c56c8-44nn2 1/1 Running 0 46m

[root@k8s-master01 rabbitmq]# kubectl get svc -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rabbitmq NodePort 10.108.113.69 <none> 5672:32545/TCP,15672:32005/TCP 61m

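Notes:

- RabbitMQ's default user name and password are guest/guest. The env section of the Deployment overrides them:

  - name: RABBITMQ_DEFAULT_USER   # RabbitMQ user name
    value: admin
  - name: RABBITMQ_DEFAULT_PASS   # RabbitMQ password
    value: admin

- This Deployment has no persistent storage, so RabbitMQ loses its data whenever the pod restarts. In both test and production environments, mount a data volume so that the data survives.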
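One way to keep the data is to mount a PersistentVolumeClaim at /var/lib/rabbitmq, the data directory of the official image. The following is a minimal sketch rather than the author's manifest: the claim name rabbitmq-data, the 5Gi size, the fixed hostname and the file names are all assumptions, and it presumes the cluster has a default StorageClass. RabbitMQ names its data directory after the node name, which defaults to the pod hostname, so the sketch also pins the hostname.

```shell
# Write a PVC manifest and a patch for the Deployment shown above.
# Names and sizes are illustrative assumptions, not values from the original setup.
workdir=$(mktemp -d)

cat > "$workdir/rabbitmq-pvc.yaml" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rabbitmq-data
  namespace: public-service
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

cat > "$workdir/rabbitmq-volume-patch.yaml" <<'EOF'
spec:
  template:
    spec:
      hostname: rabbitmq            # keep the RabbitMQ node name stable across restarts
      containers:
      - name: rabbitmq
        volumeMounts:
        - name: data
          mountPath: /var/lib/rabbitmq
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: rabbitmq-data
EOF

echo "manifests written to $workdir"
# Against a real cluster:
#   kubectl apply -f "$workdir/rabbitmq-pvc.yaml"
#   kubectl patch deployment rabbitmq -n public-service \
#     --patch-file "$workdir/rabbitmq-volume-patch.yaml"
```

For anything beyond a single instance, a StatefulSet with volumeClaimTemplates (as used in section 19) is usually the better fit.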

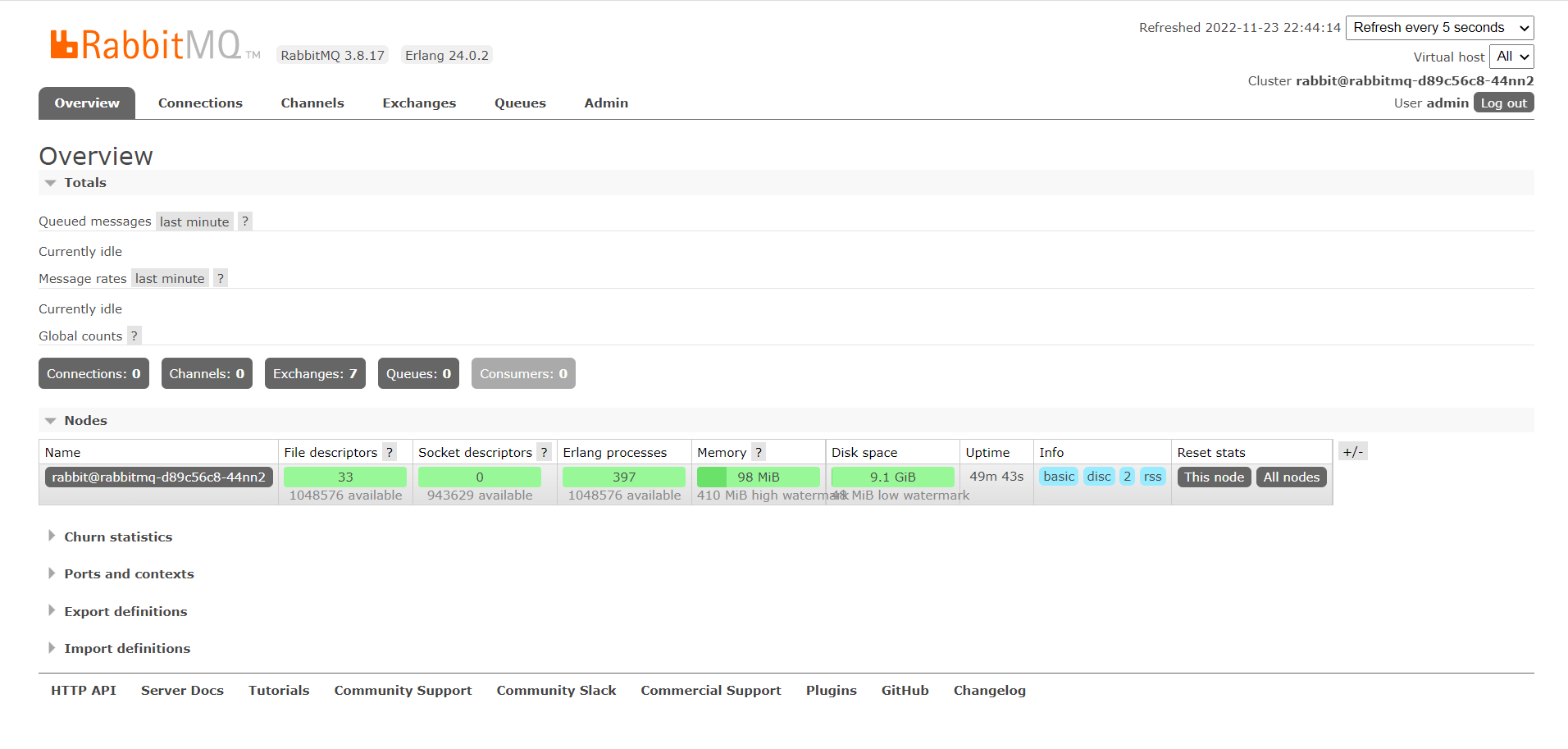

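4. Access modes for middleware on Kubernetes

1. Access the RabbitMQ management UI over HTTP

Here the UI is reached through the Service's NodePort:

http://192.168.0.118:32005

The RabbitMQ login page should appear.

Log in with the account configured above (admin/admin).

2. Access for application developers

Inside the cluster: rabbitmq:5672 # <service_name>:<port>

Outside the cluster: 192.168.0.117:32545 # <node_IP>:<NodePort>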

5. Managing middleware: Kubernetes versus traditional architecture

6. Should middleware be deployed to a Kubernetes cluster at all?

7. Deploying a Redis cluster with an Operator

Operator list: https://github.com/operator-framework/awesome-operators

- Create the Operator

git clone https://github.com/ucloud/redis-cluster-operator.git

kubectl create -f deploy/crds/redis.kun_distributedredisclusters_crd.yaml

kubectl create -f deploy/crds/redis.kun_redisclusterbackups_crd.yaml

kubectl create ns redis-cluster # to deploy another Redis cluster, repeat the steps from here on in a new namespace

kubectl create -f deploy/service_account.yaml -n redis-cluster

kubectl create -f deploy/namespace/role.yaml -n redis-cluster

kubectl create -f deploy/namespace/role_binding.yaml -n redis-cluster

kubectl create -f deploy/namespace/operator.yaml -n redis-cluster

# Check
[root@k8s-master01 redis-cluster-operator]# kubectl api-resources | grep redis # the custom resources

distributedredisclusters drc redis.kun/v1alpha1 true DistributedRedisCluster

redisclusterbackups drcb redis.kun/v1alpha1 true RedisClusterBackup

- Create the Redis cluster

# For a namespace-scoped operator, adjust the example CR as needed:
[root@k8s-master01 redis-cluster-operator]# cat deploy/example/redis.kun_v1alpha1_distributedrediscluster_cr.yaml
apiVersion: redis.kun/v1alpha1
kind: DistributedRedisCluster
metadata:
  annotations:
    # if your operator run as cluster-scoped, add this annotations
    # redis.kun/scope: cluster-scoped
  name: example-distributedrediscluster
spec:
  # Add fields here
  masterSize: 3
  clusterReplicas: 1
  image: redis:6.2.4-alpine3.13   # Redis version; change as needed

# Create it
kubectl create -f deploy/example/redis.kun_v1alpha1_distributedrediscluster_cr.yaml -n redis-cluster

[Optional] Tip: on a small cluster with few resources, the custom-resources example lets you lower the requested resources:

kubectl create -f deploy/example/custom-resources.yaml -n redis-cluster

- Check the cluster status

[root@k8s-master01 ~]# kubectl get distributedrediscluster -n redis-cluster # STATUS starts as Scaling; reaching Healthy can take a long time, possibly up to two hours

NAME MASTERSIZE STATUS AGE

example-distributedrediscluster 3 Healthy 59m

[root@k8s-master01 ~]# kubectl get pod -n redis-cluster

NAME READY STATUS RESTARTS AGE

drc-example-distributedrediscluster-0-0 1/1 Running 0 55m

drc-example-distributedrediscluster-0-1 1/1 Running 0 56m

drc-example-distributedrediscluster-1-0 1/1 Running 0 55m

drc-example-distributedrediscluster-1-1 1/1 Running 0 56m

drc-example-distributedrediscluster-2-0 1/1 Running 0 54m

drc-example-distributedrediscluster-2-1 1/1 Running 0 55m

redis-cluster-operator-675ccbc697-89frq 1/1 Running 1 120m

- Troubleshooting a failed deployment

# Check the operator logs
[root@k8s-master01 ~]# kubectl logs -f redis-cluster-operator-675ccbc697-89frq -n redis-cluster

- Connect to Redis

[root@k8s-master01 ~]# kubectl exec -it drc-example-distributedrediscluster-0-0 -n redis-cluster -- sh

/data # redis-cli -h example-distributedrediscluster.redis-cluster # <svc_name>.<namespace>

example-distributedrediscluster.redis-cluster:6379> exit

/data # redis-cli -h example-distributedrediscluster # within the same namespace, the namespace suffix can be omitted

example-distributedrediscluster:6379>

- Redis sharding

[root@k8s-master01 ~]# kubectl exec -it drc-example-distributedrediscluster-0-0 -n redis-cluster -- sh

/data # ls

dump.rdb nodes.conf redis_password

/data # cat nodes.conf # cluster state and the slot range of each node

a679dbe38849e7fb75868680ce6bdcfc42abd477 172.27.14.220:6379@16379 master - 0 1669279468000 4 connected 10923-16383

002bd8c52b15b291fcd198b7dd4679173778cd88 172.25.92.95:6379@16379 master - 0 1669279467498 2 connected 5461-10922

7235fc894be624ca41d22813f652576d478e1069 172.25.244.221:6379@16379 slave a679dbe38849e7fb75868680ce6bdcfc42abd477 0 1669279465000 4 connected

5ccaea33d550ca1eb1175e3d5e3dc3f48f0b2edf 172.18.195.39:6379@16379 slave 002bd8c52b15b291fcd198b7dd4679173778cd88 0 1669279468503 2 connected

3bd5ec729525b3720bdadf98307714a5bbf7f343 192.168.0.118:6379@16379 master - 0 1669279466491 5 connected 0-5460

b59ad219085b5d326fd6e32ff37fd1559e9b4efd 172.25.92.96:6379@16379 myself,slave 3bd5ec729525b3720bdadf98307714a5bbf7f343 0 1669279467000 5 connected

vars currentEpoch 5 lastVoteEpoch 0

# Write data

/data # redis-cli -h example-distributedrediscluster

example-distributedrediscluster:6379> set x 1

(error) MOVED 16287 172.27.14.220:6379

example-distributedrediscluster:6379> exit

/data # redis-cli -h 172.27.14.220

172.27.14.220:6379> set x 1

OK

172.27.14.220:6379> get x

"1"

172.27.14.220:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384 # hash slots

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:5

cluster_my_epoch:4

cluster_stats_messages_ping_sent:4836

cluster_stats_messages_pong_sent:6060

cluster_stats_messages_meet_sent:327

cluster_stats_messages_sent:11223

cluster_stats_messages_ping_received:5660

cluster_stats_messages_pong_received:5107

cluster_stats_messages_meet_received:400

cluster_stats_messages_received:11167
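The MOVED reply in the session above is how Redis Cluster tells a client which node owns a key's hash slot. The slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16, and `redis-cli -c` follows such redirects automatically. The function below is a small sketch that reproduces the calculation in POSIX shell; `redis_slot` is a name introduced here for illustration, and it assumes ASCII keys without `{...}` hash tags.

```shell
# Compute the Redis Cluster hash slot of a key: CRC16/XMODEM(key) mod 16384.
# Sketch only: ASCII keys, hash tags ({...}) are not handled.
redis_slot() {
  key=$1
  crc=0
  while [ -n "$key" ]; do
    rest=${key#?}                     # everything after the first character
    ch=${key%"$rest"}                 # the first character
    byte=$(printf '%d' "'$ch")        # its character code
    crc=$(( crc ^ (byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      i=$(( i + 1 ))
    done
    key=$rest
  done
  echo $(( crc % 16384 ))
}

redis_slot x    # prints 16287, the slot named in the MOVED reply above
```

Slot 16287 falls in the range 10923-16383, which nodes.conf assigns to 172.27.14.220, so the redirect matches. Connecting with `redis-cli -c -h example-distributedrediscluster` would let the client follow the redirect instead of returning the error.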

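8. Using and scaling out the Redis cluster

The Redis cluster is unavailable while it is being scaled out, so on a production system pick a period of low traffic for the operation. Scaling in is generally not recommended because it can leave the cluster in an error state.

- Scale the Redis cluster out to 4 masters and 4 replicas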

[root@k8s-master01 example]# kubectl get distributedrediscluster -n redis-cluster

NAME MASTERSIZE STATUS AGE

example-distributedrediscluster 3 Healthy 93m

[root@k8s-master01 example]# kubectl edit distributedrediscluster example-distributedrediscluster -n redis-cluster
distributedrediscluster.redis.kun/example-distributedrediscluster edited

spec:
  clusterReplicas: 1
  image: redis:6.2.4-alpine3.13
  masterSize: 4   # changed from 3 to 4 directly in the editor
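The same change can be made without an interactive editor, which is easier to script and review. This is a sketch under the assumption that the resource and namespace names are the ones used above; `master_size_patch` is a helper name introduced here for illustration.

```shell
# Build a JSON merge patch that sets spec.masterSize to the desired value.
master_size_patch() {
  printf '{"spec":{"masterSize":%d}}' "$1"
}

patch=$(master_size_patch 4)
echo "$patch"

# Against a real cluster:
#   kubectl patch distributedrediscluster example-distributedrediscluster \
#     -n redis-cluster --type merge -p "$patch"
```

A merge patch is used because custom resources do not support the strategic merge patch type that kubectl applies to built-in kinds by default.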

- Check the scaling status and verify that the cluster is usable

# Check
[root@k8s-master01 example]# kubectl get distributedrediscluster -n redis-cluster # STATUS goes back to Scaling

NAME MASTERSIZE STATUS AGE

example-distributedrediscluster 4 Scaling 95m

[root@k8s-master01 example]# kubectl get distributedrediscluster -n redis-cluster

NAME MASTERSIZE STATUS AGE

example-distributedrediscluster 4 Healthy 101m

[root@k8s-master01 example]# kubectl exec -it drc-example-distributedrediscluster-3-1 -n redis-cluster -- sh

/data # cat nodes.conf

9ac2b0c8465842c87f9ba9c6b772514654943a78 172.25.244.222:6379@16379 myself,slave d58248895bc00d283689d3b74766b223506b4303 0 1669280278000 8 connected

a679dbe38849e7fb75868680ce6bdcfc42abd477 172.27.14.220:6379@16379 master - 0 1669280279470 4 connected 12288-16383

b59ad219085b5d326fd6e32ff37fd1559e9b4efd 172.25.92.96:6379@16379 master - 0 1669280279000 7 connected 1365-5460

5ccaea33d550ca1eb1175e3d5e3dc3f48f0b2edf 172.18.195.39:6379@16379 slave 002bd8c52b15b291fcd198b7dd4679173778cd88 0 1669280277000 2 connected

d58248895bc00d283689d3b74766b223506b4303 172.18.195.40:6379@16379 master - 0 1669280277000 8 connected 0-1364 5461-6826 10923-12287

002bd8c52b15b291fcd198b7dd4679173778cd88 172.25.92.95:6379@16379 master - 0 1669280276000 2 connected 6827-10922

7235fc894be624ca41d22813f652576d478e1069 172.25.244.221:6379@16379 slave a679dbe38849e7fb75868680ce6bdcfc42abd477 0 1669280277454 4 connected

3bd5ec729525b3720bdadf98307714a5bbf7f343 192.168.0.117:6379@16379 slave,fail b59ad219085b5d326fd6e32ff37fd1559e9b4efd 1669280062390 1669280054776 7 disconnected

vars currentEpoch 8 lastVoteEpoch 0

/data # redis-cli -h example-distributedrediscluster

example-distributedrediscluster:6379> get x

(error) MOVED 16287 172.27.14.220:6379

example-distributedrediscluster:6379> exit

/data # redis-cli -h 172.27.14.220

172.27.14.220:6379> get x # the data written earlier is still there

"1"

9. Uninstalling the Redis cluster

Uninstall the cluster, then the operator. The operator above was installed from deploy/namespace/, so the same files are deleted here (use the files under deploy/cluster/ if you installed the cluster-scoped variant):

kubectl delete -f deploy/example/redis.kun_v1alpha1_distributedrediscluster_cr.yaml -n redis-cluster
kubectl delete -f deploy/namespace/operator.yaml -n redis-cluster
kubectl delete -f deploy/namespace/role_binding.yaml -n redis-cluster
kubectl delete -f deploy/namespace/role.yaml -n redis-cluster
kubectl delete -f deploy/service_account.yaml -n redis-cluster
kubectl delete -f deploy/crds/redis.kun_redisclusterbackups_crd.yaml
kubectl delete -f deploy/crds/redis.kun_distributedredisclusters_crd.yaml

10. Creating Kafka and ZooKeeper clusters with Helm

Install the Helm client: https://helm.sh/docs/intro/install/

Download your desired version

Unpack it (tar -zxvf helm-v3.0.0-linux-amd64.tar.gz)

Find the helm binary in the unpacked directory, and move it to its desired destination (mv linux-amd64/helm /usr/local/bin/helm)

Add the bitnami repository and the official Helm stable repository:

helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo add stable https://charts.helm.sh/stable

# List the configured repositories

[root@k8s-master01 ~]# helm repo list

NAME URL

bitnami https://charts.bitnami.com/bitnami

stable https://charts.helm.sh/stable


# Check the Helm version

[root@k8s-master01 ~]# helm version

version.BuildInfo{Version:"v3.10.1", GitCommit:"9f88ccb6aee40b9a0535fcc7efea6055e1ef72c9", GitTreeState:"clean", GoVersion:"go1.18.7"}

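Installation method 1: download the chart first, then install

helm pull bitnami/zookeeper

Unpack the archive and adjust values.yaml: replica count, auth, persistence

helm install -n public-service zookeeper .

# Search for the chart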

[root@k8s-master01 ~]# helm search repo zookeeper

NAME CHART VERSION APP VERSION DESCRIPTION

bitnami/zookeeper 10.2.5 3.8.0 Apache ZooKeeper provides a reliable, centraliz...

bitnami/dataplatform-bp1 12.0.2 1.0.1 DEPRECATED This Helm chart can be used for the ...

bitnami/dataplatform-bp2 12.0.5 1.0.1 DEPRECATED This Helm chart can be used for the ...

bitnami/kafka 19.1.3 3.3.1 Apache Kafka is a distributed streaming platfor...

bitnami/schema-registry 7.0.0 7.3.0 Confluent Schema Registry provides a RESTful in...

bitnami/solr 6.3.2 9.1.0 Apache Solr is an extremely powerful, open sour...

stable/kafka-manager 2.3.5 1.3.3.22 DEPRECATED - A tool for managing Apache Kafka.

# Download the chart
[root@k8s-master01 ~]# helm pull bitnami/zookeeper

# The download is a .tgz archive; unpack it

[root@k8s-master01 ~]# ls -ld zookeeper-10.2.5.tgz

-rw-r--r-- 1 root root 44137 Nov 24 21:54 zookeeper-10.2.5.tgz

[root@k8s-master01 ~]# tar xf zookeeper-10.2.5.tgz

[root@k8s-master01 ~]# cd zookeeper

[root@k8s-master01 zookeeper]# ls

Chart.lock charts Chart.yaml README.md templates values.yaml

Edit values.yaml

------------------------------------------------------------------------
persistence:
  ## @param persistence.enabled Enable ZooKeeper data persistence using PVC. If false, use emptyDir
  ##
  enabled: false   # set to false here because this cluster has no StorageClass (always persist data in production)
  ## @param persistence.existingClaim Name of an existing PVC to use (only when deploying a single replica)
  ##
  existingClaim: ""
  ## @param persistence.storageClass PVC Storage Class for ZooKeeper data volume
  ## If defined, storageClassName: <storageClass>
  ## If set to "-", storageClassName: "", which disables dynamic provisioning
  ## If undefined (the default) or set to null, no storageClassName spec is
  ## set, choosing the default provisioner. (gp2 on AWS, standard on
  ## GKE, AWS & OpenStack)
  ##
  storageClass: ""   # empty for now; if you have a StorageClass, put its name here
------------------------------------------------------------------------
replicaCount: 3   # replica count changed to 3
------------------------------------------------------------------------
image:
  registry: docker.io   # can point at a company registry that mirrors the image
  repository: bitnami/zookeeper
  tag: 3.8.0-debian-11-r56
------------------------------------------------------------------------
auth:
  client:
    ## @param auth.client.enabled Enable ZooKeeper client-server authentication. It uses SASL/Digest-MD5
    ##
    enabled: false   # authentication disabled
------------------------------------------------------------------------

# Install from the unpacked chart directory

[root@k8s-master01 zookeeper]# helm install zookeeper . -n public-service

NAME: zookeeper

LAST DEPLOYED: Thu Nov 24 23:32:04 2022

NAMESPACE: public-service

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: zookeeper

CHART VERSION: 10.2.5

APP VERSION: 3.8.0

** Please be patient while the chart is being deployed **

ZooKeeper can be accessed via port 2181 on the following DNS name from within your cluster:

zookeeper.public-service.svc.cluster.local

To connect to your ZooKeeper server run the following commands:

export POD_NAME=$(kubectl get pods --namespace public-service -l "app.kubernetes.io/name=zookeeper,app.kubernetes.io/instance=zookeeper,app.kubernetes.io/component=zookeeper" -o jsonpath="{.items[0].metadata.name}")

kubectl exec -it $POD_NAME -- zkCli.sh

To connect to your ZooKeeper server from outside the cluster execute the following commands:

kubectl port-forward --namespace public-service svc/zookeeper 2181:2181 &

zkCli.sh 127.0.0.1:2181

# Check

[root@k8s-master01 zookeeper]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

zookeeper-0 1/1 Running 0 6m27s

zookeeper-1 1/1 Running 0 6m27s

zookeeper-2 1/1 Running 0 6m27s

[root@k8s-master01 zookeeper]# kubectl get svc -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

zookeeper ClusterIP 10.106.177.135 <none> 2181/TCP,2888/TCP,3888/TCP 5m29s

zookeeper-headless ClusterIP None <none> 2181/TCP,2888/TCP,3888/TCP 5m29s # used for communication between the ZooKeeper nodes

Installation method 2: install directly from the repository (the Kafka installation itself is covered in section 11)

helm install kafka bitnami/kafka --set zookeeper.enabled=false --set replicaCount=3 --set externalZookeeper.servers=zookeeper --set persistence.enabled=false -n public-service
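Long chains of --set flags are easy to mistype and hard to review, so the same overrides can be kept in a values file and passed with -f. The sketch below uses exactly the keys from the command above; the temporary file path is an arbitrary choice.

```shell
# Collect the overrides from the --set flags in one values file.
values_file=$(mktemp)
cat > "$values_file" <<'EOF'
replicaCount: 3
zookeeper:
  enabled: false          # do not deploy the bundled ZooKeeper
externalZookeeper:
  servers: zookeeper      # Service name of the ZooKeeper release installed earlier
persistence:
  enabled: false          # no StorageClass in this lab cluster
EOF

cat "$values_file"
# Install, or later upgrade, with the same file:
#   helm install kafka bitnami/kafka -n public-service -f "$values_file"
#   helm upgrade kafka bitnami/kafka -n public-service -f "$values_file"
```

Keeping the file in version control also means an upgrade only has to change one line, for example replicaCount, rather than repeat every flag.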

11. Installing Kafka to the Kubernetes cluster

# Set the parameters directly on the command line

[root@k8s-master01 zookeeper]# helm install kafka bitnami/kafka --set zookeeper.enabled=false --set replicaCount=3 --set externalZookeeper.servers=zookeeper --set persistence.enabled=false -n public-service

NAME: kafka

LAST DEPLOYED: Thu Nov 24 23:33:23 2022

NAMESPACE: public-service

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: kafka

CHART VERSION: 19.1.3

APP VERSION: 3.3.1

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

kafka.public-service.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

kafka-0.kafka-headless.public-service.svc.cluster.local:9092

kafka-1.kafka-headless.public-service.svc.cluster.local:9092

kafka-2.kafka-headless.public-service.svc.cluster.local:9092

To create a pod that you can use as a Kafka client run the following commands:

kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.3.1-debian-11-r11 --namespace public-service --command -- sleep infinity

kubectl exec --tty -i kafka-client --namespace public-service -- bash

PRODUCER:

kafka-console-producer.sh \

--broker-list kafka-0.kafka-headless.public-service.svc.cluster.local:9092,kafka-1.kafka-headless.public-service.svc.cluster.local:9092,kafka-2.kafka-headless.public-service.svc.cluster.local:9092 \

--topic test

CONSUMER:

kafka-console-consumer.sh \

--bootstrap-server kafka.public-service.svc.cluster.local:9092 \

--topic test \

--from-beginning

# Check the Kafka status

[root@k8s-master01 zookeeper]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 5m8s

kafka-1 1/1 Running 0 5m8s

kafka-2 1/1 Running 0 5m8s

zookeeper-0 1/1 Running 0 6m27s

zookeeper-1 1/1 Running 0 6m27s

zookeeper-2 1/1 Running 0 6m27s

# List the Helm releases and the values supplied for Kafka

[root@k8s-master01 zookeeper]# helm list -n public-service

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kafka public-service 1 2022-11-24 23:33:23.629071294 +0800 CST deployed kafka-19.1.3 3.3.1

zookeeper public-service 1 2022-11-24 23:32:04.382463623 +0800 CST deployed zookeeper-10.2.5 3.8.0

[root@k8s-master01 zookeeper]# helm get values kafka -n public-service

USER-SUPPLIED VALUES:

externalZookeeper:

servers: zookeeper

persistence:

enabled: false

replicaCount: 3

zookeeper:

enabled: false

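12. Testing and verifying the Kafka cluster

Kafka follows a producer/consumer model: a message sent by a producer can be read by a consumer.

# Wait until the ZooKeeper and Kafka pods are all Running and 1/1 ready before verifying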

[root@k8s-master01 zookeeper]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 10m

kafka-1 1/1 Running 0 10m

kafka-2 1/1 Running 0 10m

zookeeper-0 1/1 Running 0 12m

zookeeper-1 1/1 Running 0 12m

zookeeper-2 1/1 Running 0 12m

# Start a client pod
kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:2.8.0-debian-10-r30 --namespace public-service --command -- sleep infinity

# Open two terminals, one for the producer and one for the consumer
kubectl exec -it kafka-client -n public-service -- bash

# Producer:
kafka-console-producer.sh --broker-list kafka-0.kafka-headless.public-service.svc.cluster.local:9092,kafka-1.kafka-headless.public-service.svc.cluster.local:9092,kafka-2.kafka-headless.public-service.svc.cluster.local:9092 --topic test

# Consumer:
kafka-console-consumer.sh --bootstrap-server kafka.public-service.svc.cluster.local:9092 --topic test --from-beginning

# Confirm that kafka-client is running

[root@k8s-master01 zookeeper]# kubectl get po -n public-service

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 37m

kafka-1 1/1 Running 0 37m

kafka-2 1/1 Running 0 37m

kafka-client 1/1 Running 0 116s

zookeeper-0 1/1 Running 0 39m

zookeeper-1 1/1 Running 0 39m

zookeeper-2 1/1 Running 0 39m

If a message typed into the producer shows up in the consumer, the cluster works and can be handed over to the developers.
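The broker addresses used by the producer follow a fixed pattern, `<release>-<index>.<release>-headless.<namespace>.svc.cluster.local:9092`, so the list can be generated rather than typed by hand. The helper below is a sketch; `kafka_brokers` is a name introduced here, and it assumes the default cluster domain cluster.local and the headless Service naming shown in the chart notes above.

```shell
# Print the comma-separated broker list for a Kafka release.
# Arguments: release name, namespace, number of brokers.
kafka_brokers() {
  release=$1
  namespace=$2
  count=$3
  list=""
  i=0
  while [ "$i" -lt "$count" ]; do
    host="$release-$i.$release-headless.$namespace.svc.cluster.local:9092"
    list="${list:+$list,}$host"      # add a comma only between entries
    i=$(( i + 1 ))
  done
  echo "$list"
}

kafka_brokers kafka public-service 3
```

Inside the client pod this could be used as `kafka-console-producer.sh --broker-list "$(kafka_brokers kafka public-service 3)" --topic test`, provided the function is defined there as well.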

# Services created by the two releases

[root@k8s-master01 ~]# kubectl get svc -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kafka ClusterIP 10.99.2.130 <none> 9092/TCP 10h # used by clients inside the cluster

kafka-headless ClusterIP None <none> 9092/TCP,9093/TCP 10h # used for communication between the Kafka brokers

zookeeper ClusterIP 10.109.129.4 <none> 2181/TCP,2888/TCP,3888/TCP 10h

zookeeper-headless ClusterIP None <none> 2181/TCP,2888/TCP,3888/TCP 10h

13. Scaling out and deleting the Kafka cluster

- Scale out the Kafka cluster

# Scale the Kafka cluster from 3 to 5 replicas

[root@k8s-master01 ~]# helm upgrade kafka bitnami/kafka --set zookeeper.enabled=false --set replicaCount=5 --set externalZookeeper.servers=zookeeper --set persistence.enabled=false -n public-service

Release "kafka" has been upgraded. Happy Helming!

NAME: kafka

LAST DEPLOYED: Fri Nov 25 10:59:21 2022

NAMESPACE: public-service

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

CHART NAME: kafka

CHART VERSION: 19.1.3

APP VERSION: 3.3.1

** Please be patient while the chart is being deployed **

Kafka can be accessed by consumers via port 9092 on the following DNS name from within your cluster:

kafka.public-service.svc.cluster.local

Each Kafka broker can be accessed by producers via port 9092 on the following DNS name(s) from within your cluster:

kafka-0.kafka-headless.public-service.svc.cluster.local:9092

kafka-1.kafka-headless.public-service.svc.cluster.local:9092

kafka-2.kafka-headless.public-service.svc.cluster.local:9092

kafka-3.kafka-headless.public-service.svc.cluster.local:9092

kafka-4.kafka-headless.public-service.svc.cluster.local:9092

To create a pod that you can use as a Kafka client run the following commands:

kubectl run kafka-client --restart='Never' --image docker.io/bitnami/kafka:3.3.1-debian-11-r11 --namespace public-service --command -- sleep infinity

kubectl exec --tty -i kafka-client --namespace public-service -- bash

PRODUCER:

kafka-console-producer.sh \

--broker-list kafka-0.kafka-headless.public-service.svc.cluster.local:9092,kafka-1.kafka-headless.public-service.svc.cluster.local:9092,kafka-2.kafka-headless.public-service.svc.cluster.local:9092,kafka-3.kafka-headless.public-service.svc.cluster.local:9092,kafka-4.kafka-headless.public-service.svc.cluster.local:9092 \

--topic test

CONSUMER:

kafka-console-consumer.sh \

--bootstrap-server kafka.public-service.svc.cluster.local:9092 \

--topic test \

--from-beginning

# Check

[root@k8s-master01 ~]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 5 12h

kafka-1 1/1 Running 4 12h

kafka-2 1/1 Running 7 12h

kafka-3 1/1 Running 13 81m

kafka-4 1/1 Running 18 81m

kafka-client 1/1 Running 0 26s

zookeeper-0 1/1 Running 7 12h

zookeeper-1 1/1 Running 3 12h

zookeeper-2 1/1 Running 3 12h

- Delete the Kafka cluster

[root@k8s-master01 ~]# helm list -n public-service

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kafka public-service 2 2022-11-25 10:59:21.55153204 +0800 CST deployed kafka-19.1.3 3.3.1

zookeeper public-service 1 2022-11-24 23:32:04.382463623 +0800 CST deployed zookeeper-10.2.5 3.8.0

[root@k8s-master01 ~]# helm delete kafka zookeeper -n public-service

release "kafka" uninstalled

release "zookeeper" uninstalled

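14. Basic Helm commands

Download a chart: helm pull

Create a chart: helm create

Install a chart: helm install

List releases: helm list

Show the values supplied at install time: helm get values

Upgrade a release: helm upgrade

Delete a release: helm delete (an alias of helm uninstall in Helm v3)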

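15. Helm v3 chart directory layout

# Directory layout
├── charts                  # chart dependencies
├── Chart.yaml              # basic information about this chart
├── templates               # templates
│   ├── deployment.yaml
│   ├── _helpers.tpl        # custom named templates and helpers
│   ├── ingress.yaml
│   ├── NOTES.txt           # message shown after the chart is installed
│   ├── serviceaccount.yaml
│   ├── service.yaml
│   └── tests               # test files
│       └── test-connection.yaml
└── values.yaml             # default values and parameters used by the templates

Fields in Chart.yaml:

apiVersion: the chart API version; v2 for Helm v3 charts
name: the chart name
type: the chart type, application or library [optional]
version: the version of the chart itself
appVersion: the version of the application packaged in the chart [optional]
description: a description of the chart [optional]

[root@k8s-master01 zookeeper]# helm create helm-test # creates a helm-test directory under the current directory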

Creating helm-test

[root@k8s-master01 helm-test]# ls

charts Chart.yaml templates values.yaml

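16. Using Helm built-in variables

◆ Release.Name: the release name, as given to helm install

◆ Release.Namespace: the namespace the release is installed into

◆ Release.IsUpgrade: true when the current operation on the release is an upgrade or a rollback

◆ Release.IsInstall: true when the current operation on the release is an install

◆ Release.Revision: the revision number of the release; it starts at 1 and increases by 1 with every upgrade or rollback

◆ Chart: the contents of Chart.yaml; for example, Chart.Version is the chart version, Chart.AppVersion is the application version, and Chart.Name is the chart name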
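To see what these objects expand to, it helps to render a tiny chart locally. The sketch below builds a throwaway chart, here called builtin-demo (a made-up name), whose only template is a ConfigMap that prints the built-in values; `helm template` renders it without touching a cluster.

```shell
# Create a throwaway chart with one template that prints the built-in objects.
chart=$(mktemp -d)/builtin-demo
mkdir -p "$chart/templates"

cat > "$chart/Chart.yaml" <<'EOF'
apiVersion: v2
name: builtin-demo
version: 0.1.0
appVersion: "1.0.0"
EOF

cat > "$chart/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-info
  namespace: {{ .Release.Namespace }}
data:
  chart: {{ .Chart.Name }}-{{ .Chart.Version }}
  revision: {{ .Release.Revision | quote }}
  isInstall: {{ .Release.IsInstall | quote }}
  isUpgrade: {{ .Release.IsUpgrade | quote }}
EOF

# Render locally when helm is installed; no cluster is needed for this step.
if command -v helm >/dev/null 2>&1; then
  helm template demo "$chart" -n public-service
fi
```

In templates the objects are written with a leading dot, for example `.Release.Name`, because they are fields of the root context.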

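17. Using common Helm functions

Template functions commonly used in Helm charts (most of them come from the Sprig library rather than from Go's text/template itself):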

default

trim

trimAll

trimSuffix

trimPrefix

upper

lower

title

untitle

repeat

substr

nospace

trunc

contains

hasPrefix

hasSuffix

quote

squote

cat

indent

nindent

replace
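A few of these functions cover most day-to-day needs. The fragment below is a sketch that chains some of them in a pipeline; the value keys nameOverride, env and config are hypothetical and would have to exist in (or be absent from) the chart's values.yaml. The shell wrapper only writes the fragment to a file so that it can be dropped into a chart's templates directory.

```shell
# Write a template fragment that demonstrates default, trunc, trimSuffix,
# lower, upper, quote and nindent. Value keys are illustrative assumptions.
tpl=$(mktemp)
cat > "$tpl" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  # default: fall back when the value is unset
  # trunc / trimSuffix: keep the name within 63 characters and avoid a trailing "-"
  name: {{ .Values.nameOverride | default .Chart.Name | trunc 63 | trimSuffix "-" }}
  labels:
    # lower / upper: change case; quote: always render a YAML string
    env: {{ .Values.env | default "dev" | lower | quote }}
    tier: {{ "middleware" | upper | quote }}
data:
  # nindent: start on a new line and indent every line of the block
  rabbitmq.conf: |
    {{- .Values.config | default "loopback_users.guest = false" | nindent 4 }}
EOF

cat "$tpl"
```

The pipeline form `value | function args` passes the value as the last argument of the function, which is why `default "dev"` reads naturally after the value it protects.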

18. Helm flow control
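Helm templates offer three main control structures: if/else for conditional blocks, with for scoping into a value that may be empty, and range for loops. The following is a minimal sketch, not taken from the original course material: the chart name logic-demo and the value keys nodePort, annotations and ports are assumptions chosen for illustration.

```shell
# Build a throwaway chart whose Service template uses with, if/else and range.
chart=$(mktemp -d)/logic-demo
mkdir -p "$chart/templates"

cat > "$chart/Chart.yaml" <<'EOF'
apiVersion: v2
name: logic-demo
version: 0.1.0
EOF

cat > "$chart/values.yaml" <<'EOF'
nodePort:
  enabled: true
annotations: {}
ports:
- name: amqp
  port: 5672
- name: http
  port: 15672
EOF

cat > "$chart/templates/service.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: {{ .Release.Name }}
  {{- with .Values.annotations }}
  annotations:
    {{- toYaml . | nindent 4 }}
  {{- end }}
spec:
  {{- if .Values.nodePort.enabled }}
  type: NodePort
  {{- else }}
  type: ClusterIP
  {{- end }}
  selector:
    app: {{ .Release.Name }}
  ports:
  {{- range .Values.ports }}
  - name: {{ .name }}
    port: {{ .port }}
  {{- end }}
EOF

# Render locally when helm is installed; no cluster is needed for this step.
if command -v helm >/dev/null 2>&1; then
  helm template demo "$chart"
fi
```

With the values above, the with block is skipped because annotations is an empty map, the if branch selects NodePort, and range emits one port entry per list item. The `{{-` form trims the whitespace before an action so the control lines leave no blank lines in the output.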

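19. Warm-up: installing a RabbitMQ cluster with a StatefulSet

Download the manifests: git clone https://github.com/dotbalo/k8s.git

cd k8s/k8s-rabbitmq-cluster ; kubectl create ns public-service ; kubectl create -f . -n public-service

Create a chart: helm create rabbitmq-cluster

Install a chart: helm install XXXXX .

After testing, delete the release: helm delete XXXXX

# Clone the repository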

[root@k8s-master01 study]# git clone https://github.com/dotbalo/k8s.git

Cloning into 'k8s'...

remote: Enumerating objects: 1118, done.

remote: Counting objects: 100% (73/73), done.

remote: Compressing objects: 100% (25/25), done.

remote: Total 1118 (delta 57), reused 51 (delta 48), pack-reused 1045

Receiving objects: 100% (1118/1118), 5.27 MiB | 1.71 MiB/s, done.

Resolving deltas: 100% (497/497), done.

[root@k8s-master01 study]# ls -ld k8s

drwxr-xr-x 20 root root 4096 Nov 26 13:43 k8s

[root@k8s-master01 study]# cd k8s/k8s-rabbitmq-cluster/

[root@k8s-master01 k8s-rabbitmq-cluster]# ls

rabbitmq-cluster-ss.yaml rabbitmq-configmap.yaml rabbitmq-rbac.yaml rabbitmq-secret.yaml rabbitmq-service-cluster.yaml rabbitmq-service-lb.yaml README.md

# Create the RabbitMQ cluster
[root@k8s-master01 k8s-rabbitmq-cluster]# kubectl create -f . -n public-service
statefulset.apps/rmq-cluster created

# Check

[root@k8s-master01 k8s-rabbitmq-cluster]# kubectl get pod -n public-service

NAME READY STATUS RESTARTS AGE

rmq-cluster-0 1/1 Running 0 7m16s

rmq-cluster-1 1/1 Running 0 6m25s

rmq-cluster-2 1/1 Running 0 5m32s

[root@k8s-master01 k8s-rabbitmq-cluster]# kubectl get svc -n public-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rmq-cluster ClusterIP None <none> 5672/TCP 7m20s # Headless-service

rmq-cluster-balancer NodePort 10.103.12.249 <none> 15672:32394/TCP,5672:31056/TCP 7m20s

20. Writing a chart for one-command installation of the RabbitMQ cluster

# First create a chart with helm; this generates a directory named rabbitmq-cluster

[root@k8s-master01 k8s-rabbitmq-cluster]# helm create rabbitmq-cluster

Creating rabbitmq-cluster

[root@k8s-master01 k8s-rabbitmq-cluster]# ls

rabbitmq-cluster rabbitmq-configmap.yaml rabbitmq-secret.yaml rabbitmq-service-lb.yaml

rabbitmq-cluster-ss.yaml rabbitmq-rbac.yaml rabbitmq-service-cluster.yaml README.md

[root@k8s-master01 k8s-rabbitmq-cluster]# cd rabbitmq-cluster

[root@k8s-master01 rabbitmq-cluster]# ls

charts Chart.yaml templates values.yaml

# Delete the generated YAML files that are not needed

[root@k8s-master01 rabbitmq-cluster]# cd templates/

[root@k8s-master01 templates]# ls

deployment.yaml _helpers.tpl hpa.yaml ingress.yaml NOTES.txt serviceaccount.yaml service.yaml tests

[root@k8s-master01 templates]# rm deployment.yaml hpa.yaml ingress.yaml serviceaccount.yaml service.yaml tests -rf


# Only these two files remain
[root@k8s-master01 templates]# ls
_helpers.tpl NOTES.txt

# Copy over the YAML files used in section 19 to install the RabbitMQ cluster with a StatefulSet

[root@k8s-master01 templates]# cd ../../

[root@k8s-master01 k8s-rabbitmq-cluster]# ls

rabbitmq-cluster rabbitmq-configmap.yaml rabbitmq-secret.yaml rabbitmq-service-lb.yaml

rabbitmq-cluster-ss.yaml rabbitmq-rbac.yaml rabbitmq-service-cluster.yaml README.md

[root@k8s-master01 k8s-rabbitmq-cluster]# cp -rp *.yaml rabbitmq-cluster/templates/

# The files have been copied

[root@k8s-master01 k8s-rabbitmq-cluster]# cd rabbitmq-cluster/templates/

[root@k8s-master01 templates]# ls

_helpers.tpl rabbitmq-cluster-ss.yaml rabbitmq-rbac.yaml rabbitmq-service-cluster.yaml

NOTES.txt rabbitmq-configmap.yaml rabbitmq-secret.yaml rabbitmq-service-lb.yaml
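Copied manifests are static, so a natural next step is to replace hard-coded settings with template expressions. The sketch below shows the idea on a stand-in file; it assumes the copied manifests hard-code `namespace: public-service`, which has not been verified against the repository, and the sample manifest is made up for the demonstration.

```shell
# Illustrative only: a stand-in manifest with a hard-coded namespace.
templates=$(mktemp -d)
cat > "$templates/sample.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: rmq-cluster
  namespace: public-service
EOF

# Replace the hard-coded namespace with the release namespace in every manifest.
for f in "$templates"/*.yaml; do
  sed 's/namespace: public-service/namespace: {{ .Release.Namespace }}/' "$f" > "$f.tmp" \
    && mv "$f.tmp" "$f"
done

cat "$templates/sample.yaml"
# Then, from the chart directory, check and install the chart:
#   helm lint .
#   helm install rabbitmq-cluster . -n public-service
```

After this change the namespace passed with -n decides where every object lands, so the same chart can be installed into more than one namespace.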
