Data Acquisition Practice: Assignment 2

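Task ①

1)

- Requirement: on the China Weather website (

- Output:

| No. | Region | Date | Weather | Temperature |
|---|---|---|---|---|
| 1 | Beijing | 7th (today) | Sunny with some clouds, showers or thunderstorms in the northern mountains, turning sunny then cloudy | 31℃/17℃ |
| 2 | Beijing | 8th (tomorrow) | Cloudy turning sunny, scattered showers or thunderstorms in the north, turning sunny | 34℃/20℃ |
| 3 | Beijing | 9th (day after tomorrow) | Sunny turning cloudy | 36℃/22℃ |
| 4 | Beijing | 10th (Saturday) | Overcast turning to showers | 30℃/19℃ |
| 5 | Beijing | 11th (Sunday) | Showers | 27℃/18℃ |
| 6...... |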

    from bs4 import BeautifulSoup
    from bs4 import UnicodeDammit
    import urllib.request
    import sqlite3


    class WeatherDB:
        def openDB(self):
            self.con = sqlite3.connect("weathers.db")
            self.cursor = self.con.cursor()
            try:
                self.cursor.execute(
                    "create table weathers (wCity varchar(16),wDate varchar(16),"
                    "wWeather varchar(64),wTemp varchar(32),"
                    "constraint pk_weather primary key (wCity,wDate))")
            except sqlite3.OperationalError:
                # The table already exists from an earlier run: clear its rows.
                self.cursor.execute("delete from weathers")

        def closeDB(self):
            self.con.commit()
            self.con.close()

        def insert(self, city, date, weather, temp):
            try:
                self.cursor.execute(
                    "insert into weathers (wCity,wDate,wWeather,wTemp) values (?,?,?,?)",
                    (city, date, weather, temp))
            except Exception as err:
                print(err)

        def show(self):
            self.cursor.execute("select * from weathers")
            rows = self.cursor.fetchall()
            # chr(12288) is the full-width space, used as fill so CJK text lines up.
            # Header columns: city, date, weather, temperature.
            print("{0:^18}{1:{4}^18}{2:{4}^21}{3:{4}^13}".format("城市", "日期", "天气情况", "气温", chr(12288)))
            for row in rows:
                print("{0:^18}{1:{4}^20}{2:{4}^18}{3:{4}^18}".format(row[0], row[1], row[2], row[3], chr(12288)))


    class WeatherForecast:
        def __init__(self):
            self.headers = {
                "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
            # City name -> city code used in the forecast page URL.
            self.cityCode = {"泉州": "101230501", "北京": "101010100", "上海": "101020100", "广州": "101280101", "深圳": "101280601"}

        def forecastCity(self, city):
            if city not in self.cityCode:
                print(city + " code cannot be found")
                return

            url = "http://www.weather.com.cn/weather/" + self.cityCode[city] + ".shtml"
            try:
                req = urllib.request.Request(url, headers=self.headers)
                data = urllib.request.urlopen(req)
                data = data.read()
                # Let UnicodeDammit pick between utf-8 and gbk.
                dammit = UnicodeDammit(data, ["utf-8", "gbk"])
                data = dammit.unicode_markup
                soup = BeautifulSoup(data, "lxml")
                lis = soup.select("ul[class='t clearfix'] li")
                for li in lis:
                    try:
                        date = li.select('h1')[0].text
                        weather = li.select('p[class="wea"]')[0].text
                        temp = li.select('p[class="tem"]')[0].text.strip()
                        self.db.insert(city, date, weather, temp)
                    except Exception as err:
                        print(err)
            except Exception as err:
                print(err)

        def process(self, cities):
            self.db = WeatherDB()
            self.db.openDB()

            for city in cities:
                self.forecastCity(city)

            self.db.show()
            self.db.closeDB()


    ws = WeatherForecast()
    ws.process(["泉州", "北京", "上海", "广州", "深圳"])
    print("completed")
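The `openDB` method above detects an earlier run by letting `create table` fail. As a hedged alternative, the sketch below uses SQLite's `CREATE TABLE IF NOT EXISTS` and `INSERT OR REPLACE`, so repeated runs neither raise nor violate the `(wCity, wDate)` primary key. The `save_forecasts` helper, the in-memory database, and the sample rows are illustrative assumptions, not part of the assignment code.

```python
import sqlite3


def save_forecasts(con, rows):
    """Store (city, date, weather, temp) tuples, replacing rows with the same key."""
    con.execute(
        "CREATE TABLE IF NOT EXISTS weathers ("
        "wCity VARCHAR(16), wDate VARCHAR(16), "
        "wWeather VARCHAR(64), wTemp VARCHAR(32), "
        "CONSTRAINT pk_weather PRIMARY KEY (wCity, wDate))"
    )
    con.executemany(
        "INSERT OR REPLACE INTO weathers (wCity, wDate, wWeather, wTemp) "
        "VALUES (?, ?, ?, ?)",
        rows,
    )
    con.commit()


# Hypothetical sample rows; an in-memory database keeps the sketch self-contained.
con = sqlite3.connect(":memory:")
save_forecasts(con, [("北京", "7日(今天)", "晴", "31℃/17℃")])
# Same (city, date) key: the earlier row is replaced instead of raising an error.
save_forecasts(con, [("北京", "7日(今天)", "多云", "30℃/18℃")])
stored = con.execute("SELECT wWeather, wTemp FROM weathers").fetchall()
print(stored)
```

Unlike the assignment code, this variant keeps rows from earlier runs and only overwrites those whose city and date match, which may or may not be the behavior you want.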

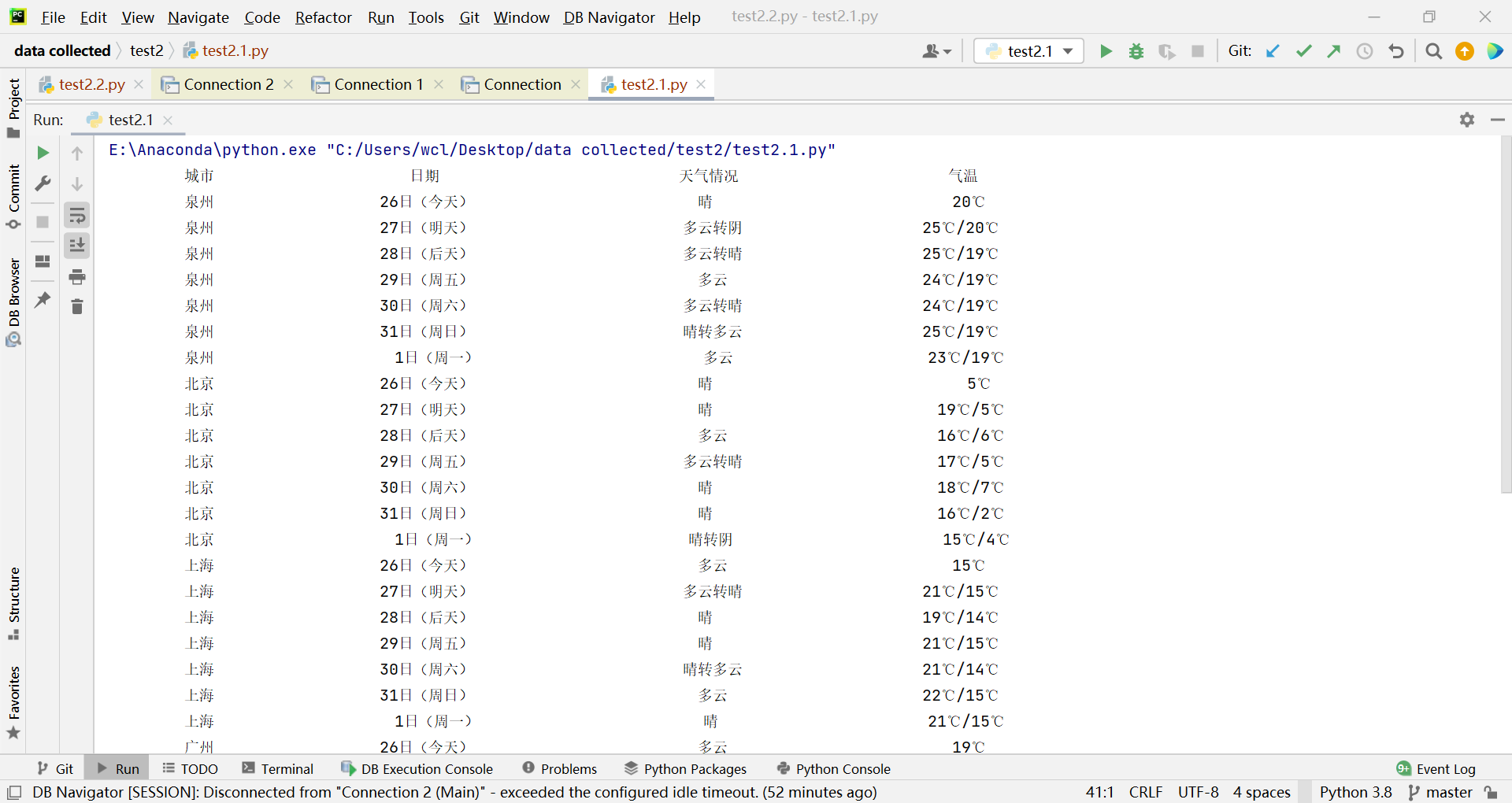

Result screenshots:

Console:

Database:

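2) Reflections

This experiment was a reproduction exercise. I learned how to inspect the contents of a database table in PyCharm and consolidated my use of BeautifulSoup.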

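Task ②

1)

- Requirement: use the requests and BeautifulSoup libraries to crawl stock information from a chosen site.

- Candidate site: Eastmoney: http://quote.eastmoney.com/center/gridlist.html#hs_a_board

- Tip: open the F12 developer tools in Chrome and capture the network traffic to find the URL that loads the stock list, then analyze the values the API returns. The request parameters can be adjusted to match the required output: in the URL, parameters such as f1 and f2 select different values, so parameters that are not needed can be removed.

- Output: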

| No. | Stock code | Stock name | Latest price | Change (%) | Change | Volume | Turnover | Amplitude | High | Low | Open | Previous close |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 688093 | N世华 | 28.47 | 62.22% | 10.92 | 261,300 | 760 million | 22.34 | 32.0 | 28.08 | 30.2 | 17.55 |
| 2...... |
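The parameter-trimming tip above could be sketched as follows: build the query string from a dictionary so that the page number and the `fields` list are easy to vary. The host, path, and parameter names are copied from the request URL used in this task's code. The helper name `build_list_url` and the decision to drop the `cb`, `ut`, and `_` parameters are assumptions, and whether the server still responds without them is not verified here.

```python
from urllib.parse import urlencode

BASE = "http://56.push2.eastmoney.com/api/qt/clist/get"

# Only the field codes the output table needs (codes taken from this task's code).
FIELDS = ["f12", "f14", "f2", "f3", "f4", "f5", "f6", "f7", "f15", "f16", "f17", "f18"]


def build_list_url(page, page_size=20):
    """Return the list URL for one page (hypothetical helper)."""
    params = {
        "pn": page,
        "pz": page_size,
        "po": 1,
        "np": 1,
        "fltt": 2,
        "invt": 2,
        "fid": "f3",
        # Spaces are encoded as "+", which reproduces the "m:0+t:6" form of the captured URL.
        "fs": "m:0 t:6,m:0 t:80,m:1 t:2,m:1 t:23",
        "fields": ",".join(FIELDS),
    }
    # safe=":," leaves colons and commas unescaped so the URL stays readable.
    return BASE + "?" + urlencode(params, safe=":,")


url = build_list_url(2)
print(url)
```

Keeping the parameters in a dictionary makes it straightforward to loop over pages or to shorten `FIELDS` further when fewer columns are needed.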

    import json
    import re
    import sqlite3

    import requests


    class StockDB:
        def openDB(self):
            self.con = sqlite3.connect("stocks.db")
            self.cursor = self.con.cursor()
            try:
                self.cursor.execute(
                    "create table stocks(stockCode varchar(16),stockName varchar(16),"
                    "Newprice varchar(16),RiseFallpercent varchar(16),RiseFall varchar(16),"
                    "Turnover varchar(16),Dealnum varchar(16),Amplitude varchar(16),"
                    "highest varchar(16),lowest varchar(16),today varchar(16),yesterday varchar(16))")
            except sqlite3.OperationalError:
                # The table already exists from an earlier run: clear its rows.
                self.cursor.execute("delete from stocks")

        def closeDB(self):
            self.con.commit()
            self.con.close()

        def insert(self, stockList):
            try:
                self.cursor.executemany(
                    "insert into stocks (stockcode,stockname,newprice,risefallpercent,risefall,"
                    "turnover,dealnum,Amplitude,highest,lowest,today,yesterday) "
                    "values (?,?,?,?,?,?,?,?,?,?,?,?)",
                    stockList)
            except Exception as err:
                print(err)

        def show(self):
            self.cursor.execute("select * from stocks")
            rows = self.cursor.fetchall()
            fmt = "{:8}\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}\t{:16}\t{:8}\t{:8}\t{:8}\t{:8}\t{:8}"
            # Header columns: code, name, latest price, change %, change, volume,
            # turnover, amplitude, high, low, open, previous close.
            print(fmt.format("股票代码", "股票名称", "最新价", "涨跌幅", "涨跌额", "成交量",
                             "成交额", "振幅", "最高", "最低", "今开", "昨收"))
            for row in rows:
                print(fmt.format(*row))


    class stock:

        @staticmethod
        def getHTML(url):
            try:
                headers = {
                    "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
                r = requests.get(url, timeout=30, headers=headers)
                r.raise_for_status()
                r.encoding = r.apparent_encoding
                return r.text
            except Exception as e:
                print(e)

        def getStockData(self, html):
            # The response is JSONP: take the bracketed list, then each {...} record.
            data = re.search(r'\[.*]', html).group()
            records = [json.loads(x) for x in re.findall(r'{.*?}', data)]
            # Output column -> field code in the API response.
            att = {"股票代码": 'f12', "股票名称": 'f14', "最新报价": 'f2', "涨跌幅": 'f3', "涨跌额": 'f4', "成交量": 'f5', "成交额": 'f6',
                   "振幅": 'f7', "最高": 'f15', "最低": 'f16', "今开": 'f17', "昨收": 'f18'}
            stockList = [tuple(record[field] for field in att.values()) for record in records]
            self.db.insert(stockList)

        def process(self):
            self.db = StockDB()
            self.db.openDB()
            for page in range(1, 5):  # pages 1 to 4, 20 rows per page
                url = ("http://56.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124032040654482613706_1635234281838&pn="
                       + str(page)
                       + "&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1635234281839")
                html = stock.getHTML(url)
                if html is None:  # request failed; getHTML already printed the error
                    continue
                self.getStockData(html)

            self.db.show()
            self.db.closeDB()


    if __name__ == "__main__":
        s = stock()
        s.process()
        print("completed")
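The code above pulls each record out with a non-greedy `{.*?}` pattern, which works only while records contain no nested braces. A more defensive sketch strips the JSONP callback wrapper and parses the whole payload with `json.loads`. The sample response is a hypothetical, shortened payload; the `data.diff` layout is an assumption about the API's response shape, and the values come from the example output row in this task.

```python
import json
import re

# Field codes as used in this task's code; the English keys are illustrative.
ATT = {"code": "f12", "name": "f14", "latest": "f2", "change_pct": "f3"}


def parse_jsonp(text):
    """Strip a `callback(...)` wrapper and return the decoded JSON object."""
    match = re.search(r"^[^(]*\((.*)\)\s*;?\s*$", text, re.S)
    if match is None:
        raise ValueError("not a JSONP response")
    return json.loads(match.group(1))


def extract_rows(payload):
    """Return one tuple per stock, in the column order of ATT."""
    return [tuple(item[f] for f in ATT.values()) for item in payload["data"]["diff"]]


# Hypothetical, shortened sample response (not captured from the live API).
sample = ('jQuery112_1635({"data":{"total":1,"diff":'
          '[{"f2":28.47,"f3":62.22,"f12":"688093","f14":"N世华"}]}});')
rows = extract_rows(parse_jsonp(sample))
print(rows)
```

Parsing the full object also gives access to other top-level values in the response, at the cost of depending on the assumed `data.diff` layout.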

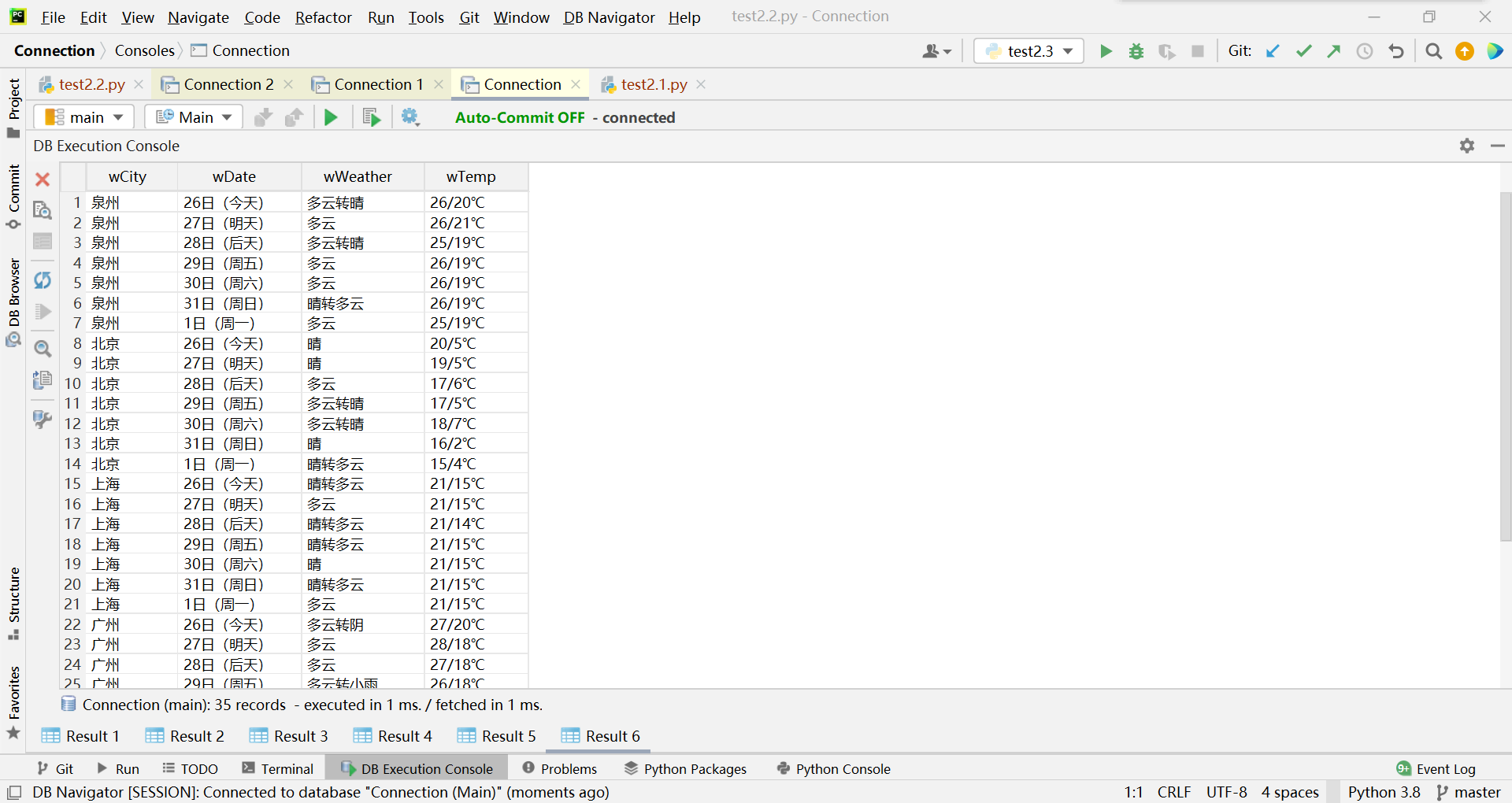

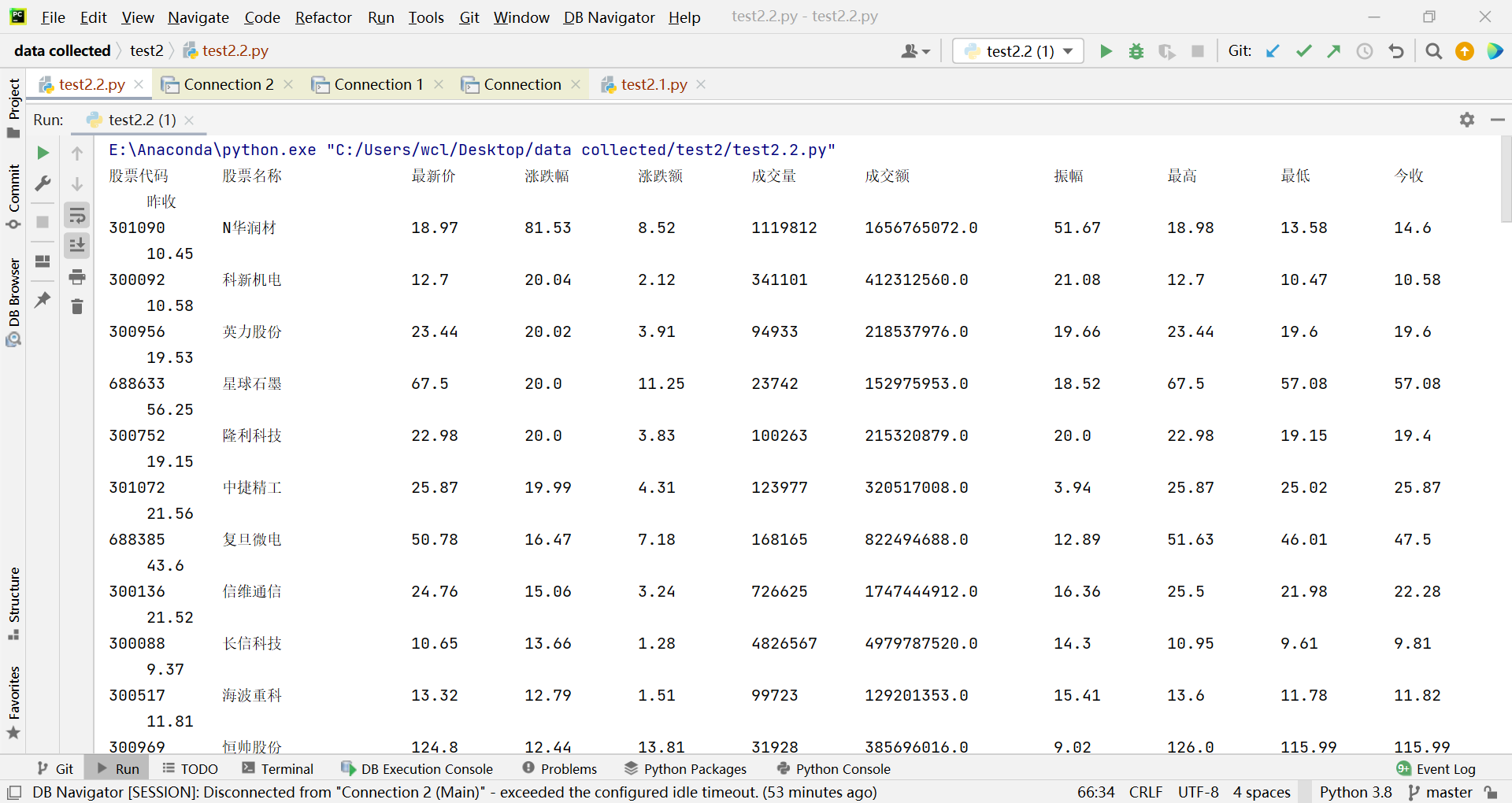

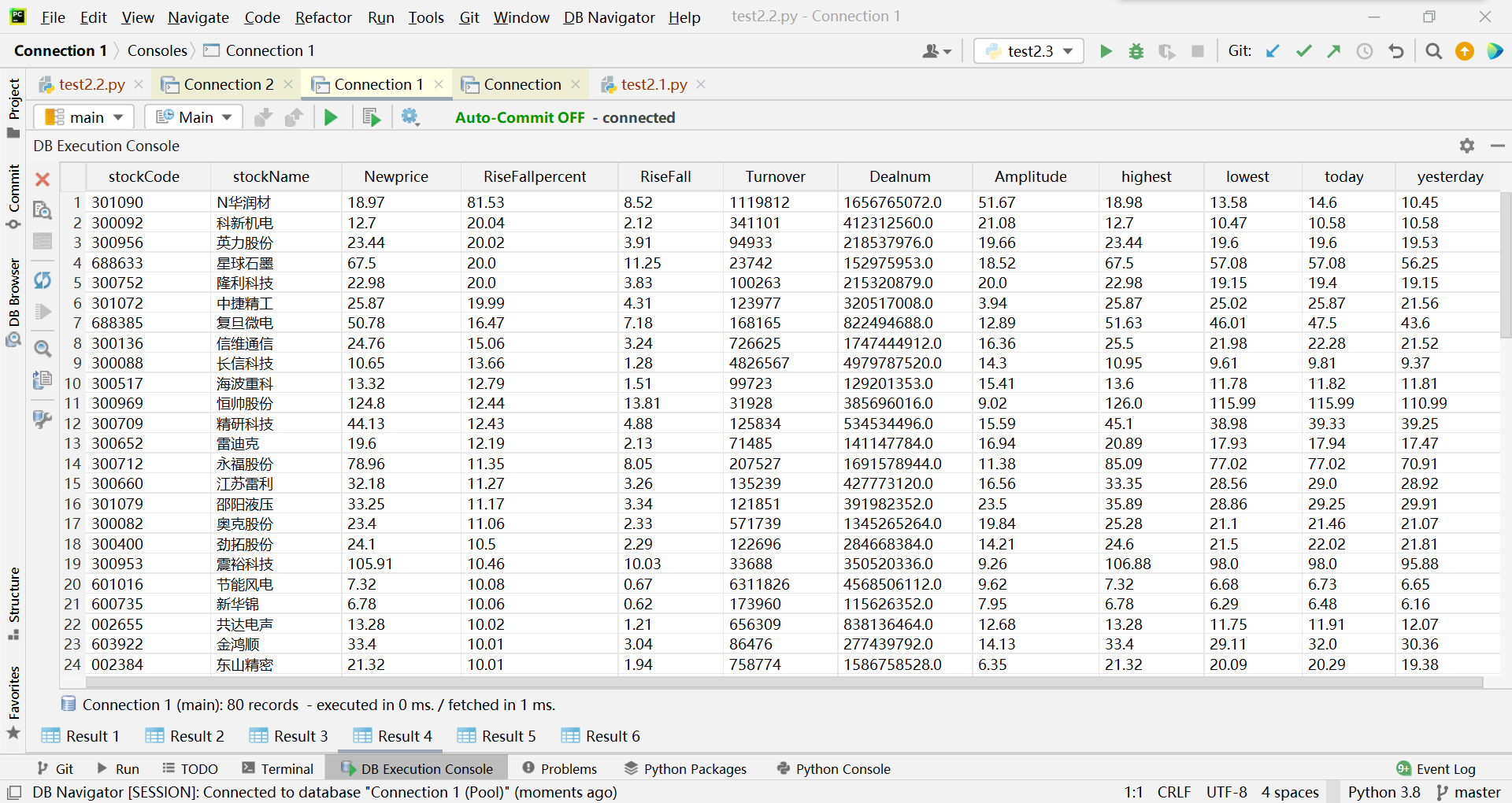

Result screenshots:

Console:

Database:

2) Reflections

In this experiment I learned how to crawl, read, and process JSON-formatted data, and I also consolidated my database skills.

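Task ③

1)

- Requirement: crawl the information for all institutions in the 2021 Best Chinese Universities Ranking (main list) at https://www.shanghairanking.cn/rankings/bcur/2021 and store it in a database. Also record the browser F12 debugging and analysis process as a GIF and add it to the blog post.

- Tip: analyze the site's network requests to find the API that serves the data.

- Output: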

| Rank | University | Total score |
|---|---|---|
| 1 | Tsinghua University | 969.2 |

    import re
    import sqlite3

    import requests


    class UniversityDB:
        def openDB(self):
            self.con = sqlite3.connect("UniversityInfo.db")
            self.cursor = self.con.cursor()
            try:
                self.cursor.execute(
                    "create table UniversityInfo(Rank varchar(16),SchoolName varchar(16),Total_Score varchar(16))")
            except sqlite3.OperationalError:
                # The table already exists from an earlier run: clear its rows.
                self.cursor.execute("delete from UniversityInfo")

        def closeDB(self):
            self.con.commit()
            self.con.close()

        def insert(self, Rank, Schoolname, score):
            try:
                self.cursor.execute(
                    "insert into UniversityInfo(Rank, SchoolName, Total_Score) values (?,?,?)",
                    (Rank, Schoolname, score))
            except Exception as err:
                print(err)

        def show(self):
            self.cursor.execute("select * from UniversityInfo")
            rows = self.cursor.fetchall()
            # Header columns: rank, university, total score.
            print("{:^8}{:^16}{:^8}".format("排名", "学校", "总分"))
            for row in rows:
                if row[2] is None:
                    # Show a dash when no numeric score was found.
                    row = list(row)
                    row[2] = "-"
                print("{:^8}{:^16}{:^8}".format(*row))


    class University:

        @staticmethod
        def getHTML(url):
            try:
                headers = {
                    "User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}
                r = requests.get(url, timeout=30, headers=headers)
                r.raise_for_status()
                r.encoding = r.apparent_encoding
                return r.text
            except Exception as e:
                print(e)

        def getUniversityInfo(self, html):
            schoolnames = re.findall(r'univNameCn:"(.*?)"', html)
            totalscores = re.findall(r'score:(.*?),', html)
            # Rank is the position in the file; names and scores are paired by position.
            for rank, (name, raw) in enumerate(zip(schoolnames, totalscores), start=1):
                try:
                    # float() replaces eval(): scraped text should never be executed.
                    score = float(raw)
                except ValueError:
                    # The capture is not a numeric literal, so store no score.
                    score = None
                self.db.insert(str(rank), name, score)

        def process(self):
            self.db = UniversityDB()
            self.db.openDB()
            url = "https://www.shanghairanking.cn/_nuxt/static/1635233019/rankings/bcur/2021/payload.js"
            html = University.getHTML(url)
            if html is not None:  # getHTML prints the error and returns None on failure
                self.getUniversityInfo(html)

            self.db.show()
            self.db.closeDB()


    if __name__ == "__main__":
        u = University()
        u.process()
        print("completed")
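Score captures from the payload are plain text and are not guaranteed to be numeric literals. The sketch below isolates the conversion in a small helper that uses `float` rather than evaluating scraped text, and runs the same two regexes on a made-up fragment. The fragment and the `to_score` helper are hypothetical; the fragment only imitates the `univNameCn:"..."` and `score:...` patterns, and the real file may differ.

```python
import re


def to_score(text):
    """Return the capture as a float, or None if it is not a numeric literal."""
    try:
        return float(text)
    except ValueError:
        return None


# Hypothetical fragment; the second score is a bare identifier, not a number.
payload = 'univNameCn:"清华大学",score:969.2,univNameCn:"某大学",score:b,'

names = re.findall(r'univNameCn:"(.*?)"', payload)
scores = [to_score(s) for s in re.findall(r"score:(.*?),", payload)]

# Pair by position and number the rows from 1, as the task's code does.
ranked = [(str(i), n, s) for i, (n, s) in enumerate(zip(names, scores), start=1)]
print(ranked)
```

Pairing by position assumes that every record contributes exactly one name and one score; if that ever stops holding, the two lists would drift out of alignment.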

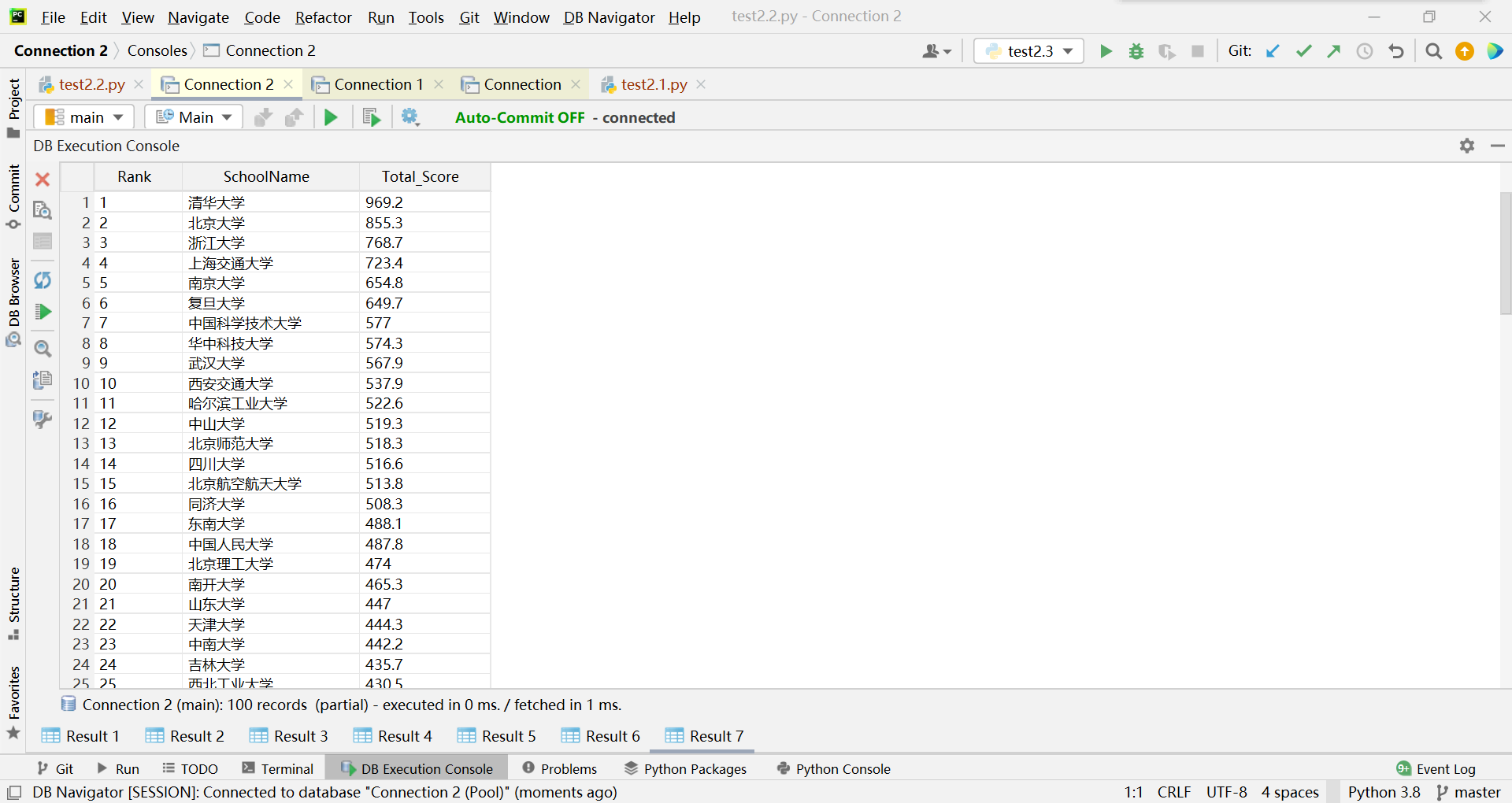

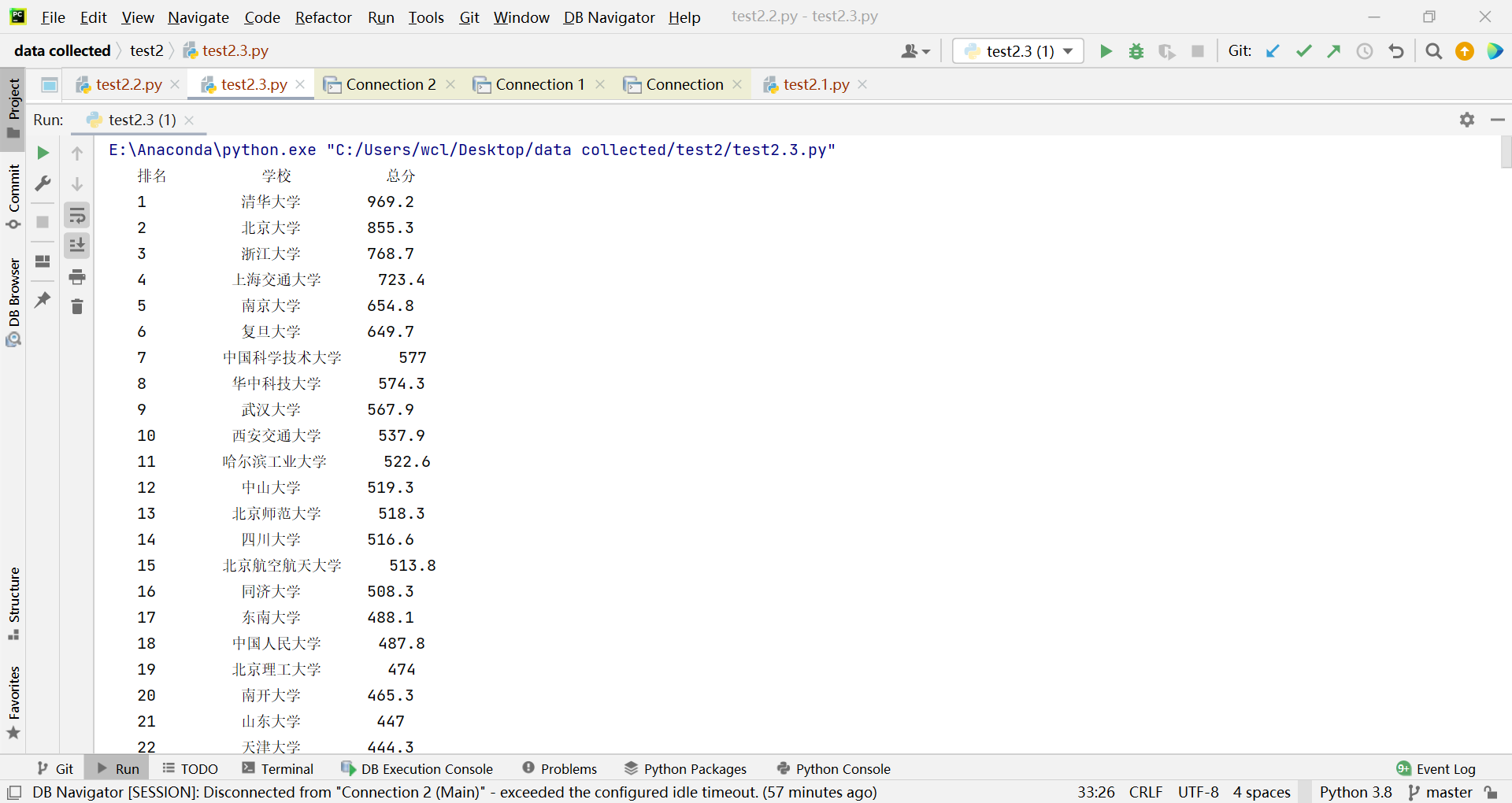

Result screenshots:

Console:

Database: