Airflow 2.1.1 Installation Guide (In Detail)

MySQL

Installation

For installing MySQL, see my earlier blog post: "Installing MySQL 5.7 on Linux and a summary of the problems encountered".

Once MySQL is installed, create the airflow database and user and grant the required privileges:

CREATE DATABASE airflow CHARACTER SET utf8;
CREATE USER 'airflow'@'%' IDENTIFIED BY 'yourpassword';
GRANT ALL PRIVILEGES ON airflow.* TO 'airflow'@'%';
-- Required by Airflow on MySQL. SET GLOBAL is lost when mysqld restarts,
-- so also add explicit_defaults_for_timestamp = 1 under [mysqld] in my.cnf
SET GLOBAL explicit_defaults_for_timestamp = 1;
FLUSH PRIVILEGES;

Installing Python 3.7.5 (important)

This part must be done on every node where Airflow will be installed.

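The official Airflow documentation installs Airflow with Python 3 (Airflow 2.1 requires Python 3.6 or later), but CentOS 7 uses Python 2 by default, and installing Airflow with it leads to all kinds of problems.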

Install the build tools and development libraries:

yum -y groupinstall "Development tools"
yum -y install zlib-devel bzip2-devel openssl-devel ncurses-devel sqlite-devel readline-devel tk-devel gdbm-devel db4-devel libpcap-devel xz-devel
yum install libffi-devel -y

Download and build Python 3.7:

wget https://www.python.org/ftp/python/3.7.5/Python-3.7.5.tar.xz
tar -xvJf Python-3.7.5.tar.xz
mkdir /usr/python3.7
cd Python-3.7.5
./configure --prefix=/usr/python3.7
make && make install

Create symbolic links:

ln -s /usr/python3.7/bin/python3 /usr/bin/python3.7
ln -s /usr/python3.7/bin/pip3 /usr/bin/pip3.7

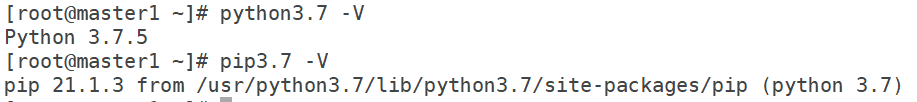

Verify the installation:

python3.7 -V
pip3.7 -V

If python3.7 -V prints Python 3.7.5 and the output of pip3.7 -V ends with (python 3.7), the setup succeeded.
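One extra check is worth doing: `make` still finishes when some of the -devel packages from the yum step were missing; the affected standard-library modules are just left out of the build. The sketch below tries to import those modules with the new interpreter. The module-to-package mapping in `OPTIONAL_MODULES` is my own reading of the yum list above, not something from the Airflow docs.

```python
import importlib

# Standard-library modules that are only built when the matching -devel
# package was present at build time (assumed mapping, for hints only)
OPTIONAL_MODULES = {
    "ssl": "openssl-devel",
    "sqlite3": "sqlite-devel",
    "ctypes": "libffi-devel",
    "bz2": "bzip2-devel",
    "lzma": "xz-devel",
    "zlib": "zlib-devel",
    "readline": "readline-devel",
    "tkinter": "tk-devel",
}


def check_optional_modules(modules=OPTIONAL_MODULES):
    """Return {module: True/False} depending on whether it can be imported."""
    result = {}
    for name in modules:
        try:
            importlib.import_module(name)
            result[name] = True
        except ImportError:
            result[name] = False
    return result


if __name__ == "__main__":
    for name, ok in check_optional_modules().items():
        hint = "" if ok else f"  -> install {OPTIONAL_MODULES[name]} and rebuild Python"
        print(f"{name:10} {'OK' if ok else 'MISSING'}{hint}")
```

Save it as a file and run it with `python3.7`. A missing `ssl` or `ctypes` in particular tends to surface later as confusing pip or Airflow errors.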

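Because yum runs on Python 2, we also have to change the yum scripts so that they call python2 explicitly:

vim /usr/bin/yum   # change the first line #! /usr/bin/python to #! /usr/bin/python2

vim /usr/libexec/urlgrabber-ext-down   # likewise change #! /usr/bin/python to #! /usr/bin/python2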
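The two vim edits above can also be scripted, which helps when several nodes need the same change. A minimal sketch, assuming the first line of each file is a bare `/usr/bin/python` shebang (with or without a space after `#!`). It keeps a `.bak` copy and leaves files whose first line is anything else untouched. The script name `pin_yum.py` is just an example.

```python
import re
import shutil
import sys

# Matches "#!/usr/bin/python" or "#! /usr/bin/python" with nothing after it
SHEBANG_RE = re.compile(r"^#!\s*/usr/bin/python\s*$")


def pin_to_python2(text):
    """Replace a bare /usr/bin/python shebang with /usr/bin/python2.

    Any other first line (already python2, python3, ...) is left unchanged.
    """
    first, sep, rest = text.partition("\n")
    if SHEBANG_RE.match(first):
        return "#! /usr/bin/python2" + sep + rest
    return text


def patch_file(path):
    """Rewrite path in place if needed; return True if it was changed."""
    with open(path) as f:
        original = f.read()
    patched = pin_to_python2(original)
    if patched != original:
        shutil.copy2(path, path + ".bak")  # backup before writing
        with open(path, "w") as f:  # "w" keeps the file's existing permissions
            f.write(patched)
    return patched != original


if __name__ == "__main__":
    # Run as root: python3.7 pin_yum.py /usr/bin/yum /usr/libexec/urlgrabber-ext-down
    for p in sys.argv[1:]:
        print(p, "patched" if patch_file(p) else "unchanged")
```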

Install the required packages (important)

# An outdated pip makes the Airflow installation fail
pip3.7 install --upgrade pip
sudo pip3.7 install pymysql
sudo pip3.7 install celery
sudo pip3.7 install flower
sudo pip3.7 install psycopg2-binary

2. Installing Airflow (important)

Note: steps 2.1, 2.2 and 2.3 must be performed on every node where Airflow is installed.

2.1 Grant sudo privileges to the airflow user

Airflow is installed and run as a dedicated airflow user.

Configure sudo privileges for the airflow user:

# Run the following as root
useradd airflow
visudo   # edits /etc/sudoers and checks the syntax before saving
## Allow root to run any commands anywhere
root    ALL=(ALL)       ALL
# add the following line
airflow ALL=(ALL)       NOPASSWD: ALL

2.2 Set the Airflow environment variables

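After a per-user pip install, the airflow executable is located by default in /home/airflow/.local/bin; a sudo install as in step 2.3 puts it in /usr/python3.7/bin instead, so both directories are added to PATH: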

# Run as root: add the export line to /etc/profile, then reload it
vi /etc/profile
export PATH=$PATH:/usr/python3.7/bin:/home/airflow/.local/bin
source /etc/profile

Here /home/airflow/.local/bin is the airflow user's ~/.local/bin; adjust it to your setup, e.g. PATH=$PATH:~/.local/bin.

# Optional, run as the airflow user (AIRFLOW_HOME defaults to ~/airflow)
export AIRFLOW_HOME=~/airflow
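To make the "defaults to ~/airflow" rule concrete: the value of AIRFLOW_HOME is used if it is set, otherwise `~/airflow`, with `~` expanded to the home directory of whoever runs the command. That is why running commands as root instead of airflow silently points at a different home. The function below is a simplified sketch of that rule, not Airflow's own code; it takes an optional environment dict so it can be tried without changing the real environment.

```python
import os


def resolve_airflow_home(environ=None):
    """Simplified sketch: $AIRFLOW_HOME if set, else ~/airflow, with ~ expanded."""
    env = os.environ if environ is None else environ
    raw = env.get("AIRFLOW_HOME", "~/airflow")
    if raw == "~" or raw.startswith("~/"):
        home = env.get("HOME", os.path.expanduser("~"))
        raw = home + raw[1:]
    return raw


if __name__ == "__main__":
    print(resolve_airflow_home())
```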

2.3 Install Airflow

su airflow   # run as root to switch to the airflow user

# Run the following as the airflow user
AIRFLOW_VERSION=2.1.1
PYTHON_VERSION="$(python3.7 --version | cut -d " " -f 2 | cut -d "." -f 1-2)"
CONSTRAINT_URL="https://raw.githubusercontent.com/apache/airflow/constraints-${AIRFLOW_VERSION}/constraints-no-providers-${PYTHON_VERSION}.txt"

# sudo is required here: without it some components end up missing and no error is reported.
# Also include the mysql, celery and cncf.kubernetes extras, otherwise Airflow fails to start later.
sudo pip3.7 install "apache-airflow[mysql,celery,cncf.kubernetes]==${AIRFLOW_VERSION}" --constraint "${CONSTRAINT_URL}" -i https://pypi.rasa.com/simple --use-deprecated=legacy-resolver
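The PYTHON_VERSION and CONSTRAINT_URL lines above only derive the major.minor Python version and splice it into the constraints file name. The same derivation in Python, useful for checking which constraints file pip will fetch; the URL pattern is copied from the command above.

```python
import sys

AIRFLOW_VERSION = "2.1.1"


def python_major_minor(version_info=sys.version_info):
    """Same as: python3.7 --version | cut -d " " -f 2 | cut -d "." -f 1-2"""
    return f"{version_info[0]}.{version_info[1]}"


def constraint_url(airflow_version, python_version):
    # Same pattern as CONSTRAINT_URL in the shell snippet above
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-no-providers-{python_version}.txt"
    )


if __name__ == "__main__":
    print(constraint_url(AIRFLOW_VERSION, python_major_minor()))
```

Opening the printed URL in a browser is a quick way to confirm the file exists before running the long pip install.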

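If the installation succeeded, the airflow command is now available, and once any airflow command has been run (for example airflow version), the Airflow home directory contains the following files: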

airflow.cfg webserver_config.py
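A small sketch for checking this on each node, assuming the home directory is resolved as in step 2.2 ($AIRFLOW_HOME, else ~/airflow); `missing_home_files` is a hypothetical helper name.

```python
import os


def missing_home_files(airflow_home, expected=("airflow.cfg", "webserver_config.py")):
    """Return the expected files that are not present in airflow_home."""
    return [name for name in expected if not os.path.isfile(os.path.join(airflow_home, name))]


if __name__ == "__main__":
    home = os.path.expanduser(os.environ.get("AIRFLOW_HOME", "~/airflow"))
    missing = missing_home_files(home)
    print("OK" if not missing else f"missing in {home}: {', '.join(missing)}")
```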

2.4 Configure Airflow

The Airflow high-availability architecture is as follows:

Edit the {AIRFLOW_HOME}/airflow.cfg file:

# Add or change the following settings in {AIRFLOW_HOME}/airflow.cfg
# (the section each setting belongs to is given in square brackets)

# 1. Executor [core]
# executor = LocalExecutor
executor = CeleryExecutor

# 2. Metadata database (metastore) [core]
# sql_alchemy_conn = sqlite:////home/apps/airflow/airflow.db
sql_alchemy_conn = mysql+pymysql://airflow:yourpassword@hostname:3306/airflow

# 3. Message broker [celery]; RabbitMQ is used here
# broker_url = redis://redis:6379/0
broker_url = amqp://admin:yourpassword@hostname:5672/

# 4. Result backend [celery]
# result_backend = db+postgresql://postgres:airflow@postgres/airflow
result_backend = db+mysql://airflow:yourpassword@hostname:3306/airflow

# 5. Time zone: default_timezone [core], default_ui_timezone [webserver]
# default_timezone = utc
default_timezone = Asia/Shanghai
default_ui_timezone = Asia/Shanghai

# 6. Web port (default 8080; changed to 8081 because Ambari already uses 8080)
#    endpoint_url [cli]; base_url and web_server_port [webserver]
endpoint_url = http://localhost:8081
base_url = http://localhost:8081
web_server_port = 8081
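If the MySQL password contains characters such as @, / or :, it has to be percent-encoded inside sql_alchemy_conn; otherwise the URI is split in the wrong place. A small helper, assuming the mysql+pymysql driver used above. It prints the result as an AIRFLOW__CORE__SQL_ALCHEMY_CONN environment variable, which Airflow reads in preference to the airflow.cfg entry. If you paste the encoded value into airflow.cfg instead, the % signs may need to be doubled to %% because of configparser interpolation; the environment variable avoids that question.

```python
from urllib.parse import quote


def mysql_conn_uri(user, password, host, port=3306, database="airflow"):
    """Build a mysql+pymysql SQLAlchemy URI with user and password percent-encoded."""
    return "mysql+pymysql://{}:{}@{}:{}/{}".format(
        quote(user, safe=""), quote(password, safe=""), host, port, database
    )


if __name__ == "__main__":
    # Hypothetical password with special characters; replace with your own values
    uri = mysql_conn_uri("airflow", "p@ss/w:rd", "hostname")
    print(f"export AIRFLOW__CORE__SQL_ALCHEMY_CONN='{uri}'")
```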

The modified {AIRFLOW_HOME}/airflow.cfg must be copied to every server where Airflow is installed.

Also create the directories referenced by dags_folder ([core]) and base_log_folder ([logging]); otherwise DAG runs will fail later.
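Creating the directories can be scripted by reading the two paths straight from airflow.cfg, so every node uses exactly what the shared config says. A sketch using the standard configparser module, assuming that in Airflow 2.x dags_folder lives in [core] and base_log_folder in [logging]. Interpolation is disabled because airflow.cfg also contains %-style log format strings that are not needed here.

```python
import configparser
import os


def folders_from_cfg(cfg_path):
    """Read [core] dags_folder and [logging] base_log_folder from airflow.cfg."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(cfg_path)
    return [
        parser.get("core", "dags_folder"),
        parser.get("logging", "base_log_folder"),
    ]


def ensure_folders(paths):
    """Create each directory (and parents); existing ones are left alone."""
    for path in paths:
        os.makedirs(os.path.expanduser(path), exist_ok=True)


if __name__ == "__main__":
    home = os.path.expanduser(os.environ.get("AIRFLOW_HOME", "~/airflow"))
    folders = folders_from_cfg(os.path.join(home, "airflow.cfg"))
    ensure_folders(folders)
    print("created or already present:", *folders, sep="\n  ")
```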

2.5 Start the Airflow cluster

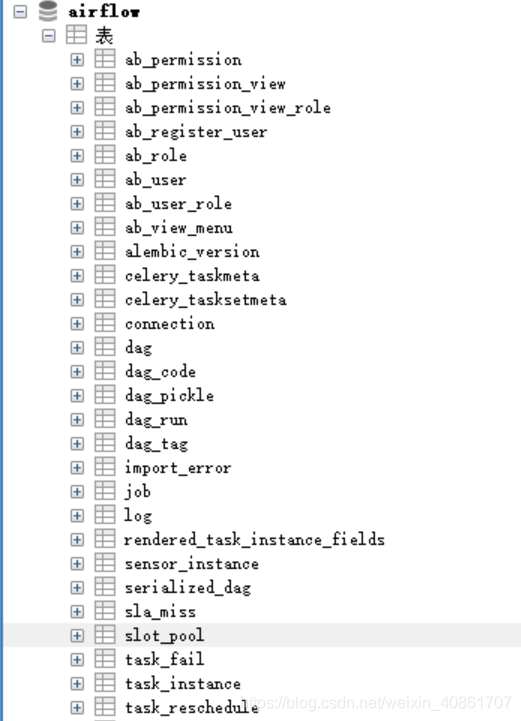

Initialize the metadata database:

airflow db init

If the following tables appear in MySQL, the initialization succeeded:

Create an admin user:

airflow users create \
    --username admin \
    --firstname Lixiaolong \
    --lastname Bigdata \
    --role Admin \
    --email spiderman@superhero.org

Enter the password when the console prompts for it; here it is set to yourpassword.

Start the webserver:

airflow webserver -D

Start the scheduler:

nohup airflow scheduler &

Start the workers:

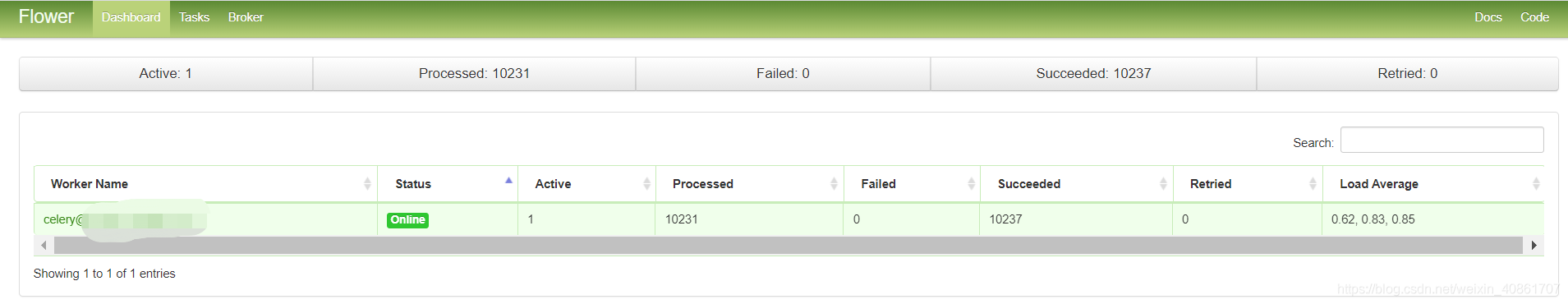

# First start Flower, on a server that will run workers
airflow celery flower -D
# Then start a worker on each server that should run one
airflow celery worker -D

2.6 Log in to the web UI

Web UI: http://master1:8081/   Username: admin   Password: the one set in step 2.5
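For scripted checks, for example from a monitoring cron job, the Airflow 2 webserver exposes a /health endpoint that reports the status of the metadata database and the scheduler as JSON. The sketch below assumes the web UI address above and uses only the standard library. `is_healthy` is a hypothetical helper that treats the cluster as healthy only when both components report "healthy".

```python
import json
from urllib.request import urlopen

HEALTH_URL = "http://master1:8081/health"  # web UI address from the step above


def is_healthy(payload):
    """True if both the metadata DB and the scheduler report "healthy"."""
    return all(
        payload.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def fetch_health(url=HEALTH_URL, timeout=5):
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    status = fetch_health()
    print(json.dumps(status, indent=2))
    print("healthy" if is_healthy(status) else "NOT healthy")
```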

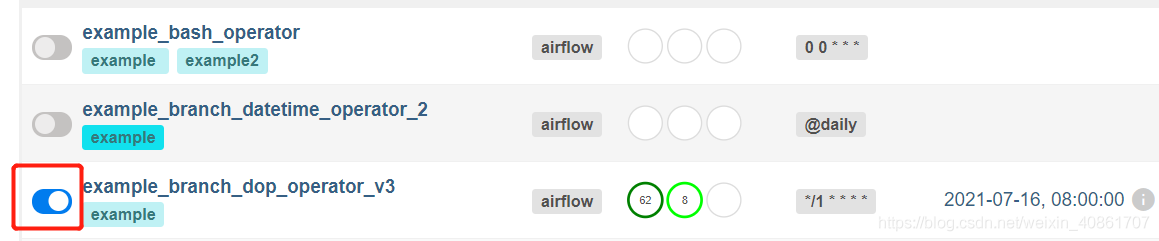

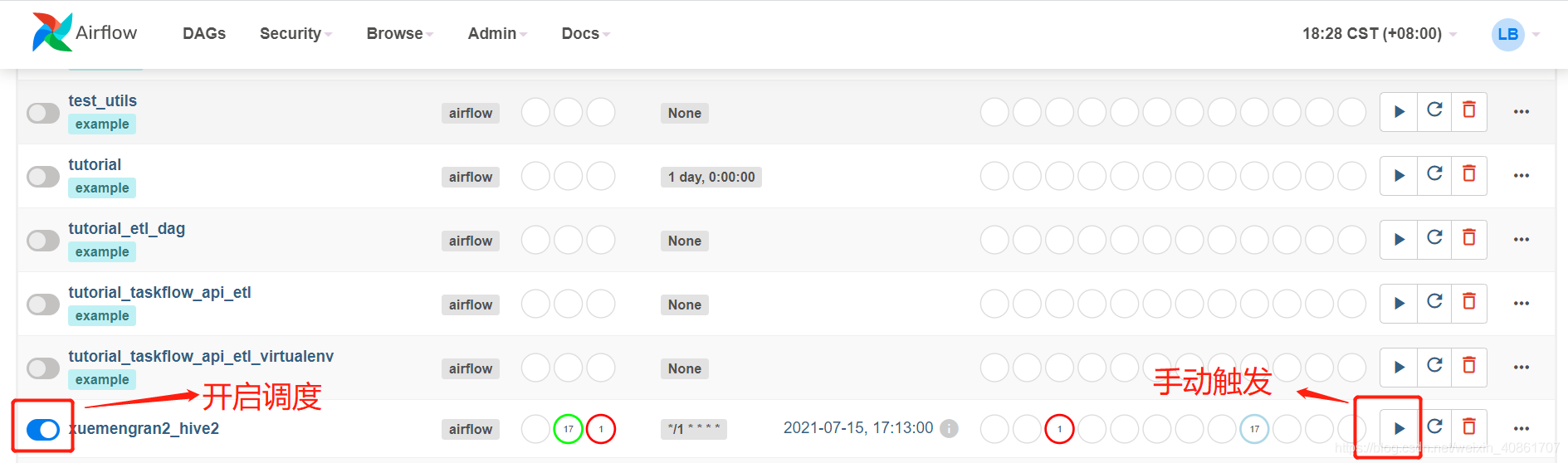

The UI looks like this:

Worker information can be viewed in Flower at http://hostip:5555, as shown below:

2.7 Configuring jobs in Airflow

Out of the box, Airflow comes with 32 example DAGs to try out; select a DAG in the web UI and click its toggle to switch it to the active state.

Next, using the commonly used HiveOperator as an example, we show how to write and run a custom DAG.

Installing dependencies

To use HiveOperator, first install the Hive dependencies.

If you run into an error like the following:

ModuleNotFoundError: No module named 'airflow.providers.apache'

then install the Hive dependency manually:

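su airflow
# the PyPI package is apache-airflow; pinning the version keeps pip from upgrading Airflow itself
sudo pip3.7 install "apache-airflow[apache.hive]==2.1.1"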

Writing the DAG

DAG directory: see the dags_folder option in airflow.cfg.

Put the finished Python file in that directory. For example:

This example queries a Hive table every minute; the DAG is named test_hive2.

from datetime import timedelta

from airflow import DAG
from airflow.providers.apache.hive.operators.hive import HiveOperator
from airflow.utils.dates import days_ago

default_args = {
    'owner': 'airflow',
    'depends_on_past': True,
    'start_date': days_ago(1),
    'retries': 10,
    'retry_delay': timedelta(seconds=5),
}

# Run every minute; catchup=False skips the intervals missed since start_date
dag = DAG('test_hive2', default_args=default_args, schedule_interval='*/1 * * * *', catchup=False)

# Uses the hive_cli_default connection unless hive_cli_conn_id is given
t1 = HiveOperator(
    task_id='hive_task',
    hql='select * from test.data_demo',
    dag=dag,
)

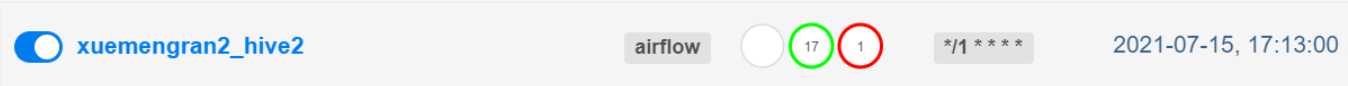

If the DAG file is valid, the new DAG appears in the web UI after a refresh.
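A DAG file with a Python syntax error never shows up in the UI, and the scheduler only rescans the folder periodically, so checking the file first can save some waiting. A minimal standard-library sketch that compiles every .py file under the DAG folder without executing it. It catches syntax errors only, not import-time problems such as the missing Hive provider described above. The default path `~/airflow/dags` is an assumption; pass your dags_folder as the first argument.

```python
import os


def find_syntax_errors(dags_folder):
    """Compile every .py file under dags_folder; return {path: error message}."""
    errors = {}
    for root, _dirs, files in os.walk(dags_folder):
        for name in files:
            if not name.endswith(".py"):
                continue
            path = os.path.join(root, name)
            with open(path, encoding="utf-8") as f:
                source = f.read()
            try:
                compile(source, path, "exec")  # syntax check only; nothing is executed
            except SyntaxError as exc:
                errors[path] = f"line {exc.lineno}: {exc.msg}"
    return errors


if __name__ == "__main__":
    import sys

    folder = sys.argv[1] if len(sys.argv) > 1 else os.path.expanduser("~/airflow/dags")
    problems = find_syntax_errors(folder)
    for path, msg in problems.items():
        print(path, msg)
    print("no syntax errors" if not problems else f"{len(problems)} file(s) with errors")
```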

Configuring the connection

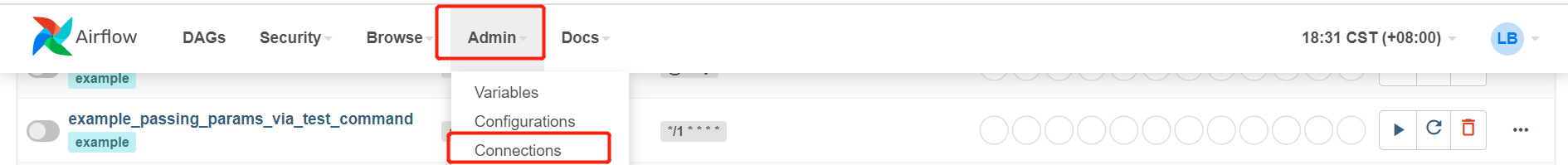

As shown in the figure below, click Admin -> Connections in the UI to configure the connection.

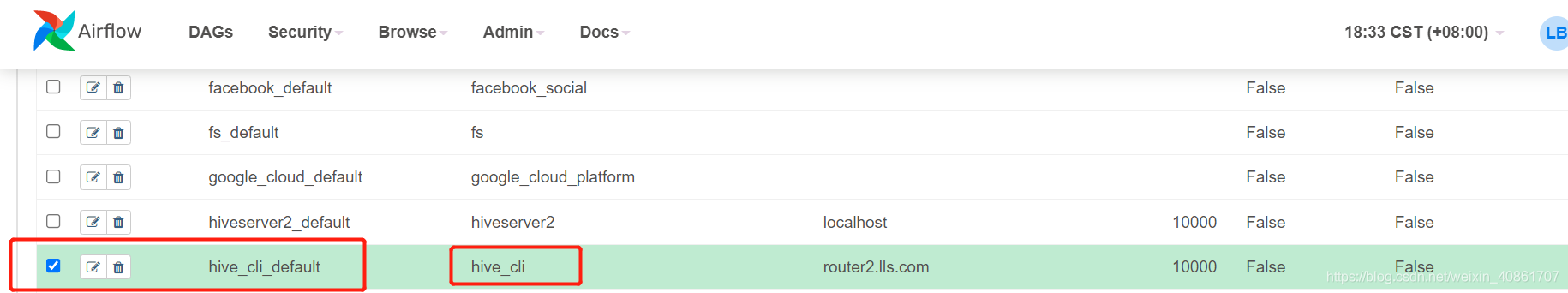

By default, Hive uses the hive_cli_default connection.

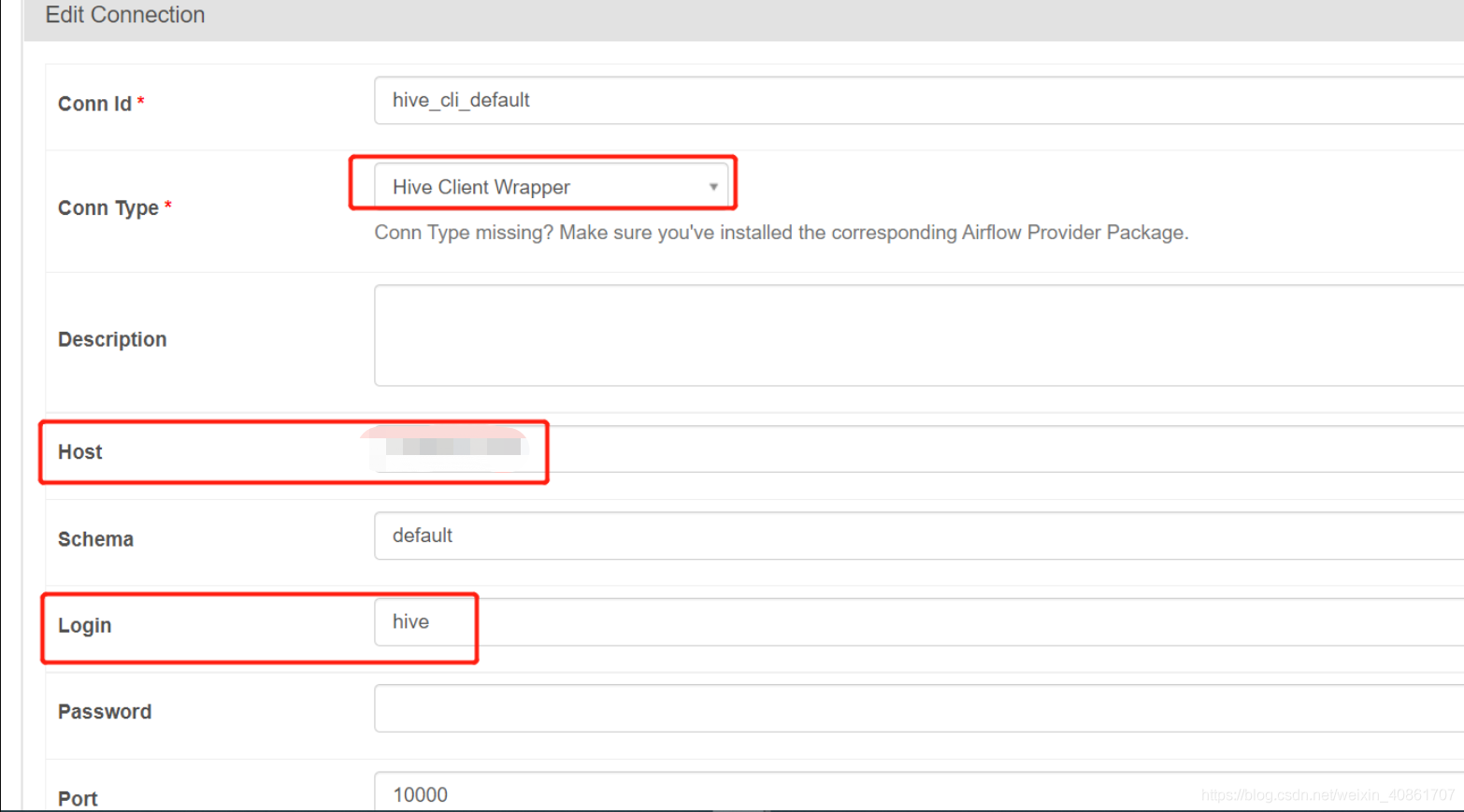

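Pay attention to the settings marked in the figure below. Conn Type: choose Hive Client Wrapper (this is the default once the Hive dependency is installed). Host: a node where Hive is installed. Login: a user that is allowed to run Hive jobs.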

When you are done, save the connection.
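As an alternative to the UI, Airflow also accepts a connection as an environment variable named AIRFLOW_CONN_<CONN_ID>, whose value is a connection URI. Such connections are checked before the metadata database and do not appear in the Connections list. The sketch below builds that value for hive_cli_default. The user, host, port and schema are placeholders. The `hive-cli` scheme is what Airflow maps to the hive_cli (Hive Client Wrapper) type, because it converts '-' in the scheme to '_'. The variable must be set in the environment of the Celery workers, since they run the task.

```python
from urllib.parse import quote


def conn_env_var(conn_id, conn_type, host, login=None, password=None, port=None, schema=""):
    """Return (name, value) for an AIRFLOW_CONN_<CONN_ID> environment variable."""
    auth = ""
    if login:
        auth = quote(login, safe="")
        if password:
            auth += ":" + quote(password, safe="")
        auth += "@"
    netloc = host + (f":{port}" if port else "")
    return f"AIRFLOW_CONN_{conn_id.upper()}", f"{conn_type}://{auth}{netloc}/{schema}"


if __name__ == "__main__":
    # Placeholder values: a Hive node and a user allowed to run Hive jobs
    name, value = conn_env_var("hive_cli_default", "hive-cli", "hive-node",
                               login="hive", port=10000, schema="default")
    print(f"export {name}='{value}'")
```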

Scheduling tasks

The figures below show how to enable scheduling and how to trigger a run manually.

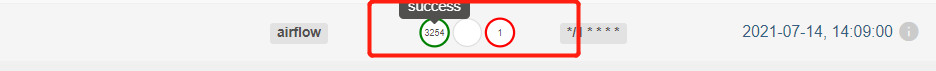

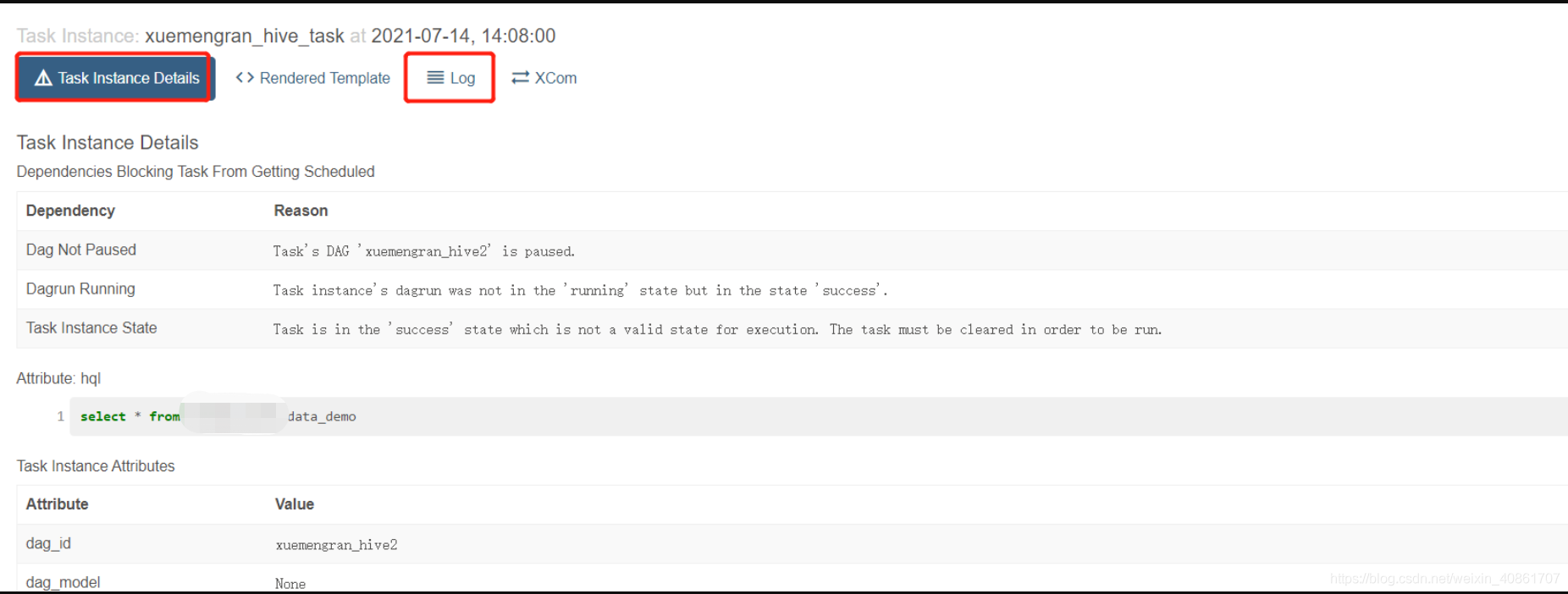

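After the run is triggered, click the middle part of the task bar to see the task's run details.

For example, after opening a task we can see its run details and its log.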
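Both actions can also be performed through Airflow 2's stable REST API. PATCH /api/v1/dags/{dag_id} with {"is_paused": false} switches scheduling on, and POST /api/v1/dags/{dag_id}/dagRuns triggers a run. In 2.1 the API rejects every request by default, so this sketch assumes [api] auth_backend has been set to airflow.api.auth.backend.basic_auth. It reuses the admin account from step 2.5; the host and credentials are placeholders.

```python
import base64
import json
from urllib.request import Request, urlopen

BASE = "http://master1:8081/api/v1"  # assumed web UI address


def _request(method, path, body, user, password):
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return Request(
        BASE + path,
        data=json.dumps(body).encode(),
        method=method,
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )


def unpause_request(dag_id, user, password):
    # Same effect as switching the DAG on in the UI
    return _request("PATCH", f"/dags/{dag_id}", {"is_paused": False}, user, password)


def trigger_request(dag_id, user, password, conf=None):
    # Same effect as the manual "Trigger DAG" button; conf is passed to the run
    return _request("POST", f"/dags/{dag_id}/dagRuns", {"conf": conf or {}}, user, password)


if __name__ == "__main__":
    for req in (unpause_request("test_hive2", "admin", "yourpassword"),
                trigger_request("test_hive2", "admin", "yourpassword")):
        with urlopen(req, timeout=10) as resp:
            print(req.get_method(), req.full_url, resp.status)
```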

3. Problems encountered

3.1 Error while downloading Python modules

Collecting flask-appbuilder<2.0.0,>=1.12.2; python_version < "3.6"
Using cached Flask-AppBuilder-1.13.1.tar.gz (1.5 MB)
ERROR: Command errored out with exit status 1:
command: /bin/python -c 'import sys, setuptools, tokenize; sys.argv[0] = '"'"'/tmp/pip-install-EFxJZq/flask-appbuilder/setup.py'"'"'; __file__='"'"'/tmp/pip-install-EFxJZq/flask-appbuilder/setup.py'"'"';f=getattr(tokenize, '"'"'open'"'"', open)(__file__);code=f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' egg_info --egg-base /tmp/pip-pip-egg-info-StYjJL
cwd: /tmp/pip-install-EFxJZq/flask-appbuilder/
Complete output (3 lines):
/usr/lib64/python2.7/distutils/dist.py:267: UserWarning: Unknown distribution option: 'long_description_content_type'
warnings.warn(msg)
error in Flask-AppBuilder setup command: 'install_requires' must be a string or list of strings containing valid project/version requirement specifiers

Solution:

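Upgrade setuptools to the latest version. Note also that the log shows this pip running under Python 2.7 (/bin/python, /usr/lib64/python2.7); installing with pip3.7 as described above avoids this code path altogether.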

pip install setuptools -U

3.2 Airflow commands fail with error: sqlite C library version too old (< {min_sqlite_version}).

Full error:

Traceback (most recent call last):
  File "/usr/python3.7/bin/airflow", line 5, in <module>
    from airflow.__main__ import main
  File "/usr/python3.7/lib/python3.7/site-packages/airflow/__init__.py", line 34, in <module>
    from airflow import settings
  File "/usr/python3.7/lib/python3.7/site-packages/airflow/settings.py", line 35, in <module>
    from airflow.configuration import AIRFLOW_HOME, WEBSERVER_CONFIG, conf  # NOQA F401
  File "/usr/python3.7/lib/python3.7/site-packages/airflow/configuration.py", line 1114, in <module>
    conf.validate()
  File "/usr/python3.7/lib/python3.7/site-packages/airflow/configuration.py", line 202, in validate
    self._validate_config_dependencies()
  File "/usr/python3.7/lib/python3.7/site-packages/airflow/configuration.py", line 243, in _validate_config_dependencies
    f"error: sqlite C library version too old (< {min_sqlite_version}). "
airflow.exceptions.AirflowConfigException: error: sqlite C library version too old (< 3.15.0). See https://airflow.apache.org/docs/apache-airflow/2.1.1/howto/set-up-database.rst#setting-up-a-sqlite-database

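Cause: Airflow uses SQLite as the metastore by default, and while sql_alchemy_conn still points to SQLite it checks the SQLite library version at startup. We use MySQL, so SQLite is not actually needed.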

Solution: edit {AIRFLOW_HOME}/airflow.cfg and set the metadata database option sql_alchemy_conn to:

sql_alchemy_conn = mysql+pymysql://airflow:yourpassword@hostname:3306/airflow
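To see which SQLite C library your python3.7 is linked against (the one that would have to be upgraded if you did want to keep SQLite), compare sqlite3.sqlite_version with the 3.15.0 minimum from the error message. CentOS 7 ships SQLite 3.7.17, which is why the check fails there. A small sketch:

```python
import sqlite3

MIN_SQLITE = "3.15.0"  # minimum quoted in the Airflow 2.1.1 error above


def version_tuple(version):
    return tuple(int(part) for part in version.split("."))


def sqlite_ok(current=sqlite3.sqlite_version, minimum=MIN_SQLITE):
    """True if the linked SQLite C library is at least `minimum`."""
    return version_tuple(current) >= version_tuple(minimum)


if __name__ == "__main__":
    print("SQLite", sqlite3.sqlite_version, "OK" if sqlite_ok() else f"< {MIN_SQLITE}")
```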

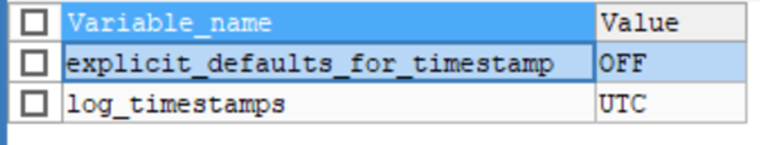

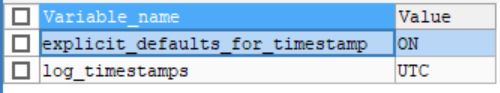

3.3 airflow db init fails: Global variable explicit_defaults_for_timestamp needs to be on (1) for mysql

  File "/usr/python3.7/lib/python3.7/site-packages/airflow/migrations/versions/0e2a74e0fc9f_add_time_zone_awareness.py", line 44, in upgrade
    raise Exception("Global variable explicit_defaults_for_timestamp needs to be on (1) for mysql")
Exception: Global variable explicit_defaults_for_timestamp needs to be on (1) for mysql

Solution:

Connect to MySQL as a user allowed to change global variables (for example root) and set the global variable explicit_defaults_for_timestamp:

SHOW GLOBAL VARIABLES LIKE '%timestamp%';
SET GLOBAL explicit_defaults_for_timestamp = 1;

Before:

After:
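To confirm the value before re-running airflow db init, it can be read with SELECT @@GLOBAL.explicit_defaults_for_timestamp. A sketch using pymysql (installed earlier); the host and credentials are placeholders. Because SET GLOBAL is lost when mysqld restarts, adding explicit_defaults_for_timestamp = 1 under [mysqld] in my.cnf keeps the setting in place.

```python
def flag_enabled(value):
    """MySQL may return 1/0 or 'ON'/'OFF' depending on how the variable is read."""
    return str(value).strip().upper() in ("1", "ON")


def read_flag(host, user, password, port=3306):
    import pymysql  # imported here so flag_enabled() works without pymysql installed

    conn = pymysql.connect(host=host, user=user, password=password, port=port)
    try:
        with conn.cursor() as cur:
            cur.execute("SELECT @@GLOBAL.explicit_defaults_for_timestamp")
            (value,) = cur.fetchone()
        return value
    finally:
        conn.close()


if __name__ == "__main__":
    value = read_flag("hostname", "airflow", "yourpassword")  # placeholder credentials
    print("explicit_defaults_for_timestamp =", value,
          "OK" if flag_enabled(value) else "-> run SET GLOBAL ... = 1")
```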

