Deploying a Kubernetes Cluster from Binaries, Based on v1.22.2 (Part 2)

I. Add a Worker Node

Following the overall plan, we still need to add one more worker node, with IP 10.154.0.114.

1.1 Preparation

1. Update the kernel

If your kernel is older than 5.x, upgrade it first.
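
The 5.x check above can be scripted. This is a minimal sketch that assumes bash and assumes `uname -r` prints a release string beginning with the major version (e.g. `3.10.0-1160.el7.x86_64`). `kernel_needs_upgrade` is a hypothetical helper name, not a standard command.

```shell
#!/usr/bin/env bash
# Return 0 (true) when the kernel release is older than 5.x, 1 when it is 5.x or newer,
# and 2 when the release string cannot be parsed.
kernel_needs_upgrade() {
  local release="$1"           # e.g. the output of `uname -r`
  local major="${release%%.*}" # text before the first dot, e.g. "3" from "3.10.0-1160.el7.x86_64"
  case "$major" in
    '' | *[!0-9]*)
      echo "unrecognized kernel release: $release" >&2
      return 2
      ;;
  esac
  [ "$major" -lt 5 ]
}
```

For example: `kernel_needs_upgrade "$(uname -r)" && echo "kernel upgrade needed"`. A return code of 2 means the string could not be parsed. That is safer than silently treating an unknown kernel as new enough.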

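Don't forget to change Docker's cgroup driver to systemd; see the "configure a registry mirror" subsection of the Docker installation section.

2. Environment configuration

First set up the base environment (see the environment setup section).

Set the new worker node's hostname and update the hosts file on every node.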

# Set node03's hostname
hostnamectl set-hostname k8s-node03

# Append the cluster hosts to node03's /etc/hosts
cat >> /etc/hosts << "EOF"
10.154.0.111 k8s-master01
10.154.0.112 k8s-node01
10.154.0.113 k8s-node02
10.154.0.114 k8s-node03
EOF

# On every existing node, add the new host
echo "10.154.0.114 k8s-node03" >> /etc/hosts

# Sync the time: have the new node take master01's time
date --set="$(ssh root@10.154.0.111 date)"

# Passwordless SSH; run on master01
ssh-copy-id root@10.154.0.114
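
Appending with `echo ... >> /etc/hosts` adds a duplicate line every time the step is re-run. This is a minimal idempotent alternative that assumes bash and awk. `add_host_entry` is a hypothetical helper, and its file argument defaults to `/etc/hosts`.

```shell
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts file only when that IP/hostname pair is not already there.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if some line has the IP as its first field and the hostname as a later field.
  if [ -f "$file" ] && awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit (found ? 0 : 1) }' "$file"; then
    return 0 # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each existing node, run `add_host_entry 10.154.0.114 k8s-node03` instead of the plain `echo`. Running it twice leaves a single line.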

1.2 Deploy kubelet

1. Copy the certificates, binaries and configuration files to the worker node

Run on master01

# Copy the certificates
scp -r /opt/cluster/ root@10.154.0.114:/opt/

# Copy the systemd unit files
scp -r \
  /usr/lib/systemd/system/kubelet.service \
  /usr/lib/systemd/system/kube-proxy.service \
  root@10.154.0.114:/usr/lib/systemd/system/

# Copy the binaries
cd ~/tools/kubernetes/server/bin
scp -r \
  kubelet kube-proxy kubectl \
  root@10.154.0.114:/usr/local/bin

The kubelet certificate is different on every worker node and is generated automatically. On k8s-node03, delete the copied kubeconfig and certificates so they are regenerated.

rm -rf /opt/cluster/kubelet/kubelet.kubeconfig
rm -rf /opt/cluster/kubelet/ssl/*

2. Generate the kubeconfig file

cd /opt/cluster/ssl

# Note: the kubelet-bootstrap binding already exists, so there is no need to create it again
#kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=$(awk -F "," '{print $1}' /opt/cluster/ssl/kubernetes/kube-apiserver.token.csv) \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

3. Write the kubelet.conf configuration file

cd /opt/cluster/ssl
cat > kubernetes/kubelet.conf << "EOF"
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 0
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/cluster/ssl/rootca/rootca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
healthzBindAddress: 127.0.0.1
healthzPort: 10248
rotateCertificates: true
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
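
The kubelet's `cgroupDriver: systemd` must match the driver Docker uses, which is why the preparation step switches Docker's cgroupdriver to systemd. With Docker as the runtime, a mismatch typically makes kubelet exit with a cgroup driver misconfiguration error. This minimal check assumes bash and assumes `cgroupDriver:` is a top-level, unindented key, as in the file above. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the value of the top-level "cgroupDriver:" key of a KubeletConfiguration file.
kubelet_cgroup_driver() {
  awk -F': *' '/^cgroupDriver:/ { print $2; exit }' "$1"
}

# Return 1 (and explain on stderr) when the kubelet's driver differs from the runtime's.
check_cgroup_driver() {
  local conf="$1" runtime_driver="$2" kubelet_driver
  kubelet_driver="$(kubelet_cgroup_driver "$conf")"
  if [ "$kubelet_driver" != "$runtime_driver" ]; then
    echo "mismatch: kubelet=$kubelet_driver runtime=$runtime_driver" >&2
    return 1
  fi
}
```

Usage on the node: `check_cgroup_driver kubernetes/kubelet.conf "$(docker info --format '{{.CgroupDriver}}')"`.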

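Start kubelet

systemctl daemon-reload && \
systemctl enable --now kubelet.service && \
systemctl status kubelet.service

Approve the certificate

# On master01, list the certificate signing requests (CSRs) waiting for approval
kubectl get csr

# Approve the CSR
kubectl certificate approve <CSR_NAME>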

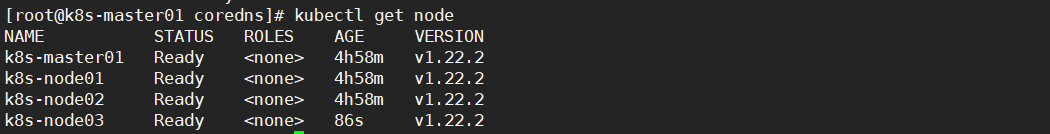

kubectl get node
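
When several CSRs are waiting, picking names by hand is error-prone. This minimal filter assumes the CONDITION column is the last column of `kubectl get csr` output, as in the default table view. `pending_csrs` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `kubectl get csr --no-headers` output on stdin and print the names of CSRs
# whose CONDITION (last column) is exactly "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}
```

On master01: `kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve`. This approves every pending request, so use it only when the pending CSRs are the ones you expect from the new node.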

1.3 Deploy kube-proxy

1. Generate the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.111:6443 \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=/opt/cluster/ssl/kubernetes/kube-proxy.pem \
  --client-key=/opt/cluster/ssl/kubernetes/kube-proxy-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-proxy.kubeconfig

2. Write the kube-proxy.conf configuration file

cat > kubernetes/kube-proxy.conf << "EOF"
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: /opt/cluster/ssl/kubernetes/kube-proxy.kubeconfig
bindAddress: 0.0.0.0
clusterCIDR: "10.97.0.0/16"
healthzBindAddress: "0.0.0.0:10256"
metricsBindAddress: "0.0.0.0:10249"
mode: ipvs
ipvs:
  scheduler: "rr"
EOF
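
`mode: ipvs` with `scheduler: "rr"` relies on the IPVS kernel modules being available on the node. This minimal check assumes bash and reads `lsmod` output on stdin. It covers only `ip_vs`, `ip_vs_rr` (the rr scheduler) and `nf_conntrack`, which is named `nf_conntrack_ipv4` on kernels older than 4.19. `missing_ipvs_modules` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `lsmod` output on stdin and print each required IPVS-related module that is not loaded.
missing_ipvs_modules() {
  local loaded mod
  loaded="$(awk 'NR > 1 { print $1 }')" # module names, skipping the "Module Size Used by" header
  for mod in ip_vs ip_vs_rr nf_conntrack; do
    grep -qx "$mod" <<< "$loaded" || echo "$mod"
  done
}
```

`lsmod | missing_ipvs_modules` prints the names you would load with `modprobe` before starting kube-proxy. Empty output means all three are present.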

Change the --hostname-override option to the new node's hostname

vim /usr/lib/systemd/system/kube-proxy.service
...
--hostname-override=k8s-node03
...
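
Instead of editing the unit in vim, you can rewrite the flag with sed. This minimal sketch assumes GNU sed (`-i` without a suffix). It also assumes the unit contains `--hostname-override=<name>`, where the name consists of letters, digits, dots and hyphens. `set_hostname_override` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Replace the value of --hostname-override in a systemd unit file in place.
set_hostname_override() {
  local unit="$1" name="$2"
  sed -i "s/--hostname-override=[A-Za-z0-9.-]*/--hostname-override=${name}/" "$unit"
}
```

Usage: `set_hostname_override /usr/lib/systemd/system/kube-proxy.service k8s-node03`, followed by `systemctl daemon-reload`.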

3. Start kubelet

systemctl daemon-reload && \
systemctl enable --now kubelet.service && \
systemctl status kubelet.service

If it fails, check the logs

journalctl -u kubelet > error.log
vim error.log
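
A full `journalctl` dump is long. Kubernetes components log through klog, whose header starts with a severity letter (`I`, `W`, `E` or `F`) followed by the month and day, e.g. `E1015 10:02:03.123456`. This minimal filter keeps only error and fatal lines, assuming that header format. `klog_errors` is a hypothetical helper that reads a file argument or stdin.

```shell
#!/usr/bin/env bash
# Print only klog lines at error (E) or fatal (F) severity, e.g. "... kubelet[1234]: E1015 10:02:03.123456 ..."
klog_errors() {
  grep -E '(^|[[:space:]])[EF][0-9]{4} [0-9]{2}:[0-9]{2}:[0-9]{2}' "$@"
}
```

For example: `journalctl -u kubelet --no-pager | klog_errors | tail -n 20`.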

4. Start kube-proxy

systemctl daemon-reload && \
systemctl enable --now kube-proxy.service && \
systemctl status kube-proxy.service

If it fails, check the logs

journalctl -u kube-proxy > error.log
vim error.log

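II. Add a Master Node

Following the overall plan, we need to add one more master node, with IP 10.154.0.115.

2.1 Preparation

1. Update the kernel

If your kernel is older than 5.x, upgrade it first.

Don't forget to change Docker's cgroup driver to systemd; see the "configure a registry mirror" subsection of the Docker installation chapter.

2. Environment configuration

First set up the base environment (see the environment setup section).

Set the new master node's hostname and update the hosts file on every node.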

# Set the new master's hostname
hostnamectl set-hostname k8s-master02

# Append the cluster hosts to master02's /etc/hosts
cat >> /etc/hosts << "EOF"
10.154.0.111 k8s-master01
10.154.0.115 k8s-master02
10.154.0.112 k8s-node01
10.154.0.113 k8s-node02
10.154.0.114 k8s-node03
EOF

# On every existing node, add the new host
echo "10.154.0.115 k8s-master02" >> /etc/hosts

# Sync the time with master01
date --set="$(ssh root@10.154.0.111 date)"

# Passwordless SSH; run on master01 (the target is the new master, 10.154.0.115)
ssh-copy-id root@10.154.0.115
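
`date --set` copies master01's clock once, to the second. Certificates are checked against the local clock, so a node that lags behind the CA can see a freshly issued certificate as not yet valid. This minimal skew check assumes bash and Unix timestamps from `date +%s`. Its 5-second default threshold is chosen only for illustration, and `clock_skew_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print the absolute clock difference between two Unix timestamps and return 0 only if it
# is within the allowed maximum (default 5 seconds, an illustrative value).
clock_skew_ok() {
  local local_ts="$1" remote_ts="$2" max="${3:-5}"
  local diff=$((local_ts - remote_ts))
  if [ "$diff" -lt 0 ]; then
    diff=$((-diff))
  fi
  echo "skew: ${diff}s"
  [ "$diff" -le "$max" ]
}
```

On master02: `clock_skew_ok "$(date +%s)" "$(ssh root@10.154.0.111 date +%s)"`. The SSH round trip adds a little to the measured skew.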

3. Copy the certificates, binaries and configuration files to the new master node

Run on master01

# Copy the certificates
scp -r /opt/cluster/ root@10.154.0.115:/opt/

# Copy the systemd unit files
scp -r \
  /usr/lib/systemd/system/kube-apiserver.service \
  /usr/lib/systemd/system/kube-controller-manager.service \
  /usr/lib/systemd/system/kube-scheduler.service \
  /usr/lib/systemd/system/kubelet.service \
  /usr/lib/systemd/system/kube-proxy.service \
  root@10.154.0.115:/usr/lib/systemd/system/

# Copy the binaries
cd ~/tools/kubernetes/server/bin
scp \
  kube-apiserver \
  kube-scheduler \
  kube-controller-manager \
  kubelet kube-proxy kubectl \
  root@10.154.0.115:/usr/local/bin

# On master02, create the audit log file
mkdir -p /opt/cluster/log/kube-apiserver/
touch /opt/cluster/log/kube-apiserver/audit.log

2.2 Deploy kube-apiserver

All of the following steps are performed on master02

1. Edit the kube-apiserver unit file

vim /usr/lib/systemd/system/kube-apiserver.service
...
--advertise-address=10.154.0.115
...

2. Start

systemctl daemon-reload && \
systemctl enable --now kube-apiserver.service && \
systemctl status kube-apiserver.service

If it fails, check the logs

journalctl -u kube-apiserver > error.log
vim error.log

2.3 Deploy kubectl

1. Generate the kubeconfig file

Use master02's address here; if a load balancer has been deployed, use the VIP instead.

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true \
  --server=https://10.154.0.115:6443 \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config set-credentials clusteradmin \
  --client-certificate=/opt/cluster/ssl/kubernetes/kubectl.pem \
  --client-key=/opt/cluster/ssl/kubernetes/kubectl-key.pem \
  --embed-certs=true \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=clusteradmin \
  --kubeconfig=kubernetes/kubectl.kubeconfig

kubectl config use-context default \
  --kubeconfig=kubernetes/kubectl.kubeconfig

mkdir -p /root/.kube
cp /opt/cluster/ssl/kubernetes/kubectl.kubeconfig /root/.kube/config

If it fails, check the logs. kubectl is a client, not a systemd service, so there is no kubectl unit to query. Read the command's own error output and the kube-apiserver logs instead.

journalctl -u kube-apiserver > error.log
vim error.log

2.4 Deploy kube-controller-manager

1. Generate the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.115:6443 \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config set-credentials kube-controller-manager --client-certificate=kubernetes/kube-controller-manager.pem \
  --client-key=kubernetes/kube-controller-manager-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-controller-manager \
  --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-controller-manager.kubeconfig

2. Start

systemctl daemon-reload && \
systemctl enable --now kube-controller-manager.service && \
systemctl status kube-controller-manager.service

Verify

kubectl get componentstatuses

If it fails, check the logs

journalctl -u kube-controller-manager > error.log
vim error.log

2.5 Deploy kube-scheduler

1. Generate the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.115:6443 \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config set-credentials kube-scheduler --client-certificate=kubernetes/kube-scheduler.pem \
  --client-key=kubernetes/kube-scheduler-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-scheduler \
  --kubeconfig=kubernetes/kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-scheduler.kubeconfig

2. Start

systemctl daemon-reload && \
systemctl enable --now kube-scheduler.service && \
systemctl status kube-scheduler.service

Verify

kubectl get cs

If it fails, check the logs

journalctl -u kube-scheduler > error.log
vim error.log
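
`kubectl get cs` prints one row per component. This minimal filter reports anything not `Healthy`, assuming STATUS is the second column of `kubectl get cs --no-headers`. `unhealthy_components` is a hypothetical helper that returns 1 when at least one component is unhealthy.

```shell
#!/usr/bin/env bash
# Read `kubectl get cs --no-headers` output on stdin, print every component whose STATUS
# (second column) is not "Healthy", and return 1 if any such component was found.
unhealthy_components() {
  awk 'NF > 0 && $2 != "Healthy" { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}
```

For example: `kubectl get cs --no-headers | unhealthy_components && echo "all components healthy"`. ComponentStatus has been deprecated since v1.19, so kubectl prints a warning, but it still answers in v1.22.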

2.6 Deploy kubelet

1. Delete the copied certificate and kubeconfig so they are regenerated

rm -rf /opt/cluster/kubelet/kubelet.kubeconfig
rm -rf /opt/cluster/kubelet/ssl/*

2. Generate the kubeconfig file

cd /opt/cluster/ssl

# The kubelet-bootstrap binding already exists in the cluster, so this command is not needed again
#kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.115:6443 \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=$(awk -F "," '{print $1}' /opt/cluster/ssl/kubernetes/kube-apiserver.token.csv) \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap \
  --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kubelet-bootstrap.kubeconfig

3. Write the kubelet.conf configuration file

cd /opt/cluster/ssl
cat > kubernetes/kubelet.conf << "EOF"
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 0
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/cluster/ssl/rootca/rootca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
healthzBindAddress: 127.0.0.1
healthzPort: 10248
rotateCertificates: true
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

4. Start

systemctl daemon-reload && \
systemctl enable --now kubelet.service && \
systemctl status kubelet.service

If it fails, check the logs

journalctl -u kubelet > error.log
vim error.log

5. Approve the certificate

# List the certificate signing requests waiting for approval
kubectl get csr

# Approve the CSR
kubectl certificate approve <CSR_NAME>

# Check the result
kubectl get node
kubectl get pods -n kube-system

2.7 Deploy kube-proxy

1. Generate the kubeconfig file

cd /opt/cluster/ssl

kubectl config set-cluster kubernetes --certificate-authority=/opt/cluster/ssl/rootca/rootca.pem \
  --embed-certs=true --server=https://10.154.0.115:6443 \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=/opt/cluster/ssl/kubernetes/kube-proxy.pem \
  --client-key=/opt/cluster/ssl/kubernetes/kube-proxy-key.pem --embed-certs=true \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy \
  --kubeconfig=kubernetes/kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kubernetes/kube-proxy.kubeconfig

2. Write the kube-proxy configuration file

cat > kubernetes/kube-proxy.conf << "EOF"
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: /opt/cluster/ssl/kubernetes/kube-proxy.kubeconfig
bindAddress: 0.0.0.0
clusterCIDR: "10.97.0.0/16"
healthzBindAddress: "0.0.0.0:10256"
metricsBindAddress: "0.0.0.0:10249"
mode: ipvs
ipvs:
  scheduler: "rr"
EOF

3. Edit the systemd unit file

Change the hostname to k8s-master02

vim /usr/lib/systemd/system/kube-proxy.service
...
--hostname-override=k8s-master02
...

4. Start

systemctl daemon-reload && \
systemctl enable --now kube-proxy.service && \
systemctl status kube-proxy.service

If it fails, check the logs

journalctl -u kube-proxy > error.log
vim error.log

All done! At this point my little laptop couldn't keep up, so I shut down the k8s-node03 machine.

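III. Deploy the Load Balancers

The overall plan also calls for two load balancers, so let's set them up.

This section follows a blog post on the subject. Many thanks to its author; without it, this newbie would still be stuck in the mud.

| Load balancer node | IP |
|---|---|
| k8s-lb01 | 10.154.0.116 |
| k8s-lb02 | 10.154.0.117 |
| VIP | 10.154.0.118 |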

3.1 Install keepalived

Run on both k8s-lb01 and k8s-lb02

# Install Keepalived and LVS (ipvsadm)
yum install keepalived ipvsadm -y

# Set the hostname: k8s-lb01 on the first machine, k8s-lb02 on the second
hostnamectl set-hostname k8s-lb01
hostnamectl set-hostname k8s-lb02

# Disable firewalld and SELinux
systemctl stop firewalld
systemctl disable firewalld
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0

3.2 Create the configuration files

Create the keepalived configuration for the master (MASTER) node; run on k8s-lb01

cat > /etc/keepalived/keepalived.conf << "EOF"
! Configuration File for keepalived
global_defs {
    router_id LVS_DR01
}

vrrp_instance LVS_DR {
    state MASTER
    interface ens33
    mcast_src_ip 10.154.0.116
    advert_int 5
    virtual_router_id 50
    priority 150
    authentication {
        auth_type PASS
        auth_pass tz996
    }
    virtual_ipaddress {
        10.154.0.118/24 dev ens33
    }
}

virtual_server 10.154.0.118 6443 {
    delay_loop 10
    protocol TCP
    lvs_sched rr
    lvs_method DR
    #persistence_timeout 3600
    real_server 10.154.0.111 6443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
    real_server 10.154.0.115 6443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
}
EOF

Create the keepalived configuration for the backup (BACKUP) node; run on k8s-lb02

cat > /etc/keepalived/keepalived.conf << "EOF"
! Configuration File for keepalived
global_defs {
    router_id LVS_DR02
}

vrrp_instance LVS_DR {
    state BACKUP
    interface ens33
    mcast_src_ip 10.154.0.117
    advert_int 5
    virtual_router_id 50
    priority 100
    authentication {
        auth_type PASS
        auth_pass tz996
    }
    virtual_ipaddress {
        10.154.0.118/24 dev ens33
    }
}

virtual_server 10.154.0.118 6443 {
    delay_loop 10
    protocol TCP
    lvs_sched rr
    lvs_method DR
    #persistence_timeout 3600
    real_server 10.154.0.111 6443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
    real_server 10.154.0.115 6443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
}
EOF
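
The two keepalived files above differ only in `router_id`, `state`, `mcast_src_ip` and `priority`. This minimal sketch renders both from those four values and assumes bash. Everything else is hard-coded from this article: interface `ens33`, VIP `10.154.0.118`, `virtual_router_id 50`, the password and the two real servers. `render_keepalived_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Print a keepalived.conf for one load balancer; only the four per-node values are parameters.
render_keepalived_conf() {
  local router_id="$1" state="$2" src_ip="$3" priority="$4" rs
  cat << EOF
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}

vrrp_instance LVS_DR {
    state ${state}
    interface ens33
    mcast_src_ip ${src_ip}
    advert_int 5
    virtual_router_id 50
    priority ${priority}
    authentication {
        auth_type PASS
        auth_pass tz996
    }
    virtual_ipaddress {
        10.154.0.118/24 dev ens33
    }
}

virtual_server 10.154.0.118 6443 {
    delay_loop 10
    protocol TCP
    lvs_sched rr
    lvs_method DR
    #persistence_timeout 3600
EOF
  # One real_server block per master node behind the VIP
  for rs in 10.154.0.111 10.154.0.115; do
    cat << EOF
    real_server ${rs} 6443 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
        }
    }
EOF
  done
  echo "}"
}
```

To use it:

- On k8s-lb01: `render_keepalived_conf LVS_DR01 MASTER 10.154.0.116 150 > /etc/keepalived/keepalived.conf`
- On k8s-lb02: `render_keepalived_conf LVS_DR02 BACKUP 10.154.0.117 100 > /etc/keepalived/keepalived.conf`

Keeping the shared part in one place means a change to the real-server list cannot end up on only one balancer.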

Start keepalived on both machines

systemctl enable --now keepalived && systemctl status keepalived

View the logs

journalctl -u keepalived > error.log
vim error.log

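3.3 Configure the master nodes

Bind the VIP 10.154.0.118 to a loopback interface on every master node in the Kubernetes cluster. In LVS DR mode the load balancer forwards packets by rewriting only the MAC address. The destination IP is still the VIP, so every master must hold that address locally.

Run on all master nodes

# Create a loopback sub-interface and bind the VIP 10.154.0.118 to it
cat > /etc/sysconfig/network-scripts/ifcfg-lo_10 << "EOF"
DEVICE=lo:10
NAME=lo_10
IPADDR=10.154.0.118
NETMASK=255.255.255.255
ONBOOT=yes
EOF

# Set kernel parameters so the masters neither answer nor announce ARP for the VIP on lo
cat > /etc/sysctl.d/k8s_keepalive.conf << "EOF"
net.ipv4.conf.lo.arp_ignore=1
net.ipv4.conf.lo.arp_announce=2
net.ipv4.conf.all.arp_ignore=1
net.ipv4.conf.all.arp_announce=2
EOF
sysctl -p /etc/sysctl.d/k8s_keepalive.conf

# Point the server address in the kubeconfig files at the load balancer VIP
# On master01
sed -i 's#10.154.0.111:6443#10.154.0.118:6443#' /opt/cluster/ssl/kubernetes/*

# On master02
sed -i 's#10.154.0.115:6443#10.154.0.118:6443#' /opt/cluster/ssl/kubernetes/*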
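
The sed commands above rewrite every file in `/opt/cluster/ssl/kubernetes/`. This narrower sketch edits only `*.kubeconfig` files and then prints each file's `server:` line, so any leftover address stands out. It assumes bash and GNU sed. `repoint_kubeconfigs` is a hypothetical helper, and OLD/NEW are `host:port` strings.

```shell
#!/usr/bin/env bash
# Rewrite "https://OLD" to "https://NEW" in every *.kubeconfig file of a directory, then print
# each file's server line. Dots in OLD are not escaped; that is harmless for plain IP addresses.
repoint_kubeconfigs() {
  local dir="$1" old="$2" new="$3" f
  for f in "$dir"/*.kubeconfig; do
    [ -f "$f" ] || continue # the glob matched nothing
    sed -i "s#https://${old}#https://${new}#g" "$f"
  done
  grep -H 'server:' "$dir"/*.kubeconfig 2> /dev/null
}
```

On master01: `repoint_kubeconfigs /opt/cluster/ssl/kubernetes 10.154.0.111:6443 10.154.0.118:6443`.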

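# Reboot all masters
reboot

3.4 Configure the worker nodes

# Point the server address in the kubeconfig files at the load balancer VIP
# My worker nodes were configured with master01's address
sed -i 's#10.154.0.111:6443#10.154.0.118:6443#' /opt/cluster/ssl/kubernetes/*

# Reboot the workers
reboot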

3.5 验证

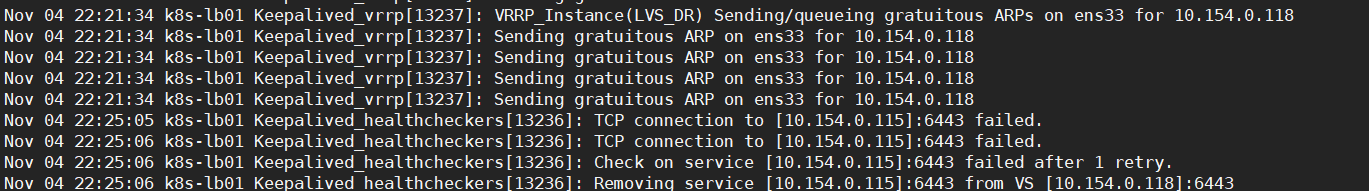

在k8s-lb01上查看日志

journalctl -u keepalived

关闭k8s-master02上的API服务

systemctl stop kube-apiserver.service

可以看到关闭了k8s-master02的API服务,端口6443也关闭了,keepalived连接不上master02的端口就把它移除了
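
You can also observe the removal described above in the LVS table itself. This is a minimal parser for `ipvsadm -Ln` output, assuming its usual layout: a `TCP VIP:PORT scheduler` line followed by `-> RIP:PORT ...` lines. `lvs_real_servers` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Read `ipvsadm -Ln` output on stdin and print the real servers of one virtual service ("VIP:PORT").
lvs_real_servers() {
  awk -v vs="$1" '
    $1 == "TCP" || $1 == "UDP" { current = $2; next } # start of a virtual service block
    $1 == "->" && current == vs { print $2 }           # real server line inside that block
  '
}
```

On k8s-lb01, run `ipvsadm -Ln | lvs_real_servers 10.154.0.118:6443`. Before the test it is expected to list both 10.154.0.111:6443 and 10.154.0.115:6443. Once the TCP_CHECK against master02 has failed, only 10.154.0.111:6443 should remain.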

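IV. Summary

Never again will I dive in without learning the principles first. Deploying Kubernetes from binaries really is rather complex...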

Zhejiang Public Security Network Record No. 33010602011771
