Week 5 Assignment: Convolutional Neural Networks (Part 3)

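I. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

1. Paper: https://arxiv.org/pdf/1704.04861.pdf

2. The paper proposes a network architecture that lets developers choose a small network matched to the resource constraints (latency, model size) of their application. This trade-off is controlled by two global hyperparameters: a width multiplier and a resolution multiplier.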

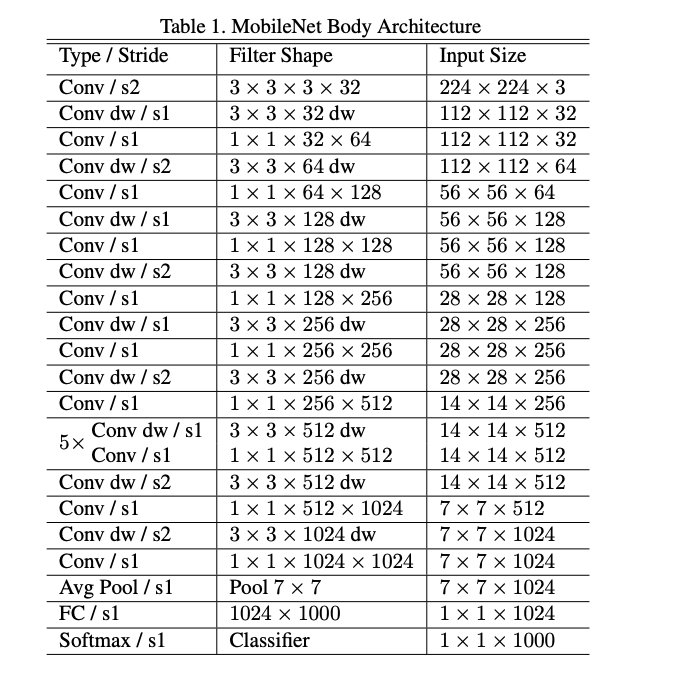

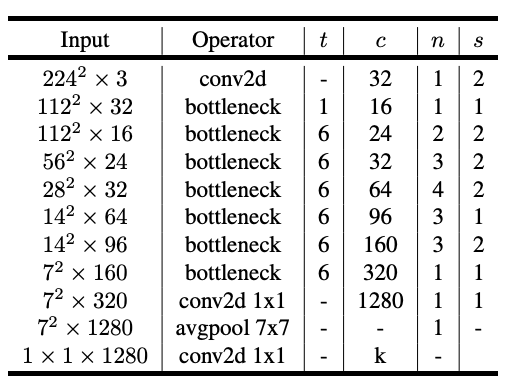

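MobileNet Architecture

The MobileNet model is built on depthwise separable convolutions, which factorize a standard convolution into a depthwise convolution and a 1*1 convolution called a pointwise convolution. The depthwise convolution applies one kernel to each input channel; the pointwise convolution then uses 1*1 convolutions to combine the outputs of the depthwise convolution.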

3. Computational cost:

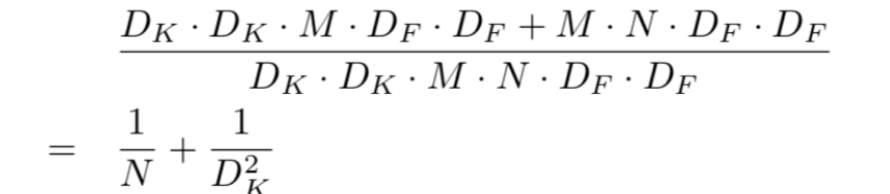

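Let Dk be the kernel width/height, M the number of input channels, N the number of output channels, and Df the width/height of the feature map. Assuming stride 1 and padding (so the output feature map is also Df*Df), a standard convolution costs: Dk*Dk*M*N*Df*Df

A depthwise convolution, with one kernel per input channel, costs: Dk*Dk*M*Df*Df

The pointwise (1*1) convolution adds: M*N*Df*Df

So a depthwise separable convolution costs: Dk*Dk*M*Df*Df + M*N*Df*Df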

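Comparing the two methods, the ratio of the separable cost to the standard cost is:

(Dk*Dk*M*Df*Df + M*N*Df*Df) / (Dk*Dk*M*N*Df*Df) = 1/N + 1/(Dk*Dk)

With the 3*3 kernels used in MobileNet, this means roughly 8 to 9 times less computation than a standard convolution.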
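The cost formulas can be checked numerically. The sketch below plugs in example sizes (Dk=3, M=N=512, Df=14, chosen here purely for illustration, not taken from the post) and confirms that the separable/standard ratio equals 1/N + 1/(Dk*Dk). The function name `conv_costs` is hypothetical.

```python
def conv_costs(Dk, M, N, Df):
    """Multiply-add counts for one layer, stride 1, padding keeps a Df x Df output."""
    standard = Dk * Dk * M * N * Df * Df   # standard convolution
    depthwise = Dk * Dk * M * Df * Df      # one Dk x Dk kernel per input channel
    pointwise = M * N * Df * Df            # 1x1 convolution mixing M -> N channels
    separable = depthwise + pointwise
    return standard, separable, separable / standard

# Illustrative sizes: 3x3 kernels, 512 -> 512 channels, 14x14 feature map
standard, separable, ratio = conv_costs(Dk=3, M=512, N=512, Df=14)
print(standard, separable)   # 462422016 52283392
print(round(1 / ratio, 2))   # 8.84, i.e. between 8x and 9x fewer multiply-adds
```

Because the 1/N term is small for wide layers, the saving is dominated by the 1/(Dk*Dk) term, which is why 3*3 kernels give close to a 9x reduction.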

4. Depthwise convolution and pointwise convolution

Together, the two are called a Depthwise Separable Convolution.

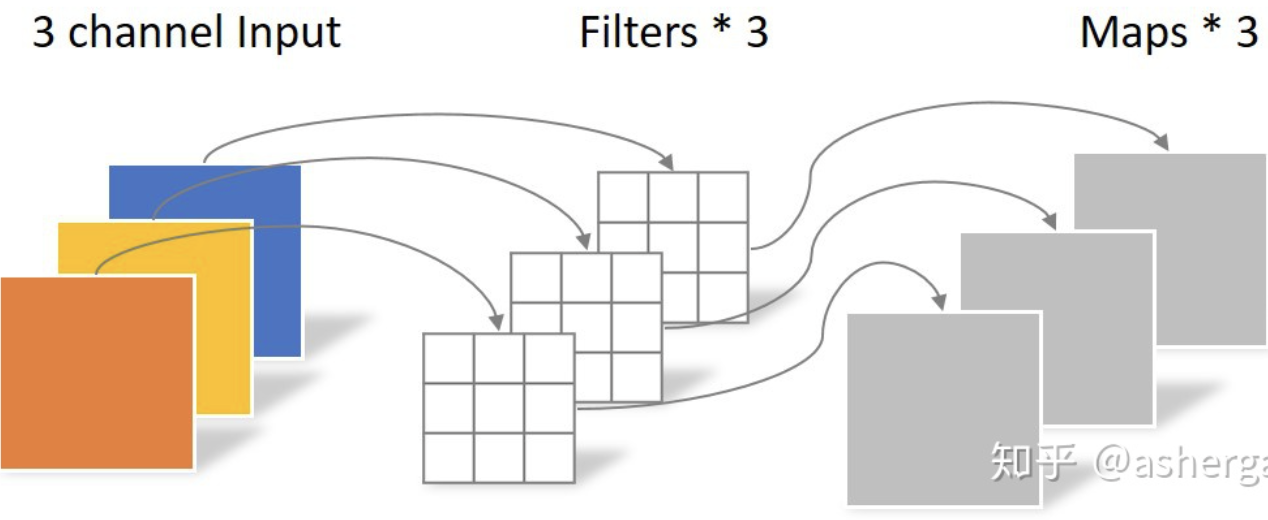

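Depthwise convolution:

Difference from a regular convolution: each kernel is responsible for exactly one channel, and each channel is convolved by exactly one kernel (so the number of kernels, and of output channels, equals the number of channels in the previous layer).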
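To make the one-kernel-per-channel rule concrete, here is a minimal pure-Python sketch with toy values (no padding, stride 1). The helper names `conv2d_single` and `depthwise_conv` are hypothetical. In PyTorch the same operation is typically written as `nn.Conv2d(M, M, kernel_size=3, groups=M)`, where `groups=M` splits the channels into M independent groups.

```python
def conv2d_single(channel, kernel):
    """Valid (no padding), stride-1 2-D cross-correlation of one H x W channel."""
    k = len(kernel)
    H, W = len(channel), len(channel[0])
    return [[sum(channel[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(W - k + 1)]
            for i in range(H - k + 1)]

def depthwise_conv(x, kernels):
    """x: list of M channels; kernels: list of M k x k kernels, one per channel.
    Channel m is convolved only with kernels[m], so the output also has M channels."""
    assert len(x) == len(kernels), "depthwise conv needs one kernel per input channel"
    return [conv2d_single(ch, ker) for ch, ker in zip(x, kernels)]

# Two 3x3 input channels and two 2x2 kernels (toy values)
x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
     [[1, 0, 1], [0, 1, 0], [1, 0, 1]]]
kernels = [[[1, 0], [0, 1]],   # adds each pixel to its lower-right neighbour
           [[0, 1], [1, 0]]]   # adds the two anti-diagonal pixels
y = depthwise_conv(x, kernels)
print(y)  # [[[6, 8], [12, 14]], [[0, 2], [2, 0]]]
```

Note that no output value mixes information from different input channels; that mixing is left entirely to the pointwise step.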

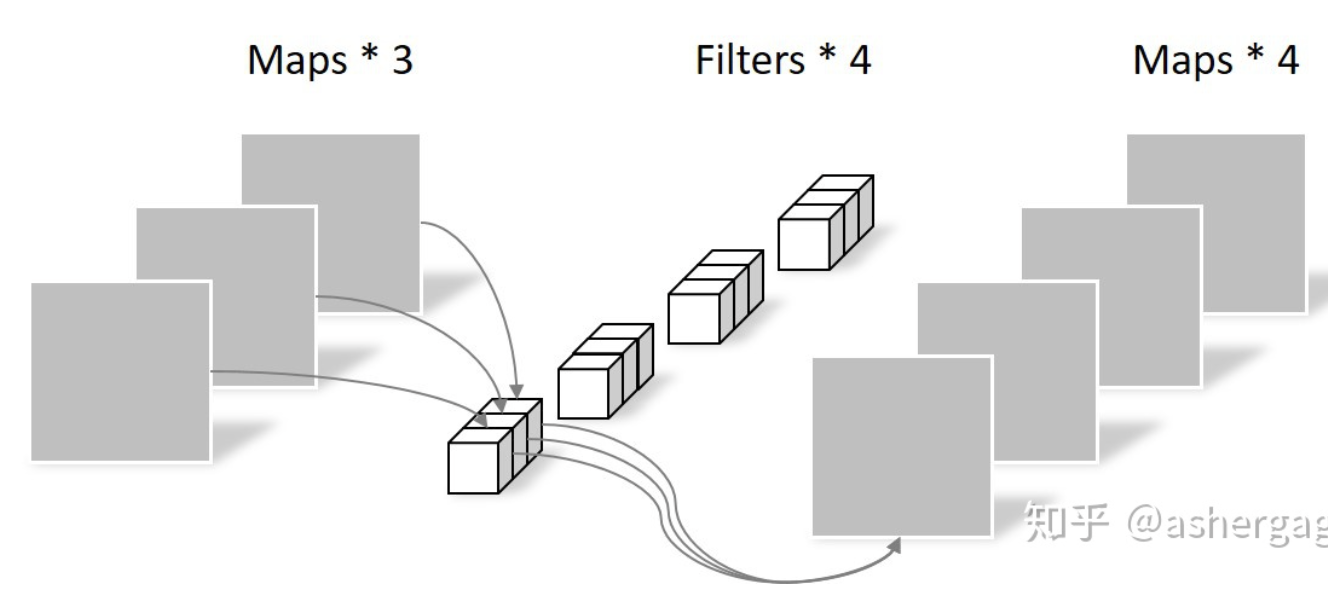

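Pointwise convolution:

Characteristics: the kernel size is 1*1*M (M is the number of channels in the previous layer). This convolution takes a weighted combination of the previous step's feature maps along the depth direction to form new feature maps; there is one output feature map per kernel.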
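A minimal pure-Python sketch of the pointwise step, assuming the input is a list of M channels and each of the N kernels is a list of M weights (a 1*1*M kernel). The function name `pointwise_conv` and the toy values are hypothetical; the point is that each output pixel is a weighted sum across channels at the same spatial position.

```python
def pointwise_conv(x, weights):
    """x: M channels of H x W; weights: N rows of M coefficients (one 1x1xM kernel each).
    Output channel n at (i, j) = sum over m of weights[n][m] * x[m][i][j]."""
    M, H, W = len(x), len(x[0]), len(x[0][0])
    assert all(len(w) == M for w in weights), "each 1x1 kernel must span all M channels"
    return [[[sum(w[m] * x[m][i][j] for m in range(M)) for j in range(W)]
             for i in range(H)]
            for w in weights]

x = [[[1, 2], [3, 4]],        # channel 0
     [[10, 20], [30, 40]]]    # channel 1
weights = [[1, 1],            # kernel 0: channel 0 + channel 1
           [2, -1],           # kernel 1: 2 * channel 0 - channel 1
           [0, 1]]            # kernel 2: copy of channel 1
y = pointwise_conv(x, weights)
print(len(y))  # 3 output channels, one per kernel
print(y[0])    # [[11, 22], [33, 44]]
print(y[1])    # [[-8, -16], [-24, -32]]
```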

Network architecture:

Network code:

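```python
import torch.nn as nn
import torch.nn.functional as F


class Block(nn.Module):
    """Depthwise separable conv: 3x3 depthwise + 1x1 pointwise, each with BN + ReLU."""

    def __init__(self, in_planes, out_planes, stride=1):
        super(Block, self).__init__()
        # groups=in_planes: one 3x3 kernel per input channel (depthwise)
        self.conv1 = nn.Conv2d(in_planes, in_planes, kernel_size=3, stride=stride,
                               padding=1, groups=in_planes, bias=False)
        self.bn1 = nn.BatchNorm2d(in_planes)
        # 1x1 conv combines the depthwise outputs across channels (pointwise)
        self.conv2 = nn.Conv2d(in_planes, out_planes, kernel_size=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_planes)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        return F.relu(self.bn2(self.conv2(out)))


class MobileNetV1(nn.Module):
    # (128, 2) means conv planes=128, stride=2
    # NOTE: the paper repeats the (512, 1) block 5 times; this shortened cfg uses it once
    cfg = [(64, 1), (128, 2), (128, 1), (256, 2), (256, 1), (512, 2), (512, 1),
           (1024, 2), (1024, 1)]

    def __init__(self, num_classes=10):
        super(MobileNetV1, self).__init__()
        self.conv1 = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.layers = self._make_layers(in_planes=32)
        self.linear = nn.Linear(1024, num_classes)

    def _make_layers(self, in_planes):
        layers = []
        for out_planes, stride in self.cfg:
            layers.append(Block(in_planes, out_planes, stride))
            in_planes = out_planes
        return nn.Sequential(*layers)

    def forward(self, x):
        # Assumes 32x32 inputs (e.g. CIFAR-10): four stride-2 blocks leave a 2x2 map
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.layers(out)
        out = F.avg_pool2d(out, 2)
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out
```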
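Why `F.avg_pool2d(out, 2)` and `nn.Linear(1024, num_classes)`? The sketch below traces the spatial size through the `cfg` list of `MobileNetV1`, assuming 32x32 inputs (the `num_classes=10` default suggests CIFAR-10, but that is an assumption). A 3x3 convolution with padding 1 and stride s maps a side length n to (n - 1) // s + 1. The function name `trace_v1` is hypothetical.

```python
# (out_planes, stride) pairs copied from MobileNetV1.cfg
cfg = [(64, 1), (128, 2), (128, 1), (256, 2), (256, 1), (512, 2), (512, 1),
       (1024, 2), (1024, 1)]

def trace_v1(size=32):
    """Spatial size after conv1 (stride 1) and after each depthwise separable block."""
    sizes = [size]                       # conv1: stride 1, padding 1 keeps the size
    for _, stride in cfg:
        size = (size - 1) // stride + 1  # 3x3 depthwise conv, padding 1
        sizes.append(size)
    return sizes

sizes = trace_v1(32)
print(sizes)  # [32, 32, 16, 16, 8, 8, 4, 4, 2, 2]
```

The last block outputs a 2x2x1024 map, so a 2x2 average pool leaves exactly 1024 features per image.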

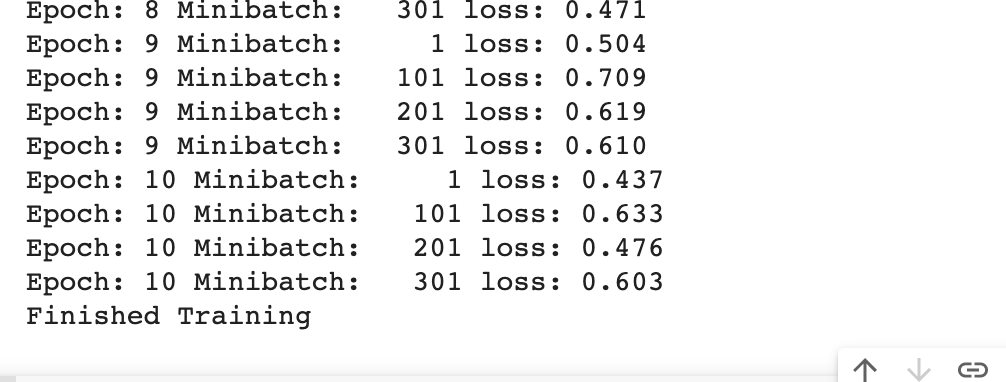

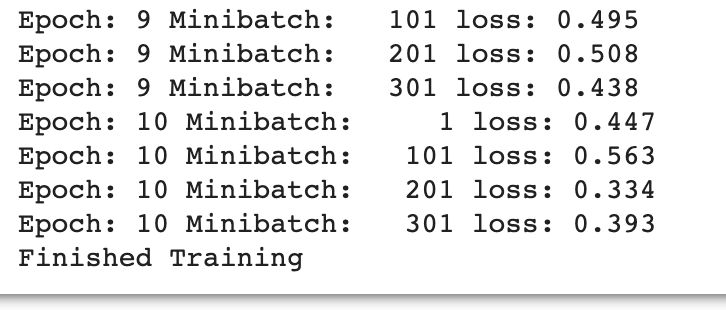

Training results:

Test results:

II. MobileNetV2: Inverted Residuals and Linear Bottlenecks

Paper: https://arxiv.org/pdf/1801.04381.pdf

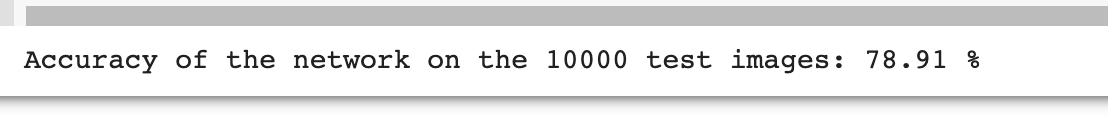

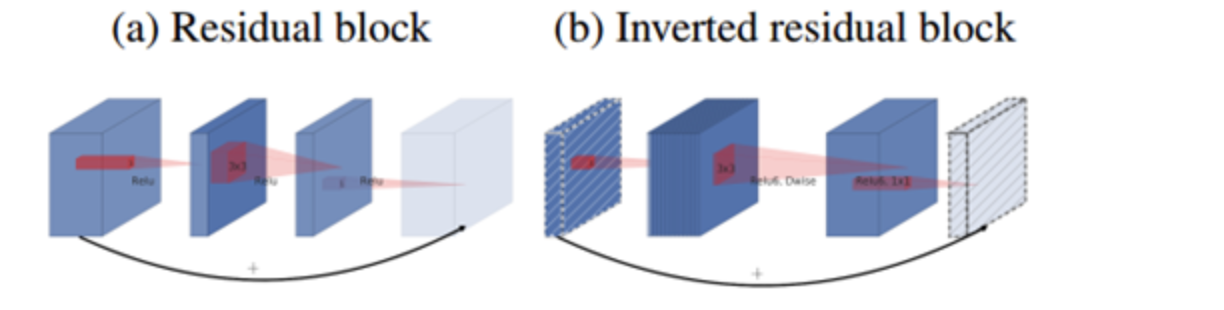

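A traditional residual block first uses a 1*1 convolution to reduce the input dimension, then applies a 3*3 convolution, and finally uses a 1*1 convolution to restore the dimension. The inverted residual block proposed in this paper reverses this: it keeps the residual structure but first expands the channels with a 1*1 convolution, then applies a 3*3 depthwise convolution, and finally projects back down with a 1*1 convolution. The final projection uses a linear activation (no ReLU) to preserve more feature information.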
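The paper gives the cost of a stride-1 inverted residual block on an h x w input as h·w·d'·t·(d' + k² + d''), where d' is the input width, d'' the output width, t the expansion factor and k the depthwise kernel size. The sketch below sums the three layers separately and checks that they match this closed form; the function name and the example sizes are illustrative assumptions.

```python
def inverted_residual_macs(h, w, d_in, d_out, t, k=3):
    """Multiply-adds of one stride-1 inverted residual block on an h x w input."""
    d_mid = t * d_in
    expand = h * w * d_in * d_mid       # 1x1 conv: d_in -> t*d_in, followed by ReLU6
    depthwise = h * w * d_mid * k * k   # k x k depthwise conv on t*d_in channels, ReLU6
    project = h * w * d_mid * d_out     # 1x1 conv: t*d_in -> d_out, linear (no ReLU)
    return expand + depthwise + project

h, w, d_in, d_out, t, k = 56, 56, 24, 24, 6, 3
total = inverted_residual_macs(h, w, d_in, d_out, t, k)
# Same value as the closed form h*w*d'*t*(d' + k^2 + d'')
assert total == h * w * d_in * t * (d_in + k * k + d_out)
print(total)  # 25740288
```

Most of the cost sits in the two 1*1 convolutions; the depthwise layer stays cheap even on the expanded t*d' channels.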

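Structure of the residual block:

Differences from the MobileNetV1 block:

An extra 1*1 expansion layer is added before the depthwise convolution (it raises the channel count so that more features can be captured).

The final 1*1 projection uses a linear activation instead of ReLU.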
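The two differences can be summarized as a layer-by-layer channel trace. This is only a descriptive sketch: the helper names `v1_unit` and `v2_unit` are hypothetical, and the activation labels follow the papers (MobileNetV2 uses ReLU6), whereas the code in this post uses plain `F.relu`.

```python
def v1_unit(c_in, c_out):
    """MobileNetV1 depthwise separable unit as (layer, in_ch, out_ch, activation)."""
    return [("3x3 depthwise", c_in, c_in, "ReLU"),
            ("1x1 pointwise", c_in, c_out, "ReLU")]

def v2_unit(c_in, c_out, t):
    """MobileNetV2 inverted residual unit with expansion factor t."""
    return [("1x1 expand", c_in, t * c_in, "ReLU6"),
            ("3x3 depthwise", t * c_in, t * c_in, "ReLU6"),
            ("1x1 project", t * c_in, c_out, "linear")]

for name, unit in [("V1", v1_unit(24, 32)), ("V2", v2_unit(24, 32, t=6))]:
    print(name)
    for layer, cin, cout, act in unit:
        print(f"  {layer:<14} {cin:>4} -> {cout:<4} {act}")
```

In the V2 unit the depthwise convolution runs on the widened 144-channel tensor, and only the narrow output of the projection is left without a nonlinearity.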

Network architecture:

Network code:

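```python
import torch.nn as nn
import torch.nn.functional as F


class Block(nn.Module):
    """Inverted residual: 1x1 expand -> 3x3 depthwise -> 1x1 linear projection."""

    def __init__(self, in_planes, out_planes, expansion, stride):
        super(Block, self).__init__()
        self.stride = stride
        planes = expansion * in_planes
        self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=1, bias=False)   # expand
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                               padding=1, groups=planes, bias=False)           # depthwise
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, out_planes, kernel_size=1, bias=False)  # project
        self.bn3 = nn.BatchNorm2d(out_planes)
        # 1x1 conv on the shortcut when stride is 1 but channel counts differ
        self.shortcut = nn.Sequential()
        if stride == 1 and in_planes != out_planes:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_planes, out_planes, kernel_size=1, bias=False),
                nn.BatchNorm2d(out_planes))

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))  # linear bottleneck: no ReLU after projection
        # residual connection only when the spatial size is unchanged
        return out + self.shortcut(x) if self.stride == 1 else out


class MobileNetV2(nn.Module):
    # (expansion, out_planes, num_blocks, stride)
    # NOTE: adapted for 32x32 inputs; the paper uses stride 2 for the 24-channel stage
    cfg = [(1, 16, 1, 1),
           (6, 24, 2, 1),
           (6, 32, 3, 2),
           (6, 64, 4, 2),
           (6, 96, 3, 1),
           (6, 160, 3, 2),
           (6, 320, 1, 1)]

    def __init__(self, num_classes=10):
        super(MobileNetV2, self).__init__()
        # stride 1 here (the paper uses stride 2 for 224x224 inputs)
        self.conv1 = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.layers = self._make_layers(in_planes=32)
        self.conv2 = nn.Conv2d(320, 1280, kernel_size=1, stride=1, padding=0, bias=False)
        self.bn2 = nn.BatchNorm2d(1280)
        self.linear = nn.Linear(1280, num_classes)

    def _make_layers(self, in_planes):
        layers = []
        for expansion, out_planes, num_blocks, stride in self.cfg:
            # only the first block of each stage downsamples
            for s in [stride] + [1] * (num_blocks - 1):
                layers.append(Block(in_planes, out_planes, expansion, s))
                in_planes = out_planes
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.layers(out)
        out = F.relu(self.bn2(self.conv2(out)))
        out = F.avg_pool2d(out, 4)  # 32x32 input leaves a 4x4 map at this point
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out
```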
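The `F.avg_pool2d(out, 4)` and `nn.Conv2d(320, 1280, ...)` sizes follow from the `cfg` list of `MobileNetV2`. The sketch below traces the spatial size and channel count, assuming 32x32 inputs and that only the first block of each stage uses the listed stride (as `_make_layers` does). The function name `trace_v2` is hypothetical.

```python
# (expansion, out_planes, num_blocks, stride) copied from MobileNetV2.cfg
cfg = [(1, 16, 1, 1), (6, 24, 2, 1), (6, 32, 3, 2), (6, 64, 4, 2),
       (6, 96, 3, 1), (6, 160, 3, 2), (6, 320, 1, 1)]

def trace_v2(size=32):
    """Return (final spatial size, final channels) for a size x size input."""
    channels = 32                                     # conv1: 3 -> 32, stride 1
    for _, out_planes, num_blocks, stride in cfg:
        for s in [stride] + [1] * (num_blocks - 1):   # only the first block strides
            size = (size - 1) // s + 1                # 3x3 depthwise conv, padding 1
        channels = out_planes
    return size, channels

print(trace_v2(32))  # (4, 320)
```

So `conv2` receives a 4x4x320 map, widens it to 1280 channels, and the 4x4 average pool reduces it to the 1280 features expected by `self.linear`.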

Code test:

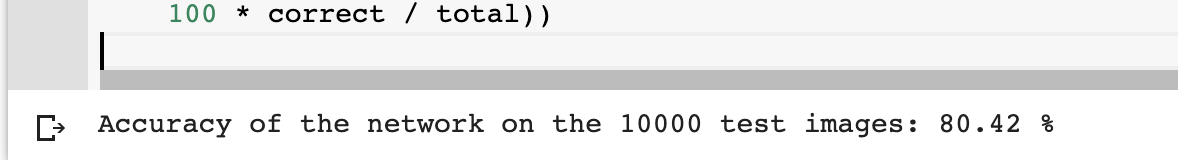

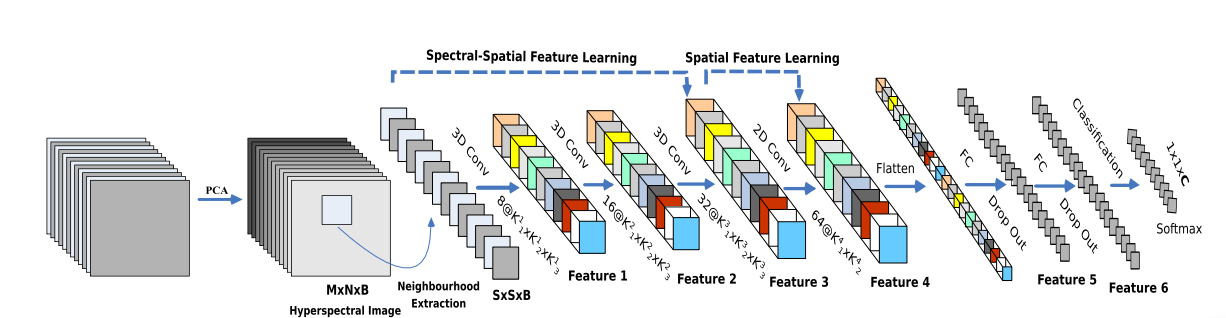

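III. HybridSN: Exploring 3-D–2-D CNN Feature Hierarchy for Hyperspectral Image Classification

Paper: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8736016

Hyperspectral images: a hyperspectral image is made up of many channels (spectral bands). How many depends on the spectral resolution of the sensor, and each channel captures light at a specific wavelength.

The paper proposes a hybrid spectral CNN (HybridSN) for hyperspectral image (HSI) classification. Using only a 2-D CNN or only a 3-D CNN each has drawbacks: HSIs are volumetric data, so 2-D convolutions alone cannot extract good discriminative features along the spectral dimension, while a pure 3-D CNN is computationally expensive. Combining 3-D CNN and 2-D CNN layers makes full use of both spectral and spatial feature maps to maximize accuracy.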

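Differences between 2-D and 3-D convolution:

Input: a 2-D convolution takes a 2-D image (per channel), while a 3-D convolution takes a 3-D volume with an extra depth dimension.

Kernel: a 2-D kernel can be written as (c, k_h, k_w), while a 3-D kernel is (c, k_d, k_h, k_w), i.e. it has an extra k_d dimension and also slides along the depth axis.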
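The effect of the extra k_d dimension shows up in the output shape. The sketch below computes output shapes for unpadded, stride-1 convolutions: a 2-D kernel spans all c input channels and sums them away, while a 3-D kernel also slides along depth, so a depth axis survives. The function names and the 30-band 25x25 example are assumptions chosen for illustration.

```python
def valid_out(size, k, stride=1):
    """Output length along one axis for an unpadded convolution."""
    return (size - k) // stride + 1

def conv2d_out(in_shape, out_channels, k_h, k_w):
    """in_shape = (c, h, w); each kernel is (c, k_h, k_w), so the c axis is summed away."""
    c, h, w = in_shape
    return (out_channels, valid_out(h, k_h), valid_out(w, k_w))

def conv3d_out(in_shape, out_channels, k_d, k_h, k_w):
    """in_shape = (c, d, h, w); each kernel is (c, k_d, k_h, k_w) and slides along depth too."""
    c, d, h, w = in_shape
    return (out_channels, valid_out(d, k_d), valid_out(h, k_h), valid_out(w, k_w))

# A 30-band 25x25 patch viewed two ways (sizes assumed for illustration)
print(conv2d_out((30, 25, 25), 8, 3, 3))        # (8, 23, 23): spectral bands collapsed
print(conv3d_out((1, 30, 25, 25), 8, 7, 3, 3))  # (8, 24, 23, 23): spectral axis kept
```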

2-D convolution vs. 3-D convolution:

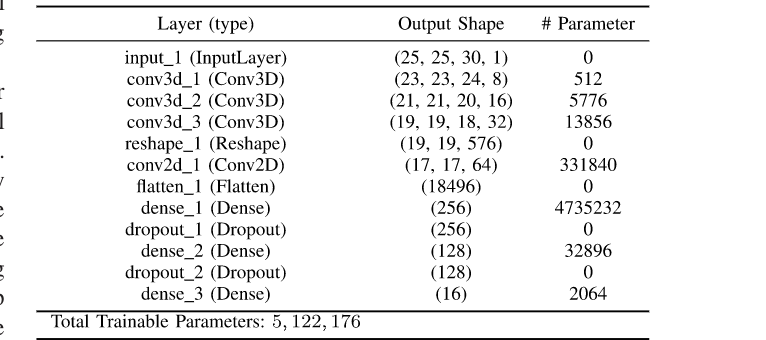

Network architecture:

Network code:

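```python
import torch.nn as nn
import torch.nn.functional as F


class HybridSN(nn.Module):
    # Assumes input patches of shape (batch, 1, 30, 25, 25): 30 spectral bands and a
    # 25x25 spatial window, as implied by the 576 and 18496 sizes below.
    # class_num=16 is an assumed default (e.g. Indian Pines has 16 classes).
    def __init__(self, class_num=16):
        super(HybridSN, self).__init__()
        # 3-D convolution part: conv1 -- conv3
        self.conv1 = nn.Conv3d(1, 8, (7, 3, 3), stride=1, padding=0)
        self.conv2 = nn.Conv3d(8, 16, (5, 3, 3), stride=1, padding=0)
        self.conv3 = nn.Conv3d(16, 32, (3, 3, 3), stride=1, padding=0)
        # 2-D convolution part: conv4 (32 channels x 18 remaining bands = 576)
        self.conv4 = nn.Conv2d(576, 64, kernel_size=3, stride=1, padding=0)
        # fully connected layers
        self.fc1 = nn.Linear(18496, 256)  # 64 * 17 * 17
        self.dropout1 = nn.Dropout(p=0.4)
        self.fc2 = nn.Linear(256, 128)
        self.dropout2 = nn.Dropout(p=0.4)
        # final classifier
        self.fc3 = nn.Linear(128, class_num)

    def forward(self, x):
        # ReLU after each conv layer; without it conv1-conv3 reduce to one linear map
        out = F.relu(self.conv1(x))
        out = F.relu(self.conv2(out))
        out = F.relu(self.conv3(out))
        # merge the channel and spectral axes so the 2-D conv sees 576 feature maps
        out = out.reshape(out.shape[0], 576, 19, 19)
        out = F.relu(self.conv4(out))
        out = out.view(out.shape[0], -1)
        out = self.dropout1(F.relu(self.fc1(out)))
        out = self.dropout2(F.relu(self.fc2(out)))
        return self.fc3(out)
```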
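The hard-coded sizes 576 (for `conv4`) and 18496 (for `fc1`) come from tracing shapes through the unpadded convolutions. The sketch below reproduces that trace, assuming a 1x30x25x25 input patch (30 spectral bands, 25x25 window); those input sizes are an assumption, not stated in the post. The function name `hybridsn_shapes` is hypothetical.

```python
def hybridsn_shapes(bands=30, patch=25):
    """Trace tensor shapes (without the batch axis) through the HybridSN layers."""
    c, d, h, w = 1, bands, patch, patch            # single input channel
    shapes = []
    for c_out, (kd, kh, kw) in [(8, (7, 3, 3)), (16, (5, 3, 3)), (32, (3, 3, 3))]:
        c, d, h, w = c_out, d - kd + 1, h - kh + 1, w - kw + 1  # valid Conv3d, stride 1
        shapes.append((c, d, h, w))
    shapes.append((c * d, h, w))                   # fold channels x depth into 2-D channels
    h, w = h - 3 + 1, w - 3 + 1                    # conv4: valid 3x3 Conv2d -> 64 channels
    shapes.append((64, h, w))
    shapes.append((64 * h * w,))                   # flattened input size of fc1
    return shapes

for s in hybridsn_shapes():
    print(s)
# (8, 24, 23, 23)
# (16, 20, 21, 21)
# (32, 18, 19, 19)
# (576, 19, 19)
# (64, 17, 17)
# (18496,)
```

If the number of bands or the patch size changes, the reshape and `fc1` sizes in the network must be recomputed the same way.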

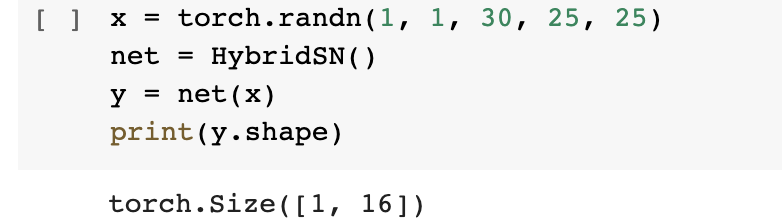

Code test:

Checking that a forward pass through the network succeeds:

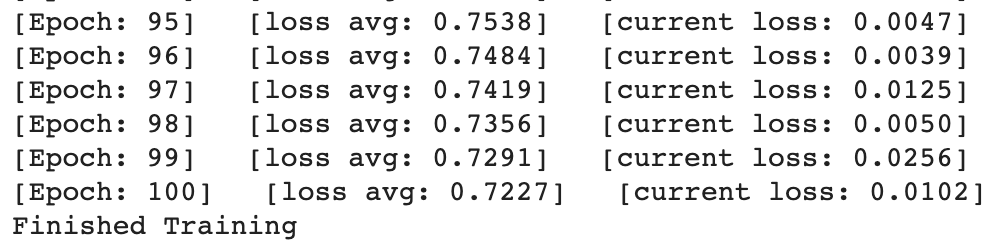

Training results:

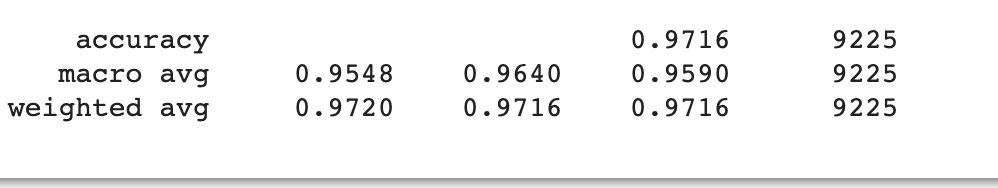

Test results:


浙公网安备 33010602011771号
