RKNN-Toolkit Lite2 Explained: Tool Details and Practical Deployment

I. Introduction to RKNN-Toolkit Lite2

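In an earlier blog post I gave a brief introduction to RKNN-Toolkit Lite2. RKNN-Toolkit Lite2 runs model inference on embedded platforms. Its main job is to deploy RKNN models that have already been converted, and it calls them through a Python API. Rockchip provides two ways to deploy models on its embedded platforms:

- RKNPU, which uses a C/C++ API.
- RKNN-Toolkit Lite2, which uses a Python API.

RKNN-Toolkit Lite2 gives users a Python interface for on-board inference, which makes it convenient to develop AI applications in Python. This post covers RKNN-Toolkit Lite2 in detail.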

The RKNN-Toolkit Lite2 code lives in Rockchip's AI GitHub repository, https://github.com/airockchip/rknn-toolkit2. You can clone it or download it as a ZIP archive. Its contents are:

| Folder/file | Purpose |
|---|---|
| CHANGELOG.TXT | Records the tool's version history |
| example | Example projects for RKNN-Toolkit Lite2 |
| packages | Python wheel (.whl) installers for RKNN-Toolkit Lite2 and dependency files |

II. Environment Setup

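Setting up RKNN-Toolkit Lite2 is similar to setting up RKNN-Toolkit2, except that the platform is now the embedded board. The setup has two parts:

1. Build the Python environment: install Python and the required dependency packages.
2. Install the RKNN-Toolkit Lite2 wheel.

This post uses an RK3576 board flashed with Debian. A Buildroot image may lack some system dependencies, which can cause hard-to-diagnose problems later. I have not investigated the Buildroot case in depth.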

2.1 Setting up the RKNN-Toolkit Lite2 environment

1. Installing Miniconda

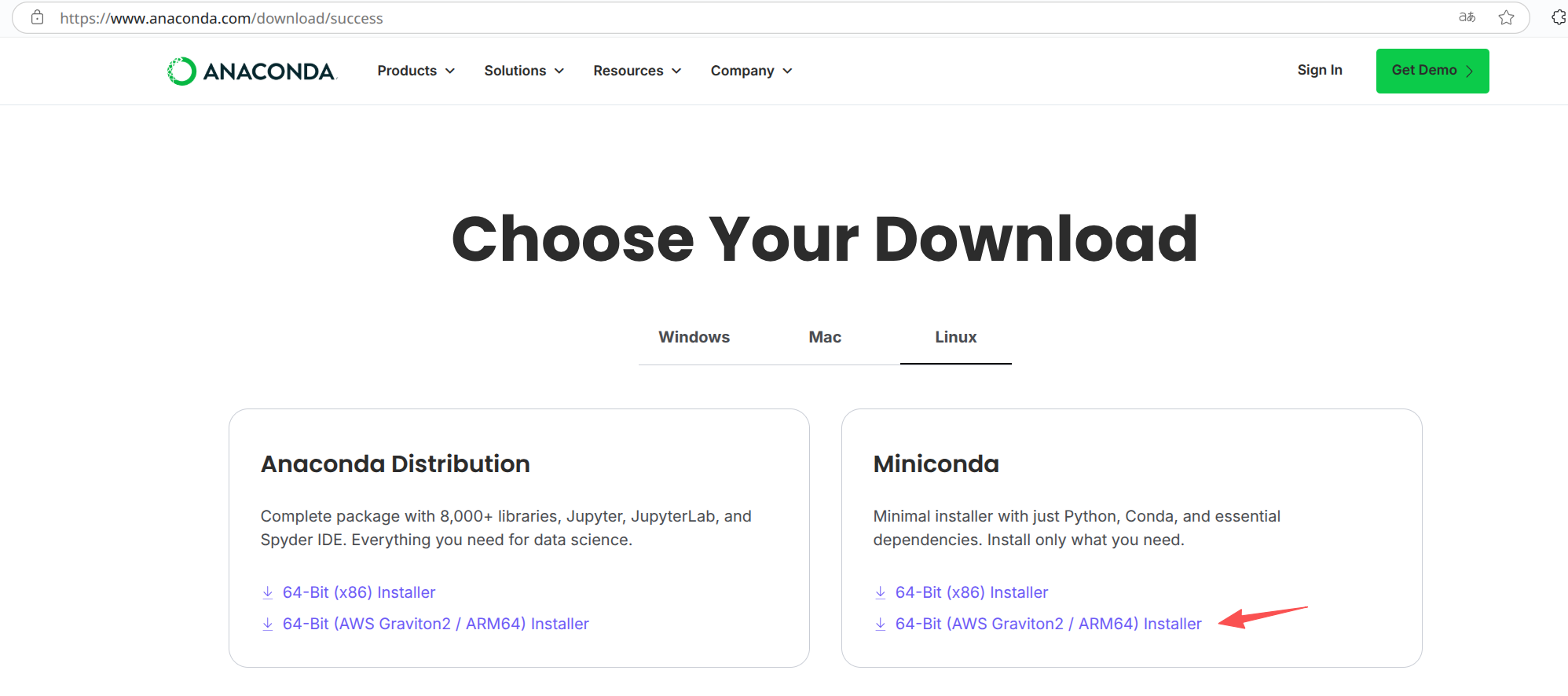

Download Miniconda from the Anaconda website. Miniconda is installed on the RK3576 itself, so choose the aarch64 (ARM64) installer.

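Upload the downloaded Miniconda installer script (.sh) to the board and run it. The remaining steps are the same as installing Miniconda on x86 and are not repeated here. See my earlier RKNN-Toolkit2 post for details.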

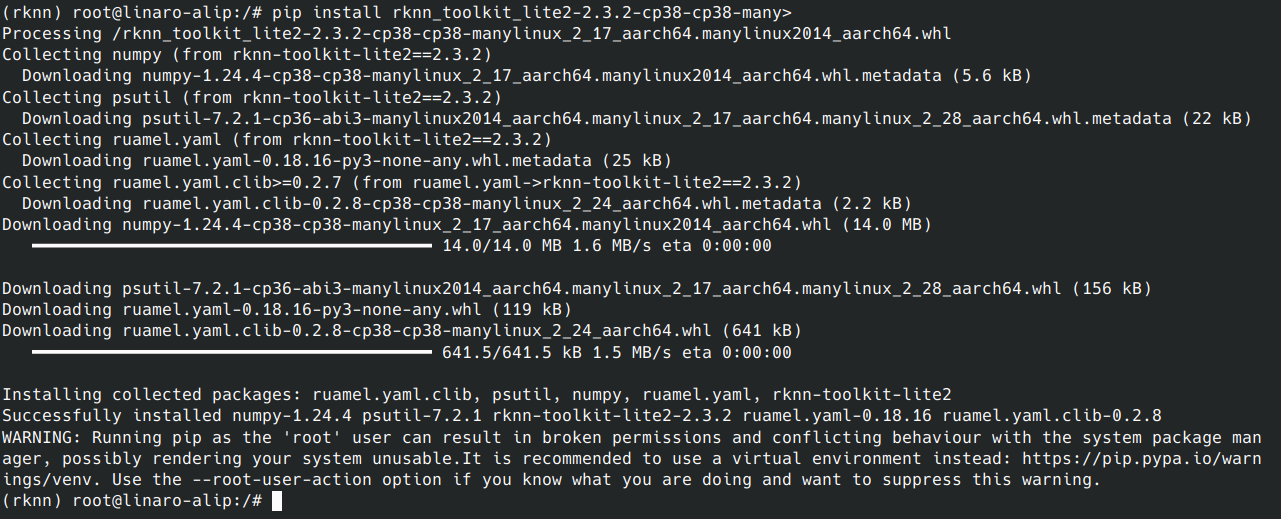

2. Installing the RKNN-Toolkit Lite2 wheel

Find the official RKNN-Toolkit Lite2 wheel in the SDK. It installs the RKNN-Toolkit Lite2 Python module and is located under rknn-toolkit2-master/rknn-toolkit-lite2/packages/.
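Before installing, it can help to confirm that the wheel fits the interpreter on the board. A wheel filename encodes the CPython version (`cp38`) and the CPU architecture (`aarch64`) it was built for, and pip rejects a wheel whose tags do not match. The sketch below is a minimal example that assumes the standard `name-version-pythontag-abitag-platformtag.whl` naming with no optional build tag. `parse_wheel_name` and `wheel_matches_interpreter` are hypothetical helpers, not part of RKNN-Toolkit Lite2.

```python
import platform
import sys


def parse_wheel_name(filename: str) -> dict:
    """Split a wheel filename into its tags (assumes no optional build tag)."""
    if not filename.endswith('.whl'):
        raise ValueError('not a wheel file: ' + filename)
    parts = filename[:-len('.whl')].split('-')
    if len(parts) != 5:
        raise ValueError('unexpected wheel name: ' + filename)
    name, version, python_tag, abi_tag, platform_tag = parts
    return {
        'name': name,
        'version': version,
        'python_tag': python_tag,
        'abi_tag': abi_tag,
        # A dotted platform tag lists several compatible platforms
        'platforms': platform_tag.split('.'),
    }


def wheel_matches_interpreter(filename: str) -> bool:
    """True if the wheel's CPython tag and CPU architecture match this interpreter."""
    info = parse_wheel_name(filename)
    current_tag = 'cp{}{}'.format(sys.version_info.major, sys.version_info.minor)
    machine = platform.machine()  # e.g. 'aarch64' on an RK3576 running Debian
    arch_ok = any(p.endswith('_' + machine) for p in info['platforms'])
    return info['python_tag'] == current_tag and arch_ok


if __name__ == '__main__':
    whl = 'rknn_toolkit_lite2-2.3.2-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl'
    print(parse_wheel_name(whl))
    print('matches this interpreter:', wheel_matches_interpreter(whl))
```

If the check returns False, either create a conda environment with the Python version named in the tag, or pick a wheel whose tag matches the existing environment.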

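Activate a conda environment that has been installed and configured (see the earlier RKNN-Toolkit2 post for conda setup), then run the command below. The cp38 tags in the filename mean this wheel is built for CPython 3.8, so the environment's Python version must match. If the packages folder offers wheels for other Python versions, pick the one whose tag matches your interpreter.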

```shell
pip install rknn_toolkit_lite2-2.3.2-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl
```

Once installation finishes, the output looks like this:

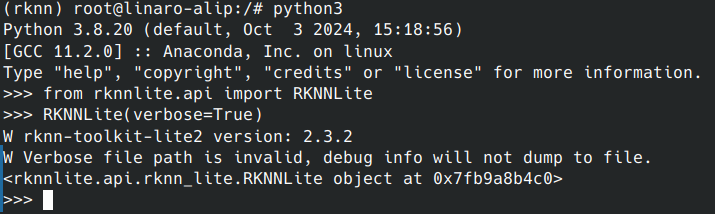

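Run the following to check that the RKNN-Toolkit Lite2 module was installed successfully:

```shell
# First start python3 inside the conda environment
python3
```

```python
# Then enter the following at the Python prompt
from rknnlite.api import RKNNLite
RKNNLite(verbose=True)
```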

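If the RKNN-Toolkit Lite2 version is printed (for example `W rknn-toolkit-lite2 version: 2.3.2`, as in the run log later in this post), the environment is ready. You can now deploy Python inference programs on the board.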
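When this check is scripted, for example across several boards, it helps to report the interpreter version and CPU architecture alongside the import result. The sketch below uses only the standard library. `check_rknnlite_env` is a hypothetical helper. With `construct=True` it performs the same `RKNNLite(verbose=True)` check as above, which prints the toolkit version, and only does so when the `rknnlite` package is importable.

```python
import importlib.util
import platform
import sys


def check_rknnlite_env(construct: bool = False) -> dict:
    """Collect basic facts about the Python environment used for RKNN-Toolkit Lite2."""
    report = {
        'python': '{}.{}.{}'.format(*sys.version_info[:3]),
        'machine': platform.machine(),  # expected to be 'aarch64' on the board
        # find_spec returns None when the top-level package cannot be found
        'rknnlite_installed': importlib.util.find_spec('rknnlite') is not None,
    }
    if construct and report['rknnlite_installed']:
        from rknnlite.api import RKNNLite
        lite = RKNNLite(verbose=True)  # prints the rknn-toolkit-lite2 version
        lite.release()
    return report


if __name__ == '__main__':
    for key, value in check_rknnlite_env(construct=True).items():
        print('{}: {}'.format(key, value))
```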

III. Using RKNN-Toolkit Lite2 and Deploying an RKNN Model

Some RKNN-Toolkit Lite2 APIs differ from those in RKNN-Toolkit2. When writing applications, keep the following two points in mind.

1. The imported module is different

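```python
import numpy as np
import cv2

# RKNN-Toolkit Lite2 imports RKNNLite (RKNN-Toolkit2 imports RKNN from rknn.api)
from rknnlite.api import RKNNLite

# Print detailed logs to the screen and also write them to inference.log
rknn_lite = RKNNLite(verbose=True, verbose_file='./inference.log')
rknn_lite.release()

# Print detailed logs to the screen only
rknn_lite = RKNNLite(verbose=True)
rknn_lite.release()
```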
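Every RKNNLite object should have `release()` called on it, even when loading or inference raises an exception. The sketch below is a minimal example built on `contextlib.contextmanager`. `rknn_session` is a hypothetical helper that wraps any object exposing a `release()` method. The factory argument lets the same helper wrap `RKNNLite` on the board, or a stand-in object when testing elsewhere.

```python
from contextlib import contextmanager


@contextmanager
def rknn_session(factory, **kwargs):
    """Create a runtime object via factory(**kwargs) and always call release() on it."""
    obj = factory(**kwargs)
    try:
        yield obj
    finally:
        obj.release()  # runs on normal exit and when an exception propagates


# Usage on the board (requires rknn-toolkit-lite2):
#   from rknnlite.api import RKNNLite
#   with rknn_session(RKNNLite, verbose=True, verbose_file='./inference.log') as lite:
#       lite.load_rknn('./resnet_18.rknn')
#       ...
```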

2. init_runtime takes different arguments

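With RKNN-Toolkit Lite2 the program already runs on the board. As a result, init_runtime is called without the target argument that RKNN-Toolkit2 uses. Instead, core_mask selects which NPU core(s) run the model; RKNNLite.NPU_CORE_AUTO lets the runtime choose automatically.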

```python
# Initialize the runtime environment
ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_AUTO)
if ret != 0:
    print('Init runtime environment failed')
    exit(ret)
```
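On multi-core NPUs, core_mask decides where the model runs. NPU_CORE_AUTO lets the runtime pick a core, while a combined mask such as NPU_CORE_0_1 uses several cores for one model. The sketch below assumes the following, none of which is confirmed by this post:

- The SoC can be identified from the NUL-separated strings in `/proc/device-tree/compatible` (e.g. `rockchip,rk3576`).
- RK3576 has two NPU cores and RK3588 has three.
- Single-core chips are initialized without core_mask.

`detect_soc` and `choose_core_mask_name` are hypothetical helpers. They return the *name* of an RKNNLite constant, which is looked up with `getattr` only on the board.

```python
import re
from typing import Optional

COMPATIBLE_NODE = '/proc/device-tree/compatible'
# Assumed mapping: SoC -> RKNNLite constant that enables all of its NPU cores
MULTI_CORE_MASKS = {
    'rk3576': 'NPU_CORE_0_1',
    'rk3588': 'NPU_CORE_0_1_2',
}


def detect_soc(compatible: bytes) -> Optional[str]:
    """Return e.g. 'rk3576' from device-tree compatible data, or None if not Rockchip."""
    match = re.search(rb'rockchip,(rk\d{4})', compatible)
    return match.group(1).decode('ascii') if match else None


def choose_core_mask_name(soc: Optional[str], use_all_cores: bool = False) -> Optional[str]:
    """Name of the RKNNLite core_mask constant to use, or None to omit core_mask."""
    if soc not in MULTI_CORE_MASKS:
        return None  # assumed single-core NPU: call init_runtime() without core_mask
    return MULTI_CORE_MASKS[soc] if use_all_cores else 'NPU_CORE_AUTO'


if __name__ == '__main__':
    try:
        with open(COMPATIBLE_NODE, 'rb') as f:
            soc = detect_soc(f.read())
    except OSError:
        soc = None  # not running on a device-tree based board
    print('SoC:', soc, '-> core_mask:', choose_core_mask_name(soc))
    # On the board:
    #   name = choose_core_mask_name(soc)
    #   kwargs = {'core_mask': getattr(RKNNLite, name)} if name else {}
    #   ret = rknn_lite.init_runtime(**kwargs)
```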

A complete example:

```python
import sys

import cv2
import numpy as np
from rknnlite.api import RKNNLite


def show_outputs(output):
    # Sort class indices by score, highest first
    index = sorted(range(len(output)), key=lambda k: output[k], reverse=True)
    # labels.txt: one label per line; the text after the last ':' is the class name
    with open('./labels.txt', 'r') as fp:
        labels = fp.readlines()
    top5_str = 'resnet18\n-----TOP 5-----\n'
    for i in range(5):
        value = output[index[i]]
        if value > 0:
            topi = '[{:>3d}] score:{:.6f} class:"{}"\n'.format(
                index[i], value, labels[index[i]].strip().split(':')[-1])
        else:
            topi = '[ -1]: 0.0\n'
        top5_str += topi
    print(top5_str.strip())


def show_perfs(perfs):
    perfs = 'perfs: {}\n'.format(perfs)
    print(perfs)


def softmax(x):
    # Subtract the max before exponentiating for numerical stability
    e = np.exp(x - np.max(x))
    return e / np.sum(e)


if __name__ == '__main__':
    # Create the RKNNLite object
    rknn = RKNNLite(verbose=True)

    # Load the RKNN model converted on the PC with RKNN-Toolkit2
    ret = rknn.load_rknn(path='./resnet_18.rknn')
    if ret != 0:
        print('Load RKNN model failed!')
        sys.exit(ret)

    # Set inputs: OpenCV loads BGR, the model expects RGB; add a batch dim -> (1, 224, 224, 3)
    img = cv2.imread('./space_shuttle_224.jpg')
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    img = np.expand_dims(img, 0)

    # Init runtime environment
    print('--> Init runtime environment')
    ret = rknn.init_runtime(core_mask=RKNNLite.NPU_CORE_AUTO)
    if ret != 0:
        print('Init runtime environment failed!')
        sys.exit(ret)
    print('done')

    # Inference: outputs[0] has shape (1, 1000); take the first batch item
    print('--> Running model')
    outputs = rknn.inference(inputs=[img])
    show_outputs(softmax(np.array(outputs[0][0])))
    print('done')

    rknn.release()
```
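The script relies on two data conventions:

- The input is a uint8 RGB image in NHWC layout with a batch dimension, (1, 224, 224, 3).
- The (1, 1000) output is turned into probabilities with softmax before ranking.

The verbose log reports `enable argb mode, dtype: UINT8` and `normalize target: NPU`. This suggests that the mean/std normalization configured at conversion time runs on the NPU, so raw pixels are passed in. Below is a minimal NumPy-only sketch of both steps. It reverses channels by slicing instead of calling cv2.cvtColor and ranks scores with `np.argsort`. `preprocess` and `top_k` are hypothetical helpers, and the fixed 224x224 size is an assumption taken from this example.

```python
import numpy as np

INPUT_SIZE = 224  # ResNet18 input resolution used in this example


def preprocess(img_bgr: np.ndarray) -> np.ndarray:
    """HxWx3 uint8 BGR image -> (1, H, W, 3) uint8 RGB batch in NHWC layout."""
    if img_bgr.shape != (INPUT_SIZE, INPUT_SIZE, 3):
        raise ValueError('expected a {0}x{0}x3 image, got {1}'.format(INPUT_SIZE, img_bgr.shape))
    rgb = img_bgr[..., ::-1]  # reverse the channel axis: BGR -> RGB
    # Slicing gives a negative-stride view; copy into a contiguous uint8 buffer
    rgb = np.ascontiguousarray(rgb, dtype=np.uint8)
    return np.expand_dims(rgb, 0)


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - np.max(x))  # shift by the max to avoid overflow
    return e / np.sum(e)


def top_k(scores: np.ndarray, k: int = 5):
    """Return [(class_index, probability), ...] sorted by probability, highest first."""
    probs = softmax(np.asarray(scores, dtype=np.float64).reshape(-1))
    order = np.argsort(probs)[::-1][:k]
    return [(int(i), float(probs[i])) for i in order]


if __name__ == '__main__':
    dummy = np.zeros((INPUT_SIZE, INPUT_SIZE, 3), dtype=np.uint8)
    dummy[..., 0] = 255  # pure blue in BGR order
    batch = preprocess(dummy)
    print(batch.shape, batch.dtype, batch[0, 0, 0])  # blue moves to the last (B) channel
    print(top_k(np.array([[0.1, 2.0, -1.0, 0.5]]), k=2))
```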

Use ADB to upload the model and the other files the example needs to the board. Then activate the conda environment and run the program:

```
wzy@wzy-JiaoLong:~/rknn/rknn-toolkit2-master/rknn-toolkit2/examples/pytorch/resnet18$ adb shell
root@linaro-alip:/# cd rknn/
root@linaro-alip:/rknn# conda activate rknn
(rknn) root@linaro-alip:/rknn# ls
labels.txt resnet_18.rknn space_shuttle_224.jpg test_rknn_lite2.py
(rknn) root@linaro-alip:/rknn# python3 test_rknn_lite2.py
```

Below is the output of the official example on the RK3576 board:

```
(rknn) root@linaro-alip:/rknn# python3 test_rknn_lite2.py
W rknn-toolkit-lite2 version: 2.3.2
W Verbose file path is invalid, debug info will not dump to file.
--> Init runtime environment
D target set by user is: None
D Starting ntp or adb, target soc is RK3576, device id is: None
I RKNN: [03:36:13.388] RKNN Runtime Information, librknnrt version: 2.3.2 (429f97ae6b@2025-04-09T09:09:27)
I RKNN: [03:36:13.388] RKNN Driver Information, version: 0.9.8
I RKNN: [03:36:13.388] RKNN Model Information, version: 6, toolkit version: 2.3.2(compiler version: 2.3.2 (e045de294f@2025-04-07T19:48:25)), target: RKNPU f2, target platform: rk3576, framework name: PyTorch, framework layout: NCHW, model inference type: static_shape
D RKNN: [03:36:13.389] allocated memory, name: task, virt addr: 0x7fb7ea5000, dma addr: 0xfffff000, obj addr: 0xffffff80c921a000, size: 4080, aligned size: 4096, fd: 4, handle: 1, flags: 0x40b, gem name: 1, iommu domain id: 0
D RKNN: [03:36:13.406] allocated memory, name: weight, virt addr: 0x7f9a0a6000, dma addr: 0xff495000, obj addr: 0xffffff80c9218400, size: 11968000, aligned size: 11968512, fd: 5, handle: 2, flags: 0x403, gem name: 2, iommu domain id: 0
D RKNN: [03:36:13.421] subgraph0 regcmdbuffer size: 110464, taskbuffer size: 4080
D RKNN: [03:36:13.423] allocated memory, name: internal, virt addr: 0x7fb420b000, dma addr: 0xff37b000, obj addr: 0xffffff80c921ec00, size: 1154048, aligned size: 1155072, fd: 6, handle: 3, flags: 0x403, gem name: 3, iommu domain id: 0
D RKNN: [03:36:13.424] -------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.424] Feature Tensor Information Table
D RKNN: [03:36:13.424] ---------------------------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] ID User Tensor DataType DataFormat OrigShape NativeShape | [Start End) Size
D RKNN: [03:36:13.424] ---------------------------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] 1 ConvRelu x.3 INT8 NC1HWC2 (1,3,224,224) (1,1,224,224,3) | 0xff37b000 0xff39fc00 0x00024c00
D RKNN: [03:36:13.424] 2 MaxPool 82 INT8 NC1HWC2 (1,64,112,112) (1,4,112,112,16) | 0xff39fc00 0xff463c00 0x000c4000
D RKNN: [03:36:13.424] 3 ConvRelu input.13 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff463c00*0xff494c00 0x00031000
D RKNN: [03:36:13.424] 4 ConvAddRelu 120 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff37b000 0xff3ac000 0x00031000
D RKNN: [03:36:13.424] 4 ConvAddRelu input.13 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff463c00*0xff494c00 0x00031000
D RKNN: [03:36:13.424] 5 ConvRelu 142 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff3ac000 0xff3dd000 0x00031000
D RKNN: [03:36:13.424] 6 ConvAddRelu 169 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff37b000 0xff3ac000 0x00031000
D RKNN: [03:36:13.424] 6 ConvAddRelu 142 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff3ac000 0xff3dd000 0x00031000
D RKNN: [03:36:13.424] 7 ConvRelu 191 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff3dd000 0xff40e000 0x00031000
D RKNN: [03:36:13.424] 8 Conv 222 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff37b000 0xff393800 0x00018800
D RKNN: [03:36:13.424] 9 ConvAddRelu 191 INT8 NC1HWC2 (1,64,56,56) (1,4,56,56,16) | 0xff3dd000 0xff40e000 0x00031000
D RKNN: [03:36:13.424] 9 ConvAddRelu out.7 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff393800 0xff3ac000 0x00018800
D RKNN: [03:36:13.424] 10 ConvRelu 267 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff37b000 0xff393800 0x00018800
D RKNN: [03:36:13.424] 11 ConvAddRelu 294 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff393800 0xff3ac000 0x00018800
D RKNN: [03:36:13.424] 11 ConvAddRelu 267 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff37b000 0xff393800 0x00018800
D RKNN: [03:36:13.424] 12 ConvRelu 316 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff3ac000 0xff3c4800 0x00018800
D RKNN: [03:36:13.424] 13 Conv 347 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff37b000 0xff387400 0x0000c400
D RKNN: [03:36:13.424] 14 ConvAddRelu 316 INT8 NC1HWC2 (1,128,28,28) (1,8,28,28,16) | 0xff3ac000 0xff3c4800 0x00018800
D RKNN: [03:36:13.424] 14 ConvAddRelu out.11 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff387400 0xff393800 0x0000c400
D RKNN: [03:36:13.424] 15 ConvRelu 392 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff37b000 0xff387400 0x0000c400
D RKNN: [03:36:13.424] 16 ConvAddRelu 419 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff387400 0xff393800 0x0000c400
D RKNN: [03:36:13.424] 16 ConvAddRelu 392 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff37b000 0xff387400 0x0000c400
D RKNN: [03:36:13.424] 17 ConvRelu 441 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff393800 0xff39fc00 0x0000c400
D RKNN: [03:36:13.424] 18 Conv 472 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff37b000 0xff381800 0x00006800
D RKNN: [03:36:13.424] 19 ConvAddRelu 441 INT8 NC1HWC2 (1,256,14,14) (1,16,14,14,16) | 0xff393800 0xff39fc00 0x0000c400
D RKNN: [03:36:13.424] 19 ConvAddRelu out.2 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff381800 0xff388000 0x00006800
D RKNN: [03:36:13.424] 20 ConvRelu 517 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff37b000 0xff381800 0x00006800
D RKNN: [03:36:13.424] 21 ConvAddRelu 544 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff381800 0xff388000 0x00006800
D RKNN: [03:36:13.424] 21 ConvAddRelu 517 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff37b000 0xff381800 0x00006800
D RKNN: [03:36:13.424] 22 Conv 566 INT8 NC1HWC2 (1,512,7,7) (1,33,7,7,16) | 0xff388000 0xff38e800 0x00006800
D RKNN: [03:36:13.424] 23 Conv x.1 INT8 NC1HWC2 (1,512,1,1) (1,32,1,1,16) | 0xff37b000 0xff37b200 0x00000200
D RKNN: [03:36:13.424] 24 Reshape 572_mm INT8 NC1HWC2 (1,1000,1,1) (1,64,1,1,16) | 0xff37b200 0xff37b600 0x00000400
D RKNN: [03:36:13.424] 25 OutputOperator 572 INT8 UNDEFINED (1,1000) (1,1000) | 0xff37b600 0xff37ba00 0x00000400
D RKNN: [03:36:13.424] ---------------------------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] -----------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.424] Const Tensor Information Table
D RKNN: [03:36:13.424] -------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] ID User Tensor DataType OrigShape | [Start End) Size
D RKNN: [03:36:13.424] -------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] 1 ConvRelu weight.12 INT8 (64,3,7,7) | 0xfffb8000 0xfffbf000 0x00007000
D RKNN: [03:36:13.424] 1 ConvRelu bias.8_1_bias INT32 (64) | 0xfffbf000 0xfffbf200 0x00000200
D RKNN: [03:36:13.424] 3 ConvRelu weight.20 INT8 (64,64,3,3) | 0xfffaf000 0xfffb8000 0x00009000
D RKNN: [03:36:13.424] 3 ConvRelu bias.6_5_bias INT32 (64) | 0xfffbf200 0xfffbf400 0x00000200
D RKNN: [03:36:13.424] 4 ConvAddRelu weight.16 INT8 (64,64,3,3) | 0xfffa6000 0xfffaf000 0x00009000
D RKNN: [03:36:13.424] 4 ConvAddRelu bias.10_8_bias INT32 (64) | 0xfffbf400 0xfffbf600 0x00000200
D RKNN: [03:36:13.424] 5 ConvRelu weight.24 INT8 (64,64,3,3) | 0xfff9d000 0xfffa6000 0x00009000
D RKNN: [03:36:13.424] 5 ConvRelu bias.12_12_bias INT32 (64) | 0xfffbf600 0xfffbf800 0x00000200
D RKNN: [03:36:13.424] 6 ConvAddRelu weight.28 INT8 (64,64,3,3) | 0xfff94000 0xfff9d000 0x00009000
D RKNN: [03:36:13.424] 6 ConvAddRelu bias.14_15_bias INT32 (64) | 0xfffbf800 0xfffbfa00 0x00000200
D RKNN: [03:36:13.424] 7 ConvRelu weight.32 INT8 (128,64,3,3) | 0xfff82000 0xfff94000 0x00012000
D RKNN: [03:36:13.424] 7 ConvRelu bias.16_19_bias INT32 (128) | 0xfffbfa00 0xfffbfe00 0x00000400
D RKNN: [03:36:13.424] 8 Conv weight.36 INT8 (128,128,3,3) | 0xfff5e000 0xfff82000 0x00024000
D RKNN: [03:36:13.424] 8 Conv bias.18_22_bias INT32 (128) | 0xfffbfe00 0xfffc0200 0x00000400
D RKNN: [03:36:13.424] 9 ConvAddRelu weight.40 INT8 (128,64,1,1) | 0xfff5c000 0xfff5e000 0x00002000
D RKNN: [03:36:13.424] 9 ConvAddRelu bias.20_24_bias INT32 (128) | 0xfffc0200 0xfffc0600 0x00000400
D RKNN: [03:36:13.424] 10 ConvRelu weight.44 INT8 (128,128,3,3) | 0xfff38000 0xfff5c000 0x00024000
D RKNN: [03:36:13.424] 10 ConvRelu bias.22_28_bias INT32 (128) | 0xfffc0600 0xfffc0a00 0x00000400
D RKNN: [03:36:13.424] 11 ConvAddRelu weight.48 INT8 (128,128,3,3) | 0xfff14000 0xfff38000 0x00024000
D RKNN: [03:36:13.424] 11 ConvAddRelu bias.24_31_bias INT32 (128) | 0xfffc0a00 0xfffc0e00 0x00000400
D RKNN: [03:36:13.424] 12 ConvRelu weight.52 INT8 (256,128,3,3) | 0xffecc000 0xfff14000 0x00048000
D RKNN: [03:36:13.424] 12 ConvRelu bias.26_35_bias INT32 (256) | 0xfffc0e00 0xfffc1600 0x00000800
D RKNN: [03:36:13.424] 13 Conv weight.56 INT8 (256,256,3,3) | 0xffe3c000 0xffecc000 0x00090000
D RKNN: [03:36:13.424] 13 Conv bias.28_38_bias INT32 (256) | 0xfffc1600 0xfffc1e00 0x00000800
D RKNN: [03:36:13.424] 14 ConvAddRelu weight.60 INT8 (256,128,1,1) | 0xffe34000 0xffe3c000 0x00008000
D RKNN: [03:36:13.424] 14 ConvAddRelu bias.30_40_bias INT32 (256) | 0xfffc1e00 0xfffc2600 0x00000800
D RKNN: [03:36:13.424] 15 ConvRelu weight.64 INT8 (256,256,3,3) | 0xffda4000 0xffe34000 0x00090000
D RKNN: [03:36:13.424] 15 ConvRelu bias.32_44_bias INT32 (256) | 0xfffc2600 0xfffc2e00 0x00000800
D RKNN: [03:36:13.424] 16 ConvAddRelu weight.68 INT8 (256,256,3,3) | 0xffd14000 0xffda4000 0x00090000
D RKNN: [03:36:13.424] 16 ConvAddRelu bias.34_47_bias INT32 (256) | 0xfffc2e00 0xfffc3600 0x00000800
D RKNN: [03:36:13.424] 17 ConvRelu weight.8 INT8 (512,256,3,3) | 0xffbf4000 0xffd14000 0x00120000
D RKNN: [03:36:13.424] 17 ConvRelu bias.3_51_bias INT32 (512) | 0xfffc3600 0xfffc4600 0x00001000
D RKNN: [03:36:13.424] 18 Conv weight.7 INT8 (512,512,3,3) | 0xff9b4000 0xffbf4000 0x00240000
D RKNN: [03:36:13.424] 18 Conv bias.4_54_bias INT32 (512) | 0xfffc4600 0xfffc5600 0x00001000
D RKNN: [03:36:13.424] 19 ConvAddRelu weight.3 INT8 (512,256,1,1) | 0xff994000 0xff9b4000 0x00020000
D RKNN: [03:36:13.424] 19 ConvAddRelu bias.5_56_bias INT32 (512) | 0xfffc5600 0xfffc6600 0x00001000
D RKNN: [03:36:13.424] 20 ConvRelu weight.2 INT8 (512,512,3,3) | 0xff754000 0xff994000 0x00240000
D RKNN: [03:36:13.424] 20 ConvRelu bias.2_60_bias INT32 (512) | 0xfffc6600 0xfffc7600 0x00001000
D RKNN: [03:36:13.424] 21 ConvAddRelu weight.6 INT8 (512,512,3,3) | 0xff514000 0xff754000 0x00240000
D RKNN: [03:36:13.424] 21 ConvAddRelu bias.36_63_bias INT32 (512) | 0xfffc7600 0xfffc8600 0x00001000
D RKNN: [03:36:13.424] 22 Conv x.1_GlobalAveragePool_2conv0_i1 INT8 (1,512,7,7) | 0xfffc8600 0xfffd4a00 0x0000c400
D RKNN: [03:36:13.424] 22 Conv x.1_GlobalAveragePool_2conv0_i2 INT32 (512) | 0xfffd4a00 0xfffd5600 0x00000c00
D RKNN: [03:36:13.424] 23 Conv weight.1 INT8 (1000,512,1,1) | 0xff497000 0xff514000 0x0007d000
D RKNN: [03:36:13.424] 23 Conv bias.1 INT32 (1000) | 0xff495000 0xff497000 0x00002000
D RKNN: [03:36:13.424] 24 Reshape 572_mm_tp_rs_i1 INT64 (2) | 0xfffd5600 0xfffd5610 0x00000010
D RKNN: [03:36:13.424] -------------------------------------------------------------------------+---------------------------------
D RKNN: [03:36:13.424] ----------------------------------------
D RKNN: [03:36:13.424] Total Internal Memory Size: 1127KB
D RKNN: [03:36:13.424] Total Weight Memory Size: 11550.8KB
D RKNN: [03:36:13.425] ----------------------------------------
D RKNN: [03:36:13.425] The RKNN_FLAG_EXECUTE_FALLBACK_PRIOR_DEVICE_GPU is not set and without GPU op in Graphs, OpenCL will not be initialized
W RKNN: [03:36:13.426] query RKNN_QUERY_INPUT_DYNAMIC_RANGE error, rknn model is static shape type, please export rknn with dynamic_shapes
W Query dynamic range failed. Ret code: RKNN_ERR_MODEL_INVALID. (If it is a static shape RKNN model, please ignore the above warning message.)
done
--> Running model
D RKNN: [03:36:13.428] allocated memory, name: x.3, virt addr: 0x7fb41e6000, dma addr: 0xff356000, obj addr: 0xffffff80c9228800, size: 150528, aligned size: 151552, fd: 7, handle: 4, flags: 0x403, gem name: 4, iommu domain id: 0
D RKNN: [03:36:13.428] allocated memory, name: 572, virt addr: 0x7fb7ea4000, dma addr: 0xff355000, obj addr: 0xffffff80c922c400, size: 1024, aligned size: 4096, fd: 8, handle: 5, flags: 0x403, gem name: 5, iommu domain id: 0
D RKNN: [03:36:13.428] enable argb mode, dtype: UINT8, channel: 3
D RKNN: [03:36:13.428] normalize target: NPU
D RKNN: [03:36:13.428] update cvt sign: 0
D RKNN: [03:36:13.429] Get NPU frequency: 950MHz
D RKNN: [03:36:13.429] Get DDR frequency: 528MHz
D RKNN: [03:36:13.439] ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] Network Layer Information Table
D RKNN: [03:36:13.439] ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] ID OpType DataType Target InputShape OutputShape Cycles(DDR/NPU/Total) Time(us) MacUsage(%) WorkLoad(0/1) SparseRatio RW(KB) FullName
D RKNN: [03:36:13.439] ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] 1 InputOperator UINT8 CPU \ (1,3,224,224) 0/0/0 12 0.0%/0.0% 0 InputOperator:x.3
D RKNN: [03:36:13.439] 2 ConvRelu UINT8 NPU (1,3,224,224),(64,3,7,7),(64) (1,64,112,112) 569350/1229312/1229312 418 29.02/0.00 100.0%/0.0% 0% 946 Conv:input.21_Conv
D RKNN: [03:36:13.439] 3 MaxPool INT8 NPU (1,64,112,112) (1,64,56,56) 0/0/0 454 100.0%/0.0% 980 MaxPool:input.13_MaxPool
D RKNN: [03:36:13.439] 4 ConvRelu INT8 NPU (1,64,56,56),(64,64,3,3),(64) (1,64,56,56) 205825/112896/205825 315 37.73/0.00 100.0%/0.0% 0% 429 Conv:input.17_Conv
D RKNN: [03:36:13.439] 5 ConvAddRelu INT8 NPU (1,64,56,56),(64,64,3,3),(64),... (1,64,56,56) 270306/112896/270306 255 46.60/0.00 100.0%/0.0% 0% 625 Conv:input.23_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 6 ConvRelu INT8 NPU (1,64,56,56),(64,64,3,3),(64) (1,64,56,56) 205825/112896/205825 254 46.79/0.00 100.0%/0.0% 0% 429 Conv:input.27_Conv
D RKNN: [03:36:13.439] 7 ConvAddRelu INT8 NPU (1,64,56,56),(64,64,3,3),(64),... (1,64,56,56) 270306/112896/270306 268 44.34/0.00 100.0%/0.0% 0% 625 Conv:input.31_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 8 ConvRelu INT8 NPU (1,64,56,56),(128,64,3,3),(128) (1,128,28,28) 153352/56448/153352 274 21.69/0.00 100.0%/0.0% 0% 368 Conv:input.35_Conv
D RKNN: [03:36:13.439] 9 Conv INT8 NPU (1,128,28,28),(128,128,3,3),(128) (1,128,28,28) 144799/112896/144799 305 38.96/0.00 100.0%/0.0% 0% 342 Conv:input.39_Conv
D RKNN: [03:36:13.439] 10 ConvAddRelu INT8 NPU (1,64,56,56),(128,64,1,1),(128),... (1,128,28,28) 162255/12544/162255 187 3.53/0.00 100.0%/0.0% 0% 395 Conv:input.41_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 11 ConvRelu INT8 NPU (1,128,28,28),(128,128,3,3),(128) (1,128,28,28) 144799/112896/144799 246 48.31/0.00 100.0%/0.0% 0% 342 Conv:input.45_Conv
D RKNN: [03:36:13.439] 12 ConvAddRelu INT8 NPU (1,128,28,28),(128,128,3,3),(128),... (1,128,28,28) 177039/112896/177039 249 47.73/0.00 100.0%/0.0% 0% 440 Conv:input.49_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 13 ConvRelu INT8 NPU (1,128,28,28),(256,128,3,3),(256) (1,256,14,14) 160261/59904/160261 195 30.47/0.00 100.0%/0.0% 0% 438 Conv:input.53_Conv
D RKNN: [03:36:13.439] 14 Conv INT8 NPU (1,256,14,14),(256,256,3,3),(256) (1,256,14,14) 238888/119808/238888 318 37.37/0.00 100.0%/0.0% 0% 677 Conv:input.57_Conv
D RKNN: [03:36:13.439] 15 ConvAddRelu INT8 NPU (1,128,28,28),(256,128,1,1),(256),... (1,256,14,14) 89900/6656/89900 138 4.78/0.00 100.0%/0.0% 0% 224 Conv:input.59_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 16 ConvRelu INT8 NPU (1,256,14,14),(256,256,3,3),(256) (1,256,14,14) 238888/119808/238888 259 45.88/0.00 100.0%/0.0% 0% 677 Conv:input.63_Conv
D RKNN: [03:36:13.439] 17 ConvAddRelu INT8 NPU (1,256,14,14),(256,256,3,3),(256),... (1,256,14,14) 255008/119808/255008 330 36.01/0.00 100.0%/0.0% 0% 726 Conv:input.67_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 18 ConvRelu INT8 NPU (1,256,14,14),(512,256,3,3),(512) (1,512,7,7) 412919/73728/412919 532 11.17/0.00 100.0%/0.0% 0% 1230 Conv:input.6_Conv
D RKNN: [03:36:13.439] 19 Conv INT8 NPU (1,512,7,7),(512,512,3,3),(512) (1,512,7,7) 783846/147456/783846 915 12.99/0.00 100.0%/0.0% 0% 2358 Conv:input.9_Conv
D RKNN: [03:36:13.439] 20 ConvAddRelu INT8 NPU (1,256,14,14),(512,256,1,1),(512),... (1,512,7,7) 81881/8192/81881 229 2.88/0.00 100.0%/0.0% 0% 224 Conv:input.10_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 21 ConvRelu INT8 NPU (1,512,7,7),(512,512,3,3),(512) (1,512,7,7) 783846/147456/783846 894 13.29/0.00 100.0%/0.0% 0% 2358 Conv:input.5_Conv
D RKNN: [03:36:13.439] 22 ConvAddRelu INT8 NPU (1,512,7,7),(512,512,3,3),(512),... (1,512,7,7) 791907/147456/791907 888 13.38/0.00 100.0%/0.0% 0% 2382 Conv:input.1_Conv_ConvAddRelu
D RKNN: [03:36:13.439] 23 Conv INT8 NPU (1,512,7,7),(1,512,7,7),(512) (1,512,1,1) 25872/6272/25872 170 0.02/0.00 100.0%/0.0% 0% 78 Conv:x.1_GlobalAveragePool_2conv0
D RKNN: [03:36:13.439] 24 Conv INT8 NPU (1,512,1,1),(1000,512,1,1),(1000) (1,1000,1,1) 168249/8192/168249 278 0.19/0.00 100.0%/0.0% 0% 510 Conv:572_Gemm#2
D RKNN: [03:36:13.439] 25 Reshape INT8 NPU (1,1000,1,1),(2) (1,1000) 0/0/0 82 100.0%/0.0% 2 Reshape:572_mm_tp_rs
D RKNN: [03:36:13.439] 26 OutputOperator INT8 CPU (1,1000) \ 0/0/0 11 0.0%/0.0% 0 OutputOperator:572
D RKNN: [03:36:13.439] ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] Total Operator Elapsed Per Frame Time(us): 8476
D RKNN: [03:36:13.439] Total Internal Memory Read/Write Per Frame Size(KB): 6354.34
D RKNN: [03:36:13.439] Total Weight Memory Read/Write Per Frame Size(KB): 11459.00
D RKNN: [03:36:13.439] Total Memory Read/Write Per Frame Size(KB): 17813.34
D RKNN: [03:36:13.439] ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] ---------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] Operator Time Consuming Ranking Table
D RKNN: [03:36:13.439] ---------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] OpType CallNumber CPUTime(us) GPUTime(us) NPUTime(us) TotalTime(us) TimeRatio(%)
D RKNN: [03:36:13.439] ---------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] ConvRelu 9 0 0 3387 3387 39.96%
D RKNN: [03:36:13.439] ConvAddRelu 8 0 0 2544 2544 30.01%
D RKNN: [03:36:13.439] Conv 5 0 0 1986 1986 23.43%
D RKNN: [03:36:13.439] MaxPool 1 0 0 454 454 5.36%
D RKNN: [03:36:13.439] Reshape 1 0 0 82 82 0.97%
D RKNN: [03:36:13.439] InputOperator 1 12 0 0 12 0.14%
D RKNN: [03:36:13.439] OutputOperator 1 11 0 0 11 0.13%
D RKNN: [03:36:13.439] ---------------------------------------------------------------------------------------------------
D RKNN: [03:36:13.439] Total 23 0 8453 8476
D RKNN: [03:36:13.439] ---------------------------------------------------------------------------------------------------
resnet18
-----TOP 5-----
[812] score:0.999638 class:"space shuttle"
[404] score:0.000281 class:"airliner"
[657] score:0.000014 class:"missile"
[466] score:0.000010 class:"bullet train, bullet"
[833] score:0.000010 class:"submarine, pigboat, sub, U-boat"
done
D RKNN: [03:36:13.444] free memory, name: internal, virt addr: 0x7fb420b000, dma addr: 0xff37b000, obj addr: 0xffffff80c921ec00, size: 1154048, aligned size: 1155072, fd: 6, handle: 3, flags: 0x403, gem name: 3, iommu domain id: 0
D RKNN: [03:36:13.445] free memory, name: weight, virt addr: 0x7f9a0a6000, dma addr: 0xff495000, obj addr: 0xffffff80c9218400, size: 11968000, aligned size: 11968512, fd: 5, handle: 2, flags: 0x403, gem name: 2, iommu domain id: 0
D RKNN: [03:36:13.452] free memory, name: task, virt addr: 0x7fb7ea5000, dma addr: 0xfffff000, obj addr: 0xffffff80c921a000, size: 4080, aligned size: 4096, fd: 4, handle: 1, flags: 0x40b, gem name: 1, iommu domain id: 0
D RKNN: [03:36:13.453] free memory, name: x.3, virt addr: 0x7fb41e6000, dma addr: 0xff356000, obj addr: 0xffffff80c9228800, size: 150528, aligned size: 151552, fd: 7, handle: 4, flags: 0x403, gem name: 4, iommu domain id: 0
D RKNN: [03:36:13.453] free memory, name: 572, virt addr: 0x7fb7ea4000, dma addr: 0xff355000, obj addr: 0xffffff80c922c400, size: 1024, aligned size: 4096, fd: 8, handle: 5, flags: 0x403, gem name: 5, iommu domain id: 0
(rknn) root@linaro-alip:/rknn#
```

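The loaded resnet18 RKNN model correctly classifies the image as a space shuttle (score 0.999638). This completes the walkthrough of RKNN-Toolkit Lite2: environment setup, tool usage, and deploying an RKNN model on an embedded platform through the Python API.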
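The verbose log also reports per-frame performance. For example, `Total Operator Elapsed Per Frame Time(us): 8476` is about 8.5 ms of operator time per frame, or roughly 118 frames/s. This figure covers only the operators in the layer table; Python pre/post-processing and data copies are excluded, so latency measured around `inference()` is typically higher. The sketch below extracts these summary numbers from a saved log. It assumes the log was written to a file via `verbose_file` and keeps the line format shown above. `parse_perf_summary` is a hypothetical helper.

```python
import re
import sys

# Summary lines printed by the verbose runtime log (format assumed from the log above)
PERF_PATTERNS = {
    'op_time_us': r'Total Operator Elapsed Per Frame Time\(us\):\s*([\d.]+)',
    'internal_rw_kb': r'Total Internal Memory Read/Write Per Frame Size\(KB\):\s*([\d.]+)',
    'weight_rw_kb': r'Total Weight Memory Read/Write Per Frame Size\(KB\):\s*([\d.]+)',
    'total_rw_kb': r'Total Memory Read/Write Per Frame Size\(KB\):\s*([\d.]+)',
}


def parse_perf_summary(log_text: str) -> dict:
    """Extract per-frame timing and memory-traffic numbers from a verbose log."""
    summary = {}
    for key, pattern in PERF_PATTERNS.items():
        match = re.search(pattern, log_text)
        if match:
            summary[key] = float(match.group(1))
    if summary.get('op_time_us', 0) > 0:
        # Upper bound on frame rate from operator time alone
        summary['op_fps'] = 1e6 / summary['op_time_us']
    return summary


if __name__ == '__main__':
    path = sys.argv[1] if len(sys.argv) > 1 else './inference.log'
    try:
        with open(path, encoding='utf-8', errors='ignore') as f:
            print(parse_perf_summary(f.read()))
    except OSError as exc:
        print('cannot read log:', exc)
```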

IV. Summary

To deploy an RKNN model on an embedded platform with the Python API, the general workflow is:

1. Debug the model on an x86 PC with RKNN-Toolkit2.

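2. In the same Python script, switch the import to RKNN-Toolkit Lite2 (`from rknnlite.api import RKNNLite`). Then adjust the interfaces that differ, for example calling init_runtime without target. The script is now an RKNN-Toolkit Lite2 program.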

3. Copy the required resources and files to the embedded board, then run the RKNN-Toolkit Lite2 program directly on the board.
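Step 2 can be reduced to a single switch if the script picks whichever toolkit is installed. The sketch below assumes RKNN-Toolkit2's `RKNN` class from `rknn.api` and RKNN-Toolkit Lite2's `RKNNLite` from `rknnlite.api`, both used as in this post. `select_backend`, `init_runtime_kwargs` and `create_runtime` are hypothetical helpers. The RKNN-Toolkit2 target string `'rk3576'` is an assumption for this board; on the PC it typically makes RKNN-Toolkit2 run inference on a board connected over adb.

```python
import importlib.util
from typing import Callable, Optional


def select_backend(find_spec: Callable = importlib.util.find_spec) -> Optional[str]:
    """Prefer RKNN-Toolkit Lite2 (on the board), fall back to RKNN-Toolkit2 (on the PC)."""
    if find_spec('rknnlite') is not None:
        return 'rknnlite'
    if find_spec('rknn') is not None:
        return 'rknn'
    return None


def init_runtime_kwargs(backend: str, target: str = 'rk3576') -> dict:
    """Arguments for init_runtime(); only RKNN-Toolkit2 takes a target."""
    if backend == 'rknn':
        return {'target': target}
    if backend == 'rknnlite':
        return {}  # add core_mask=... here for multi-core NPUs
    raise ValueError('unknown backend: {}'.format(backend))


def create_runtime(backend: str, verbose: bool = False):
    """Import the matching class lazily and construct it."""
    if backend == 'rknnlite':
        from rknnlite.api import RKNNLite
        return RKNNLite(verbose=verbose)
    if backend == 'rknn':
        from rknn.api import RKNN
        return RKNN(verbose=verbose)
    raise ValueError('unknown backend: {}'.format(backend))


# Usage (requires one of the two toolkits):
#   backend = select_backend()
#   rt = create_runtime(backend)
#   rt.load_rknn('./resnet_18.rknn')
#   rt.init_runtime(**init_runtime_kwargs(backend))
#   outputs = rt.inference(inputs=[img])
#   rt.release()
```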

Zhejiang Public Security Network Filing No. 33010602011771