[Paper] PointPainting

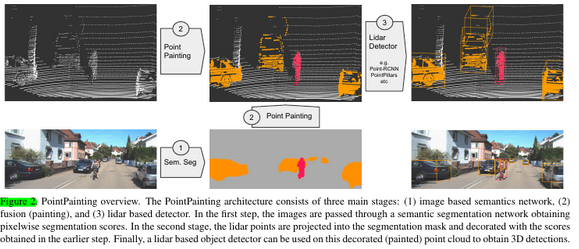

2. PointPainting Architecture

2.1. Image Based Semantics Network

The image semantic segmentation (sem. seg.) network takes an input image and outputs per-pixel class scores. In this paper, the segmentation scores for our KITTI experiments are generated from DeepLabv3+ [2, 36, 19], while for the nuScenes experiments we trained a custom, lighter network. However, we note that PointPainting is agnostic to the image segmentation network design.
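To make "per-pixel class scores" concrete, here is a minimal sketch of turning a segmentation network's raw output into scores. It assumes the network emits logits of shape (C, H, W) and that the scores come from a softmax over the class axis; both the shape convention and the function name `per_pixel_scores` are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def per_pixel_scores(logits: np.ndarray) -> np.ndarray:
    """Turn raw logits of shape (C, H, W) into per-pixel class scores.

    Assumption: scores are a softmax over the class axis, so the C scores
    at every pixel are non-negative and sum to 1.
    """
    # Subtract the per-pixel maximum for numerical stability.
    shifted = logits - logits.max(axis=0, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=0, keepdims=True)


# Toy input: C = 4 classes on a 2 x 3 image (random stand-in for network output).
rng = np.random.default_rng(0)
logits = rng.normal(size=(4, 2, 3))
scores = per_pixel_scores(logits)
```

Any network that produces an array of this form could be swapped in, which is one way to read the claim that PointPainting is agnostic to the segmentation design.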

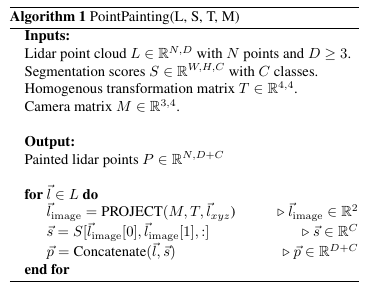

2.2. PointPainting

The output of the segmentation network is C class scores per pixel, where for KITTI C = 4 (car, pedestrian, cyclist, background) and for nuScenes C = 11 (10 detection classes plus background).
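The painting step can be sketched roughly as follows: project each lidar point into the image, look up the C class scores at the pixel it lands on, and append them to the point. This is a sketch under stated assumptions, not the authors' implementation. The name `paint_points`, the single 3 x 4 matrix `proj` (lidar frame to pixel coordinates), and the choice to leave points that fall outside the image with zero scores are all hypothetical.

```python
import numpy as np


def paint_points(points: np.ndarray, scores: np.ndarray, proj: np.ndarray):
    """Append per-pixel class scores to lidar points.

    points: (N, D) array whose first three columns are x, y, z.
    scores: (C, H, W) per-pixel class scores.
    proj:   (3, 4) matrix mapping homogeneous lidar points to pixels
            (hypothetical: assumed to combine extrinsics and intrinsics).
    Returns the (N, D + C) painted points and a boolean mask of the points
    that actually landed inside the image.
    """
    n, dim = points.shape
    num_classes, height, width = scores.shape

    # Homogeneous coordinates, then projection into the image plane.
    xyz1 = np.hstack([points[:, :3], np.ones((n, 1))])
    uvw = xyz1 @ proj.T
    depth = uvw[:, 2]

    # Only points in front of the camera can be painted.
    in_front = depth > 1e-6
    safe_depth = np.where(in_front, depth, 1.0)
    u = np.floor(uvw[:, 0] / safe_depth).astype(int)
    v = np.floor(uvw[:, 1] / safe_depth).astype(int)
    valid = in_front & (u >= 0) & (u < width) & (v >= 0) & (v < height)

    # Assumption: unpainted points keep zero scores instead of being dropped.
    painted = np.zeros((n, dim + num_classes))
    painted[:, :dim] = points
    painted[valid, dim:] = scores[:, v[valid], u[valid]].T
    return painted, valid


# Toy setup: identity-like projection, C = 4 classes, 2 x 3 image.
demo_proj = np.hstack([np.eye(3), np.zeros((3, 1))])
demo_scores = np.arange(24, dtype=float).reshape(4, 2, 3)
demo_points = np.array([
    [2.0, 1.0, 1.0, 0.5],   # projects to pixel (u=2, v=1)
    [0.0, 0.0, -1.0, 0.1],  # behind the camera, stays unpainted
])
demo_painted, demo_valid = paint_points(demo_points, demo_scores, demo_proj)
```

With C = 4 and four-dimensional input points, each painted point here has eight values: the original four followed by the four class scores.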

2.3. Lidar Detection

The decorated point clouds can be consumed by any lidar network that learns an encoder, since PointPainting just changes the input dimension of the lidar points.
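As an illustration of "just changes the input dimension", the sketch below builds a toy per-point encoder (one linear layer plus ReLU) twice: once for raw points and once for painted points. The encoder is a hypothetical stand-in and does not reproduce the encoder of any particular detector; the raw dimension of 4 assumes KITTI-style (x, y, z, reflectance) points.

```python
import numpy as np


def make_point_encoder(in_dim: int, out_dim: int, seed: int = 0):
    """Return a toy per-point encoder: linear layer followed by ReLU.

    Hypothetical stand-in for the learned encoder of a lidar detector.
    """
    rng = np.random.default_rng(seed)
    weight = rng.normal(scale=0.1, size=(in_dim, out_dim))
    bias = np.zeros(out_dim)

    def encode(points: np.ndarray) -> np.ndarray:
        # points: (N, in_dim) -> features: (N, out_dim)
        return np.maximum(points @ weight + bias, 0.0)

    return encode


RAW_DIM = 4      # assumed x, y, z, reflectance
NUM_CLASSES = 4  # C for KITTI

# The only difference between the two encoders is the input dimension.
encode_raw = make_point_encoder(RAW_DIM, 64)
encode_painted = make_point_encoder(RAW_DIM + NUM_CLASSES, 64)

raw_features = encode_raw(np.zeros((5, RAW_DIM)))
painted_features = encode_painted(np.zeros((5, RAW_DIM + NUM_CLASSES)))
```

Everything downstream of the encoder sees features of the same shape in both cases, which is why the rest of the detector can stay unchanged.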

In this paper, we demonstrate that PointPainting works with three different lidar detectors: PointPillars [11], VoxelNet [34, 29], and PointRCNN [21].
