[Paper] RetinaNet

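As for RetinaNet, my overall impression is that three things set it apart: 1. it uses focal loss for classification, so that easily classified samples carry almost no weight; 2. it uses an FPN; 3. in the final detection head, classification and regression each get their own subnet instead of being output together.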

Focal loss

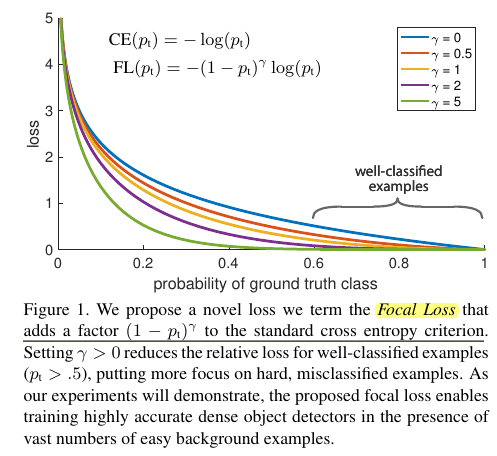

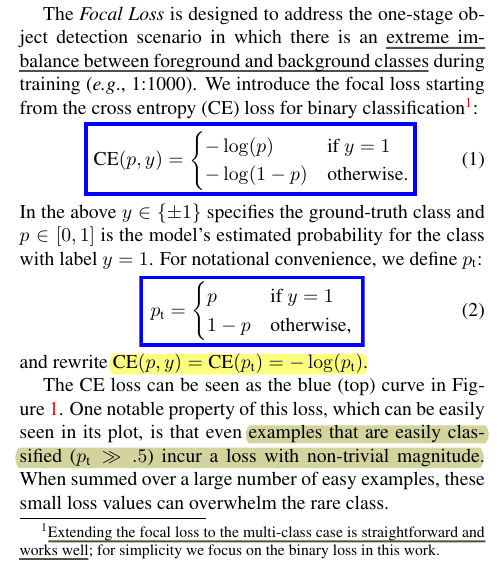

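I just took screenshots of the original paper for this part, since it already explains things clearly. In short, focal loss reshapes the two curves of the classic CE loss (see chapter 2 of my own CS4240 notes).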

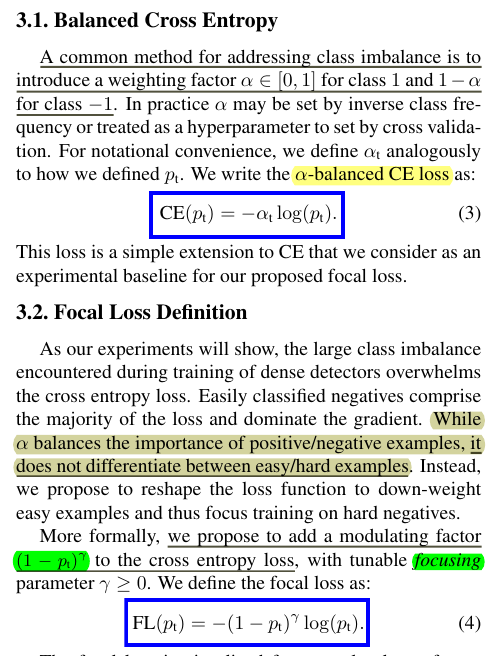

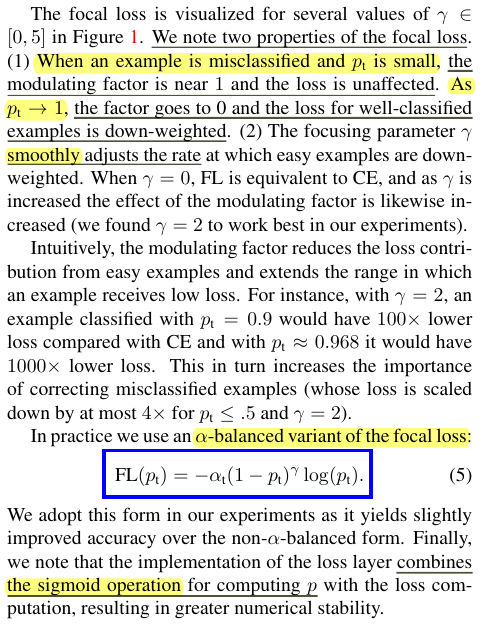

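Clearly, balanced CE only adds an alpha to trade off between label 0 (negatives) and label 1 (positives); it does nothing about easy vs. hard examples, and that is exactly what focal loss targets. In balanced CE the factor in front of -log is a fixed, preset value, whereas in focal loss this factor also changes with the input \(p_t\). In other words, the modulating factor becomes a proper term of the loss that depends on the prediction. The modulating factor is \((1-p_t)^\gamma\), where \(\gamma\) is called the focusing parameter.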

The final version presented in the paper then uses alpha and the focal modulating factor together, although the focal part is of course still the main point.
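To make the effect of the modulating factor concrete, here is a minimal sketch of the alpha-balanced focal loss for a single binary prediction, \(-\alpha_t (1-p_t)^\gamma \log(p_t)\). The function name `focal_loss` and the clamping constant are my own choices for illustration; the defaults `alpha=0.25` and `gamma=2.0` are the values the paper reports as working best. Real implementations work on logits and whole tensors, so treat this as a scalar illustration only.

```python
import math

def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Alpha-balanced focal loss for one binary prediction (illustrative).

    p: predicted probability of the positive class, in [0, 1]
    y: ground-truth label, 1 for positive and 0 for negative
    """
    # p_t is the probability the model assigns to the true class.
    p_t = p if y == 1 else 1.0 - p
    # alpha_t weights positives by alpha and negatives by (1 - alpha).
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # Clamp to avoid log(0); the constant is an arbitrary small value.
    p_t = max(p_t, 1e-12)
    # (1 - p_t) ** gamma shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# An easy positive (p_t = 0.9) versus a hard positive (p_t = 0.1).
easy = focal_loss(0.9, 1)
hard = focal_loss(0.1, 1)
```

With `gamma=0` the modulating factor is 1 and the expression falls back to balanced CE. With `gamma=2` an example at \(p_t = 0.9\) is scaled by \((0.1)^2 = 0.01\), so easy examples barely contribute.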

FPN

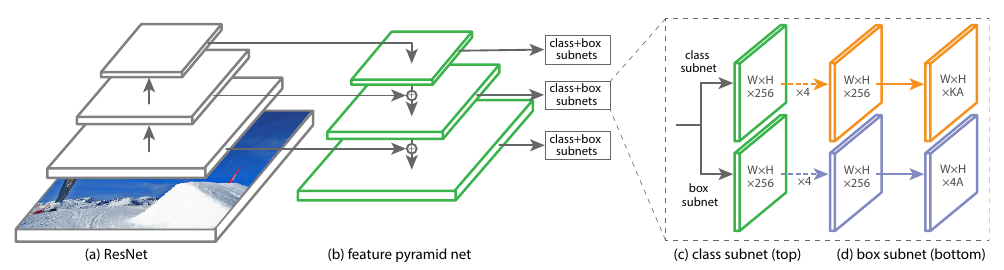

The feature pyramid is constructed on top of the ResNet architecture. Recall that ResNet has 5 conv blocks (= network stages / pyramid levels). The last layer of the i-th pyramid level, \(C_i\), has a resolution \(2^i\) times lower than that of the raw input.

RetinaNet utilizes feature pyramid levels P3 to P7:

- P3 to P5 are computed from the corresponding ResNet residual stages C3 to C5. They are connected by both top-down and bottom-up pathways.

- P6 is obtained via a 3×3 stride-2 conv on top of C5

- P7 applies ReLU and a 3×3 stride-2 conv on P6

Adding the higher pyramid levels on top of ResNet improves performance on large objects.
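Since level i is downsampled by \(2^i\), the spatial size of each of P3 to P7 follows directly from the input size. A small sketch, where `pyramid_shapes` is a hypothetical helper of mine and rounding up with `ceil` is an assumption (the exact size for inputs not divisible by 128 depends on the padding used by the backbone):

```python
import math

def pyramid_shapes(height, width, levels=range(3, 8)):
    """Return the (rows, cols) of each pyramid level for a given input size."""
    shapes = {}
    for level in levels:
        stride = 2 ** level  # level i is 2^i times smaller than the input
        # Assumption: sizes round up when the input is not divisible by the stride.
        shapes[f"P{level}"] = (math.ceil(height / stride), math.ceil(width / stride))
    return shapes

# Example with a hypothetical 512x512 input.
shapes = pyramid_shapes(512, 512)
```

For that 512×512 input, P3 is 64×64 and P7 is only 4×4, which is why the coarse top levels are the ones responsible for large objects.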

As in SSD, detection happens at all pyramid levels by making a prediction from every merged feature map. Because all levels share the same classifier and box regressor, every level is built with the same channel dimension, d=256.

There are A=9 anchor boxes at each spatial location of every level:

- The base size corresponds to areas of \(32^2\) to \(512^2\) pixels on P3 to P7 respectively. There are three size ratios, \(\{2^0, 2^{1/3}, 2^{2/3}\}\)

- For each size, there are three aspect ratios
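The 3 sizes × 3 aspect ratios = 9 anchors can be enumerated like this. It is a sketch under my own conventions: `anchor_shapes` is a hypothetical helper, `ratio` is taken as height / width, and the aspect ratios {1:2, 1:1, 2:1} are the ones given in the paper. Each anchor keeps the area `(base_size * scale) ** 2` while its shape changes with the ratio.

```python
import math

# Base anchor side length per level: 32 on P3 up to 512 on P7.
BASE_SIZES = {f"P{level}": 2 ** (level + 2) for level in range(3, 8)}
SCALES = (2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3))
RATIOS = (0.5, 1.0, 2.0)  # height / width, i.e. 1:2, 1:1, 2:1

def anchor_shapes(base_size, scales=SCALES, ratios=RATIOS):
    """Return the (width, height) of the anchors for one pyramid level."""
    shapes = []
    for scale in scales:
        area = (base_size * scale) ** 2
        for ratio in ratios:
            # Solve width * height = area with height = ratio * width.
            width = math.sqrt(area / ratio)
            height = width * ratio
            shapes.append((width, height))
    return shapes

p3_anchors = anchor_shapes(BASE_SIZES["P3"])
```

The three scales fill the gap between consecutive levels: the largest P3 anchor has side \(32 \cdot 2^{2/3} \approx 50.8\), just below the base size 64 of P4.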

As usual, for each anchor box, the model outputs a class probability for each of K classes in the classification subnet and regresses the offset from this anchor box to the nearest ground truth object in the box regression subnet. The classification subnet adopts the focal loss introduced above
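To get a feeling for how many predictions the two subnets make, here is a rough count. The helper `head_output_sizes` is hypothetical, as are the 512×512 input and `num_classes=80`; `ceil` rounding is again an assumption. Per anchor, the classification subnet gives K scores and the box subnet gives 4 offsets.

```python
import math

def head_output_sizes(height, width, num_classes, anchors_per_location=9,
                      levels=range(3, 8)):
    """Count anchors and raw outputs of both subnets over P3 to P7."""
    # Number of spatial locations summed over all pyramid levels.
    locations = sum(
        math.ceil(height / 2 ** level) * math.ceil(width / 2 ** level)
        for level in levels
    )
    anchors = locations * anchors_per_location
    return {
        "anchors": anchors,
        "class_scores": anchors * num_classes,  # K probabilities per anchor
        "box_offsets": anchors * 4,             # 4 box offsets per anchor
    }

sizes = head_output_sizes(512, 512, num_classes=80)
```

Even at this modest resolution there are tens of thousands of anchors, nearly all of them easy negatives, which is the imbalance that focal loss is meant to handle.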
