[Notes] General tips

bash commands

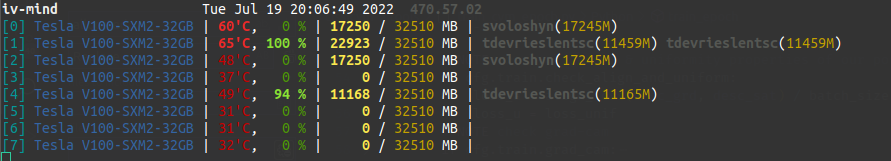

Using gpustat

pip install gpustat

gpustat --watch

Checking the GPU model

sudo lspci | grep VGA
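On some machines lspci lists an NVIDIA card as a "3D controller" rather than a VGA device (this is common for data-center cards), so grepping for VGA alone can come back empty. A minimal sketch of a filter that accepts both kinds of line; the function name gpu_lines is only an illustrative choice:

```shell
#!/usr/bin/env bash
# Filter lspci-style output (read from stdin) down to GPU entries.
# Matches both "VGA compatible controller" and "3D controller" lines.
gpu_lines() {
    grep -Ei 'VGA compatible controller|3D controller'
}

# Usage sketch (needs lspci on the machine):
#   lspci | gpu_lines
```

If the NVIDIA driver is installed, nvidia-smi -L also prints one line per GPU with its model name.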

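Fixing GPU memory that is not released

After a multi-GPU training run on iv-mind was interrupted, nvidia-smi showed that GPU memory was still occupied even though none of my own processes appeared in the process list. The post quoted below gives a fix that, in my own testing, is simple and works well:

Developers often report that their program has stopped but its GPU memory has not been released. When writing programs with tensorflow+pycharm or PyTorch, we sometimes terminate a running program from the console. Sometimes, however, the program has already ended and nvidia-smi lists no processes, yet the GPU memory is still not freed. Why does this happen?

When PyTorch's DataLoader is configured with multiple "threads" for data loading, these are not real threads: it starts N child processes (with consecutive PIDs) to emulate multithreading. So when your program finishes, or you kill the main process partway through, the GPU memory held by the child processes is not released, and they have to be killed by hand one by one. The procedure is as follows: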

# List the processes that are using the GPU device files

fuser -v /dev/nvidia*

# Save the PIDs to a file

fuser -v /dev/nvidia*|awk -F " " '{print $0}' >/tmp/pid.file

# Force-kill the processes

while read pid ; do kill -9 $pid; done </tmp/pid.file
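The commands above force-kill every PID that fuser reports, which matters if they are run with elevated privileges on a shared machine. A more cautious sketch, assuming Linux (where /proc/<pid> is normally owned by the user running the process) and a stat that supports -c: keep only the PIDs that belong to one user, and try a plain kill before resorting to -9. pids_of_uid is a hypothetical helper name, not part of any tool.

```shell
#!/usr/bin/env bash
# Read whitespace-separated PIDs from stdin and print only those whose
# process is owned by the given numeric user ID (default: the current user).
# Assumption: Linux, where /proc/<pid> is normally owned by the process owner.
pids_of_uid() {
    local uid="${1:-$(id -u)}" pid
    for pid in $(cat); do
        case "$pid" in
            ''|*[!0-9]*) continue ;;      # ignore anything that is not a number
        esac
        [ -d "/proc/$pid" ] || continue   # process is already gone
        if [ "$(stat -c %u "/proc/$pid")" = "$uid" ]; then
            echo "$pid"
        fi
    done
}

# Usage sketch (not executed here): send SIGTERM first, use -9 only if needed.
#   fuser /dev/nvidia* 2>/dev/null | pids_of_uid | xargs -r kill
```

Without -v, fuser writes only the PIDs to standard output, which is why its output can be piped straight into the filter.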

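Setting default values for bash script arguments

Set a default value inside the script. One pattern I find especially handy is to make the default an empty string, for example XXX=${1:-""}, and then use ${XXX} in the commands that follow.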
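A small sketch of this pattern. The function name build_cmd, the script train.py, and the path configs/default.py are made up for illustration; the function only prints the command it would run.

```shell
#!/usr/bin/env bash
# Sketch of the default-value pattern; all names here are hypothetical.
build_cmd() {
    local config="${1:-configs/default.py}"  # used when $1 is unset or empty
    local extra="${2:-""}"                   # optional, defaults to empty
    # ${extra} is deliberately unquoted: an empty value expands to no
    # argument at all, and a value like "--seed 1" splits into two words.
    echo python train.py "$config" ${extra}
}

build_cmd                      # no arguments: both defaults apply
build_cmd a.py "--seed 1"      # both arguments given
```

Leaving ${extra} unquoted is what makes the empty default disappear from the command line; the trade-off is that values containing spaces are split into separate words.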

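Disk quota exceeded error on the HPC

On the HPC, the storage available under my own home directory is actually very small. Although I had already symlinked ~/.conda to my own folder under the root directory, packages installed inside an env still ended up in ~/.local because pip was run with --user (inside ~/.local/lib you can see one folder per Python version, each with a site-packages directory beneath it).

When storage runs out, two things can happen. 1. pip downloads to 99%, prints killed, and exits. One workaround is to add --no-cache-dir so that the downloaded files are not saved to ~/.cache, but later installs may still fail. 2. A Disk quota exceeded error appears. After searching, I found that this means disk usage has gone over the quota the user is allowed. Use the following command to view the quota limit and how much of it is already used: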

quota -uvs username

When cleaning up, you can simply delete everything under ~/.cache; in my testing this caused no problems.
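To see what is actually eating the quota before deleting anything, a sketch like the following can help. It lists the largest entries directly under a directory, hidden ones such as .cache and .local included. biggest_entries is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the N largest entries (files or folders, hidden ones included)
# directly under a directory, largest first, with sizes in KiB.
biggest_entries() {
    local dir="${1:-$HOME}" n="${2:-10}"
    # "$dir"/.[!.]* matches hidden entries such as .cache and .local
    du -sk "$dir"/* "$dir"/.[!.]* 2>/dev/null | sort -rn | head -n "$n"
}

# Usage sketch:
#   biggest_entries ~ 5
```

Recent versions of pip can also empty their own cache with pip cache purge, which is narrower than removing all of ~/.cache.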

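Renaming every folder in the current directory to the first 10 characters of its name

for d in */ ; do d="${d%/}" ; [ "$d" = "${d:0:10}" ] || mv -- "$d" "${d:0:10}" ; done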
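Truncating names can make two folders collide, and mv would then move one folder inside the other. A more defensive sketch, which only touches directories and skips a rename whenever the target name already exists; truncate_dir_names is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Rename each directory directly under ROOT to the first N characters of its
# name. Names that are already short enough, and renames whose target already
# exists, are skipped.
truncate_dir_names() {
    local root="${1:-.}" n="${2:-10}" d name new
    for d in "$root"/*/; do
        [ -d "$d" ] || continue            # the glob matched nothing
        name="$(basename "$d")"
        new="${name:0:$n}"
        [ "$name" = "$new" ] && continue   # already short enough
        if [ -e "$root/$new" ]; then
            echo "skip: $name -> $new (target exists)" >&2
            continue
        fi
        mv -- "$root/$name" "$root/$new"
    done
}

# Usage sketch:
#   truncate_dir_names . 10
```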

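Using anaconda

ssh-related

Running srun on slurm with one or multiple nodes

These are the parameters I settled on, after some trial and error, for training on slurm, using mmdet3d as the example. Especially in the multi-node case, when the first attempts fail, start by adding NCCL_DEBUG=INFO to get the actual debug information.

Single node, multiple GPUs, with srun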

#!/usr/bin/env bash
set -x

CONFIG=$1
WORK_DIR=$2
PY_ARGS=${@:3}
SRUN_ARGS=${SRUN_ARGS:-""}

PYTHONPATH="$(dirname $0)/..":$PYTHONPATH \
srun --partition=general \
    --nodelist=ewi3 \
    --qos=long \
    --job-name=centerpoint \
    --cpus-per-task=64 \
    --mem=300000 \
    --time=120:00:00 \
    --gpus-per-node=2 \
    --kill-on-bad-exit=1 \
    ${SRUN_ARGS} \
    python -u tools/train.py ${CONFIG} --work-dir=${WORK_DIR} --launcher="slurm" ${PY_ARGS}

#./tools/slurm_train_my.sh configs/*** work_dirs/***
# sinteractive --nodelist=influ1 --gpus-per-node=2

Multiple nodes, multiple GPUs, with srun

#!/usr/bin/env bash
set -x

CONFIG=$1
WORK_DIR=$2
PY_ARGS=${@:3}
SRUN_ARGS=${SRUN_ARGS:-""}

PYTHONPATH="$(dirname $0)/..":$PYTHONPATH \
NCCL_DEBUG=INFO \
NCCL_SOCKET_IFNAME="campus" \
srun --partition=general \
    --job-name=centerpoint \
    --nodelist=gpu05,gpu06 \
    --nodes=2 \
    --gpus-per-node=a40:2 \
    --qos=long \
    --time=120:00:00 \
    --mem=350000 \
    --cpus-per-task=60 \
    --kill-on-bad-exit=1 \
    ${SRUN_ARGS} \
    python -u tools/train.py ${CONFIG} --work-dir=${WORK_DIR} --launcher="slurm" ${PY_ARGS}

# NCCL_SOCKET_IFNAME="131.180.180.7" \

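Getting multi-node training to work took some exploration. At first, with only --nodes added, the following was printed while the job was being set up: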

/home/nfs/jianfengcui/.local/lib/python3.8/site-packages/torch/utils/data/dataloader.py:487: UserWarning: This DataLoader will create 32 worker processes in total. Our suggested max number of worker in current system is 16, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

warnings.warn(_create_warning_msg(

/home/nfs/jianfengcui/.local/lib/python3.8/site-packages/torch/utils/data/dataloader.py:487: UserWarning: This DataLoader will create 32 worker processes in total. Our suggested max number of worker in current system is 16, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

warnings.warn(_create_warning_msg(

gpu03:79559:79559 [0] NCCL INFO Bootstrap : Using campus:131.180.180.7<0>

gpu03:79559:79559 [0] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

gpu03:79559:79559 [0] NCCL INFO NET/IB : No device found.

gpu03:79559:79559 [0] NCCL INFO NET/Socket : Using [0]campus:131.180.180.7<0> [1]storage:172.16.39.229<0>

gpu03:79559:79559 [0] NCCL INFO Using network Socket

NCCL version 2.10.3+cuda11.5

gpu05:22549:22549 [1] NCCL INFO Bootstrap : Using campus:131.180.180.145<0>

gpu05:22549:22549 [1] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

gpu05:22549:22549 [1] NCCL INFO NET/IB : No device found.

gpu05:22549:22549 [1] NCCL INFO NET/Socket : Using [0]campus:131.180.180.145<0> [1]storage:172.16.39.231<0>

gpu05:22549:22549 [1] NCCL INFO Using network Socket

gpu05:22549:22779 [1] NCCL INFO Trees [0] -1/-1/-1->1->0 [1] 0/-1/-1->1->-1

gpu03:79559:79800 [0] NCCL INFO Channel 00/02 : 0 1

gpu03:79559:79800 [0] NCCL INFO Channel 01/02 : 0 1

gpu03:79559:79800 [0] NCCL INFO Trees [0] 1/-1/-1->0->-1 [1] -1/-1/-1->0->1

gpu03:79559:79800 [0] NCCL INFO Setting affinity for GPU 0 to ff0000,000000ff

gpu03:79559:79800 [0] NCCL INFO Channel 00 : 1[81000] -> 0[21000] [receive] via NET/Socket/0

gpu05:22549:22779 [1] NCCL INFO Channel 00 : 0[21000] -> 1[81000] [receive] via NET/Socket/1

gpu03:79559:79800 [0] NCCL INFO Channel 01 : 1[81000] -> 0[21000] [receive] via NET/Socket/0

gpu05:22549:22779 [1] NCCL INFO Channel 01 : 0[21000] -> 1[81000] [receive] via NET/Socket/1

gpu05:22549:22779 [1] NCCL INFO Channel 00 : 1[81000] -> 0[21000] [send] via NET/Socket/1

gpu03:79559:79800 [0] NCCL INFO Channel 00 : 0[21000] -> 1[81000] [send] via NET/Socket/0

gpu05:22549:22779 [1] NCCL INFO Channel 01 : 1[81000] -> 0[21000] [send] via NET/Socket/1

gpu03:79559:79800 [0] NCCL INFO Channel 01 : 0[21000] -> 1[81000] [send] via NET/Socket/0

gpu03:79559:79800 [0] include/socket.h:409 NCCL WARN Net : Connect to 172.16.39.231<51516> failed : No route to host

gpu03:79559:79800 [0] NCCL INFO transport/net_socket.cc:316 -> 2

gpu03:79559:79800 [0] NCCL INFO include/net.h:21 -> 2

gpu03:79559:79800 [0] NCCL INFO transport/net.cc:210 -> 2

gpu03:79559:79800 [0] NCCL INFO transport.cc:111 -> 2

gpu03:79559:79800 [0] NCCL INFO init.cc:778 -> 2

gpu03:79559:79800 [0] NCCL INFO init.cc:904 -> 2

gpu03:79559:79800 [0] NCCL INFO group.cc:72 -> 2 [Async thread]

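I came across this reply in an issue (https://github.com/NVIDIA/nccl/issues/207#issuecomment-484618409) and this comment (https://discuss.pytorch.org/t/nccl-network-is-unreachable-connection-refused-when-initializing-ddp/137352/3?u=jcuic5). From these I gathered that setting the NCCL_SOCKET_IFNAME environment variable might help. Judging from where 'ens5', the value used there, appears in that person's INFO output, the corresponding value in my setup should be 'campus'. My first attempt, setting it to the IP of one of the nodes, did not work.

Connection established successfully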
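In the failing log, the NET/Socket lines show NCCL picking up two interfaces on each node (campus and storage), and the connection that failed was to 172.16.39.231, the storage address of gpu05. A small sketch for pulling the candidate interface names out of an NCCL_DEBUG=INFO log; it assumes the line format shown in these logs, and nccl_ifnames is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Read an NCCL_DEBUG=INFO log on stdin and print the distinct network
# interface names listed on the "NET/Socket : Using ..." lines.
# Assumes entries of the form "[0]campus:131.180.180.7<0>".
nccl_ifnames() {
    grep 'NET/Socket : Using' \
        | grep -o '[]][A-Za-z0-9_.-]*:' \
        | tr -d ']:' \
        | sort -u
}

# Usage sketch (train.log is a placeholder file name):
#   nccl_ifnames < train.log
```

Any name it prints is a candidate value for NCCL_SOCKET_IFNAME; which one is actually routable between the nodes still has to be checked on the cluster.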

gpu03:90711:90711 [0] NCCL INFO Bootstrap : Using campus:131.180.180.7<0>

gpu03:90711:90711 [0] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

gpu03:90711:90711 [0] NCCL INFO NET/IB : No device found.

gpu03:90711:90711 [0] NCCL INFO NET/Socket : Using [0]campus:131.180.180.7<0>

gpu03:90711:90711 [0] NCCL INFO Using network Socket

NCCL version 2.10.3+cuda11.5

gpu04:5338:5338 [1] NCCL INFO Bootstrap : Using campus:131.180.180.8<0>

gpu04:5338:5338 [1] NCCL INFO NET/Plugin : No plugin found (libnccl-net.so), using internal implementation

gpu04:5338:5338 [1] NCCL INFO NET/IB : No device found.

gpu04:5338:5338 [1] NCCL INFO NET/Socket : Using [0]campus:131.180.180.8<0>

gpu04:5338:5338 [1] NCCL INFO Using network Socket

gpu03:90711:91000 [0] NCCL INFO Channel 00/02 : 0 1

gpu03:90711:91000 [0] NCCL INFO Channel 01/02 : 0 1

gpu03:90711:91000 [0] NCCL INFO Trees [0] 1/-1/-1->0->-1 [1] -1/-1/-1->0->1

gpu03:90711:91000 [0] NCCL INFO Setting affinity for GPU 0 to ff,ffff0000,00ffffff

gpu04:5338:5628 [1] NCCL INFO Trees [0] -1/-1/-1->1->0 [1] 0/-1/-1->1->-1

gpu04:5338:5628 [1] NCCL INFO Setting affinity for GPU 1 to 03ffff00,000003ff,ff000000

gpu03:90711:91000 [0] NCCL INFO Channel 00 : 1[81000] -> 0[21000] [receive] via NET/Socket/0

gpu04:5338:5628 [1] NCCL INFO Channel 00 : 0[21000] -> 1[81000] [receive] via NET/Socket/0

gpu03:90711:91000 [0] NCCL INFO Channel 01 : 1[81000] -> 0[21000] [receive] via NET/Socket/0

gpu04:5338:5628 [1] NCCL INFO Channel 01 : 0[21000] -> 1[81000] [receive] via NET/Socket/0

gpu04:5338:5628 [1] NCCL INFO Channel 00 : 1[81000] -> 0[21000] [send] via NET/Socket/0

gpu03:90711:91000 [0] NCCL INFO Channel 00 : 0[21000] -> 1[81000] [send] via NET/Socket/0

gpu04:5338:5628 [1] NCCL INFO Channel 01 : 1[81000] -> 0[21000] [send] via NET/Socket/0

gpu03:90711:91000 [0] NCCL INFO Channel 01 : 0[21000] -> 1[81000] [send] via NET/Socket/0

gpu04:5338:5628 [1] NCCL INFO Connected all rings

gpu04:5338:5628 [1] NCCL INFO Connected all trees

gpu04:5338:5628 [1] NCCL INFO threadThresholds 8/8/64 | 16/8/64 | 8/8/512

gpu04:5338:5628 [1] NCCL INFO 2 coll channels, 2 p2p channels, 1 p2p channels per peer

gpu03:90711:91000 [0] NCCL INFO Connected all rings

gpu03:90711:91000 [0] NCCL INFO Connected all trees

gpu03:90711:91000 [0] NCCL INFO threadThresholds 8/8/64 | 16/8/64 | 8/8/512

gpu03:90711:91000 [0] NCCL INFO 2 coll channels, 2 p2p channels, 1 p2p channels per peer

gpu04:5338:5628 [1] NCCL INFO comm 0x7f2d48002010 rank 1 nranks 2 cudaDev 1 busId 81000 - Init COMPLETE

gpu03:90711:91000 [0] NCCL INFO comm 0x7f6e34002010 rank 0 nranks 2 cudaDev 0 busId 21000 - Init COMPLETE

gpu03:90711:90711 [0] NCCL INFO Launch mode Parallel

2022-07-07 03:59:29,850 - mmdet - INFO - Start running, host: jianfengcui@gpu03.hpc.tudelft.nl, work_dir: /tudelft.net/staff-umbrella/CVMdata/Jianfeng/projects/mmdetection3d-hpc/work_dirs/Thu-Jul07-0336
