Setting up a fully distributed HBase 2.2.0 cluster

Reference: https://blog.csdn.net/qq_26848943/article/details/81011709

Operating system: CentOS 7

1. The cluster layout is as follows:

| IP | Hostname | Role |
| --- | --- | --- |
| 192.168.1.250 | node1.jacky.com | master |
| 192.168.1.251 | node2.jacky.com | slave |
| 192.168.1.252 | node3.jacky.com | slave |

First, disable the firewall:

# systemctl stop iptables                # stop the iptables firewall

# systemctl disable iptables             # keep iptables from starting at boot

# systemctl stop firewalld.service       # stop the firewalld firewall

# systemctl disable firewalld.service    # keep firewalld from starting at boot

Installation files:

[root@localhost sbin]# ls /opt

hadoop-2.9.2.tar.gz

jdk-8u171-linux-x64.tar.gz

hbase-2.2.0-bin.tar.gz

zookeeper-3.5.6.tar.gz

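Install a time synchronization tool on all 3 machines and sync the clocks. HBase runs into problems when the clocks on different machines drift too far apart.

# yum -y install ntp ntpdate

# systemctl enable ntpd

# ntpdate cn.pool.ntp.org

# timedatectl set-timezone Asia/Shanghai

# hwclock --systohc

# timedatectl set-local-rtc 1

# crontab -e

In the crontab editor, add the following entry so the clock is resynced every 10 minutes:

*/10 * * * * /usr/sbin/ntpdate -u pool.ntp.org >/dev/null 2>&1

# systemctl restart crond.service

# date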

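Install the Java environment on each of the 3 machines.

The Java installation path is /usr/local/java/jdk1.8.0_171, so the archive has to be extracted into /usr/local/java:

# mkdir -p /usr/local/java

# tar zxvf /opt/jdk-8u171-linux-x64.tar.gz -C /usr/local/java

Then set the environment variables (for example in /etc/profile):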

export JAVA_HOME=/usr/local/java/jdk1.8.0_171

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

On all 3 machines, add the hostname-to-IP mappings:

# vi /etc/hosts

192.168.1.250 node1.jacky.com

192.168.1.251 node2.jacky.com

192.168.1.252 node3.jacky.com
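Because the same three entries have to be added on every machine, a small helper can make the step repeatable. The following is only a sketch: the function name `add_host_entry` is hypothetical, and it assumes a plain hosts file with one `ip hostname...` mapping per line. It appends an entry only when the hostname is not already mapped, so running it twice does not create duplicates.

```shell
#!/usr/bin/env bash
# add_host_entry <hosts_file> <ip> <hostname>   (hypothetical helper name)
# Appends "<ip> <hostname>" unless <hostname> already appears as a
# hostname field on a non-comment line of the file.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    if [ -f "$hosts_file" ] && awk -v n="$name" '
            /^[[:space:]]*#/ { next }                       # skip comment lines
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        return 0                                            # already mapped
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}
```

For example, `add_host_entry /etc/hosts 192.168.1.250 node1.jacky.com` could be run as root on each node, once per cluster member.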

Edit the hostname file (/etc/hostname on CentOS 7) on each of the 3 machines.

On 192.168.1.250, set the hostname to

node1.jacky.com

On 192.168.1.251, set the hostname to

node2.jacky.com

On 192.168.1.252, set the hostname to

node3.jacky.com

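Configure 192.168.1.250 so that it can log in to 192.168.1.251 and 192.168.1.252 without a password.

Steps:

- Generate the public and private keys

- Copy the public key to a file named authorized_keys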

[root@node1 ~]# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:pvR6iWfppGPSFZlAqP35/6DEtGTvaMY64otThWoBTuk root@localhost.localdomain
The key's randomart image is:
+---[RSA 2048]----+
| . o. |
|.o . . |
|+. o . . o |
| Eo o . + |
| o o..S. |
| o ..oO.o |
| . . ..=*oo |
| ..o *=@+ . |
| .oo=+@+.o.. |
+----[SHA256]-----+

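[root@node1 ~]# cd /root/.ssh

[root@node1 .ssh]# cp id_rsa.pub authorized_keys

[root@node1 .ssh]# chmod 600 authorized_keys    # restrict the permissions; with its default StrictModes setting, sshd ignores the file if it is writable by group or others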

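Notes:

authorized_keys: holds the public keys that are allowed to log in without a password; the public keys of multiple machines are recorded in this file

id_rsa: the generated private key file

id_rsa.pub: the generated public key file

Run on 192.168.1.250:

[root@node1 .ssh]# ssh-copy-id -i root@node1.jacky.com    # authorize passwordless ssh login to itself

[root@node1 .ssh]# ssh-copy-id -i root@0.0.0.0

[root@node1 .ssh]# ssh-copy-id -i root@node2.jacky.com

[root@node1 .ssh]# ssh-copy-id -i root@node3.jacky.com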
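Passwordless login often fails because of file permissions rather than the keys themselves: with the default `StrictModes yes`, sshd does not accept an authorized_keys file that other users can write to. The sketch below shows one way to tighten the permissions and to test a login non-interactively. Both function names are hypothetical; `BatchMode` and `ConnectTimeout` are standard OpenSSH client options.

```shell
#!/usr/bin/env bash
# secure_ssh_dir <ssh_dir>   (hypothetical helper name)
# Sets 700 on the directory and 600 on the key files that exist in it.
secure_ssh_dir() {
    local ssh_dir="$1" f
    chmod 700 "$ssh_dir" || return 1
    for f in authorized_keys id_rsa; do
        if [ -f "$ssh_dir/$f" ]; then
            chmod 600 "$ssh_dir/$f" || return 1
        fi
    done
}

# can_login_without_password <user@host>   (hypothetical helper name)
# BatchMode=yes makes ssh fail instead of prompting for a password,
# so the exit status tells whether key-based login works.
can_login_without_password() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}
```

After `secure_ssh_dir /root/.ssh`, a call such as `can_login_without_password root@node2.jacky.com && echo ok` gives a quick check for each node.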

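Install ZooKeeper first.

ZooKeeper installation tutorial: https://www.cnblogs.com/tochw/p/15395966.html

Next, install Hadoop. Extract the archive and add the Hadoop environment variables to /etc/profile:

# tar -zxvf /opt/hadoop-2.9.2.tar.gz -C /usr/local

# vim /etc/profile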

export HADOOP_HOME=/usr/local/hadoop-2.9.2

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native

# source /etc/profile

Create the HDFS directories:

# cd /usr/local/hadoop-2.9.2

#mkdir hdfs

#mkdir hdfs/data

#mkdir hdfs/name

# mkdir hdfs/tmp

Set JAVA_HOME in hadoop-env.sh and yarn-env.sh:

# cd /usr/local/hadoop-2.9.2/etc/hadoop

# vi hadoop-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8.0_171

# vi yarn-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8.0_171

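Edit the slaves file to list the master's worker nodes. With this in place, running start-all.sh from the sbin directory on the master is enough to start the DataNode and NodeManager on every slave.

# vi slaves

node2.jacky.com

node3.jacky.com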

Edit the Hadoop core configuration file core-site.xml:

# vi /usr/local/hadoop-2.9.2/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node1.jacky.com:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop-2.9.2/hdfs/tmp</value>
  </property>
</configuration>
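Since the NameNode address in core-site.xml is reused later by other settings, it can help to generate the file from a few variables instead of typing it by hand. This is a minimal sketch under that assumption; the function name `write_core_site` and its argument order are hypothetical, and the generated content mirrors the two properties shown above.

```shell
#!/usr/bin/env bash
# write_core_site <out_file> <namenode_host> <port> <tmp_dir>   (hypothetical helper)
# Writes a core-site.xml containing fs.defaultFS and hadoop.tmp.dir.
write_core_site() {
    local out_file="$1" namenode_host="$2" port="$3" tmp_dir="$4"
    cat > "$out_file" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://${namenode_host}:${port}</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>${tmp_dir}</value>
  </property>
</configuration>
EOF
}
```

For this cluster the call would be `write_core_site /usr/local/hadoop-2.9.2/etc/hadoop/core-site.xml node1.jacky.com 9000 /usr/local/hadoop-2.9.2/hdfs/tmp`.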

Edit the hdfs-site.xml file:

# vi /usr/local/hadoop-2.9.2/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/hadoop-2.9.2/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/hadoop-2.9.2/hdfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>

Edit the mapred-site.xml file:

If mapred-site.xml does not exist yet, copy it from mapred-site.xml.template in the same directory first.

# vi /usr/local/hadoop-2.9.2/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>node1.jacky.com:9001</value>
  </property>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Edit the yarn-site.xml file:

# vi /usr/local/hadoop-2.9.2/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>node1.jacky.com</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
</configuration>

Then copy the configuration from the master to the node2.jacky.com and node3.jacky.com nodes.

Run on 192.168.1.250:

# scp -r /usr/local/hadoop-2.9.2 root@node2.jacky.com:/usr/local/

# scp -r /usr/local/hadoop-2.9.2 root@node3.jacky.com:/usr/local/

Format the NameNode:

# /usr/local/hadoop-2.9.2/bin/hdfs namenode -format

This creates a current folder under /usr/local/hadoop-2.9.2/hdfs/name on the master.

Start Hadoop on the master:

# /usr/local/hadoop-2.9.2/sbin/start-all.sh

Use the jps command to check whether Hadoop has come up on all three machines.

192.168.1.250

[root@node1 sbin]# jps

7969 QuorumPeerMain

25113 NameNode

25483 ResourceManager

73116 Jps

25311 SecondaryNameNode

192.168.1.251

[root@node2 jacky]# jps

43986 Jps

60437 DataNode

12855 QuorumPeerMain

60621 NodeManager

192.168.1.252

[root@node2 jacky]# jps

43986 Jps

60437 DataNode

12855 QuorumPeerMain

60621 NodeManager
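Reading the jps listings by eye gets tedious with more nodes, so the check can be scripted. The sketch below assumes jps prints one `<pid> <main class>` pair per line, as in the listings above; the function name `missing_daemons` is hypothetical. It prints the name of every expected daemon that is absent from the given jps output.

```shell
#!/usr/bin/env bash
# missing_daemons "<jps output>" <daemon>...   (hypothetical helper name)
# Prints each expected daemon that does not appear in the jps output.
missing_daemons() {
    local jps_output="$1" daemon
    shift
    for daemon in "$@"; do
        # Compare the whole second field, so NameNode does not
        # accidentally match SecondaryNameNode.
        if ! printf '%s\n' "$jps_output" \
                | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$daemon"
        fi
    done
}
```

On the master, `missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager` should print nothing when everything is up; on a slave the expected names would be DataNode and NodeManager.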

5.4、界面查看验证

-

http://192.168.1.250:8088/cluster/nodes

-

![]()

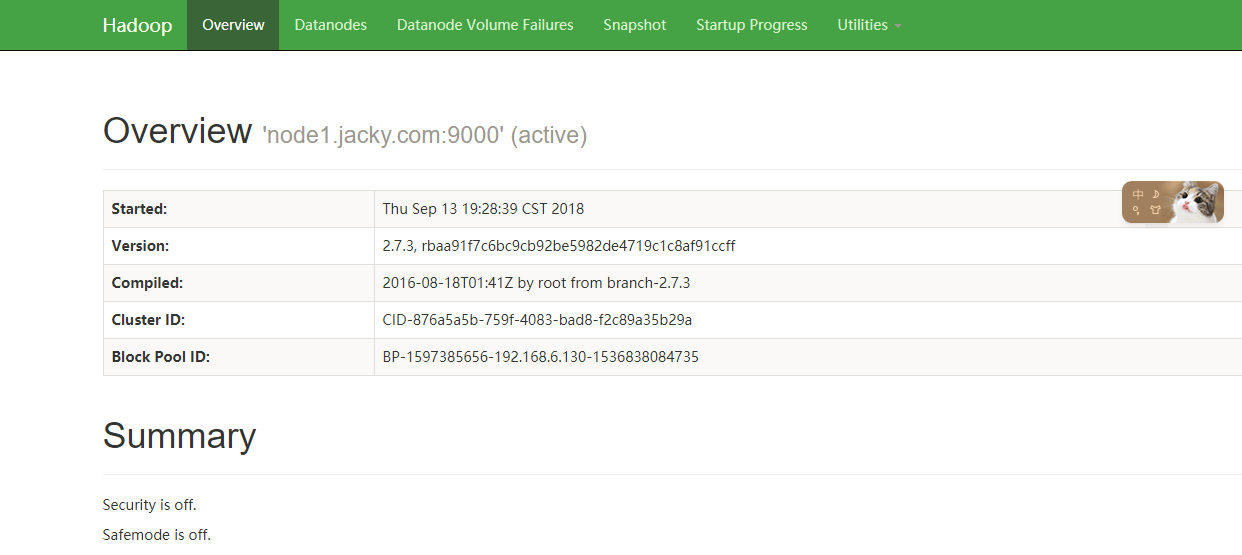

查看dataNode是否启动

http://192.168.1.250:50070/

-

![]()

好了,到这里,hadoop-2.9.2完全分布式集群搭建成功了,接下来我们将进入hbase搭建

# tar zxvf /opt/hbase-2.2.0-bin.tar.gz -C /usr/local

# mkdir /usr/local/hbase-2.2.0/pids

# chmod -R 777 /usr/local/hbase-2.2.0/pids

# vi /usr/local/hbase-2.2.0/conf/hbase-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8.0_171

export HBASE_LOG_DIR=${HBASE_HOME}/logs

export HBASE_CLASSPATH=/usr/local/hadoop-2.9.2/etc/hadoop

export HBASE_MANAGES_ZK=false

export HBASE_PID_DIR=/usr/local/hbase-2.2.0/pids

# vi /usr/local/hbase-2.2.0/conf/hbase-site.xml

In distributed mode, make sure hbase.unsafe.stream.capability.enforce is set to false.

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://node1.jacky.com:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.master.info.port</name>
    <value>16010</value>
  </property>
  <property>
    <name>hbase.regionserver.info.port</name>
    <value>16030</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.clientPort</name>
    <value>2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>node1.jacky.com,node2.jacky.com,node3.jacky.com</value>
  </property>
  <property>
    <name>zookeeper.session.timeout</name>
    <value>60000000</value>
  </property>
  <property>
    <name>dfs.support.append</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.coprocessor.user.region.classes</name>
    <value>org.apache.hadoop.hbase.coprocessor.AggregateImplementation</value>
  </property>
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
</configuration>
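The hbase.rootdir value has to point at the same NameNode address as fs.defaultFS in core-site.xml, and a mismatch in host or port is an easy mistake to make. The following sketch checks that with plain text tools. It is not a real XML parser: it assumes each `<name>` is directly followed by its `<value>` and that values contain no `<` character. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# get_site_property <site_xml> <property_name>   (hypothetical helper name)
# Prints the <value> that follows <name>property_name</name>.
# Note: dots in the name act as regex wildcards, which is acceptable here.
get_site_property() {
    local file="$1" name="$2"
    tr -d '\n' < "$file" \
        | grep -o "<name>${name}</name>[[:space:]]*<value>[^<]*</value>" \
        | sed -e 's/.*<value>//' -e 's/<\/value>.*//' \
        | head -n 1
}

# check_rootdir_matches <core-site.xml> <hbase-site.xml>   (hypothetical helper name)
# Succeeds when hbase.rootdir is a path under fs.defaultFS.
check_rootdir_matches() {
    local core_site="$1" hbase_site="$2" default_fs rootdir
    default_fs=$(get_site_property "$core_site" fs.defaultFS)
    rootdir=$(get_site_property "$hbase_site" hbase.rootdir)
    if [ -z "$default_fs" ]; then
        echo "fs.defaultFS not found in $core_site" >&2
        return 1
    fi
    case "$rootdir" in
        "$default_fs"/*) return 0 ;;
        *) echo "hbase.rootdir ($rootdir) is not under fs.defaultFS ($default_fs)" >&2
           return 1 ;;
    esac
}
```

With the files from this walkthrough, `check_rootdir_matches /usr/local/hadoop-2.9.2/etc/hadoop/core-site.xml /usr/local/hbase-2.2.0/conf/hbase-site.xml` should return success.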

# vi /usr/local/hbase-2.2.0/conf/regionservers

node2.jacky.com

node3.jacky.com

Deploy HBase to the other nodes:

# scp -r /usr/local/hbase-2.2.0 node2.jacky.com:/usr/local

# scp -r /usr/local/hbase-2.2.0 node3.jacky.com:/usr/local

Create symlinks to the Hadoop configuration files. Run these two commands on all 3 machines:

# ln -s /usr/local/hadoop-2.9.2/etc/hadoop/core-site.xml /usr/local/hbase-2.2.0/conf/core-site.xml

# ln -s /usr/local/hadoop-2.9.2/etc/hadoop/hdfs-site.xml /usr/local/hbase-2.2.0/conf/hdfs-site.xml

Start HBase:

# /usr/local/hbase-2.2.0/bin/start-hbase.sh

To stop HBase, run /usr/local/hbase-2.2.0/bin/stop-hbase.sh.

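If startup reports duplicate log4j bindings, removing one of the duplicate jars resolves it. The command below removes the copy under the Hadoop directory:

# rm -f /usr/local/hadoop-2.9.2/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar

HBase information can also be viewed in a browser at http://192.168.1.250:16010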

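Try a few commands in the HBase shell:

# hbase shell

> list

> status

If these two commands fail, try connecting with the ZooKeeper client and deleting the /hbase path:

# /usr/local/zookeeper-3.5.6/bin/zkCli.sh -server 192.168.1.250:2181,192.168.1.251:2181,192.168.1.252:2181

Then run the following ZooKeeper commands (in ZooKeeper 3.5.x, rmr is deprecated in favor of deleteall):

ls /

rmr /hbase

Shutdown order: stop HBase first, then Hadoop, then ZooKeeper.

Startup runs in the reverse order.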

Stop the HBase cluster

# /usr/local/hbase-2.2.0/bin/hbase-daemon.sh stop thrift

Run on node1:

# /usr/local/hbase-2.2.0/bin/stop-hbase.sh

# /usr/local/hadoop-2.9.2/sbin/stop-all.sh

ZooKeeper has to be stopped on every machine where it is installed, using the following command:

# /usr/local/zookeeper-3.5.6/bin/zkServer.sh stop

Start the HBase cluster

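ZooKeeper has to be started on every machine where it is installed, using the following command:

# /usr/local/zookeeper-3.5.6/bin/zkServer.sh start

Run on node1:

# /usr/local/hadoop-2.9.2/sbin/start-all.sh

# /usr/local/hbase-2.2.0/bin/start-hbase.sh

Start the Thrift service so that clients written in languages such as Python can connect and run queries. This has to be run on every machine with HBase installed:

# /usr/local/hbase-2.2.0/bin/hbase-daemon.sh start thrift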
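The start and stop sequences mirror each other, which is easy to get wrong when typing commands by hand. As a rough sketch, the helper below only prints the commands in the right order for review; it does not execute anything. The function name `cluster_plan` and the `CLUSTER_NODES` variable are hypothetical, the ssh prefix for the per-node ZooKeeper commands assumes the passwordless login configured earlier, and the Thrift service is left out for brevity.

```shell
#!/usr/bin/env bash
# Nodes that run ZooKeeper (assumption: all three, matching hbase.zookeeper.quorum).
CLUSTER_NODES="node1.jacky.com node2.jacky.com node3.jacky.com"

# cluster_plan start|stop   (hypothetical helper name)
# Prints the commands, in order, to bring the whole stack up or down.
cluster_plan() {
    local action="$1" node
    case "$action" in
        start)
            # ZooKeeper first, then Hadoop, then HBase
            for node in $CLUSTER_NODES; do
                echo "ssh $node /usr/local/zookeeper-3.5.6/bin/zkServer.sh start"
            done
            echo "/usr/local/hadoop-2.9.2/sbin/start-all.sh"
            echo "/usr/local/hbase-2.2.0/bin/start-hbase.sh"
            ;;
        stop)
            # Reverse order: HBase, then Hadoop, then ZooKeeper
            echo "/usr/local/hbase-2.2.0/bin/stop-hbase.sh"
            echo "/usr/local/hadoop-2.9.2/sbin/stop-all.sh"
            for node in $CLUSTER_NODES; do
                echo "ssh $node /usr/local/zookeeper-3.5.6/bin/zkServer.sh stop"
            done
            ;;
        *)
            echo "usage: cluster_plan start|stop" >&2
            return 2
            ;;
    esac
}
```

Running `cluster_plan stop` on node1 lists the five commands to run, starting with stop-hbase.sh and ending with the ZooKeeper stop on each node.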

Start or stop a single HBase HMaster or RegionServer process (pass either start or stop):

# /usr/local/hbase-2.2.0/bin/hbase-daemon.sh start|stop master

# /usr/local/hbase-2.2.0/bin/hbase-daemon.sh start|stop regionserver

