【八】强化学习之DDPG---PaddlePaddlle【PARL】框架{飞桨}

相关文章:

【一】飞桨paddle【GPU、CPU】安装以及环境配置+python入门教学

代码链接:码云:https://gitee.com/dingding962285595/parl_work ;github:https://github.com/PaddlePaddle/PARL

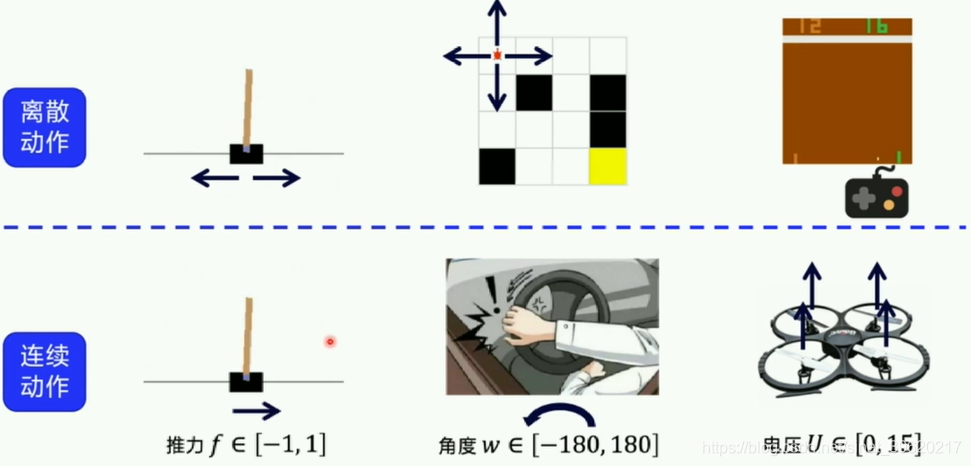

1. 连续动作空间

离散动作&连续动作

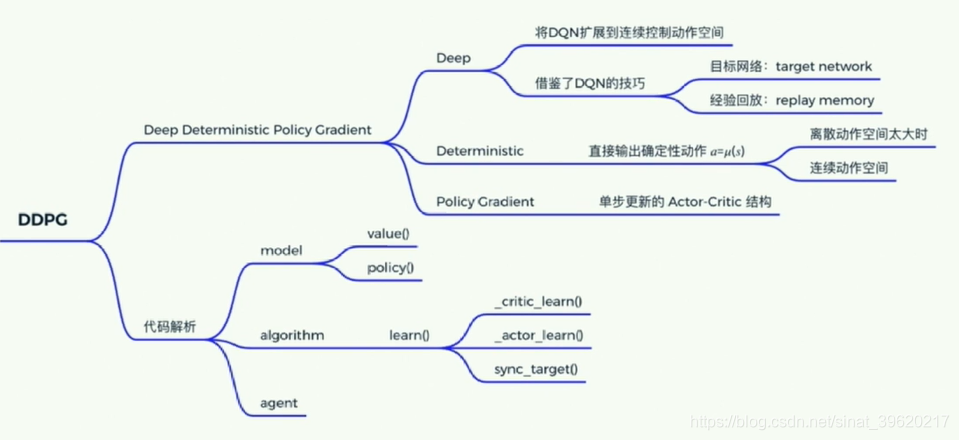

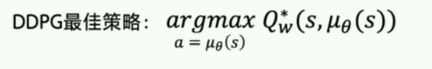

2.DDPG讲解Deep Deterministic Policy Gradient

- deep-神经网络--DNQ扩展

目标网络 target work

经验回放 replay memory

- Deterministic Policy Gradient

·Deterministic 直接输出确定的动作

·Policy Gradient 单步更新的policy网络

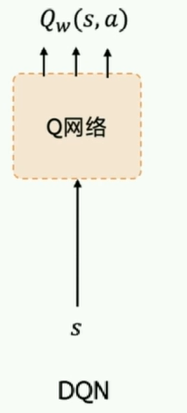

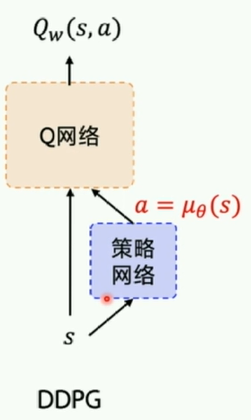

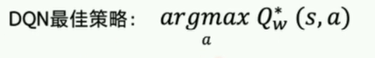

DDPG是DQN的扩展版本,可以扩展到连续控制动作空间

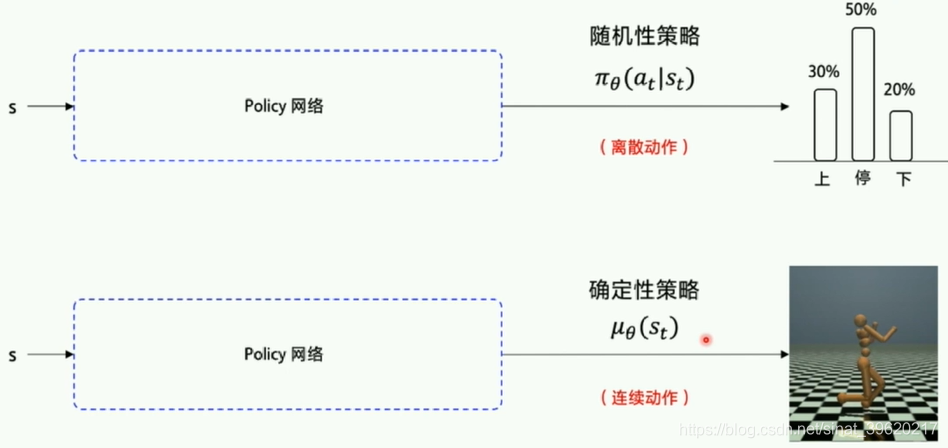

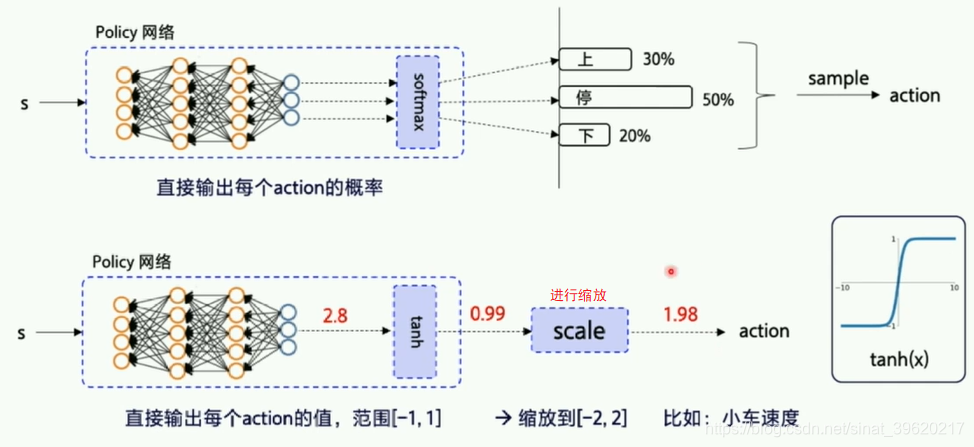

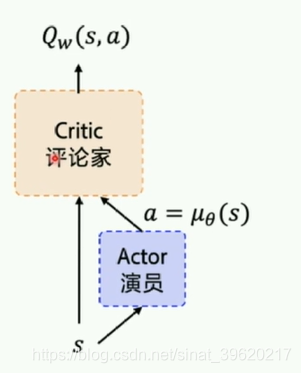

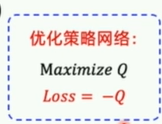

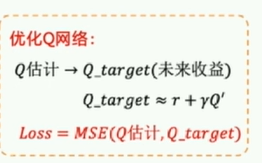

2.1 策略网络:

actor对外输出动作;critic会对每个输出的网络进行评估。刚开始随机参数初始化,然后根据reward不断地反馈。

![]()

![]()

目标网络target network +经验回放ReplayMemory

两个target_Q/P网络的作用是稳定Q网络里的Q_target 复制原网络一段时间不变。

2.2 经验回放ReplayMemory

用到数据:

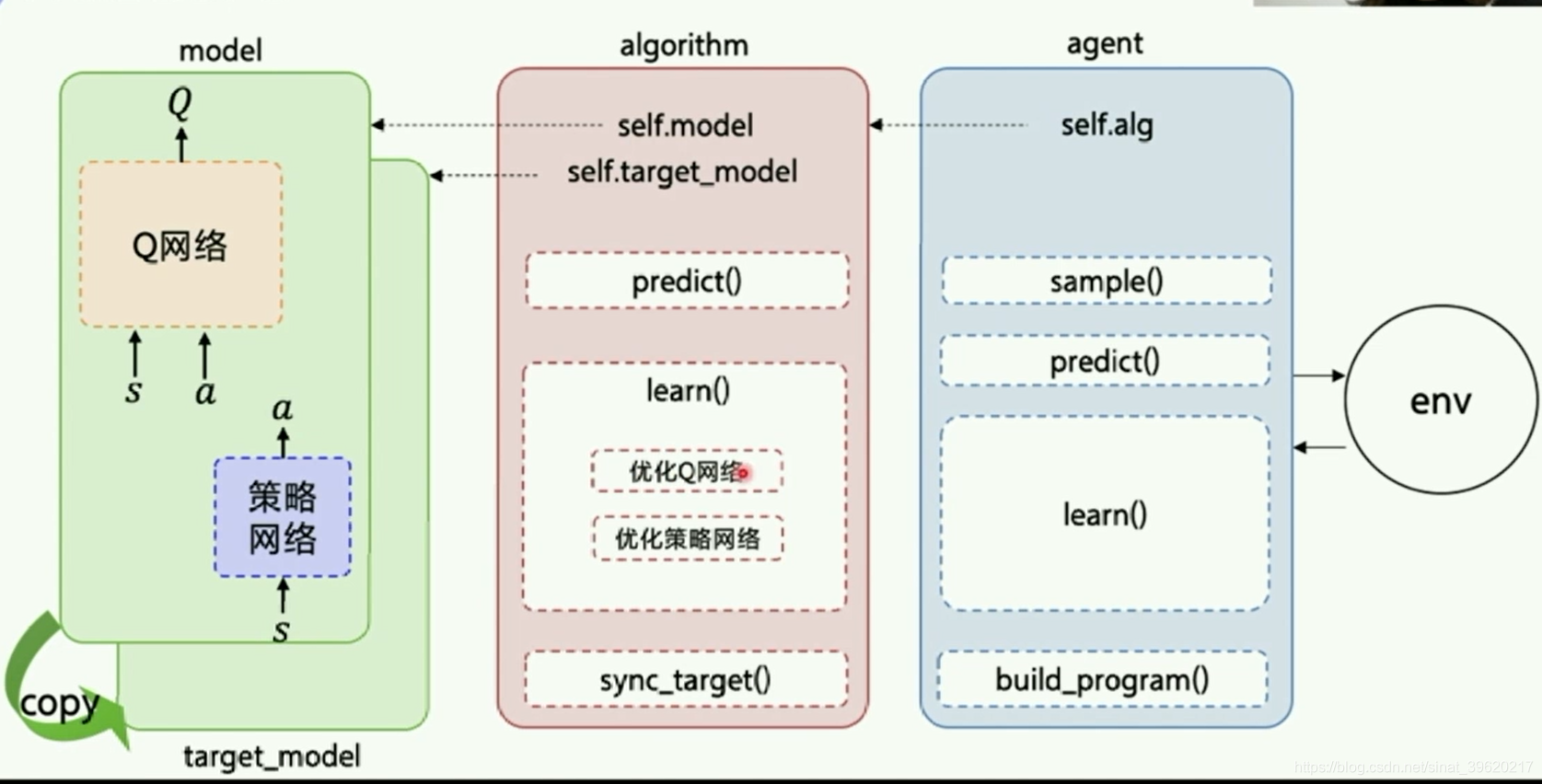

Agent把产生的数据传给algorithm,algorithm根据model的模型结构计算出Loss,使用SGD或者其他优化器不断的优化,PARL这种架构可以很方便的应用在各类深度强化学习问题中。

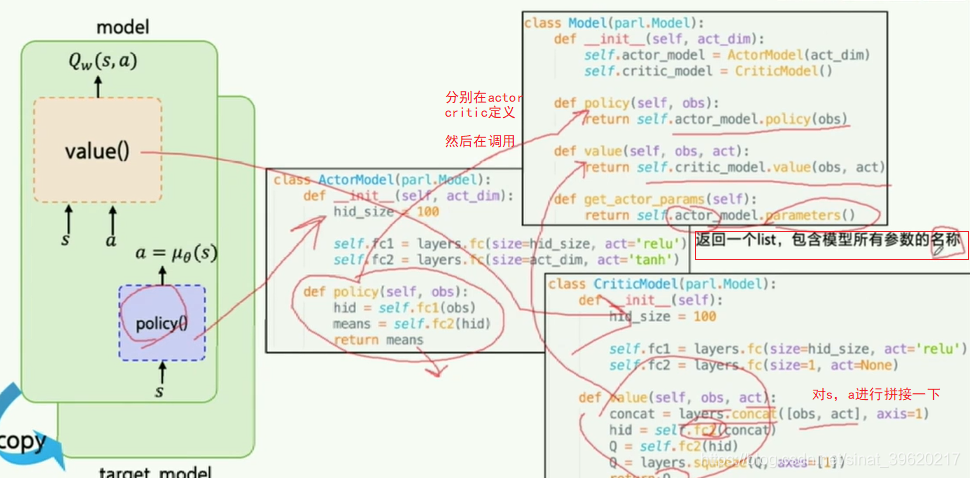

(1)Model

Model用来定义前向(Forward)网络,用户可以自由的定制自己的网络结构

class Model(parl.Model): def __init__(self, act_dim): self.actor_model = ActorModel(act_dim) self.critic_model = CriticModel() def policy(self, obs): return self.actor_model.policy(obs) def value(self, obs, act): return self.critic_model.value(obs, act) def get_actor_params(self): return self.actor_model.parameters()class ActorModel(parl.Model): def __init__(self, act_dim): hid_size = 100 self.fc1 = layers.fc(size=hid_size, act='relu') self.fc2 = layers.fc(size=act_dim, act='tanh') def policy(self, obs): hid = self.fc1(obs) means = self.fc2(hid) return meansclass CriticModel(parl.Model): def __init__(self): hid_size = 100 self.fc1 = layers.fc(size=hid_size, act='relu') self.fc2 = layers.fc(size=1, act=None) def value(self, obs, act): concat = layers.concat([obs, act], axis=1) hid = self.fc1(concat) Q = self.fc2(hid) Q = layers.squeeze(Q, axes=[1]) return Q

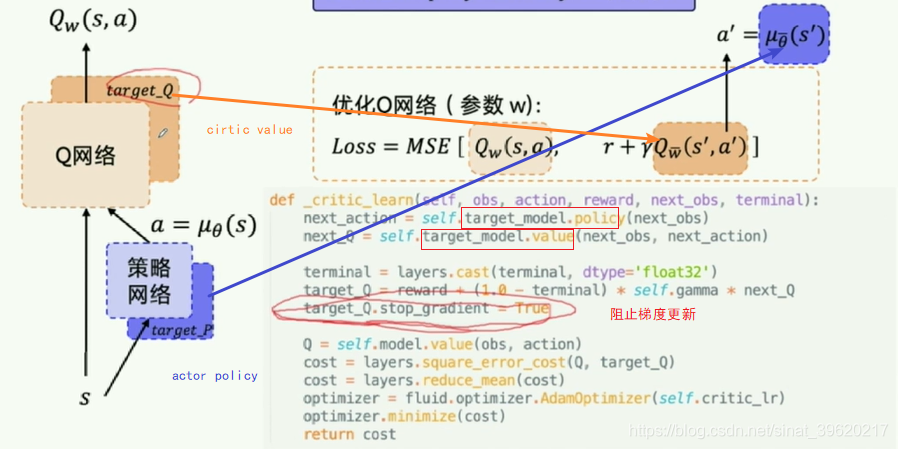

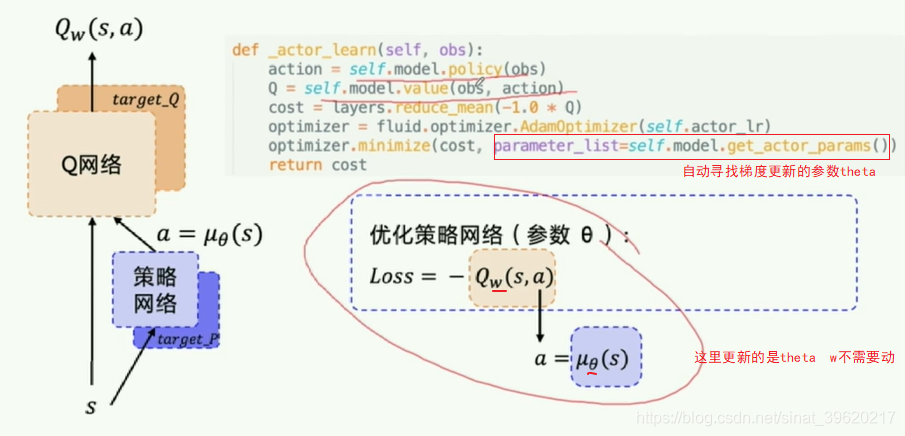

(2)Algorithm

Algorithm定义了具体的算法来更新前向网络(Model),也就是通过定义损失函数来更新Model,和算法相关的计算都放在algorithm中。

def _critic_learn(self, obs, action, reward, next_obs, terminal): next_action = self.target_model.policy(next_obs) next_Q = self.target_model.value(next_obs, next_action) terminal = layers.cast(terminal, dtype='float32') target_Q = reward + (1.0 - terminal) * self.gamma * next_Q target_Q.stop_gradient = True Q = self.model.value(obs, action) cost = layers.square_error_cost(Q, target_Q) cost = layers.reduce_mean(cost) optimizer = fluid.optimizer.AdamOptimizer(self.critic_lr) optimizer.minimize(cost) return cost

def _actor_learn(self, obs): action = self.model.policy(obs) Q = self.model.value(obs, action) cost = layers.reduce_mean(-1.0 * Q) optimizer = fluid.optimizer.AdamOptimizer(self.actor_lr) optimizer.minimize(cost, parameter_list=self.model.get_actor_params()) return cost

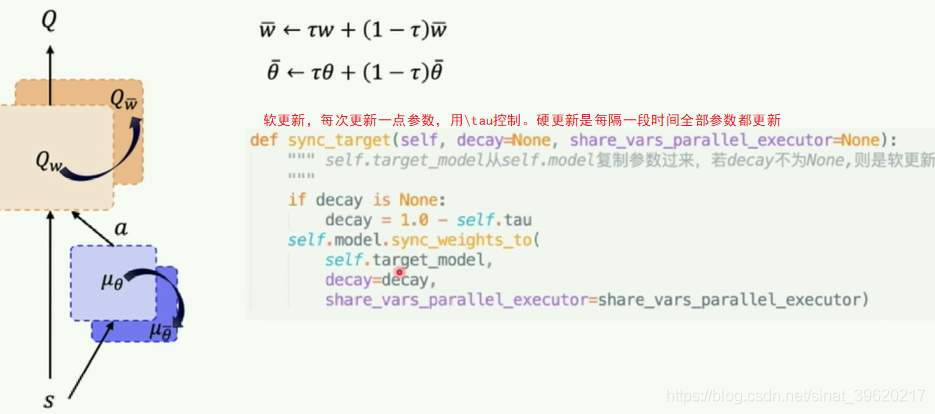

软更新:每次更新一点参数,用\tau控制,按比例更新

硬更新:是每隔一段时间全部参数都更新

def sync_target(self, decay=None, share_vars_parallel_executor=None): """ self.target_model从self.model复制参数过来,若decay不为None,则是软更新 """ if decay is None: decay = 1.0 - self.tau self.model.sync_weights_to( self.target_model, decay=decay, share_vars_parallel_executor=share_vars_parallel_executor)

(3)Agent

Agent负责算法与环境的交互,在交互过程中把生成的数据提供给Algorithm来更新模型(Model),数据的预处理流程也一般定义在这里。class Agent(parl.Agent): def __init__(self, algorithm, obs_dim, act_dim): assert isinstance(obs_dim, int) assert isinstance(act_dim, int) self.obs_dim = obs_dim self.act_dim = act_dim super(Agent, self).__init__(algorithm) # 注意:最开始先同步self.model和self.target_model的参数. self.alg.sync_target(decay=0) def build_program(self): self.pred_program = fluid.Program() self.learn_program = fluid.Program() with fluid.program_guard(self.pred_program): obs = layers.data( name='obs', shape=[self.obs_dim], dtype='float32') self.pred_act = self.alg.predict(obs) with fluid.program_guard(self.learn_program): obs = layers.data( name='obs', shape=[self.obs_dim], dtype='float32') act = layers.data( name='act', shape=[self.act_dim], dtype='float32') reward = layers.data(name='reward', shape=[], dtype='float32') next_obs = layers.data( name='next_obs', shape=[self.obs_dim], dtype='float32') terminal = layers.data(name='terminal', shape=[], dtype='bool') _, self.critic_cost = self.alg.learn(obs, act, reward, next_obs, terminal) def predict(self, obs): obs = np.expand_dims(obs, axis=0) act = self.fluid_executor.run( self.pred_program, feed={'obs': obs}, fetch_list=[self.pred_act])[0] act = np.squeeze(act) return act def learn(self, obs, act, reward, next_obs, terminal): feed = { 'obs': obs, 'act': act, 'reward': reward, 'next_obs': next_obs, 'terminal': terminal } critic_cost = self.fluid_executor.run( self.learn_program, feed=feed, fetch_list=[self.critic_cost])[0] self.alg.sync_target() return critic_cost

(4)env.py

连续控制版本的CartPole环境

- 该环境代码与算法无关,可忽略不看,参考gym

(5)经验池 ReplayMemory

- 与

DQN的replay_mamory.py代码一致class ReplayMemory(object): def __init__(self, max_size): self.buffer = collections.deque(maxlen=max_size) def append(self, exp): self.buffer.append(exp) def sample(self, batch_size): mini_batch = random.sample(self.buffer, batch_size) obs_batch, action_batch, reward_batch, next_obs_batch, done_batch = [], [], [], [], [] for experience in mini_batch: s, a, r, s_p, done = experience obs_batch.append(s) action_batch.append(a) reward_batch.append(r) next_obs_batch.append(s_p) done_batch.append(done) return np.array(obs_batch).astype('float32'), \ np.array(action_batch).astype('float32'), np.array(reward_batch).astype('float32'),\ np.array(next_obs_batch).astype('float32'), np.array(done_batch).astype('float32') def __len__(self): return len(self.buffer)

(6)train

# 训练一个episode def run_episode(agent, env, rpm): obs = env.reset() total_reward = 0 steps = 0 while True: steps += 1 batch_obs = np.expand_dims(obs, axis=0) action = agent.predict(batch_obs.astype('float32')) # 增加探索扰动, 输出限制在 [-1.0, 1.0] 范围内 action = np.clip(np.random.normal(action, NOISE), -1.0, 1.0) next_obs, reward, done, info = env.step(action) action = [action] # 方便存入replaymemory rpm.append((obs, action, REWARD_SCALE * reward, next_obs, done)) if len(rpm) > MEMORY_WARMUP_SIZE and (steps % 5) == 0: (batch_obs, batch_action, batch_reward, batch_next_obs, batch_done) = rpm.sample(BATCH_SIZE) agent.learn(batch_obs, batch_action, batch_reward, batch_next_obs, batch_done) obs = next_obs total_reward += reward if done or steps >= 200: break return total_reward增加扰动保持探索,添加一个高斯噪声。np.clip做一下裁剪,确保在合适的范围内。

总结

浙公网安备 33010602011771号

浙公网安备 33010602011771号