Note: upgrade the kernel

For **Flannel with IPv6**, the Linux kernel requirements fall into the following areas:

---

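## 1. **Kernel version**

* **Recommended kernel: Linux 4.x or later**

  * IPv6 has been supported since much older kernels (2.6.x onward), but current networking features and container networking are more demanding, so a stable 4.x or later kernel is recommended.

  * Newer kernels improve IPv6 performance and stability and fix many bugs present in older versions.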
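A quick way to check a node against the 4.x recommendation is to compare the `uname -r` string with a minimum version. The sketch below is illustrative only; the helper name `kernel_at_least` is hypothetical and the threshold is simply the one recommended in these notes.

```python
import platform
import re


def kernel_at_least(release: str, major: int, minor: int = 0) -> bool:
    """Return True if a `uname -r` style string is >= major.minor."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognised kernel release: {release!r}")
    return (int(match.group(1)), int(match.group(2))) >= (major, minor)


if __name__ == "__main__":
    release = platform.release()  # same string as `uname -r` on Linux
    verdict = "ok" if kernel_at_least(release, 4) else "upgrade recommended"
    print(f"{release}: {verdict}")
```

Only the leading `major.minor` pair is compared, so distribution suffixes such as `-1160.el7.x86_64` are ignored.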

---

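## 2. **Kernel configuration options**

Make sure the kernel was built with the following options enabled:

* `CONFIG_IPV6`

  * IPv6 support is required (usually enabled by default).

* `CONFIG_NETFILTER` and related modules (needed if you filter IPv6 packets with iptables/nftables)

  * For example `CONFIG_NF_CONNTRACK_IPV6`

  * `CONFIG_NF_TABLES_IPV6` (IPv6 support for nftables; ip6tables itself is provided by `CONFIG_IP6_NF_IPTABLES`)

* `CONFIG_BRIDGE` and `CONFIG_BRIDGE_NETFILTER`

  * Flannel's default backend is vxlan (an overlay), and bridge support is required.

  * Setting the sysctl `net.bridge.bridge-nf-call-ip6tables=1` makes bridged IPv6 packets traverse the ip6tables chains.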
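The options above can be checked mechanically against a kernel config file. This is a minimal sketch, assuming the config text is available (on many distributions it lives at `/boot/config-$(uname -r)`, which is an assumption about the host); the function name and the `REQUIRED` tuple are illustrative.

```python
REQUIRED = (
    "CONFIG_IPV6",
    "CONFIG_NETFILTER",
    "CONFIG_BRIDGE",
    "CONFIG_BRIDGE_NETFILTER",
)


def missing_options(config_text: str, required=REQUIRED) -> list:
    """List required options that are neither built in (=y) nor modules (=m)."""
    enabled = set()
    for line in config_text.splitlines():
        name, sep, value = line.strip().partition("=")
        if sep and value in ("y", "m"):
            enabled.add(name)
    return [opt for opt in required if opt not in enabled]


# Hypothetical config excerpt used only to exercise the function.
sample = """\
CONFIG_IPV6=y
CONFIG_NETFILTER=y
CONFIG_BRIDGE=m
# CONFIG_BRIDGE_NETFILTER is not set
"""
print(missing_options(sample))  # ['CONFIG_BRIDGE_NETFILTER']
```

Options built as modules (`=m`) count as present here, although the module still has to be loaded at runtime.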

---

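## 3. **Kernel network parameters**

The following sysctl parameters must be set correctly for IPv6 networking to work:

```bash
net.ipv6.conf.all.forwarding = 1      # enable IPv6 forwarding (usually required on K8s nodes)
net.ipv6.conf.all.disable_ipv6 = 0    # make sure IPv6 is not disabled
net.ipv6.conf.default.accept_ra = 1   # accept router advertisements for autoconfiguration
net.ipv6.conf.all.accept_ra = 1       # note: with forwarding = 1 the kernel ignores RAs unless accept_ra = 2
```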
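To verify the running values rather than the configured ones, each sysctl key can be read back from `/proc/sys`. The sketch below assumes a Linux host; `EXPECTED`, `sysctl_path`, and `mismatches` are illustrative names, and the simple dot-to-slash mapping does not handle interface names that themselves contain dots.

```python
from pathlib import Path

EXPECTED = {
    "net.ipv6.conf.all.forwarding": "1",
    "net.ipv6.conf.all.disable_ipv6": "0",
}


def sysctl_path(key: str) -> Path:
    """Map a sysctl key such as net.ipv6.conf.all.forwarding to its /proc/sys file."""
    return Path("/proc/sys").joinpath(*key.split("."))


def mismatches(expected: dict, read=lambda p: p.read_text().strip()) -> dict:
    """Return {key: (wanted, actual)} for each differing value; actual is None if unreadable."""
    result = {}
    for key, wanted in expected.items():
        try:
            actual = read(sysctl_path(key))
        except OSError:
            actual = None
        if actual != wanted:
            result[key] = (wanted, actual)
    return result


if __name__ == "__main__":
    for key, (wanted, actual) in mismatches(EXPECTED).items():
        print(f"{key}: want {wanted}, got {actual}")
```

The `read` parameter exists only so the comparison can be exercised without touching the real `/proc/sys`.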

---

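## 4. **Container network support**

Flannel itself is a container network plugin (CNI). It supports IPv6, but the configuration is fairly involved:

* **Flannel's native IPv6 support is limited**; support in mainstream releases is still maturing.

* IPv6 works better with Flannel's **vxlan backend**, but you should still confirm that your version supports it.

* **Dual-stack (IPv4+IPv6) support** requires Flannel and Kubernetes to be configured consistently, and the kernel network stack must support dual-stack.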
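For orientation, an IPv6-only Flannel network configuration with the vxlan backend might be rendered as follows. This is a sketch under assumptions: the key names (`EnableIPv4`, `EnableIPv6`, `IPv6Network`, `Backend.Type`) follow Flannel's documented configuration as I understand it and should be checked against the deployed version, other keys are omitted, and the helper name is hypothetical.

```python
import ipaddress
import json


def flannel_net_conf(ipv6_network: str, backend: str = "vxlan") -> str:
    """Render an IPv6-only net-conf.json body for the given pod network."""
    ipaddress.IPv6Network(ipv6_network)  # raises ValueError on a malformed prefix
    conf = {
        "EnableIPv4": False,
        "EnableIPv6": True,
        "IPv6Network": ipv6_network,
        "Backend": {"Type": backend},
    }
    return json.dumps(conf, indent=2)


# The prefix is the pod_subnet value used later in these notes.
print(flannel_net_conf("fd42:100:ffff:fffe::/112"))
```

Validating the prefix before rendering catches typos in the subnet early, before the config reaches the cluster.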

---

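## 5. **Summary**

| Requirement | Notes |
| ------------- | ---------------------------- |
| Linux kernel version | 4.x or later preferred |
| Kernel IPv6 support | `CONFIG_IPV6` must be enabled |
| Packet filtering and bridging | Enable the relevant netfilter and bridge options |
| sysctl settings | Enable IPv6 forwarding and related parameters |
| Flannel version and configuration | Confirm IPv6 support (especially the vxlan backend) |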

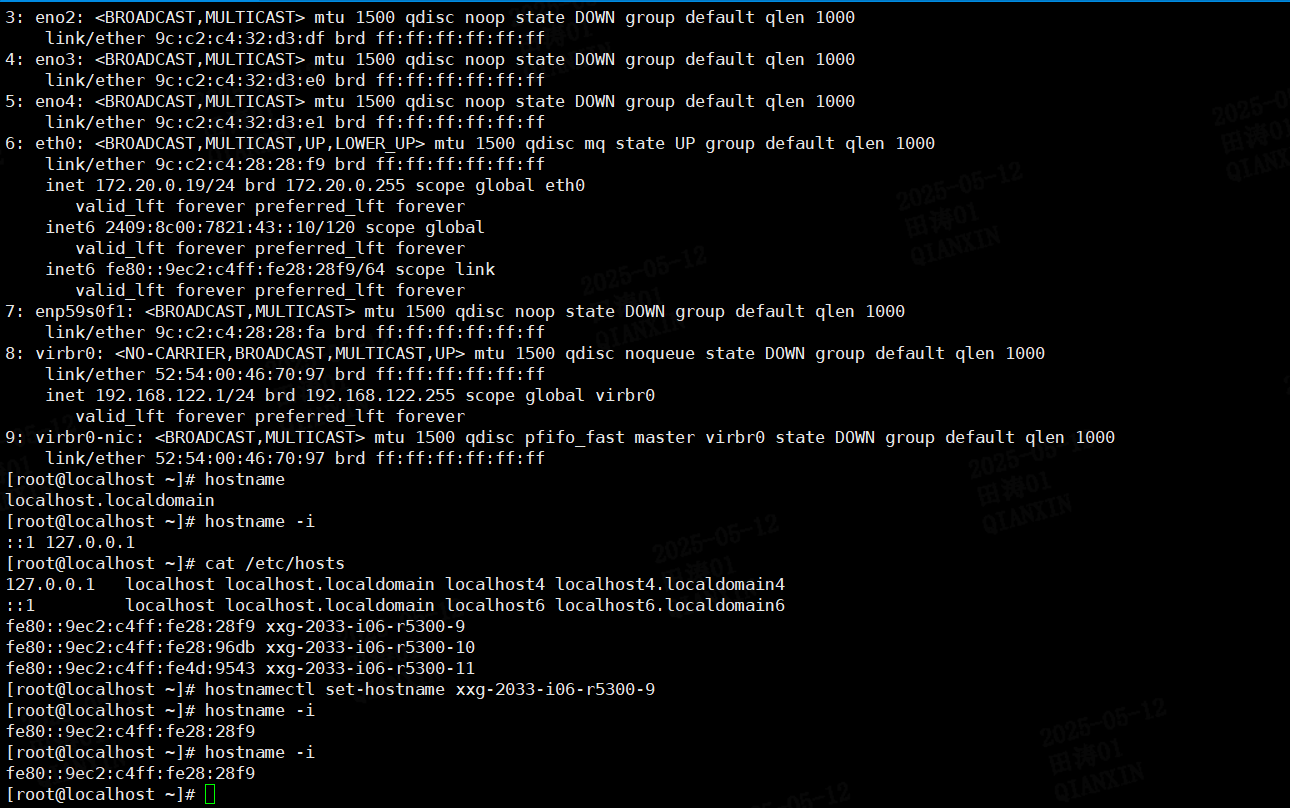

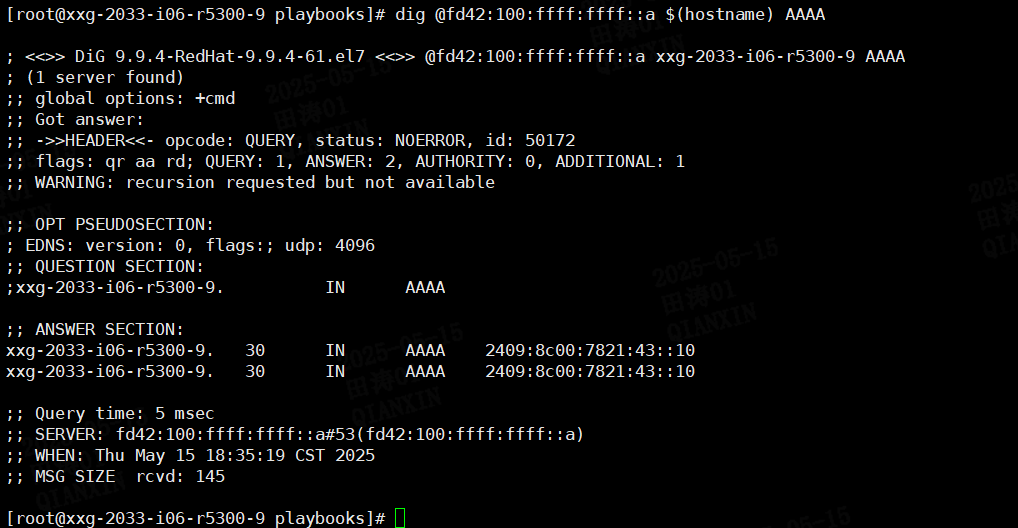

I. Network

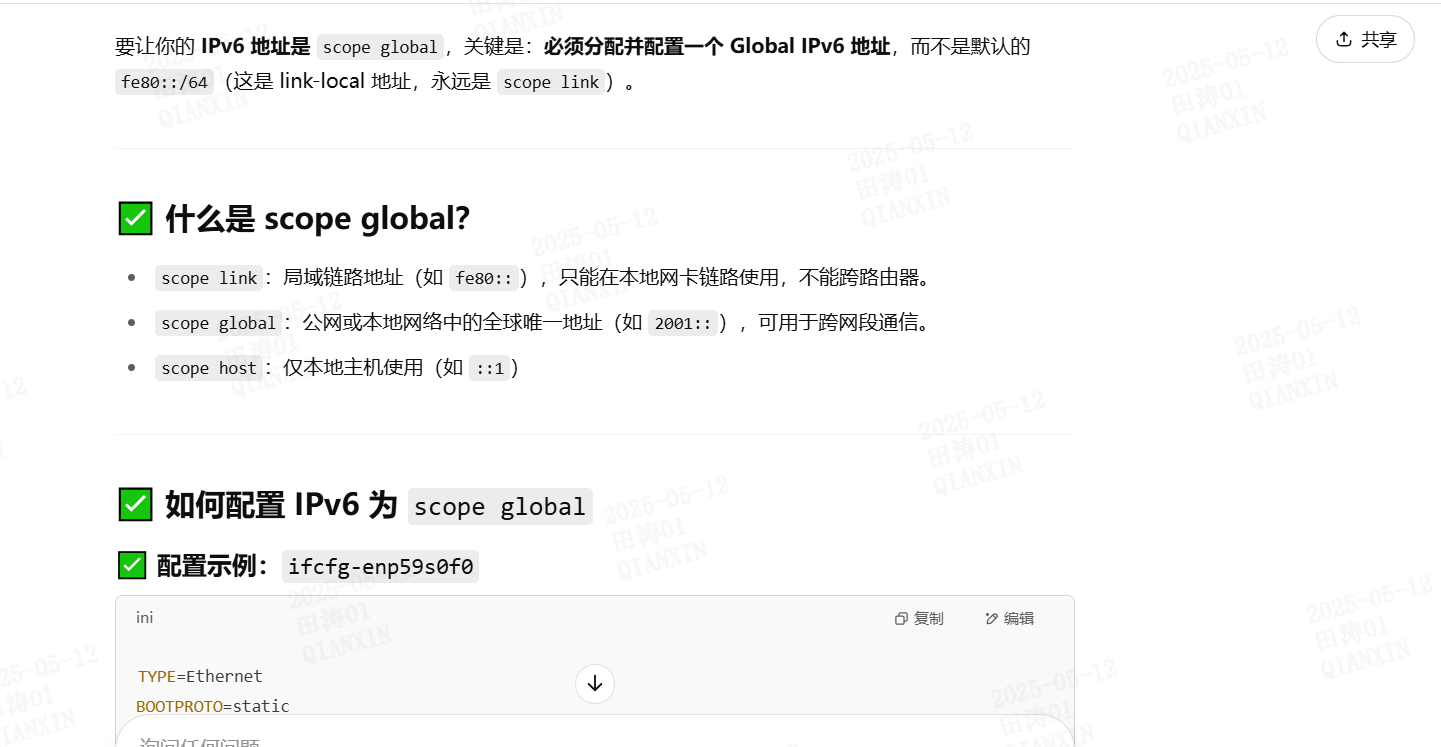

# cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

NAME=eth0

UUID=d699ceb9-ab14-48d9-a6e9-ab6de0af187d

HWADDR=9c:c2:c4:28:28:f9

DEVICE=eth0

ONBOOT=yes

IPADDR=172.20.0.19

NETMASK=255.255.255.0

GATEWAY=172.20.0.254

IPV6INIT=yes

IPV6_AUTOCONF=no

IPV6_PRIVACY=no

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

IPV6ADDR=2409:8c00:7821:43::10/120

IPV6_DEFAULTGW=2409:8c00:7821:43::FF
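With a static address and a narrow prefix such as `/120`, it is worth confirming that the default gateway actually falls inside the configured prefix. A minimal sketch using the `IPV6ADDR` and `IPV6_DEFAULTGW` values from the file above (the function name is illustrative):

```python
import ipaddress


def gateway_on_link(ipv6addr: str, gateway: str) -> bool:
    """True if the gateway lies inside the prefix configured on the interface."""
    iface = ipaddress.IPv6Interface(ipv6addr)
    return ipaddress.IPv6Address(gateway) in iface.network


print(gateway_on_link("2409:8c00:7821:43::10/120", "2409:8c00:7821:43::FF"))  # True
```

A `/120` covers `::00` through `::ff` in the last group, so `::FF` is the highest address in the prefix. A link-local gateway (`fe80::/10`) would also be valid in practice even though this check would report `False` for it.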

# cat /etc/udev/rules.d/70-persistent-net.rules

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="1", ATTR{address}=="9c:c2:c4:28:28:f9", NAME="eth0"

II. Installation

apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
{% if isuseipv6 is defined and isuseipv6|bool %}
localAPIEndpoint:
  advertiseAddress: "{{ (hostvars[inventory_hostname]['ansible_'+interface]['ipv6'] | selectattr('scope', 'equalto', 'global') | list | first).address}}"
  bindPort: 6443
{% endif %}
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  name: {{ inventory_hostname }}
  kubeletExtraArgs:
{% if isuseipv6 is defined and isuseipv6|bool %}
    node-ip: "{{ (hostvars[inventory_hostname]['ansible_'+interface]['ipv6'] | selectattr('scope', 'equalto', 'global') | list | first).address}}"
{% endif %}
    cgroup-driver: "systemd"
    tls-cipher-suites: "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384"
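The Jinja expression used for `advertiseAddress` and `node-ip` picks the first global-scope IPv6 address from the interface facts. The same selection can be sketched in plain Python; the fact structure below is a hypothetical sample shaped like Ansible's `ansible_eth0` fact, and the link-local entry is made up.

```python
def first_global_ipv6(iface_facts: dict) -> str:
    """Mimic `ipv6 | selectattr('scope', 'equalto', 'global') | list | first`."""
    for entry in iface_facts.get("ipv6", []):
        if entry.get("scope") == "global":
            return entry["address"]
    raise LookupError("interface has no global-scope IPv6 address")


# Hypothetical facts; only the global address comes from ifcfg-eth0 above.
facts = {
    "ipv6": [
        {"address": "fe80::1", "prefix": "64", "scope": "link"},
        {"address": "2409:8c00:7821:43::10", "prefix": "120", "scope": "global"},
    ]
}
print(first_global_ipv6(facts))  # 2409:8c00:7821:43::10
```

Filtering on `scope == "global"` matters because every IPv6-enabled interface also carries a link-local address, which is unsuitable as an API server or kubelet node address.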

---

ansible_python_interpreter: /usr/bin/python

app_group: bsafe

app_user: bsafe

config_ns: da.xian.test

da_ssl_crt: QAX-ATS-CA.crt

da_ssl_key: QAX-ATS-CA.key

da_ssl_oid: "2715651939760080161"

dbup_arch: amd64

dbup_version: v1.2.31

edge2_images: harbor.ops.qianxin-inc.cn/skylar/edge2-service:0905.prs_edge_ipv6_62582a3f.5

edge2_package_name: edge2.0905

gossip_images: harbor.ops.qianxin-inc.cn/skylar/gossip-hub:0814.release-1.0.0SP101_e129fd4c.3

gossip_package_name: gossip-hub.0905.v1.0.0

event_dir: /qaxdata/s/services/tmp

event_id: xian

global_vip_list: ""

install_app_path: '{{install_root_path}}/apps'

install_data_path: '{{install_root_path}}/data'

install_etc_path: '{{install_root_path}}/etc'

install_log_path: '{{install_root_path}}/logs'

install_root_path: /qaxdata/s/services

install_run_path: '{{install_root_path}}/run'

install_tmp_path: '{{install_root_path}}/tmp'

interface: eth0

isuseceph: "true"

isusecheckcpumem: "true"

isusechecksize: "true"

isusefesendproxy: "false"

isuseisolationdiskpartition: "false"

isuseqaximageslink: "true"

isuserepotype: "true"

isusersynckvmimages: "true"

isuseunisolationdiskpartition: "false"

isuseunisolationkernel: "false"

isuseiptables: "false"

isuseiptableswhitelist: "false"

isusemongoshared: "true"

islargercephdiskqaxdisk: "true"

isloadapppushimage: "true"

isuseipv6: "true"

license_asset_id: "2731894131868566846"

license_device_id: 25dc922e0b00b04c

prometheus_cnf_dir: '{{install_root_path}}/data/prometheus/prometheus'

prometheus_file_type: yaml

prometheus_sd_cnf: '{{install_root_path}}/prometheus/conf'

promethues_extend_ports: "9090"

registry_domain: registry.domain.com

all:

vars:

security_salt: WmEYhBGG

pg:

hosts:

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

pg_is_master: "true"

vars:

pg_service_port: "5432"

pg_service_user: "postgres"

use_dbup: "true"

etcd:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

etcd_service_port: "2483"

etcd_version: v3.5.4

etcd_with_tls: "false"

ceph:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

ceph_is_master: "false"

ignore_disks: ""

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

ceph_is_master: "false"

ignore_disks: ""

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

ceph_is_master: "false"

ignore_disks: ""

vars:

ceph_env: xian

ceph_service_port: "9345"

ceph_service_user: hzq6FYG-ham9hgb4efw

ceph_vip: ""

dashboard_port: "58443"

data_devices_size: '40G:'

db_devices_size: ""

minio:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

minio_admin_user: admin

minio_service_port: "9345"

minio_service_user: pfnJjCoizCCMQdL2qa

minio_sts_with_etcd: "true"

minio_vip: ""

kafka:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

kafka_jmx_port: "21097"

kafka_service_port: "29092"

kafka_service_user: kafka

kafka_zookeeper_jmx_port: "21099"

kafka_zookeeper_leader_connect_port: "22888"

kafka_zookeeper_leader_election_port: "23888"

kafka_zookeeper_service_port: "22181"

schema-registry:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

schema_registry_service_port: "8081"

schema_registry_version: 7.2.0

tcp:

hosts:

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

tcp_demo_name: xian

tcp_docker_mode: "false"

tcp_service_port: "29000"

tcp_version: 0.13.1-debian

zookeeper:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

zookeeper_version: 3.4.14

zookeeper_jmx_port: 11099

zookeeper_service_port: 12181

zookeeper_leader_connect_port: 12888

zookeeper_leader_election_port: 13888

redis:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

redis_cluster_port: "16379"

redis_max_memory: 4g

redis_standalone_port: "16379"

redis_pseudo_mode: "false"

es:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

es_service_port: 19200

es_service_user: es

mongo:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

mongo_service_port: "27017"

mongo_service_user: admin

prometheus:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

prometheus_service_port: "9090"

k8s:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

k8s_is_master: "true"

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

k8s_is_master: "true"

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

k8s_is_master: "true"

vars:

k8s_flinkrabc: "true"

k8s_version: v1.26.3

kube_config_dir: /tmp

namespace: default

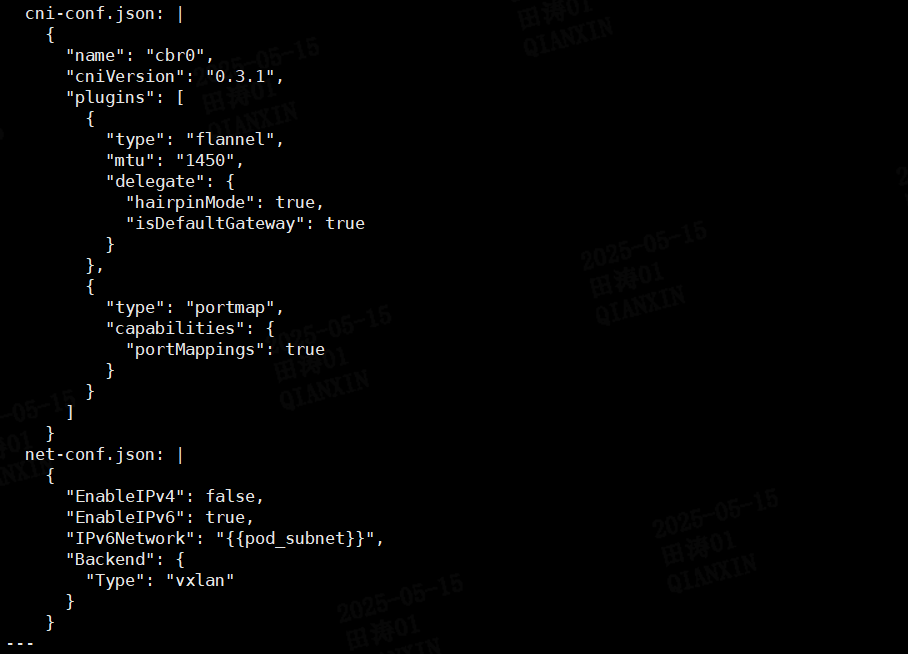

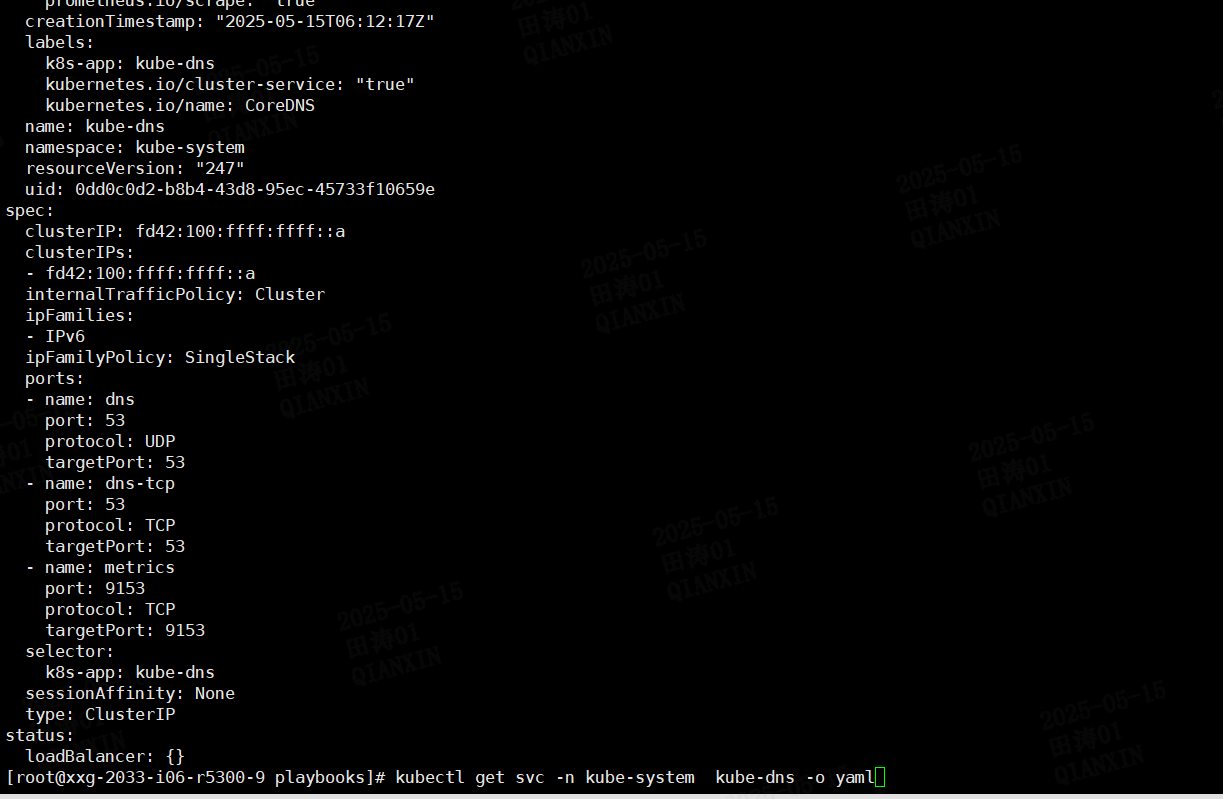

pod_subnet: "fd42:100:ffff:fffe::/112"

service_subnet: "fd42:100:ffff:ffff::/112"

preflight:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

unisolation:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

web:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

k8s_service_name: butcher-fe-xian

web_service_port: "31404"

grafana:

hosts:

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

grafana_service_port: "3000"

cdn:

hosts:

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

cdn_service_port: "31290"

gossip.v2:

hosts:

xxg-2033-i06-r5300-9:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

gossip_k8s_mode: "true"

gossip_v2_service_port: "31120"

edge:

hosts:

xxg-2033-i06-r5300-10:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

edge_vip: ""

edge2_agent_port: "31001"

edge2_area: edge-1673513417

edge2_desc: edge-1673513417

edge2_grpc_port: "30001"

edge2_id: edge-1673513417

edge2_region: edge-1673513417

registry:

hosts:

xxg-2033-i06-r5300-11:

ansible_user: root

ansible_port: "22"

ansible_ssh_pass: Admin@12345

ansible_sudo_pass: Admin@12345

vars:

registry_admin_htppasswd: $2y$05$i3rpN0kJasQg/mymv9zm5eUtas1UtrpRGc9YgJ7wz5u6y.2QiXHK6

registry_service_port: "30500"

registry_service_user: admin
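The `pod_subnet` and `service_subnet` values in the inventory are both `/112` ULA prefixes. A short sanity check with the standard `ipaddress` module can confirm they do not overlap and show how many per-node ranges fit. The `/120` per-node prefix below is an assumption for illustration; the real node mask depends on how the cluster is configured.

```python
import ipaddress


def describe_subnets(pod_cidr: str, svc_cidr: str, node_prefix: int = 120) -> dict:
    """Summarise overlap and capacity for a pod/service CIDR pair."""
    pod = ipaddress.IPv6Network(pod_cidr)
    svc = ipaddress.IPv6Network(svc_cidr)
    return {
        "overlap": pod.overlaps(svc),
        "service_addresses": svc.num_addresses,
        # node_prefix is an assumed per-node mask, not taken from the inventory
        "node_subnets": 2 ** (node_prefix - pod.prefixlen),
    }


print(describe_subnets("fd42:100:ffff:fffe::/112", "fd42:100:ffff:ffff::/112"))
# {'overlap': False, 'service_addresses': 65536, 'node_subnets': 256}
```

As far as I know, kube-apiserver rejects IPv6 service ranges larger than `/108`, so a `/112` service subnet stays within that limit.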

@@@@@@@@@@@@@@@@@@@@@@@@@

ansible-playbook --extra-vars '{"case":"hostname","event_id":"1704880614"}' --inventory inventory install_preflight.yaml

ansible-playbook --extra-vars '{"case":"disk","event_id":"1704880614"}' --inventory inventory install_preflight.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags k8s install_k8s.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags es install_search.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags etcd install_config.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags kafka install_mq.yaml

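ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags mongo install_db.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags pg install_db.yaml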

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags zookeeper install_kvs.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags redis install_cache.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags schema-registry install_schema_registry.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags tcp install_logger.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags gossip.v2 install_registry.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags edge install_edge.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags prometheus install_prometheus.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags grafana install_grafana.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags web install_web.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory --tags monitor install_monitor.yaml
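Because every command in this list has the same shape, generating them from (tag, playbook) pairs keeps the tag and the playbook as separate arguments, which makes a missing tag or a missing space harder to introduce. This is a sketch only; the helper name is hypothetical and `STEPS` lists just a few of the pairs shown above.

```python
import json
import shlex

STEPS = [
    ("k8s", "install_k8s.yaml"),
    ("es", "install_search.yaml"),
    ("etcd", "install_config.yaml"),
]


def playbook_command(tag: str, playbook: str, event_id: str, inventory: str = "inventory") -> str:
    """Build one shell-quoted ansible-playbook invocation."""
    extra = json.dumps({"event_id": event_id}, separators=(",", ":"))
    argv = ["ansible-playbook", "--extra-vars", extra,
            "--inventory", inventory, "--tags", tag, playbook]
    return shlex.join(argv)


for tag, playbook in STEPS:
    print(playbook_command(tag, playbook, "1704880614"))
```

`shlex.join` quotes the JSON argument with single quotes, matching the form used in the hand-written commands.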

Note: Ceph is not supported, PostgreSQL supports single-node deployment only, and the kernel must be upgraded.

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

pg/redis/s3/mongo/es/etcd/k8s

ansible-playbook --extra-vars '{"case":"hostname","event_id":"1704880614"}' --inventory inventory -b install_preflight.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags kernel install_kernel.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags k8s install_k8s.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags es install_search.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags etcd install_config.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags kafka install_mq.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags mongo install_db.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags pg install_db.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags redis install_cache.yaml

ansible-playbook --extra-vars '{"event_id":"1704880614"}' --inventory inventory -b --tags minio install_s3.yaml

# cat .secrets.json

{

"ceph_service_password": "Us0qJnVtJnMmPV2kdXtVfSw6chG___Aa1",

"es_service_password": "hpx5saywXhR5FMc___Aa1",

"grafana_service_password": "ls4sn7GaQCyJFxF1__Aa1",

"kafka_service_password": "fyw3KuJLzF5M3Nb0__Aa1",

"minio_service_password": "3xmJkFTEwmZ9vzUty8Orv7rTl3K0__Aa1",

"mongo_service_password": "YdaDJNa2yrDjyOW0__Aa1",

"pg_service_password": "4m8jyhtR1h1e5101__Aa1",

"redis_service_password": "AFfVUGGnbIANKU80__Aa1",

"registry_service_password": "0I0bH_UPRi_7PdZ0__Aa1"

}

redis: ports 16379, 16380; user/password: /AFfVUGGnbIANKU80__Aa1

xxg-2033-i06-r5300-9

xxg-2033-i06-r5300-10

xxg-2033-i06-r5300-11

# curl -g -u "es:hpx5saywXhR5FMc___Aa1" 'http://[2409:8c00:7821:43::10]:19200/_cat/nodes'

# redis-cli -p 16379 -h 2409:8c00:7821:43::10 -c -a 'AFfVUGGnbIANKU80__Aa1'
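When an IPv6 literal is combined with a port in a URL, the address has to be wrapped in square brackets so the port separator is unambiguous (RFC 3986). Tools that take host and port as separate options, such as `redis-cli -h ... -p ...`, do not need the brackets. A small helper, with an illustrative name, that applies the rule:

```python
import ipaddress
from urllib.parse import urlsplit


def http_url(host: str, port: int, path: str = "/", scheme: str = "http") -> str:
    """Build a URL, wrapping IPv6 literals in [] as RFC 3986 requires."""
    try:
        is_v6 = ipaddress.ip_address(host).version == 6
    except ValueError:
        is_v6 = False  # a hostname, not an IP literal
    netloc = f"[{host}]:{port}" if is_v6 else f"{host}:{port}"
    return f"{scheme}://{netloc}{path}"


url = http_url("2409:8c00:7821:43::10", 19200, "/_cat/nodes")
print(url)                     # http://[2409:8c00:7821:43::10]:19200/_cat/nodes
print(urlsplit(url).hostname)  # 2409:8c00:7821:43::10
print(urlsplit(url).port)      # 19200
```

Hostnames and IPv4 addresses pass through unchanged, so the same helper works for the hostname-based endpoints used elsewhere in these notes.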

mongo: port 27017; user/password: admin/YdaDJNa2yrDjyOW0__Aa1

xxg-2033-i06-r5300-9

xxg-2033-i06-r5300-10

xxg-2033-i06-r5300-11

# /qaxdata/s/services/mongo/mongos27017/bin/mongo

MongoDB shell version v4.2.21

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("fc05e206-9d74-42ed-8bb2-b1900898c48a") }

MongoDB server version: 4.2.21

mongos> use admin

switched to db admin

mongos> db.auth('admin','YdaDJNa2yrDjyOW0__Aa1')

1

mongos> show dbs

admin 0.000GB

config 0.001GB

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6825780df9e81f8aeadf2a94")

}

shards:

{ "_id" : "Shard1-26817", "host" : "Shard1-26817/xxg-2033-i06-r5300-10:26817,xxg-2033-i06-r5300-11:26817,xxg-2033-i06-r5300-9:26817", "state" : 1 }

k8s:

xxg-2033-i06-r5300-9

xxg-2033-i06-r5300-10

xxg-2033-i06-r5300-11

# kubectl get node

NAME STATUS ROLES AGE VERSION

xxg-2033-i06-r5300-10 Ready control-plane 20m v1.26.3

xxg-2033-i06-r5300-11 Ready control-plane 19m v1.26.3

xxg-2033-i06-r5300-9 Ready control-plane 19m v1.26.3

etcd: port 2483; user/password: /

xxg-2033-i06-r5300-9

xxg-2033-i06-r5300-10

xxg-2033-i06-r5300-11

# /qaxdata/s/services/etcd/etcd_2483/bin/etcdctl --endpoints=127.0.0.1:2483 member list

19770dc3249f57b5, started, xxg-2033-i06-r5300-11, http://xxg-2033-i06-r5300-11:2484, http://xxg-2033-i06-r5300-11:2483, false

4a87baff43889d93, started, xxg-2033-i06-r5300-10, http://xxg-2033-i06-r5300-10:2484, http://xxg-2033-i06-r5300-10:2483, false

da226c5b59fd82a5, started, xxg-2033-i06-r5300-9, http://xxg-2033-i06-r5300-9:2484, http://xxg-2033-i06-r5300-9:2483, false
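The `member list` output above is comma-separated, with the status in the second column and the member name in the third. A small parser can flag any member that is not `started`. This is a sketch that assumes the default simple output format shown here; the function name is illustrative.

```python
def unhealthy_members(member_list_output: str) -> list:
    """Return names of members whose status column is not 'started'."""
    bad = []
    for line in member_list_output.strip().splitlines():
        fields = [field.strip() for field in line.split(",")]
        if len(fields) < 3:
            continue  # skip blank or unexpected lines
        _member_id, status, name = fields[:3]
        if status != "started":
            bad.append(name)
    return bad


# Output copied from the etcdctl run above.
output = """\
19770dc3249f57b5, started, xxg-2033-i06-r5300-11, http://xxg-2033-i06-r5300-11:2484, http://xxg-2033-i06-r5300-11:2483, false
4a87baff43889d93, started, xxg-2033-i06-r5300-10, http://xxg-2033-i06-r5300-10:2484, http://xxg-2033-i06-r5300-10:2483, false
da226c5b59fd82a5, started, xxg-2033-i06-r5300-9, http://xxg-2033-i06-r5300-9:2484, http://xxg-2033-i06-r5300-9:2483, false
"""
print(unhealthy_members(output))  # []
```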

pg: port 5432; user/password: postgres/4m8jyhtR1h1e5101__Aa1

xxg-2033-i06-r5300-11

# /qaxdata/s/services/pgsql/5432/server/bin/psql -h $(hostname) -p 5432 -U postgres -W

Password:

minio: ports 9345, 9346; user/password: pfnJjCoizCCMQdL2qa/3xmJkFTEwmZ9vzUty8Orv7rTl3K0__Aa1

xxg-2033-i06-r5300-9

xxg-2033-i06-r5300-10

xxg-2033-i06-r5300-11

# cat /qaxdata/s/services/minio/9345/etc/minio1.env

MINIO_PROMETHEUS_AUTH_TYPE="public"

MINIO_ACCESS_KEY=admin

MINIO_SECRET_KEY=Hdk3SbAEuVpjgzDqTLjYuL7xqYz0__Aa1

MINIO_STORAGE_CLASS_STANDARD="EC:2"

MINIO_NODES="https://xxg-2033-i06-r5300-10:9345/qaxdata/s/services/minio/9345/data https://xxg-2033-i06-r5300-10:9346/qaxdata/s/services/minio/9346/data https://xxg-2033-i06-r5300-11:9345/qaxdata/s/services/minio/9345/data https://xxg-2033-i06-r5300-11:9346/qaxdata/s/services/minio/9346/data https://xxg-2033-i06-r5300-9:9345/qaxdata/s/services/minio/9345/data https://xxg-2033-i06-r5300-9:9346/qaxdata/s/services/minio/9346/data"

MINIO_OPTS="--address=:9345 --config-dir /qaxdata/s/services/minio/9345/etc"

# /qaxdata/s/services/minio/9345/bin/mc config host add minio https://127.0.0.1:9345 admin Hdk3SbAEuVpjgzDqTLjYuL7xqYz0__Aa1

Added `minio` successfully.

# /qaxdata/s/services/minio/9345/bin/mc admin info minio/

● xxg-2033-i06-r5300-10:9345

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

● xxg-2033-i06-r5300-10:9346

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

● xxg-2033-i06-r5300-11:9345

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

● xxg-2033-i06-r5300-11:9346

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

● xxg-2033-i06-r5300-9:9346

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

● xxg-2033-i06-r5300-9:9345

Uptime: 7 minutes

Version: <development>

Network: 6/6 OK

Drives: 1/1 OK

0 B Used, 4 Buckets, 0 Objects

6 drives online, 0 drives offline

III. Scaling out

minio EC=2

mongo shard=1
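To make the MinIO setting concrete: with `EC:2` and the six drives reported by `mc admin info`, each object is split into four data shards plus two parity shards. The sketch below works through that arithmetic, assuming all six drives form a single erasure set (an assumption, not something confirmed by the output above); the function name is illustrative.

```python
def erasure_layout(drives: int, parity: int) -> dict:
    """Data/parity split for one erasure set with the given parity count."""
    if not 0 < parity <= drives // 2:
        raise ValueError("parity must be between 1 and half the drive count")
    data = drives - parity
    return {
        "data": data,
        "parity": parity,
        "usable_fraction": data / drives,
        "tolerated_drive_failures": parity,
    }


layout = erasure_layout(drives=6, parity=2)
print(layout["data"], layout["parity"])       # 4 2
print(round(layout["usable_fraction"], 3))    # 0.667
```

Under that assumption roughly two thirds of the raw capacity is usable, and the set can lose up to two drives without losing data.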

IV. References

https://kubernetes.io/docs/concepts/services-networking/dns-pod-service/

https://kubernetes.io/docs/concepts/services-networking/dual-stack/

https://github.com/sgryphon/kubernetes-ipv6

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/dual-stack-support/

https://www.alibabacloud.com/help/tc/ecs/use-cases/install-and-use-docker#4787944e3bwid
