A Failed Attempt at Writing an HDFS Java Client (Using Eclipse on Linux)


Installing a graphical interface on CentOS

GNOME desktop environment

https://blog.csdn.net/wh211212/article/details/52937299

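Configuring Eclipse on Linux for Hadoop development

File -> Properties -> Java Build Path -> Add Library -> User Library

Click the User Libraries button -> New, and name the library hdfslib

With hdfslib selected, click Add External JARs and, under the Hadoop installation path, locate:

- the HDFS core jar: hadoop-3.0.0/share/hadoop/hdfs/hadoop-hdfs-3.0.0.jar, then click OK; and its dependencies: select everything in hadoop-3.0.0/share/hadoop/hdfs/lib, then click OK

- the common core jar: hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar, then click OK; and its dependencies: select everything in hadoop-3.0.0/share/hadoop/common/lib, then click OK

Then create a project in Eclipse, add a new class, and copy the two configuration files core-site.xml and hdfs-site.xml into src.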

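The code

Write a piece of code that performs a download: open an HDFS input stream, open a local output stream, and copy the input stream into the output stream.

FileSystem (org.apache.hadoop.fs): the core entry class for accessing HDFS. It is an abstract class; the static method get() returns an instance, and that instance is an HDFS client.

Configuration (org.apache.hadoop.conf): reads the configuration files and holds the key-value pairs they define.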

    package cn.hadoop.hdfs;

    import java.io.FileOutputStream;
    import java.io.IOException;

    import org.apache.commons.io.IOUtils;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class HdfsUtil {

        public static void main(String[] args) throws IOException {
            // Picks up core-site.xml from the classpath (the copy placed in src)
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(conf);
            Path src = new Path("hdfs://node01:9000/jdk-8u161-linux-x64.tar.gz");

            // try-with-resources closes both streams even if the copy fails
            try (FSDataInputStream inputStream = fs.open(src); // HDFS input stream
                 FileOutputStream outputStream =
                         new FileOutputStream("/home/app/download/jdk.tgz")) { // local output stream
                // Copy the input stream into the output stream
                IOUtils.copy(inputStream, outputStream);
            }
        }
    }
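The copy step itself does not depend on Hadoop. As a minimal sketch of what "copy the input stream into the output stream" amounts to, the following uses only java.io, with in-memory streams standing in for the HDFS input stream and the local file. The class name StreamCopyDemo and the 8 KB buffer size are illustrative choices, not something taken from commons-io or Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

class StreamCopyDemo {

    // Reads from 'in' until end of stream, writes every chunk to 'out',
    // and returns the total number of bytes copied.
    public static long copy(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[8192]; // illustrative buffer size
        long total = 0;
        int n;
        while ((n = in.read(buffer)) != -1) {
            out.write(buffer, 0, n);
            total += n;
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        // In-memory streams stand in for fs.open(src) and the FileOutputStream.
        try (InputStream in = new ByteArrayInputStream(data);
             ByteArrayOutputStream out = new ByteArrayOutputStream()) {
            long copied = copy(in, out);
            System.out.println("copied " + copied + " bytes");
        }
    }
}
```

Wrapping both streams in try-with-resources means they are closed whether or not the copy throws, which matters more for a real file or network stream than for these in-memory ones.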

Runtime error 1

hadoop 3.0.0 No FileSystem for scheme "hdfs"

Reference: https://blog.csdn.net/u013281331/article/details/17992077

Add the following to core-site.xml:

<property>

<name>fs.hdfs.impl</name>

<value>org.apache.hadoop.hdfs.DistributedFileSystem</value>

<description>The FileSystem for hdfs: uris.</description>

</property>

(Alternatively, add one line in the code: conf.set("fs.hdfs.impl", org.apache.hadoop.hdfs.DistributedFileSystem.class.getName()); )
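The debug log further down in this post hints at how a scheme is matched to an implementation: it first prints "looking for configuration option fs.hdfs.impl" and then "Looking in service filesystems for implementation class". The sketch below models only that two-step order with plain maps. SchemeResolver and its methods are hypothetical names for illustration; this is not Hadoop's code and makes no claim about its internals beyond the order those two log lines suggest.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

class SchemeResolver {
    // Stands in for key-value pairs loaded from core-site.xml.
    private final Map<String, String> config = new HashMap<>();
    // Stands in for implementations discovered from the jars on the classpath.
    private final Map<String, String> discovered = new HashMap<>();

    public void setConfig(String key, String value) {
        config.put(key, value);
    }

    public void registerDiscovered(String scheme, String className) {
        discovered.put(scheme, className);
    }

    // Step 1: an explicit fs.<scheme>.impl setting wins.
    // Step 2: otherwise fall back to what was discovered on the classpath.
    // An empty result corresponds to "No FileSystem for scheme".
    public Optional<String> resolve(String scheme) {
        String explicit = config.get("fs." + scheme + ".impl");
        if (explicit != null) {
            return Optional.of(explicit);
        }
        return Optional.ofNullable(discovered.get(scheme));
    }

    public static void main(String[] args) {
        SchemeResolver resolver = new SchemeResolver();
        System.out.println("before: " + resolver.resolve("hdfs"));
        resolver.setConfig("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        System.out.println("after:  " + resolver.resolve("hdfs"));
    }
}
```

In this model, setting fs.hdfs.impl only supplies a class name. The named class still has to be loadable from some jar on the classpath, which is what the next error is about.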

Runtime error 2

Class org.apache.hadoop.hdfs.DistributedFileSystem not found

This went away after I downloaded hadoop-core-1.2.1.jar from the official site and added it to the library.

Runtime error 3

The previous error is gone, but it failed again:

Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hdfs.server.namenode.NameNode.getAddress(Ljava/lang/String;)Ljava/net/InetSocketAddress;
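A NoSuchMethodError is raised when code was compiled against a method that the class actually loaded at runtime does not have, which often happens when jars from different versions of a library end up on the same classpath. One way to check what the runtime classpath really offers is a small reflection probe. The sketch below is an illustration only; MethodProbe and hasMethod are hypothetical names, and when run without the Hadoop jars on the classpath the probe simply reports false.

```java
class MethodProbe {

    // Returns true if the named class can be loaded and exposes a public
    // method with the given name and parameter types.
    public static boolean hasMethod(String className, String methodName, Class<?>... paramTypes) {
        try {
            // 'false' avoids running the class's static initializers.
            Class<?> cls = Class.forName(className, false, MethodProbe.class.getClassLoader());
            cls.getMethod(methodName, paramTypes);
            return true;
        } catch (ClassNotFoundException | NoSuchMethodException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // The class and method named in the NoSuchMethodError above.
        String cls = "org.apache.hadoop.hdfs.server.namenode.NameNode";
        System.out.println(cls + ".getAddress(String) present: "
                + hasMethod(cls, "getAddress", String.class));
    }
}
```

Run with the same build path as the failing program, a false result for a class that is known to load would suggest that the NameNode on the classpath comes from a version without that method.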

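Some posts say this happens when the Hadoop native library is 32-bit and the server is 64-bit (probably with older versions). But checking the native library

cd hadoop-2.4.1/lib/native

file libhadoop.so.1.0.0

shows that it is 64-bit, so that is not the problem.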
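The same 32-bit versus 64-bit check can be done from Java by reading the first five bytes of the library: an ELF file starts with the bytes 0x7F 'E' 'L' 'F', and the fifth byte (EI_CLASS) is 1 for 32-bit and 2 for 64-bit. The sketch below assumes the file is passed as a command-line argument; the class name ElfClass is a hypothetical name for illustration.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;

class ElfClass {

    // Returns 32 or 64 for an ELF header, or -1 if the bytes are not an
    // ELF header or the class byte is unrecognized.
    public static int bits(byte[] header) {
        if (header.length < 5
                || header[0] != 0x7F || header[1] != 'E'
                || header[2] != 'L' || header[3] != 'F') {
            return -1;
        }
        switch (header[4]) { // EI_CLASS
            case 1: return 32;
            case 2: return 64;
            default: return -1;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java ElfClass <path-to-shared-library>");
            return;
        }
        byte[] header = new byte[5];
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            int off = 0;
            // read() may return fewer bytes than requested, so loop.
            while (off < header.length) {
                int n = in.read(header, off, header.length - off);
                if (n < 0) break;
                off += n;
            }
        }
        System.out.println(args[0] + ": " + bits(header) + "-bit");
    }
}
```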

Run the following commands on the command line to print debug information (reference: https://blog.csdn.net/znb769525443/article/details/51507283)

export HADOOP_ROOT_LOGGER=DEBUG,console

hadoop fs -text /test/data/origz/access.log.gz

This prints a huge amount of output:

2018-03-25 15:35:15,338 DEBUG util.Shell: setsid exited with exit code 0

2018-03-25 15:35:15,379 DEBUG conf.Configuration: parsing URL jar:file:/home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar!/core-default.xml

2018-03-25 15:35:15,393 DEBUG conf.Configuration: parsing input stream sun.net.www.protocol.jar.JarURLConnection$JarURLInputStream@26653222

2018-03-25 15:35:16,054 DEBUG conf.Configuration: parsing URL file:/home/thousfeet/app/hadoop-3.0.0/etc/hadoop/core-site.xml

2018-03-25 15:35:16,054 DEBUG conf.Configuration: parsing input stream java.io.BufferedInputStream@5a8e6209

2018-03-25 15:35:17,071 DEBUG core.Tracer: sampler.classes = ; loaded no samplers

2018-03-25 15:35:17,173 DEBUG core.Tracer: span.receiver.classes = ; loaded no span receivers

2018-03-25 15:35:18,565 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginSuccess with annotation @org.apache.hadoop.metrics2.annotation.Metric(about=, sampleName=Ops, always=false, type=DEFAULT, valueName=Time, value=[Rate of successful kerberos logins and latency (milliseconds)])

2018-03-25 15:35:18,570 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.loginFailure with annotation @org.apache.hadoop.metrics2.annotation.Metric(about=, sampleName=Ops, always=false, type=DEFAULT, valueName=Time, value=[Rate of failed kerberos logins and latency (milliseconds)])

2018-03-25 15:35:18,570 DEBUG lib.MutableMetricsFactory: field org.apache.hadoop.metrics2.lib.MutableRate org.apache.hadoop.security.UserGroupInformation$UgiMetrics.getGroups with annotation @org.apache.hadoop.metrics2.annotation.Metric(about=, sampleName=Ops, always=false, type=DEFAULT, valueName=Time, value=[GetGroups])

2018-03-25 15:35:18,571 DEBUG lib.MutableMetricsFactory: field private org.apache.hadoop.metrics2.lib.MutableGaugeLong org.apache.hadoop.security.UserGroupInformation$UgiMetrics.renewalFailuresTotal with annotation @org.apache.hadoop.metrics2.annotation.Metric(about=, sampleName=Ops, always=false, type=DEFAULT, valueName=Time, value=[Renewal failures since startup])

2018-03-25 15:35:18,571 DEBUG lib.MutableMetricsFactory: field private org.apache.hadoop.metrics2.lib.MutableGaugeInt org.apache.hadoop.security.UserGroupInformation$UgiMetrics.renewalFailures with annotation @org.apache.hadoop.metrics2.annotation.Metric(about=, sampleName=Ops, always=false, type=DEFAULT, valueName=Time, value=[Renewal failures since last successful login])

2018-03-25 15:35:18,572 DEBUG impl.MetricsSystemImpl: UgiMetrics, User and group related metrics

2018-03-25 15:35:18,651 DEBUG security.SecurityUtil: Setting hadoop.security.token.service.use_ip to true

2018-03-25 15:35:18,738 DEBUG security.Groups: Creating new Groups object

2018-03-25 15:35:18,740 DEBUG util.NativeCodeLoader: Trying to load the custom-built native-hadoop library...

2018-03-25 15:35:18,868 DEBUG util.NativeCodeLoader: Loaded the native-hadoop library

2018-03-25 15:35:18,869 DEBUG security.JniBasedUnixGroupsMapping: Using JniBasedUnixGroupsMapping for Group resolution

2018-03-25 15:35:18,869 DEBUG security.JniBasedUnixGroupsMappingWithFallback: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMapping

2018-03-25 15:35:19,088 DEBUG security.Groups: Group mapping impl=org.apache.hadoop.security.JniBasedUnixGroupsMappingWithFallback; cacheTimeout=300000; warningDeltaMs=5000

2018-03-25 15:35:19,157 DEBUG security.UserGroupInformation: hadoop login

2018-03-25 15:35:19,157 DEBUG security.UserGroupInformation: hadoop login commit

2018-03-25 15:35:19,256 DEBUG security.UserGroupInformation: using local user:UnixPrincipal: thousfeet

2018-03-25 15:35:19,256 DEBUG security.UserGroupInformation: Using user: "UnixPrincipal: thousfeet" with name thousfeet

2018-03-25 15:35:19,257 DEBUG security.UserGroupInformation: User entry: "thousfeet"

2018-03-25 15:35:19,257 DEBUG security.UserGroupInformation: UGI loginUser:thousfeet (auth:SIMPLE)

2018-03-25 15:35:19,258 DEBUG core.Tracer: sampler.classes = ; loaded no samplers

2018-03-25 15:35:19,258 DEBUG core.Tracer: span.receiver.classes = ; loaded no span receivers

2018-03-25 15:35:19,258 DEBUG fs.FileSystem: Loading filesystems

2018-03-25 15:35:19,304 DEBUG fs.FileSystem: file:// = class org.apache.hadoop.fs.LocalFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,339 DEBUG fs.FileSystem: viewfs:// = class org.apache.hadoop.fs.viewfs.ViewFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,343 DEBUG fs.FileSystem: ftp:// = class org.apache.hadoop.fs.ftp.FTPFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,349 DEBUG fs.FileSystem: har:// = class org.apache.hadoop.fs.HarFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,368 DEBUG fs.FileSystem: http:// = class org.apache.hadoop.fs.http.HttpFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,395 DEBUG fs.FileSystem: https:// = class org.apache.hadoop.fs.http.HttpsFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar

2018-03-25 15:35:19,450 DEBUG fs.FileSystem: hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/hdfs/hadoop-hdfs-client-3.0.0.jar

2018-03-25 15:35:20,568 DEBUG fs.FileSystem: webhdfs:// = class org.apache.hadoop.hdfs.web.WebHdfsFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/hdfs/hadoop-hdfs-client-3.0.0.jar

2018-03-25 15:35:20,569 DEBUG fs.FileSystem: swebhdfs:// = class org.apache.hadoop.hdfs.web.SWebHdfsFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/hdfs/hadoop-hdfs-client-3.0.0.jar

2018-03-25 15:35:20,569 DEBUG fs.FileSystem: Looking for FS supporting hdfs

2018-03-25 15:35:20,569 DEBUG fs.FileSystem: looking for configuration option fs.hdfs.impl

2018-03-25 15:35:20,654 DEBUG fs.FileSystem: Looking in service filesystems for implementation class

2018-03-25 15:35:20,655 DEBUG fs.FileSystem: FS for hdfs is class org.apache.hadoop.hdfs.DistributedFileSystem

2018-03-25 15:35:20,760 DEBUG impl.DfsClientConf: dfs.client.use.legacy.blockreader.local = false

2018-03-25 15:35:20,761 DEBUG impl.DfsClientConf: dfs.client.read.shortcircuit = false

2018-03-25 15:35:20,761 DEBUG impl.DfsClientConf: dfs.client.domain.socket.data.traffic = false

2018-03-25 15:35:20,761 DEBUG impl.DfsClientConf: dfs.domain.socket.path =

2018-03-25 15:35:20,823 DEBUG hdfs.DFSClient: Sets dfs.client.block.write.replace-datanode-on-failure.min-replication to 0

2018-03-25 15:35:20,928 DEBUG retry.RetryUtils: multipleLinearRandomRetry = null

2018-03-25 15:35:20,989 DEBUG ipc.Server: rpcKind=RPC_PROTOCOL_BUFFER, rpcRequestWrapperClass=class org.apache.hadoop.ipc.ProtobufRpcEngine$RpcProtobufRequest, rpcInvoker=org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker@272113c4

2018-03-25 15:35:21,060 DEBUG ipc.Client: getting client out of cache: org.apache.hadoop.ipc.Client@2794eab6

2018-03-25 15:35:23,045 DEBUG unix.DomainSocketWatcher: org.apache.hadoop.net.unix.DomainSocketWatcher$2@4b4bda67: starting with interruptCheckPeriodMs = 60000

2018-03-25 15:35:23,115 DEBUG util.PerformanceAdvisory: Both short-circuit local reads and UNIX domain socket are disabled.

2018-03-25 15:35:23,123 DEBUG sasl.DataTransferSaslUtil: DataTransferProtocol not using SaslPropertiesResolver, no QOP found in configuration for dfs.data.transfer.protection

2018-03-25 15:35:23,329 DEBUG ipc.Client: The ping interval is 60000 ms.

2018-03-25 15:35:23,331 DEBUG ipc.Client: Connecting to node01/192.168.216.100:9000

2018-03-25 15:35:24,267 DEBUG ipc.Client: IPC Client (2146338580) connection to node01/192.168.216.100:9000 from thousfeet: starting, having connections 1

2018-03-25 15:35:24,381 DEBUG ipc.Client: IPC Client (2146338580) connection to node01/192.168.216.100:9000 from thousfeet sending #0 org.apache.hadoop.hdfs.protocol.ClientProtocol.getFileInfo

2018-03-25 15:35:24,427 DEBUG ipc.Client: IPC Client (2146338580) connection to node01/192.168.216.100:9000 from thousfeet got value #0

2018-03-25 15:35:24,427 DEBUG ipc.ProtobufRpcEngine: Call: getFileInfo took 1193ms

text: `/text/data/origz/access.log.gz': No such file or directory

2018-03-25 15:35:24,575 DEBUG ipc.Client: stopping client from cache: org.apache.hadoop.ipc.Client@2794eab6

2018-03-25 15:35:24,576 DEBUG ipc.Client: removing client from cache: org.apache.hadoop.ipc.Client@2794eab6

2018-03-25 15:35:24,576 DEBUG ipc.Client: stopping actual client because no more references remain: org.apache.hadoop.ipc.Client@2794eab6

2018-03-25 15:35:24,576 DEBUG ipc.Client: Stopping client

2018-03-25 15:35:24,598 DEBUG ipc.Client: IPC Client (2146338580) connection to node01/192.168.216.100:9000 from thousfeet: closed

2018-03-25 15:35:24,598 DEBUG ipc.Client: IPC Client (2146338580) connection to node01/192.168.216.100:9000 from thousfeet: stopped, remaining connections 0

2018-03-25 15:35:24,699 DEBUG util.ShutdownHookManager: ShutdownHookManger complete shutdown.
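With a log this long, a small filter makes the fs.FileSystem lines easier to read. The sketch below pulls out, for each scheme, the implementation class and the jar it was loaded from. The regular expression is written against the line format shown above, and FsLogFilter is a hypothetical name. One thing the filtered view makes visible: in this log, hdfs:// is served by DistributedFileSystem from hadoop-hdfs-client-3.0.0.jar, a jar that is not among those listed in the Eclipse library setup earlier in this post. Whether adding it would have resolved the errors is not something this post confirms.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class FsLogFilter {
    // Matches lines such as:
    // ... DEBUG fs.FileSystem: hdfs:// = class <impl> from <jar path>
    private static final Pattern LINE = Pattern.compile(
            "DEBUG fs\\.FileSystem: (\\w+):// = class (\\S+) from (\\S+)");

    // Maps each scheme to "<implementation class> @ <jar file name>".
    public static Map<String, String> schemeToJar(List<String> lines) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String line : lines) {
            Matcher m = LINE.matcher(line);
            if (m.find()) {
                String jarPath = m.group(3);
                String jarName = jarPath.substring(jarPath.lastIndexOf('/') + 1);
                result.put(m.group(1), m.group(2) + " @ " + jarName);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Two lines copied from the log above.
        List<String> lines = Arrays.asList(
            "2018-03-25 15:35:19,304 DEBUG fs.FileSystem: file:// = class org.apache.hadoop.fs.LocalFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/common/hadoop-common-3.0.0.jar",
            "2018-03-25 15:35:19,450 DEBUG fs.FileSystem: hdfs:// = class org.apache.hadoop.hdfs.DistributedFileSystem from /home/thousfeet/app/hadoop-3.0.0/share/hadoop/hdfs/hadoop-hdfs-client-3.0.0.jar");
        schemeToJar(lines).forEach((scheme, impl) -> System.out.println(scheme + " -> " + impl));
    }
}
```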

I suspect the problem is

Both short-circuit local reads and UNIX domain socket are disabled.

None of the many blog tutorials I found were right, so I went straight to the official documentation: http://hadoop.apache.org/docs/current/hadoop-project-dist/hadoop-hdfs/ShortCircuitLocalReads.html

Add the following to hdfs-site.xml:

<property>

<name>dfs.client.read.shortcircuit</name>

<value>true</value>

</property>

<property>

<name>dfs.client.use.legacy.blockreader.local</name>

<value>true</value>

</property>

<property>

<name>dfs.datanode.data.dir.perm</name>

<value>750</value>

</property>

<property>

<name>dfs.block.local-path-access.user</name>

<value>foo,bar</value>

</property>

Still not working.

Following https://www.cnblogs.com/dandingyy/archive/2013/03/08/2950696.html , add -Djava.library.path=/home/thousfeet/app/hadoop-3.0.0/lib/native under Arguments / VM arguments in the Run Configuration.
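To confirm that the VM argument actually reached the running program, the java.library.path system property can be printed from inside it. A minimal sketch, assuming nothing beyond the JDK; LibraryPath is a hypothetical name:

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

class LibraryPath {

    // Splits a java.library.path value into its non-empty entries.
    public static List<String> entries(String value) {
        List<String> out = new ArrayList<>();
        if (value == null) {
            return out;
        }
        for (String part : value.split(Pattern.quote(File.pathSeparator))) {
            if (!part.isEmpty()) {
                out.add(part);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Lists every directory the JVM searches for native libraries
        // and whether it exists on disk.
        for (String dir : entries(System.getProperty("java.library.path"))) {
            System.out.println(dir + (new File(dir).isDirectory() ? "" : "  (missing)"));
        }
    }
}
```

If the lib/native directory set in the Run Configuration does not appear in the output, the argument was probably entered under program arguments rather than VM arguments.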

Runtime error 4

The first line of the earlier error (Unable to load native-hadoop library...) is gone, but the second line remains:

Exception in thread "main" java.lang.NoSuchMethodError: org.apache.hadoop.hdfs.server.namenode.NameNode.getAddress(Ljava/lang/String;)Ljava/net/InetSocketAddress;

Reference: https://blog.csdn.net/yzl_8877/article/details/53216923

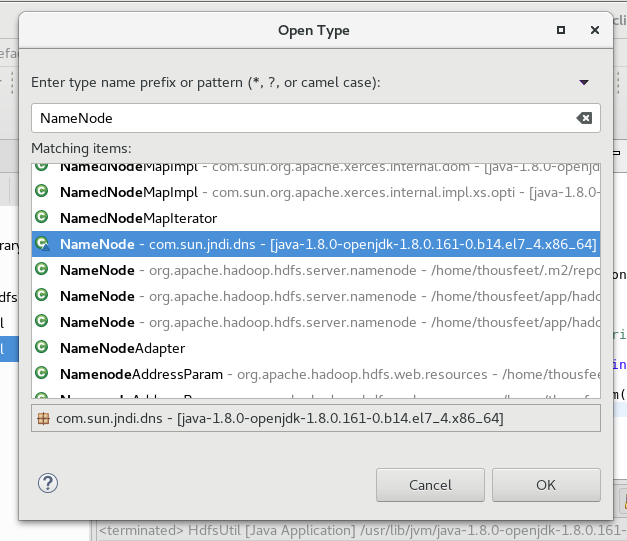

java.net.URL urlOfClass = NameNode.class.getClassLoader().getResource("com/sun/jndi/dns/NameNode.class"); // InsertProgarm is the class that contains this statement

System.out.println(urlOfClass);

Output:

jar:file:/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.161-0.b14.el7_4.x86_64/jre/lib/rt.jar!/com/sun/jndi/dns/NameNode.class

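But I can hardly remove rt.jar! I was even tempted to modify rt.jar and strip the getAddress() method from this class, but tampering with the JRE's standard API could break things in places I don't know about, which would be a disaster.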
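One detail worth double-checking here: the lookup above asks for com/sun/jndi/dns/NameNode.class, a class that belongs to the JDK itself, whereas the exception names org.apache.hadoop.hdfs.server.namenode.NameNode. A result pointing at rt.jar is therefore expected and may say nothing about the Hadoop class. The sketch below derives the resource path from the fully qualified class name so the two cannot drift apart. ClassLocator is a hypothetical helper, and without the Hadoop jars on the classpath it prints null for the Hadoop class.

```java
import java.net.URL;

class ClassLocator {

    // Converts a fully qualified class name into its resource path,
    // e.g. "a.b.C" becomes "a/b/C.class".
    public static String resourceName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Returns the URL the class file would be loaded from via the system
    // class loader, or null if it is not found.
    public static URL locate(String className) {
        return ClassLoader.getSystemResource(resourceName(className));
    }

    public static void main(String[] args) {
        // The class named in the NoSuchMethodError.
        String cls = "org.apache.hadoop.hdfs.server.namenode.NameNode";
        System.out.println(cls + " -> " + locate(cls));
    }
}
```

Run with the project's build path, the printed URL names the jar that supplies the class at runtime.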

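This is where I got stuck; the problem is still unsolved.

My guess is that configuring everything through Maven might be the better route (?). I plan to tinker for another half day; if it still isn't solved I will drop it, since my current needs don't call for this anyway.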

